Unmanned Aerial Vehicle-Scale Weed Segmentation Method Based on Image Analysis Technology for Enhanced Accuracy of Maize Seedling Counting

Abstract

1. Introduction

2. Materials and Methods

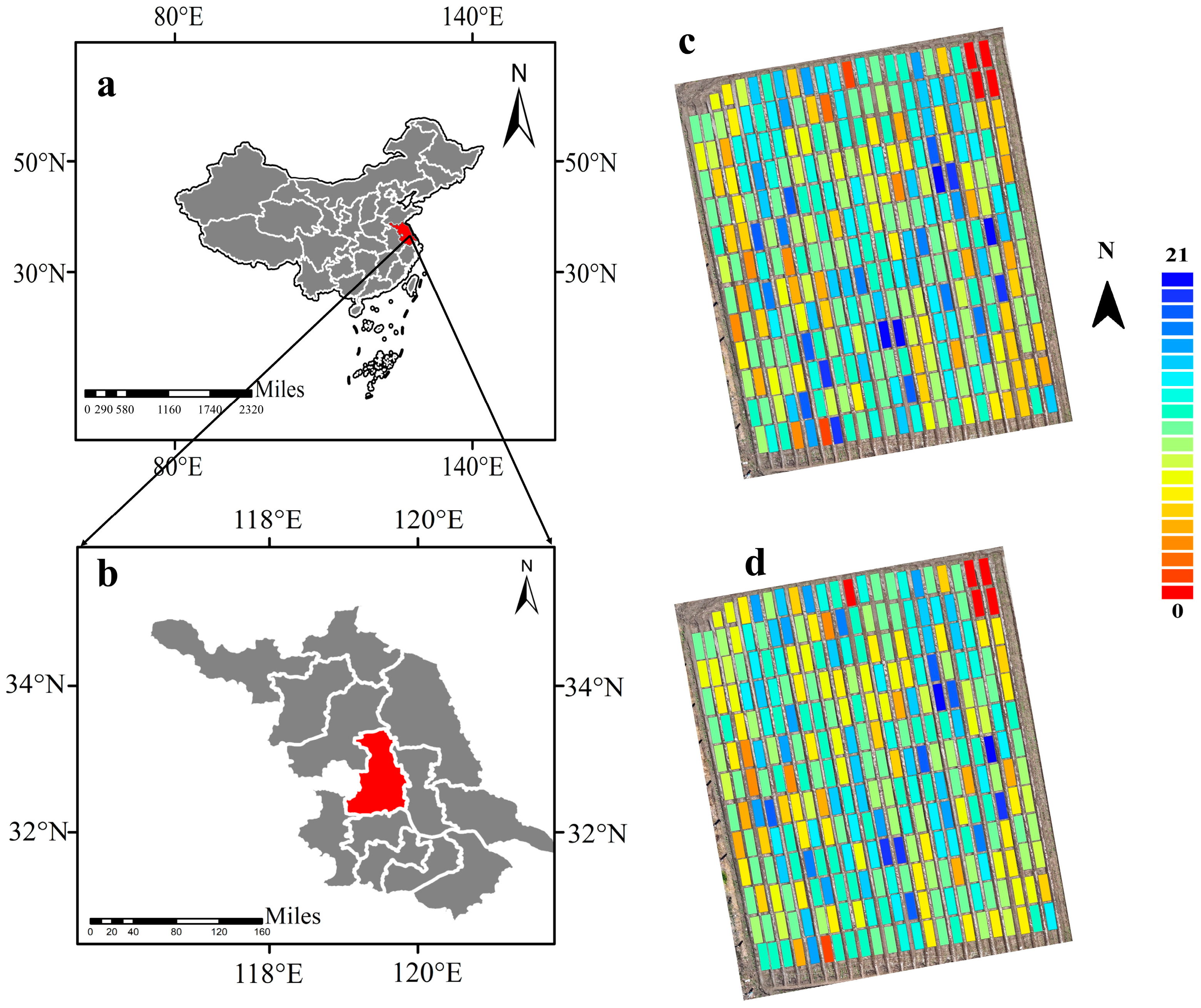

2.1. Experimental Site

2.2. Image Acquisition

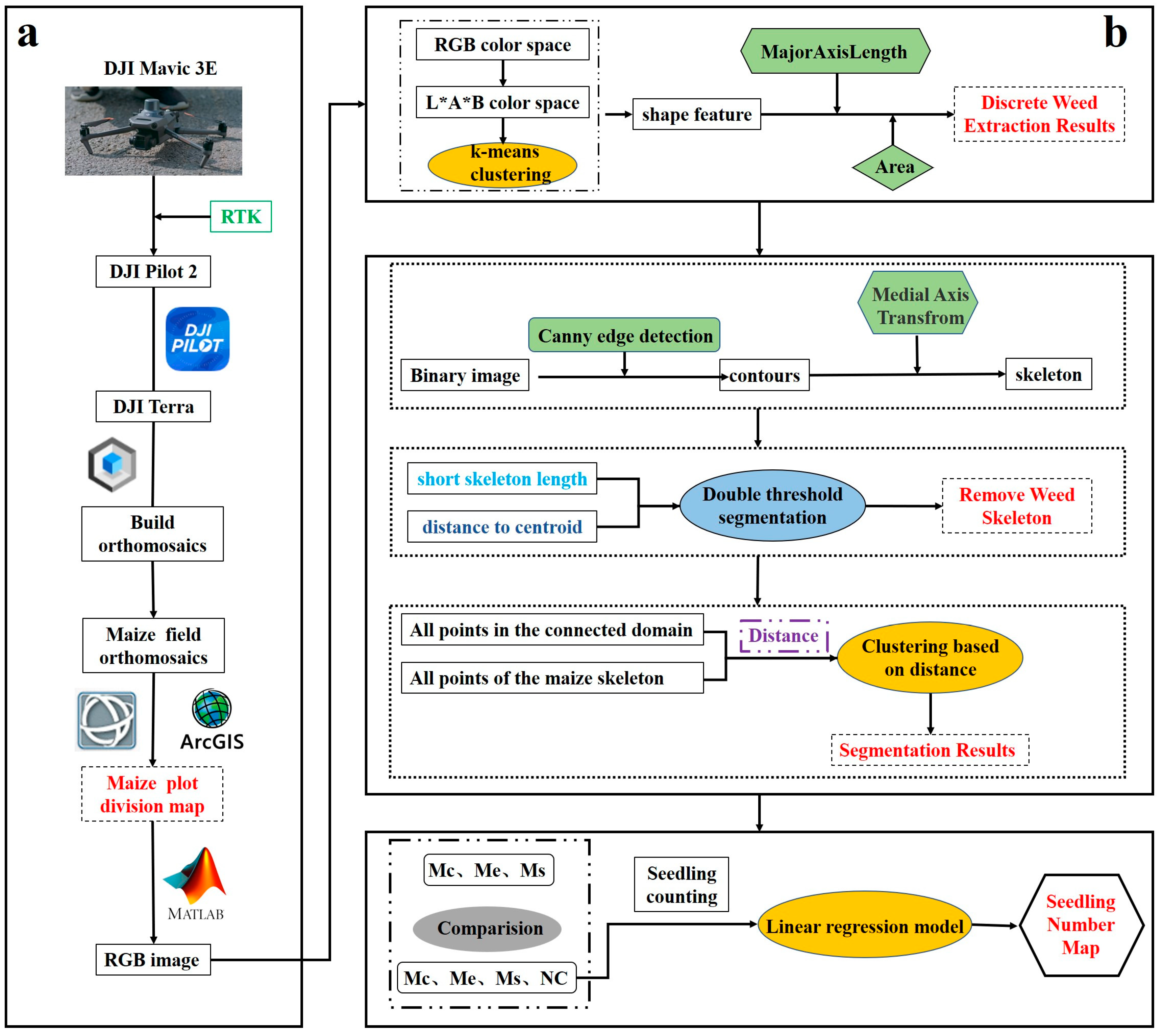

2.3. Image Processing Workflow

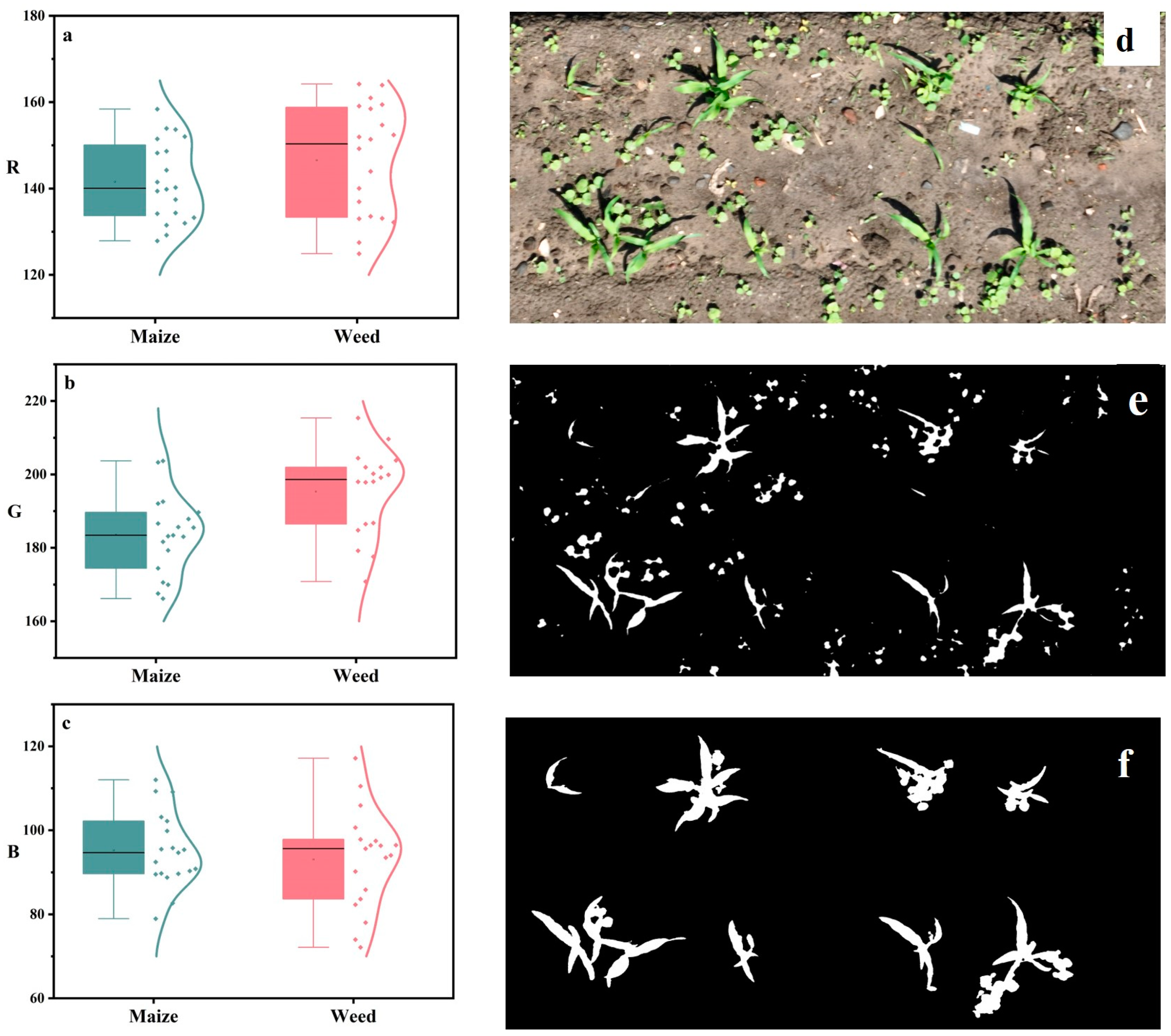

2.3.1. Method for Removing Discrete Weeds

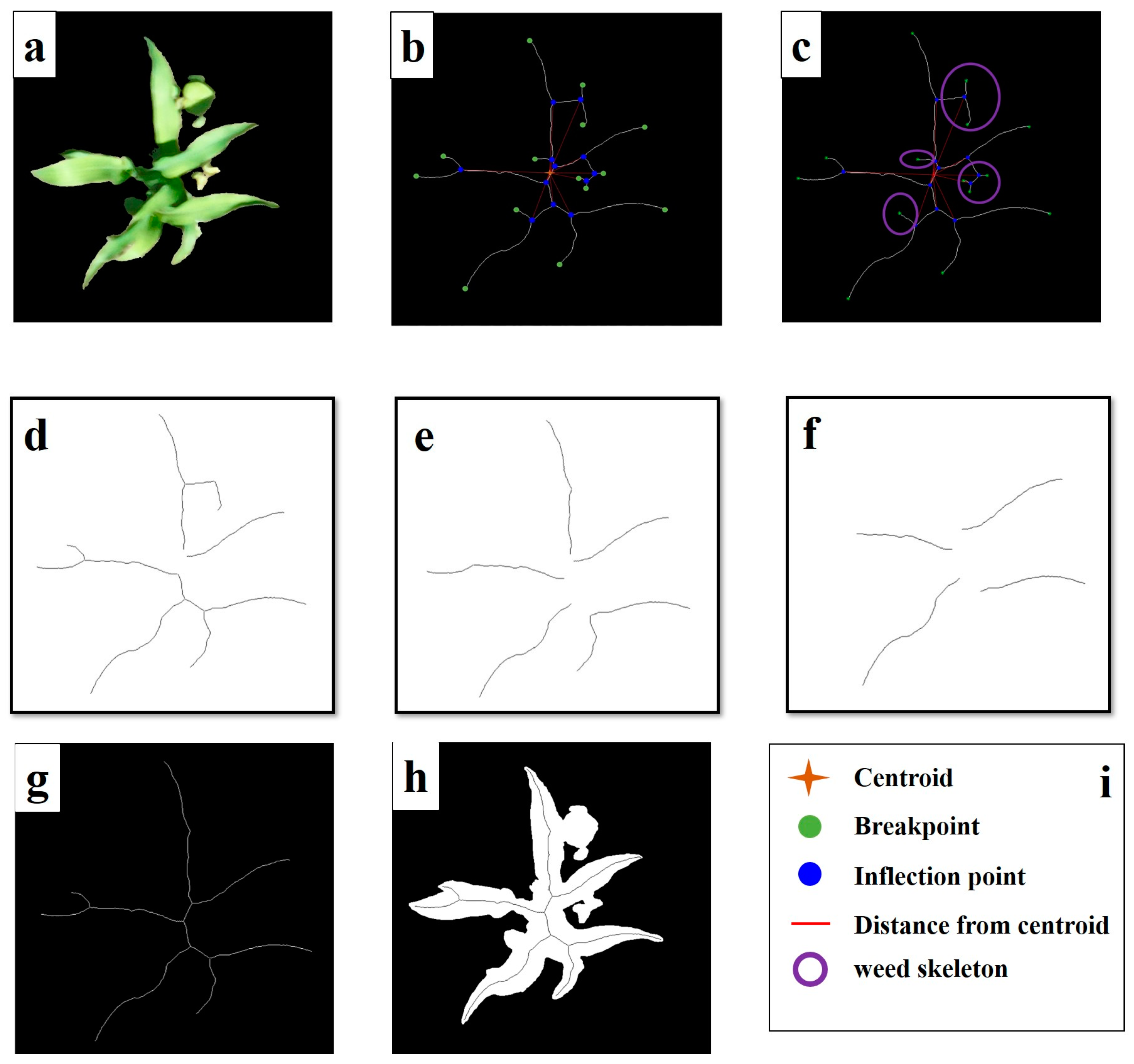

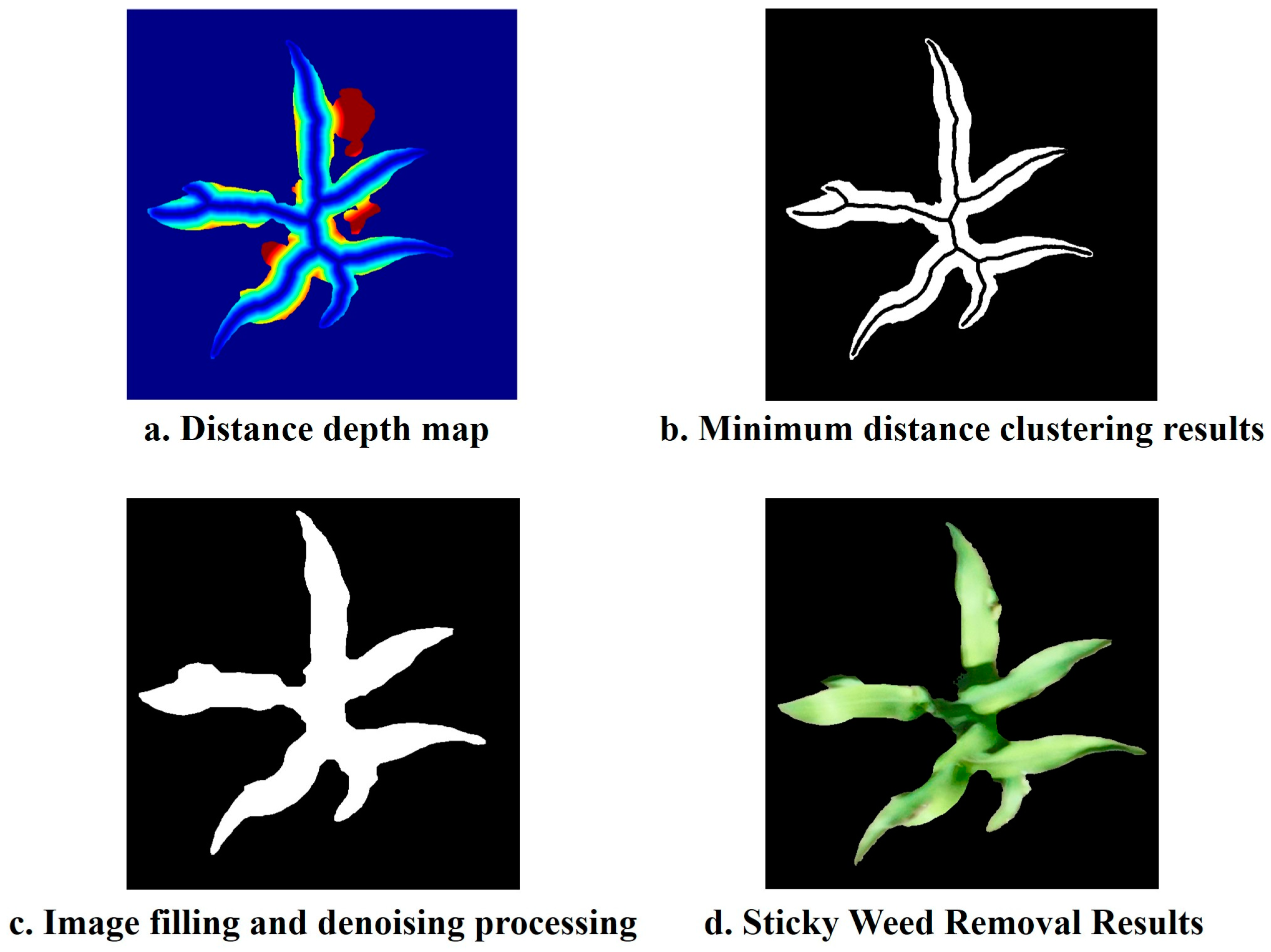

2.3.2. Method for Removing Attached Weeds

2.3.3. Evaluation of the Weed Removal Effect

2.3.4. Counting of Maize Seedlings

2.3.5. Evaluation of Maize Seedling Counting Accuracy

3. Results and Analysis

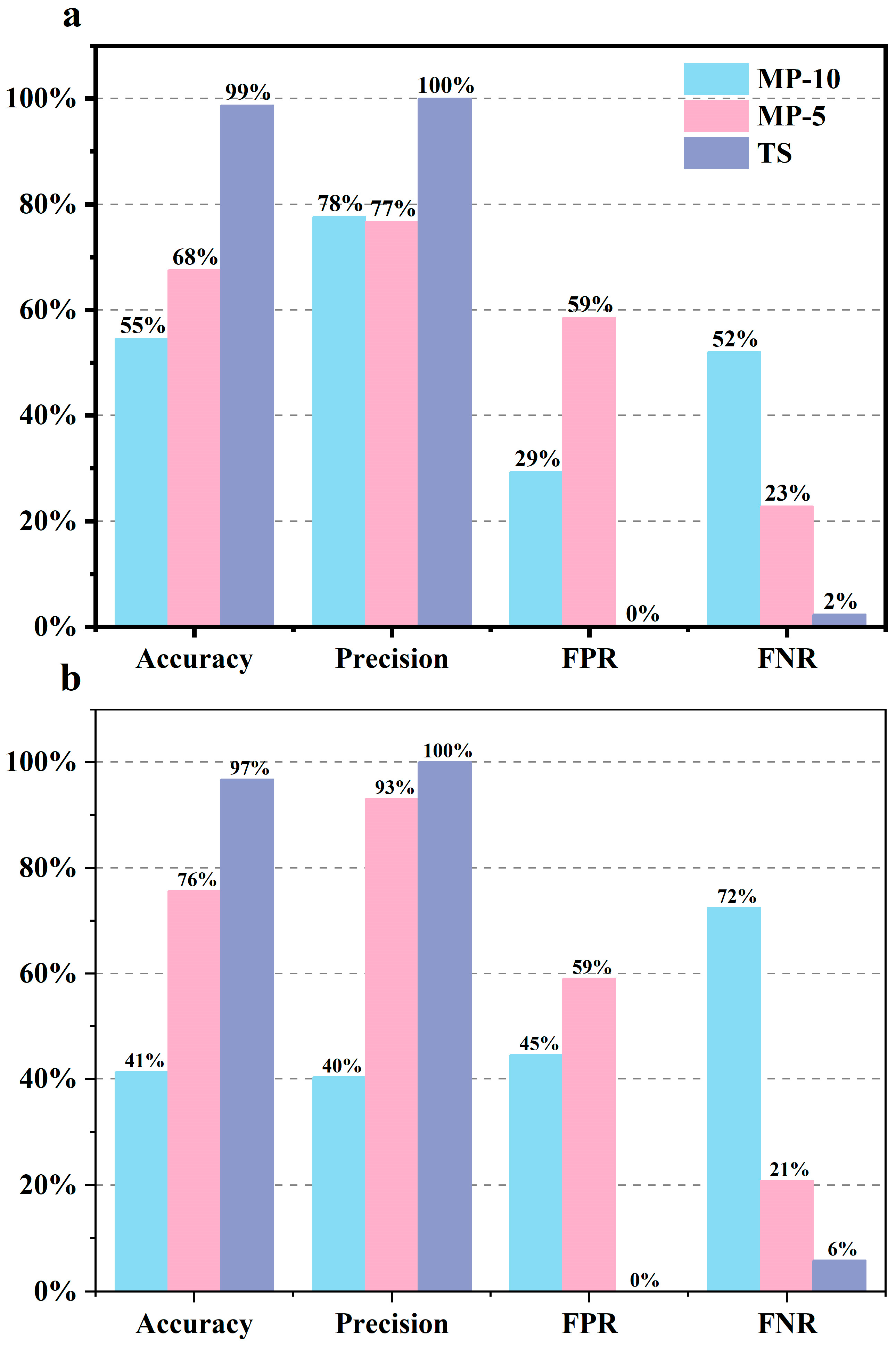

3.1. Discrete Weed Segmentation Results

3.2. Segmentation Results for Adherent Weeds

3.3. Comparison of Different Weed Removal Methods

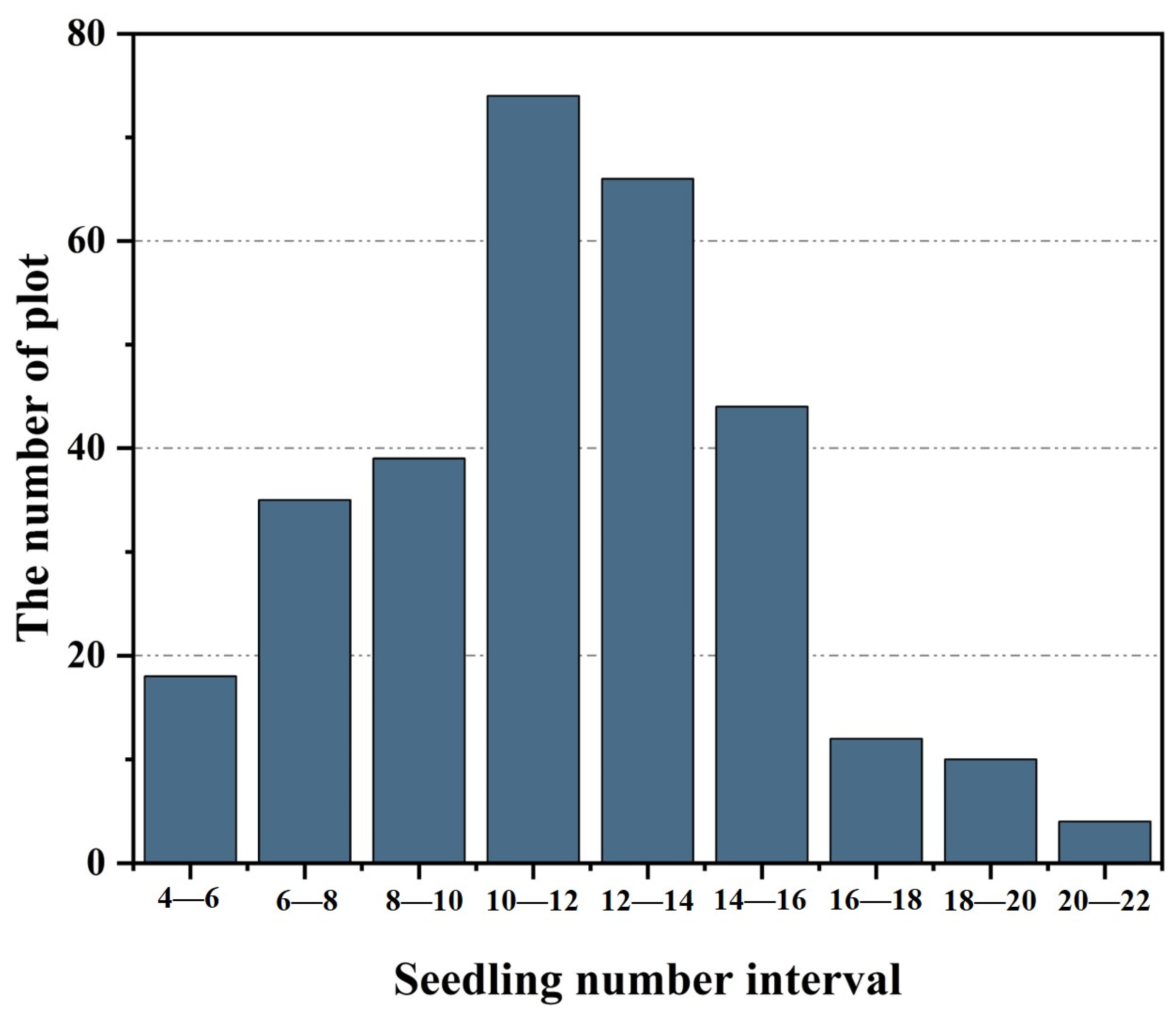

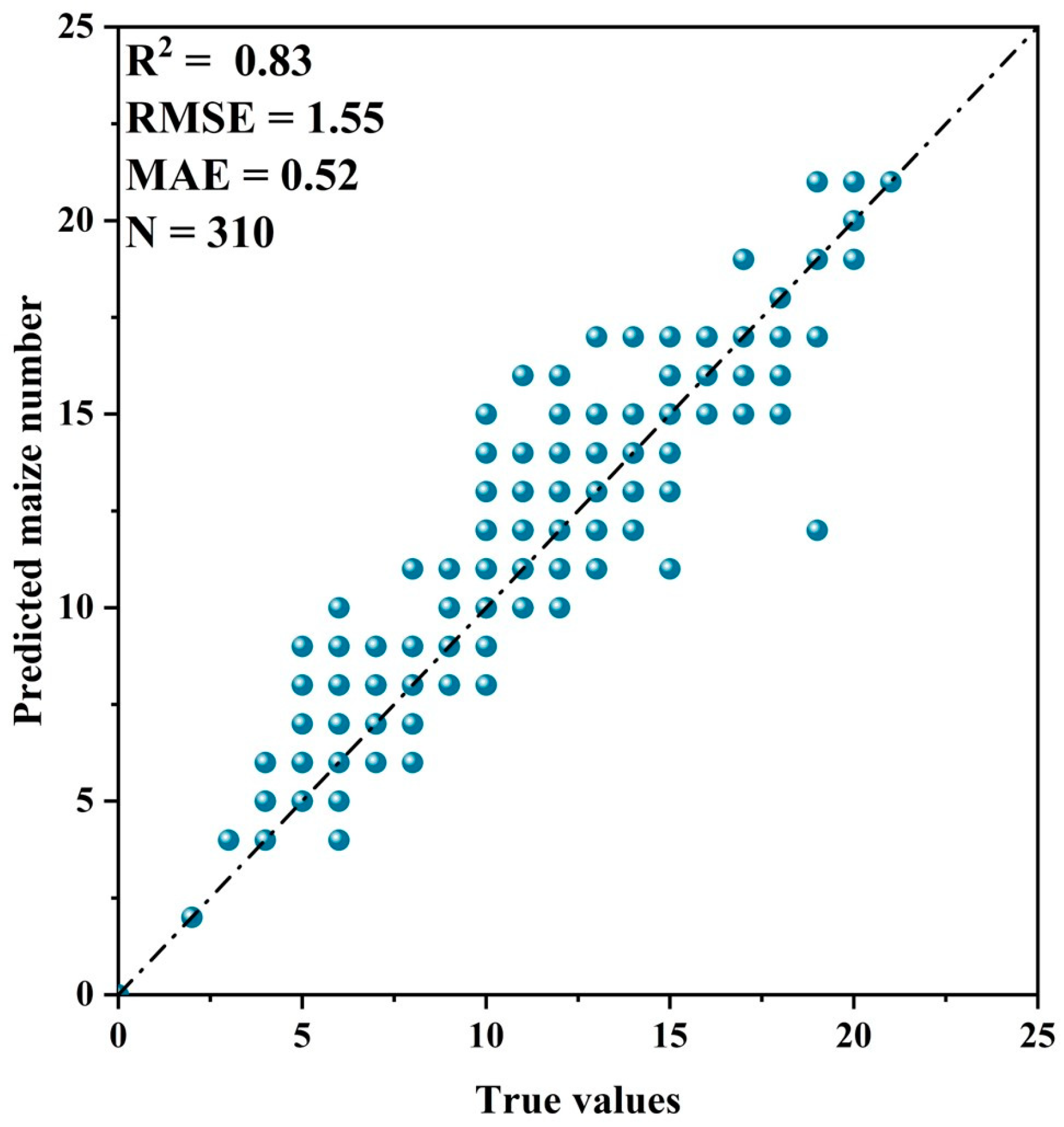

3.4. Counting of Maize Seedlings

3.5. Results of Maize Seedling Counts under Different Seedling Proportions

3.6. Application of the Weed Segmentation Method to Drone Images

4. Discussion

4.1. Impact of Weeds on the Monitoring of Maize Seedlings

4.2. Segmentation Method for Adherent Weeds

4.3. Effect of Different Flight Heights on the Recognition of Maize Plants

4.4. Application of the Weed Segmentation Method to Drone Images

4.5. Comparison of the Efficiency of Different Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Treatment | Combination of Features | True Value | Predicted Value | Accuracy (%) |
|---|---|---|---|---|
| NW | Mc, Me, Ms | 1670 | 1587 | 95.02 |
| NW | Mc, Me, Ms, NC | 1670 | 1590 | 95.21 |
| MP-5 | Mc, Me, Ms | 1687 | 1624 | 96.27 |
| MP-5 | Mc, Me, Ms, NC | 1687 | 1630 | 96.62 |
| TS | Mc, Me, Ms | 1620 | 1589 | 98.09 |
| TS | Mc, Me, Ms, NC | 1620 | 1607 | 99.20 |
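The accuracy column appears consistent with accuracy = (1 - |true - predicted| / true) x 100, which here equals predicted / true x 100 because every prediction undercounts. That formula is an assumption inferred from the table, not one stated in this section. The minimal Python sketch below recomputes the column under that assumption; the recomputed values agree with the reported ones to within about 0.01 percentage points (the first NW row recomputes to 95.03).

```python
# Recompute the "Accuracy (%)" column of the feature-combination table.
# ASSUMPTION: accuracy = (1 - |true - predicted| / true) * 100. This formula
# is inferred from the tabulated numbers; it is not stated in this section.

# (treatment, feature combination, true count, predicted count, reported %)
ROWS = [
    ("NW", "Mc, Me, Ms", 1670, 1587, 95.02),
    ("NW", "Mc, Me, Ms, NC", 1670, 1590, 95.21),
    ("MP-5", "Mc, Me, Ms", 1687, 1624, 96.27),
    ("MP-5", "Mc, Me, Ms, NC", 1687, 1630, 96.62),
    ("TS", "Mc, Me, Ms", 1620, 1589, 98.09),
    ("TS", "Mc, Me, Ms, NC", 1620, 1607, 99.20),
]


def counting_accuracy(true_count: int, predicted_count: int) -> float:
    """Return 100 minus the relative absolute counting error, in percent."""
    return (1 - abs(true_count - predicted_count) / true_count) * 100


def recompute(rows=ROWS):
    """Return (treatment, features, recomputed %, reported %) for each row."""
    return [
        (treatment, features, counting_accuracy(true, pred), reported)
        for treatment, features, true, pred, reported in rows
    ]


if __name__ == "__main__":
    for treatment, features, acc, reported in recompute():
        print(f"{treatment:5s} {features:15s} recomputed {acc:6.2f}  reported {reported:6.2f}")
```

Using the relative absolute error rather than a plain ratio keeps the metric meaningful if a method ever overcounts.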

| Flight Height (m) | GSD (cm/px) | Flight Duration | R² |
|---|---|---|---|
| 12 | 0.55 | 10 min 41 s | 0.83 |
| 15 | 0.69 | 8 min 28 s | 0.76 |
| 20 | 0.92 | 6 min 15 s | 0.72 |
| 25 | 1.15 | 4 min 45 s | 0.55 |
| 30 | 1.38 | 4 min 1 s | 0.49 |
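For a nadir camera, the ground sampling distance follows GSD = H x p / f (flight height H, pixel pitch p, focal length f), so with a single camera GSD should scale linearly with height. The camera's p and f are not given in this section, so the sketch below is a check under that assumption: it takes the 12 m row as the reference and scales it. Every predicted GSD matches the table to within 0.005 cm/px, and at 30 m each pixel covers 2.5 times the ground distance it covers at 12 m.

```python
# Check that GSD in the flight-height table scales linearly with height.
# Nadir pinhole relation: GSD = H * pixel_pitch / focal_length, so for a
# single camera GSD / H is constant. The camera's pixel pitch and focal
# length are NOT given here (assumption): the ratio is taken from the 12 m row.

HEIGHTS_M = [12, 15, 20, 25, 30]
REPORTED_GSD_CM = [0.55, 0.69, 0.92, 1.15, 1.38]


def predicted_gsd(height_m: float, ref_height_m: float = 12.0,
                  ref_gsd_cm: float = 0.55) -> float:
    """Scale a reference GSD (cm/px) linearly to another flight height (m)."""
    return ref_gsd_cm * height_m / ref_height_m


if __name__ == "__main__":
    for h, reported in zip(HEIGHTS_M, REPORTED_GSD_CM):
        print(f"{h:2d} m: predicted {predicted_gsd(h):.4f} cm/px, reported {reported:.2f}")
    # Ground distance per pixel at 30 m relative to 12 m.
    print(predicted_gsd(30) / predicted_gsd(12))
```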

Model training + prediction time by number of samples:

| Method | 50 | 100 | 150 | 200 | 300 | 1000 |
|---|---|---|---|---|---|---|
| K-means | 23.62 s | 44.25 s | 65.64 s | 84.98 s | 128.08 s | - |
| Corner detection model | 25.13 s | 48.76 s | 72.65 s | 95.24 s | 139.47 s | - |
| Method in this article | 15.22 s | 30.08 s | 43.73 s | 57.45 s | 86.37 s | - |
| Faster R-CNN | - | - | - | - | - | 3 h 25 min |
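The timing table can also be read as per-sample cost and relative speedup. The sketch below is plain arithmetic on the reported numbers; `per_sample` and `speedup` are hypothetical helper names. For the three image-analysis methods the time grows roughly linearly with sample count (the method in this article takes between about 0.29 and 0.30 s per sample), and that method is roughly 1.5 times faster than K-means and 1.6 to 1.7 times faster than the corner detection model at every sample size. Faster R-CNN was timed once, on 1000 samples; dividing its 12,300 s by 1000 gives 12.3 s per sample, but that time includes training a deep network, so the per-sample figure is only a rough comparison.

```python
# Per-sample cost and relative speedup from the timing table
# (model training + prediction time, in seconds).

SAMPLE_SIZES = [50, 100, 150, 200, 300]
TIMES_S = {
    "K-means": [23.62, 44.25, 65.64, 84.98, 128.08],
    "Corner detection model": [25.13, 48.76, 72.65, 95.24, 139.47],
    "Method in this article": [15.22, 30.08, 43.73, 57.45, 86.37],
}

# Faster R-CNN was timed only once, on 1000 samples: 3 h 25 min.
FASTER_RCNN_S = 3 * 3600 + 25 * 60  # = 12300 s
FASTER_RCNN_SAMPLES = 1000


def per_sample(times, sizes=SAMPLE_SIZES):
    """Seconds per sample at each sample size (hypothetical helper)."""
    return [t / n for t, n in zip(times, sizes)]


def speedup(baseline, proposed):
    """Baseline time / proposed time; a value above 1 means proposed is faster."""
    return [b / p for b, p in zip(baseline, proposed)]


if __name__ == "__main__":
    ours = TIMES_S["Method in this article"]
    for name, times in TIMES_S.items():
        cost = ", ".join(f"{c:.3f}" for c in per_sample(times))
        print(f"{name}: {cost} s/sample")
    for name in ("K-means", "Corner detection model"):
        ratios = ", ".join(f"{r:.2f}" for r in speedup(TIMES_S[name], ours))
        print(f"speedup vs {name}: {ratios}")
    print(f"Faster R-CNN: {FASTER_RCNN_S / FASTER_RCNN_SAMPLES:.1f} s/sample")
```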

| Component | Detailed Configuration |
|---|---|
| CPU | Intel(R) Core(TM) i7-10700K |
| RAM | 16 GB |
| GPU | NVIDIA GeForce GTX 1660 SUPER |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, T.; Zhu, S.; Zhang, W.; Zhao, Y.; Song, X.; Yang, G.; Yao, Z.; Wu, W.; Liu, T.; Sun, C.; et al. Unmanned Aerial Vehicle-Scale Weed Segmentation Method Based on Image Analysis Technology for Enhanced Accuracy of Maize Seedling Counting. Agriculture 2024, 14, 175. https://doi.org/10.3390/agriculture14020175

Chicago/Turabian Style: Yang, Tianle, Shaolong Zhu, Weijun Zhang, Yuanyuan Zhao, Xiaoxin Song, Guanshuo Yang, Zhaosheng Yao, Wei Wu, Tao Liu, Chengming Sun, et al. 2024. "Unmanned Aerial Vehicle-Scale Weed Segmentation Method Based on Image Analysis Technology for Enhanced Accuracy of Maize Seedling Counting" Agriculture 14, no. 2: 175. https://doi.org/10.3390/agriculture14020175

APA Style: Yang, T., Zhu, S., Zhang, W., Zhao, Y., Song, X., Yang, G., Yao, Z., Wu, W., Liu, T., Sun, C., & Zhang, Z. (2024). Unmanned Aerial Vehicle-Scale Weed Segmentation Method Based on Image Analysis Technology for Enhanced Accuracy of Maize Seedling Counting. Agriculture, 14(2), 175. https://doi.org/10.3390/agriculture14020175