Camellia oleifera Tree Detection and Counting Based on UAV RGB Image and YOLOv8

Abstract

1. Introduction

- (1) Research object selection: We selected Camellia oleifera trees as the research object. Previous studies have focused mainly on Camellia oleifera fruits, whereas research on the trees themselves is lacking. Studying the trees makes it possible to build a new yield estimation model based on the number of Camellia oleifera trees and the number of fruits per tree.

- (2) Dataset construction: Based on previous experience, we first designed two datasets: a DOM (digital orthophoto map) dataset and an original image dataset. To obtain better results, we then explored and created a third dataset, the cropped original image dataset. Experiments showed that this new dataset substantially improved the results, raising mAP by 8 and 11 percentage points over the DOM and original image datasets, respectively.
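Item (1) describes a yield model that combines the tree count with the number of fruits per tree. The Python sketch below assumes the simplest multiplicative form: the number of trees detected in the UAV imagery multiplied by the mean fruit count of a field-sampled subset of trees. The function `estimate_fruit_count` and this sampling scheme are hypothetical illustrations, not the authors' published model.

```python
from statistics import mean


def estimate_fruit_count(tree_count: int, fruits_per_sampled_tree: list[int]) -> float:
    """Estimate the total fruit count of a plot (hypothetical helper).

    Assumes yield scales linearly with tree count: the number of trees
    detected in the UAV image is multiplied by the mean number of fruits
    counted on a field sample of trees.
    """
    if tree_count < 0:
        raise ValueError("tree_count must be non-negative")
    if not fruits_per_sampled_tree:
        raise ValueError("at least one sampled tree is required")
    return tree_count * mean(fruits_per_sampled_tree)


# Illustrative numbers only: 120 detected trees, three trees sampled in the field.
print(estimate_fruit_count(120, [300, 250, 350]))
```

A multiplicative model like this keeps the detection and fruit-counting stages independent, so the tree count from the detector can be updated without re-sampling fruits.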

2. Materials and Methods

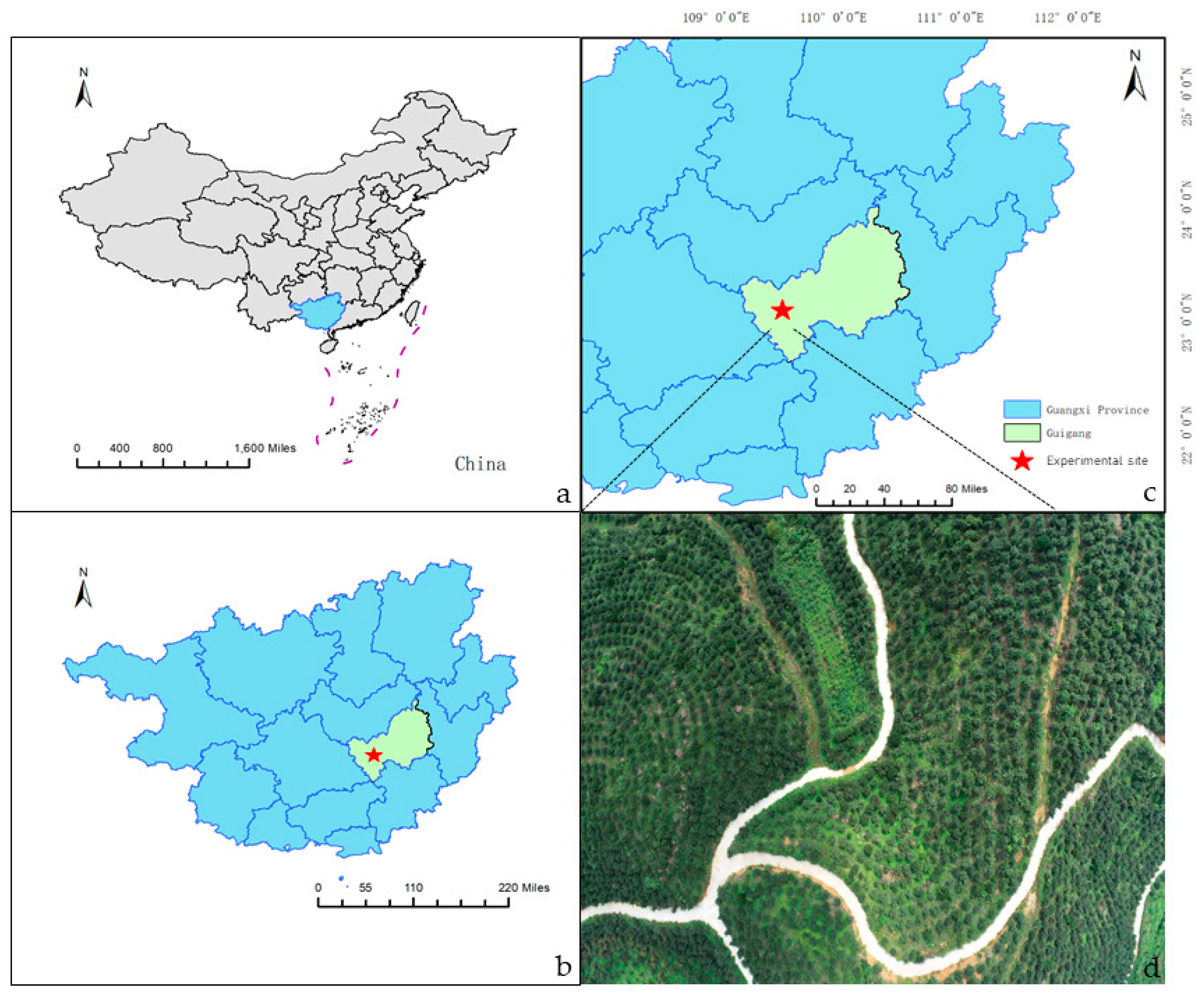

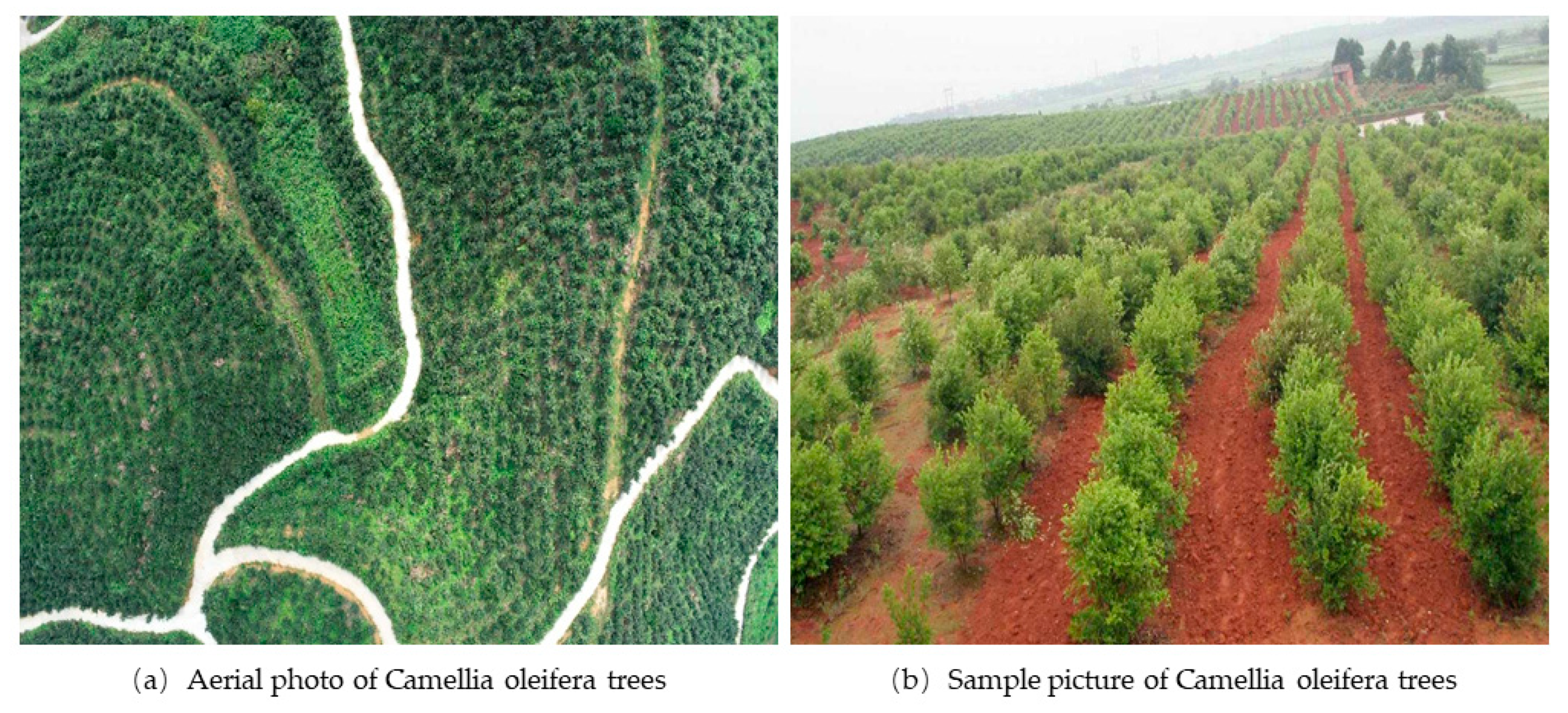

2.1. Survey Region

2.2. Data Collection

2.3. Data Preprocessing and Enhancement

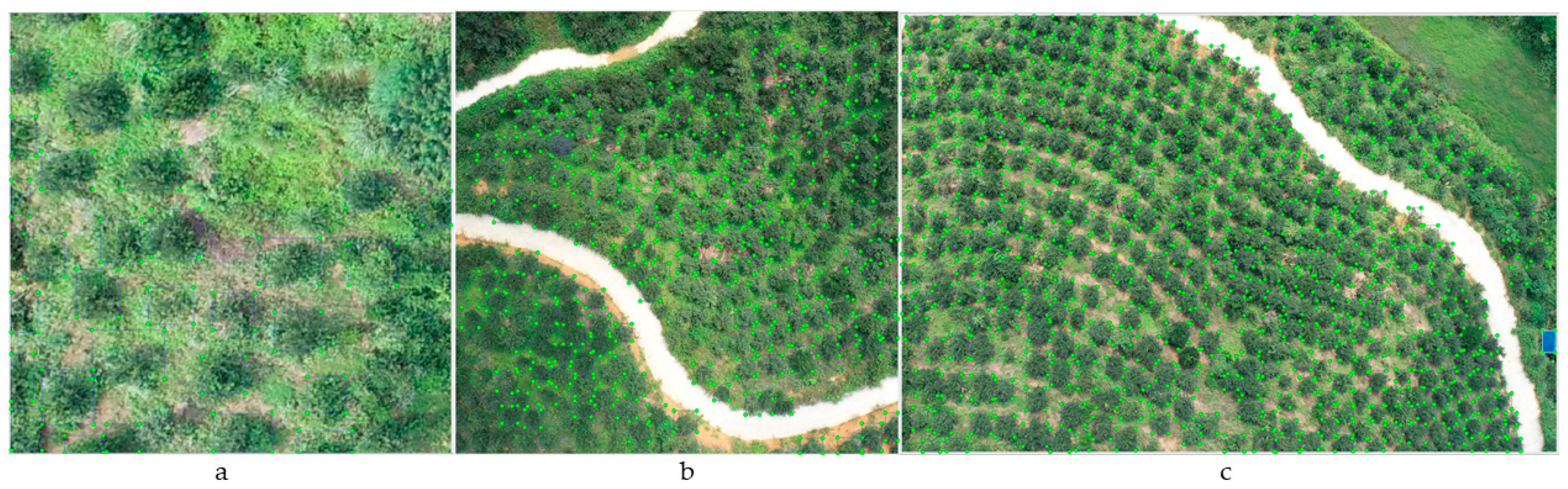

2.4. Dataset Construction and Annotation

- (1) DOM dataset: The DOM generated by Agisoft Metashape was cropped into 512 × 512 pixel tiles, yielding a total of 1200 images.

- (2) Original image dataset: A total of 2400 images with an original size of 5472 × 3648 pixels were cut to 1824 × 1824 pixels.

- (3) Cropped original image dataset: The top, bottom, left, and right margins of each original image were removed, each margin being one quarter of the corresponding dimension, so that the retained central region (2736 × 1824 pixels) is one quarter of the original area. A total of 1600 images were obtained.
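The three cropping schemes above amount to simple array slicing. The Python sketch below assumes images are loaded as NumPy arrays of shape (height, width, channels) and shows a non-overlapping tiler (as for the 512 × 512 DOM tiles and the 1824 × 1824 original-image tiles) and a centre crop that removes one quarter of each dimension from every side. The function names are hypothetical, and discarding incomplete edge tiles is an assumption; the source does not say how partial tiles were handled.

```python
import numpy as np


def tile_image(img: np.ndarray, tile: int) -> list[np.ndarray]:
    """Split an (H, W, C) image into non-overlapping tile x tile patches.

    Incomplete patches at the right and bottom edges are discarded
    (an assumption; the source does not specify edge handling).
    """
    h, w = img.shape[:2]
    return [
        img[y:y + tile, x:x + tile]
        for y in range(0, h - tile + 1, tile)
        for x in range(0, w - tile + 1, tile)
    ]


def center_crop_quarter(img: np.ndarray) -> np.ndarray:
    """Remove 1/4 of the height from the top and bottom and 1/4 of the
    width from the left and right, keeping the central half of each
    dimension (one quarter of the original area)."""
    h, w = img.shape[:2]
    dy, dx = h // 4, w // 4
    return img[dy:h - dy, dx:w - dx]


# A blank array with the UAV photo size: 5472 x 3648 pixels (H=3648, W=5472).
photo = np.zeros((3648, 5472, 3), dtype=np.uint8)
print(len(tile_image(photo, 1824)))      # 6 tiles (a 3 x 2 grid)
print(center_crop_quarter(photo).shape)  # (1824, 2736, 3)
```

Because 5472 and 3648 are exact multiples of 1824, each original photo divides into a 3 × 2 grid with no discarded pixels.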

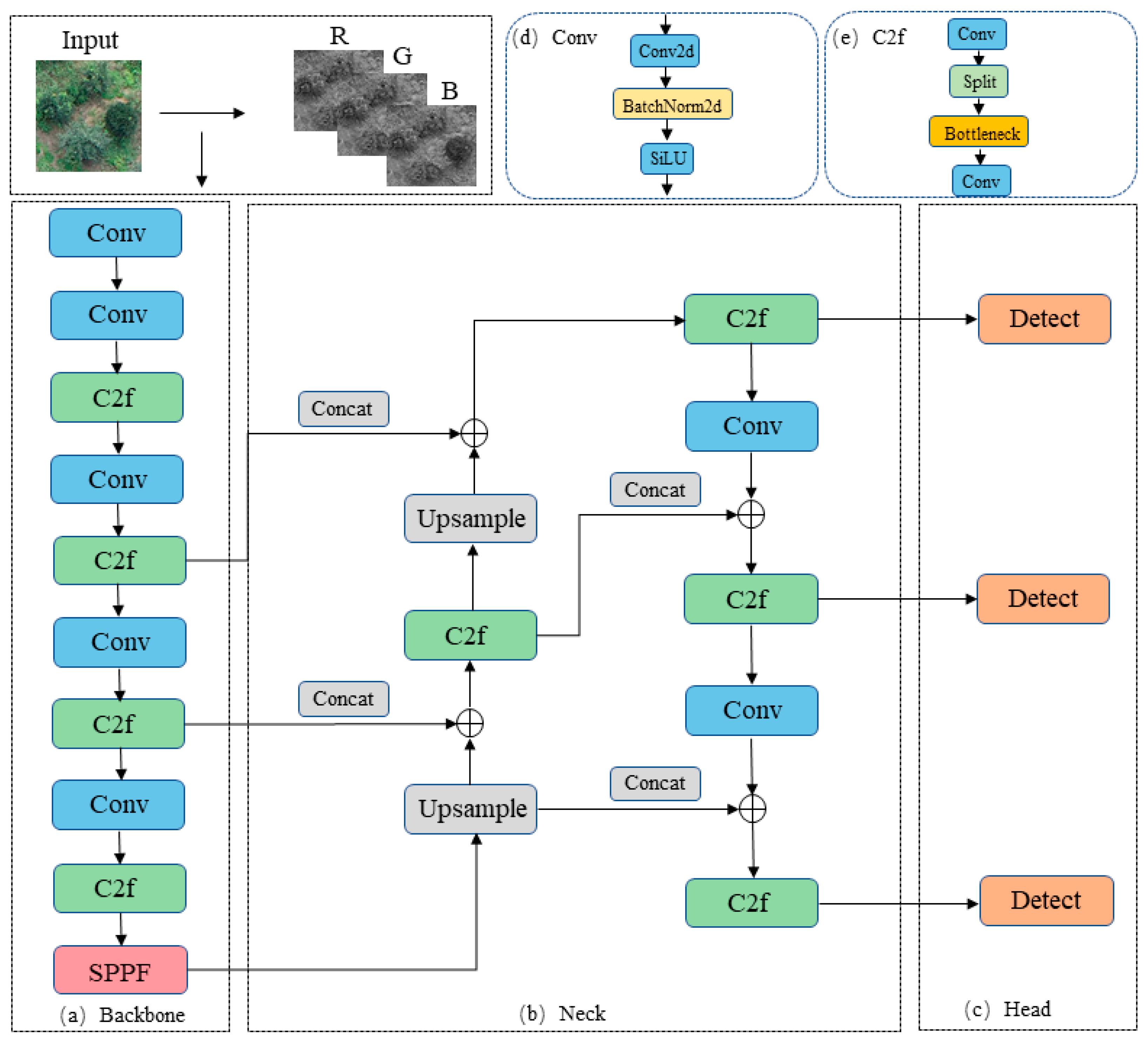

2.5. YOLOv8 Object Detection Model

3. Results

3.1. Experimental Environment

3.2. Evaluation Index

3.3. Experimental Results

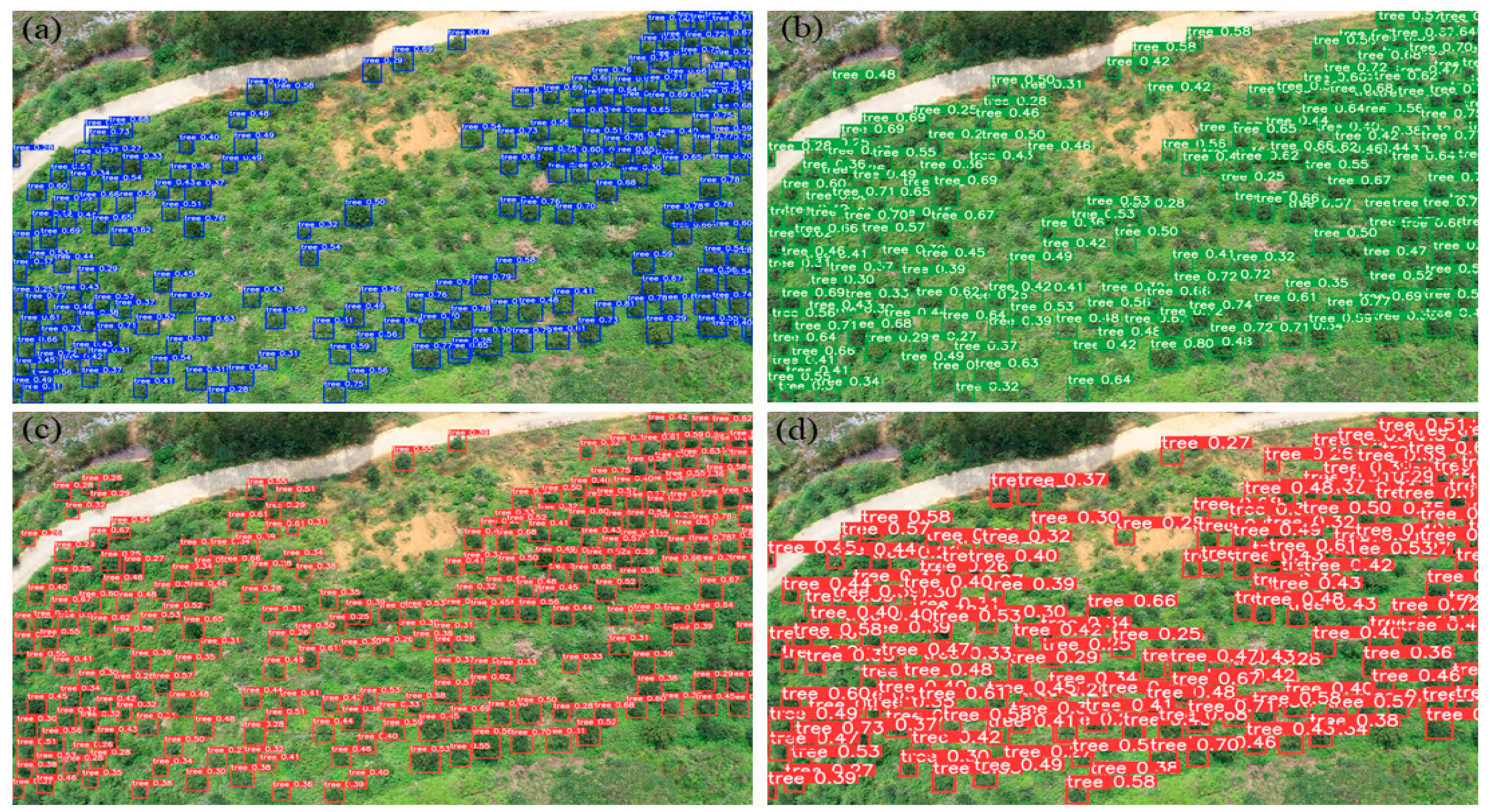

- (1) The image resolution was reduced during DOM generation, which led to poor recognition of Camellia oleifera trees in the DOM dataset;

- (2) The original images captured by the UAV suffered from edge distortion, which the mountainous terrain of the study area made more severe; as a result, the original image dataset performed worst of the three datasets;

- (3) Cropping one quarter of each dimension from the top, bottom, left, and right of the original images retained the full image resolution while reducing edge distortion, so the cropped original image dataset performed best.

4. Discussion

5. Conclusions

- (1) Three different Camellia oleifera tree datasets were designed and used to train the YOLOv8 model. The cropped original image dataset gave the best results: its mAP was 8 and 11 percentage points higher than those of the DOM dataset and the original image dataset, respectively.

- (2) When the cropped original image dataset was used to train YOLOv5, YOLOv7, YOLOv8, YOLOv9, and YOLOv10, YOLOv8 performed best. Its precision, recall, mAP50, and F1 score were all higher than those of the other four models, and its mAP reached 0.82, 3–8 percentage points higher than those of YOLOv5, YOLOv7, YOLOv9, and YOLOv10.

- (3) The number of Camellia oleifera trees was predicted in two regions, and regression analysis was performed between the predicted and measured values. The predicted values agreed closely with the measured values: R² was 0.93 for Area 1 and 0.95 for Area 2, an average of 0.94.
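Item (3) reports the coefficient of determination R² between predicted and measured values. The Python sketch below shows two common ways to compute it, assuming plain lists of per-plot counts: directly as 1 - SS_res/SS_tot, and as the R² of a least-squares line fitted to the (predicted, measured) pairs, which equals the squared Pearson correlation. The source does not state which variant was used, and the counts in the example are invented for illustration only.

```python
def r2_score(measured: list[float], predicted: list[float]) -> float:
    """Coefficient of determination computed directly: 1 - SS_res / SS_tot."""
    if len(measured) != len(predicted) or len(measured) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_y = sum(measured) / len(measured)
    ss_res = sum((y - p) ** 2 for y, p in zip(measured, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in measured)
    if ss_tot == 0:
        raise ValueError("measured values are all equal; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


def r2_of_linear_fit(measured: list[float], predicted: list[float]) -> float:
    """R^2 of a least-squares line fitted to (predicted, measured) pairs,
    which equals the squared Pearson correlation coefficient."""
    n = len(measured)
    mx = sum(predicted) / n
    my = sum(measured) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(predicted, measured))
    sxx = sum((x - mx) ** 2 for x in predicted)
    syy = sum((y - my) ** 2 for y in measured)
    return sxy * sxy / (sxx * syy)


# Invented per-plot tree counts, for illustration only.
measured_counts = [10, 20, 30, 40]
predicted_counts = [12, 18, 33, 39]
print(round(r2_score(measured_counts, predicted_counts), 3))          # 0.964
print(round(r2_of_linear_fit(measured_counts, predicted_counts), 3))  # 0.966
```

The two values differ because the fitted-line variant absorbs any systematic bias or scaling in the predictions, while the direct variant penalizes it.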

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Tang, N.; Tan, X.; Cai, Y.; He, M.Y.; Xiang, Z.Y.; Ye, H.; Ma, J.L. Characterizations and application potentials of the hemicelluloses in waste oil-tea camellia fruit shells from Southern China. Ind. Crops Prod. 2022, 178, 114551.

- Wu, D.L.; Fu, L.Q.; Cao, C.M.; Li, C.; Xu, Y.P.; Ding, D. Design and Experiment of Shaking-branch Fruit Picking Machine for Camellia Fruit. Trans. Chin. Soc. Agric. Mach. 2020, 51, 176–182.

- Jiang, H.; Hu, Y.; Jiang, X.; Zhou, H. Maturity Stage Discrimination of Camellia oleifera Fruit Using Visible and Near-Infrared Hyperspectral Imaging. Molecules 2022, 27, 6318.

- Ebneyamin, E.; Mansouri, P.; Rajabi, M.; Qomi, M.; Asgharian, R.; Azizian, Z. The Efficacy and Safety of Permethrin 2.5% with Tea Tree Oil Gel on Rosacea Treatment: A Double-Blind, Controlled Clinical Trial. J. Cosmet. Dermatol. 2020, 19, 1426–1431.

- Deng, Q.; Li, J.; Gao, C.; Cheng, J.; Deng, X.; Jiang, D.; Li, L.; Yan, P. New perspective for evaluating the main Camellia oleifera cultivars in China. Sci. Rep. 2020, 10, 20676.

- Chen, S.; Ji, L.; Yao, X.; Zhang, W.; Han, W.; Yin, L. Variation analysis on the main economic characters and nutrients of fruit from Camellia oleifera varieties. Non-Wood For. Res. 2022, 40, 1–9.

- Chen, X.; Chen, B.; Wang, M.; Li, J.; Zou, Y.; Rong, J.; Deng, Z. Comparative Nutrient Composition of Seed Oils of Wild Camellia oleifera with Different Single Kernel Masses Grown in Ten Regions. Food Sci. 2019, 40, 227–234.

- Wu, D.; Ding, D.; Cui, B.; Jiang, S.; Zhao, E.; Liu, Y.; Cao, C. Design and experiment of vibration plate type camellia fruit picking machine. Int. J. Agric. Biol. Eng. 2022, 15, 130–138.

- Wu, D.L.; Zhao, E.L.; Jiang, S.; Wang, W.W.; Yuan, J.H.; Wang, K. Optimization and Experiment of Canopy Vibration Parameters of Camellia oleifera Based on Energy Transfer Characteristics. Trans. Chin. Soc. Agric. Mach. 2022, 53, 23–33.

- Tran, H.T.; Balchanos, M.; Domerçant, J.C.; Mavris, D.N. A Framework for the Quantitative Assessment of Performance-Based System Resilience. Reliab. Eng. Syst. Saf. 2017, 158, 73–84.

- Rao, H.H.; Wang, Y.L.; Li, Q.S.; Wang, B.Y.; Yang, J.L.; Liu, M.H. Design and Experiment of Camellia oleifera Fruit Layered Harvesting Device. Trans. Chin. Soc. Agric. Mach. 2021, 52, 203–212.

- Wu, D.L.; Zhao, E.L.; Fang, D.; Jiang, S.; Wu, C.; Wang, W.W.; Wang, R.Y. Determination of Vibration Picking Parameters of Camellia oleifera Fruit Based on Acceleration and Strain Response of Branches. Agriculture 2022, 12, 1222.

- Wang, J.; Liu, R.; Zhang, Y.; Cheng, X.; Xu, J.; Liu, Y.; Chen, F. Analysis of Land-Use/Cover-Type Extraction Results of Tamarix Shrub Forest of China Based on Remote Sensing Technology. Forests 2024, 15, 1179.

- Rogan, J.; Chen, D. Remote sensing technology for mapping and monitoring land-cover and land-use change. Prog. Plan. 2004, 61, 301–325.

- Deng, L. The MNIST database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Process. Mag. 2012, 29, 141–142.

- Brown, J.C.; Kastens, J.H.; Coutinho, A.C.; de Castro Victoria, D.; Bishop, C.R. Classifying multiyear agricultural land use data from Mato Grosso using time-series MODIS vegetation index data. Remote Sens. Environ. 2013, 130, 39–50.

- Tyagi, A.C. Towards a Second Green Revolution. Irrig. Drain. 2016, 65, 388–389.

- Mubin, N.A.; Nadarajoo, E.; Shafri, H.Z.M.; Hamedianfar, A. Young and Mature Oil Palm Tree Detection and Counting Using Convolutional Neural Network Deep Learning Method. Int. J. Remote Sens. 2019, 40, 7500–7515.

- Zhang, Q.; Li, Z.; Xia, C.; Chen, J.; Peng, D. Tree Species Classification based on the New Bands of GF-6 Remote Sensing Satellite. J. Geo-Inf. Sci. 2019, 21, 1619–1628.

- Grabska, E.; Frantz, D.; Ostapowicz, K. Evaluation of Machine Learning Algorithms for Forest Stand Species Mapping Using Sentinel-2 Imagery and Environmental Data in the Polish Carpathians. Remote Sens. Environ. 2020, 251, 112103.

- Gené-Mola, J.; Gregorio, E.; Auat Cheein, F.; Guevara, J.; Llorens, J.; Sanz-Cortiella, R.; Escolà, A.; Rosell-Polo, J.R. Fruit Detection, Yield Prediction and Canopy Geometric Characterization Using LiDAR with Forced Air Flow. Comput. Electron. Agric. 2020, 168, 105121.

- Kim, S.; McGaughey, R.J.; Andersen, H.-E.; Schreuder, G. Tree Species Differentiation Using Intensity Data Derived from Leaf-on and Leaf-off Airborne Laser Scanner Data. Remote Sens. Environ. 2009, 113, 1575–1586.

- Kukkonen, M.; Maltamo, M.; Korhonen, L.; Packalen, P. Multispectral Airborne LiDAR Data in the Prediction of Boreal Tree Species Composition. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3462–3471.

- Lin, Y.; Hyyppä, J. A Comprehensive but Efficient Framework of Proposing and Validating Feature Parameters from Airborne LiDAR Data for Tree Species Classification. Int. J. Appl. Earth Obs. Geoinf. 2016, 46, 45–55.

- Zhang, S.; Qi, L.; Han, W.; Zhu, X.; Xi, X.; Wang, C.; Wang, P.; Nie, S. Danger tree detection and tree number estimation based on UAV LiDAR data. J. Univ. Chin. Acad. Sci. 2020, 37, 760–766.

- Yrttimaa, T.; Saarinen, N.; Kankare, V.; Liang, X.; Hyyppä, J.; Holopainen, M.; Vastaranta, M. Investigating the Feasibility of Multi-Scan Terrestrial Laser Scanning to Characterize Tree Communities in Southern Boreal Forests. Remote Sens. 2019, 11, 1423.

- Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

- Wu, C.; Jia, W.; Yang, J.; Zhang, T.; Dai, A.; Zhou, H. Economic Fruit Forest Classification Based on Improved U-Net Model in UAV Multispectral Imagery. Remote Sens. 2023, 15, 2500.

- Li, D.; Sun, X.; Jia, Y.; Yao, Z.; Lin, P.; Chen, Y.; Zhou, H.; Zhou, Z.; Wu, K.; Shi, L.; et al. A Longan Yield Estimation Approach Based on UAV Images and Deep Learning. Front. Plant Sci. 2023, 14, 1132909.

- Torres-Sánchez, J.; Souza, J.; di Gennaro, S.F.; Mesas-Carrascosa, F.J. Editorial: Fruit Detection and Yield Prediction on Woody Crops Using Data from Unmanned Aerial Vehicles. Front. Plant Sci. 2022, 13, 1112445.

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping Forest Tree Species in High Resolution UAV-Based RGB-Imagery by Means of Convolutional Neural Networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

- Xu, D.; Yang, Y. Extracting Individual Tree Crown Width from UAV Remote Sensing Images. Cent. South For. Inventory Plan. 2024, 43, 36–40.

- Park, J.Y.; Muller-Landau, H.C.; Lichstein, J.W.; Rifai, S.W.; Dandois, J.P.; Bohlman, S.A. Quantifying Leaf Phenology of Individual Trees and Species in a Tropical Forest Using Unmanned Aerial Vehicle (UAV) Images. Remote Sens. 2019, 11, 1534.

- Onishi, M.; Watanabe, S.; Nakashima, T.; Ise, T. Practicality and Robustness of Tree Species Identification Using UAV RGB Image and Deep Learning in Temperate Forest in Japan. Remote Sens. 2022, 14, 1710.

- Nguyen, H.T.; Caceres, M.L.L.; Moritake, K.; Kentsch, S.; Shu, H.; Diez, Y. Individual Sick Fir Tree (Abies mariesii) Identification in Insect Infested Forests by Means of UAV Images and Deep Learning. Remote Sens. 2021, 13, 260.

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190.

- Li, G.; Suo, R.; Zhao, G.A.; Gao, C.Q.; Fu, L.S.; Shi, F.X.; Dhupia, J.; Li, R.; Cui, Y.J. Real-time detection of kiwifruit flower and bud simultaneously in orchard using YOLOv4 for robotic pollination. Comput. Electron. Agric. 2022, 193, 106641.

- Ye, R.; Shao, G.; Gao, Q.; Zhang, H.; Li, T. CR-YOLOv9: Improved YOLOv9 Multi-Stage Strawberry Fruit Maturity Detection Application Integrated with CRNET. Foods 2024, 13, 2571.

- Qiu, X.; Chen, Y.; Cai, W.; Niu, M.; Li, J. LD-YOLOv10: A Lightweight Target Detection Algorithm for Drone Scenarios Based on YOLOv10. Electronics 2024, 13, 3269.

- Ma, N.; Wu, Y.; Bo, Y.; Yan, H. Chili Pepper Object Detection Method Based on Improved YOLOv8n. Plants 2024, 13, 2402.

- Chen, X.; Wang, C.; Liu, C.; Zhu, X.; Zhang, Y.; Luo, T.; Zhang, J. Autonomous Crack Detection for Mountainous Roads Using UAV Inspection System. Sensors 2024, 24, 4751.

| Research Object | Survey Region | Data Type | Accuracy |
|---|---|---|---|
| Forest | Alongshan Forestry Bureau | GF-6 WFV images | 68.74% |
| Forest | Polish Carpathians | Sentinel-2 images | 86.90% |
| Forest | Seurasaari Island | Airborne LiDAR | 92.50% |
| Forest | Southern boreal zone in Evo | Ground-based LiDAR | 82.33% |
| Forest | Forestry center | UAV images | 79.89% |
| Tropical forests | Barro Colorado Island | UAV images | 85.50% |
| Forest | Temperate forests in Japan | UAV images | 84.50% |
| Sick fir tree | Zao Mountain | UAV images | 85.70% |

| Parameters | Value |
|---|---|
| Data type | Optical image |
| Sensor | CMOS; 20 million effective pixels |
| Photo resolution | 5472 × 3648 |
| Maximum take-off altitude | 6000 m |
| Flight time | 30 min |
| Maximum working area | 1 km² |
| Maximum ascent speed | 6 m/s (automatic flight) |

| Dataset | Precision | Recall | F1 Score | mAP |
|---|---|---|---|---|
| DOM | 0.72 | 0.68 | 0.70 | 0.74 |
| Original Image | 0.64 | 0.69 | 0.66 | 0.71 |
| Cropped Original Image | 0.77 | 0.78 | 0.78 | 0.82 |

| Model | Precision | Recall | F1 Score | mAP |
|---|---|---|---|---|
| YOLOv5 | 0.75 | 0.77 | 0.75 | 0.79 |
| YOLOv7 | 0.76 | 0.77 | 0.76 | 0.77 |
| YOLOv8 | 0.77 | 0.78 | 0.78 | 0.82 |
| YOLOv9 | 0.74 | 0.72 | 0.73 | 0.76 |
| YOLOv10 | 0.68 | 0.74 | 0.71 | 0.74 |
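The F1 scores in the tables above are conventionally defined as the harmonic mean of precision and recall, F1 = 2PR/(P + R). The Python sketch below, assuming that standard definition, recomputes F1 from the precision and recall values of the dataset comparison table. Because those inputs are rounded to two decimals, a recomputed value can differ from the reported one by about 0.01; for instance, precision 0.77 and recall 0.78 for the cropped original image dataset give an F1 of 0.77 rather than the reported 0.78.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision/recall pairs copied from the dataset comparison table.
datasets = {
    "DOM": (0.72, 0.68),
    "Original Image": (0.64, 0.69),
    "Cropped Original Image": (0.77, 0.78),
}
for name, (p, r) in datasets.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}")
```

The harmonic mean is used rather than the arithmetic mean so that a model cannot score well by maximizing only one of precision or recall.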

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, R.; Yuan, D.; Zhao, M.; Zhao, Z.; Zhang, L.; Fan, Y.; Liang, G.; Zhou, Y. Camellia oleifera Tree Detection and Counting Based on UAV RGB Image and YOLOv8. Agriculture 2024, 14, 1789. https://doi.org/10.3390/agriculture14101789


Chicago/Turabian style: Yang, Renxu, Debao Yuan, Maochen Zhao, Zhao Zhao, Liuya Zhang, Yuqing Fan, Guangyu Liang, and Yifei Zhou. 2024. "Camellia oleifera Tree Detection and Counting Based on UAV RGB Image and YOLOv8" Agriculture 14, no. 10: 1789. https://doi.org/10.3390/agriculture14101789
