Abstract

Identifying the sex of chickens is a massive task in the poultry industry. To address the drawbacks of traditional manual sexing, which is time-consuming and laborious, a sex identification method for chicken embryos based on blood vessel images and deep learning was preliminarily investigated. In this study, we designed an image acquisition platform to capture clear blood vessel images against a black background. A total of 19,748 images of 3024 Jingfen No. 6 breeding eggs were collected from days 3 to 5 of incubation at Beijing Huadu Yukou Poultry Industry. After filtering, 16,761 images, labeled by color sexing of the 1-day-old chicks, constituted the dataset of this study. A sex identification model was proposed based on an improved YOLOv7 deep learning algorithm. The CBAM attention mechanism was introduced into YOLOv7 to improve the accuracy of sex identification of chicken eggs; BiFPN feature fusion was used in the neck network of YOLOv7 to fuse low-level and high-level features efficiently; and α-CIoU was used as the bounding box loss function to accelerate regression and improve the positioning accuracy of the bounding box. Results showed that a mean average precision (mAP) of 88.79% was achieved when modeling with blood vessel data from day 4 of incubation, with male and female eggs reaching 87.91% and 89.67%, respectively. Compared with the original YOLOv7 network, the mAP of the improved model increased by 3.46%. A comparison of target detection models showed that the mAP of our method was 32.49%, 17.17%, and 5.96% higher than that of SSD, Faster R-CNN, and YOLOv5, respectively. The average image processing time was 0.023 s. Our study indicates that blood vessel images combined with deep learning have great potential for the sex identification of chicken embryos.

1. Introduction

In large commercial hatcheries, sex identification of newly hatched chicks is generally accomplished by vent, color, or feather from new hatching lines. However, these methods can only discriminate sex after the 21-day incubation cycle, which is time- and energy-consuming. If the sex could be identified at an early embryonic stage or even before incubation, male eggs could be used as feed components, which is a safe and sound circular approach to nutrient use. Furthermore, fewer eggs would need to be incubated, saving feed space and resulting in lower CO2 emissions and energy consumption, which benefits farmers and the environment. Therefore, a method that can quickly and accurately identify male and female embryos at the early incubation stage should be studied.

Over the last few decades, researchers have attempted various strategies for the sex identification of chicken eggs before hatching or even before incubation [1]. FT-IR [2,3] and Raman [4,5,6] spectroscopy were used to detect male and female embryonic eggs and achieved high accuracy. However, these methods require perforation of the shell and are currently challenging to apply on a large scale in hatcheries. Non-destructive sex identification of chicken embryos has been performed with VIS/NIR spectroscopy, hyperspectral techniques, photoelectric detection, volatile organic compound (VOC) techniques, and computer vision. Pan [7] collected visible-NIR hyperspectral transmission images of breeder eggs from days 1 to 12 of incubation and established a discrimination model based on spectral information from the blunt end, tip, and middle of the eggs. The results showed that the artificial neural network (ANN) model based on the spectral information collected in the middle part of the male and female eggs had an accuracy of 82.86% on the 10th day of incubation. Göhler [8] used VIS/NIR spectra of layer lines with sex-specific down feather colors, achieving an overall accuracy of 97% for 14-day-old embryos. Li [9] used VIS/NIR transmission spectral data in the wavelength range of 500–900 nm to build a 6-layer convolutional neural network (CNN) that can distinguish the sex of duck eggs on day 7 of incubation. Alin [10] used opacity, defined as the amount of light lost when passing through the hatching egg sample, to sex chicken eggs before incubation. This approach obtained an accuracy of 84.0% on days 16 to 18 of incubation.

Similarly, the ratio of two wavelengths in the longitudinal visible transmission spectrum (T575/T598) was used to identify the sex of chicken embryos [11]. The potential of SPME/GC-MS as a non-destructive tool for characterizing the VOCs of male and female chicken eggs was also investigated [12,13,14]. The results showed that some odor components of the eggs differ significantly. Commercially applicable methods must be rapid enough for real-time applications, economically feasible, highly accurate, and ethically acceptable [15]. However, most of the abovementioned methods achieve good recognition results only in the middle and late stages of incubation.

Machine vision-based blood vessel detection can distinguish the sex of eggs in the early incubation stage. In [16], machine vision images of 186 chicken embryos were collected on the fourth day of incubation, 11-dimensional feature parameters were extracted, and a discriminative model based on a back propagation neural network (BPNN) reached an accuracy of 82.80%. In [17], the histogram of oriented gradients (HOG) and the gray-level co-occurrence matrix were used to extract blood vessel features, and the 96-dimensional features were simplified using sampling and principal component analysis (PCA) dimensionality reduction. The resulting deep belief network (DBN) model achieved accuracies of 76.67% (male) and 90% (female), with an average accuracy of 83.33%. In [18], image and spectral data from 566 chicken eggs were studied using random forests (RF) and the Dempster-Shafer (DS) theory of evidence. The machine vision model reached 78.00% accuracy with optimized parameters, while the spectral model reached 82.67% accuracy on the test set. The fusion model reached 88.00% accuracy, with 90.00% and 86.25% for female and male eggs, respectively. Overall, these results verify the feasibility of sex identification of chicken embryos based on blood vessel images and provide new ideas for designing subsequent algorithms. The shortcomings of existing sex identification methods based on blood vessel images include low recognition accuracy and slow detection speed. In addition, most sex identification studies were carried out in the laboratory and did not report results on a large dataset.

Target detection is a basic but important task in computer vision, which mainly involves recognizing and locating single or multiple targets of interest in digital images [19]. Sex identification of early embryonic chicken eggs can be considered a target-detection task. Deep learning-based target-detection algorithms can be divided into region-proposal-based models and region-proposal-free models. Typical region-proposal-based models include R-CNN [20], SPP-Net [21], Fast R-CNN [22], and Faster R-CNN [23]. Region-proposal-free models, such as SSD [24] and YOLO [25], are widely used in current target detection methods. The YOLO algorithm is a single-stage target detection algorithm that inputs the whole image into the network without generating candidate boxes and, after extracting features through the convolutional layers, directly predicts the position and class of each bounding box in the fully connected layer, which improves detection efficiency compared with other deep learning-based target detection algorithms. YOLOv4 [26], Scaled-YOLOv4 [27], PPYOLO [28], YOLOX [29], YOLOv5 [30], and YOLOR [31] were proposed in 2020 and 2021. YOLOv7 [32] was proposed in 2022 and outperforms most well-known object detectors, such as R-CNN, YOLOv4, YOLOR, and YOLOv5. It uses re-parameterization and dynamic label assignment to improve the learning ability of the network. Sex identification of chicken embryos based on object detection models, such as the YOLO series, can improve detection accuracy and detection speed to some extent.

This study develops an improved YOLOv7 framework to improve the detection accuracy and ensure the detection rate of sex identification for chicken embryos. First, we designed an image acquisition platform and carried out blood vessel image acquisition in the hatchery. Second, we introduced the CBAM attention mechanism to extract effective information for recognition and improve the performance of sex identification. Third, we integrated features of different scales with BiFPN, which improved attention to blood vessels. Moreover, α-CIoU was used to reduce the missed detection and false detection rates. Finally, the relationship between shape parameters and sex was analyzed. Using the blood vessel image dataset we created, we compared the performance of our method with the original YOLOv7, current deep learning target detection algorithms, and other existing research.

2. Materials and Methods

2.1. Data Collection

2.1.1. Materials and Instruments

All breeding eggs in this study belong to Jingfen No. 6 with white eggshells from Beijing Huadu Yukou Poultry Co., Ltd. (Beijing, China). Generally, 1-day-old chicks of Jingfen No. 6 are recognized by feather color from new hatching lines. Most female chicks were brown all over except for a light-yellow stripe down the back, brown on the head, light yellow on the body, or white on the head and brown on the body. In contrast, most newly born male chicks were light yellow all over but with one or more brown stripes on the back.

In our study, an electronic balance (Mengfu, range: 0–1 kg, accuracy: 0.01 g), vernier calipers, mesh pockets (20 × 35 cm), and image acquisition platforms were used. The electronic balance and vernier calipers were used for weighing and measuring the length and width of chicken eggs, respectively. The mesh pockets separated each egg on day 18 of incubation to distinguish the number of chicks after hatching.

2.1.2. Image Acquisition System

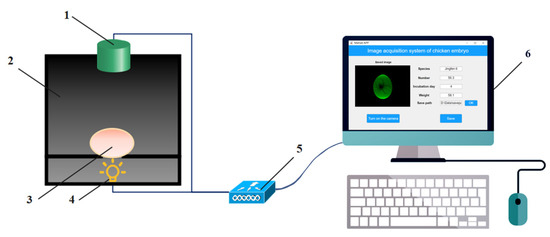

An image acquisition platform was designed and applied to collect transmission images of blood vessels (Figure 1). The platform consists of a CCD vision camera module (Q8s, Jieruiweitong, Shenzhen, China), a lighting box, an LED light (ZYG-L627, Xinyiguangdian, Shenzhen, China), an STM32 microcontroller (STM32F407ZGT6, Qimingxinxin, Fujian, China), and a PC (with dimensions of 180 mm × 150 mm × 210 mm).

Figure 1.

Diagram of chicken eggs image acquisition system (1. RGB camera module; 2. Lighting box; 3. Egg; 4. LED light; 5. Controller; 6. PC).

A camera module with a resolution of 3264 × 2448 pixels was used to collect the transmission images of egg samples. The lighting box was designed in SolidWorks and printed by a 3D printer (X2-200, Tiertime, Beijing, China) using black ABS (acrylonitrile-butadiene-styrene copolymer). The lighting box was divided into two parts by a carrier platform, which has a light-transmitting hole in the middle and an ellipsoidal slot at the upper end of the hole for placing the egg sample. The upper part was the darkroom, and the bottom part housed the light source. A green LED was chosen as the backlighting source because it has little effect on the chicken embryo in the early stages of incubation and improves egg-hatching quality [33,34,35,36]. A green LED with three power levels (1 W, 3 W, and 6 W) was installed at the bottom of the lighting box to obtain complete image information. The object distance of the image acquisition platform was 90 mm. The egg sample to be tested was placed laterally on the carrier platform for 1–2 min. Light from the source passed up through the carrier platform and the egg, and the images were acquired by the CCD camera module and transmitted to the PC via USB. The image acquisition software was designed with the MATLAB GUI tool on the PC to collect, display, and store chicken egg images. We tested the platform and optimized the box structure to accommodate transmission image acquisition for different egg sizes.

2.1.3. Data Acquisition Experiment

The field data acquisition experiment was conducted at Hall 3 of Beijing Huadu Yukou Poultry Co., Ltd., from 28 April to 23 May 2022. Two independent experiments were conducted, each with 1512 Jingfen No. 6 breeding eggs (3024 eggs in total). Figure 2 shows the flowchart of the image acquisition experiment. Since blood vessels start to develop on day 3 and occupy half of the egg by day 5 of incubation, images were collected from days 3 to 5 of incubation to obtain clear blood vessels. All eggs were stored for no more than three days before the experiment. Weight was measured to ±0.1 g, and length and width to ±0.1 mm. All acquired images were saved in JPEG format at 8-megapixel resolution (3264 × 2448 pixels).

Figure 2.

Flowchart of image acquisition experiment.

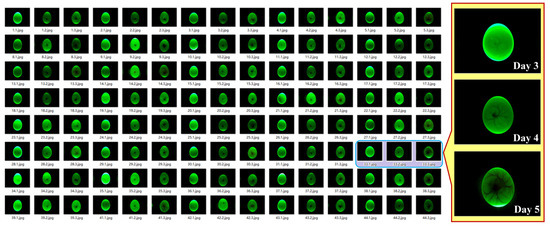

The specific experimental steps were as follows. (1) Thirty-six trays (1512 eggs) of non-deformed breeder eggs without dirty surfaces or sand shells were selected after steam sterilization, comprising 41–50 g (10 trays), 51–60 g (16 trays), and 61–70 g (10 trays) eggs. Each egg was numbered at the blunt end, the egg trays were marked to distinguish them, and the long and short axes and the weight were measured for four trays of each size. (2) The breeder eggs were arranged in the trays and placed in the incubation workshop for preheating. (3) Trays were placed in the middle area of the incubation cart (layers 5–10 of 15 layers in total) to reduce the differences in temperature and airflow to which the breeding eggs were exposed. The incubation temperature and relative humidity were 37.8 °C and 65.5%, respectively, and the eggs were automatically turned every 2 h. (4) On days 3, 4, and 5 of incubation, three experimenters operated the image acquisition platforms to collect blood vessel images (Figure 3) of the breeding eggs under appropriate light and at suitable angles, taking 1–3 images per egg per day. (5) On day 18 of incubation, the breeding eggs were placed in individual hatching bags and transferred to the hatcher. (6) On day 21 of incubation, the sex of the newly hatched chicks was determined from feather color by three experienced hatchery workers according to the standard process and recorded as the basis for the sex identification model. In our two experiments, blood vessel images were collected from 3024 incubated eggs, of which 2844 hatched after the 21-day incubation period. Of the 19,748 images collected, 17,269 were available for sex identification. As shown in Figure 3, the machine vision images were acquired with the eggs placed horizontally.

Figure 3.

Blood vessel images from days 3 to 5 of incubation.

2.2. Dataset Construction

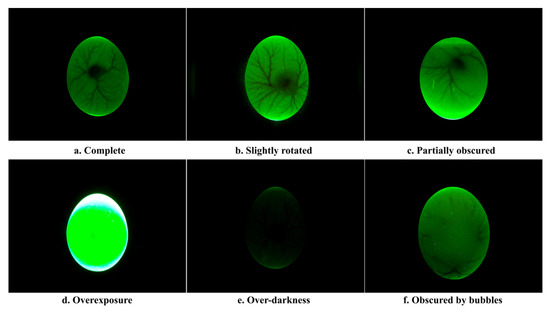

The first step in constructing the dataset was to filter the data. Figure 4 shows examples of accepted and rejected samples. Figure 4a presents complete blood vessels with some symmetry. Images in which the blood vessels were slightly rotated (Figure 4b) or partially obscured (Figure 4c) could still be used to build the dataset. However, images that were overexposed (Figure 4d) or too dark (Figure 4e) and therefore lacked detailed blood vessel information were removed from the dataset. In addition, images in which small air bubbles existed between the inner and outer shell membranes (Figure 4f) were also removed.

Figure 4.

Accepted and rejected image samples.

After manual screening, the rejected images comprised 231 overexposed images, 207 overly dark images, and 70 images obscured by bubbles. The accepted images comprised 11,968 with complete blood vessels, 3704 slightly rotated, and 1089 partially obscured. The distribution of the dataset is shown in Table 1. To train the YOLOv7 deep learning network, the blood vessel region in each image was annotated with labeling software, and the corresponding annotation files were obtained. To evaluate sex identification on days 3 to 5 of incubation, the dataset was divided into three training sets and three testing sets, one pair per day. This study randomly chose 1000 female and 1000 male eggs to form the training set. The remaining 844 eggs formed the test set of the sex identification model, of which 426 were male eggs and 418 were female eggs. Table 2 shows the number of eggs and images in the training and testing sets.
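The egg-level split described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the `split_by_egg` function, the `(egg_id, sex)` record layout, and the fixed random seed are assumptions. Splitting by egg rather than by image keeps all images of one egg on the same side of the split.

```python
import random

def split_by_egg(eggs, n_train_per_sex=1000, seed=0):
    """Split (egg_id, sex) records into train/test sets at egg level.

    eggs: list of (egg_id, sex) tuples with sex in {"female", "male"}.
    Returns (train_ids, test_ids) as sets of egg ids.
    """
    rng = random.Random(seed)  # fixed seed is an assumption, for repeatability
    train_ids = set()
    for sex in ("female", "male"):
        ids = sorted(egg_id for egg_id, s in eggs if s == sex)
        train_ids.update(rng.sample(ids, n_train_per_sex))
    test_ids = {egg_id for egg_id, _ in eggs} - train_ids
    return train_ids, test_ids

# Synthetic ids matching the reported counts: 2844 hatched eggs,
# 1426 male (1000 train + 426 test) and 1418 female (1000 train + 418 test).
eggs = [(i, "male") for i in range(1426)]
eggs += [(1426 + i, "female") for i in range(1418)]
train_ids, test_ids = split_by_egg(eggs)
print(len(train_ids), len(test_ids))
```

The image-level training and testing sets for each incubation day would then be obtained by assigning every image to the side its egg belongs to.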

Table 1.

Statistical table of the dataset.

Table 2.

Statistical table of training and testing sets.

2.3. YOLOv7 Deep Learning Network

Because the characteristics of the blood vessels of chicken eggs are not easily observed by the naked eye, deep learning-based algorithms were chosen to identify the sex of chicken embryos in this study, avoiding the inefficiency of traditional image feature extraction. Considering the number of parameters and the model’s performance, YOLOv7 was selected for modification. The whole network structure is divided into four parts: input, backbone, neck, and head. Because the YOLOv7 model takes color, texture, and other features into account during training, we did not perform any other image processing operations.

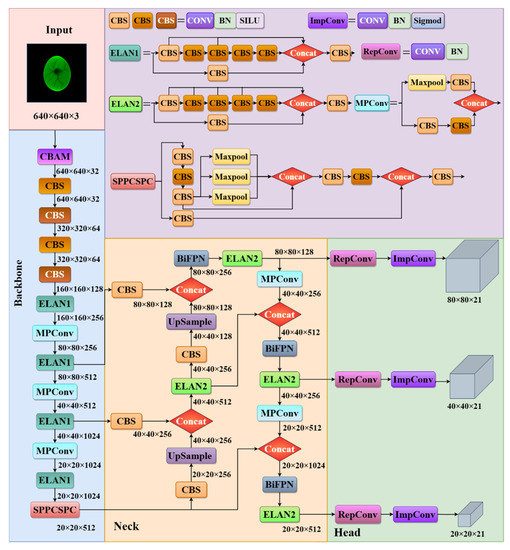

2.4. Improvement of the YOLOv7 Algorithm

A schematic diagram of the improved YOLOv7 for the sex identification of chicken eggs is shown in Figure 5. Since the background of the egg image is black, there is no need to use image segmentation to remove the background. No data augmentation was performed at the input. A blood vessel image of size 640 × 640 × 3 was input into the backbone. The network outputs feature maps at three scales, with sizes of (80 × 80 × 21), (40 × 40 × 21), and (20 × 20 × 21).
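The three output sizes can be reproduced with a short calculation. The sketch below assumes the usual YOLO head layout, which the text does not state explicitly: strides of 8, 16, and 32, three anchors per scale, and 5 + (number of classes) values per anchor, with two classes (male and female). The function name is hypothetical.

```python
def yolo_head_shapes(img_size=640, num_classes=2, anchors_per_scale=3,
                     strides=(8, 16, 32)):
    """Return (height, width, channels) of each detection-head output."""
    # 4 box terms + 1 objectness score + one score per class, per anchor
    channels = anchors_per_scale * (5 + num_classes)
    return [(img_size // s, img_size // s, channels) for s in strides]

print(yolo_head_shapes())
# [(80, 80, 21), (40, 40, 21), (20, 20, 21)]
```

Under these assumptions, the 21 channels correspond to 3 anchors × (4 box coordinates + 1 objectness + 2 classes).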

Figure 5.

The structure of the improved YOLOv7 network.

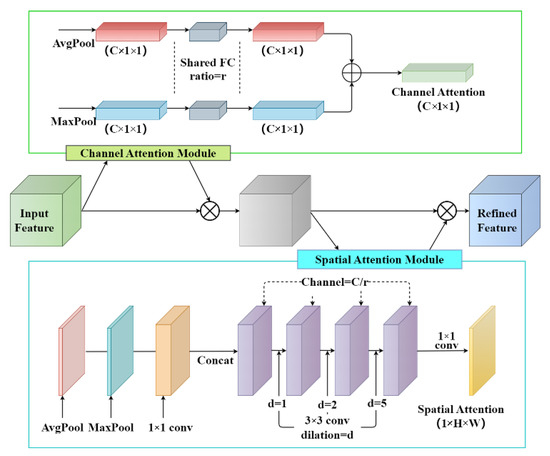

2.4.1. Backbone Attention Module

In YOLOv7, an innovative multi-branch stacking structure is used for feature extraction, and the skip connections of the model are denser. The Convolutional Block Attention Module (CBAM) is an effective attention module for feedforward convolutional neural networks that connects a spatial attention module after a channel attention module [37]. In the improved network, the attention module is added before the first CBS module of the backbone. We compared the effect of adding CBAM to different parts of the YOLOv7 network, such as the input, backbone, and head, and finally chose to add CBAM at the input side because it allows the network to focus better on important feature information when processing the input image, thus improving accuracy and precision. The structure of CBAM is shown in Figure 6. This design has two major advantages: first, the influence of interfering information is largely mitigated, which improves the extraction of the main features and, therefore, the performance of the whole network; second, the number of parameters added to the whole network is small.

Figure 6.

The structure of CBAM.

In CBAM, the input feature map was first processed by global max pooling and global average pooling, and the two results were fed into a two-layer shared fully connected network. The two outputs were then summed and passed through a sigmoid function to obtain the channel attention, which was multiplied elementwise with the input feature map to generate the input features required by the spatial attention module. Next, two feature maps were generated by channel-wise global max pooling and global average pooling and concatenated along the channel dimension. Finally, the spatial attention map was obtained by applying a convolution followed by the sigmoid activation function. The mathematical expressions of the channel and spatial attention modules are shown as Equations (1) and (2), respectively.

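$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_0(F^{c}_{avg})) + W_1(W_0(F^{c}_{max}))\big) \tag{1}$$

$$M_s(F) = \sigma\big(f^{7\times7}([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)])\big) = \sigma\big(f^{7\times7}([F^{s}_{avg}; F^{s}_{max}])\big) \tag{2}$$

where $\sigma$ stands for the sigmoid activation function, $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$ are the weights of the shared fully connected layers, and $r$ represents the reduction rate ($r = 2$ in this experiment). $f^{7\times7}$ represents a convolution operation with a filter size of 7 × 7.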

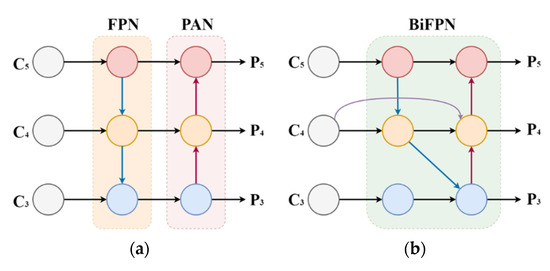

2.4.2. Multi-Scale Features Fusion

The purpose of feature fusion is to combine feature information at different scales. YOLOv7 uses the same FPN + PAN structure as YOLOv5 to fuse the feature maps obtained from the backbone feature extraction network. FPN is the enhanced feature extraction network of YOLOv7, and three feature layers are obtained in this part. Compared with YOLOv5, YOLOv7 replaces the CSP module with the ELAN2 module and performs downsampling with the MPConv layer. However, this method has certain drawbacks. Due to the large variation in target scale, the original feature fusion technique destroys the feature consistency of blood vessels at different scales. To solve this problem, this paper adopted the BiFPN feature fusion structure to improve the neck of the YOLOv7 network. The structures of PAFPN and BiFPN are shown in Figure 7.

Figure 7.

Comparison diagram of PAFPN and BiFPN neck network structures. (a) PAFPN; (b) BiFPN.

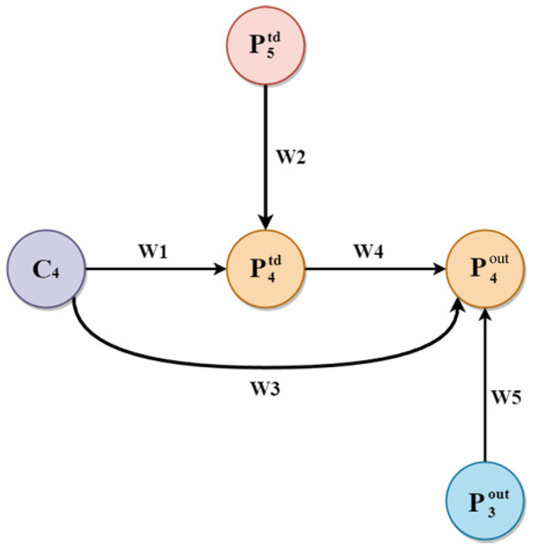

The original BiFPN network fuses the features from the feature extraction network directly with the features of corresponding size in the bottom-up path [38]. In this study, because the BiFPN module can be regarded as a basic unit, one BiFPN layer is stacked before the last three ELAN2 modules in the neck of YOLOv7. The bidirectional, cross-scale connection structure of BiFPN can efficiently extract more shallow semantic information without losing too much deep semantic information. It assigns different weights according to the importance of the different input features, and the structure is used repeatedly to strengthen feature fusion. The formula for weighted feature fusion is shown in Equation (3).

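$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \tag{3}$$

where $I_i$ and $O$ represent the input and output values, $\epsilon$ is a small constant that keeps the denominator away from zero and constrains the oscillation of the values, and $w_i$ and $w_j$ are the learnable weights. Taking the $P_4$ layer as an example, feature fusion was calculated as

$$P_4^{td} = \mathrm{Conv}\left(\frac{w_1 \cdot P_4^{in} + w_2 \cdot \mathrm{Resize}(P_5^{in})}{w_1 + w_2 + \epsilon}\right) \tag{4}$$

$$P_4^{out} = \mathrm{Conv}\left(\frac{w_1' \cdot P_4^{in} + w_2' \cdot P_4^{td} + w_3' \cdot \mathrm{Resize}(P_3^{out})}{w_1' + w_2' + w_3' + \epsilon}\right) \tag{5}$$

where $P_4^{td}$ is the intermediate feature of the fourth layer, $P_4^{out}$ is the output feature of the fourth layer, Conv represents the convolution operation, and Resize represents the up-sampling or down-sampling operation. Parameters and operations are shown in Figure 8.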
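The weighted fusion of Equation (3) can be illustrated with a few lines of plain Python. This is a sketch under stated assumptions, not the network code: feature maps are represented as flat lists of floats, the convolution and resize steps are omitted, the weights are clipped at zero before normalization as in the original BiFPN formulation, and ε = 1e-4 is an assumed value. The function name is hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Weighted fusion O = sum_i (w_i / (eps + sum_j w_j)) * I_i.

    inputs: list of equally sized feature maps, each a flat list of floats.
    weights: one learnable scalar per input; clipped at zero (ReLU) first.
    """
    w = [max(0.0, wi) for wi in weights]  # keep weights non-negative
    total = eps + sum(w)                  # eps avoids division by zero
    return [
        sum(wi * feat[k] for wi, feat in zip(w, inputs)) / total
        for k in range(len(inputs[0]))
    ]

p4_in = [1.0, 2.0, 3.0]
p5_up = [3.0, 2.0, 1.0]  # stands in for P5 already resized to P4 resolution
fused = fast_normalized_fusion([p4_in, p5_up], weights=[1.0, 1.0])
print(fused)
```

With equal weights the result is close to the elementwise mean of the two inputs; during training the weights would be learned so that more informative scales contribute more.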

Figure 8.

Parameters and processes.

2.4.3. Loss Algorithm

The loss function of the YOLOv7 model consists of three parts: localization loss ($L_{loc}$), confidence loss ($L_{conf}$), and classification loss ($L_{cls}$). Among them, the confidence loss and classification loss use binary cross-entropy loss, and the localization loss uses the CIoU loss function. The total loss is the weighted sum of the three losses. In this paper, α-CIoU [39] was chosen to replace CIoU as the regression loss of the prediction box. Unlike GIoU, α-CIoU considers the overlap rate and the center distance between the ground-truth box and the prediction box, and it introduces the parameter α on the basis of CIoU to solve the problem of GIoU degrading to IoU. The formula of α-CIoU is shown in Equation (6). Moreover, introducing the parameter α gave the detection head more flexibility in achieving regression accuracy for prediction boxes at different scales and reduced the interference of noise in prediction box regression.

$$L_{\alpha\text{-}CIoU} = 1 - IoU^{\alpha} + \frac{\rho^{2\alpha}(b, b^{gt})}{c^{2\alpha}} + (\beta v)^{\alpha} \tag{6}$$

where $\rho(b, b^{gt})$ is the Euclidean distance between the centers of the prediction box $b$ and the ground-truth box $b^{gt}$, $c$ is the diagonal length of the smallest box enclosing both, $\beta$ is a trade-off weight, and $v$ is the aspect ratio consistency parameter defined as in Equation (7)

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}, \qquad \beta = \frac{v}{(1 - IoU) + v} \tag{7}$$

From Equation (6), it can be seen that adding the exponent α increases the gradient of the loss and accelerates the convergence of the network when α > 1. The choice of α is not very sensitive to the dataset or the model. The gradient decreased significantly when α = 3, and the best-trained model was obtained [39]. Therefore, α was set to 3 in this paper.
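As an illustration of the loss described above, the sketch below evaluates the α-CIoU loss for a single pair of boxes in plain Python, following the published α-CIoU formulation [39]: one minus IoU raised to α, plus the normalized center distance raised to α, plus the aspect-ratio penalty raised to α. It is not the training code; the function name, the `(x1, y1, x2, y2)` box format, and the small `eps` stabilizer are assumptions.

```python
import math

def alpha_ciou_loss(box, gt, alpha=3.0, eps=1e-9):
    """alpha-CIoU loss for two boxes given as (x1, y1, x2, y2)."""
    # Intersection over union
    iw = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    ih = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = iw * ih
    w, h = box[2] - box[0], box[3] - box[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    iou = inter / (w * h + wg * hg - inter + eps)

    # Squared center distance (rho^2) and squared enclosing diagonal (c^2)
    rho2 = ((box[0] + box[2] - gt[0] - gt[2]) ** 2
            + (box[1] + box[3] - gt[1] - gt[3]) ** 2) / 4.0
    cw = max(box[2], gt[2]) - min(box[0], gt[0])
    ch = max(box[3], gt[3]) - min(box[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency v and trade-off weight beta
    v = (4.0 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    beta = v / (1.0 - iou + v + eps)

    return 1.0 - iou ** alpha + (rho2 / c2) ** alpha + (beta * v) ** alpha

print(alpha_ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # identical boxes
print(alpha_ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))      # disjoint boxes
```

Identical boxes give a loss near zero, while disjoint boxes give a loss above one because the center-distance term is added to the full IoU penalty.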

2.5. Experimental Setup and Evaluation Metrics

In this paper, the relationship between external morphological characteristics and the sex of chicken eggs was analyzed via SPSS version 26.0. The blood vessel image experiments were conducted on Ubuntu 18.04. We used Python (version 3.7.14), PyTorch (version 1.12.1), and CUDA (version 11.2) for YOLOv7 training. An Intel Core i9-10900X CPU, a TITAN RTX (24 GB) GPU, and 16 GB of RAM were used for training the detection model. The size of all images used for training in this experiment was 640 × 640, the batch size was 16, and the model was trained for 1000 epochs.

In this paper, we used precision (P), recall (R), average precision (AP), mean average precision (mAP), accuracy (Acc), processing time, and model size as the evaluation metrics for the sex identification algorithm of chicken eggs. The experimental results fall into four outcomes: True Positive (TP) refers to manually marked blood vessels being classified correctly; False Positive (FP) refers to an object incorrectly detected as blood vessels; True Negative (TN) refers to negative samples with a negative system prediction; and False Negative (FN) refers to missed blood vessels.
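For reference, the metrics named above can be computed as in the following sketch, which uses their standard definitions. The exact AP interpolation used in this study is not stated, so the all-point interpolation shown here is an assumption, and all function names are hypothetical.

```python
def precision(tp, fp):
    """P = TP / (TP + FP)."""
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    """R = TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0

def accuracy(tp, tn, fp, fn):
    """Acc = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)

def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).

    recalls must be sorted in increasing order.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision to its right
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

# Per-class AP values reported for day 4 (male, female), in percent
print(round(mean_average_precision([87.91, 89.67]), 2))
```

Averaging the reported male and female AP values in this way reproduces the day-4 mAP of 88.79%.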

3. Results and Discussion

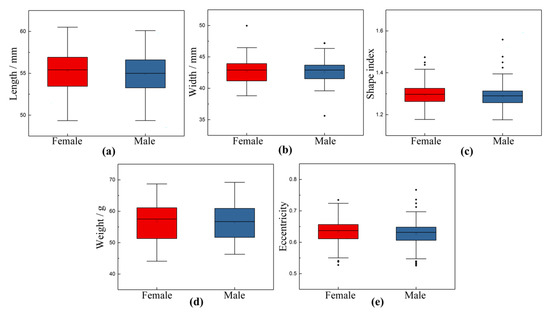

3.1. Relationship between External Morphological Characteristics and Sex of Chicken Eggs

The measurement and analysis of external morphological features has become a common tool for classifying and predicting outputs in poultry eggs [40]. In previous studies, the measurement of eggshell shape characteristics was adopted as a rapid and non-destructive method to distinguish between male and female eggs [41,42,43,44]. In our experiment, 1008 eggs of different sizes were selected, and their weight, length, and width were measured and recorded before incubation; 936 of these eggs (472 female and 464 male) hatched successfully. The correlation between external morphological characteristics and the sex of chicken eggs was investigated by measuring and calculating the length, width, shape index, weight, and eccentricity. Shape index and eccentricity were calculated by Equations (13) and (14), respectively.
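Equations (13) and (14) are not reproduced here. As a hedged illustration, the sketch below uses definitions that are common in the egg morphology literature and that are assumptions rather than the authors' confirmed formulas: shape index as width divided by length times 100, and eccentricity as that of an ellipse whose major and minor axes are the egg length and width. The function names and the example egg are hypothetical.

```python
import math

def shape_index(length_mm, width_mm):
    """Shape index as width / length * 100 (assumed definition)."""
    return width_mm / length_mm * 100.0

def eccentricity(length_mm, width_mm):
    """Eccentricity of an ellipse with axes length and width (assumed)."""
    return math.sqrt(1.0 - (width_mm / length_mm) ** 2)

# Hypothetical egg: 55.0 mm long and 42.5 mm wide
print(shape_index(55.0, 42.5), eccentricity(55.0, 42.5))
```

Under these assumed definitions, the two quantities carry the same information, since both depend only on the width-to-length ratio.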

All external morphological parameters were normally distributed (p > 0.05). The mean and standard deviation of the external morphological parameters of chicken eggs are shown in Table 3, and box plots of their distributions are shown in Figure 9. The length of female eggs ranged from 49.34 to 60.51 mm, with an average of 55.2755 mm. For male eggs in this batch, the average length was 54.9282 mm, ranging from 49.36 to 60.10 mm. On average, male eggs were about 0.628% shorter than female eggs. In addition, several studies used eccentricity to determine a specific threshold value to separate male and female eggs [42]. According to the statistics in this study, the eccentricity of female eggs ranged from 0.5276 to 0.7348, with an average of 0.6326, while that of male eggs ranged from 0.5256 to 0.7671, with an average of 0.6279. On average, the eccentricity of male eggs was only about 0.743% lower than that of female eggs.

Table 3.

Mean and standard deviation of morphological parameters of chicken eggs.

Figure 9.

Box plots of egg shape parameter distributions. (a) Length; (b) Width; (c) Shape index; (d) Weight; (e) Eccentricity.

The collected data were analyzed to determine the relationship between external morphological parameters and sex using Spearman rank correlation coefficients in the Statistical Package for Social Sciences (SPSS version 26.0) software. The Spearman rank correlation coefficients between sex and length, width, shape index, weight, and eccentricity were −0.040 (p = 0.471), 0.024 (p = 0.471), −0.090 (p = 0.859), 0.010 (p = 0.105), and 0.021 (p = 0.352), respectively. The results showed that the five parameters did not differ significantly between male and female eggs, which is consistent with the findings of Imholt [45]. Therefore, the morphological measurements of pre-hatched eggs might not indicate the sex of hatching chicks. Note, however, that this analysis covered only the external morphological features of Jingfen No. 6 chicken eggs. Since there are no external differences between male and female eggs, the sex identification model we proposed focuses on the internal features of the blood vessels of chicken embryos.
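The Spearman rank correlation used above is the Pearson correlation of the ranks of the two variables. The study computed it in SPSS; the pure-Python sketch below only illustrates the statistic, with hypothetical function names and made-up example values that are not taken from the dataset.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # mean of the tied 1-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)

# Made-up lengths (mm) with sex coded 0 = female, 1 = male
lengths = [55.3, 54.1, 56.0, 53.8, 55.9, 54.6]
sex = [0, 1, 0, 1, 0, 1]
print(spearman_rho(lengths, sex))
```

The made-up values are chosen only to exercise the function and show a strong negative correlation; the coefficients reported in this study are all close to zero.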

3.2. Algorithm Performance Evaluation

3.2.1. Results of Different Incubation Days

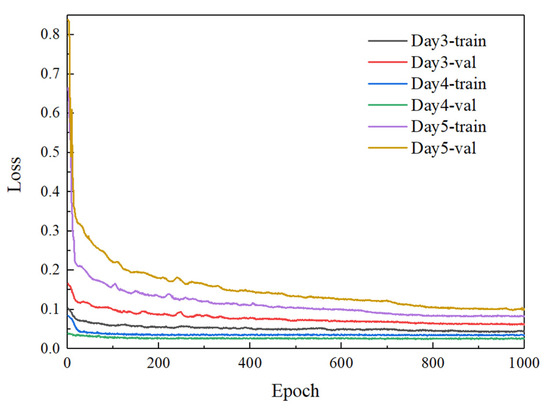

To determine the optimal day of incubation for blood vessel recognition and sex identification of chicken embryos, the improved YOLOv7 model was trained using the datasets of blood vessel images collected on days 3, 4, and 5 of incubation, respectively. After training, the loss values were plotted as curves so that the training process can be directly observed. Figure 10 depicts the loss curves of the improved YOLOv7 based on the blood vessel images from days 3 to 5 of incubation during the training and validation process. It can be seen from Figure 10 that the parameters tend to be stable after about 800 training epochs, and the loss value of the improved YOLOv7 decreases to about 0.04, 0.03, and 0.08 for days 3, 4, and 5, respectively. The analysis of the convergence of the loss function shows that the improved YOLOv7 network trained on day 4 images outperforms those trained on day 3 and day 5 images in terms of convergence speed, indicating a relatively ideal training result. The performance comparison of sex determination from days 3 to 5 of incubation is shown in Table 4.

Figure 10.

Comparison of loss graphs from days 3–5 of incubation.

Table 4.

Performance comparison of sex identification of chicken eggs at different days of incubation.

As shown in Table 4, the highest mAP for sex identification was 88.79% on day 4 of incubation, which was 2.98% and 6.50% higher than that of the datasets collected on days 3 and 5 of incubation, respectively. On day 3 of incubation, the blood vessels start to develop within a small area and show few features. On day 4 of incubation, the blood vessels develop rapidly and cover one side of the breeder egg. On day 5 of incubation, the blood vessels cover over half of the egg and contain complex features. Among the three days of incubation, the mAP of sex identification on day 5 was the lowest, probably due to the complex texture of the blood vessels on day 5 and the adhesion of blood vessels to the eggshell membrane. From the perspective of practical industrial applications, it is feasible to use embryonic vessel images from day 4 to construct a sex identification model for chicken eggs.

3.2.2. Impact of YOLOv7 Improvements on Sex Identification of Chicken Eggs

To further verify the effectiveness of the improved YOLOv7 algorithm in the sex identification of chicken eggs, it was compared with the original YOLOv7 target detection algorithm under the same experimental conditions. Four sets of ablation tests were conducted to analyze the effects of the CBAM attention module and BiFPN feature fusion on the sex identification results of chicken eggs. The results of the ablation tests are shown in Table 5.

Table 5.

Contribution of different modules to the YOLOv7 network.

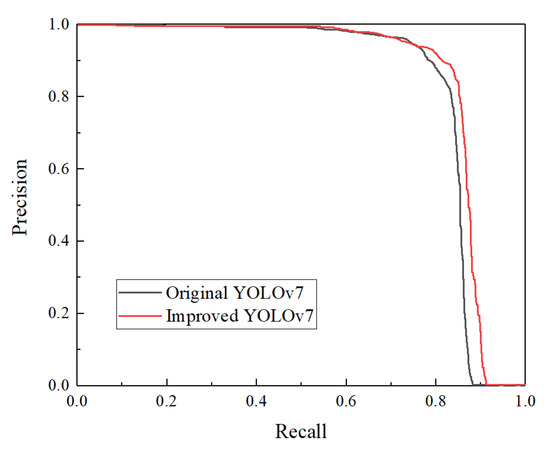

Compared with the original YOLOv7, the improved YOLOv7 introduces the CBAM attention mechanism together with feature fusion, extracting and aggregating features from multiple scales so that global and local features are fully fused and the feature expression ability of the network is enhanced. To verify the effectiveness of the CBAM attention module, the CBAM module was added before the first CBS in the backbone of the original YOLOv7 model. As shown in Table 5, the mAP with CBAM attention only was 87.11%, an improvement of 2.29%, indicating that introducing the CBAM attention module to distinguish the importance of channel features contributes to building a better sex identification model of chicken eggs. To explore the effectiveness of BiFPN feature fusion, the neck part was improved with the feature fusion network. The experiments showed that introducing BiFPN improved the mAP of the model by 1.83%. These results indicate that combining the CBAM attention mechanism and BiFPN feature fusion to improve YOLOv7 was effective and reliable. After adding both the CBAM attention mechanism and BiFPN feature fusion, the mAP increased from 84.82% to 88.28%, an increase of 3.46%. The precision-recall curves of the original and improved YOLOv7 algorithms are compared in Figure 11. It can be seen from the figure that the precision-recall curve of the improved YOLOv7 model is better than that of the original YOLOv7.

Figure 11.

Comparison of Precision-recall curve graphs before and after YOLOv7 improvements.

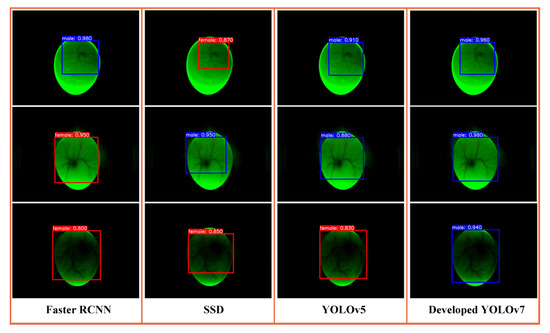
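For readers less familiar with how a precision-recall curve is reduced to a single number, the following is a minimal sketch of average precision (AP) as the area under the curve, and of mAP as the mean of the per-class AP values. The paper does not state which interpolation scheme it uses, so the all-point interpolation below is an assumption, and the two-point curve is toy data chosen only for illustration. The per-class values 87.91% and 89.67% are the male and female figures reported for day 4 of incubation.

```python
from typing import Sequence


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under a precision-recall curve using all-point interpolation.

    `recalls` must be sorted in increasing order, with `precisions` aligned to it.
    """
    # Add sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]

    # Replace each precision with the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])

    # Sum the rectangle areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy curve (illustrative only): precision 1.0 up to recall 0.5, then 0.5 up to recall 1.0.
toy_ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(f"Toy AP: {toy_ap:.2f}")

# Per-class values reported for day 4 (male, female), in percent.
reported_map = mean_average_precision([87.91, 89.67])
print(f"mAP from reported per-class values: {reported_map:.2f}%")
```

Under these assumptions, a curve that sits higher at every recall value yields a larger AP, which is why the improved model's curve in Figure 11 corresponds to a higher mAP.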

3.3. Comparison of Different Object Detection Algorithms

To evaluate the performance of the proposed sex identification algorithm, four deep learning models were compared: Faster R-CNN, SSD, YOLOv5, and the improved YOLOv7. All models were trained on blood vessel images from day 4 of incubation, with the hyperparameters kept as consistent as possible during training, and the test set was used to evaluate model performance. The detection results for male eggs using these models are shown in Figure 12. All four models were able to detect blood vessel regions. However, Faster R-CNN produced false detections on day 4 and day 5 of incubation, and SSD missed the blood vessel region. The YOLO models identified the blood vessel region more accurately and differentiated between the sexes.

Figure 12.

Comparison of the effects of different detection models.

The P, R, AP, mAP, processing time, and model size of the above target detection models for sex identification of chicken embryos are shown in Table 6. Among the four target detection models, the mAP of SSD and Faster R-CNN was only 56.30% and 71.62%, respectively. Compared with SSD and Faster R-CNN, YOLOv5 and the improved YOLOv7 achieved better performance. The mAP of the improved YOLOv7 was 5.97% higher than that of YOLOv5, a marked improvement in detecting the blood vessels of chicken embryos. The comparison shows that the proposed algorithm has higher P, R, AP, and mAP values and a stronger ability to detect and classify blood vessels.

Table 6.

Performance comparison of five detection algorithms.

Moreover, the processing time per image was 23.90 ms with our method, which is 7.35 ms less than with YOLOv5. This indicates that our method could meet the demand for real-time sex determination of chicken embryos. In addition, the size of the improved model increased by 1.7 MB but remained the smallest among these models. Overall, the proposed model achieved better performance for the sex determination of chicken embryos.
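To put the per-image processing times in throughput terms, the sketch below converts latency to images per second. It assumes images are processed one at a time with no batching, and it ignores image acquisition and egg handling time, so the figures are upper bounds on model throughput rather than measured line rates. The YOLOv5 latency is derived from the stated 7.35 ms difference rather than quoted directly.

```python
def images_per_second(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / latency_ms


# Per-image processing time of the improved YOLOv7, as reported (ms).
OURS_MS = 23.90

# YOLOv5 latency derived from the reported difference of 7.35 ms.
YOLOV5_MS = round(OURS_MS + 7.35, 2)

ours_rate = images_per_second(OURS_MS)
yolov5_rate = images_per_second(YOLOV5_MS)

print(f"Improved YOLOv7: {OURS_MS:.2f} ms/image, about {ours_rate:.1f} images/s")
print(f"YOLOv5:          {YOLOV5_MS:.2f} ms/image, about {yolov5_rate:.1f} images/s")
print(f"Relative speed-up: {YOLOV5_MS / OURS_MS:.2f}x")
```

Under these assumptions the improved model handles roughly 42 images per second against roughly 32 for YOLOv5.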

3.4. Comparison with Existing Research

Several studies have investigated sex identification methods using blood vessel images of chicken eggs. The proposed algorithm was compared with these existing studies in terms of the accuracy of sex determination based on blood vessel images, and the results are given in Table 7.

Table 7.

Performance comparison with sex identification approaches using blood vessel images.

Our proposed model has the highest average accuracy of 89.25%, with 90.32% and 88.18% for female and male eggs, respectively. Moreover, the proposed method, based on the improved YOLOv7, has a shorter discrimination time for sex identification of chicken embryos than previous studies. The experimental results and a detailed comparative analysis of all methods demonstrated the superiority of the improved YOLOv7 model. The comparison therefore makes clear that the improved model in this study has advantages in detection accuracy, efficiency, and stability for sex identification during incubation. Most earlier methods were evaluated using limited data, and the actual test set contained a relatively small number of egg samples.

Meanwhile, all egg samples in previous studies were incubated in the laboratory. In our study, all images in the dataset were collected from a large-scale breeder hatchery with balanced data, and the experimental procedure was more in line with farm norms, which can provide a practical reference for future studies. Extensive experiments were conducted on a relatively large dataset, considering several factors, to determine the best-performing model for the sex identification of chicken embryos.
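The reported average accuracy of 89.25% equals the unweighted mean of the female (90.32%) and male (88.18%) accuracies, which matches overall accuracy only when the two classes are equally represented, as the balanced dataset suggests. The sketch below illustrates this. The sample counts are hypothetical and are not taken from the study.

```python
from typing import Dict


def overall_accuracy(acc_percent: Dict[str, float], counts: Dict[str, int]) -> float:
    """Overall accuracy (%) from per-class accuracies (%) and per-class sample counts."""
    total = sum(counts.values())
    correct = sum(acc_percent[c] / 100.0 * counts[c] for c in acc_percent)
    return 100.0 * correct / total


# Per-class accuracies reported in the comparison with existing research.
reported_acc = {"female": 90.32, "male": 88.18}

# Hypothetical test-set sizes, for illustration only.
balanced_counts = {"female": 500, "male": 500}
imbalanced_counts = {"female": 700, "male": 300}

balanced = overall_accuracy(reported_acc, balanced_counts)
imbalanced = overall_accuracy(reported_acc, imbalanced_counts)

print(f"Balanced classes:   {balanced:.2f}%")
print(f"Imbalanced classes: {imbalanced:.2f}%")
```

With equal class sizes the result reproduces the reported 89.25%, whereas an imbalanced test set would shift the overall figure toward the accuracy of the larger class.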

The proposed sex identification model of chicken embryos could be embedded into an automatic egg candling machine to identify the sex of chicken embryos on day 4 of incubation. Sex identification could also be achieved by modifying the incubation facility and adding image sensors and edge computing equipment. A weakness of the study is that the model is not yet accurate enough to meet the practical accuracy requirements of poultry farms. In the future, we intend to improve and validate our method using multi-sensing techniques combined with large datasets. It should also be noted that eggshell quality screening, egg storage, and incubation followed the hatchery standard. The performance of the proposed sex identification model under different incubation conditions, egg storage durations, and eggshell qualities should be further validated. In addition, the eggs selected in our study were of good eggshell quality. Dark spots or sandy shells might affect image recognition, which needs further study.

4. Conclusions

Early and non-destructive sex identification of chicken eggs is a huge challenge for the poultry industry. In this study, a method based on blood vessel images and an improved YOLOv7 deep learning algorithm for the sex identification of chicken eggs was proposed and validated on a single breed in a hatchery. A machine vision image acquisition platform was constructed to acquire blood vessel images from day 3 to day 5 of incubation. The CBAM attention mechanism and BiFPN were introduced to improve the YOLOv7 deep learning algorithm. The test results showed that the mAP of the algorithm was 3.46% higher than that of the original YOLOv7. A comparison with typical object detection algorithms indicated that the accuracy of our method was higher than that of Faster R-CNN, SSD, and YOLOv5, supporting its accuracy and robustness for the sex identification of chicken eggs.

Further, the improved YOLOv7 was used to construct sex identification models for chicken embryos on day 3, day 4, and day 5 of incubation. The mAP reached 88.79% on day 4 of incubation, with a correct discrimination rate of 87.91% for male eggs and 89.67% for female eggs. The results of this study show that the combination of deep learning and blood vessel images can identify the sex of chicken embryos. Future work will focus on time-varying methods for the sex identification of chicken eggs and on combining them with other non-destructive methods, such as near-infrared spectroscopy and hyperspectral imaging, to improve the sex identification accuracy. Different breeds, storage durations, hatching conditions, and shell qualities also need to be considered in further experiments.

Author Contributions

N.J.: Methodology, Investigation, Writing—original draft. B.L.: Conceptualization, Writing—review & editing, Supervision. Y.Z.: Software, Writing—review & editing. S.F.: Resources, Writing—review & editing. J.Z.: Formal analysis, Data curation. H.W.: Visualization, Data curation. W.Z.: Investigation, Project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially funded by the Science and Technology Innovation Capacity Development Program of Beijing Academy of Agriculture and Forestry Sciences (No. KJCX20230204), the Postdoctoral Science Foundation of Beijing Academy of Agriculture and Forestry Sciences of China (No. 2021-ZZ-031), the Beijing Postdoctoral Fund (No. 2022-ZZ-118), and the Agricultural Science and Technology Demonstration and Extension Project of Beijing Academy of Agriculture and Forestry Sciences (No. JP2023-06).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors thank the funding organizations for their financial support. The authors also thank Ruiqiao Liu for her professional guidance on the experimental site and chick sexing.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).