Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Experimental Design

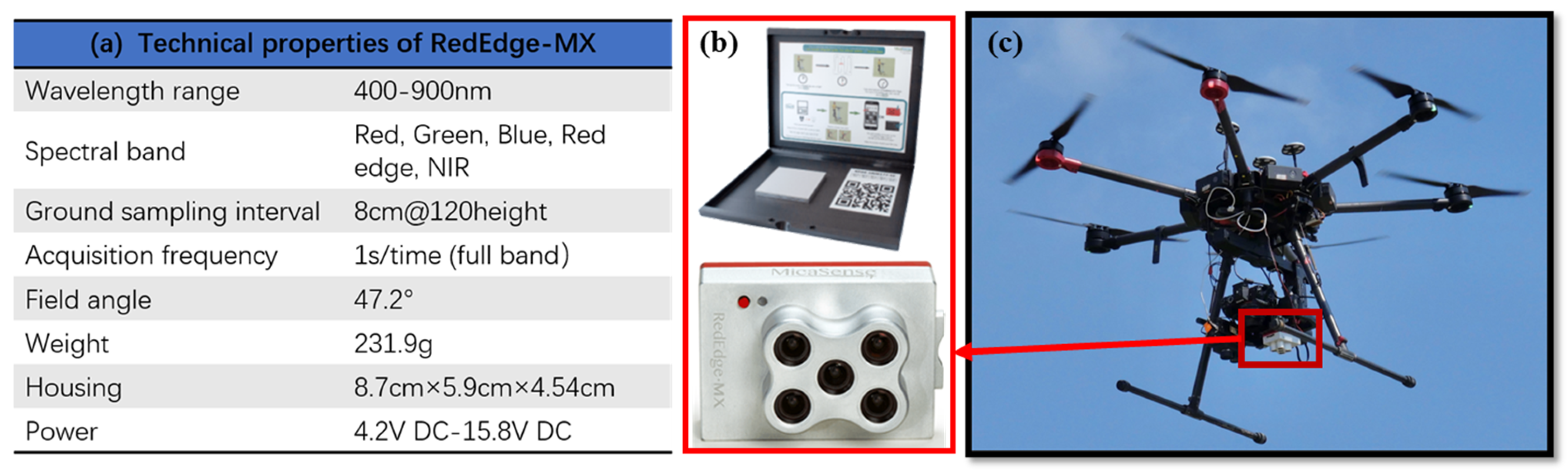

2.2. Data Acquisition

2.2.1. Canopy Spectral Data

2.2.2. Plant Measurements

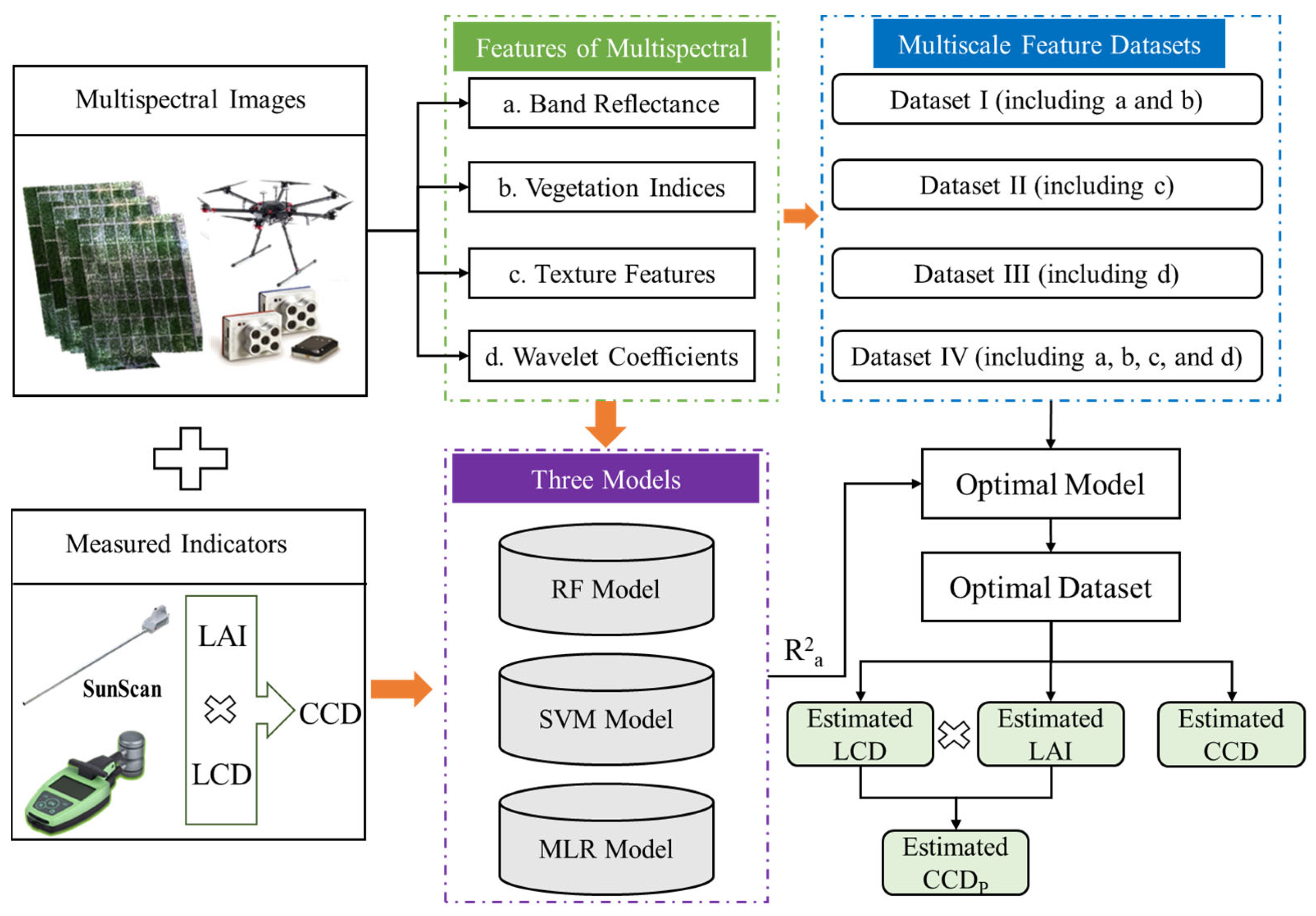

2.3. Data Processing

- (1)

- Three types of image features were extracted from the multispectral features, and four multiscale datasets were constructed based on these three types of features;

- (2)

- Combining the estimation accuracy and efficiency of LCD, LAI, and CCD, the best estimation model was selected;

- (3)

- Based on the optimal model, the optimal fusion feature set for estimating CCD was evaluated;

- (4)

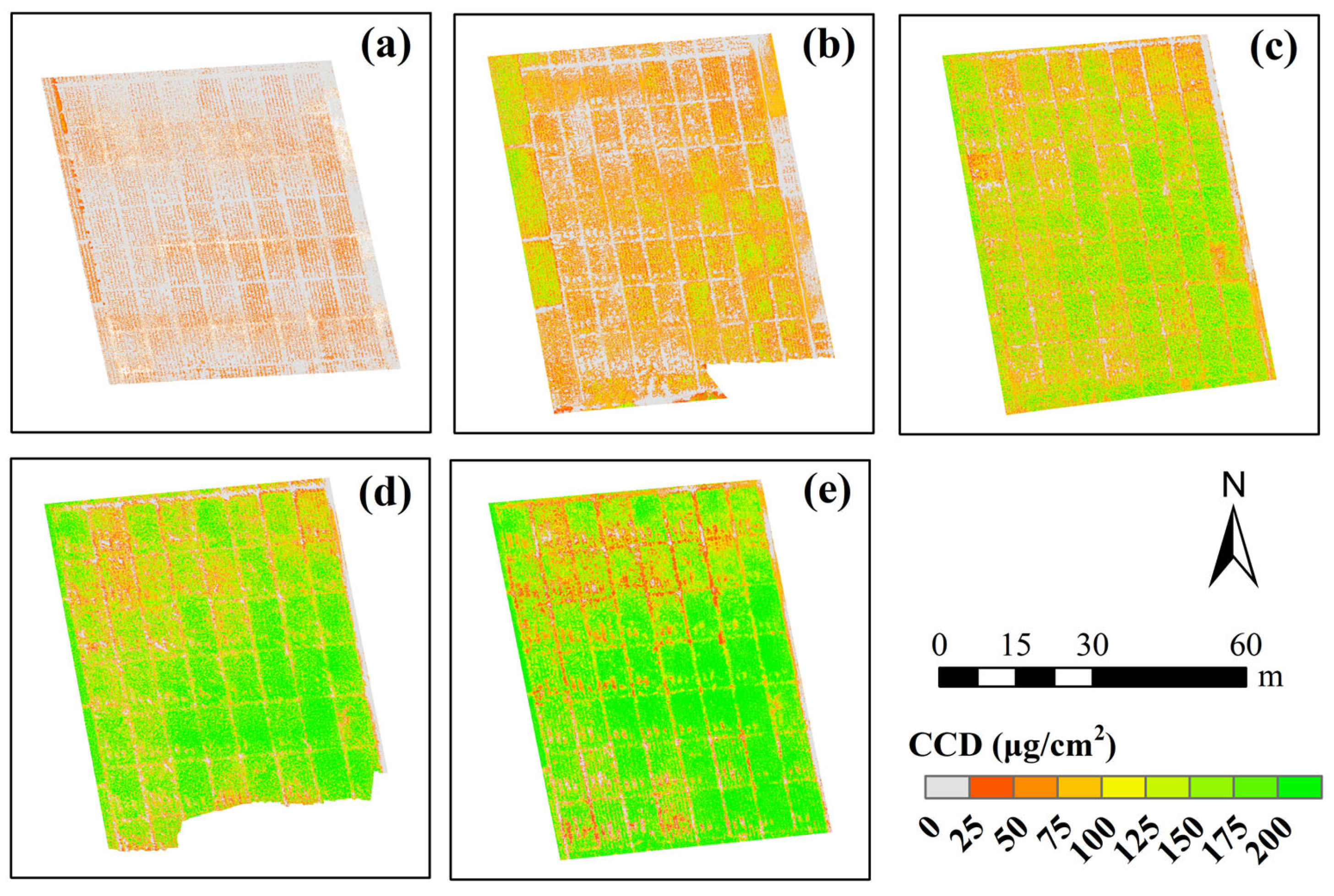

- After comparing the two CCD estimation results, the CCD mapping of the UAV scale was completed.

2.4. Multi-Scale Image Features

2.4.1. Vegetation Indices (VIs)

2.4.2. Texture Features

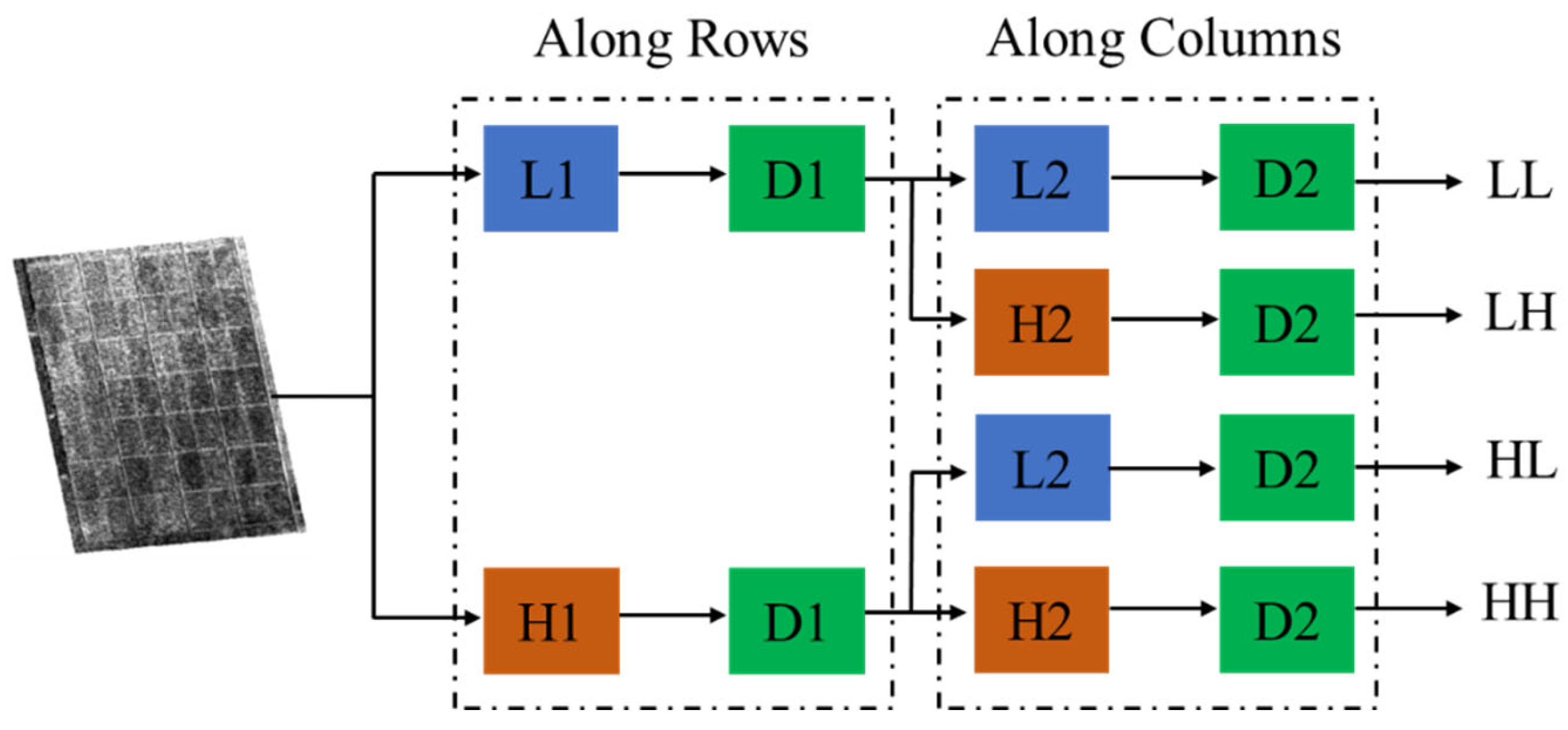

2.4.3. Discrete Wavelet Transformation (DWT)
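As an illustration of the DWT feature type, the sketch below performs a one-level 2-D Haar decomposition of a single-band image patch into four sub-bands (LL, LH, HL, HH) and summarizes each sub-band by its mean and standard deviation. The wavelet family (Haar), the single decomposition level, the sub-band naming order, and the choice of mean/standard deviation as features are all assumptions made for this example; `haar_dwt2` and `subband_features` are hypothetical helper names, not the authors' code.

```python
import math
from typing import Dict, List, Tuple

Grid = List[List[float]]
S = 1.0 / math.sqrt(2.0)  # orthonormal Haar filter coefficient


def _split_rows(m: Grid) -> Tuple[Grid, Grid]:
    """Low- and high-pass filter each row, keeping every second sample."""
    low = [[(r[k] + r[k + 1]) * S for k in range(0, len(r) - 1, 2)] for r in m]
    high = [[(r[k] - r[k + 1]) * S for k in range(0, len(r) - 1, 2)] for r in m]
    return low, high


def _transpose(m: Grid) -> Grid:
    return [list(col) for col in zip(*m)]


def haar_dwt2(img: Grid) -> Tuple[Grid, Grid, Grid, Grid]:
    """One-level 2-D Haar DWT returning (LL, LH, HL, HH).

    First letter = filter applied along rows, second = along columns.
    A trailing odd row or column is dropped for simplicity.
    """
    row_low, row_high = _split_rows(img)
    ll_t, lh_t = _split_rows(_transpose(row_low))
    hl_t, hh_t = _split_rows(_transpose(row_high))
    ll, lh, hl, hh = (_transpose(b) for b in (ll_t, lh_t, hl_t, hh_t))
    return ll, lh, hl, hh


def subband_features(img: Grid) -> Dict[str, float]:
    """Hypothetical per-sub-band summary: mean and population std."""
    feats: Dict[str, float] = {}
    for name, band in zip(("LL", "LH", "HL", "HH"), haar_dwt2(img)):
        vals = [v for row in band for v in row]
        mu = sum(vals) / len(vals)
        feats[f"{name}_mean"] = mu
        feats[f"{name}_std"] = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
    return feats
```

Because the filters are orthonormal, the total energy of the four sub-bands equals that of the input patch, so the LL band carries the smoothed canopy signal while LH, HL and HH isolate edge-like detail at the next coarser scale.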

2.4.4. Construction of a Multi-Scale Image Feature Dataset

2.5. Estimation Methods

2.6. Precision Evaluation

3. Results and Analysis

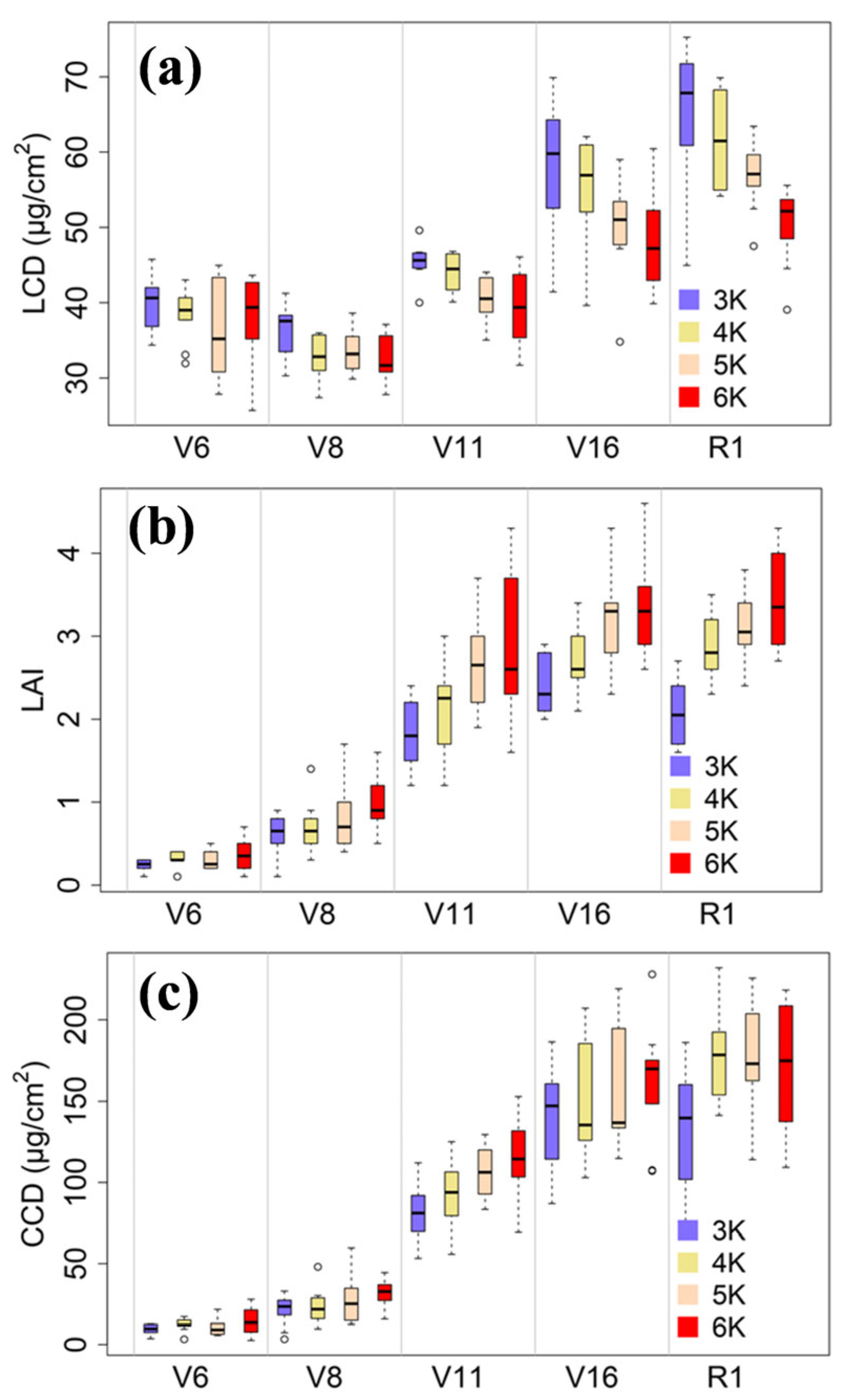

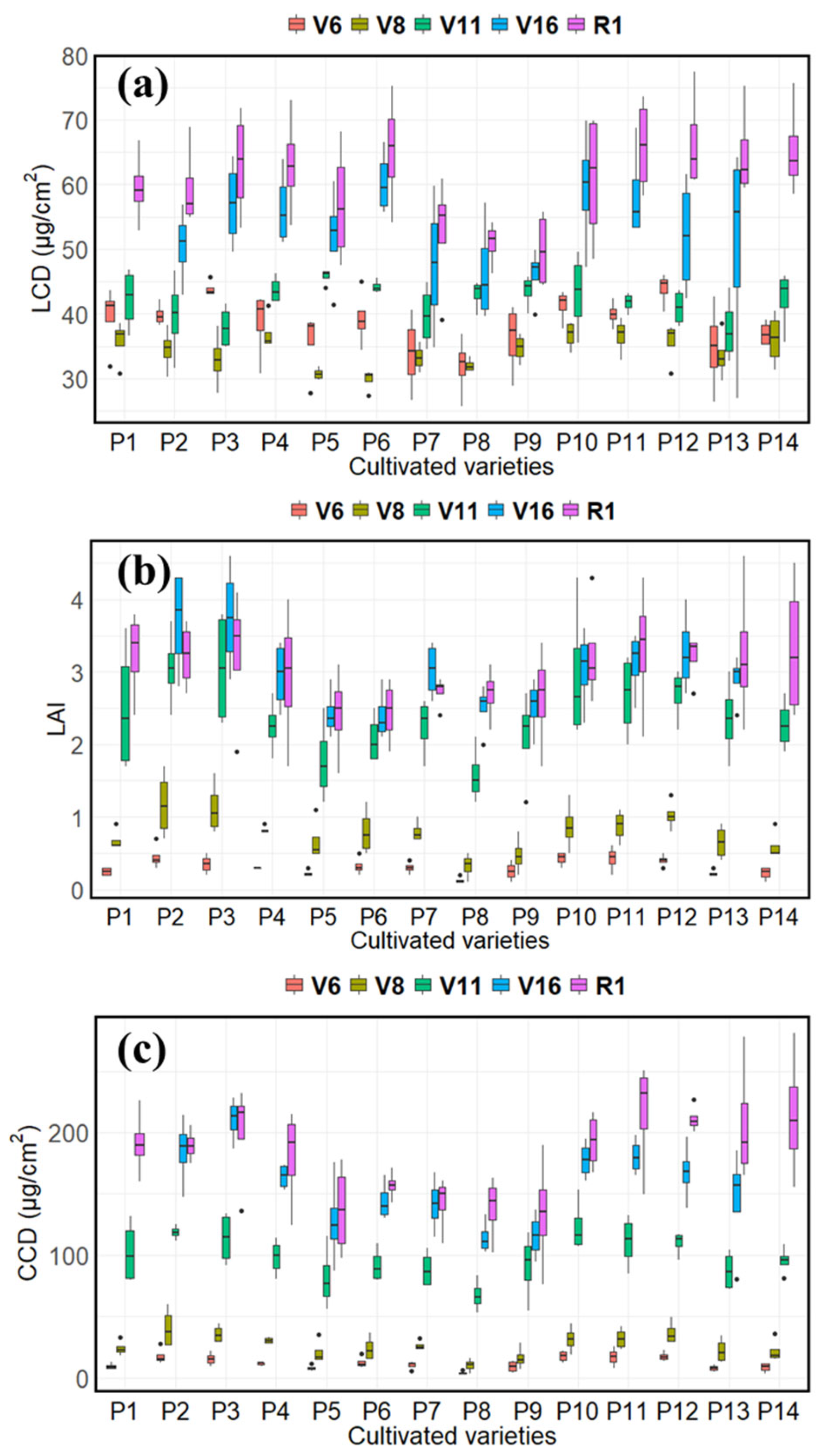

3.1. Dynamic Changes of the LCD, LAI, and CCD

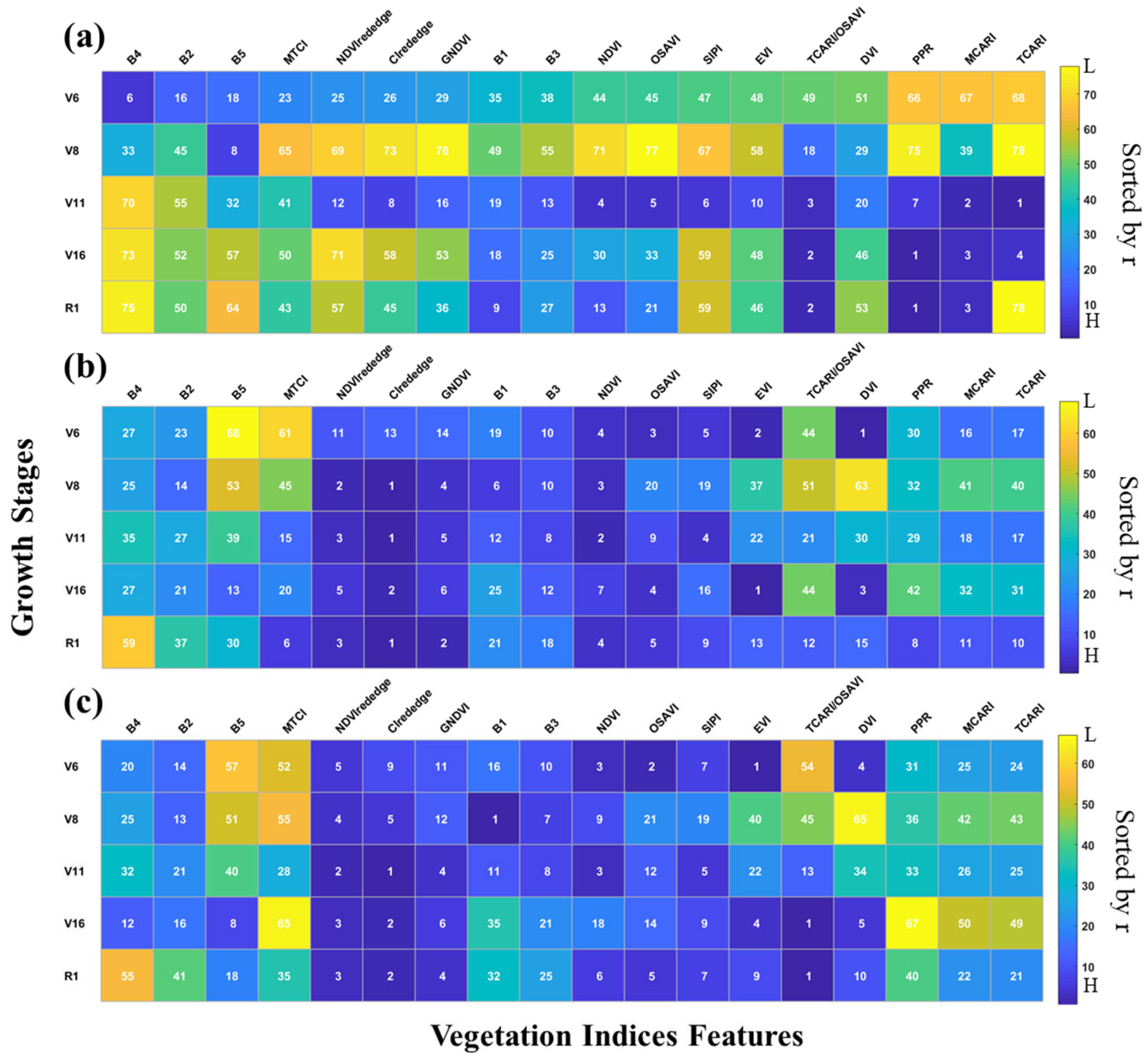

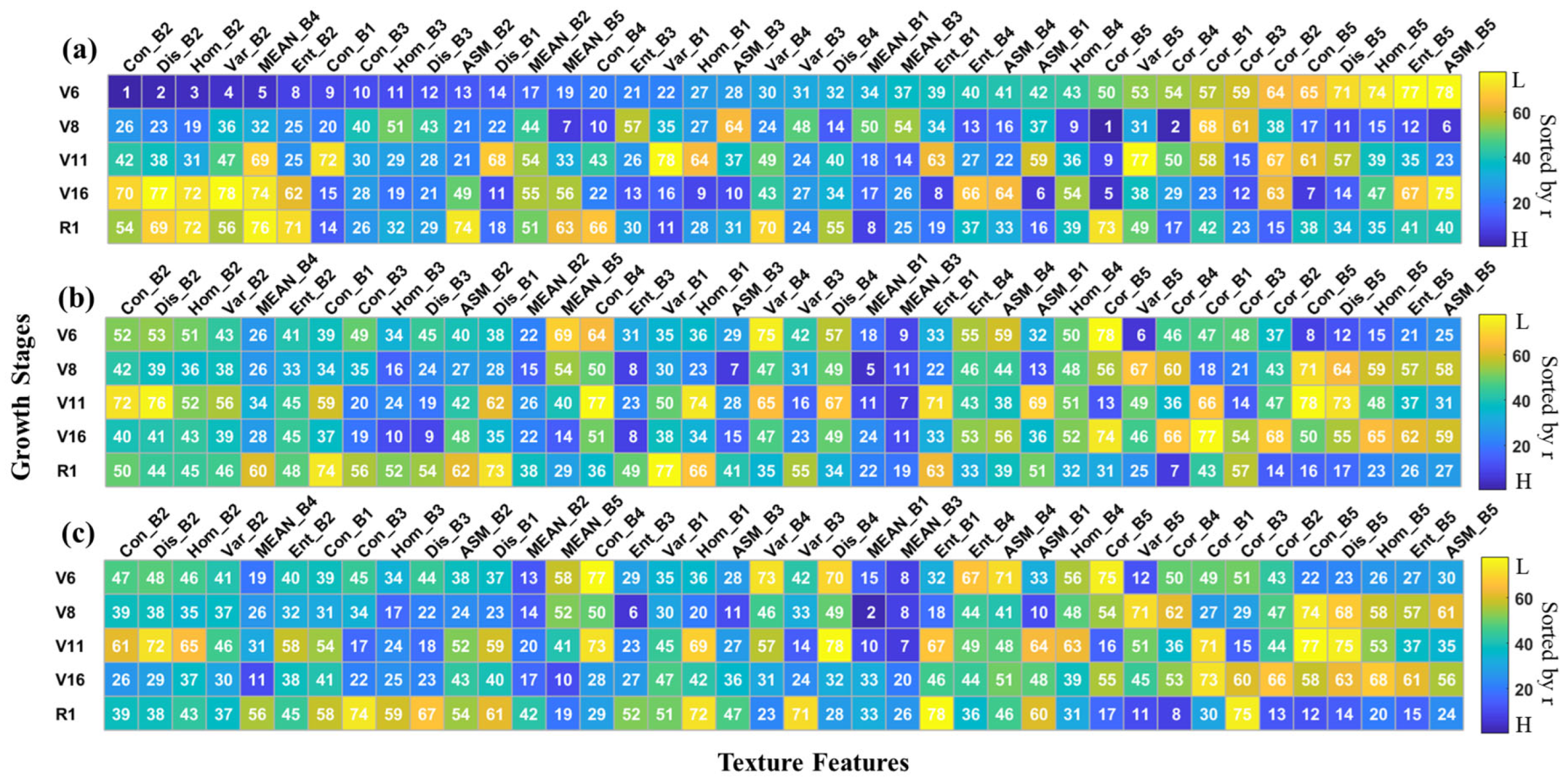

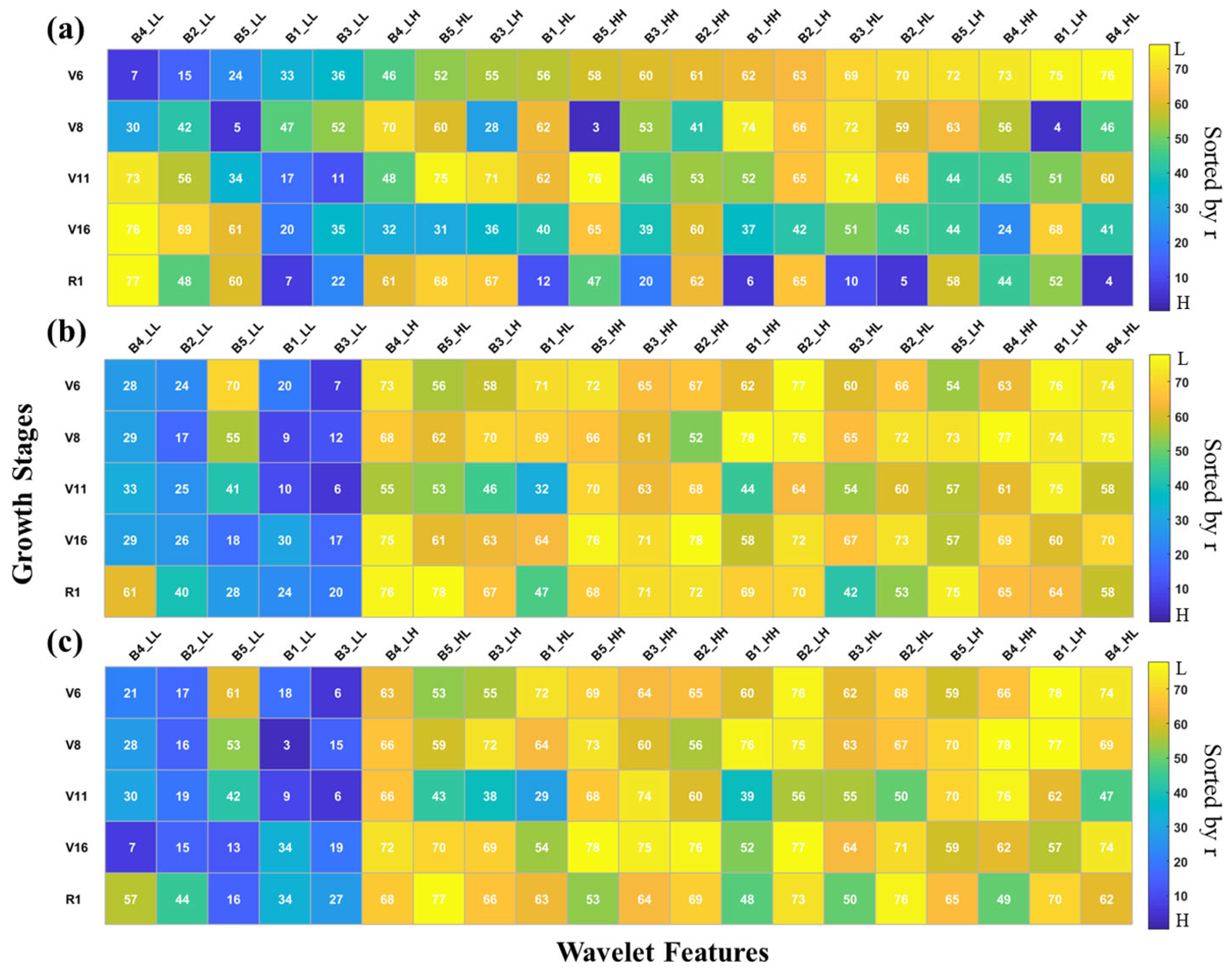

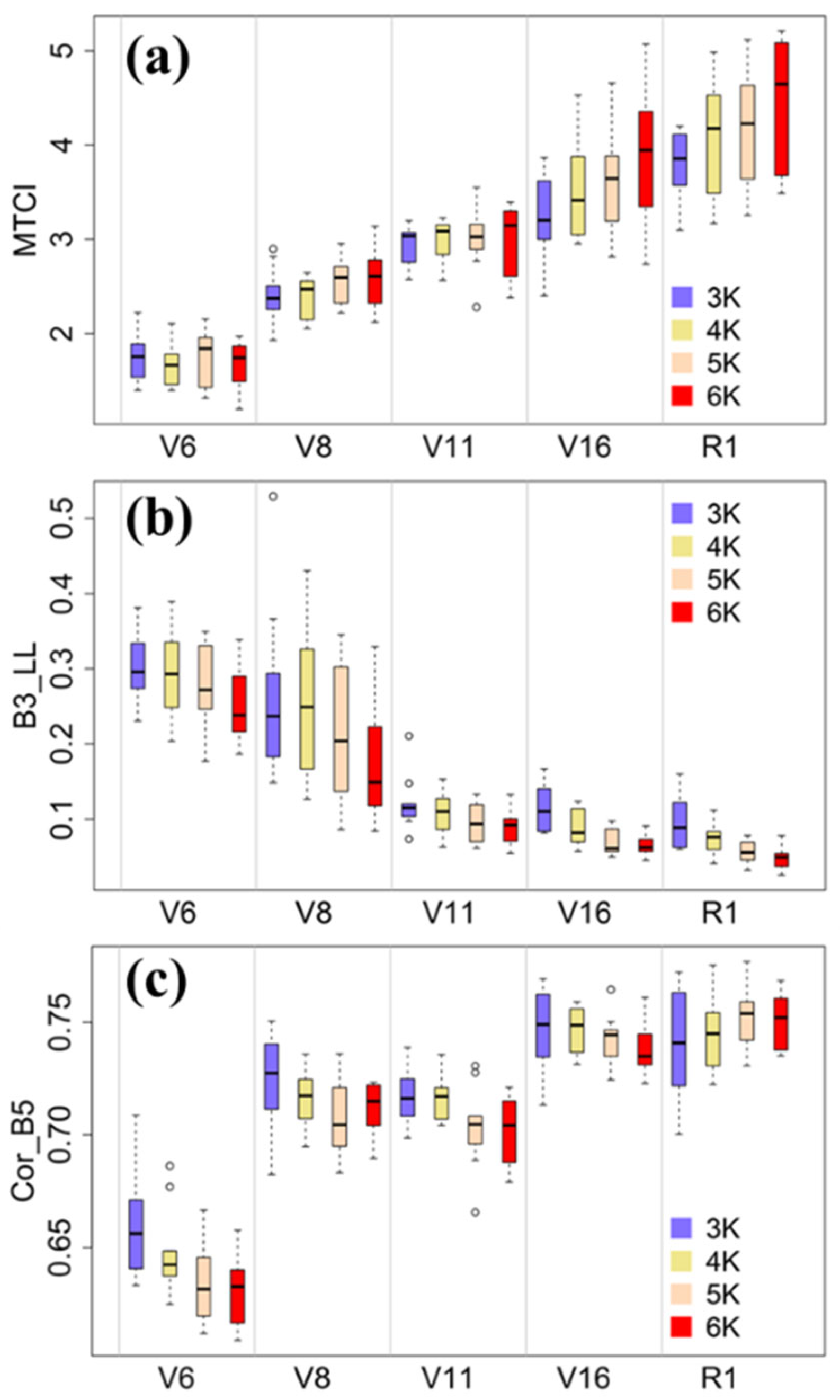

3.2. Correlation Analysis of Image Features at Different Growth Stages

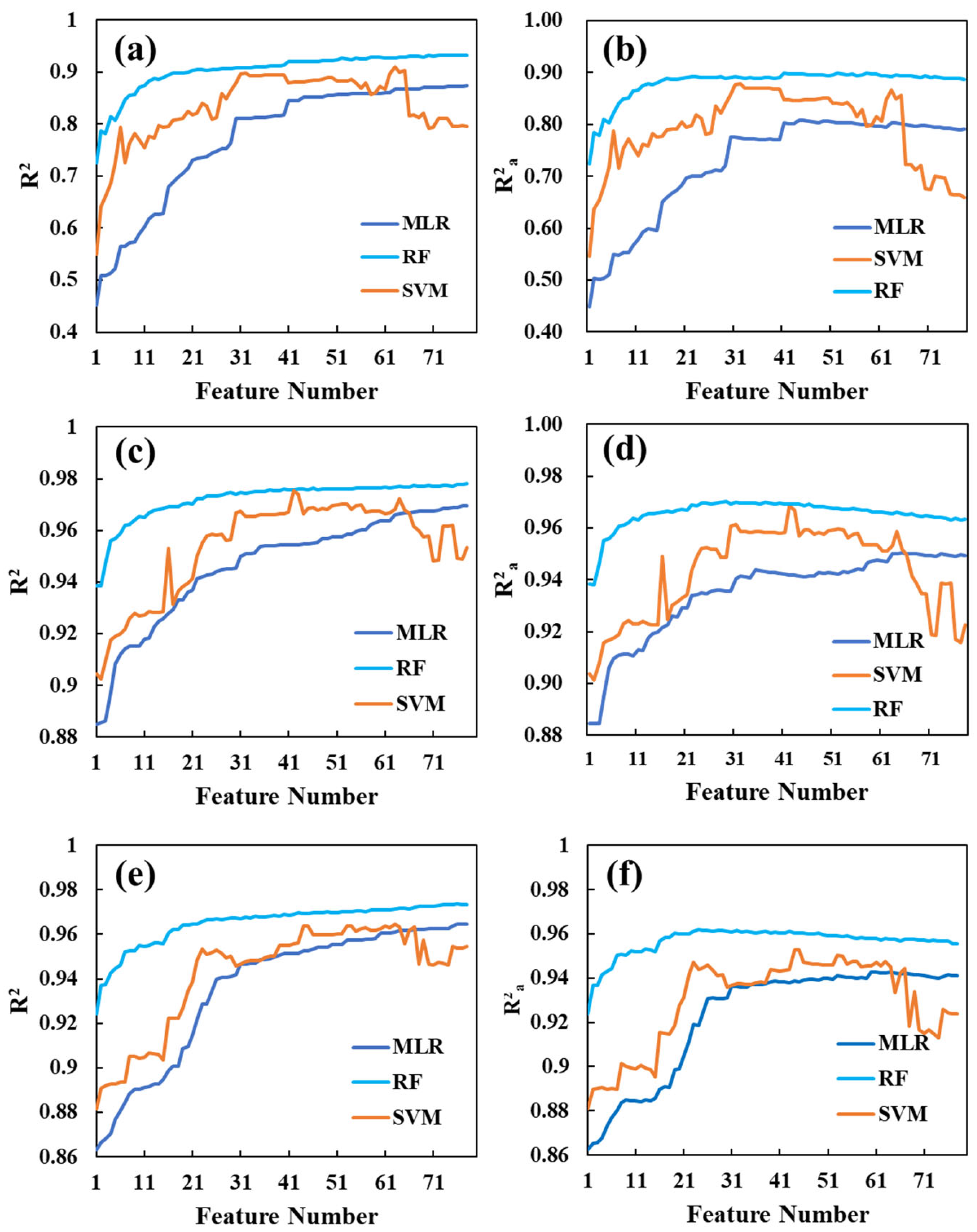

3.3. Estimation Results of LCD, LAI, and CCD

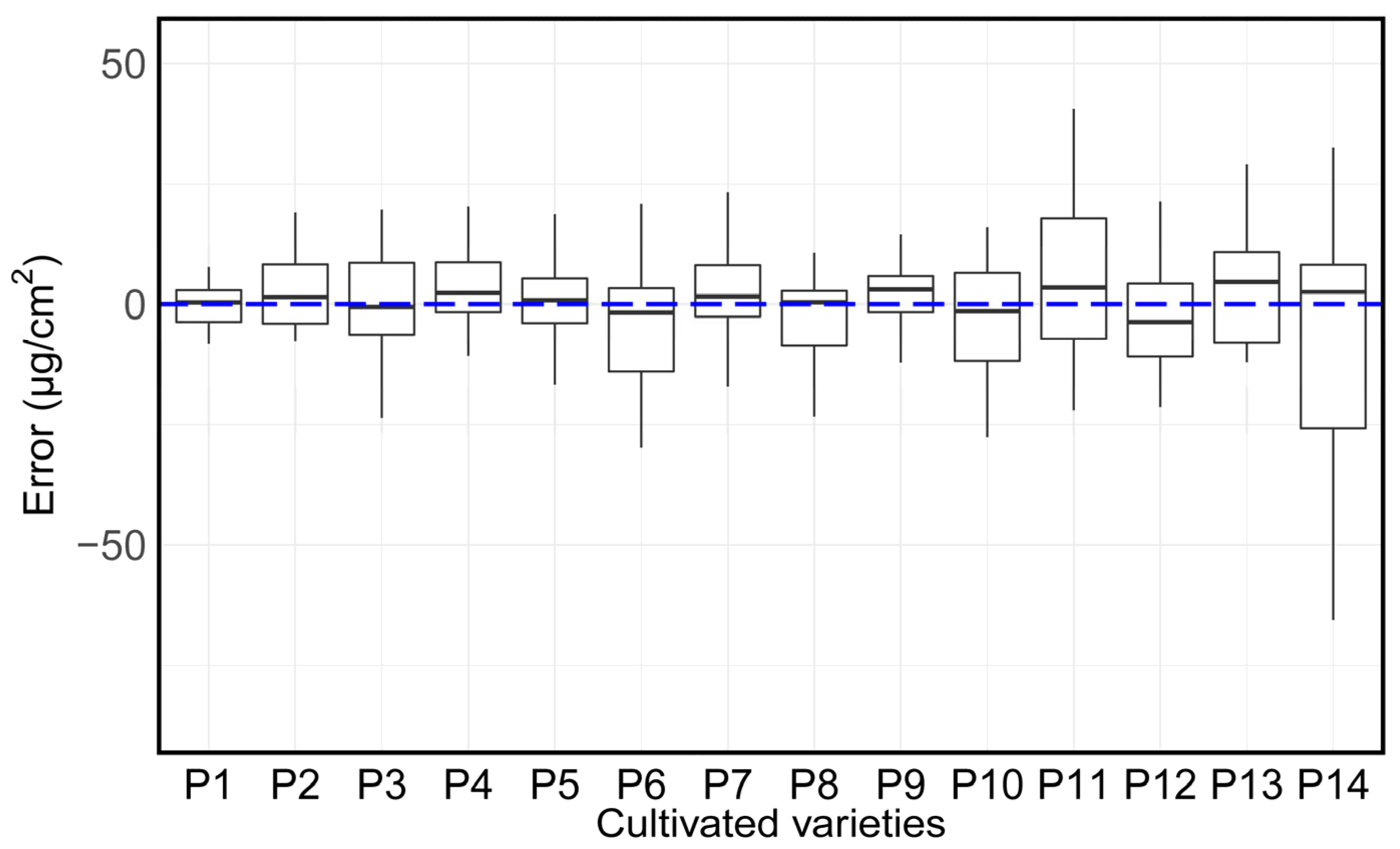

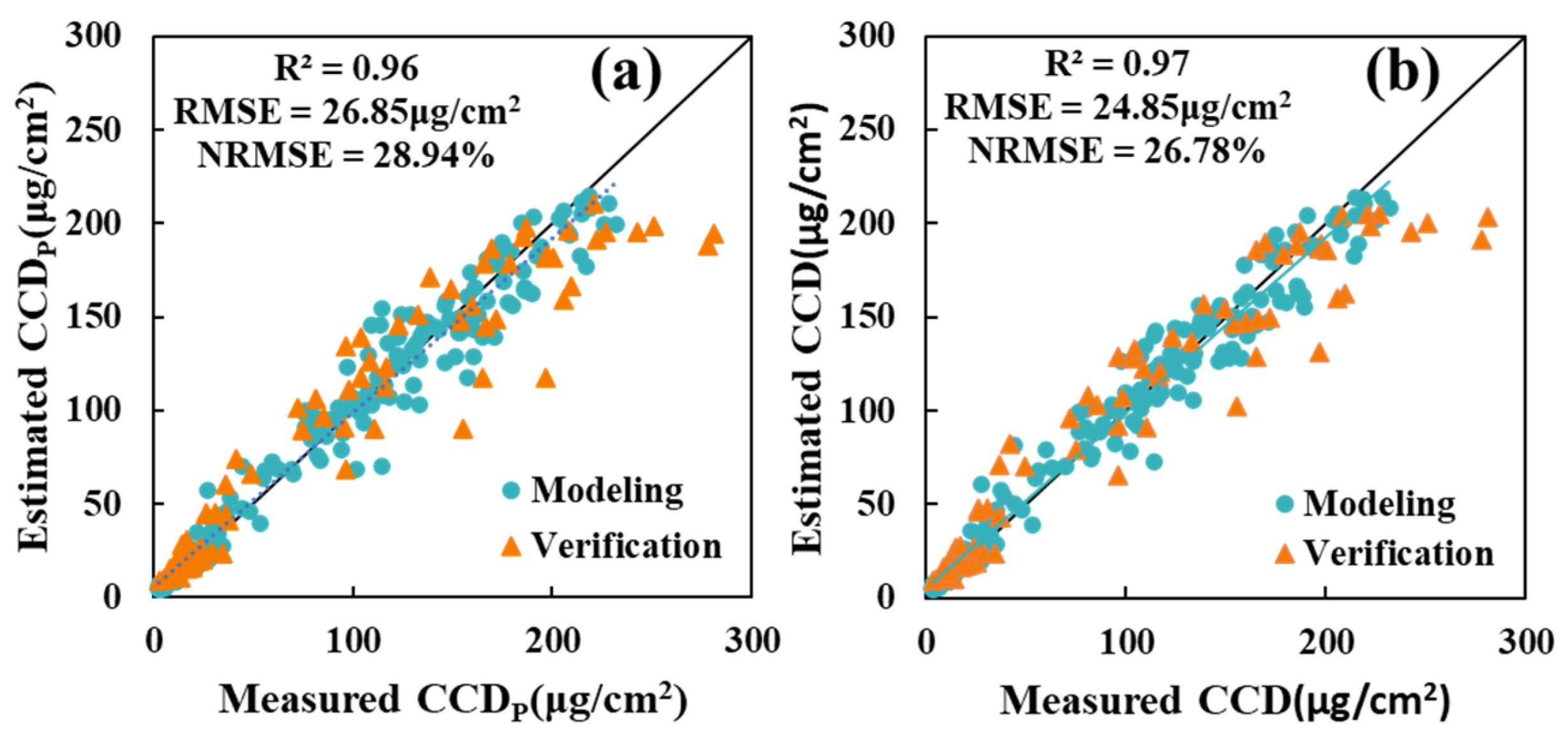

3.4. CCD Calculated Based on the Estimation Results of LCD and LAI
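CCD is conventionally defined as the product of leaf chlorophyll density and leaf area index, so an indirect CCD estimate can be obtained by multiplying the estimated LCD and LAI sample by sample. The sketch below assumes LCD in μg/cm² of leaf area and LAI in m² of leaf per m² of ground, which gives CCD in μg/cm² of ground; `ccd_from_lcd_lai` is a hypothetical name used only for illustration.

```python
from typing import List, Sequence


def ccd_from_lcd_lai(lcd: Sequence[float], lai: Sequence[float]) -> List[float]:
    """Indirect CCD per sample: CCD = LCD * LAI.

    lcd: leaf chlorophyll density, ug/cm^2 of leaf area (assumed unit)
    lai: leaf area index, m^2 leaf / m^2 ground (dimensionless)
    returns: canopy chlorophyll density, ug/cm^2 of ground area
    """
    if len(lcd) != len(lai):
        raise ValueError("LCD and LAI must be paired sample by sample")
    return [c * l for c, l in zip(lcd, lai)]


# Example with made-up values for two plots:
print(ccd_from_lcd_lai([45.0, 50.0], [2.0, 3.5]))  # [90.0, 175.0]
```

Since the indirect route multiplies two model outputs, errors in the LCD and LAI estimates both propagate into the calculated CCD, which is one reason to compare it against direct CCD estimation.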

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Anderson, J.M. Photoregulation of the Composition, Function, and Structure of Thylakoid Membranes. Annu. Rev. Plant Physiol. 1986, 37, 93–136. [Google Scholar] [CrossRef]

- Croft, H.; Chen, J.M. Leaf Pigment Content. In Comprehensive Remote Sensing; Liang, S., Ed.; Elsevier: Oxford, UK, 2018; pp. 117–142. [Google Scholar] [CrossRef]

- Borrell, A.K.; Hammer, G.L. Nitrogen Dynamics and the Physiological Basis of Stay-Green in Sorghum. Crop Sci. 2000, 40, 1295–1307. [Google Scholar] [CrossRef]

- Guo, Y.; Senthilnath, J.; Wu, W.; Zhang, X.; Zeng, Z.; Huang, H. Radiometric Calibration for Multispectral Camera of Different Imaging Conditions Mounted on a UAV Platform. Sustainability 2019, 11, 978. [Google Scholar] [CrossRef]

- Croft, H.; Chen, J.M.; Wang, R.; Mo, G.; Luo, S.; Luo, X.; He, L.; Gonsamo, A.; Arabian, J.; Zhang, Y.; et al. The global distribution of leaf chlorophyll content. Remote Sens. Environ. 2020, 236, 111479. [Google Scholar] [CrossRef]

- Simic Milas, A.; Romanko, M.; Reil, P.; Abeysinghe, T.; Marambe, A. The importance of leaf area index in mapping chlorophyll content of corn under different agricultural treatments using UAV images. Int. J. Remote Sens. 2018, 39, 5415–5431. [Google Scholar] [CrossRef]

- Räsänen, A.; Juutinen, S.; Kalacska, M.; Aurela, M.; Heikkinen, P.; Mäenpää, K.; Rimali, A.; Virtanen, T. Peatland leaf-area index and biomass estimation with ultra-high resolution remote sensing. GISci. Remote Sens. 2020, 57, 943–964. [Google Scholar] [CrossRef]

- Chen, J.M.; Pavlic, G.; Brown, L.; Cihlar, J.; Leblanc, S.G.; White, H.P.; Hall, R.J.; Peddle, D.R.; King, D.J.; Trofymow, J.A.; et al. Derivation and validation of Canada-wide coarse-resolution leaf area index maps using high-resolution satellite imagery and ground measurements. Remote Sens. Environ. 2002, 80, 165–184. [Google Scholar] [CrossRef]

- Daughtry, C. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Sun, Y.; Qin, Q.; Ren, H.; Zhang, T.; Chen, S. Red-Edge Band Vegetation Indices for Leaf Area Index Estimation From Sentinel-2/MSI Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 826–840. [Google Scholar] [CrossRef]

- Li, D.; Chen, J.M.; Zhang, X.; Yan, Y.; Zhu, J.; Zheng, H.; Zhou, K.; Yao, X.; Tian, Y.; Zhu, Y.; et al. Improved estimation of leaf chlorophyll content of row crops from canopy reflectance spectra through minimizing canopy structural effects and optimizing off-noon observation time. Remote Sens. Environ. 2020, 248, 111985. [Google Scholar] [CrossRef]

- Huang, G.; Liu, H. Effect of Planting Density on Yield and Quality of Maize. Mod. Agric. Res. 2022, 28, 692–695. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2010, 25, 5403–5413. [Google Scholar] [CrossRef]

- Broge, N.H.; Mortensen, J.V. Deriving green crop area index and canopy chlorophyll density of winter wheat from spectral reflectance data. Remote Sens. Environ. 2002, 81, 45–57. [Google Scholar] [CrossRef]

- Gitelson, A.A. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, 403-1–403-4. [Google Scholar] [CrossRef]

- Xu, J.; Quackenbush, L.J.; Volk, T.A.; Im, J. Estimation of shrub willow biophysical parameters across time and space from Sentinel-2 and unmanned aerial system (UAS) data. Field Crops Res. 2022, 287, 108655. [Google Scholar] [CrossRef]

- Qiao, L.; Tang, W.; Gao, D.; Zhao, R.; An, L.; Li, M.; Sun, H.; Song, D. UAV-based chlorophyll content estimation by evaluating vegetation index responses under different crop coverages. Comput. Electron. Agric. 2022, 196, 106775. [Google Scholar]

- Tao, W.; Dong, Y.; Su, W.; Li, J.; Xuan, F.; Huang, J.; Yang, J.; Li, X.; Zeng, Y.; Li, B. Mapping the Corn Residue-Covered Types Using Multi-Scale Feature Fusion and Supervised Learning Method by Chinese GF-2 PMS Image. Front. Plant Sci. 2022, 13, 901042. [Google Scholar] [CrossRef] [PubMed]

- Yang, G.; Li, C.; Wang, Y.; Yuan, H.; Feng, H.; Xu, B.; Yang, X. The DOM Generation and Precise Radiometric Calibration of a UAV-Mounted Miniature Snapshot Hyperspectral Imager. Remote Sens. 2017, 9, 642. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244. [Google Scholar] [CrossRef]

- Li, J.; Jiang, H.; Luo, W.; Ma, X.; Zhang, Y. Potato LAI estimation by fusing UAV multi-spectral and texture features. J. South China Agric. Univ. 2023, 44, 93–101. [Google Scholar]

- Yang, H.; Hu, Y.; Zheng, Z.; Qiao, Y.; Zhang, K.; Guo, T.; Chen, J. Estimation of Potato Chlorophyll Content from UAV Multispectral Images with Stacking Ensemble Algorithm. Agronomy 2022, 12, 2318. [Google Scholar] [CrossRef]

- Mallat, S.G. A Theory for Multiresolution Signal Decomposition: The Wavelet Representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693. [Google Scholar] [CrossRef]

- Chen, D.; Hu, B.; Shao, X.; Su, Q. Variable selection by modified IPW (iterative predictor weighting)-PLS (partial least squares) in continuous wavelet regression models. Analyst 2004, 129, 664–669. [Google Scholar] [CrossRef]

- Arai, K.; Ragmad, C. Image Retrieval Method Utilizing Texture Information Derived from Discrete Wavelet Transformation Together with Color Information. Int. J. Adv. Res. Artif. Intell. (IJARAI) 2016, 5, 1–6. [Google Scholar] [CrossRef]

- Xu, X.; Li, Z.; Yang, X.; Yang, G.; Teng, C.; Zhu, H.; Liu, S. Predicting leaf chlorophyll content and its nonuniform vertical distribution of summer maize by using a radiation transfer model. J. Appl. Remote Sens. 2019, 13, 034505. [Google Scholar] [CrossRef]

- Gu, Y.; Chanussot, J.; Jia, X.; Benediktsson, J.A. Multiple Kernel Learning for Hyperspectral Image Classification: A Review. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6547–6565. [Google Scholar] [CrossRef]

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293. [Google Scholar] [CrossRef]

- Zhang, L.; Liu, Z.; Ren, T.; Liu, D.; Ma, Z.; Tong, L.; Zhang, C.; Zhou, T.; Zhang, X.; Li, S. Identification of Seed Maize Fields With High Spatial Resolution and Multiple Spectral Remote Sensing Using Random Forest Classifier. Remote Sens. 2020, 12, 362. [Google Scholar] [CrossRef]

- Lu, J.; Eitel, J.U.H.; Engels, M.; Zhu, J.; Ma, Y.; Liao, F.; Zheng, H.; Wang, X.; Yao, X.; Cheng, T.; et al. Improving Unmanned Aerial Vehicle (UAV) remote sensing of rice plant potassium accumulation by fusing spectral and textural information. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102592. [Google Scholar] [CrossRef]

- Sun, Q.; Jiao, Q.; Qian, X.; Liu, L.; Liu, X.; Dai, H. Improving the Retrieval of Crop Canopy Chlorophyll Content Using Vegetation Index Combinations. Remote Sens. 2021, 13, 470. [Google Scholar] [CrossRef]

- Zhou, Y.; Lao, C.; Yang, Y.; Zhang, Z.; Chen, H.; Chen, Y.; Chen, J.; Ning, J.; Yang, N. Diagnosis of winter-wheat water stress based on UAV-borne multispectral image texture and vegetation indices. Agric. Water Manag. 2021, 256, 107076. [Google Scholar] [CrossRef]

- Drotar, P.; Gazda, J.; Smekal, Z. An experimental comparison of feature selection methods on two-class biomedical datasets. Comput. Biol. Med. 2015, 66, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Wang, N.; Li, Q.Z.; Du, X.; Zhang, Y.; Zhao, L.C.; Wang, H.Y. Identification of main crops based on the univariate feature selection in Subei. J. Appl. Remote Sens. 2017, 21, 519–530. [Google Scholar]

- Fu, Y.; Yang, G.; Li, Z.; Li, H.; Li, Z.; Xu, X.; Song, X.; Zhang, Y.; Duan, D.; Zhao, C.; et al. Progress of hyperspectral data processing and modelling for cereal crop nitrogen monitoring. Comput. Electron. Agric. 2020, 172, 105321. [Google Scholar] [CrossRef]

- Li, D.; Miao, Y.; Gupta, S.K.; Rosen, C.J.; Yuan, F.; Wang, C.; Wang, L.; Huang, Y. Improving Potato Yield Prediction by Combining Cultivar Information and UAV Remote Sensing Data Using Machine Learning. Remote Sens. 2021, 13, 3322. [Google Scholar] [CrossRef]

- Ta, N.; Chang, Q.; Zhang, Y. Estimation of Apple Tree Leaf Chlorophyll Content Based on Machine Learning Methods. Remote Sens. 2021, 13, 3902. [Google Scholar] [CrossRef]

- Ndlovu, H.S.; Odindi, J.; Sibanda, M.; Mutanga, O.; Clulow, A.; Chimonyo, V.G.P.; Mabhaudhi, T. A Comparative Estimation of Maize Leaf Water Content Using Machine Learning Techniques and Unmanned Aerial Vehicle (UAV)-Based Proximal and Remotely Sensed Data. Remote Sens. 2021, 13, 4091. [Google Scholar] [CrossRef]

- Xu, X.Q.; Lu, J.S.; Zhang, N.; Yang, T.C.; He, J.Y.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Inversion of rice canopy chlorophyll content and leaf area index based on coupling of radiative transfer and Bayesian network models. ISPRS J. Photogramm. Remote Sens. 2019, 150, 185–196. [Google Scholar] [CrossRef]

- Goulas, Y.; Cerovic, Z.G.; Cartelat, A.; Moya, I. Dualex: A new instrument for field measurements of epidermal ultraviolet absorbance by chlorophyll fluorescence. Appl. Opt. 2004, 43, 4488–4496. [Google Scholar] [CrossRef]

- Yang, H.; Ming, B.; Nie, C.; Xue, B.; Xin, J.; Lu, X.; Xue, J.; Hou, P.; Xie, R.; Wang, K.; et al. Maize Canopy and Leaf Chlorophyll Content Assessment from Leaf Spectral Reflectance: Estimation and Uncertainty Analysis across Growth Stages and Vertical Distribution. Remote Sens. 2022, 14, 2115. [Google Scholar] [CrossRef]

- Li, Z.; Wang, J.; He, P.; Zhang, Y.; Liu, H.; Chang, H.; Xu, X. Modelling of crop chlorophyll content based on Dualex. Trans. Chin. Soc. Agric. Eng. 2015, 31, 191–197. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A. Monitoring the Vernal Advancements and Retrogradation of Natural Vegetation; Remote Sensing Center: Greenbelt, MD, USA, 1974; Volume 371. [Google Scholar]

- Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 1998, 22, 689–692. [Google Scholar] [CrossRef]

- Peñuelas, J.; Filella, I.; Lloret, P.; Muñoz, F.; Vilajeliu, M. Reflectance assessment of mite effects on apple trees. Int. J. Remote Sens. 2010, 16, 2727–2733. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Metternicht, G. Vegetation indices derived from high-resolution airborne videography for precision crop management. Int. J. Remote Sens. 2010, 24, 2855–2877. [Google Scholar] [CrossRef]

- Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Haboudane, D.; Miller, J.R.; Tremblay, N.; Dextraze, L. Leaf Chlorophyll a + b and canopy LAI estimation in crops using RT models and Hyperspectral Reflectance Imagery. CSIC 2002. [Google Scholar]

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef]

- Roberti de Siqueira, F.; Robson Schwartz, W.; Pedrini, H. Multi-scale gray level co-occurrence matrices for texture description. Neurocomputing 2013, 120, 336–345. [Google Scholar] [CrossRef]

- Bruce, L.M.; Morgan, C.; Larsen, S. Automated detection of subpixel hyperspectral targets with continuous and discrete wavelet transforms. IEEE Trans. Geosci. Remote Sens. 2001, 39, 2217–2226. [Google Scholar] [CrossRef]

- Blackburn, G.; Ferwerda, J. Retrieval of chlorophyll concentration from leaf reflectance spectra using wavelet analysis. Remote Sens. Environ. 2008, 112, 1614–1632. [Google Scholar] [CrossRef]

- Zhang, X.; Zhang, K.; Sun, Y.; Zhao, Y.; Zhuang, H.; Ban, W.; Chen, Y.; Fu, E.; Chen, S.; Liu, J.; et al. Combining Spectral and Texture Features of UAS-Based Multispectral Images for Maize Leaf Area Index Estimation. Remote Sens. 2022, 14, 331. [Google Scholar] [CrossRef]

- Liao, Q.; Wang, J.; Yang, G.; Zhang, D.; Li, H.; Fu, Y.; Li, Z. Comparison of spectral indices and wavelet transform for estimating chlorophyll content of maize from hyperspectral reflectance. J. Appl. Remote Sens. 2013, 7, 073575. [Google Scholar] [CrossRef]

- Clevers, J.; Kooistra, L.; van den Brande, M. Using Sentinel-2 Data for Retrieving LAI and Leaf and Canopy Chlorophyll Content of a Potato Crop. Remote Sens. 2017, 9, 405. [Google Scholar] [CrossRef]

- Noi, P.; Degener, J.; Kappas, M. Comparison of Multiple Linear Regression, Cubist Regression, and Random Forest Algorithms to Estimate Daily Air Surface Temperature from Dynamic Combinations of MODIS LST Data. Remote Sens. 2017, 9, 398. [Google Scholar] [CrossRef]

- Narmilan, A.; Gonzalez, F.; Salgadoe, A.S.A.; Kumarasiri, U.W.L.M.; Weerasinghe, H.A.S.; Kulasekara, B.R. Predicting Canopy Chlorophyll Content in Sugarcane Crops Using Machine Learning Algorithms and Spectral Vegetation Indices Derived from UAV Multispectral Imagery. Remote Sens. 2022, 14, 1140. [Google Scholar] [CrossRef]

- Han, H.; Wan, R.; Li, B. Estimating Forest Aboveground Biomass Using Gaofen-1 Images, Sentinel-1 Images, and Machine Learning Algorithms: A Case Study of the Dabie Mountain Region, China. Remote Sens. 2021, 14, 176. [Google Scholar] [CrossRef]

- Zhang, L.; Shao, Z.; Liu, J.; Cheng, Q. Deep Learning Based Retrieval of Forest Aboveground Biomass from Combined LiDAR and Landsat 8 Data. Remote Sens. 2019, 11, 1459. [Google Scholar] [CrossRef]

- Awad, M. Toward Precision in Crop Yield Estimation Using Remote Sensing and Optimization Techniques. Agriculture 2019, 9, 54. [Google Scholar] [CrossRef]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Zeng, N.; Ren, X.; He, H.; Zhang, L.; Zhao, D.; Ge, R.; Li, P.; Niu, Z. Estimating grassland aboveground biomass on the Tibetan Plateau using a random forest algorithm. Ecol. Indic. 2019, 102, 479–487. [Google Scholar] [CrossRef]

- Harel, O. The estimation of R2 and adjusted R2 in incomplete data sets using multiple imputation. J. Appl. Stat. 2009, 36, 1109–1118. [Google Scholar] [CrossRef]

- Li, Z.; Jin, X.; Yang, G.; Drummond, J.; Yang, H.; Clark, B.; Li, Z.; Zhao, C. Remote Sensing of Leaf and Canopy Nitrogen Status in Winter Wheat (Triticum aestivum L.) Based on N-PROSAIL Model. Remote Sens. 2018, 10, 1463. [Google Scholar] [CrossRef]

- Li, D.; Tian, L.; Wan, Z.; Jia, M.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Assessment of unified models for estimating leaf chlorophyll content across directional-hemispherical reflectance and bidirectional reflectance spectra. Remote Sens. Environ. 2019, 231, 111240. [Google Scholar] [CrossRef]

- Parco, M.; D’Andrea, K.E.; Maddonni, G.Á. Maize prolificacy under contrasting plant densities and N supplies: I. Plant growth, biomass allocation and development of apical and sub-apical ears from floral induction to silking. Field Crops Res. 2022, 284, 108553. [Google Scholar] [CrossRef]

- Qiao, L.; Zhao, R.; Tang, W.; An, L.; Sun, H.; Li, M.; Wang, N.; Liu, Y.; Liu, G. Estimating maize LAI by exploring deep features of vegetation index map from UAV multispectral images. Field Crops Res. 2022, 289, 108739. [Google Scholar] [CrossRef]

- Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crops Res. 2014, 157, 111–123. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P.J.; Tallis, M.J.; Llewellyn, G.M.; Taylor, G.; Snoeij, P. Validating the MERIS Terrestrial Chlorophyll Index (MTCI) with ground chlorophyll content data at MERIS spatial resolution. Int. J. Remote Sens. 2010, 31, 5513–5532. [Google Scholar] [CrossRef]

- Huang, Y.; Ma, Q.; Wu, X.; Li, H.; Xu, K.; Ji, G.; Qian, F.; Li, L.; Huang, Q.; Long, Y.; et al. Estimation of chlorophyll content in Brassica napus based on unmanned aerial vehicle images. Oil Crop Sci. 2022, 7, 149–155. [Google Scholar] [CrossRef]

- Yang, K.; Gong, Y.; Fang, S.; Duan, B.; Yuan, N.; Peng, Y.; Wu, X.; Zhu, R. Combining Spectral and Texture Features of UAV Images for the Remote Estimation of Rice LAI throughout the Entire Growing Season. Remote Sens. 2021, 13, 3001. [Google Scholar] [CrossRef]

- Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603. [Google Scholar] [CrossRef]

- Hoła, A.; Czarnecki, S. Random forest algorithm and support vector machine for nondestructive assessment of mass moisture content of brick walls in historic buildings. Autom. Constr. 2023, 149, 104793. [Google Scholar] [CrossRef]

- Patonai, Z.; Kicsiny, R.; Geczi, G. Multiple linear regression based model for the indoor temperature of mobile containers. Heliyon 2022, 8, e12098. [Google Scholar] [CrossRef]

- Kayad, A.; Sozzi, M.; Paraforos, D.S.; Rodrigues, F.A.; Cohen, Y.; Fountas, S.; Francisco, M.-J.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How many gigabytes per hectare are available in the digital agriculture era? A digitization footprint estimation. Comput. Electron. Agric. 2022, 198, 107080. [Google Scholar] [CrossRef]

- Rahman, M.S.; Di, L.; Yu, E.; Zhang, C.; Mohiuddin, H. In-Season Major Crop-Type Identification for US Cropland from Landsat Images Using Crop-Rotation Pattern and Progressive Data Classification. Agriculture 2019, 9, 17. [Google Scholar] [CrossRef]

- Xu, X.; Nie, C.; Jin, X.; Li, Z.; Zhu, H.; Xu, H.; Wang, J.; Zhao, Y.; Feng, H. A comprehensive yield evaluation indicator based on an improved fuzzy comprehensive evaluation method and hyperspectral data. Field Crops Res. 2021, 270, 108204. [Google Scholar] [CrossRef]

- Li, Y.; Song, H.; Zhou, L.; Xu, Z.; Zhou, G. Vertical distributions of chlorophyll and nitrogen and their associations with photosynthesis under drought and rewatering regimes in a maize field. Agric. For. Meteorol. 2019, 272–273, 40–54. [Google Scholar] [CrossRef]

| Vegetation Index | Formula | Reference |
|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | | [44] |
| Green Normalized Difference Vegetation Index (GNDVI) | | [45] |
| Optimized Soil Adjusted Vegetation Index (OSAVI) | | [13] |
| Red-edge Chlorophyll Index (CIrededge) | | [16] |
| Structure-Insensitive Pigment Index (SIPI) | | [46] |
| Enhanced Vegetation Index (EVI) | | [47] |
| Plant Pigment Ratio (PPR) | | [48] |
| Red-edge NDVI (NDVIrededge) | | [49] |
| MERIS Terrestrial Chlorophyll Index (MTCI) | | [14] |
| Modified Chlorophyll Absorption Ratio Index (MCARI) | | [9] |
| Transformed Chlorophyll Absorption Reflectance Index (TCARI) | | [50] |
| Combined Index (TCARI/OSAVI) | | [51] |
| Difference Vegetation Index (DVI) | | [52] |
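The index formulas themselves did not survive in this copy. For orientation only, the sketch below implements the widely used textbook forms of several listed indices on surface reflectance (0 to 1). The band arguments are hypothetical placeholders for a multispectral camera's blue, green, red, red-edge and NIR channels; the exact wavelengths and any band substitutions used in the study are not known from this section.

```python
# Textbook index forms (assumed); inputs are surface reflectances in [0, 1].

def ndvi(nir: float, red: float) -> float:
    return (nir - red) / (nir + red)


def gndvi(nir: float, green: float) -> float:
    return (nir - green) / (nir + green)


def osavi(nir: float, red: float) -> float:
    # Soil-adjustment constant 0.16 as in Rondeaux et al.
    return (1 + 0.16) * (nir - red) / (nir + red + 0.16)


def ci_rededge(nir: float, red_edge: float) -> float:
    return nir / red_edge - 1.0


def ndvi_rededge(nir: float, red_edge: float) -> float:
    return (nir - red_edge) / (nir + red_edge)


def mtci(nir: float, red_edge: float, red: float) -> float:
    # MERIS bands approximated by NIR, red-edge and red (assumption).
    return (nir - red_edge) / (red_edge - red)


def evi(nir: float, red: float, blue: float) -> float:
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def dvi(nir: float, red: float) -> float:
    return nir - red


if __name__ == "__main__":
    # Made-up reflectances for a green maize canopy.
    print(round(ndvi(0.45, 0.05), 3))  # 0.8
```

In practice these functions would be applied pixel-wise to the reflectance mosaic and then averaged over each plot.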

| GLCM-Based Feature | Abbreviation | Formula |
|---|---|---|
| Mean Value | MEAN | |
| Variance | Var | |
| Homogeneity | Hom | |
| Contrast | Con | |
| Dissimilarity | Dis | |
| Entropy | Ent | |
| Angular Second Moment | ASM | |
| Correlation | Cor | |
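The formula column of the GLCM table is empty in this copy. The following sketch shows how the eight listed statistics are commonly computed from a symmetric, normalized grey-level co-occurrence matrix for one pixel offset. It assumes the image window has already been quantized to integer grey levels `0..levels-1`; the window size, offset, number of grey levels, and definitional details (log base, homogeneity denominator) used in the study are not given here and vary between software packages. `glcm` and `glcm_features` are hypothetical names.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def glcm(img: List[List[int]], levels: int, dx: int = 1, dy: int = 0) -> Matrix:
    """Symmetric, normalized GLCM of an image quantized to 0..levels-1."""
    p = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                p[i][j] += 1  # reference -> neighbour
                p[j][i] += 1  # neighbour -> reference (symmetric GLCM)
    total = sum(sum(row) for row in p)
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the window")
    return [[v / total for v in row] for row in p]


def glcm_features(p: Matrix) -> Dict[str, float]:
    """Common Haralick-style definitions of the eight table statistics."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)  # row mean == column mean (symmetric)
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "MEAN": mean,
        "Var": var,
        "Hom": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Con": sum((i - j) ** 2 * v for i, j, v in cells),
        "Dis": sum(abs(i - j) * v for i, j, v in cells),
        "Ent": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "ASM": sum(v * v for _, _, v in cells),
        # Correlation is undefined for a constant window; this sketch returns 1.0.
        "Cor": cov / var if var > 0 else 1.0,
    }
```

For a checkerboard-like window such as `[[0, 1], [1, 0]]` with a horizontal offset, every pair differs by one grey level, giving contrast 1, homogeneity 0.5 and correlation -1.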

| Feature Dataset | Band + VI | Texture | Wavelet |
|---|---|---|---|
| Ⅰ | √ | × | × |
| Ⅱ | × | √ | × |
| Ⅲ | × | × | √ |
| Ⅳ | √ | √ | √ |
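The dataset table amounts to choosing which feature groups are concatenated for each sample. A minimal sketch, assuming each group is stored as one row of features per sample plot under hypothetical keys `band_vi`, `texture` and `wavelet`:

```python
from typing import Dict, List, Sequence

# Feature groups included in each dataset (√ in the table); keys are hypothetical.
DATASET_GROUPS: Dict[str, Sequence[str]] = {
    "I": ("band_vi",),
    "II": ("texture",),
    "III": ("wavelet",),
    "IV": ("band_vi", "texture", "wavelet"),
}


def build_dataset(groups: Dict[str, List[List[float]]], name: str) -> List[List[float]]:
    """Concatenate the selected feature groups column-wise, sample by sample."""
    blocks = [groups[g] for g in DATASET_GROUPS[name]]
    n_samples = len(blocks[0])
    if any(len(block) != n_samples for block in blocks):
        raise ValueError("every feature group needs one row per sample")
    return [[x for block in blocks for x in block[i]] for i in range(n_samples)]
```

Keeping the rows aligned by sample matters more than the storage format: dataset Ⅳ is only meaningful if the band/VI, texture and wavelet features of each row describe the same plot.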

| Index | Feature Set | R² | RMSE | NRMSE (%) |
|---|---|---|---|---|
| LCD (μg/cm²) | Ⅰ | 0.90 | 6.70 | 14.32 |
| | Ⅱ | 0.90 | 7.20 | 15.88 |
| | Ⅲ | 0.86 | 10.04 | 22.15 |
| | Ⅳ | 0.91 | 6.59 | 14.51 |
| LAI (m²/m²) | Ⅰ | 0.97 | 0.37 | 20.18 |
| | Ⅱ | 0.96 | 0.43 | 23.28 |
| | Ⅲ | 0.96 | 0.42 | 22.62 |
| | Ⅳ | 0.97 | 0.35 | 18.76 |
| CCD (μg/cm²) | Ⅰ | 0.96 | 26.22 | 28.26 |
| | Ⅱ | 0.95 | 33.24 | 35.84 |
| | Ⅲ | 0.95 | 40.42 | 43.58 |
| | Ⅳ | 0.97 | 24.85 | 26.78 |
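The accuracy table reports R², RMSE and NRMSE. A minimal sketch of these metrics, assuming R² is the coefficient of determination (1 − SS_res/SS_tot; some studies instead report the squared Pearson correlation) and NRMSE is RMSE expressed as a percentage of the mean observed value (other normalizers, such as the observed range, are also used). Which variants the study applied is not stated in this section.

```python
import math
from typing import Sequence


def r2(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


def rmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the observations."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def nrmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """RMSE as a percentage of the mean observed value (assumed normalizer)."""
    return 100.0 * rmse(obs, pred) / (sum(obs) / len(obs))
```

Because NRMSE is unitless, it is the column that allows LCD, LAI and CCD accuracies to be compared directly, whereas RMSE values only compare feature sets within one index.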

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, L.; Nie, C.; Su, T.; Xu, X.; Song, Y.; Yin, D.; Liu, S.; Liu, Y.; Bai, Y.; Jia, X.; et al. Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods. Agriculture 2023, 13, 895. https://doi.org/10.3390/agriculture13040895

Zhou L, Nie C, Su T, Xu X, Song Y, Yin D, Liu S, Liu Y, Bai Y, Jia X, et al. Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods. Agriculture. 2023; 13(4):895. https://doi.org/10.3390/agriculture13040895

Chicago/Turabian Style: Zhou, Lili, Chenwei Nie, Tao Su, Xiaobin Xu, Yang Song, Dameng Yin, Shuaibing Liu, Yadong Liu, Yi Bai, Xiao Jia, et al. 2023. "Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods" Agriculture 13, no. 4: 895. https://doi.org/10.3390/agriculture13040895

APA Style: Zhou, L., Nie, C., Su, T., Xu, X., Song, Y., Yin, D., Liu, S., Liu, Y., Bai, Y., Jia, X., & Jin, X. (2023). Evaluating the Canopy Chlorophyll Density of Maize at the Whole Growth Stage Based on Multi-Scale UAV Image Feature Fusion and Machine Learning Methods. Agriculture, 13(4), 895. https://doi.org/10.3390/agriculture13040895