Detection of the Corn Kernel Breakage Rate Based on an Improved Mask Region-Based Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Methods

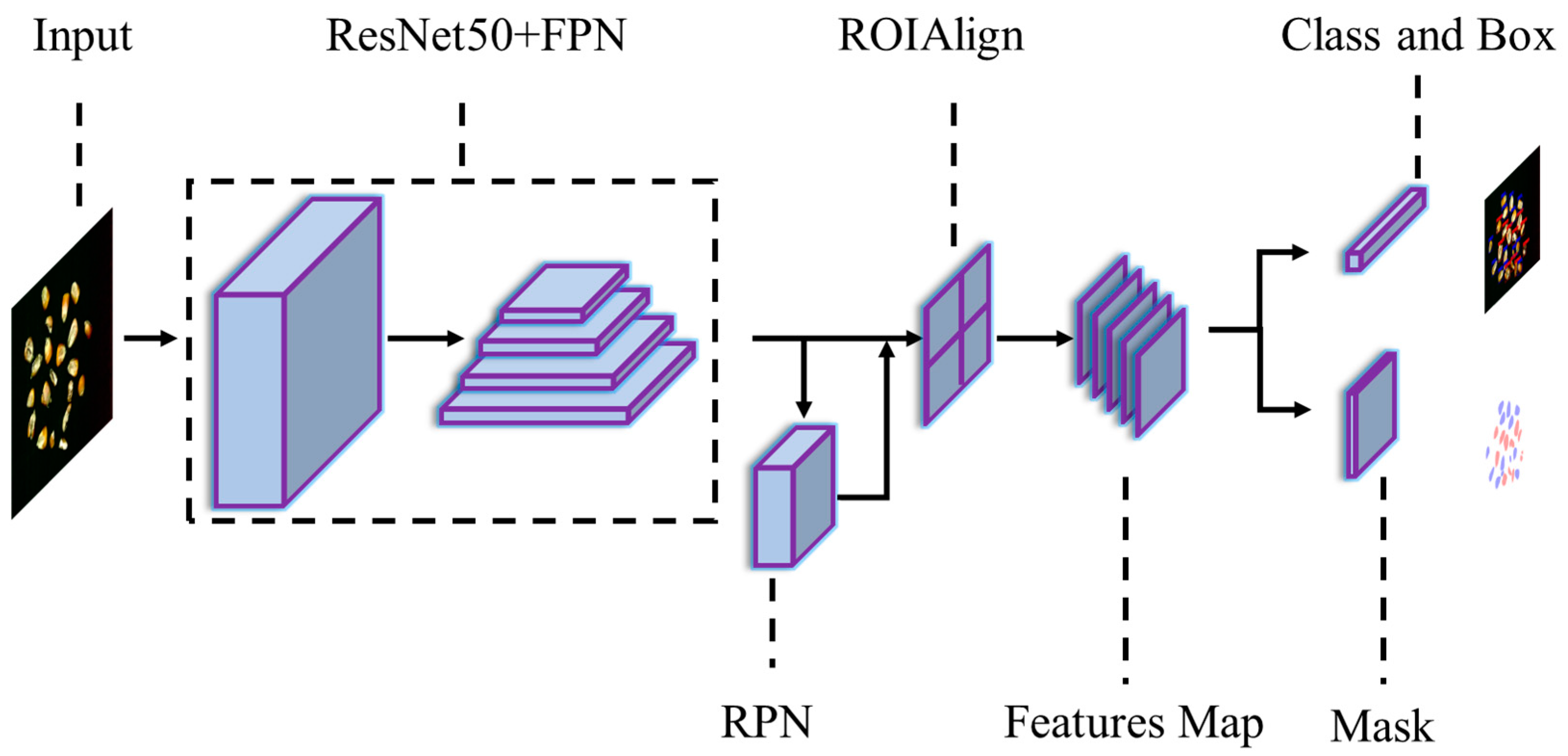

2.1. Description of the Mask RCNN

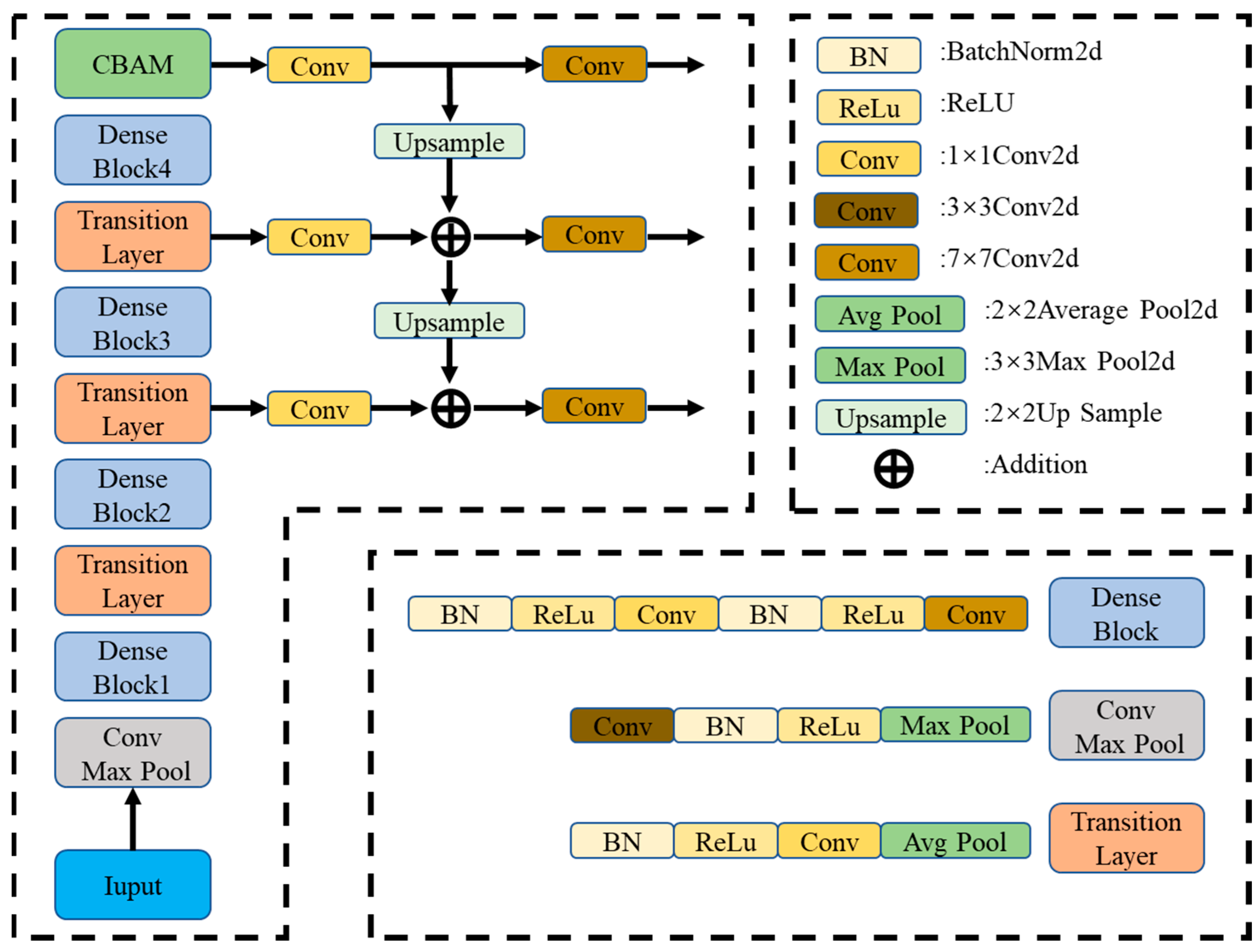

2.2. Improving the Mask RCNN

2.2.1. Replacing the Backbone Feature Extraction Network DenseNet

2.2.2. CBAM Attention Mechanism

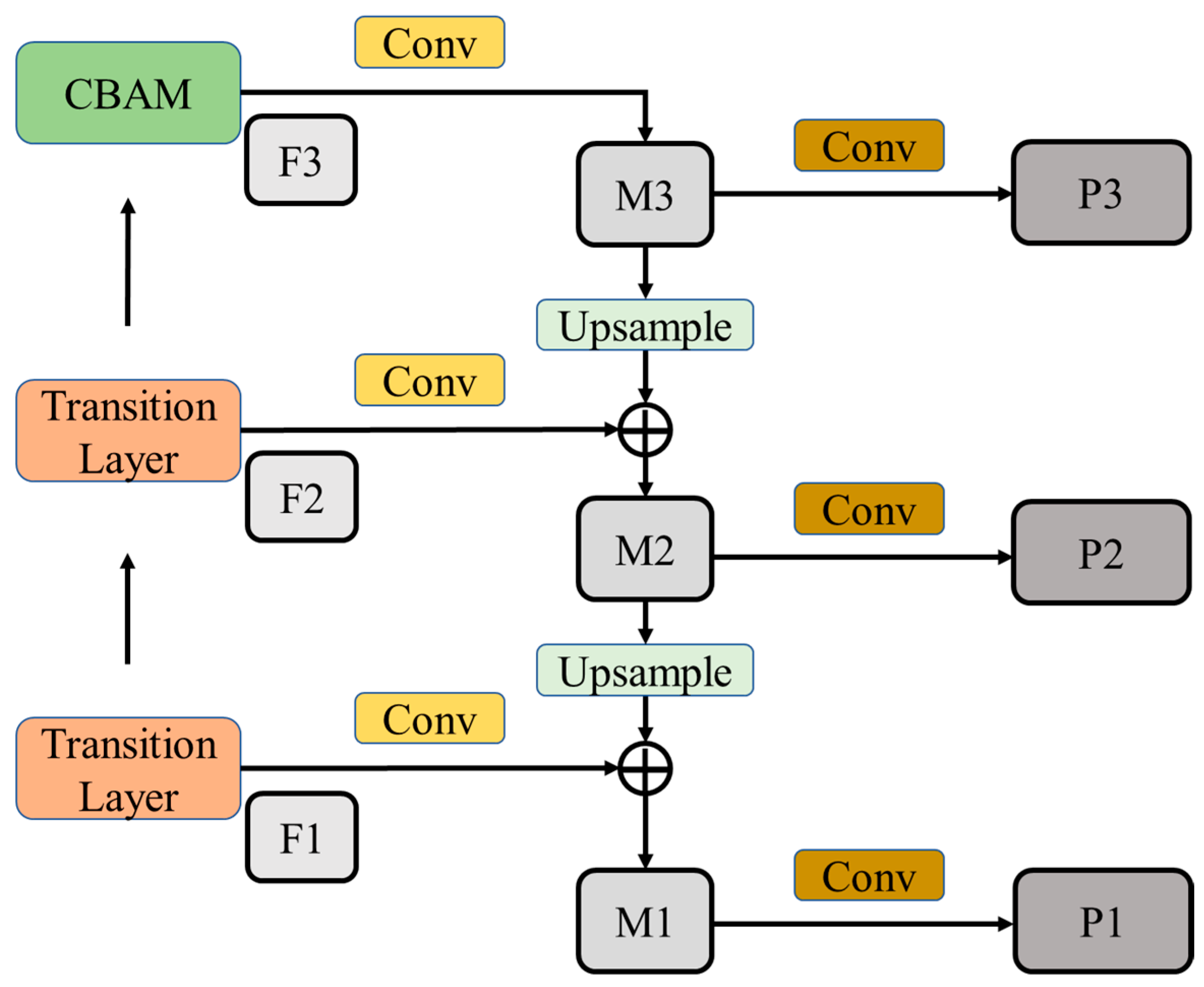

2.2.3. Multi-Scale Fusion FPN

2.2.4. Transfer Learning

2.3. Construction of a Corn Kernel Dataset and Establishment of the Pixel–Mass Relationship

2.3.1. Data Collection

2.3.2. Data Preprocessing

2.3.3. Establishment of the Pixel–Mass Relationship

2.4. Calculation Method of the Corn Kernel Breakage Rate

3. Results and Discussion

3.1. Experimental Environment and Model Training Parameter Settings

3.2. Evaluation Indicators

3.3. Model Training

- (1) The Mask RCNN model with DenseNet121 as the backbone feature extraction network achieved 97.16% and 98.18% on MAPb50 and MAPm50, respectively, with a computational cost of 2.88 GMac, 6.95 M parameters, and a feature extraction time of 170 ms;

- (2) The Mask RCNN model with the CBAM attention mechanism incorporated into the DenseNet121 network structure achieved 97.32% and 98.29% on MAPb50 and MAPm50, respectively, with a computational cost of 2.88 GMac, 7.08 M parameters, and a feature extraction time of 176 ms;

- (3) The Mask RCNN model with an FPN structure added to the DenseNet121 network structure achieved 97.28% and 98.35% on MAPb50 and MAPm50, respectively, with a computational cost of 3.09 GMac, 9.18 M parameters, and a feature extraction time of 202 ms;

- (4) The Mask RCNN model with both the CBAM attention mechanism and the FPN structure incorporated into the DenseNet121 network structure achieved 97.62% and 98.70% on MAPb50 and MAPm50, respectively, with a computational cost of 3.09 GMac, 9.31 M parameters, and a feature extraction time of 206 ms.

Compared to the ResNet50_FPN backbone feature extraction network, DenseNet121_CBAM_FPN improved MAPb50 and MAPm50 by 0.34 and 0.37 percentage points, respectively, and reduced the computational cost by 3.87 GMac, the parameter count by 17.32 M, and the feature extraction time by 22 ms. These results show that DenseNet121_CBAM_FPN, used as the backbone feature extraction network, improved the accuracy of corn kernel recognition and segmentation over the original Mask RCNN model while reducing the computational cost, parameter count, and feature extraction time, which supports the effectiveness of the improved Mask RCNN model.
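The differences quoted above can be re-derived from the reported figures. The following is a minimal sketch, not the authors' code: the dictionary `backbones` and the helper `delta` are hypothetical names, and the numbers are transcribed from the backbone comparison reported in this article.

```python
# Reported values per backbone, transcribed from the article.
# Tuple layout: (MAPb50 %, MAPm50 %, GMac, params in M, feature extraction time in ms)
backbones = {
    "ResNet50_FPN": (97.28, 98.33, 6.96, 26.63, 228),
    "DenseNet121": (97.16, 98.18, 2.88, 6.95, 170),
    "DenseNet121_CBAM": (97.32, 98.29, 2.88, 7.08, 176),
    "DenseNet121_FPN": (97.28, 98.35, 3.09, 9.18, 202),
    "DenseNet121_CBAM_FPN": (97.62, 98.70, 3.09, 9.31, 206),
}


def delta(candidate, baseline="ResNet50_FPN"):
    """Return (candidate - baseline) for each metric, rounded to 2 decimals."""
    return tuple(
        round(c - b, 2)
        for c, b in zip(backbones[candidate], backbones[baseline])
    )


if __name__ == "__main__":
    for name in backbones:
        print(name, delta(name))
```

Positive values in the first two positions indicate an accuracy gain over the baseline; negative values in the last three indicate a lower cost, fewer parameters, or a shorter feature extraction time.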

3.4. Visualization Analysis of Different Segmented Networks

3.5. Real-Time Detection of the Corn Kernel Breakage Rate

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Impurity Condition | Exposure Condition | Number of Images | Total |
|---|---|---|---|
| Minor impurity | Low exposure | 43 | 600 |
| Minor impurity | Normal exposure | 80 | |
| Minor impurity | High exposure | 55 | |
| Excess impurity | Low exposure | 37 | |
| Excess impurity | Normal exposure | 60 | |
| Excess impurity | High exposure | 25 | |

| Backbone | MAPb50 (%) | MAPm50 (%) | FLOPs (GMac) | Params (M) | Time (ms) |
|---|---|---|---|---|---|
| ResNet50_FPN | 97.28 | 98.33 | 6.96 | 26.63 | 228 |
| DenseNet121 | 97.16 | 98.18 | 2.88 | 6.95 | 170 |
| DenseNet121_CBAM | 97.32 | 98.29 | 2.88 | 7.08 | 176 |
| DenseNet121_FPN | 97.28 | 98.35 | 3.09 | 9.18 | 202 |
| DenseNet121_CBAM_FPN | 97.62 | 98.70 | 3.09 | 9.31 | 206 |

| Network Model | MAPb50 (%) | MAPm50 (%) | Time (ms) |
|---|---|---|---|
| Original Mask RCNN | 97.28 | 98.33 | 451 |
| Improved Mask RCNN | 97.62 | 98.70 | 76 |
| Yolact | 96.21 | 97.34 | 93 |
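To put the timing column in perspective, the sketch below converts the reported times into an approximate throughput and a speed-up factor. It assumes that the "Time (ms)" column is the processing time for a single image, which the table itself does not state; `times_ms`, `fps`, and `speedup` are hypothetical names.

```python
# Reported times from the network comparison table, in milliseconds.
# Assumption: each value is the time needed to process one image.
times_ms = {
    "Original Mask RCNN": 451,
    "Improved Mask RCNN": 76,
    "Yolact": 93,
}

# Approximate images per second under the per-image assumption.
fps = {name: 1000.0 / t for name, t in times_ms.items()}

# How many times faster the improved model is than the original one.
speedup = times_ms["Original Mask RCNN"] / times_ms["Improved Mask RCNN"]

if __name__ == "__main__":
    for name, value in fps.items():
        print(f"{name}: {value:.1f} images/s")
    print(f"Improved vs. original speed-up: {speedup:.2f}x")
```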

| Serial Number | Machine Vision (%) | Detection Time (ms) | Actual Calculation (%) | Calculation Time (ms) |
|---|---|---|---|---|
| 1 | 12.36 | 64 | 11.89 | 6725 |
| 2 | 8.57 | 88 | 9.29 | 8452 |
| 3 | 15.29 | 69 | 14.91 | 7684 |
| 4 | 20.16 | 79 | 19.51 | 7332 |
| 5 | 6.52 | 78 | 7.05 | 9246 |
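The agreement between the two methods in the table above can be summarized with a few lines of arithmetic. This is a minimal sketch rather than the authors' evaluation procedure: it treats the "Actual Calculation" column as the reference breakage rate (an assumption about the column's meaning), and the names `samples`, `abs_errors`, and the summary variables are hypothetical.

```python
# Rows transcribed from the table above:
# (machine vision %, detection time ms, actual calculation %, calculation time ms)
samples = [
    (12.36, 64, 11.89, 6725),
    (8.57, 88, 9.29, 8452),
    (15.29, 69, 14.91, 7684),
    (20.16, 79, 19.51, 7332),
    (6.52, 78, 7.05, 9246),
]

# Absolute difference between the two breakage rates, in percentage points.
abs_errors = [round(abs(mv - ref), 2) for mv, _, ref, _ in samples]

mean_abs_error = round(sum(abs_errors) / len(abs_errors), 2)
max_abs_error = max(abs_errors)

# Average time per sample for each method, in milliseconds.
mean_detect_ms = sum(t for _, t, _, _ in samples) / len(samples)
mean_calc_ms = sum(t for _, _, _, t in samples) / len(samples)

if __name__ == "__main__":
    print("absolute errors (pp):", abs_errors)
    print("mean / max absolute error (pp):", mean_abs_error, max_abs_error)
    print("mean detection time (ms):", mean_detect_ms)
    print("mean calculation time (ms):", mean_calc_ms)
```

For these five samples the mean detection time is about 76 ms, which matches the time reported for the improved Mask RCNN in the network comparison table.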

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Li, Z.; Yang, Z.; Zhu, C.; Ding, Y.; Li, P.; He, X. Detection of the Corn Kernel Breakage Rate Based on an Improved Mask Region-Based Convolutional Neural Network. Agriculture 2023, 13, 2257. https://doi.org/10.3390/agriculture13122257
