Yolov5s-CA: An Improved Yolov5 Based on the Attention Mechanism for Mummy Berry Disease Detection

Abstract

1. Introduction

- The coordinate attention (CA) module is integrated into the Yolov5s backbone. This lets the network up-weight key features and attend to disease-related visual features, improving disease detection across spatial scales.

- The Generalized Intersection over Union (GIoU) loss is replaced by the Complete Intersection over Union (CIoU) loss to improve bounding box regression and localization when identifying diseased plant parts against complex backgrounds.

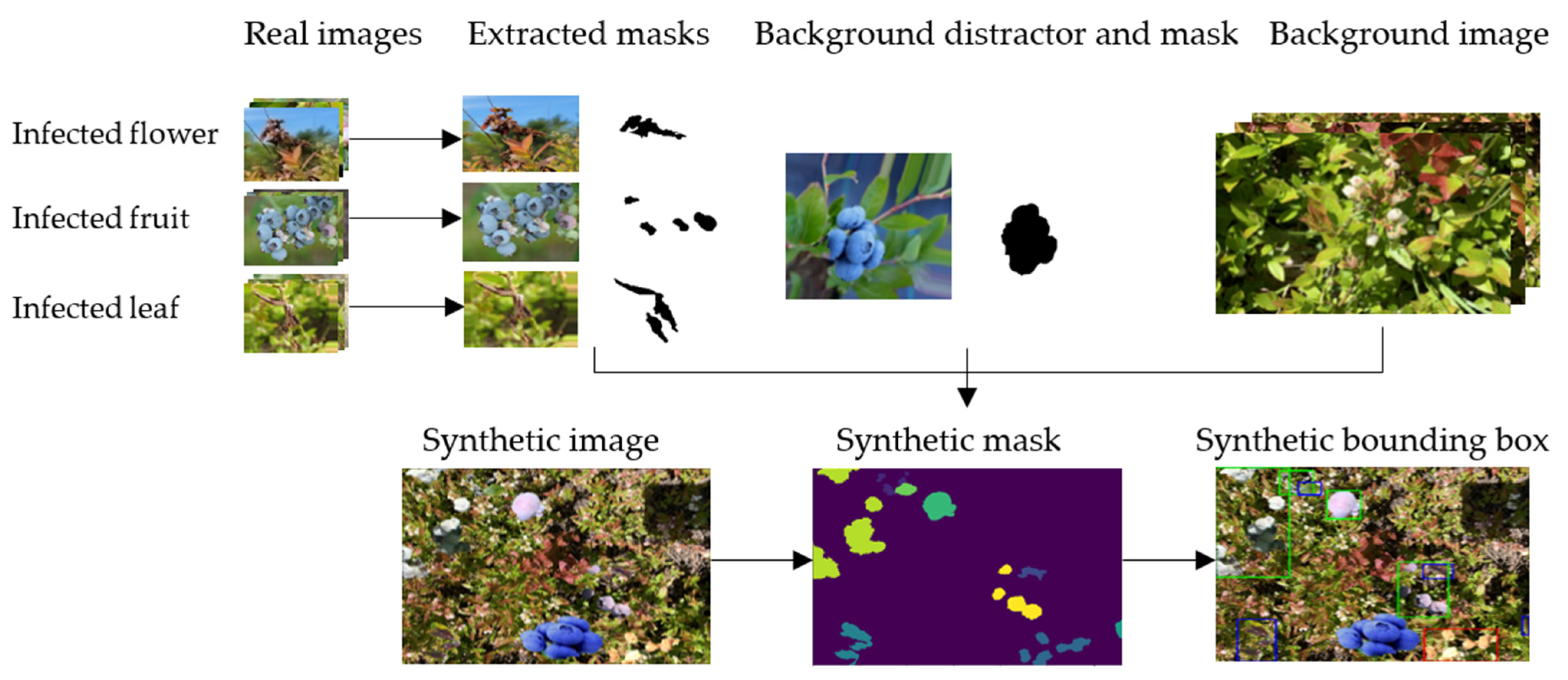

- A synthetic dataset generation method is presented that reduces the effort of collecting and annotating large datasets and improves identification performance by artificially increasing the features available for deep model training.
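
To make the change of loss function in the second contribution concrete, the following is a minimal sketch of the two losses in plain Python. It follows the commonly published definitions of GIoU and CIoU rather than the authors' training code; the function names (`_overlap_terms`, `giou_loss`, `ciou_loss`) and the `(x1, y1, x2, y2)` box format are assumptions made for illustration.

```python
import math


def _overlap_terms(a, b):
    """Return IoU, union area, and enclosing-box width/height.

    Boxes are (x1, y1, x2, y2) with x1 < x2 and y1 < y2 (assumed format).
    """
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    # Smallest axis-aligned box that encloses both boxes.
    enc_w = max(ax2, bx2) - min(ax1, bx1)
    enc_h = max(ay2, by2) - min(ay1, by1)
    return inter / union, union, enc_w, enc_h


def giou_loss(pred, gt):
    """GIoU loss: 1 - (IoU - empty fraction of the enclosing box)."""
    iou, union, enc_w, enc_h = _overlap_terms(pred, gt)
    enc_area = enc_w * enc_h
    return 1.0 - (iou - (enc_area - union) / enc_area)


def ciou_loss(pred, gt):
    """CIoU loss: 1 - IoU + center-distance term + aspect-ratio term."""
    iou, _, enc_w, enc_h = _overlap_terms(pred, gt)
    # Squared distance between box centers, normalized by the squared
    # diagonal of the enclosing box.
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    rho2 = (pcx - gcx) ** 2 + (pcy - gcy) ** 2
    diag2 = enc_w ** 2 + enc_h ** 2
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2
    alpha = v / (1.0 - iou + v) if v > 0 else 0.0
    return 1.0 - iou + rho2 / diag2 + alpha * v


if __name__ == "__main__":
    pred = (0.0, 0.0, 1.0, 1.0)
    gt = (2.0, 0.0, 3.0, 1.0)  # same shape, shifted so the boxes do not overlap
    print("GIoU loss:", giou_loss(pred, gt))
    print("CIoU loss:", ciou_loss(pred, gt))
```

Unlike GIoU, the CIoU terms penalize center offset and aspect-ratio mismatch directly, so the loss still distinguishes between candidate boxes that have the same overlap with the ground truth.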

2. Related Work

2.1. Data Augmentation

2.2. Deep Learning for Plant Disease Detection

3. Materials and Methods

3.1. Data Source

3.2. Synthetic Data Generation

3.3. Coordinate Attention Module

3.4. Yolov5 Method

3.5. Improvement of Yolov5s-CA Network Model

3.6. Model Evaluation

4. Results

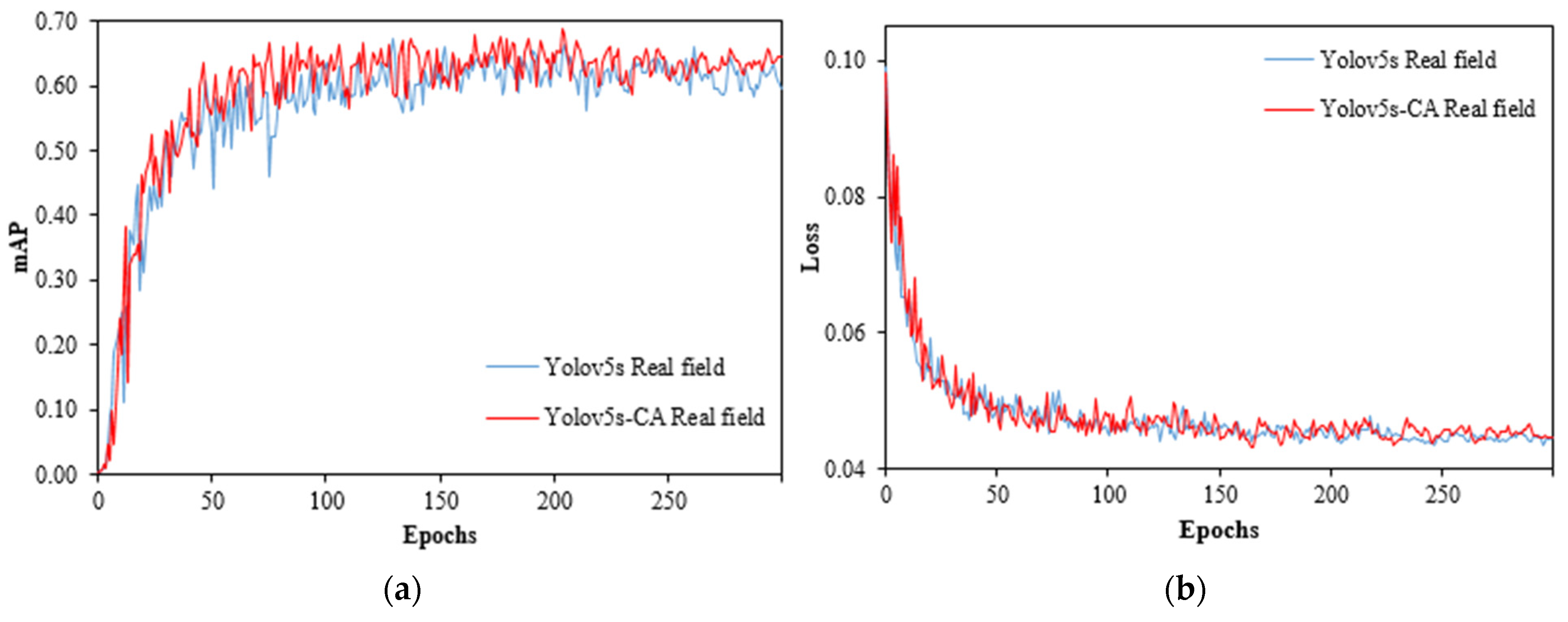

4.1. Comparison of Disease Detection Models Trained Only on the Field-Collected Dataset

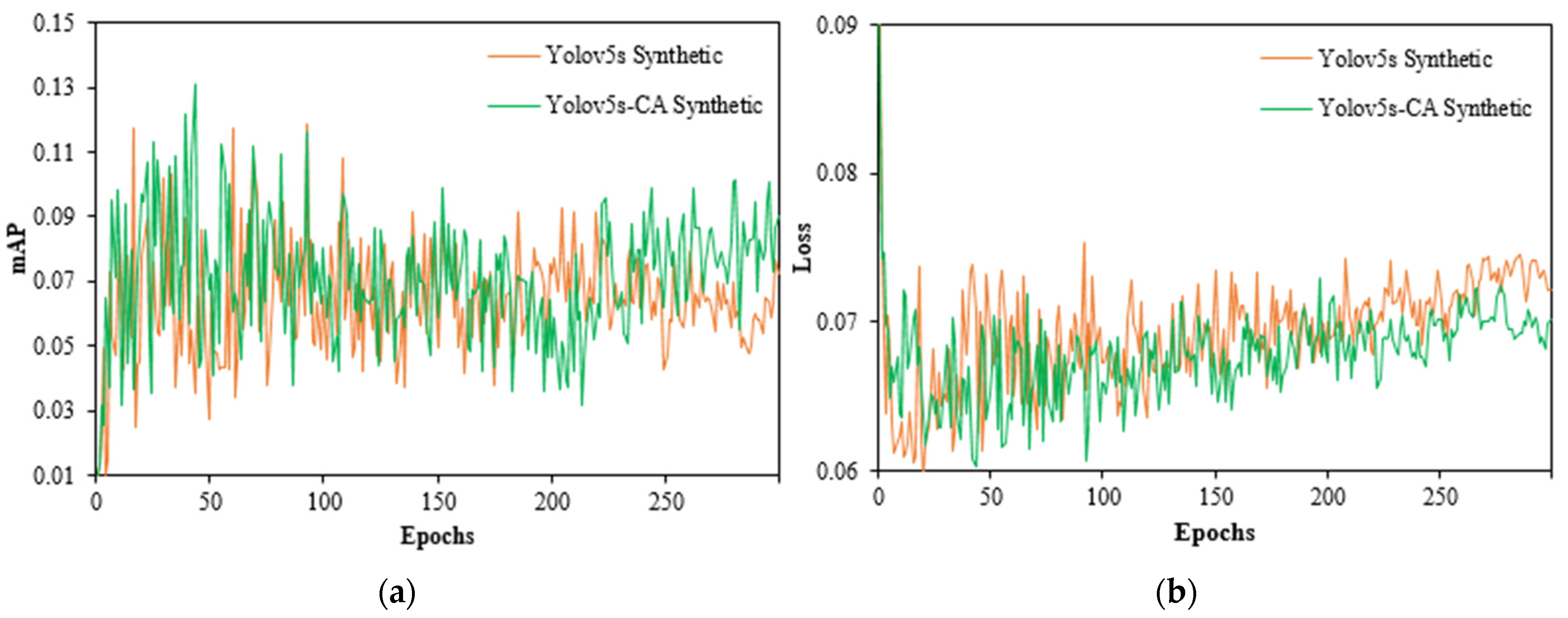

4.2. Comparison of Disease Detection Models Trained Only on the Synthetic Dataset

4.3. Comparison of Disease Detection Models Trained on a Combination of Synthetic and Field-Collected Datasets

4.4. Comparison of Detection Speed of the Models

4.5. Comparison of Detection at Different Spatial Scales

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Dataset | Train | Validation | Test |
|---|---|---|---|
| Real field | 367 | 46 | 46 |
| Synthetic | 1661 | - | - |


| Models | Precision (%) | Recall (%) | mAP@0.5 (%) |
|---|---|---|---|
| Yolov5s | 67.5 | 60.8 | 64.7 |
| Yolov5s-CA | 70.2 ¹ | 61.3 ¹ | 65.8 ¹ |

| Models | Precision (%) | Recall (%) | mAP@0.5 (%) |
|---|---|---|---|
| Yolov5s | 30 ¹ | 14.9 | 11.7 ¹ |
| Yolov5s-CA | 24.1 | 19.8 ¹ | 11.3 |

| Dataset Size | Models | Precision (%) | Recall (%) | mAP@0.5 (%) |
|---|---|---|---|---|
| Synthetic + Real field 10% | Yolov5s | 37.2 | 33 | 27.9 |
| | Yolov5s-CA | 55.8 | 33 | 35 |
| Synthetic + Real field 25% | Yolov5s | 40.4 | 40.7 | 35.2 |
| | Yolov5s-CA | 45.9 | 43.8 | 41.2 |
| Synthetic + Real field 40% | Yolov5s | 47.6 | 43.5 | 42.4 |
| | Yolov5s-CA | 62 | 49.2 | 48.8 |
| Synthetic + Real field 55% | Yolov5s | 62.6 | 47.3 | 52.4 |
| | Yolov5s-CA | 69.6 | 48.9 | 54.2 |
| Synthetic + Real field 70% | Yolov5s | 62.6 | 55.9 | 61.1 |
| | Yolov5s-CA | 71.4 | 59.2 | 66.3 |
| Synthetic + Real field 100% | Yolov5s | 71.6 | 54 | 62.3 |
| | Yolov5s-CA | 75.2 ¹ | 61.2 ¹ | 68.2 ¹ |

| Models | Datasets | Frames per Second (FPS) | Inference Time (ms) | Parameters | Model Size (MB) |
|---|---|---|---|---|---|
| Yolov5s | Real field | 109.89 | 9.1 | 7,027,720 | 13.7 |
| | Synthetic | 93.46 | 10.7 | | |
| | Mixed | 95.24 | 10.5 | | |
| Yolov5s-CA | Real field | 87.72 | 11.4 | 7,063,400 | 13.8 |
| | Synthetic | 81.30 | 12.3 | | |
| | Mixed | 84.03 | 11.9 | | |
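
The FPS and inference-time columns in the table above are consistent with FPS = 1000 / inference time in milliseconds. The short check below illustrates that relation using the reported values; the helper name `fps_from_latency_ms` and the dictionary layout are hypothetical, and the parameter overhead is computed from the two reported parameter counts rather than quoted from the paper.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second implied by a per-image inference time in ms."""
    return 1000.0 / latency_ms


# (model, dataset) -> (reported FPS, reported inference time in ms)
reported = {
    ("Yolov5s", "Real field"): (109.89, 9.1),
    ("Yolov5s", "Synthetic"): (93.46, 10.7),
    ("Yolov5s", "Mixed"): (95.24, 10.5),
    ("Yolov5s-CA", "Real field"): (87.72, 11.4),
    ("Yolov5s-CA", "Synthetic"): (81.30, 12.3),
    ("Yolov5s-CA", "Mixed"): (84.03, 11.9),
}

for (model, dataset), (fps, latency_ms) in reported.items():
    implied = fps_from_latency_ms(latency_ms)
    print(f"{model:11s} {dataset:10s} reported={fps:7.2f} implied={implied:7.2f}")

# Relative growth in parameter count from adding the CA module.
base_params, ca_params = 7_027_720, 7_063_400
overhead_pct = 100.0 * (ca_params - base_params) / base_params
print(f"Parameter overhead of Yolov5s-CA: {overhead_pct:.2f}%")
```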

| Models | Metric | Spatial Plant Scale: Plant Part | Spatial Plant Scale: Plant Stem | Spatial Plant Scale: Clone ¹ | Total |
|---|---|---|---|---|---|
| Yolov5s | Number of objects detected correctly | 7 | 14 | 20 | 41 |
| | Number of annotations | 9 | 21 | 48 | 78 |
| | Recall rate (%) | 77.78 | 66.67 | 41.67 | 52.56 |
| | Precision rate (%) | 87.50 | 93.33 | 83.33 | 87.23 |
| Yolov5s-CA | Number of objects detected correctly | 7 | 17 | 28 | 52 |
| | Number of annotations | 9 | 21 | 48 | 78 |
| | Recall rate (%) | 77.78 | 80.95 | 58.33 | 66.67 |
| | Precision rate (%) | 100.00 | 100.00 | 93.33 | 96.30 |
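
The recall rates in the table above follow from the two count rows as recall = correctly detected objects / annotations. The sketch below reproduces them from the reported counts; the variable names and the helper `recall_pct` are hypothetical. Precision is not recomputed because the total number of detections per scale is not listed in the table.

```python
def recall_pct(correct: int, annotated: int) -> float:
    """Recall as a percentage: correctly detected objects over annotations."""
    return 100.0 * correct / annotated


scales = ["Plant Part", "Plant Stem", "Clone", "Total"]
annotations = [9, 21, 48, 78]
correct = {
    "Yolov5s": [7, 14, 20, 41],
    "Yolov5s-CA": [7, 17, 28, 52],
}

recalls = {
    model: [round(recall_pct(c, n), 2) for c, n in zip(counts, annotations)]
    for model, counts in correct.items()
}

for model, values in recalls.items():
    row = ", ".join(f"{s}: {v:.2f}%" for s, v in zip(scales, values))
    print(f"{model}: {row}")
```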

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Obsie, E.Y.; Qu, H.; Zhang, Y.-J.; Annis, S.; Drummond, F. Yolov5s-CA: An Improved Yolov5 Based on the Attention Mechanism for Mummy Berry Disease Detection. Agriculture 2023, 13, 78. https://doi.org/10.3390/agriculture13010078
