Codling Moth Monitoring with Camera-Equipped Automated Traps: A Review

Abstract

1. Introduction

2. Materials and Methods

3. Results

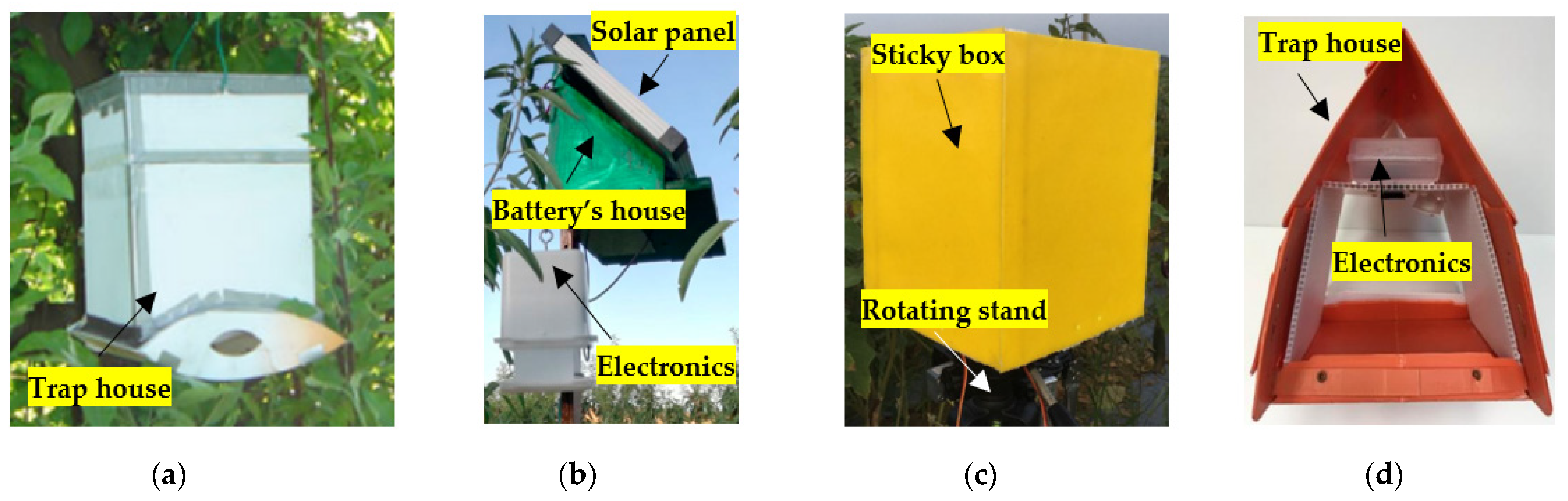

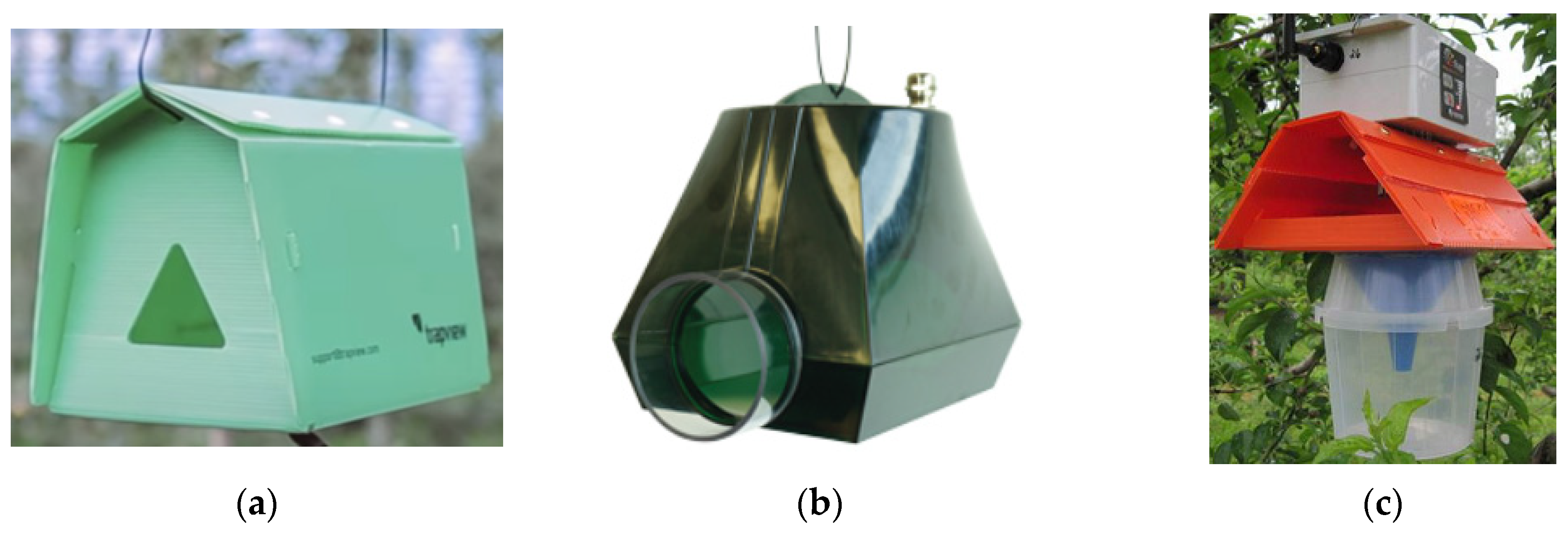

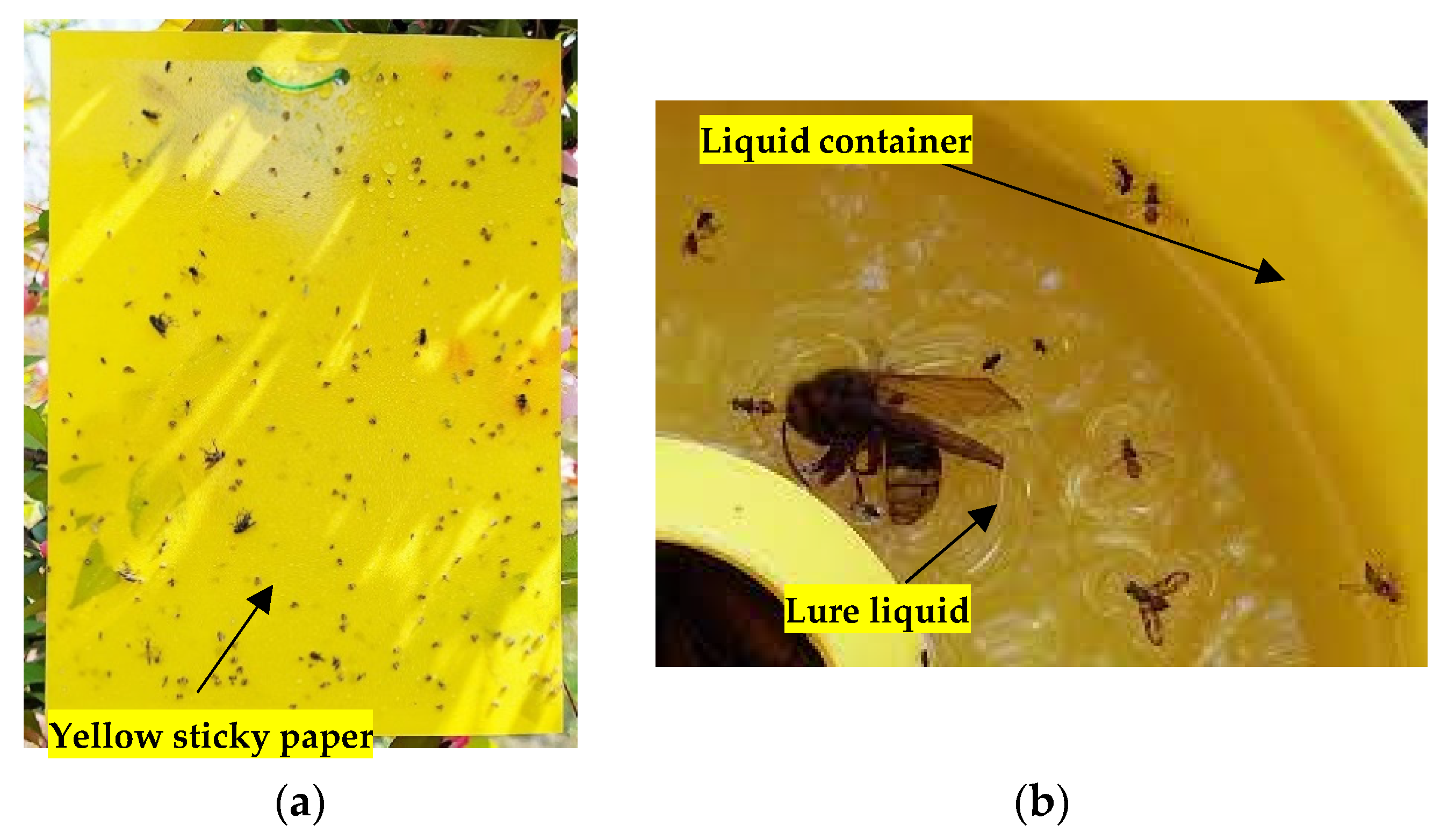

3.1. Commercial Camera-Equipped Traps

3.2. Earlier Prototype Traps

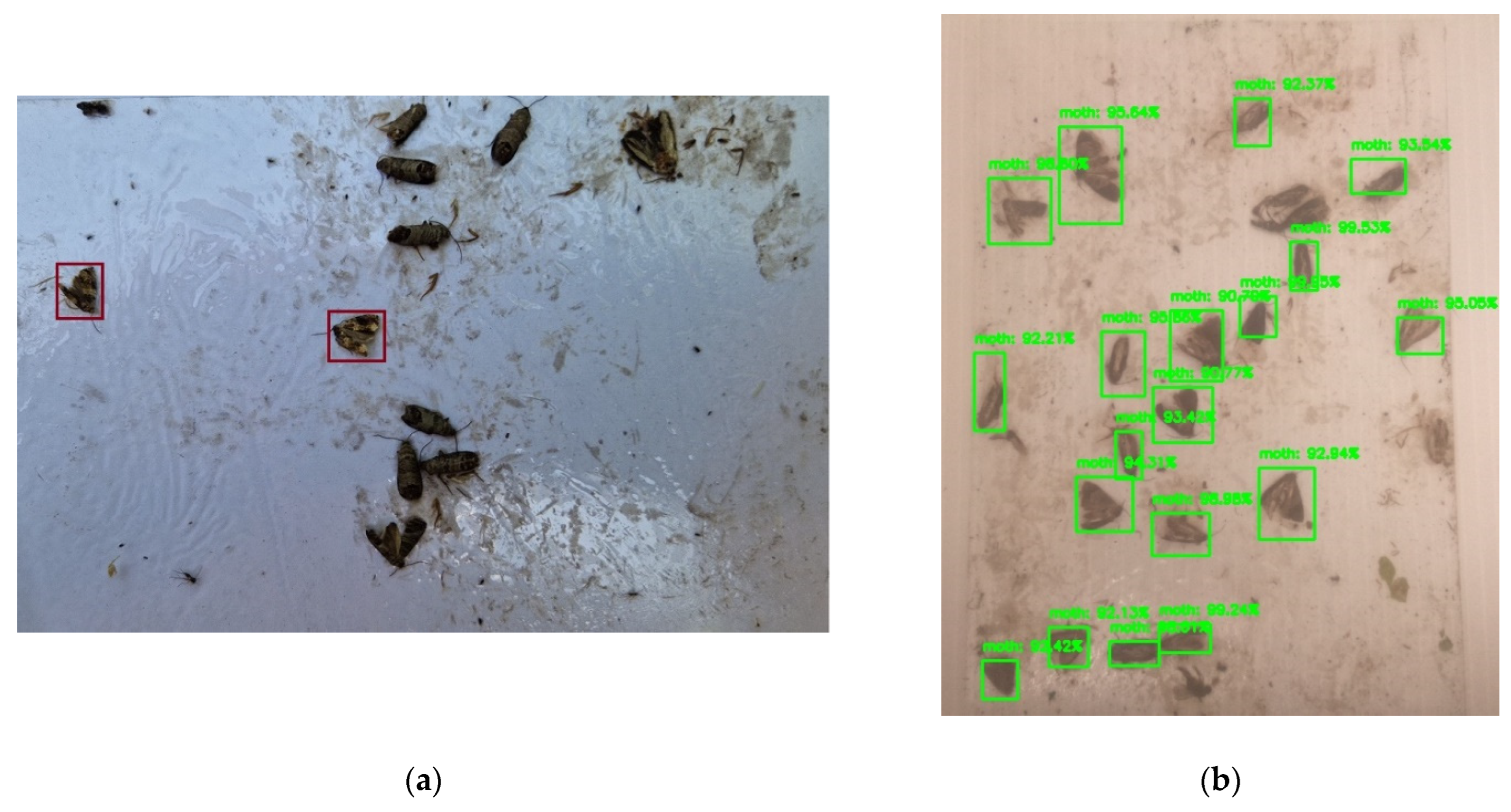

3.3. Current Prototype Traps

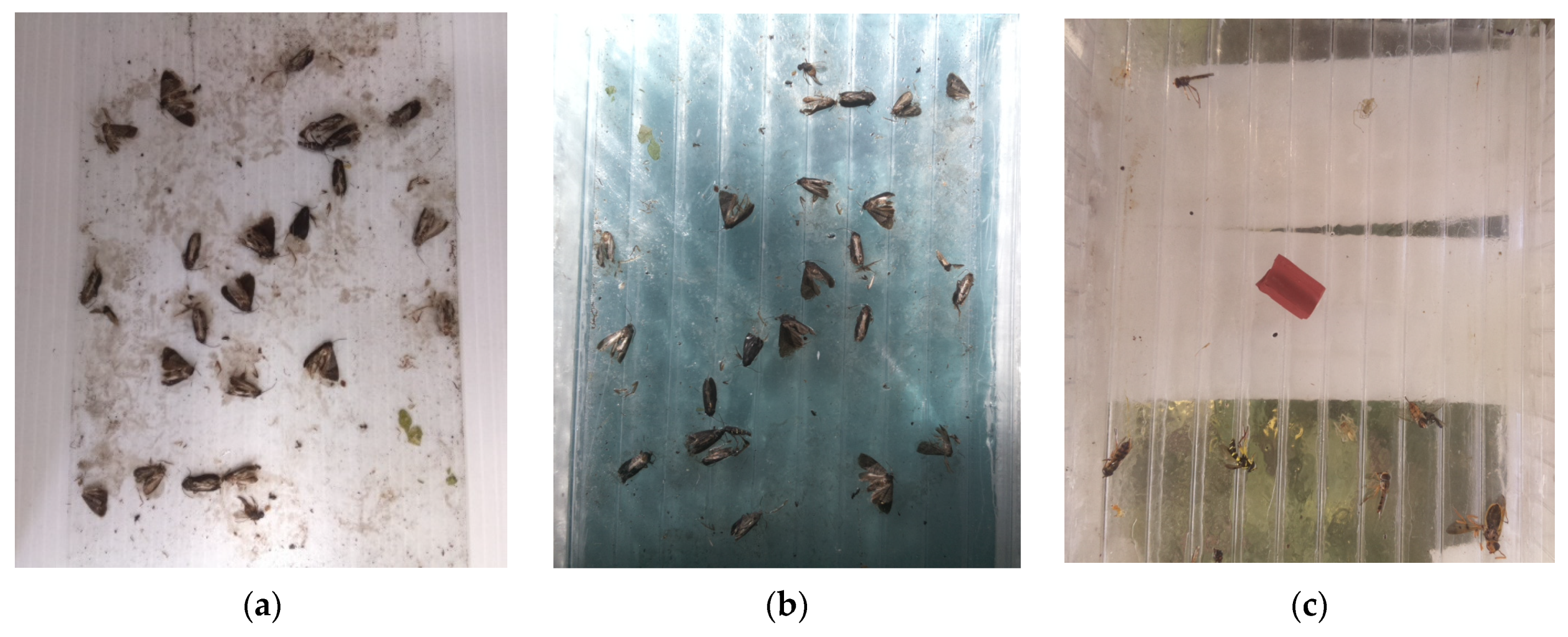

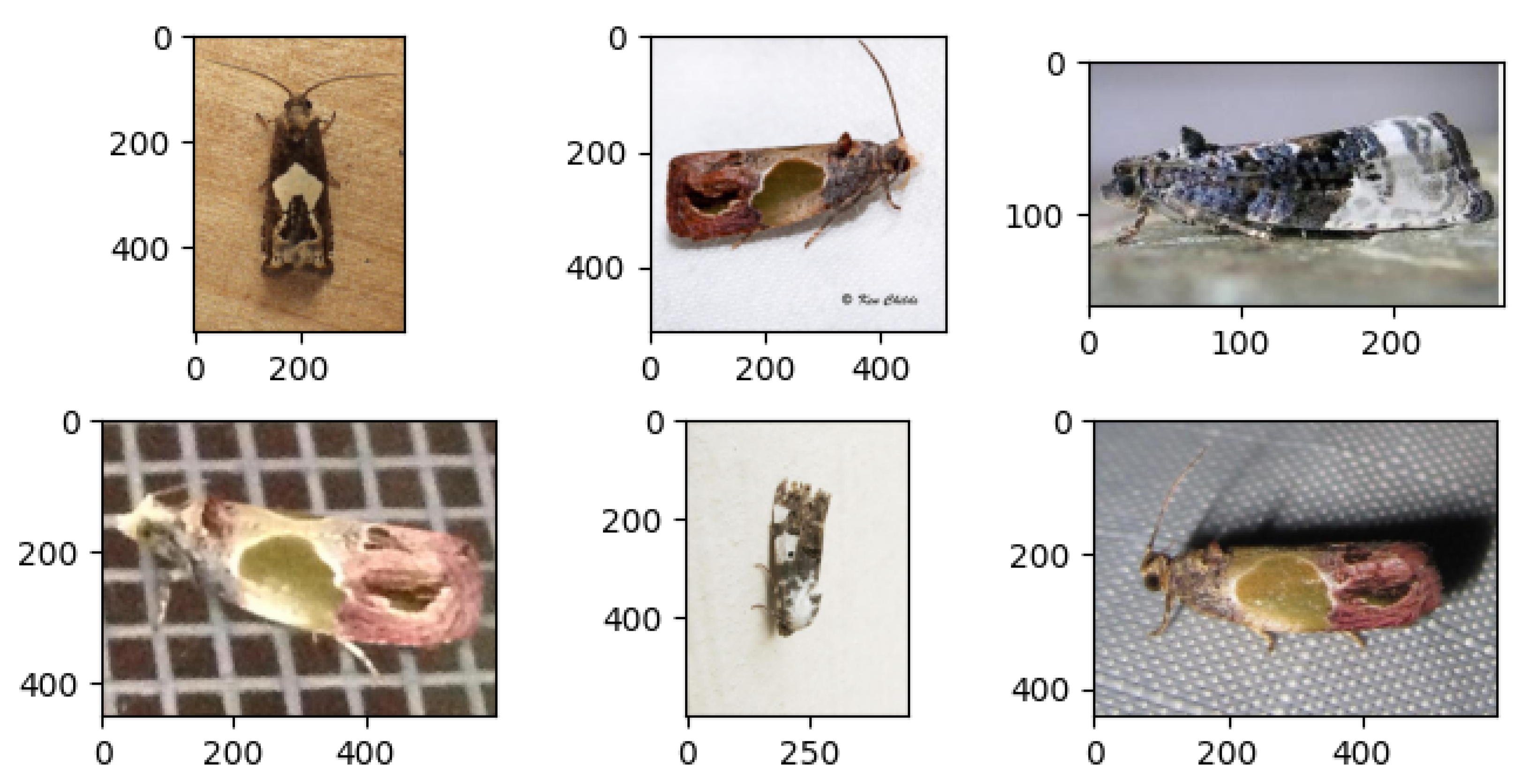

3.4. Databases and Data Generation

3.5. Evaluation Metrics

3.6. Insect Counting Methods

4. Discussion

4.1. Discussion on Camera-Equipped Traps

4.2. Discussion on Moth Counting Methods

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

1. Cirjak, D.; Miklecic, I.; Lemic, D.; Kos, T.; Zivkovic, P.I. Automatic pest monitoring systems in apple production under changing climate conditions. Horticulturae 2022, 8, 520.

2. Witzgall, P.; Stelinski, L.; Gut, L.; Thomas, D. Codling moth management and chemical ecology. Annu. Rev. Entomol. 2008, 53, 503–522.

3. Son, A.R.; Suh, S.J.; Park, Y.M. Notes on insects captured in codling moth (Lepidoptera: Tortricidae) surveillance traps in South Korea over the last eight years. Bull. OEPP/EPPO 2018, 48, 578–582.

4. Jiang, D.; Chen, S.; Hao, M.; Fu, J.; Ding, F. Mapping the potential global codling moth (Cydia pomonella L.) distribution based on a machine learning method. Sci. Rep. 2018, 8, 13093.

5. Horner, R.M.; Lo, P.L.; Rogers, D.J.; Walker, J.T.S.; Suckling, D.M. Combined effect of mating disruption, insecticides, and the sterile insect technique on Cydia pomonella in New Zealand. Insects 2020, 11, 837.

6. Men, Q.L.; Chen, M.H.; Zhang, Y.L.; Feng, J.N. Genetic structure and diversity of a newly invasive species, the codling moth, Cydia pomonella (L.) (Lepidoptera: Tortricidae) in China. Biol. Invasions 2013, 15, 447–458.

7. Beers, E.H.; Horton, D.R.; Miliczky, E. Pesticides used against Cydia pomonella disrupt biological control of secondary pests of apple. Biol. Control 2016, 102, 35–43.

8. Hoye, T.T.; Arje, J.; Bjerge, K.; Hansen, O.L.P.; Iosifidis, A.; Leese, F.; Mann, H.M.R.; Meissner, K.; Melvad, C.; Raitoharju, J. Deep learning and computer vision will transform entomology. Proc. Natl. Acad. Sci. USA 2021, 118, e2002545117.

9. Higbee, B.S.; Calkins, C.O.; Temple, C.A. Overwintering of codling moth (Lepidoptera: Tortricidae) larvae in apple harvest bins and subsequent moth emergence. J. Econ. Entomol. 2001, 94, 1511–1517.

10. Lima, M.C.F.; Leandro, M.E.D.A.; Valero, C.; Coronel, L.C.P.; Bazzo, C.O.G. Automatic detection and monitoring of insect pests—A review. Agriculture 2020, 10, 161.

11. Muppala, C.; Guruviah, V. Machine vision detection of pests, diseases and weeds: A review. J. Phytol. 2019, 12, 9–19.

12. Preti, M.; Moretti, C.; Scarton, G.; Giannotta, G.; Angeli, S. Developing a smart trap prototype equipped with camera for tortricid pests remote monitoring. Bull. Insectol. 2021, 74, 147–160.

13. Qing, Y.A.; Jin, F.E.; Jian, T.A.; Xu, W.G.; Zhu, X.H.; Yang, B.J.; Jun, L.Ü.; Xie, Y.Z.; Bo, Y.A.; Wu, S.Z.; et al. Development of an automatic monitoring system for rice light-trap pests based on machine vision. J. Integr. Agric. 2020, 19, 2500–2513.

14. Tetila, E.C.; Machado, B.B.; Astolfi, G.; Belete, N.A.S.; Amori, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

15. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

16. Hong, S.J.; Kim, S.Y.; Kim, E.; Lee, C.H.; Lee, J.S.; Lee, D.S.; Bang, J.; Kim, G. Moth detection from pheromone trap images using deep learning object detectors. Agriculture 2020, 10, 170.

17. Mamdouh, N.; Khattab, A. YOLO-based deep learning framework for olive fruit fly detection and counting. IEEE Access 2021, 9, 84252–84262.

18. Preti, M.; Verheggen, F.; Angeli, S. Insect pest monitoring with camera-equipped traps: Strengths and limitations. J. Pest Sci. 2021, 94, 203–217.

19. Parsons, R.; Ross, R.; Robert, K. A survey on wireless sensor network technologies in pest management applications. SN Appl. Sci. 2020, 2, 28.

20. Loughlin, D. Developments in the world of insect detection. Int. Pest Control 2013, 55, 88–90.

21. Schrader, M.J.; Smytheman, P.; Beers, E.H.; Khot, L.R. An open-source low-cost imaging system plug-in for pheromone traps aiding remote insect pest population monitoring in fruit crops. Machines 2022, 10, 52.

22. Guarnieri, A.; Maini, S.; Molari, G.; Rondelli, V. Automatic trap for moth detection in integrated pest management. Bull. Insectol. 2011, 64, 247–251.

23. Brunelli, D.; Albanese, A.; d’Acundo, D.; Nardello, M. Energy neutral machine learning based IoT device for pest detection in precision agriculture. IEEE Internet Things Mag. 2019, 2, 10–13.

24. Segella, A.; Fiacco, G.; Tramarin, L.; Nardello, M.; Brunelli, D. Neural networks for pest detection in precision agriculture. In Proceedings of the 2020 IEEE International Workshop on Metrology for Agriculture and Forestry, Trento, Italy, 4–6 November 2020; pp. 7–12.

25. Brunelli, D.; Polonelli, T.; Benini, L. Ultra-low energy pest detection for smart agriculture. In Proceedings of the 2020 IEEE Sensors, Rotterdam, The Netherlands, 25–28 October 2020; pp. 1–4.

26. Perez-Aparicio, A.; Llorens, J.; Rosello-Polo, J.R.; Marti, J.; Gemeno, C. A cheap electronic sensor automated trap for monitoring the flight activity period of moths. Eur. J. Entomol. 2021, 118, 315–321.

27. Hadi, M.K.; Kassim, M.S.M.; Wayayok, A. Development of an automated multidirectional pest sampling detection system using motorized sticky traps. IEEE Access 2021, 9, 67391–67404.

28. Suto, J. A novel plug-in board for remote insect monitoring. Agriculture 2022, in press.

29. Xie, C.; Zhang, J.; Rui, L.; Li, J.; Hong, P.; Xia, J.; Chen, P. Automatic classification for field crop insects via multiple-task sparse representation and multiple-kernel learning. Comput. Electron. Agric. 2015, 119, 123–132.

30. Wang, J.; Lin, C.; Ji, L.; Liang, A. A new automatic identification system of insect images at the order level. Knowl. Based Syst. 2012, 33, 102–110.

31. Wen, C.; Guyer, D. Image-based orchard insect automated identification and classification method. Comput. Electron. Agric. 2012, 89, 110–115.

32. Martineau, M.; Conte, D.; Raveaux, R.; Arnault, I.; Munier, D.; Venturini, G. A survey on image-based insect classification. Pattern Recognit. 2017, 65, 273–284.

33. Kalamatianos, R.; Karydis, I.; Doukakis, D.; Avlonitis, M. DIRT: The Dacus image recognition toolkit. J. Imaging 2018, 4, 129.

34. Xie, C.; Wang, R.; Zhang, J.; Chen, P.; Dong, W.; Li, R.; Chen, T.; Chen, H. Multi-level learning features for automatic classification of field crop pests. Comput. Electron. Agric. 2018, 152, 233–241.

35. Kasinathan, T.; Uyyala, S.R. Machine learning ensemble with image processing for pest identification and classification in field crops. Neural Comput. Appl. 2021, 33, 7491–7504.

36. Thenmozhi, K.; Reddy, U.S. Crop pest classification based on deep convolutional neural network and transfer learning. Comput. Electron. Agric. 2019, 164, 104906.

37. Wu, X.; Zhang, C.; Lai, Y.K.; Cheng, M.M.; Yang, J. IP102: A large scale benchmark dataset for insect pest recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8787–8796.

38. Suto, J. Embedded system-based sticky paper trap with deep learning-based insect-counting algorithm. Electronics 2021, 10, 1754.

39. Bjerge, K.; Nielsen, J.B.; Sepstrup, M.V.; Helsing-Nielsen, F.; Hoye, T.T. An automated light trap to monitor moth (Lepidoptera) using computer vision-based tracking and deep learning. Sensors 2021, 21, 343.

40. Ding, W.; Taylor, G. Automatic moth detection from trap images for pest management. Comput. Electron. Agric. 2016, 123, 17–28.

41. Chen, Y.S.; Hsu, C.S.; Lo, C.L. An entire-and-partial feature transfer learning approach for detecting the frequency of pest occurrence. IEEE Access 2020, 8, 92490–92502.

42. Sun, Y.; Liu, X.; Yuan, M.; Ren, L.; Wang, J.; Chen, Z. Automatic in-trap pest detection using deep learning for pheromone-based Dendroctonus valens monitoring. Biosyst. Eng. 2018, 176, 140–150.

43. Zhong, Y.; Gao, J.; Lei, Q.; Zhou, Y. A vision-based counting and recognition system for flying insects in intelligent agriculture. Sensors 2018, 18, 1489.

44. Rustia, D.J.A.; Lin, C.E.; Chung, J.Y.; Zhuang, Y.J.; Hsu, J.C.; Lin, T.T. Application of image and environmental sensor network for automated greenhouse insect pest monitoring. J. Asia Pac. Entomol. 2020, 23, 17–28.

45. Deng, L.; Wang, Y.; Han, Z.; Yu, R. Research on insect pest image detection and recognition based on bio-inspired method. Biosyst. Eng. 2018, 169, 139–148.

46. Yalcin, H. Vision based automatic inspection of insects in pheromone traps. In Proceedings of the 2015 Fourth International Conference on Agro-Geoinformatics, Istanbul, Turkey, 20–24 July 2015; pp. 333–338.

47. Dawei, W.; Limiao, D.; Jiangong, N.; Jiyue, G.; Hongfei, Z.; Zhongzhi, H. Recognition pest by image-based transfer learning. J. Sci. Food Agric. 2019, 99, 4524–4531.

48. Nanni, L.; Maguolo, G.; Pancino, F. Insect pest image detection and recognition based on bio-inspired methods. Ecol. Inform. 2020, 57, 101089.

49. Xia, D.; Chen, P.; Wang, B.; Zhang, J.; Xie, C. Insect detection and classification based on an improved convolutional neural network. Sensors 2018, 18, 4169.

50. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

51. Zhao, Z.Q.; Zheng, P.; Xu, S.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

52. Arel, I.; Rose, D.C.; Karnowski, T.P. Deep machine learning—A new frontier in artificial intelligence research. IEEE Comput. Intell. Mag. 2010, 5, 13–18.

53. Suto, J.; Oniga, S. Efficiency investigation from shallow to deep neural network techniques in human activity recognition. Cogn. Syst. Res. 2019, 54, 37–49.

54. Saradopoulos, I.; Potamitis, I.; Ntalampiras, S.; Konstantaras, A.I. Edge computing for vision-based, urban-insects traps in the context of smart cities. Sensors 2022, 22, 2006.

55. Bakkay, M.C.; Chambon, S.; Rashwan, H.A.; Lubat, C.; Barsotti, S. Automatic detection of individual and touching moths from trap images by combining contour-based and region-based segmentation. IET Comput. Vis. 2018, 12, 138–145.

56. Rustia, D.J.A.; Lin, C.E.; Chung, J.Y.; Lin, T.T. An object classifier using support vector machines for real-time insect pest counting. In Proceedings of the 2017 Conference on BioMechatronics and Agricultural Machinery Engineering, Taipei, Taiwan, 20–22 September 2017; pp. 275–278.

57. Rong, M.; Wang, Z.; Ban, B.; Gou, X. Pest identification and counting of yellow plate in field based on improved Mask R-CNN. Discret. Dyn. Nat. Soc. 2022, 2022, 1913577.

58. Jolles, J.W. Broad-scale applications of the Raspberry Pi: A review and guide for biologists. Methods Ecol. Evol. 2021, 12, 1562–1579.

59. Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A review of deep learning in multiscale agricultural sensing. Remote Sens. 2022, 14, 559.

60. Alibabaei, K.; Gaspar, P.D.; Lima, T.M.; Campos, R.M.; Girao, I.; Monteiro, J.; Lopes, C.M. A review of the challenges of using deep learning algorithms to supply decision-making in agricultural activities. Remote Sens. 2022, 14, 638.

61. Preti, M.; Favaro, R.; Knight, A.L.; Angeli, S. Remote monitoring of Cydia pomonella adults among an assemblage of nontargets in sex pheromone-kairomone-baited smart traps. Pest Manag. Sci. 2021, 77, 4084–4090.

| Trap Type | Controllable Unit | Battery Capacity | Power Consumption | Solar Charger | Camera | Communication | Insect Counting | Cost/Unit |
|---|---|---|---|---|---|---|---|---|
| Trapview | - | 2 × 2.2 Ah | - | Yes | 4 × 5 MP | Mobile network | Server side | - |
| iSCOUT | - | 12 Ah | - | Yes | 10 MP | Mobile network | Server side | - |
| [12] | ESP 32 | 3.5 Ah | 1.5 W (active state) | Yes | 5 MP | Mobile network | Server side | EUR 375 |
| [21] | Arducam IoTai | 0.35 Ah | - | No | 2 MP | Not used | Not applied | USD 33 |
| [22] | S60 phone | 4 × 4.8 Ah | - | No | 3 MP | Mobile network | Not applied | - |
| [23] | Pi 3 + Movidius stick | 9 Ah | - | No | 8 MP | LoRa | In-trap | - |
| [24] | Pi 3 + Movidius stick | 1.8 Ah | 0.054 W (average) | Yes | - | LoRa | In-trap | - |
| [25] | GAP8 SoC | - | 30 µW (sleep mode) | No | >1 MP | LoRa | In-trap | - |
| [26] | Pi Zero W | 7 Ah | - | Yes | 8 MP | Not used | Not applied | EUR 150 |
| [27] | Pi 3 + Arduino Uno | 7.2 Ah | - | No | 8 MP | Not used | Not applied | USD 500 |
| [28] | Pi Zero | 11 Ah | 2.4 W (active state) 2 2 mW (inactive state) | Yes | 8 MP | LoRa/mobile network | In-trap | USD 75 |
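
The battery capacity and power consumption columns above can be combined into a rough estimate of how long a trap runs without solar input. The following is a minimal sketch rather than a method taken from any of the cited traps: the function names `average_power_w` and `runtime_days` are hypothetical, and the nominal battery voltage, the idle power draw, and the daily active time are assumptions, since the table does not report them. Only the 3.5 Ah capacity and the 1.5 W active-state draw come from the row for [12].

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_power_w(active_w, idle_w, active_s_per_day):
    """Time-weighted mean power (W) over one 24 h duty cycle."""
    if not 0 <= active_s_per_day <= SECONDS_PER_DAY:
        raise ValueError("active_s_per_day must lie within one day")
    idle_s = SECONDS_PER_DAY - active_s_per_day
    return (active_w * active_s_per_day + idle_w * idle_s) / SECONDS_PER_DAY


def runtime_days(capacity_ah, nominal_v, avg_power_w, usable_fraction=1.0):
    """Days of operation from a full battery, ignoring solar charging."""
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    energy_wh = capacity_ah * nominal_v * usable_fraction
    return energy_wh / avg_power_w / 24.0


if __name__ == "__main__":
    # From the table, row [12]: 3.5 Ah battery, 1.5 W in the active state.
    # ASSUMED (not in the table): 3.7 V nominal voltage, 0.01 W idle draw,
    # and 120 s of active time per day (one capture-and-upload cycle).
    avg_w = average_power_w(active_w=1.5, idle_w=0.01, active_s_per_day=120)
    days = runtime_days(capacity_ah=3.5, nominal_v=3.7, avg_power_w=avg_w)
    print(f"average power: {avg_w * 1000:.1f} mW, runtime: {days:.1f} days")
```

With a short daily active window the idle draw can dominate the energy budget, which is one reason the availability of a sleep mode matters when comparing control units.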

| Article | Segmentation | Classifier | Image Resolution | Performance |
|---|---|---|---|---|
| [40] | Sliding-window | CNN | 640 × 480 | 93.1 AP |
| [23] | OpenCV’s blob detector | VGG16 | - | 94.38% (2); 92.6% (3) |
| [24] | - | LeNet | - | 97.6% (2); 100% (3) |
| [12] | - | CNN | 2592 × 1944 | >20% (2) |
| [38] | Selective Search | CNN | various | 0.164 (8) |

| Specification | Raspberry Pi Zero W | ESP32-Cam | Arduino Uno |
|---|---|---|---|
| Type | Single-board computer | Microcontroller | Microcontroller |
| Operating system | Raspberry Pi OS | FreeRTOS | None |
| Processor | 32-bit | 32-bit | 8-bit |
| Memory | 512 MB | 520 KB | 32 KB |
| Clock frequency | 1 GHz | 160 MHz | 16 MHz |
| Camera port | Yes | Yes | No |
| Input voltage | 5 V | 5 V | 7–12 V |
| IO pins | 40 (PWR, GND, digital) | 16 (PWR, GND, digital, analogue) | 20 (PWR, GND, digital, analogue) |
| Background storage | MicroSD card (up to 1 TB) | MicroSD card (up to 4 GB) | Flash memory (32 KB) |
| Power consumption (in idle state) | 750 mW | ~900 mW | <250 mW |
| Sleep mode | No | Yes | Yes |

| Article | Year | Method | Insect(s) | Decision time | Performance |
|---|---|---|---|---|---|
| [40] | 2016 | Sliding-window + CNN | Codling moth | High | 93.1 AP |
| [42] | 2018 | RetinaNet | Red turpentine beetle | Medium | 0.751 AP |
| [43] | 2018 | YOLO + SVM | Bee, fly, fruit fly, etc. | High | 93.99% (3) |
| [44] | 2020 | Blob detector + SVM | Whiteflies, thrips, flies, and aphids | Low | <96% (6) |
| [16] | 2020 | Faster R-CNN | S. litura, H. assulta, S. exigua | Medium | 90.25 AP |
| [13] | 2020 | Segmentation + CNN | S. inferens, C. suppressalis, C. medinalis | Medium | 88.9 mAP |
| [17] | 2021 | Modified YOLO | Olive fruit fly | Medium | 96.69 mAP |
| [57] | 2022 | Mask R-CNN | Aphids, leaf miner flies, grasshoppers | Medium | 80.2 mAP |
| [38] | 2022 | Selective search + CNN | Codling moth | High | 0.164 (8) |
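
The Performance column mixes several kinds of scores, and the parenthesised numbers appear to point to numbered metric definitions that are not reproduced here. As a reading aid only, the sketch below computes a few textbook detection and counting scores from raw counts. It is not claimed to match the definitions used by any cited article: the function names are hypothetical, and which of these scores (if any) corresponds to a given table entry is an assumption.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts.

    tp: detections matched to a real insect
    fp: detections with no matching insect
    fn: real insects that were missed
    """
    if min(tp, fp, fn) < 0:
        raise ValueError("counts must be non-negative")
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def relative_counting_error(predicted_count, true_count):
    """Absolute counting error as a fraction of the true count."""
    if true_count <= 0:
        raise ValueError("true_count must be positive")
    return abs(predicted_count - true_count) / true_count


if __name__ == "__main__":
    # Hypothetical trap image: 8 correct detections, 2 false alarms, 2 misses.
    p, r, f1 = detection_metrics(tp=8, fp=2, fn=2)
    print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
    # The same image yields 10 predicted insects against 10 real ones, so
    # the counting error is zero even though individual detections are wrong.
    print(f"counting error={relative_counting_error(10, 10):.2f}")
```

The second print illustrates why a counting score and a detection score are not interchangeable: false alarms and misses can cancel out in the total count.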

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Suto, J. Codling Moth Monitoring with Camera-Equipped Automated Traps: A Review. Agriculture 2022, 12, 1721. https://doi.org/10.3390/agriculture12101721