RobHortic: A Field Robot to Detect Pests and Diseases in Horticultural Crops by Proximal Sensing

Abstract

1. Introduction

2. Materials and Methods

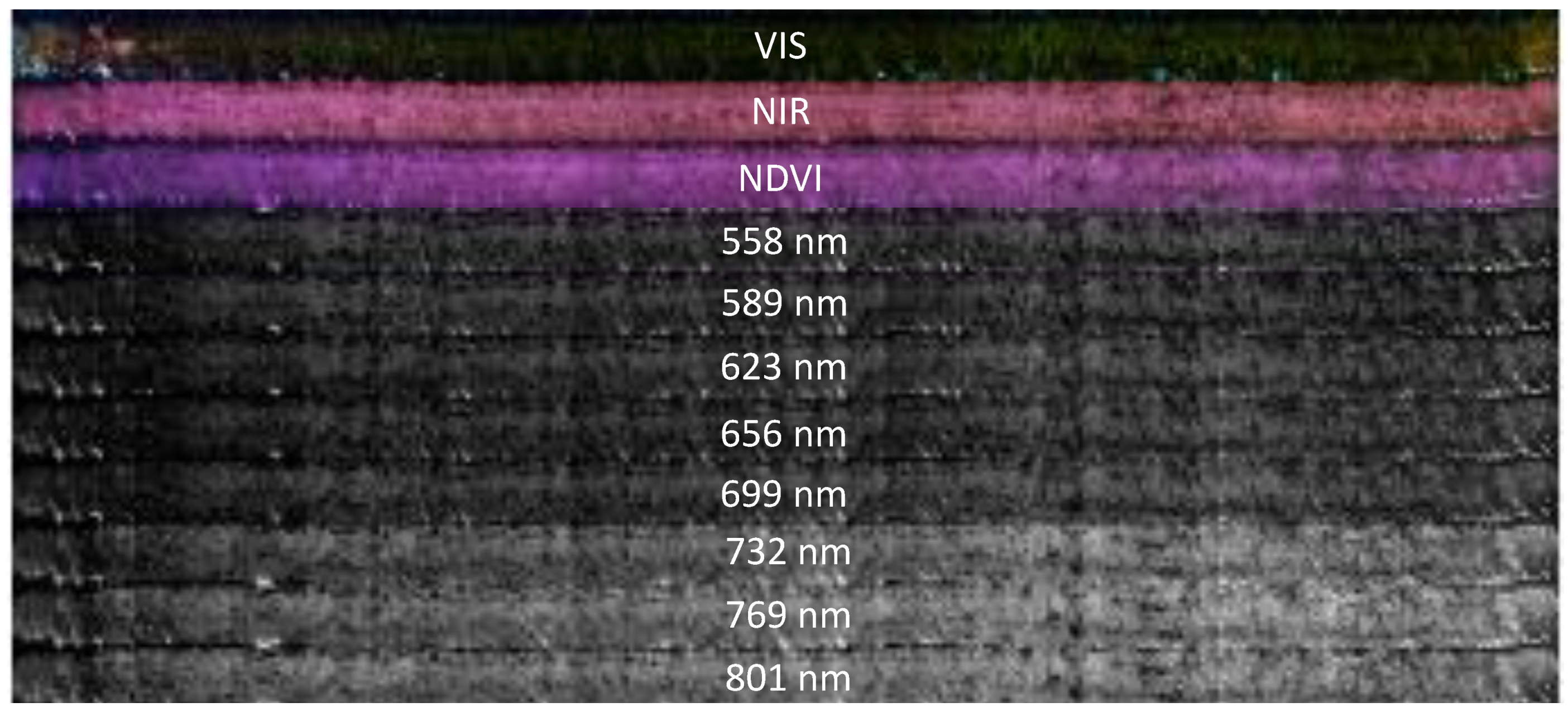

2.1. Robotic Platform and Onboard Equipment

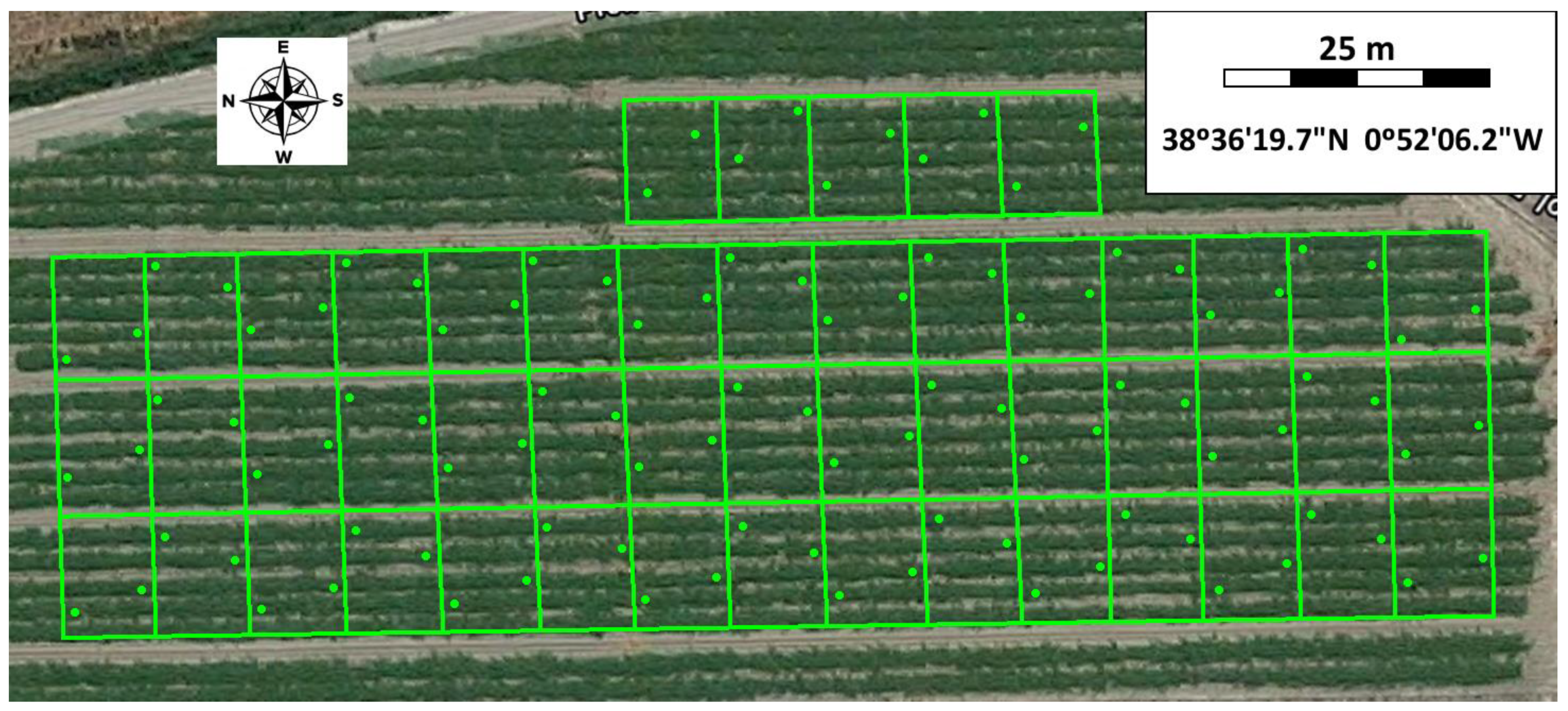

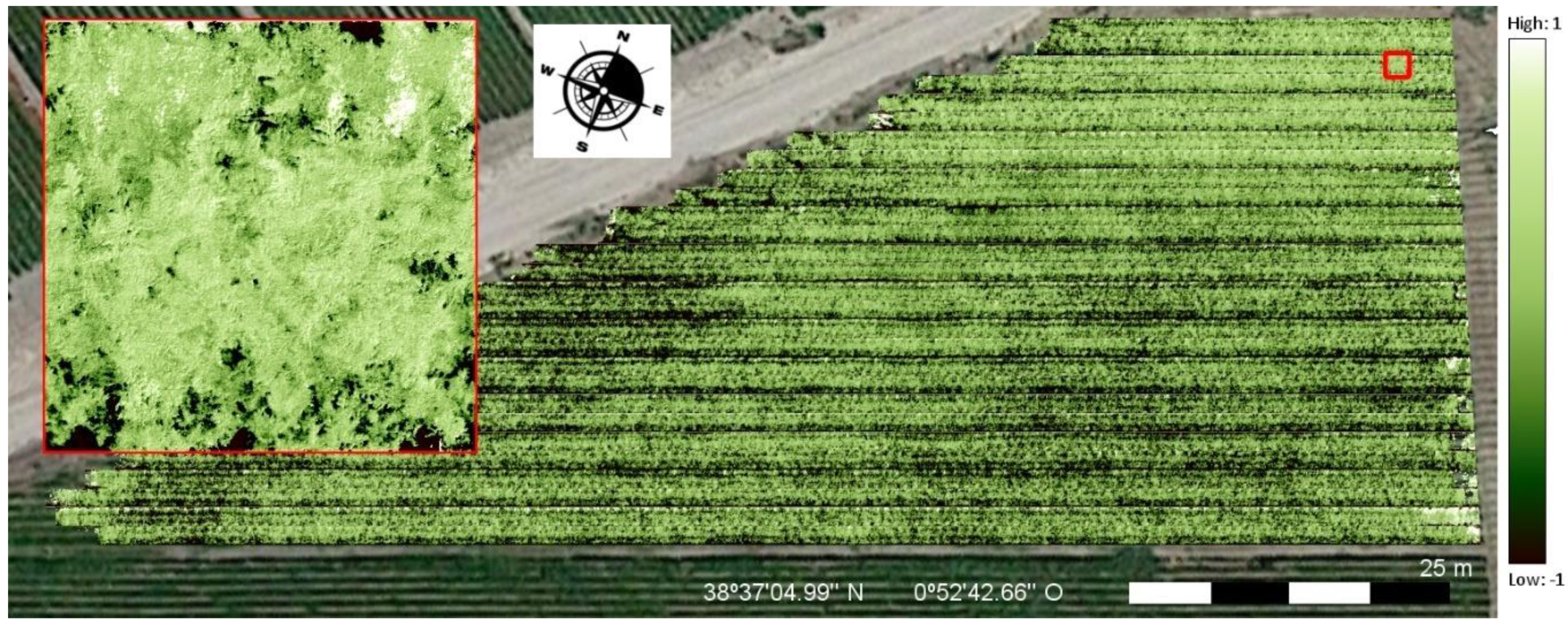

2.2. Field Tests

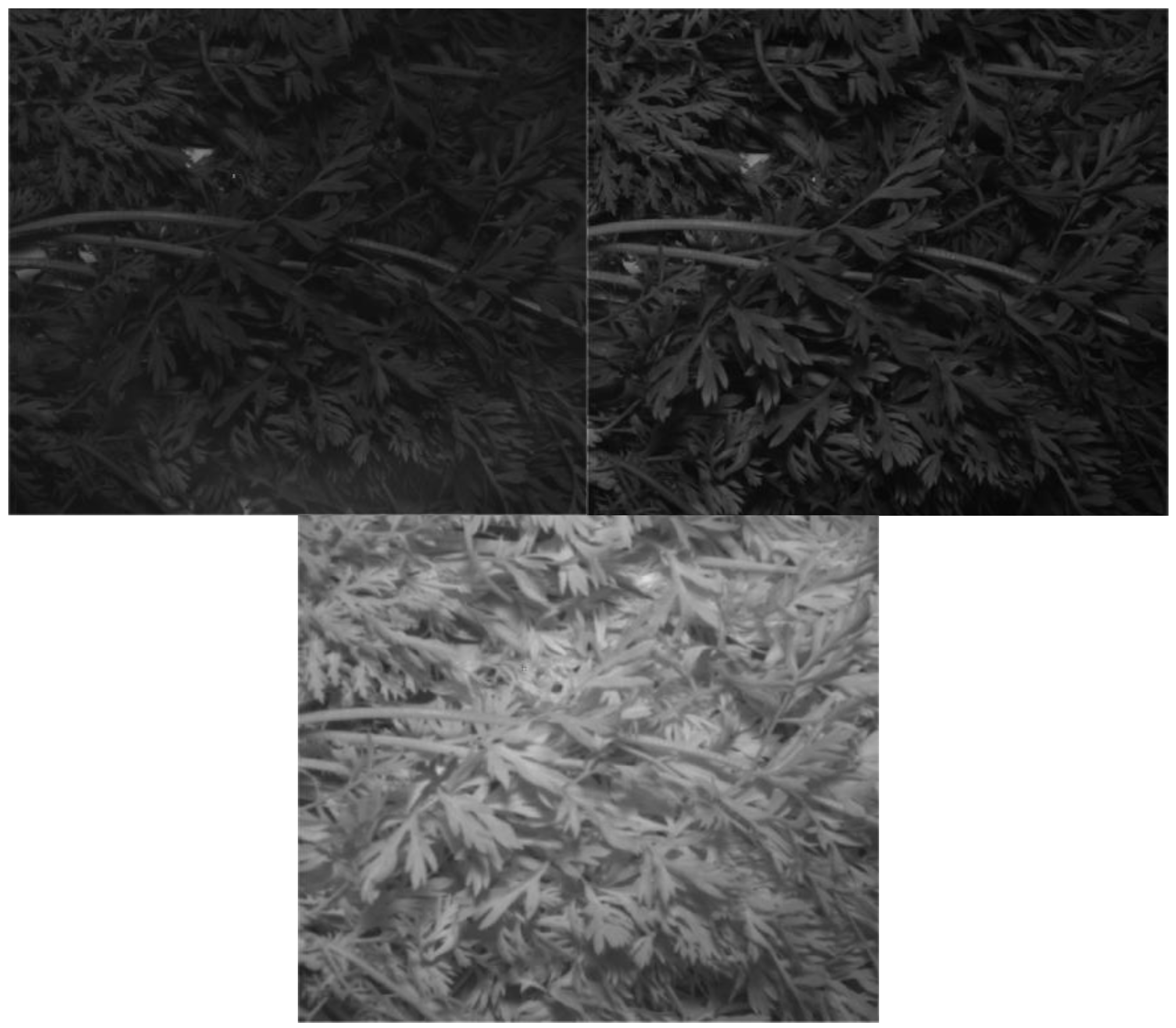

2.3. Laboratory Tests

2.4. Data Analysis

3. Results and Discussion

3.1. Robotic Platform Developed

3.2. Results in Detection of CaLsol

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| V | LV | Set | Class | Sensitivity | Specificity | Error | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| 60 | 6 | Cross-Validation | + | 0.75 | 0.62 | 0.32 | 68.4 |
| | | | − | 0.62 | 0.75 | | |
| | | Test set | + | 0.75 | 0.59 | 0.43 | 66.4 |
| | | | − | 0.59 | 0.75 | | |
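The metrics in the table above follow the standard two-class definitions. The following is a minimal sketch, assuming a confusion matrix of raw counts with + denoting CaLsol-positive plants. The counts used to exercise it are hypothetical and are not taken from the study. Treating the Error column as 1 − accuracy is also an assumption, because the exact definition of that column is not given here. Swapping the target class exchanges sensitivity and specificity, which is why each − row mirrors the + row above it.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BinaryMetrics:
    sensitivity: float  # true positive rate of the target class
    specificity: float  # true negative rate of the target class
    accuracy: float     # fraction of all samples classified correctly
    error: float        # assumed here to be 1 - accuracy


def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> BinaryMetrics:
    """Metrics with the positive (+) class as the target class."""
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / total
    return BinaryMetrics(sensitivity, specificity, accuracy, 1.0 - accuracy)


def swap_target(tp: int, fn: int, fp: int, tn: int) -> Tuple[int, int, int, int]:
    """Re-express the same counts with the negative (-) class as the target."""
    return tn, fp, fn, tp


# Hypothetical counts: 40 positive and 40 negative plants.
plus = binary_metrics(30, 10, 15, 25)
minus = binary_metrics(*swap_target(30, 10, 15, 25))
print(plus)
print(minus)  # sensitivity and specificity swap places
```

With equal class sizes, as in this toy example, accuracy equals the mean of sensitivity and specificity. With unequal classes it is weighted toward the larger class.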

| Class | LDA: Positive | LDA: Negative | QDA: Positive | QDA: Negative | SVM: Positive | SVM: Negative |
|---|---|---|---|---|---|---|
| Positive | 60.4 | 39.6 | 61.4 | 38.6 | 61.8 | 38.2 |
| Negative | 31.8 | 68.2 | 31.0 | 69.0 | 30.6 | 70.4 |
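The table above appears to be a row-normalised confusion matrix (%) for each classifier. This reading is an assumption: rows are the true class, columns the predicted class, and the diagonal holds the per-class success rates. The sketch below transcribes those values and averages the two diagonal entries per classifier. The names `CONFUSION`, `per_class_success` and `mean_class_success` are illustrative only.

```python
from typing import Dict, Tuple

Matrix = Dict[str, Tuple[float, float]]

# Row-normalised confusion matrices (%) transcribed from the table above.
# Assumption: each row is a true class; each tuple is
# (% predicted Positive, % predicted Negative).
CONFUSION: Dict[str, Matrix] = {
    "LDA": {"Positive": (60.4, 39.6), "Negative": (31.8, 68.2)},
    "QDA": {"Positive": (61.4, 38.6), "Negative": (31.0, 69.0)},
    "SVM": {"Positive": (61.8, 38.2), "Negative": (30.6, 70.4)},
}


def per_class_success(matrix: Matrix) -> Dict[str, float]:
    """Diagonal of the matrix: % of each true class predicted correctly."""
    return {
        "Positive": matrix["Positive"][0],  # positives predicted positive
        "Negative": matrix["Negative"][1],  # negatives predicted negative
    }


def mean_class_success(matrix: Matrix) -> float:
    """Unweighted mean of the per-class success rates (balanced accuracy, %)."""
    rates = per_class_success(matrix)
    return sum(rates.values()) / len(rates)


for name, matrix in CONFUSION.items():
    print(f"{name}: {mean_class_success(matrix):.1f}% mean class success")
```

The unweighted mean matches overall accuracy only when both classes contain the same number of plants. Otherwise each row would have to be weighted by its class size, which the percentages alone do not provide.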

| Method | Spectral range | LV | Success rate, Positive (%) | Success rate, Negative (%) |
|---|---|---|---|---|
| PLS-DA | Full spectrum | 5 | 62.2 | 72.4 |
| PLS-DA | UV-VIS-NIR | 6 | 61.8 | 69.4 |
| PLS-DA | NIR | 8 | 56.2 | 59.2 |
| LDA | Full spectrum | 14 | 62.2 | 70.2 |
| LDA | UV-VIS-NIR | 12 | 61.2 | 68.8 |
| LDA | NIR | 5 | 55.4 | 58.4 |
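For PLS-DA, LV conventionally denotes the number of latent variables (PLS components) that the model retains. To illustrate what that number controls, here is a minimal pure-Python sketch of two-class PLS-DA built on the NIPALS PLS1 algorithm. It dummy-codes positive plants as 1 and negative plants as 0, then thresholds the predicted value at 0.5. The class name `PLSDA`, the 0.5 threshold and the toy spectra used to exercise it are assumptions for illustration. This sketch does not reproduce the study's software, preprocessing or wavelength selection.

```python
from typing import List, Sequence


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


class PLSDA:
    """Two-class PLS-DA sketch built on NIPALS PLS1 (pure Python)."""

    def __init__(self, n_components: int) -> None:
        self.n_components = n_components  # latent variables (LV) to extract

    def fit(self, X: Sequence[Sequence[float]], labels: Sequence[int]) -> "PLSDA":
        n, m = len(X), len(X[0])
        y = [float(v) for v in labels]  # dummy coding: 1 = positive, 0 = negative
        self.x_mean = [sum(row[j] for row in X) / n for j in range(m)]
        self.y_mean = sum(y) / n
        E = [[row[j] - self.x_mean[j] for j in range(m)] for row in X]  # centred X
        f = [v - self.y_mean for v in y]  # centred response
        self.W: List[List[float]] = []
        self.P: List[List[float]] = []
        self.q: List[float] = []
        for _ in range(self.n_components):
            # Weight vector: direction of maximum covariance between X and y.
            w = [sum(E[i][j] * f[i] for i in range(n)) for j in range(m)]
            norm = _dot(w, w) ** 0.5
            if norm == 0.0:
                break  # y is fully explained; no further components
            w = [v / norm for v in w]
            t = [_dot(row, w) for row in E]  # scores
            tt = _dot(t, t)
            p = [sum(E[i][j] * t[i] for i in range(n)) / tt for j in range(m)]  # X loadings
            q = _dot(f, t) / tt  # y loading
            # Deflate X and y so the next component models what is left.
            E = [[E[i][j] - t[i] * p[j] for j in range(m)] for i in range(n)]
            f = [f[i] - q * t[i] for i in range(n)]
            self.W.append(w)
            self.P.append(p)
            self.q.append(q)
        return self

    def decision(self, x: Sequence[float]) -> float:
        """Predicted dummy value; replays the deflation on a new spectrum."""
        e = [v - mu for v, mu in zip(x, self.x_mean)]
        y_hat = self.y_mean
        for w, p, q in zip(self.W, self.P, self.q):
            t = _dot(e, w)
            y_hat += q * t
            e = [ei - t * pi for ei, pi in zip(e, p)]
        return y_hat

    def predict(self, x: Sequence[float], threshold: float = 0.5) -> int:
        """1 (positive) if the predicted dummy value reaches the threshold."""
        return int(self.decision(x) >= threshold)
```

As a usage example, fitting `PLSDA(n_components=5)` on labelled reflectance spectra and calling `predict` on a new spectrum returns 1 for a plant classified as positive. Increasing the number of LV lets the model fit the training spectra more closely, at the risk of overfitting. This is why the number of LV is normally chosen by cross-validation.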

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cubero, S.; Marco-Noales, E.; Aleixos, N.; Barbé, S.; Blasco, J. RobHortic: A Field Robot to Detect Pests and Diseases in Horticultural Crops by Proximal Sensing. Agriculture 2020, 10, 276. https://doi.org/10.3390/agriculture10070276
