STL-ATTLSTM: Vegetable Price Forecasting Using STL and Attention Mechanism-Based LSTM

Abstract

1. Introduction

2. Related Work

2.1. Agricultural Price Forecasting Using Statistical Methods

2.2. Agricultural Price Forecasting Using Machine Learning and Deep Learning Methods

2.3. Summary and Contribution

3. Methods

3.1. Time-Series Data Decomposition Using STL

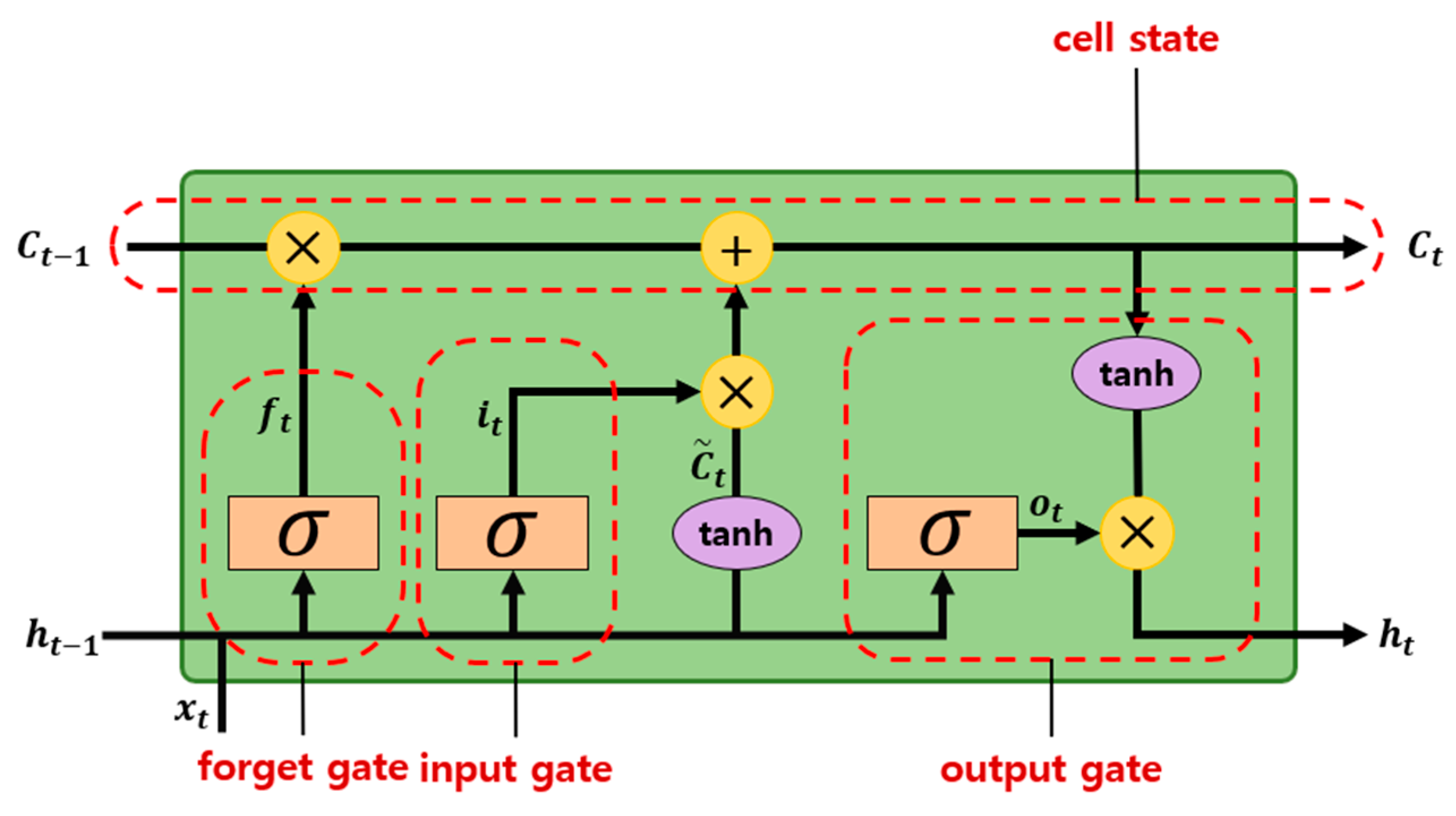
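STL splits a series into trend, seasonal and remainder components under an additive model, $Y_t = T_t + S_t + R_t$, and estimates them with iterated LOESS smoothing. The sketch below does not implement STL. It swaps in a simpler classical additive decomposition to show the same three-way split: a centred 2×12 moving average for the trend, and month-wise means of the detrended series for the seasonal component. The function name `classical_decompose` and the 12-month period are illustrative assumptions, not the authors' settings.

```python
from typing import List, Optional, Tuple


def classical_decompose(series: List[float], period: int = 12
                        ) -> Tuple[List[Optional[float]], List[float], List[Optional[float]]]:
    """Additive decomposition Y = T + S + R (classical method, NOT STL).

    Returns (trend, seasonal, remainder); trend and remainder are None
    where the centred moving average is undefined (series edges)."""
    if period % 2:
        raise ValueError("this sketch assumes an even period, e.g. 12 months")
    n = len(series)
    half = period // 2
    trend: List[Optional[float]] = [None] * n
    # Centred 2 x period moving average: half weight on the two end points.
    for t in range(half, n - half):
        window = series[t - half: t + half + 1]
        total = 0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]
        trend[t] = total / period
    # Average the detrended values at each position of the seasonal cycle.
    buckets: List[List[float]] = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    means = [sum(b) / len(b) if b else 0.0 for b in buckets]
    offset = sum(means) / period  # centre the seasonal effects on zero
    seasonal = [means[t % period] - offset for t in range(n)]
    # Remainder: whatever the trend and seasonal parts do not explain.
    remainder = [None if trend[t] is None else series[t] - trend[t] - seasonal[t]
                 for t in range(n)]
    return trend, seasonal, remainder


# Usage: a linear trend plus a zero-sum monthly pattern is recovered exactly.
season = [5, 3, 0, -2, -4, -6, -5, -2, 1, 3, 4, 3]
prices = [100 + 0.5 * t + season[t % 12] for t in range(48)]
trend, seasonal, remainder = classical_decompose(prices)
```

In the proposed method, the remainder component is what enters the model as the `Residual` feature; STL would give a more robust estimate than this sketch when the seasonal pattern drifts over time.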

3.2. LSTM Model

3.3. Attention Mechanism

3.4. Proposed STL-ATTLSTM Method

4. Research Design

4.1. Dataset Description

4.2. Measurement Criteria

4.3. Optimal Time-Step Search

4.4. Performance Comparison between the Proposed Method and Benchmark Models

5. Results and Discussions

6. Conclusions and Future Research

Author Contributions

Funding

Conflicts of Interest

References

1. FAO. 2018 FAOSTAT Online Database. Available online: http://www.fao.org/faostat/en/#data (accessed on 7 December 2020).

2. Rao, J.M. Agricultural Supply Response: A Survey. Agric. Econ. 1989, 3, 1–22.

3. Xiong, T.; Li, C.; Bao, Y. Seasonal forecasting of agricultural commodity price using a hybrid STL and ELM method: Evidence from the vegetable market in China. Neurocomputing 2018, 275, 2831–2844.

4. Yoo, D. Developing vegetable price forecasting model with climate factors. Korean J. Agric. Econ. 2016, 275, 2831–2844.

5. Nam, K.-H.; Choe, Y.-C. A study on onion wholesale price forecasting model. J. Agric. Ext. Community Dev. 2015, 22, 423–434.

6. Gu, Y.; Yoo, S.; Park, C.; Kim, Y.; Park, S.; Kim, J.; Lim, J. BLITE-SVR: New forecasting model for late blight on potato using support-vector regression. Comput. Electron. Agric. 2016, 130, 169–176.

7. Fafchamps, M.; Minten, B. Impact of SMS-based agricultural information on Indian farmers. World Bank Econ. Rev. 2012, 26, 383–414.

8. Lim, C.; Kim, G.; Lee, E.; Heo, S.; Kim, T.; Kim, Y.; Lee, W. Development on crop yield forecasting model for major vegetable crops using meteorological information of main production area. J. Clim. Chang. Res. 2016, 7, 193–203.

9. Ariyo, A.A.; Adewumi, A.O.; Ayo, C.K. Stock price prediction using the ARIMA model. In Proceedings of the 2014 UKSim-AMSS 16th International Conference on Computer Modelling and Simulation, Cambridge, UK, 26–28 March 2014; pp. 106–112.

10. Qin, Y.; Song, D.; Chen, H.; Cheng, W.; Jiang, G.; Cottrell, G. A dual-stage attention-based recurrent neural network for time series prediction. arXiv 2017, arXiv:1704.02971.

11. Li, Y.; Zhu, Z.; Kong, D.; Han, H.; Zhao, Y. EA-LSTM: Evolutionary attention-based LSTM for time series prediction. Knowl. Based Syst. 2019, 181, 104785.

12. Assis, K.; Amran, A.; Remali, Y. Forecasting cocoa bean prices using univariate time series models. Res. World 2010, 1, 71.

13. Adanacioglu, H.; Yercan, M. An analysis of tomato prices at wholesale level in Turkey: An application of SARIMA model. Custos e @Gronegócio 2012, 8, 52–75.

14. BV, B.P.; Dakshayini, M. Performance analysis of the regression and time series predictive models using parallel implementation for agricultural data. Procedia Comput. Sci. 2018, 132, 198–207.

15. Darekar, A.; Reddy, A.A. Cotton price forecasting in major producing states. Econ. Aff. 2017, 62, 373–378.

16. Jadhav, V.; Chinnappa, R.B.; Gaddi, G. Application of ARIMA model for forecasting agricultural prices. J. Agric. Sci. Technol. 2017, 19, 981–992.

17. Pardhi, R.; Singh, R.; Paul, R.K. Price Forecasting of Mango in Lucknow Market of Uttar Pradesh. Int. J. Agric. Environ. Biotechnol. 2018, 11, 357–363.

18. Wei, M.; Zhou, Q.; Yang, Z.; Zheng, J. Prediction model of agricultural product’s price based on the improved BP neural network. In Proceedings of the 2012 7th International Conference on Computer Science & Education (ICCSE), Melbourne, VIC, Australia, 14–17 July 2012; pp. 613–617.

19. Buddhakulsomsiri, J.; Parthanadee, P.; Pannakkong, W. Prediction models of starch content in fresh cassava roots for a tapioca starch manufacturer in Thailand. Comput. Electron. Agric. 2018, 154, 296–303.

20. Wang, B.; Liu, P.; Chao, Z.; Wang, J.; Chen, W.; Cao, N.; O’Hare, G.M.; Wen, F. Research on hybrid model of garlic short-term price forecasting based on big data. CMC Comput. Mater. Continua 2018, 57, 283–296.

21. Nasira, G.; Hemageetha, N. Vegetable price prediction using data mining classification technique. In Proceedings of the International Conference on Pattern Recognition, Informatics and Medical Engineering (PRIME-2012), Salem, India, 21–23 March 2012; pp. 99–102.

22. Hemageetha, N.; Nasira, G. Radial basis function model for vegetable price prediction. In Proceedings of the 2013 International Conference on Pattern Recognition, Informatics and Mobile Engineering, Salem, India, 21–22 February 2013; pp. 424–428.

23. Li, Z.-M.; Cui, L.-G.; Xu, S.-W.; Weng, L.-Y.; Dong, X.-X.; Li, G.-Q.; Yu, H.-P. (JIA2013–0072) The Prediction Model of Weekly Retail Price of Eggs Based on Chaotic Neural Network. J. Integr. Agric. 2013, 14, 2292–2299.

24. Luo, C.; Wei, Q.; Zhou, L.; Zhang, J.; Sun, S. Prediction of vegetable price based on Neural Network and Genetic Algorithm. In International Conference on Computer and Computing Technologies in Agriculture; Springer: Berlin/Heidelberg, Germany, 2010; pp. 672–681.

25. Zhang, D.; Zang, G.; Li, J.; Ma, K.; Liu, H. Prediction of soybean price in China using QR-RBF neural network model. Comput. Electron. Agric. 2018, 154, 10–17.

26. Asgari, S.; Sahari, M.A.; Barzegar, M. Practical modeling and optimization of ultrasound-assisted bleaching of olive oil using hybrid artificial neural network-genetic algorithm technique. Comput. Electron. Agric. 2017, 140, 422–432.

27. Ebrahimi, M.; Sinegani, A.A.S.; Sarikhani, M.R.; Mohammadi, S.A. Comparison of artificial neural network and multivariate regression models for prediction of Azotobacteria population in soil under different land uses. Comput. Electron. Agric. 2017, 140, 409–421.

28. Subhasree, M.; Priya, C.A. Forecasting Vegetable Price Using Time Series Data. Int. J. Adv. Res. 2016, 3, 535–641.

29. Li, Y.; Li, C.; Zheng, M. A Hybrid Neural Network and Hp Filter Model for Short-Term Vegetable Price Forecasting. Math. Probl. Eng. 2014, 2014, 135862.

30. Jin, D.; Yin, H.; Gu, Y.; Yoo, S.J. Forecasting of Vegetable Prices using STL-LSTM Method. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 866–871.

31. Liu, Y.; Duan, Q.; Wang, D.; Zhang, Z.; Liu, C. Prediction for Hog Prices Based on Similar Sub-Series Search and Support Vector Regression. Comput. Electron. Agric. 2019, 157, 581–588.

32. Leksakul, K.; Holimchayachotikul, P.; Sopadang, A. Forecast of off-season longan supply using fuzzy support vector regression and fuzzy artificial neural network. Comput. Electron. Agric. 2015, 118, 259–269.

33. Karimi, Y.; Prasher, S.; Patel, R.; Kim, S. Application of support vector machine technology for weed and nitrogen stress detection in corn. Comput. Electron. Agric. 2006, 51, 99–109.

34. Chen, Q.; Lin, X.; Zhong, Y.; Xie, Z. Price Prediction of Agricultural Products Based on Wavelet Analysis-LSTM. In Proceedings of the 2019 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), Xiamen, China, 16–18 December 2019; pp. 984–990.

35. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

36. Zhang, X.; Liang, X.; Zhiyuli, A.; Zhang, S.; Xu, R.; Wu, B. AT-LSTM: An Attention-based LSTM Model for Financial Time Series Prediction. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Zhuhai, China, 17–19 May 2019; p. 052037.

37. Ran, X.; Shan, Z.; Fang, Y.; Lin, C. An LSTM-based method with attention mechanism for travel time prediction. Sensors 2019, 19, 861.

38. Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; OTexts: Melbourne, Australia, 2018.

39. Dagum, E.B.; Bianconcini, S. Seasonal Adjustment Methods and Real Time Trend-Cycle Estimation; Springer: Berlin/Heidelberg, Germany, 2016.

40. Mikolov, T.; Joulin, A.; Chopra, S.; Mathieu, M.; Ranzato, M.A. Learning longer memory in recurrent neural networks. arXiv 2014, arXiv:1412.7753.

41. Hochreiter, S.; Bengio, Y.; Frasconi, P.; Schmidhuber, J. Gradient flow in recurrent nets: The difficulty of learning long-term dependencies. In A Field Guide to Dynamical Recurrent Neural Networks; IEEE Press: New York, NY, USA, 2001.

42. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

43. KREI OASIS: Outlook & Agricultural Statistics Information System. Available online: https://oasis.krei.re.kr/index.do (accessed on 7 December 2020).

44. aT KAMIS: Korea Agricultural Marketing Information Service. Available online: https://www.kamis.or.kr/customer/main/main.do (accessed on 7 December 2020).

45. KMA: Korea Meteorological Administration. Available online: https://www.kma.go.kr/eng/index.jsp (accessed on 7 December 2020).

46. KOSIS: Korean Statistical Information Service. Available online: https://kosis.kr/index/index.do (accessed on 7 December 2020).

47. aT NongNet: Korea Agro-Fisheries & Food Trade Corporation. Available online: https://www.nongnet.or.kr/index.do#homePage (accessed on 7 December 2020).

48. Liu, Y.; Wang, Y.; Yang, X.; Zhang, L. Short-term travel time prediction by deep learning: A comparison of different LSTM-DNN models. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–8.

49. Li, H.; Shen, Y.; Zhu, Y. Stock price prediction using attention-based multi-input LSTM. In Proceedings of the Asian Conference on Machine Learning, Beijing, China, 14–16 November 2018; pp. 454–469.

50. Fan, M.; Hu, Y.; Zhang, X.; Yin, H.; Yang, Q.; Fan, L. Short-term Load Forecasting for Distribution Network Using Decomposition with Ensemble prediction. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 152–157.

| Author | Model | Commodity | Input Variables | Handles Seasonality or Trend | Feature Engineering |
|---|---|---|---|---|---|
| Assis and Remali [12] | ARIMA/GARCH | Cocoa beans | Price | X | X |
| Adanacioglu and Yercan [13] | SARIMA | Tomato | Price | O | X |
| Darekar and Reddy [15] | ARIMA | Cotton | Price | X | X |
| Jadhav et al. [16] | ARIMA | Paddy, ragi, maize | Price | X | X |
| Pardhi et al. [17] | ARIMA | Mango | Price | X | X |
| Minghua [18] | Mean impact value with BPNN | Vegetable price index | Macro index, price index, production | X | O |
| Nasira and Hemageetha [21] | BPNN | Tomato | Price | X | X |
| Luo et al. [24] | BPNN, RBF-NN, GA-BPNN, integrated model | Lentinus edodes | Price | X | X |
| Hemageetha and Nasira [22] | RBF-NN | Tomato | Price | X | X |
| Li et al. [23] | Chaotic neural network | Egg | Price | X | X |
| Subhasree and Priya [28] | BPNN, RBF-NN, GA-BPNN | Brinjal, ladies finger, tomato, broad beans, onion | Price | X | X |
| Zhang et al. [25] | QR-RBF neural network with GDGA | Soybean | Import/output, consumer index, money supply | X | X |
| Li et al. [29] | H-P filter with ANN | Cabbage, pepper, cucumber, green bean, tomato | Price | O | X |
| Ge and Wu [6] | Multiple linear regression | Corn | Price, production | X | O |
| Yoo [4] | VAR and Bayesian structural time series | Cabbage | Price, production, climate | O | X |
| Wang et al. [20] | ARIMA-SVM | Garlic | Price | O | X |
| BV and Dakshayini [14] | Holt-Winters model | Tomato | Price, demand | O | X |
| Xiong et al. [3] | STL-ELM | Cabbage, pepper, cucumber, green bean, tomato | Price | O | X |
| Jin et al. [30] | STL-LSTM | Cabbage, radish | Price, climate, trading volume | O | X |
| Liu et al. [31] | Similar sub-series search-based SVR | Hog | Price | O | X |
| Chen et al. [34] | Wavelet analysis with LSTM | Cabbage | Price | X | X |

O = yes, X = no.

| Layer | Hyperparameter | Value |
|---|---|---|
| Attention layer | Unit size | Number of input variables |
| | Activation function | Softmax |
| LSTM layer | Unit size | 6 |
| | Activation function | Tanh |
| | Stateful | True |
| Fully connected layer | Dropout rate | 0.2 |
| | Dense layer #1 unit size | 10 |
| | Dense layer #1 activation function | Linear |
| | Dense layer #2 unit size | 1 |
| | Dense layer #2 activation function | Linear |
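The attention layer above has one unit per input variable and a softmax activation, which suggests that it produces one weight per input variable and rescales the inputs before they reach the LSTM. The following is a minimal sketch of that weighting step for a single time step. It assumes a dense projection $Wx + b$ followed by softmax and element-wise multiplication; the function name `feature_attention` and all weight values are hypothetical, and the paper's exact formulation may differ.

```python
import math
from typing import List, Tuple


def softmax(z: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def feature_attention(x: List[float], W: List[List[float]], b: List[float]
                      ) -> Tuple[List[float], List[float]]:
    """Score each of the d input variables, softmax the scores, rescale x.

    W is d x d and b has length d, i.e. one unit per input variable
    (hypothetical parameterisation). Returns (weights, weighted inputs)."""
    scores = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
              for row, b_i in zip(W, b)]
    alpha = softmax(scores)  # attention weights, sum to 1
    weighted = [a * v for a, v in zip(alpha, x)]
    return alpha, weighted


# Usage: with zero weights every variable gets equal attention (1/d).
x = [2.0, 4.0, 6.0]
W = [[0.0] * 3 for _ in range(3)]
alpha, weighted = feature_attention(x, W, [0.0, 0.0, 0.0])
```

In a full model, this weighting would be applied at every step of the input window, and the weighted vectors would then be fed to the 6-unit LSTM layer listed in the table.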

| Vegetable | Cropping Type | Harvest Time | Main Production Areas |
|---|---|---|---|
| Cabbage | Winter | Jan–Mar | Haenam, Jindo, Muan |
| | Spring | Apr–Jun | Yeongwol, Naju, Mungyeong |
| | Highland | Jul–Sep | Gangneung, Taebaek, Pyeongchang |
| | Autumn | Oct–Dec | Haenam, Mungyeong, Yeongwol |
| Radish | Winter | Jan–Mar | Jeju |
| | Spring | Apr–Jun | Dangjin, Buan, Yeongam |
| | Highland | Jul–Sep | Pyeongchang, Hongcheon, Gangneung |
| | Autumn | Oct–Dec | Dangjin, Yeongam, Gochang |

| Category | Code | Description | Formula |
|---|---|---|---|
| Price | AV_P_A | Current price | |
| | R_p | Monthly average price deviation | |
| | P_diff | Price difference from the previous month | |
| | P_lag | Past monthly price | $p_{t-n}$ (n: lag) |
| | EMA | Exponential moving average | |
| | Year_res | Price difference from 12 months earlier | |
| | P_sum | Sum of prices over previous months | |
| | Residual | Remainder component from STL | |
| Trading volume | SUM_TOT | Monthly cumulative trading volume | |
| | R_q | Trading volume deviation | |
| | Q_diff | Trading volume difference from the previous month | |
| | Carry_res | Trading volume difference from a normal year | |
| | Q_sum | Sum of trading volumes over previous months | |
| Climate | AVGTA | Monthly average temperature | |
| | MINTA | Monthly minimum temperature | |
| | AVGRHM | Monthly average humidity | |
| | SUMRN | Monthly cumulative precipitation | |
| | Min_ta_count | Days with average temperature < 5 °C | |
| | Mid_ta_count | Days with 15 °C < average temperature < 22 °C | |
| | Max_ta_count | Days with average temperature > 32 °C | |
| | Typhoon_advisory | Number of typhoon advisory days | |
| | Typhoon_warning | Number of typhoon warning days | |
| Other | Quantity | Import amount | |
| | Cost | Import unit price | |
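Several of the price features above are simple transformations of the monthly price series. The sketch below computes P_diff, P_lag, EMA, Year_res and P_sum for month `t` under stated assumptions. The EMA uses a smoothing factor of $2/(\text{span}+1)$ with `span=3`, and P_sum sums the previous `window=3` months. Neither setting comes from the paper, and the function name `price_features` is hypothetical. R_p is omitted because its exact definition is not given here.

```python
from typing import Dict, List, Optional


def price_features(prices: List[float], t: int, lag: int = 1,
                   span: int = 3, window: int = 3) -> Dict[str, Optional[float]]:
    """Price features for month t (0-based); None where history is too short.

    span and window are assumed values, not the authors' settings."""
    def back(n: int) -> Optional[float]:
        # Price n months before t, or None if it precedes the series.
        return prices[t - n] if t - n >= 0 else None

    prev, year_ago = back(1), back(12)
    # EMA over prices[0..t] with smoothing factor 2 / (span + 1).
    a = 2.0 / (span + 1)
    ema = prices[0]
    for p in prices[1:t + 1]:
        ema = a * p + (1 - a) * ema
    past = prices[max(0, t - window):t]  # the `window` months before t
    return {
        "P_diff": None if prev is None else prices[t] - prev,
        "P_lag": back(lag),
        "EMA": ema,
        "Year_res": None if year_ago is None else prices[t] - year_ago,
        "P_sum": sum(past) if len(past) == window else None,
    }


# Usage: a steadily rising monthly price series.
prices = [float(100 + 10 * i) for i in range(14)]
features = price_features(prices, 13)
```

Features that need more history than is available are returned as None rather than padded. The first months of a series would therefore be dropped or imputed before training.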

| Vegetable | Metric | Time step 1 | Time step 2 | Time step 4 | Time step 6 | Time step 8 | Time step 12 | Time step 16 |
|---|---|---|---|---|---|---|---|---|
| Cabbage | RMSE | 4993 | 1681 | 1196 | 3376 | 3289 | 3771 | 4924 |
| | MAPE | 41% | 18% | 9% | 34% | 23% | 27% | 49% |
| Radish | RMSE | 121 | 170 | 105 | 149 | 118 | 196 | 247 |
| | MAPE | 13% | 15% | 9% | 18% | 15% | 24% | 41% |
| Onion | RMSE | 139 | 161 | 134 | 159 | 134 | 122 | 167 |
| | MAPE | 20% | 15% | 12% | 28% | 22% | 15% | 32% |
| Pepper | RMSE | 837 | 402 | 368 | 515 | 1930 | 1070 | 1894 |
| | MAPE | 8% | 4% | 3% | 5% | 20% | 11% | 19% |
| Garlic | RMSE | 142 | 383 | 96 | 369 | 1060 | 328 | 896 |
| | MAPE | 3% | 9% | 2% | 8% | 26% | 8% | 21% |
| Average | RMSE | 1247 | 559 | 380 | 913 | 1306 | 1097 | 1626 |
| | MAPE | 17% | 12% | 7% | 19% | 21% | 17% | 32% |
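The results are reported as RMSE and MAPE. The sketch below assumes the standard definitions, $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i}(y_i - \hat{y}_i)^2}$ and $\mathrm{MAPE} = \frac{100}{n}\sum_{i}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$. These are the usual textbook forms, and the paper's Section 4.2 may state them with different notation.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], forecast: Sequence[float]) -> None:
    if len(actual) != len(forecast) or not actual:
        raise ValueError("need two non-empty sequences of equal length")


def rmse(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Root mean squared error, in the same units as the prices."""
    _check(actual, forecast)
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; undefined if any actual is 0."""
    _check(actual, forecast)
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


# Usage: both forecasts miss by 10% of the actual value.
print(rmse([100.0, 200.0], [110.0, 180.0]), mape([100.0, 200.0], [110.0, 180.0]))
```

RMSE carries the units of each vegetable's price, whereas MAPE is unit-free. This is why RMSE values for cabbage and garlic differ by an order of magnitude even when their MAPE values are close.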

| Vegetable | LSTM RMSE | LSTM MAPE | Attention LSTM RMSE | Attention LSTM MAPE | STL-LSTM RMSE | STL-LSTM MAPE | STL-ATTLSTM RMSE | STL-ATTLSTM MAPE |
|---|---|---|---|---|---|---|---|---|
| Cabbage | 4972 | 55% | 3602 | 30% | 2033 | 19% | 1196 | 9% |
| Radish | 271 | 26% | 101 | 16% | 93 | 13% | 105 | 9% |
| Onion | 122 | 23% | 225 | 42% | 108 | 16% | 134 | 12% |
| Pepper | 844 | 8% | 914 | 7% | 539 | 5% | 368 | 3% |
| Garlic | 229 | 5% | 106 | 2% | 218 | 5% | 96 | 2% |
| Average | 1288 | 23% | 990 | 19% | 598 | 12% | 380 | 7% |
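The Average row of the model comparison appears to be the unweighted arithmetic mean over the five vegetables, rounded to the nearest integer. This is inferred from the numbers rather than stated in the text. The check below copies the per-vegetable values from the table and recomputes that row; the names `results` and `average_row` are illustrative.

```python
from statistics import mean

# (RMSE, MAPE %) per vegetable, copied from the comparison table,
# in the order cabbage, radish, onion, pepper, garlic.
results = {
    "LSTM": [(4972, 55), (271, 26), (122, 23), (844, 8), (229, 5)],
    "Attention LSTM": [(3602, 30), (101, 16), (225, 42), (914, 7), (106, 2)],
    "STL-LSTM": [(2033, 19), (93, 13), (108, 16), (539, 5), (218, 5)],
    "STL-ATTLSTM": [(1196, 9), (105, 9), (134, 12), (368, 3), (96, 2)],
}


def average_row(rows):
    """Unweighted mean over vegetables, rounded to an integer (assumed rule)."""
    return round(mean(r for r, _ in rows)), round(mean(m for _, m in rows))


for model, rows in results.items():
    print(model, average_row(rows))
```

Each printed pair matches the table's Average row. Because the mean RMSE is dominated by cabbage, whose prices are on a much larger scale, the averaged MAPE is the more comparable summary across vegetables.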

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yin, H.; Jin, D.; Gu, Y.H.; Park, C.J.; Han, S.K.; Yoo, S.J. STL-ATTLSTM: Vegetable Price Forecasting Using STL and Attention Mechanism-Based LSTM. Agriculture 2020, 10, 612. https://doi.org/10.3390/agriculture10120612
