Event-Related Potential to Conscious and Nonconscious Emotional Face Perception in Females with Autistic-Like Traits

Abstract

1. Introduction

1.1. Aims

1.2. Hypotheses

2. Methods

2.1. Participants

2.2. Personality Measures

- (1) Autism Spectrum Quotient (AQ). The AQ is a self-administered questionnaire of 50 items designed to quantify the degree to which a person of normal intelligence shows autistic traits [19,20]. Participants respond on a 4-point scale (definitely agree, slightly agree, slightly disagree, definitely disagree), and the items cover five domains: social skills, attention switching, attention to detail, communication, and imagination. One point is scored for each response that reflects abnormal or autistic-like behavior, so total scores range from 0 to 50. The AQ is sensitive to autistic traits in nonclinical populations [74,75].

- (2) Raven’s Advanced Progressive Matrices (RAPM). The RAPM is a standardized nonverbal test widely used to assess general cognitive ability [76]. Each item presents a geometric design with one part missing, and the respondent must choose the piece that completes the design from a panel of alternatives. The RAPM was included to rule out general intelligence as an explanation for any differences found between AQ groups. All participants scored at least 14, which lies within the normal range for the Italian population (M = 20.4, SD = 5.6; age range 15–47 years; N = 1762) [76].
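The AQ scoring rule and the RAPM criterion above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' procedure: responses are assumed to be coded 1 to 4 in the order of the rating scale listed above, and the set of items on which agreement counts as autistic-like (`agree_keyed`) must be supplied from the published AQ key, which is not reproduced here. The helper names (`score_aq`, `rapm_z`, `passes_rapm_screen`, `normal_cdf`) are hypothetical.

```python
import math

# Values reported in the text; everything else here is illustrative.
RAPM_CUTOFF = 14          # minimum RAPM score among participants
ITALIAN_NORM_MEAN = 20.4  # Italian normative mean [76]
ITALIAN_NORM_SD = 5.6     # Italian normative SD [76]


def score_aq(responses: dict[int, int], agree_keyed: set[int]) -> int:
    """Binary AQ total: one point per autistic-like response.

    Assumed coding: 1 = definitely agree, 2 = slightly agree,
    3 = slightly disagree, 4 = definitely disagree.
    `agree_keyed` holds the items where agreeing is autistic-like;
    every other item is treated as disagree-keyed.
    """
    total = 0
    for item, response in responses.items():
        if response not in (1, 2, 3, 4):
            raise ValueError(f"item {item}: response must be 1-4, got {response}")
        agrees = response <= 2  # 'definitely' or 'slightly' agree
        # Autistic-like = agreeing with an agree-keyed item,
        # or disagreeing with a disagree-keyed item.
        if agrees == (item in agree_keyed):
            total += 1
    return total


def rapm_z(score: float) -> float:
    """Standardize a RAPM raw score against the Italian norms."""
    return (score - ITALIAN_NORM_MEAN) / ITALIAN_NORM_SD


def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def passes_rapm_screen(score: float) -> bool:
    """True if the score meets the minimum reported in the text."""
    return score >= RAPM_CUTOFF


if __name__ == "__main__":
    z = rapm_z(RAPM_CUTOFF)
    print(f"cutoff z = {z:.2f}, percentile = {100 * normal_cdf(z):.1f}")
```

With the reported norms, the cutoff of 14 lies (14 − 20.4)/5.6 ≈ −1.14 SD below the Italian mean, roughly the 13th percentile if the normative scores are approximately normal.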

2.3. Stimuli

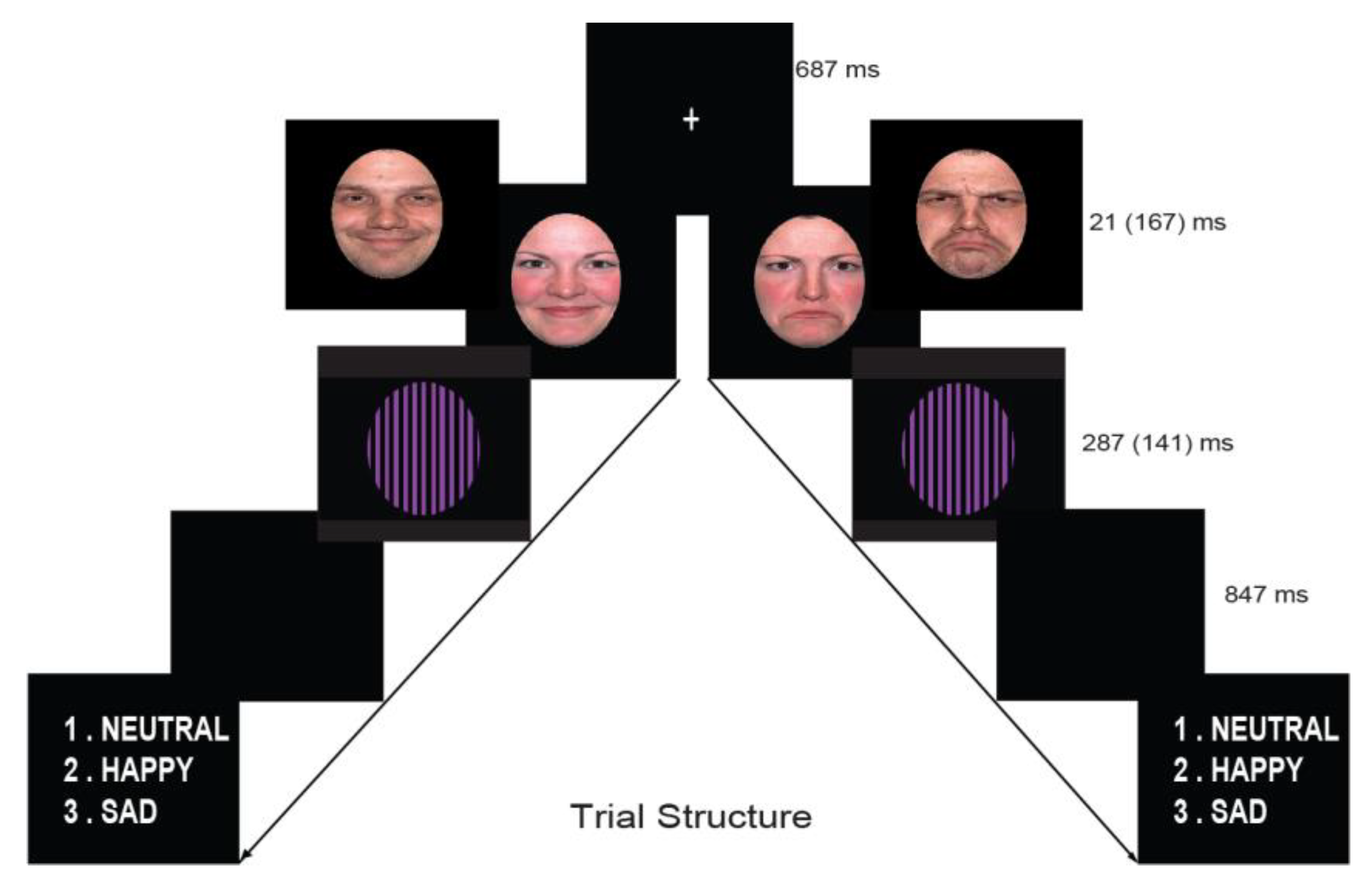

2.4. Procedure

2.5. EEG Recording

2.6. Behavioral Data Analysis

2.7. ERP Analyses

3. Results

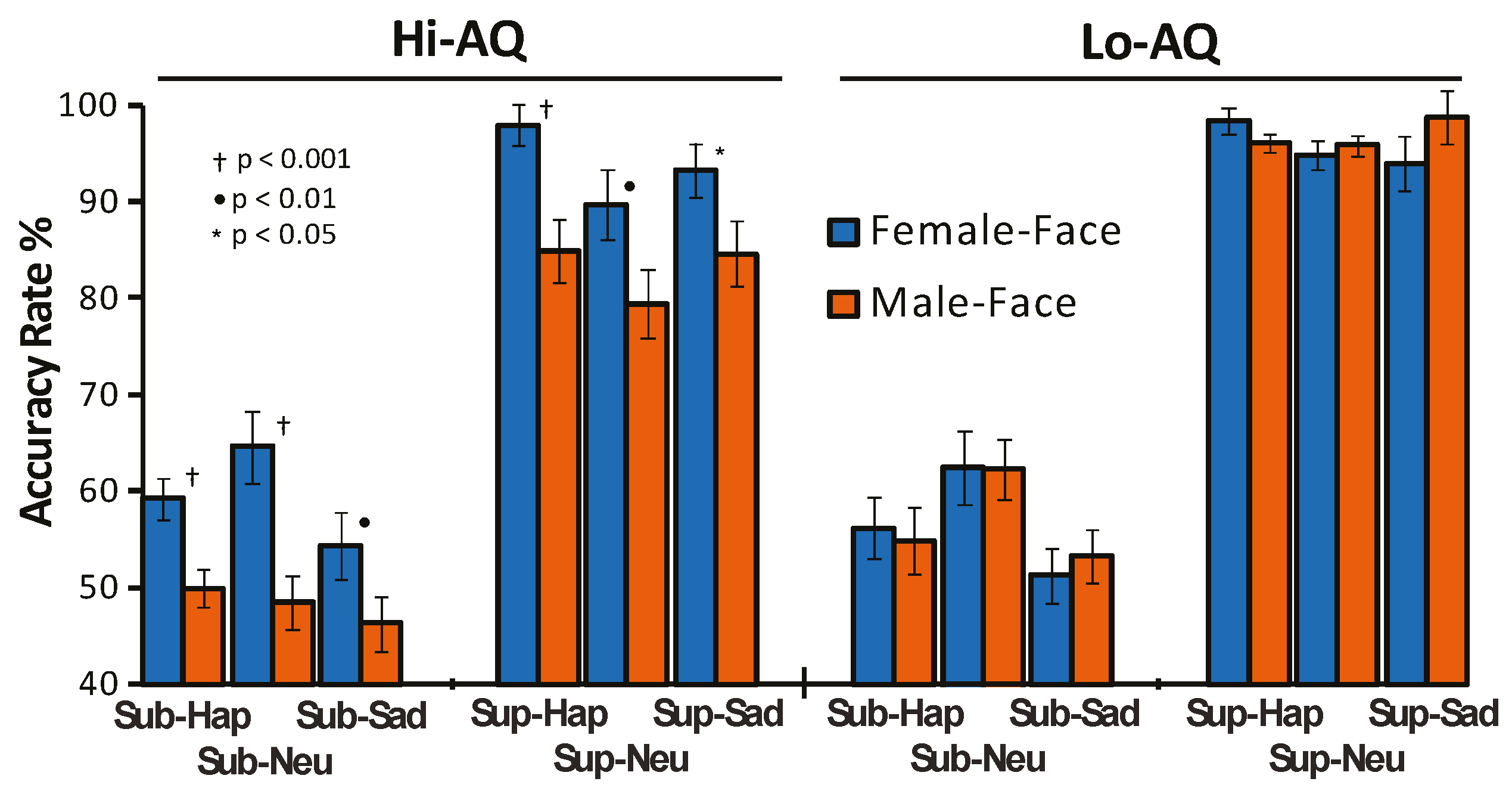

3.1. Behavioral and Personality Results

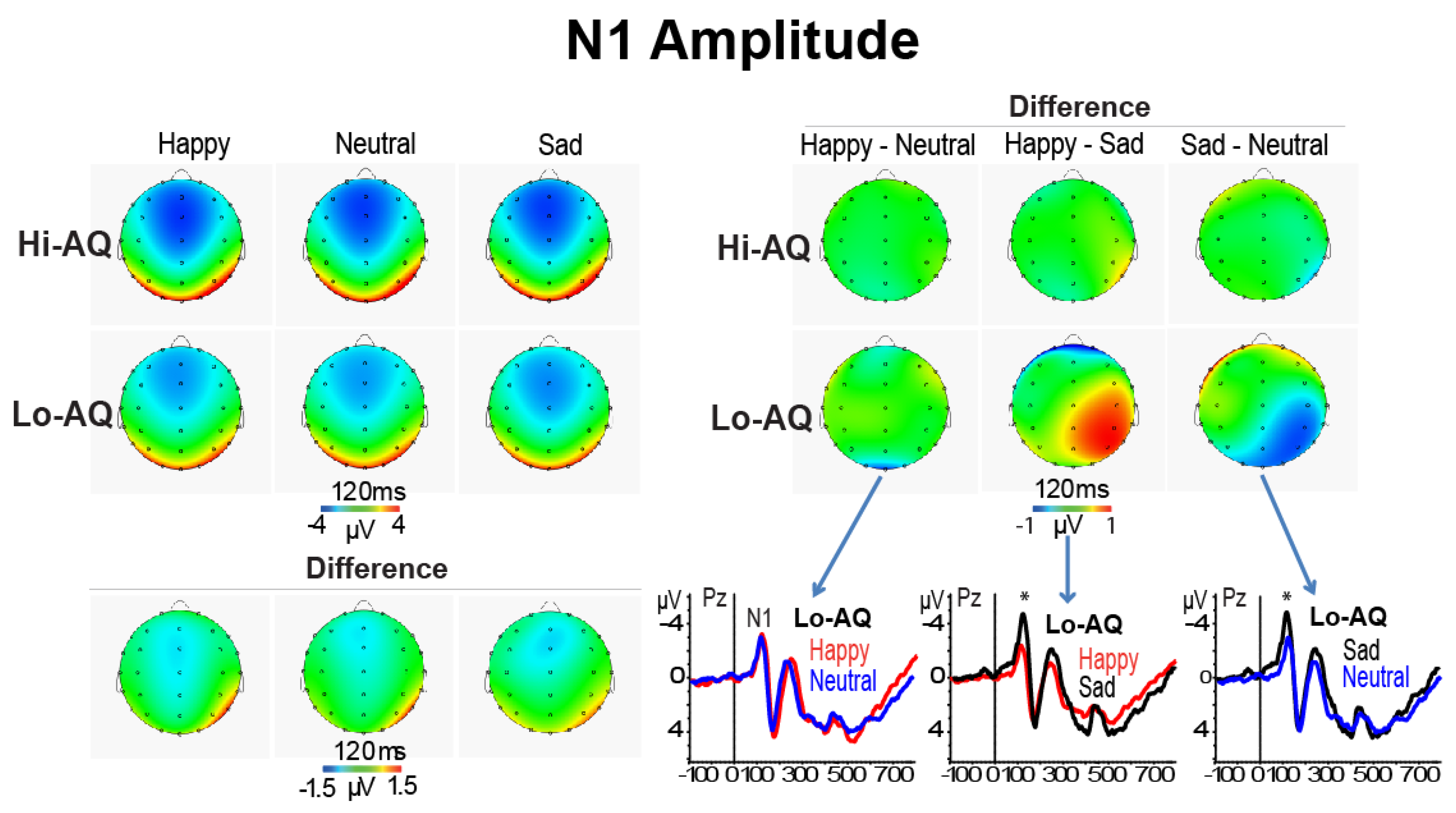

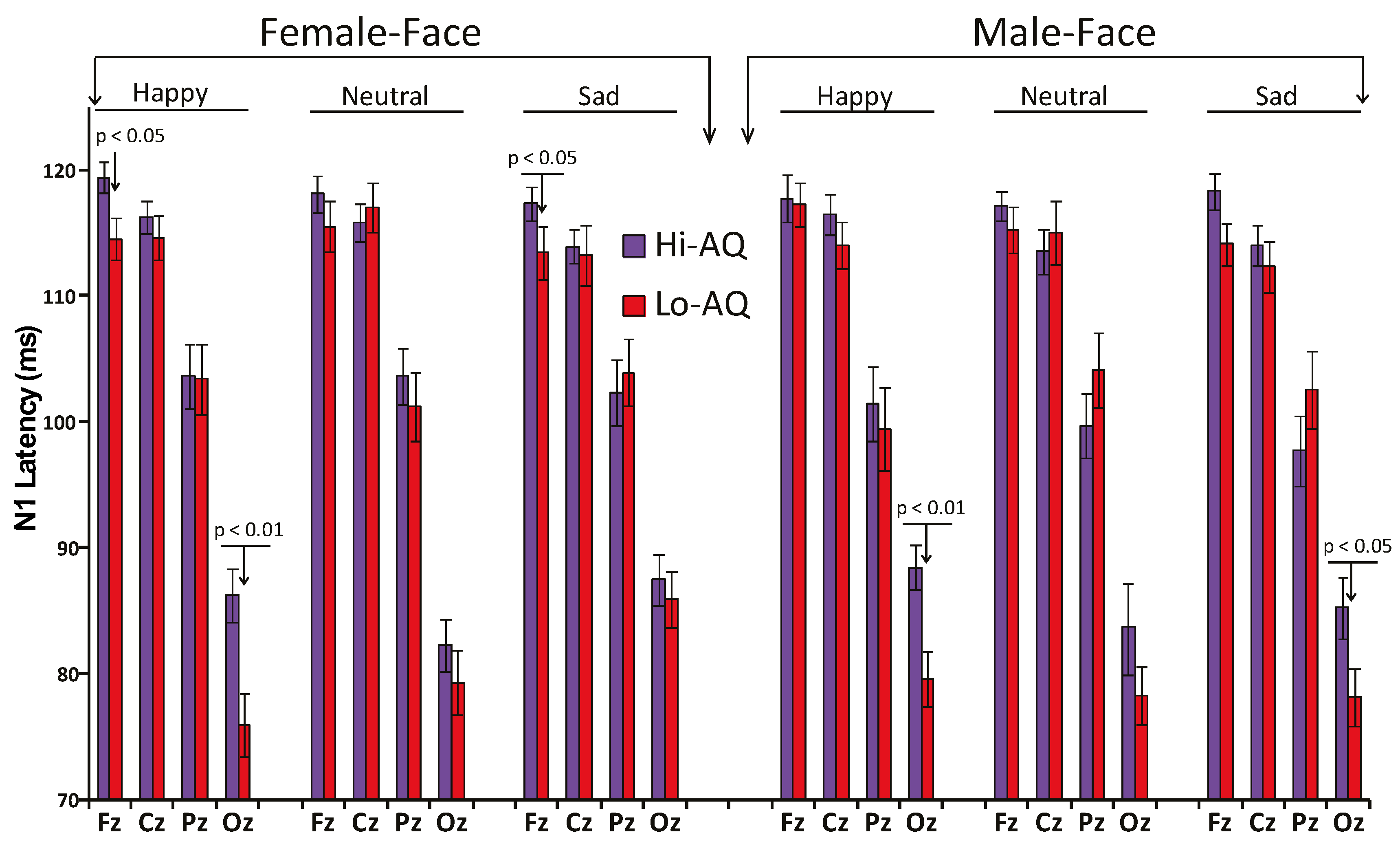

3.2. ERP Results

3.2.1. N1 Amplitude and Latency

3.2.2. N170 Amplitude and Latency

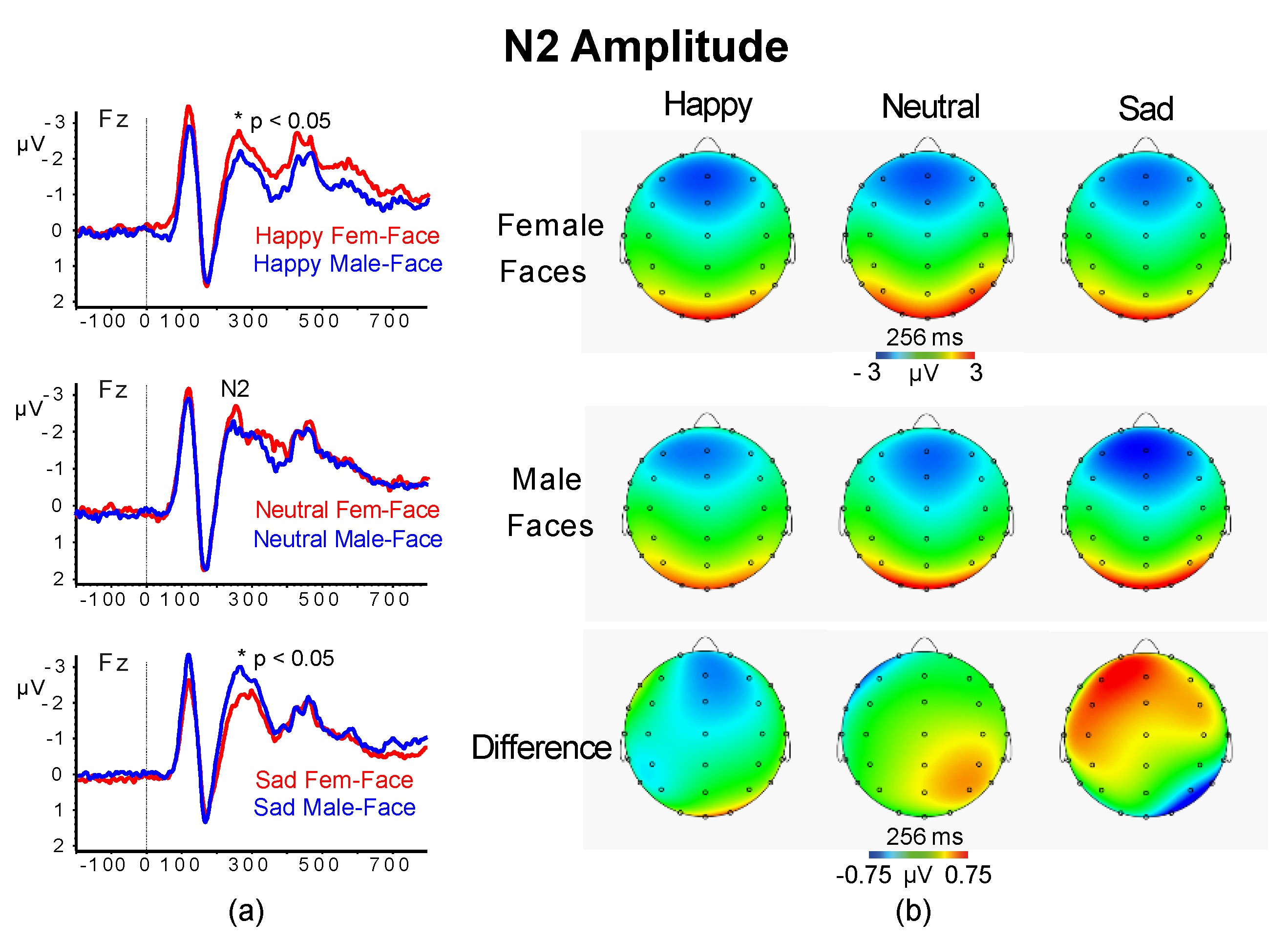

3.2.3. N2 Amplitude and Latency

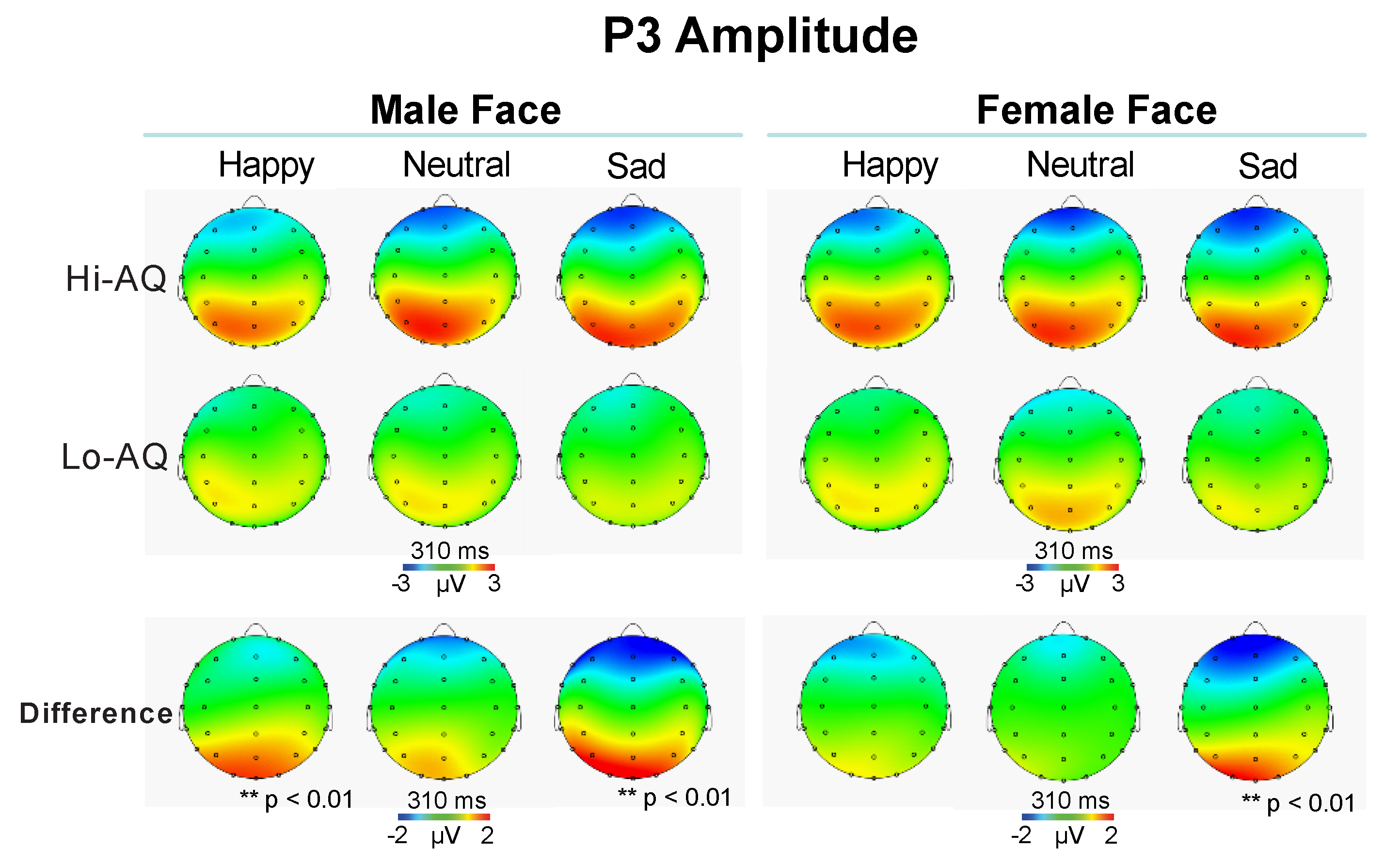

3.2.4. P3 Amplitude and Latency

3.2.5. N4 Amplitude and Latency

4. Discussion

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Baron-Cohen, S.; Gillberg, C. Mind blindness: An Essay on Autism and Theory of Mind; Developmental Medicine and Child Neurology; MIT Press: Cambridge, MA, USA, 1995.

- Deutsch, S.I.; Raffaele, C.T. Understanding facial expressivity in autism spectrum disorder: An inside out review of the biological basis and clinical implications. Prog. Neuropsychopharmacol. Biol. Psychiatry 2019, 88, 401–417.

- Behrmann, M.; Avidan, G.; Leonard, G.L.; Kimchi, R.; Luna, B.; Humphreys, K.; Minshew, N. Configural processing in autism and its relationship to face processing. Neuropsychologia 2006, 44, 110–129.

- Berger, M. A model of preverbal social development and its application to social dysfunctions in autism. J. Child Psychol. Psychiatry 2006, 47, 338–371.

- Dawson, G.; Webb, S.J.; McPartland, J. Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Dev. Neuropsychol. 2005, 27, 403–424.

- Grelotti, D.J.; Gauthier, I.; Schultz, R.T. Social interest and the development of cortical face specialization: What autism teaches us about face processing. Dev. Psychobiol. 2002, 40, 213–225.

- Sasson, N. The development of face processing in autism. J. Autism Dev. Disord. 2006, 36, 381–394.

- Schultz, R.T. Developmental deficits in social perception in autism: The role of the amygdala and fusiform face area. Int. J. Dev. Neurosci. 2005, 23, 125–141.

- Baron-Cohen, S.; Hammer, J. Is autism an extreme form of the “male brain”? Adv. Infancy Res. 1997, 11, 193–218.

- Bölte, S.; Poustka, F. The recognition of facial affect in autistic and schizophrenic subjects and their first-degree relatives. Psychol. Med. 2003, 33, 907–915.

- Wallace, S.; Sebastian, C.; Pellicano, E.; Parr, J.; Bailey, A. Face processing abilities in relatives of individuals with ASD. Autism Res. 2010, 3, 345–349.

- Adolphs, R.; Sears, L.; Piven, J. Abnormal processing of social information from faces in autism. J. Cogn. Neurosci. 2001, 13, 232–240.

- Castelli, F. Understanding emotions from standardized facial expressions in autism and normal development. Autism 2005, 9, 428–449.

- Golan, O.; Baron-Cohen, S.; Hill, J.J.; Rutherford, M.D. The ‘Reading the Mind in the Voice’ test-revised: A study of complex emotion recognition in adults with and without autism spectrum conditions. J. Autism Dev. Disord. 2007, 37, 1096–1106.

- Constantino, J.N.; Todd, R.D. Autistic traits in the general population: A twin study. JAMA Psychiatry 2003, 60, 524–530.

- Pickles, A.; Starr, E.; Kazak, S.; Bolton, P.; Papanikolaou, K.; Bailey, A.; Goodman, R.; Rutter, M. Variable expression of the autism broader phenotype: Findings from extended pedigrees. J. Child Psychol. Psychiatry 2000, 41, 491–502.

- Piven, J.; Palmer, P.; Jacobi, D.; Childress, D.; Arndt, S. Broader autism phenotype: Evidence from a family history study of multiple-incidence autism families. Am. J. Psychiatry 1997, 154, 185–190.

- Ronald, A.; Hoekstra, R.A. Autism spectrum disorders and autistic traits: A decade of new twin studies. Am. J. Med. Genet. B Neuropsychiatr. Genet. 2011, 156, 255–274.

- Baron-Cohen, S.; Wheelwright, S.; Skinner, R.; Martin, J.; Clubley, E. The Autism-Spectrum Quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 2001, 31, 5–17.

- Woodbury-Smith, M.R.; Robinson, J.; Wheelwright, S.; Baron-Cohen, S. Screening adults for Asperger syndrome using the AQ: A preliminary study of its diagnostic validity in clinical practice. J. Autism Dev. Disord. 2005, 35, 331–335.

- Dawson, G.; Meltzoff, A.N.; Osterling, J.; Rinaldi, J.; Brown, E. Children with autism fail to orient to naturally occurring social stimuli. J. Autism Dev. Disord. 1998, 28, 479–485.

- Pelphrey, K.A.; Sasson, N.J.; Reznick, J.S.; Paul, G.; Goldman, B.D.; Piven, J. Visual scanning of faces in autism. J. Autism Dev. Disord. 2002, 32, 249–261.

- Sasson, N.; Tsuchiya, N.; Hurley, R.; Couture, S.M.; Penn, D.L.; Adolphs, R.; Piven, J. Orienting to social stimuli differentiates social cognitive impairment in autism and schizophrenia. Neuropsychologia 2007, 45, 2580–2588.

- Monk, C.S.; Weng, S.-J.; Wiggins, J.L.; Kurapati, N.; Louro, H.M.; Carrasco, M.; Maslowsky, J.; Risi, S.; Lord, C. Neural circuitry of emotional face processing in autism spectrum disorders. J. Psychiatry Neurosci. 2010, 35, 105–114.

- Wang, A.T.; Dapretto, M.; Hariri, A.R.; Sigman, M.; Bookheimer, S.Y. Neural correlates of facial affect processing in children and adolescents with autism spectrum disorder. J. Am. Acad. Child Adolesc. Psychiatry 2004, 43, 481–490.

- Breiter, H.C.; Etcoff, N.L.; Whalen, P.J.; Kennedy, W.A.; Rauch, S.L.; Buckner, R.L.; Strauss, M.M.; Hyman, S.E.; Rosen, B.R. Response and habituation of the human amygdala during visual processing of facial expression. Neuron 1996, 17, 875–887.

- Wright, C.I.; Martis, B.; Shin, L.M.; Fischer, H.; Rauch, S.L. Enhanced amygdala responses to emotional versus neutral schematic facial expressions. Neuroreport 2002, 13, 785–790.

- Yang, T.T.; Menon, V.; Eliez, S.; Blasey, C.; White, C.D.; Reid, A.J.; Gotlib, I.H.; Reiss, A.L. Amygdalar activation associated with positive and negative facial expressions. Neuroreport 2002, 13, 1737–1741.

- Derntl, B.; Seidel, E.-M.; Kryspin-Exner, I.; Hasmann, A.; Dobmeier, M. Facial emotion recognition in patients with bipolar I and bipolar II disorder. Br. J. Clin. Psychol. 2009, 48, 363–375.

- Blair, R.J.R.; Morris, J.S.; Frith, C.D.; Perrett, D.I.; Dolan, R.J. Dissociable neural responses to facial expressions of sadness and anger. Brain 1999, 122, 883–893.

- Goldin, P.R.; Hutcherson, C.A.C.; Ochsner, K.N.; Glover, G.H.; Gabrieli, J.D.E.; Gross, J.J. The neural bases of amusement and sadness: A comparison of block contrast and subject-specific emotion intensity regression approaches. NeuroImage 2005, 27, 26–36.

- Morris, J.S.; Öhman, A.; Dolan, R.J. Conscious and unconscious emotional learning in the human amygdala. Nature 1998, 393, 467–470.

- Esteves, F.; Öhman, A. Masking the face: Recognition of emotional facial expressions as a function of the parameters of backward masking. Scand. J. Psychol. 1993, 34, 1–18.

- Öhman, A.; Soares, J.J.F. “Unconscious anxiety”: Phobic responses to masked stimuli. J. Abnorm. Psychol. 1994, 103, 231–240.

- Kiss, M.; Eimer, M. ERPs reveal subliminal processing of fearful faces. Psychophysiology 2008, 45, 318–326.

- Pegna, A.; Landis, T.; Khateb, A. Electrophysiological evidence for early non-conscious processing of fearful facial expressions. Int. J. Psychophysiol. 2008, 70, 127–136.

- Williams, M.A.; Morris, A.P.; McGlone, F.; Abbott, D.F.; Mattingley, J.B. Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J. Neurosci. 2004, 24, 2898.

- Hall, G.B.; West, D.; Szatmari, P. Backward masking: Evidence of reduced subcortical amygdala engagement in autism. Brain Cogn. 2007, 65, 100–106.

- Kamio, Y.; Wolf, J.; Fein, D. Automatic processing of emotional faces in high-functioning pervasive developmental disorders: An affective priming study. J. Autism Dev. Disord. 2006, 36, 155–167.

- Fujita, T.; Yamasaki, T.; Kamio, Y.; Hirose, S.; Tobimatsu, S. Parvocellular pathway impairment in autism spectrum disorder: Evidence from visual evoked potentials. Res. Autism Spectr. Disord. 2011, 5, 277–285.

- Fujita, T.; Kamio, Y.; Yamasaki, T.; Yasumoto, S.; Hirose, S.; Tobimatsu, S. Altered automatic face processing in individuals with high-functioning autism spectrum disorders: Evidence from visual evoked potentials. Res. Autism Spectr. Disord. 2013, 7, 710–720.

- Constantino, J.N.; Todd, R.D. Intergenerational transmission of subthreshold autistic traits in the general population. Biol. Psychiatry 2005, 57, 655–660.

- Bentin, S.; Allison, T.; Puce, A.; Perez, E.; McCarthy, G. Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 1996, 8, 551–565.

- Itier, R.J.; Taylor, M.J. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 2004, 14, 132–142.

- Batty, M.; Taylor, M.J. The development of emotional face processing during childhood. Dev. Sci. 2006, 9, 207–220.

- Blau, V.C.; Maurer, U.; Tottenham, N.; McCandliss, B.D. The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 2007, 3, 7.

- Eimer, M.; Kiss, M.; Holmes, A. Links between rapid ERP responses to fearful faces and conscious awareness. J. Neuropsychol. 2008, 2, 165–181.

- Dawson, G.; Webb, S.J.; Carver, L.; Panagiotides, H.; McPartland, J. Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Dev. Sci. 2004, 7, 340–359.

- O’Connor, K.; Hamm, J.P.; Kirk, I.J. The neurophysiological correlates of face processing in adults and children with Asperger’s syndrome. Brain Cogn. 2005, 59, 82–95.

- Wong, T.K.W.; Fung, P.C.W.; Chua, S.E.; McAlonan, G.M. Abnormal spatiotemporal processing of emotional facial expressions in childhood autism: Dipole source analysis of event-related potentials. Eur. J. Neurosci. 2008, 28, 407–416.

- Batty, M.; Meaux, E.; Wittemeyer, K.; Rogé, B.; Taylor, M.J. Early processing of emotional faces in children with autism: An event-related potential study. J. Exp. Child Psychol. 2011, 109, 430–444.

- McPartland, J.C.; Dawson, G.; Webb, S.J.; Panagiotides, H.; Carver, L.J. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J. Child Psychol. Psychiatry 2004, 45, 1235–1245.

- O’Connor, K.; Hamm, J.P.; Kirk, I.J. Neurophysiological responses to face, facial regions and objects in adults with Asperger’s syndrome: An ERP investigation. Int. J. Psychophysiol. 2007, 63, 283–293.

- Hileman, C.M.; Henderson, H.; Mundy, P.; Newell, L.; Jaime, M. Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Dev. Neuropsychol. 2011, 36, 214–236.

- Eimer, M.; Holmes, A. An ERP study on the time course of emotional face processing. Neuroreport 2002, 13, 427–431.

- Bar-Haim, Y.; Lamy, D.; Glickman, S. Attentional bias in anxiety: A behavioral and ERP study. Brain Cogn. 2005, 59, 11–22.

- Pegna, A.; Darque, A.; Berrut, C.; Khateb, A. Early ERP modulation for task-irrelevant subliminal faces. Front. Psychol. 2011, 2, 88.

- Liddell, B.J.; Williams, L.M.; Rathjen, J.; Shevrin, H.; Gordon, E. A temporal dissociation of subliminal versus supraliminal fear perception: An event-related potential study. J. Cogn. Neurosci. 2004, 16, 479–486.

- Campanella, S.; Gaspard, C.; Debatisse, D.; Bruyer, R.; Crommelinck, M.; Guerit, J.M. Discrimination of emotional facial expressions in a visual oddball task: An ERP study. Biol. Psychol. 2002, 59, 171–186.

- Halgren, E.; Marinkovic, K. Neurophysiological networks integrating human emotions. In The Cognitive Neurosciences; The MIT Press: Cambridge, MA, USA, 1995; pp. 1137–1151.

- Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 2007, 118, 2128–2148.

- Stavropoulos, K.K.M.; Viktorinova, M.; Naples, A.; Foss-Feig, J.; McPartland, J.C. Autistic traits modulate conscious and nonconscious face perception. Soc. Neurosci. 2018, 13, 40–51.

- Vukusic, S.; Ciorciari, J.; Crewther, D.P. Electrophysiological correlates of subliminal perception of facial expressions in individuals with autistic traits: A backward masking study. Front. Hum. Neurosci. 2017, 11, 256.

- Nelson, C.A. The development and neural bases of face recognition. Infant Child Dev. 2001, 10, 3–18.

- Pascalis, O.; de Schonen, S. Recognition memory in 3- to 4-day-old human neonates. Neuroreport 1994, 5, 1721–1724.

- Bushnell, I.; Sai, F.; Mullin, J. Neonatal recognition of the mother’s face. Br. J. Dev. Psychol. 1989, 7, 3–15.

- Boraston, Z.; Blakemore, S.-J.; Chilvers, R.; Skuse, D. Impaired sadness recognition is linked to social interaction deficit in autism. Neuropsychologia 2007, 45, 1501–1510.

- Coffman, M.C.; Anderson, L.C.; Naples, A.J.; McPartland, J.C. Sex differences in social perception in children with ASD. J. Autism Dev. Disord. 2015, 45, 589–599.

- Poljac, E.; Poljac, E.; Wagemans, J. Reduced accuracy and sensitivity in the perception of emotional facial expressions in individuals with high autism spectrum traits. Autism 2012, 17, 668–680.

- Liu, X.; Liao, Y.; Zhou, L.; Sun, G.; Li, M.; Zhao, L. Mapping the time course of the positive classification advantage: An ERP study. Cogn. Affect. Behav. Neurosci. 2013, 13, 491–500.

- Batty, M.; Taylor, M.J. Early processing of the six basic facial emotional expressions. Cogn. Brain Res. 2003, 17, 613–620.

- Gayle, L.; Gal, D.; Kieffaber, P. Measuring affective reactivity in individuals with autism spectrum personality traits using the visual mismatch negativity event-related brain potential. Front. Hum. Neurosci. 2012, 6, 334.

- Salmaso, D.; Longoni, A.M. Problems in the assessment of hand preference. Cortex 1985, 21, 533–549.

- Bishop, D.V.M.; Maybery, M.; Maley, A.; Wong, D.; Hill, W.; Hallmayer, J. Using self-report to identify the broad phenotype in parents of children with autistic spectrum disorders: A study using the Autism-Spectrum Quotient. J. Child Psychol. Psychiatry 2004, 45, 1431–1436.

- Puzzo, I.; Cooper, N.R.; Vetter, P.; Russo, R. EEG activation differences in the pre-motor cortex and supplementary motor area between normal individuals with high and low traits of autism. Brain Res. 2010, 1342, 104–110.

- Raven, J.C.; Di Fabio, A.; Clarotti, S. APM Advanced Progressive Matrices Serie I e II: Manuale; Giunti O.S. Organizzazioni Speciali, 2013. Available online: https://www.giuntipsy.it/catalogo/test/apm (accessed on 29 April 2020).

- Goodin, P.; Lamp, G.; Hughes, M.E.; Rossell, S.L.; Ciorciari, J. Decreased response to positive facial affect in a depressed cohort in the dorsal striatum during a working memory task—A preliminary fMRI study. Front. Psychiatry 2019, 10, 60.

- Willenbockel, V.; Sadr, J.; Fiset, D.; Horne, G.O.; Gosselin, F.; Tanaka, J.W. Controlling low-level image properties: The SHINE toolbox. Behav. Res. Methods 2010, 42, 671–684.

- Gratton, G.; Coles, M.G.; Donchin, E. A new method for off-line removal of ocular artifact. Electroencephalogr. Clin. Neurophysiol. 1983, 55, 468–484.

- Joyce, C.; Rossion, B. The face-sensitive N170 and VPP components manifest the same brain processes: The effect of reference electrode site. Clin. Neurophysiol. 2005, 116, 2613–2631.

- Benjamini, Y.; Hochberg, Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B Methodol. 1995, 57, 289–300.

- Vasey, M.W.; Thayer, J.F. The continuing problem of false positives in repeated measures ANOVA in psychophysiology: A multivariate solution. Psychophysiology 1987, 24, 479–486.

- Fugard, A.J.; Stewart, M.E.; Stenning, K. Visual/verbal-analytic reasoning bias as a function of self-reported autistic-like traits: A study of typically developing individuals solving Raven’s Advanced Progressive Matrices. Autism 2011, 15, 327–340.

- Ashwin, C.; Chapman, E.; Colle, L.; Baron-Cohen, S. Impaired recognition of negative basic emotions in autism: A test of the amygdala theory. Soc. Neurosci. 2006, 1, 349–363.

- Kelly, S.P.; Gomez-Ramirez, M.; Foxe, J.J. Spatial attention modulates initial afferent activity in human primary visual cortex. Cereb. Cortex 2008, 18, 2629–2636.

- Folstein, S.; Rutter, M. Infantile autism: A genetic study of 21 twin pairs. J. Child Psychol. Psychiatry 1977, 18, 297–321.

- McCleery, J.P.; Allman, E.; Carver, L.J.; Dobkins, K.R. Abnormal magnocellular pathway visual processing in infants at risk for autism. Biol. Psychiatry 2007, 62, 1007–1014.

- Sutherland, A.; Crewther, D.P. Magnocellular visual evoked potential delay with high autism spectrum quotient yields a neural mechanism for altered perception. Brain 2010, 133, 2089–2097.

- Kveraga, K.; Boshyan, J.; Bar, M. Magnocellular projections as the trigger of top-down facilitation in recognition. J. Neurosci. 2007, 27, 13232.

- Merigan, W.; Maunsell, J. How parallel are the primate visual pathways? Annu. Rev. Neurosci. 1993, 16, 369–402.

- Schroeder, C.E.; Tenke, C.E.; Arezzo, J.C.; Vaughan, H.G. Timing and distribution of flash-evoked activity in the lateral geniculate nucleus of the alert monkey. Brain Res. 1989, 477, 183–195.

- Maunsell, J.H.R.; Ghose, G.M.; Assad, J.A.; McAdams, C.J.; Boudreau, C.E.; Noerager, B.D. Visual response latencies of magnocellular and parvocellular LGN neurons in macaque monkeys. Vis. Neurosci. 1999, 16, 1–14.

- Vuilleumier, P.; Schwartz, S. Emotional facial expressions capture attention. Neurology 2001, 56, 153.

- McPartland, J.C.; Wu, J.; Bailey, C.A.; Mayes, L.C.; Schultz, R.T.; Klin, A. Atypical neural specialization for social percepts in autism spectrum disorder. Soc. Neurosci. 2011, 6, 436–451.

- Webb, S.J.; Dawson, G.; Bernier, R.; Panagiotides, H. ERP evidence of atypical face processing in young children with autism. J. Autism Dev. Disord. 2006, 36, 881.

- Webb, S.J.; Jones, E.J.H.; Merkle, K.; Murias, M.; Greenson, J.; Richards, T.; Aylward, E.; Dawson, G. Response to familiar faces, newly familiar faces, and novel faces as assessed by ERPs is intact in adults with autism spectrum disorders. Int. J. Psychophysiol. 2010, 77, 106–117.

- Ashley, V.; Vuilleumier, P.; Swick, D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport 2004, 15, 211–216.

- Ioannides, A.A.; Liu, L.C.; Kwapien, J.; Drozdz, S.; Streit, M. Coupling of regional activations in a human brain during an object and face affect recognition task. Hum. Brain Mapp. 2000, 11, 77–92.

- Kasai, T.; Murohashi, H. Global visual processing decreases with autistic-like traits: A study of early lateralized potentials with spatial attention. Jpn. Psychol. Res. 2013, 55, 131–143.

- Law Smith, M.J.; Montagne, B.; Perrett, D.I.; Gill, M.; Gallagher, L. Detecting subtle facial emotion recognition deficits in high-functioning autism using dynamic stimuli of varying intensities. Neuropsychologia 2010, 48, 2777–2781.

- Lewin, C.; Herlitz, A. Sex differences in face recognition—Women’s faces make the difference. Brain Cogn. 2002, 50, 121–128.

- Ellis, H.; Shepherd, J.; Bruce, A. The effects of age and sex upon adolescents’ recognition of faces. J. Genet. Psychol. 1973, 123, 173–174.

- Nemrodov, D.; Niemeier, M.; Mok, J.N.Y.; Nestor, A. The time course of individual face recognition: A pattern analysis of ERP signals. NeuroImage 2016, 132, 469–476.

- Eimer, M. Effects of face inversion on the structural encoding and recognition of faces: Evidence from event-related brain potentials. Cogn. Brain Res. 2000, 10, 145–158.

- Mouchetant-Rostaing, Y.; Giard, M.-H.; Bentin, S.; Aguera, P.-E.; Pernier, J. Neurophysiological correlates of face gender processing in humans. Eur. J. Neurosci. 2000, 12, 303–310.

- Sun, Y.; Gao, X.; Han, S. Sex differences in face gender recognition: An event-related potential study. Brain Res. 2010, 1327, 69–76.

| | AQ | RAPM | Age (years) |
|---|---|---|---|
| AQ | 1 | | |
| RAPM | −0.034 | 1 | |
| Age | 0.144 | −0.192 | 1 |
| Mean | 14.9 | 22.5 | 22.5 |
| SD | 7.2 | 4.7 | 3.1 |
| Range | 3–26 | 14–34 | 18–30 |

| ERP Peak Latencies (ms) | Subliminal Mean | SD | Supraliminal Mean | SD | p Value (FDR-Corrected) |
|---|---|---|---|---|---|
| N170 | | | | | |
| (T5, T6) | 183.2 | 14.5 | 178.2 | 13.8 | 0.0019 |
| T5 | 188.3 | 20.3 | 184.4 | 20.6 | 0.066 |
| T6 | 178.1 | 12.9 | 172 | 12.6 | <0.001 |
| N1 | | | | | |
| (Fz, Cz, Pz, Oz) | 102.6 | 5.7 | 105.4 | 5.5 | 0.0019 |
| Fz | 114.5 | 7.5 | 118.4 | 8.4 | 0.0019 |
| Cz | 112.9 | 8.3 | 116.4 | 8.3 | 0.0019 |
| Pz | 102 | 12.7 | 102.8 | 10.7 | 0.648 |
| Oz | 81.1 | 9.4 | 84.0 | 9.8 | 0.0456 |
| N2 | | | | | |
| (Fz, Cz, Pz, Oz) | 210 | 10.1 | 225.6 | 10.7 | <0.001 |
| Fz | 235.9 | 10.9 | 251.3 | 13.3 | <0.001 |
| Cz | 234.1 | 11.4 | 251.2 | 13.8 | <0.001 |
| Pz | 207.8 | 23.1 | 224.1 | 24.3 | <0.001 |
| Oz | 161.5 | 16.2 | 175.7 | 16.4 | <0.001 |
| P3 | | | | | |
| (Fz, Cz, Pz, Oz) | 288.5 | 12.4 | 308.8 | 13.8 | <0.001 |
| Fz | 314.6 | 15.2 | 327.9 | 12.9 | <0.001 |
| Cz | 315 | 17.8 | 334.5 | 19.6 | <0.001 |
| Pz | 288.3 | 25.0 | 306.3 | 21.9 | <0.001 |
| Oz | 238.1 | 19.9 | 266.7 | 25.2 | <0.001 |
| N4 | | | | | |
| (Fz, Cz, Pz, Oz) | 382 | 12.2 | 382.4 | 10.5 | 0.822 |
| Fz | 393.8 | 5.8 | 393.2 | 4.4 | 0.453 |
| Cz | 392.9 | 5.6 | 392.4 | 6.1 | 0.515 |
| Pz | 383.8 | 18.7 | 387.7 | 13.3 | 0.186 |
| Oz | 357.5 | 34.4 | 356.5 | 31.3 | 0.866 |
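The p values in the table above are FDR-corrected. Because the reference list includes Benjamini and Hochberg (1995), the sketch below implements their step-up adjustment, on the assumption that this was the procedure used; which tests formed each correction family is not stated in this section. The function name `benjamini_hochberg` is hypothetical.

```python
def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted p values, returned in input order.

    For the i-th smallest of m p values the raw adjustment is p * m / i;
    a running minimum taken from the largest p value downward keeps the
    adjusted values monotone, and starting that minimum at 1.0 caps
    every result at 1.
    """
    m = len(p_values)
    if m == 0:
        return []
    if any(not 0.0 <= p <= 1.0 for p in p_values):
        raise ValueError("p values must lie in [0, 1]")
    order = sorted(range(m), key=lambda i: p_values[i])  # ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # rank m is the largest p value
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

Comparing each adjusted value with the chosen FDR level (for example, 0.05) gives the same rejections as the original step-up rule. Entries reported only as "<0.001" cannot be re-adjusted this way, because the raw p values are not given.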

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

De Pascalis, V.; Cirillo, G.; Vecchio, A.; Ciorciari, J. Event-Related Potential to Conscious and Nonconscious Emotional Face Perception in Females with Autistic-Like Traits. J. Clin. Med. 2020, 9, 2306. https://doi.org/10.3390/jcm9072306