Lights and Shadows on Artificial Intelligence in Glaucoma: Transforming Screening, Monitoring, and Prognosis

Abstract

1. Introduction

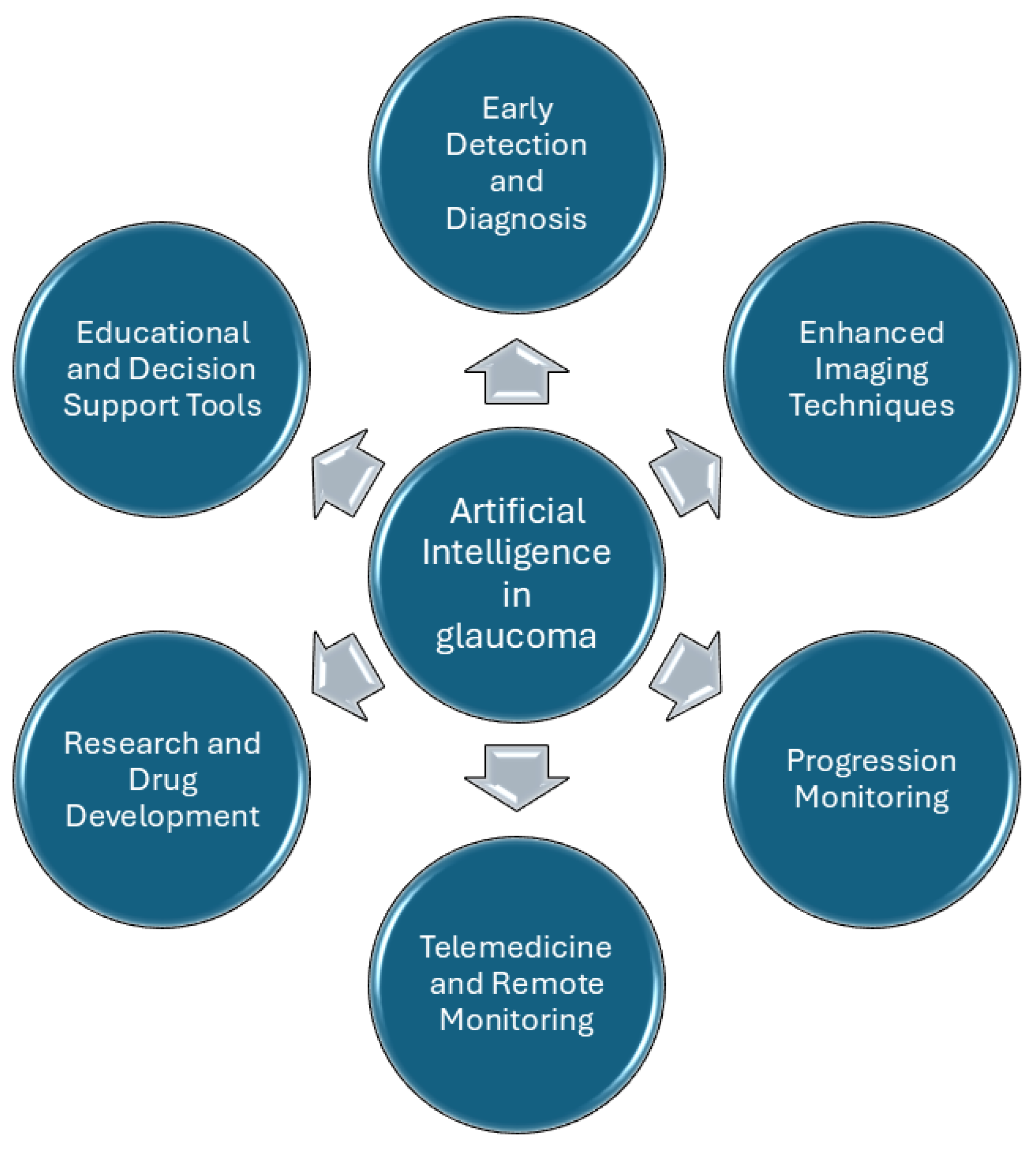

2. Clinical Applications of AI

2.1. Fundus Photographs and AI

2.1.1. Segmentation of AI Fundus Images

2.1.2. AI and Optic Disc Classification

2.1.3. AI-Based Prediction of Glaucoma Based on Fundus Photographs

2.1.4. AI Fundus Picture-Based Tele-Glaucoma

2.2. Visual Field Test and AI

2.2.1. Convolutional Neural Network-Based Visual Field Test Progression Prediction

2.2.2. Variational Autoencoder-Based Prediction of Visual Field Test Progression

2.2.3. Recurrent Neural Network-Based Prediction of Visual Field Test Progression

2.2.4. Archetypal Analysis-Based Prediction of Visual Field Test Progression

2.2.5. Other ML-Based Methods of Visual Field Test Progression Prediction

2.3. Optical Coherence Tomography and AI

2.3.1. Digital Stain of OCT Images

2.3.2. Spectral Domain OCT-Based Glaucoma AI Detection

2.3.3. Anterior Segment OCT-Based AI Algorithms for the Detection of Open vs. Closed Iridocorneal Angle

2.3.4. OCT Angiography-Based AI Algorithms for the Detection of Glaucoma

2.4. AI Combined Approach in Glaucoma Diagnosis

2.5. AI and Medical Advanced Imaging in Glaucoma

3. Ethical Implications

4. Discussion and Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Martucci, A.; Nucci, C. Evidence on neuroprotective properties of coenzyme Q10 in the treatment of glaucoma. Neural Regen. Res. 2019, 14, 197.

- Trouilloud, A.; Ferry, E.; Boucart, M.; Kauffmann, L.; Warniez, A.; Rouland, J.F.; Peyrin, C. Impact of glaucoma on the spatial frequency processing of scenes in central vision. Vis. Neurosci. 2023, 40, E001.

- Gallo Afflitto, G.; Aiello, F.; Cesareo, M.; Nucci, C. Primary Open Angle Glaucoma Prevalence in Europe: A Systematic Review and Meta-Analysis. J. Glaucoma 2022, 31, 783–788.

- Tham, Y.C.; Li, X.; Wong, T.Y.; Quigley, H.A.; Aung, T.; Cheng, C.Y. Global Prevalence of Glaucoma and Projections of Glaucoma Burden through 2040. Ophthalmology 2014, 121, 2081–2090.

- Steinmetz, J.D.; Bourne, R.R.A.; Briant, P.S.; Flaxman, S.R.; Taylor, H.R.B.; Jonas, J.B.; Abdoli, A.A.; Abrha, W.A.; Abualhasan, A.; Abu-Gharbieh, E.G.; et al. Causes of blindness and vision impairment in 2020 and trends over 30 years, and prevalence of avoidable blindness in relation to VISION 2020: The Right to Sight: An analysis for the Global Burden of Disease Study. Lancet Glob. Health 2021, 9, e144–e160.

- Spaeth, G.L. European Glaucoma Society Terminology and Guidelines for Glaucoma, 5th Edition. Br. J. Ophthalmol. 2021, 105 (Suppl. S1), 1–169.

- Kass, M.A. The Ocular Hypertension Treatment Study. Arch. Ophthalmol. 2002, 120, 701.

- European Glaucoma Prevention Study (EGPS) Group. Results of the European Glaucoma Prevention Study. Ophthalmology 2005, 112, 366–375.

- Benet, D.; Pellicer-Valero, O.J. Artificial intelligence: The unstoppable revolution in ophthalmology. Surv. Ophthalmol. 2022, 67, 252–270.

- Schuman, J.S.; De Los Angeles Ramos Cadena, M.; McGee, R.; Al-Aswad, L.A.; Medeiros, F.A.; Abramoff, M.; Blumenkranz, M.; Chew, E.; Chiang, M.; Eydelman, M.; et al. A Case for the Use of Artificial Intelligence in Glaucoma Assessment. Ophthalmol. Glaucoma 2022, 5, e3–e13.

- Kapoor, R.; Walters, S.P.; Al-Aswad, L.A. The current state of artificial intelligence in ophthalmology. Surv. Ophthalmol. 2019, 64, 233–240.

- Popescu, P.S.; Mușat, A.; Patoni, C.; Popescu, M.; Munteanu, M.; Costache, I.; Pîrvulescu, R.A.; Mușat, O. Artificial intelligence in ophthalmology. Rom. J. Ophthalmol. 2023, 67, 207–213.

- Ting, D.S.J.; Foo, V.H.X.; Yang, L.W.Y.; Sia, J.T.; Ang, M.; Lin, H.; Chodosh, J.; Mehta, J.S. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br. J. Ophthalmol. 2021, 105, 158–168.

- Salazar, H.; Misra, V.; Swaminathan, S.S. Artificial intelligence and complex statistical modeling in glaucoma diagnosis and management. Curr. Opin. Ophthalmol. 2021, 32, 105–117.

- Devalla, S.K.; Liang, Z.; Pham, T.H.; Boote, C.; Strouthidis, N.G.; Thiery, A.H.; Girard, M.J.A. Glaucoma management in the era of artificial intelligence. Br. J. Ophthalmol. 2020, 104, 301–311.

- Eslami, M.; Kim, J.A.; Zhang, M.; Boland, M.V.; Wang, M.; Chang, D.S.; Elze, T. Visual Field Prediction. Ophthalmol. Sci. 2023, 3, 100222.

- Al-Hamadani, M.; Fadhel, M.; Alzubaidi, L.; Harangi, B. Reinforcement Learning Algorithms and Applications in Healthcare and Robotics: A Comprehensive and Systematic Review. Sensors 2024, 24, 2461.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Najjar, R. Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Diagnostics 2023, 13, 2760.

- Mello-Thoms, C.; Mello, C.A.B. Clinical applications of artificial intelligence in radiology. Br. J. Radiol. 2023, 96, 20221031.

- Medeiros, F.; Tatham, A.; Weinreb, R. Strategies for improving early detection of glaucoma: The combined structure and function index. Clin. Ophthalmol. 2014, 8, 611–621.

- Camara, J.; Neto, A.; Pires, I.M.; Villasana, M.V.; Zdravevski, E.; Cunha, A. Literature Review on Artificial Intelligence Methods for Glaucoma Screening, Segmentation, and Classification. J. Imaging 2022, 8, 19.

- Srivastava, O.; Tennant, M.; Grewal, P.; Rubin, U.; Seamone, M. Artificial intelligence and machine learning in ophthalmology: A review. Indian J. Ophthalmol. 2023, 71, 11–17.

- Nguyen, V.; Iyengar, S.; Rasheed, H.; Apolo, G.; Li, Z.; Kumar, A.; Nguyen, H.; Bohner, A.; Dhodapkar, R.; Do, J.; et al. Expert-Level Detection of Referable Glaucoma from Fundus Photographs in a Safety Net Population: The AI and Teleophthalmology in Los Angeles Initiative. medRxiv 2024.

- Li, M.; Wan, C. The use of deep learning technology for the detection of optic neuropathy. Quant. Imaging Med. Surg. 2022, 12, 2129–2143.

- Abidin, Z.U.; Naqvi, R.A.; Kim, H.S.; Kim, H.S.; Jeong, D.; Lee, S.W. Optimizing optic cup and optic disc delineation: Introducing the efficient feature preservation segmentation network. Eng. Appl. Artif. Intell. 2025, 144, 110038.

- Girard, F.; Hurtut, T.; Kavalec, C.; Cheriet, F. Atlas-based score for automatic glaucoma risk stratification. Comput. Med. Imaging Graph. 2021, 87, 101797.

- Li, Z.; He, Y.; Keel, S.; Meng, W.; Chang, R.T.; He, M. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018, 125, 1199–1206.

- Nam, Y.; Kim, J.; Kim, K.; Park, K.A.; Kang, M.; Cho, B.H.; Oh, S.Y.; Kee, C.; Han, J.; Lee, G.-I.; et al. Deep learning-based optic disc classification is affected by optic-disc tilt. Sci. Rep. 2024, 14, 498.

- Thakur, A.; Goldbaum, M.; Yousefi, S. Predicting Glaucoma before Onset Using Deep Learning. Ophthalmol. Glaucoma 2020, 3, 262–268.

- Thomas, S.M.; Jeyaraman, M.; Hodge, W.G.; Hutnik, C.; Costella, J.; Malvankar-Mehta, M.S. The Effectiveness of Teleglaucoma versus In-Patient Examination for Glaucoma Screening: A Systematic Review and Meta-Analysis. PLoS ONE 2014, 9, e113779.

- Liu, W.W.; Margeta, M.A. Imaging Retinal Ganglion Cell Death and Dysfunction in Glaucoma. Int. Ophthalmol. Clin. 2019, 59, 41–54.

- Kako, N.A.; Abdulazeez, A.M.; Abdulqader, D.N. Multi-label deep learning for comprehensive optic nerve head segmentation through data of fundus images. Heliyon 2024, 10, e36996.

- Khalil, M.; Naeem, A.; Naqvi, R.A.; Zahra, K.; Moqurrab, S.A.; Lee, S.W. Deep Learning-Based Classification of Abrasion and Ischemic Diabetic Foot Sores Using Camera-Captured Images. Mathematics 2023, 11, 3793.

- Sekimitsu, S.; Zebardast, N. Glaucoma and Machine Learning: A Call for Increased Diversity in Data. Ophthalmol. Glaucoma 2021, 4, 339–342.

- Qian, X.; Xian, S.; Yifei, S.; Wei, G.; Liu, H.; Xiaoming, X.; Chu, C.; Yilong, Y.; Shuang, Y.; Kai, M.; et al. External validation of a deep learning detection system for glaucomatous optic neuropathy: A real-world multicentre study. Eye 2023, 37, 3813–3818.

- Li, J.P.O.; Liu, H.; Ting, D.S.J.; Jeon, S.; Chan, R.V.P.; Kim, J.E.; Sim, D.A.; Thomas, P.B.M.; Lin, H.; Chen, Y.; et al. Digital technology, tele-medicine and artificial intelligence in ophthalmology: A global perspective. Prog. Retin. Eye Res. 2021, 82.

- Martucci, A.; Nucci, C.; Pinazo-Durán, M.D. Editorial: New perspectives in glaucoma pathophysiology, diagnosis, and treatment. Front. Med. 2023, 10, 1200427.

- Kopilaš, V.; Kopilaš, M. Quality of life and mental health status of glaucoma patients. Front. Med. 2024, 11, 1402604.

- European Glaucoma Society. Terminology and Guidelines for Glaucoma, 5th ed.; European Glaucoma Society: Zug, Switzerland, 2020.

- Park, K.; Kim, J.; Lee, J. Visual Field Prediction using Recurrent Neural Network. Sci. Rep. 2019, 9, 8385.

- Wang, M.; Shen, L.Q.; Pasquale, L.R.; Petrakos, P.; Formica, S.; Boland, M.V.; Wellik, S.R.; De Moraes, C.G.; Myers, J.S.; Saeedi, O.; et al. An Artificial Intelligence Approach to Detect Visual Field Progression in Glaucoma Based on Spatial Pattern Analysis. Investig. Ophthalmol. Vis. Sci. 2019, 60, 365.

- Girard, M.J.A.; Schmetterer, L. Artificial intelligence and deep learning in glaucoma: Current state and future prospects. Prog. Brain Res. 2020, 257, 37–64.

- Brigatti, L. Automatic Detection of Glaucomatous Visual Field Progression With Neural Networks. Arch. Ophthalmol. 1997, 115, 725.

- Sample, P.A.; Goldbaum, M.H.; Chan, K.; Boden, C.; Lee, T.W.; Vasile, C.; Boehm, A.G.; Sejnowski, T.; Johnson, C.A.; Weinreb, R.N. Using machine learning classifiers to identify glaucomatous change earlier in standard visual fields. Investig. Ophthalmol. Vis. Sci. 2002, 43, 2660–2665.

- Wen, J.C.; Lee, C.S.; Keane, P.A.; Xiao, S.; Rokem, A.S.; Chen, P.P.; Wu, Y.; Lee, A.Y. Forecasting future Humphrey Visual Fields using deep learning. PLoS ONE 2019, 14, e0214875.

- Berchuck, S.I.; Mukherjee, S.; Medeiros, F.A. Estimating Rates of Progression and Predicting Future Visual Fields in Glaucoma Using a Deep Variational Autoencoder. Sci. Rep. 2019, 9, 18113.

- Yousefi, S.; Kiwaki, T.; Zheng, Y.; Sugiura, H.; Asaoka, R.; Murata, H.; Lemij, H.; Yamanishi, K. Detection of Longitudinal Visual Field Progression in Glaucoma Using Machine Learning. Am. J. Ophthalmol. 2018, 193, 71–79.

- Lipton, Z.C.; Berkowitz, J.; Elkan, C. A Critical Review of Recurrent Neural Networks for Sequence Learning. arXiv 2015, arXiv:1506.00019.

- Cutler, A.; Breiman, L. Archetypal Analysis. Technometrics 1994, 36, 338.

- Mørup, M.; Hansen, L.K. Archetypal analysis for machine learning and data mining. Neurocomputing 2012, 80, 54–63.

- Hood, D.C.; La Bruna, S.; Tsamis, E.; Thakoor, K.A.; Rai, A.; Leshno, A.; de Moraes, C.G.; Cioffi, G.A.; Liebmann, J.M. Detecting glaucoma with only OCT: Implications for the clinic, research, screening, and AI development. Prog. Retin. Eye Res. 2022, 90, 101052.

- Devalla, S.K.; Chin, K.S.; Mari, J.M.; Tun, T.A.; Strouthidis, N.G.; Aung, T.; Thiéry, A.H.; Girard, M.J.A. A Deep Learning Approach to Digitally Stain Optical Coherence Tomography Images of the Optic Nerve Head. Investig. Ophthalmol. Vis. Sci. 2018, 59, 63.

- Ran, A.R.; Cheung, C.Y.; Wang, X.; Chen, H.; Luo, L.Y.; Chan, P.P.; Wong, M.O.M.; Chang, R.T.; Mannil, S.S.; Young, A.L.; et al. Detection of glaucomatous optic neuropathy with spectral-domain optical coherence tomography: A retrospective training and validation deep-learning analysis. Lancet Digit. Health 2019, 1, e172–e182.

- García, G.; Colomer, A.; Naranjo, V. Glaucoma Detection from Raw SD-OCT Volumes: A Novel Approach Focused on Spatial Dependencies. Comput. Methods Programs Biomed. 2021, 200, 105855.

- Akter, N.; Fletcher, J.; Perry, S.; Simunovic, M.P.; Briggs, N.; Roy, M. Glaucoma diagnosis using multi-feature analysis and a deep learning technique. Sci. Rep. 2022, 12, 8064.

- Xu, Y.; Liu, J.; Cheng, J.; Lee, B.H.; Wong, D.W.K.; Baskaran, M.; Perera, S.; Aung, T. Automated anterior chamber angle localization and glaucoma type classification in OCT images. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 7380–7383.

- Issac Niwas, S.; Lin, W.; Kwoh, C.K.; Kuo, C.C.J.; Sng, C.C.; Aquino, M.C.; Chew, P.T.K. Cross-Examination for Angle-Closure Glaucoma Feature Detection. IEEE J. Biomed. Health Inform. 2015, 20, 343–354.

- Park, K.; Kim, J.; Lee, J. Macular Vessel Density and Ganglion Cell/Inner Plexiform Layer Thickness and Their Combinational Index Using Artificial Intelligence. J. Glaucoma 2018, 27, 750–760.

- Miguel, A.; Silva, B. Artificial Intelligence to detect glaucoma through OCT Angiography—A pilot study. Investig. Ophthalmol. Vis. Sci. 2021, 62, 2166.

- Angmo, D.; Nongpiur, M.; Sharma, R.; Sidhu, T.; Sihota, R.; Dada, T. Clinical utility of anterior segment swept-source optical coherence tomography in glaucoma. Oman J. Ophthalmol. 2016, 9, 3.

- Yuan, Y.; Wang, W.; Xiong, R.; Zhang, J.; Li, C.; Yang, S.; Friedman, D.S.; Foster, P.J.; He, M. Fourteen-Year Outcome of Angle-Closure Prevention with Laser Iridotomy in the Zhongshan Angle-Closure Prevention Study. Ophthalmology 2023, 130, 786–794.

- Coviltir, V.; Burcel, M.G.; Baltă, G.; Marinescu, M.C. Interplay Between Ocular Ischemia and Glaucoma: An Update. Int. J. Mol. Sci. 2024, 25, 12400.

- Micheletti, E.; Moghimi, S.; Nishida, T.; El-Nimri, N.; Mahmoudinezhad, G.; Kamalipour, A.; Mohammadzadeh, V.; Zangwill, L.M.; Weinreb, R.N. Factors associated with choroidal microvascular dropout change. Br. J. Ophthalmol. 2023, 107, 1444–1451.

- Ma, D.; Pasquale, L.R.; Girard, M.J.A.; Leung, C.K.S.; Jia, Y.; Sarunic, M.V.; Sappington, R.M.; Chan, K.C. Reverse translation of artificial intelligence in glaucoma: Connecting basic science with clinical applications. Front. Ophthalmol. 2023, 2, 1057896.

- Martucci, A.; Giannini, C.; Di Marino, M.; Sorge, R.P.; Aiello, F.; Scuteri, D.; Mancino, R.; Nucci, C.; Cesareo, M. Evaluation of putative differences in vessel density and flow area in normal tension and high-pressure glaucoma using OCT-angiography. Prog. Brain Res. 2020, 257, 85–98.

- Mariottoni, E.B.; Datta, S.; Dov, D.; Jammal, A.A.; Berchuck, S.I.; Tavares, I.M.; Carin, L.; Medeiros, F.A. Artificial Intelligence Mapping of Structure to Function in Glaucoma. Transl. Vis. Sci. Technol. 2020, 9, 19.

- Miri, M.S.; Abramoff, M.D.; Lee, K.; Niemeijer, M.; Wang, J.K.; Kwon, Y.H.; Garvin, M.K. Multimodal Segmentation of Optic Disc and Cup From SD-OCT and Color Fundus Photographs Using a Machine-Learning Graph-Based Approach. IEEE Trans. Med. Imaging 2015, 34, 1854–1866.

- Hussain, S.; Chua, J.; Wong, D.; Lo, J.; Kadziauskiene, A.; Asoklis, R.; Barbastathis, G.; Schmetterer, L.; Yong, L. Predicting glaucoma progression using deep learning framework guided by generative algorithm. Sci. Rep. 2023, 13, 19960.

- Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A Deep Learning Algorithm to Quantify Neuroretinal Rim Loss From Optic Disc Photographs. Am. J. Ophthalmol. 2019, 201, 9–18.

- Xiong, J.; Li, F.; Song, D.; Tang, G.; He, J.; Gao, K.; Zhang, H.; Cheng, W.; Song, Y.; Lin, F.; et al. Multimodal Machine Learning Using Visual Fields and Peripapillary Circular OCT Scans in Detection of Glaucomatous Optic Neuropathy. Ophthalmology 2022, 129, 171–180.

- Thrall, J.H.; Li, X.; Li, Q.; Cruz, C.; Do, S.; Dreyer, K.; Brink, J. Artificial Intelligence and Machine Learning in Radiology: Opportunities, Challenges, Pitfalls, and Criteria for Success. J. Am. Coll. Radiol. 2018, 15, 504–508.

- Kulkarni, S.; Seneviratne, N.; Baig, M.S.; Khan, A.H.A. Artificial Intelligence in Medicine: Where Are We Now? Acad. Radiol. 2020, 27, 62–70.

- Zhang, Z.; Li, G.; Xu, Y.; Tang, X. Application of Artificial Intelligence in the MRI Classification Task of Human Brain Neurological and Psychiatric Diseases: A Scoping Review. Diagnostics 2021, 11, 1402.

- Leong, Y.Y.; Vasseneix, C.; Finkelstein, M.T.; Milea, D.; Najjar, R.P. Artificial Intelligence Meets Neuro-Ophthalmology. Asia-Pac. J. Ophthalmol. 2022, 11, 111–125.

- Martucci, A.; Cesareo, M.; Toschi, N.; Garaci, F.G.; Bagetta, G.; Nucci, C. Brain networks reorganization and functional disability in glaucoma. Prog. Brain Res. 2020, 257, 65–76.

- Martucci, A.; Di Giuliano, F.; Minosse, S.; Pocobelli, G.; Nucci, C.; Garaci, F.G. MRI and Clinical Biomarkers Overlap between Glaucoma and Alzheimer’s Disease. Int. J. Mol. Sci. 2023, 24, 14932.

- Martucci, A.; Picchi, E.; Di Giuliano, F.; Pocobelli, G.; Mancino, R.; Toschi, N.; Russo, R.; Floris, R.; Garaci, F.; Nucci, C. Imaging biomarkers for Alzheimer’s disease and glaucoma: Current and future practices. Curr. Opin. Pharmacol. 2022, 62, 137–144.

- Abidin, Z.U.; Naqvi, R.A.; Haider, A.; Kim, H.S.; Jeong, D.; Lee, S.W. Recent deep learning-based brain tumor segmentation models using multi-modality magnetic resonance imaging: A prospective survey. Front. Bioeng. Biotechnol. 2024, 12, 1392807.

- Pang, Y.; Bang, J.W.; Kasi, A.; Li, J.; Parra, C.; Fieremans, E.; Wollstein, G.; Schuman, J.S.; Wang, M.; Chan, K.C. Contributions of Brain Microstructures and Metabolism to Visual Field Loss Patterns in Glaucoma Using Archetypal and Information Gain Analyses. Investig. Ophthalmol. Vis. Sci. 2024, 65, 15.

- Farhud, D.D.; Zokaei, S. Ethical Issues of Artificial Intelligence in Medicine and Healthcare. Iran. J. Public Health 2021, 50, I–V.

- Elendu, C.; Amaechi, D.C.; Elendu, T.C.; Jingwa, K.A.; Okoye, O.K.; John Okah, M.; Ladele, J.A.M.; Farah, A.H.; Alimi, H.A.M. Ethical implications of AI and robotics in healthcare: A review. Medicine 2023, 102, e36671.

- Abdullah, Y.I.; Schuman, J.S.; Shabsigh, R.; Caplan, A.; Al-Aswad, L.A. Ethics of Artificial Intelligence in Medicine and Ophthalmology. Asia-Pac. J. Ophthalmol. 2021, 10, 289–298.

- Naik, N.; Hameed, B.M.Z.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 862322.

- Tang, L.; Li, J.; Fantus, S. Medical artificial intelligence ethics: A systematic review of empirical studies. Digit. Health 2023, 9, 20552076231186064.

- Jin, K.; Ye, J. Artificial intelligence and deep learning in ophthalmology: Current status and future perspectives. Adv. Ophthalmol. Pract. Res. 2022, 2, 100078.

- Grzybowski, A.; Brona, P. Approval and Certification of Ophthalmic AI Devices in the European Union. Ophthalmol. Ther. 2023, 12, 633–638.

- Yousefi, S. Clinical Applications of Artificial Intelligence in Glaucoma. J. Ophthalmic Vis. Res. 2023, 18, 99–112.

- Hashemian, H.; Peto, T.; Ambrósio, R., Jr.; Lengyel, I.; Kafieh, R.; Muhammed Noori, A.; Khorrami-Nezhad, M. Application of Artificial Intelligence in Ophthalmology: An Updated Comprehensive Review. J. Ophthalmic Vis. Res. 2024, 19, 354–367.

- Barry, S.; Wang, S.Y. Predicting Glaucoma Surgical Outcomes Using Neural Networks and Machine Learning on Electronic Health Records. Transl. Vis. Sci. Technol. 2024, 13, 15.

- Mariottoni, E.B.; Jammal, A.A.; Berchuck, S.I.; Shigueoka, L.S.; Tavares, I.M.; Medeiros, F.A. An objective structural and functional reference standard in glaucoma. Sci. Rep. 2021, 11, 1752.

- Iyer, J.V.; Boland, M.V.; Jefferys, J.; Quigley, H. Defining glaucomatous optic neuropathy using objective criteria from structural and functional testing. Br. J. Ophthalmol. 2021, 105, 789–793.

- Chaurasia, A.K.; Greatbatch, C.J.; Hewitt, A.W. Diagnostic Accuracy of Artificial Intelligence in Glaucoma Screening and Clinical Practice. J. Glaucoma 2022, 31, 285–299.

- Al-Aswad, L.A.; Ramachandran, R.; Schuman, J.S.; Medeiros, F.; Eydelman, M.B.; Abramoff, M.D.; Antony, B.J.; Boland, M.V.; Chauhan, B.C.; Chiang, M.; et al. Artificial Intelligence for Glaucoma: Creating and Implementing Artificial Intelligence for Disease Detection and Progression. Ophthalmol. Glaucoma 2022, 5, e16–e25.

- Zaidi, S.A.J.; Ghafoor, A.; Kim, J.; Abbas, Z.; Lee, S.W. HeartEnsembleNet: An Innovative Hybrid Ensemble Learning Approach for Cardiovascular Risk Prediction. Healthcare 2025, 13, 507.

- Ashtari-Majlan, M.; Dehshibi, M.M.; Masip, D. Glaucoma diagnosis in the era of deep learning: A survey. Expert Syst. Appl. 2024, 256.

| Authors | Modality Analyzed | Aims | Methods | Results | Dataset |
|---|---|---|---|---|---|
| Nguyen et al. [24] | Fundus Images | Referable glaucoma | VGG-19 | DL algorithm approximates or exceeds performance by ophthalmologists (AUC = 0.92) and optometrists (AUC = 0.93) | Training dataset: 12,098 images from 5616 patients. Test dataset: 1000 images from 500 patients |
| Abidin et al. [26] | Fundus Images | Optic disc and cup segmentation | EFPS-Net | Proposed model exhibits superior computational performance with only 2.63 million parameters | |
| Girard et al. [27] | Fundus Images | Optic disc and cup segmentation | U-Net model | AGS performs better than CDR (AUC = 98.2%) | Datasets: INTERNAL (n = 350), RIM-ONE (n = 159), aRIGA650 (n = 650) |
| Li et al. [28] | Fundus Images | Referable GON | Inception-v3 | DL system achieved AUC of 0.986 with sensitivity of 95.6% and specificity of 92.0% | 48,116 fundus photographs |
| Nam et al. [29] | Fundus Images | OD classification model | CNNs: VGG16, VGG19, DenseNet121 | Non-tilted discs’ AUC: 0.98, 0.99, and 0.98; tilted discs’ AUC: 0.92, 0.92, and 0.93 (for VGG16, VGG19, and DenseNet121, respectively) | 2507 fundus photographs |
| Thakur et al. [30] | Fundus Images | Glaucoma development prediction | MobileNetV2 | Glaucoma development prediction 4 to 7 years before disease onset, AUC: 0.77. Model accuracy in predicting glaucoma development 1 to 3 years before disease onset: 0.88. Model accuracy in detecting glaucoma after onset: 0.95 | 66,721 fundus photographs |
| Thomas et al. [31] | Meta-analysis | Tele-glaucoma service | Estimates of diagnostic accuracy, odds ratio, and relative percentage of glaucoma cases detected | Tele-glaucoma can accurately discriminate glaucoma with sensitivity of 83.2% and specificity of 79% | 45 studies |
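
Most rows in the table above summarise performance as sensitivity, specificity, and AUC. As a reminder of what those figures measure, the following is a minimal Python sketch under stated assumptions: the function names, the confusion-matrix counts, and the model scores are all hypothetical and are not taken from any of the cited studies. The AUC is computed by its pairwise definition (the probability that a randomly chosen diseased eye receives a higher score than a randomly chosen healthy eye), which is simple but not how large studies would compute it in practice.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> Tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    sensitivity = tp / (tp + fn)  # share of diseased eyes flagged as diseased
    specificity = tn / (tn + fp)  # share of healthy eyes flagged as healthy
    return sensitivity, specificity


def pairwise_auc(scores_diseased: Sequence[float], scores_healthy: Sequence[float]) -> float:
    """AUC as the fraction of (diseased, healthy) pairs ranked correctly; ties count 0.5."""
    if not scores_diseased or not scores_healthy:
        raise ValueError("both groups must be non-empty")
    wins = 0.0
    for d in scores_diseased:
        for h in scores_healthy:
            if d > h:
                wins += 1.0
            elif d == h:
                wins += 0.5
    return wins / (len(scores_diseased) * len(scores_healthy))


# Hypothetical counts and scores, for illustration only.
sens, spec = sensitivity_specificity(tp=90, fn=10, tn=160, fp=40)
auc = pairwise_auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2])
print(f"sensitivity={sens:.3f} specificity={spec:.3f} AUC={auc:.3f}")
```

Sensitivity and specificity depend on the decision threshold chosen for the model, whereas the AUC summarises ranking quality across all thresholds, which is one reason the studies above often report both.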

| Authors | Modality Analyzed | Aims | Methods | Results | Dataset |
|---|---|---|---|---|---|
| Brigatti et al. [44] | Visual Field | Determine VF progression | Back-propagation neural network | Neural network sensitivity was 73%, and specificity was 88% | 233 series of Octopus G1 VF |
| Sample et al. [45] | Visual Field | Predict development of abnormal VF at follow-up in OHT | Classifiers included two types of support vector machines, constrained MoG, and mixture of generalized Gaussian models | ML classifiers predicted abnormality 3.92 years earlier than STATPAC-like methods | 114 VF |
| Wen et al. [46] | Visual Field | Forecast future 24-2 HVFs | Fully Connected, FullBN-3, FullBN-5, FullBN-7, Residual-3, Residual-5, Residual-7, Cascade-3, Cascade-5 | HVF prediction up to 5.5 years ahead, with correlation of 0.92 between MD of predicted and actual future HVF | 32,443 VF |
| Berchuck et al. [47] | Visual Field | Estimate rates of progression and predict future patterns of VF loss in glaucoma | Variational auto-encoder | VAE detected significantly higher proportion of progression than MD at two (25% vs. 9%) and four (35% vs. 15%) years from baseline | 29,161 VF |
| Park et al. [41] | Visual Field | Predict future VF damage | RNN | RNN and OLR showed strong negative correlation with VF MD (Spearman’s rho = −0.734 vs. −0.618); in linear regression analysis, r² was 0.380 vs. 0.215 (RNN vs. OLR) | Training dataset: 1408 eyes. Test dataset: 281 eyes |
| Wang et al. [42] | Visual Field | Detect VF progression | Archetype method | Agreement (kappa) and accuracy of archetype method (0.51 and 0.77) significantly (p < 0.001 for all) outperformed AGIS (0.06 and 0.52), CIGTS (0.24 and 0.59), MD slope (0.21 and 0.59), and PoPLR (0.26 and 0.60) | Development cohort: 11,817 eyes. Clinical test dataset: 397 eyes |
| Yousefi et al. [48] | Visual Field | Detect glaucoma progression | Unsupervised Gaussian mixture model with expectation maximization | ML analysis consistently detects progressing eyes earlier (3.5 years) than other methods; ML detects more slowly progressing eyes than other methods (5.1 years) | VF of 1421 subjects |
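
Wang et al. [42] in the table above compare progression-detection methods by agreement (kappa) and accuracy against a clinical reference. As a hedged illustration of how those two numbers differ, the sketch below computes raw accuracy and Cohen's kappa for two raters. The function name and the label sequences are hypothetical, and the use of Cohen's two-rater kappa is an assumption made for illustration; the table does not state which kappa variant the study used.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def accuracy_and_kappa(reference: Sequence[Hashable], method: Sequence[Hashable]) -> Tuple[float, float]:
    """Return (observed agreement, Cohen's kappa) for two equally long label sequences."""
    if len(reference) != len(method) or not reference:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(reference)
    # Observed agreement: fraction of eyes on which both raters give the same label.
    p_observed = sum(r == m for r, m in zip(reference, method)) / n
    # Chance agreement: expected overlap if the raters labelled independently
    # while keeping their own label frequencies.
    ref_counts, meth_counts = Counter(reference), Counter(method)
    labels = set(ref_counts) | set(meth_counts)
    p_chance = sum(ref_counts[k] * meth_counts[k] for k in labels) / (n * n)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when both raters use a single identical label")
    kappa = (p_observed - p_chance) / (1.0 - p_chance)
    return p_observed, kappa


# Hypothetical labels: 1 = progressing eye, 0 = stable eye.
clinician = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]
algorithm = [1, 1, 0, 0, 0, 0, 1, 1, 0, 0]
acc, kappa = accuracy_and_kappa(clinician, algorithm)
print(f"accuracy={acc:.2f} kappa={kappa:.3f}")
```

Kappa discounts the agreement two raters would reach by chance alone, so it is always at or below the raw accuracy when chance agreement is positive; this is why a method can show moderate accuracy but near-zero kappa.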

| Authors | Modality Analyzed | Aims | Methods | Results | Dataset |
|---|---|---|---|---|---|
| Devalla et al. [53] | SD-OCT | Digitally stain OCT images of ONH | Custom DL | Dice coefficient (0.84), sensitivity (92%), specificity (99%), intersection over union (0.89 ± 0.03), and accuracy (94%) | 100 eyes |
| Ran et al. [54] | SD-OCT | Detect GON | Residual network | AUROCs of 0.89–0.89, sensitivities of 78–90%, specificities of 79–86%, and accuracies of 80–86% | 4877 SD-OCT volumes of optic disc cube |
| García et al. [55] | SD-OCT | Glaucoma prediction | LSTM network | In prediction stage: AUC > 0.93 in both primary and external test sets. Combination of CNN and LSTM networks achieves AUC = 0.88 | 176 healthy and 144 glaucomatous SD-OCT volumes |
| Akter et al. [56] | SD-OCT | Diagnostic glaucoma assessment | CNN architecture | DL model trained from optimal features: AUC = 0.98 and accuracy of 97% on validation data and 96% on test data. DL model used in pilot study: AUC = 0.99 and accuracy of 98.6% | 200 subjects, consisting of 100 healthy subjects and 100 subjects with glaucoma |
| Xu et al. [57] | AS-OCT | Identify glaucoma type | Image processing and machine learning-based framework | Proposed method only requires 0.26 s per image; framework achieves 0.92 AUC value and 84.0% balanced accuracy at 85% specificity | 2048 images |
| Niwas et al. [58] | AS-OCT | Complex disease diagnosis | L-score and MRMR algorithms, AdaBoost | Unsupervised L-score method achieved classification accuracy of 86.6% using 40 features. Supervised MRMR method reached accuracy of 79.3% with 40 features and 84.3% with smaller set of 10 features | 84 features and 156 samples |
| Park et al. [59] | OCTA | Diagnostic performance of macular vessel density and GCIPLT | Multilayer neural network | When incorporated into macular GCIPL using artificial neural network, combined parameter showed better performance than macular GCIPL alone | 173 subjects |
| Miguel et al. [60] | OCTA | Assist in glaucoma diagnosis | Custom DL software | AI system successfully discriminated glaucoma from healthy eyes based on OCT-A scans with sensitivity of 99.5%, specificity of 92.5%, and AUC of 85% | 262 patients |
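
The segmentation result of Devalla et al. [53] in the table above is reported as a Dice coefficient and an intersection over union (IoU). The following minimal Python sketch shows how these two overlap measures are defined for a pair of binary masks. The function name and the toy masks are hypothetical and purely illustrative; published figures are typically averaged over many images and tissue classes, which this sketch does not attempt to reproduce.

```python
from typing import Sequence, Tuple


def dice_and_iou(predicted: Sequence[int], truth: Sequence[int]) -> Tuple[float, float]:
    """Return (Dice, IoU) for two flattened binary masks of equal length."""
    if len(predicted) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(predicted, truth) if p and t)
    pred_area = sum(1 for p in predicted if p)
    truth_area = sum(1 for t in truth if t)
    union = pred_area + truth_area - intersection
    if union == 0:
        raise ValueError("both masks are empty; overlap is undefined")
    dice = 2.0 * intersection / (pred_area + truth_area)  # overlap vs. mean mask size
    iou = intersection / union                            # overlap vs. combined area
    return dice, iou


# Hypothetical six-pixel masks: 1 = pixel labelled as the tissue of interest.
predicted_mask = [1, 1, 1, 0, 0, 0]
manual_mask = [0, 1, 1, 1, 0, 0]
dice, iou = dice_and_iou(predicted_mask, manual_mask)
print(f"Dice={dice:.3f} IoU={iou:.3f}")
```

For a single pair of masks the two measures are linked by Dice = 2·IoU / (1 + IoU), so they rank segmentations identically and differ only in scale.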

| Authors | Modality Analyzed | Aims | Methods | Results | Dataset |
|---|---|---|---|---|---|
| Miri et al. [68] | Multimodal | Optic disc and cup boundary segmentation | One unimodal and two multimodal machine-learning graph-based approaches for automated segmentation of optic disc and cup | Multimodal approaches outperform the unimodal approach in segmenting the optic disc and cup | 25 multimodal image pairs from 25 subjects |
| Hussain et al. [69] | Multimodal | Predict glaucoma progression | Multimodal DL model that combines CNN with LSTM network | The proposed model achieved an AUC of 0.83 for predicting progression six months earlier | OCT images, VF values, and demographic and clinical data from 86 glaucoma patients over five visits spanning 12 months |
| Thompson et al. [70] | Multimodal | Quantify glaucomatous neuroretinal damage | DL CNN | AUCs for discriminating glaucomatous from healthy eyes with the DL predictions and actual SD-OCT global BMO-MRW measurements were 0.94 and 0.93, respectively (p = 0.587) | 9282 pairs of optic disc photographs and SD-OCT optic nerve head scans |
| Xiong et al. [71] | Multimodal | Detect GON | FusionNet, based on bimodal input of paired VF and OCT data | FusionNet achieved an AUC of 0.95 | 2463 pairs of VF and OCT images from 1083 patients |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Martucci, A.; Gallo Afflitto, G.; Pocobelli, G.; Aiello, F.; Mancino, R.; Nucci, C. Lights and Shadows on Artificial Intelligence in Glaucoma: Transforming Screening, Monitoring, and Prognosis. J. Clin. Med. 2025, 14, 2139. https://doi.org/10.3390/jcm14072139
