Assessing ChatGPT’s Role in Sarcopenia and Nutrition: Insights from a Descriptive Study on AI-Driven Solutions

Abstract

1. Background

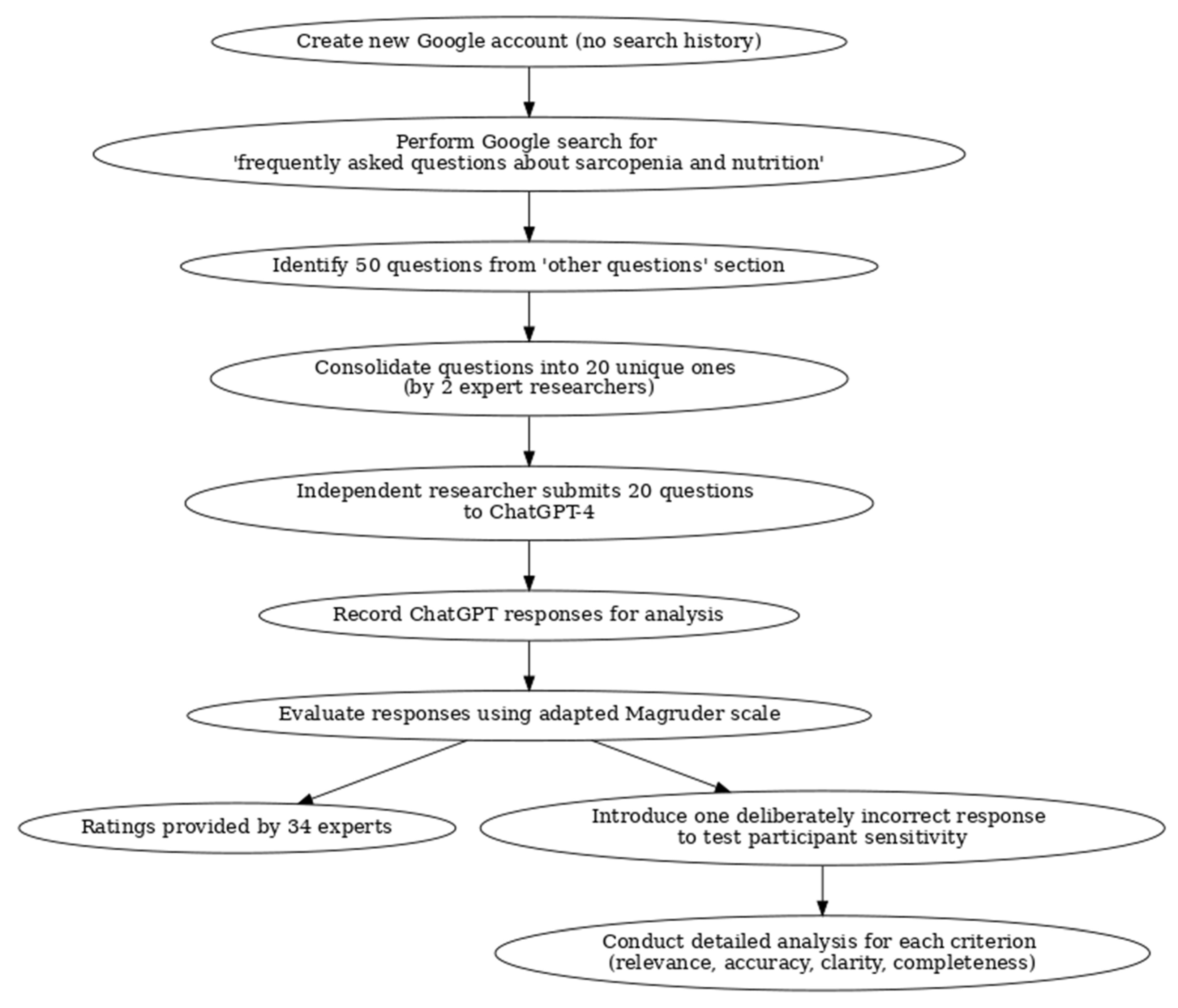

2. Methods

3. Statistical Analysis

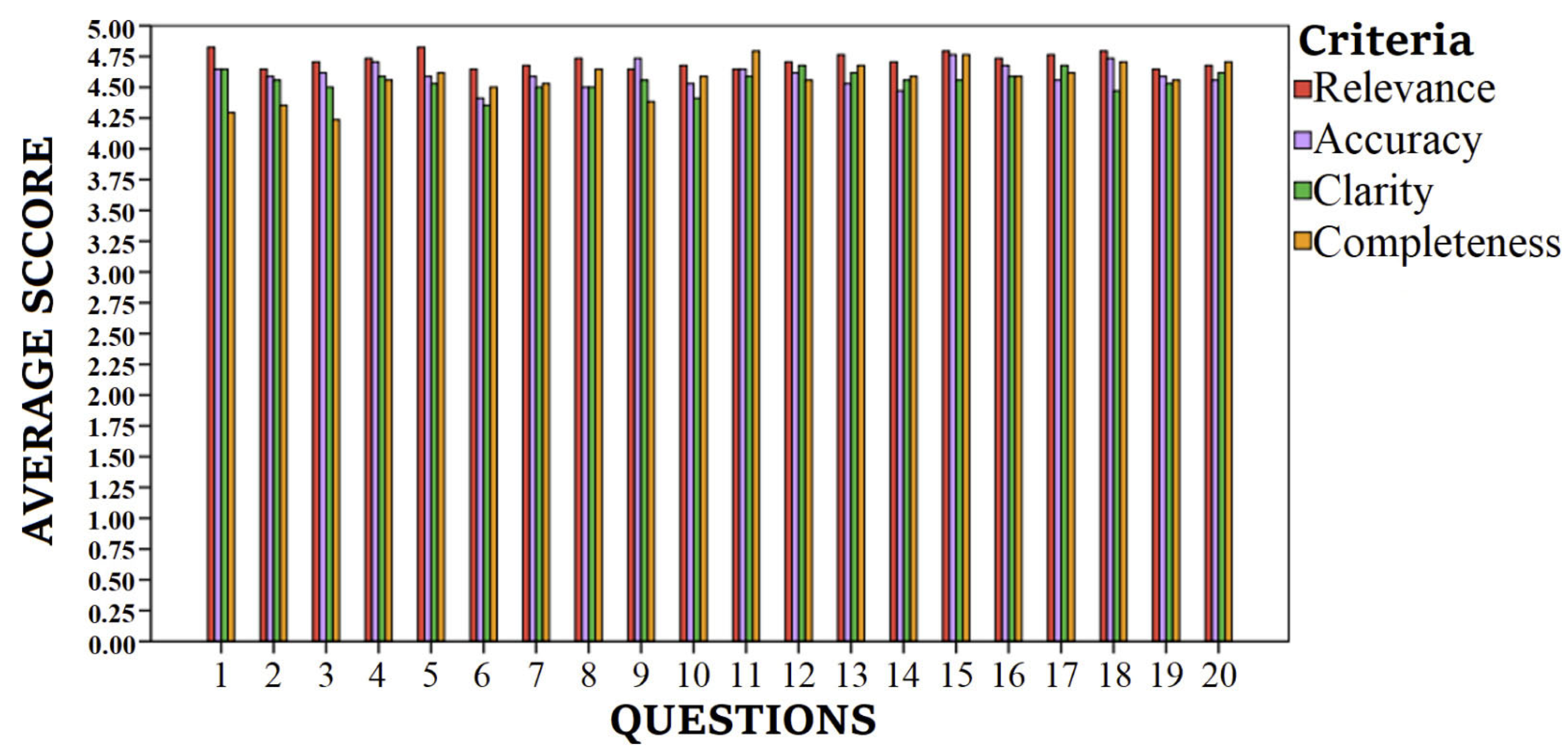

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Question | Average Score for All Criteria | Median (Min–Max) for All Criteria | Rate of Respondents Giving a Rating of ≥4 for All Criteria, % |
|---|---|---|---|---|
| Q1 | What is sarcopenia and how is it related to nutrition? | 4.60 | 5.0 (2.0–5.0) | 97.06 |
| Q2 | Which nutrients should I prioritize to increase or maintain my muscle mass (proteins, vitamins, minerals)? | 4.54 | 5.0 (2.0–5.0) | 97.06 |
| Q3 | How much daily protein do I need, and from which foods should I obtain these proteins? | 4.51 | 5.0 (2.0–5.0) | 89.71 |
| Q4 | Are plant-based protein sources sufficient to reduce the risk of sarcopenia, or should I necessarily consume animal proteins? | 4.65 | 5.0 (3.0–5.0) | 94.85 |
| Q5 | How frequently should I consume protein sources such as eggs, fish, chicken, or red meat? | 4.64 | 5.0 (3.0–5.0) | 95.59 |
| Q6 | Are protein powders or amino acid supplements recommended for sarcopenia? When and how should I use them? | 4.48 | 5.0 (2.0–5.0) | 90.44 |
| Q7 | Do vitamin D, calcium, or omega-3 fatty acids help maintain muscle mass? From which foods can I obtain these nutrients? | 4.57 | 5.0 (3.0–5.0) | 99.26 |
| Q8 | How should I plan the number of meals and the intervals between meals to prevent or slow down sarcopenia? | 4.60 | 5.0 (3.0–5.0) | 94.85 |
| Q9 | In cases of overweight or obesity, how can I lose weight healthily while preserving muscle mass? | 4.58 | 5.0 (2.0–5.0) | 95.59 |
| Q10 | What are the nutritional differences in age groups at high risk of sarcopenia (e.g., over 65 years old)? | 4.55 | 5.0 (3.0–5.0) | 94.85 |
| Q11 | What type of diet should I adopt to preserve my muscles? For example, does a Mediterranean diet benefit sarcopenia? | 4.67 | 5.0 (3.0–5.0) | 97.79 |
| Q12 | How do lifestyle factors (exercise, sleep, stress management) affect sarcopenia, and what is their interaction with nutrition? | 4.64 | 5.0 (3.0–5.0) | 98.53 |
| Q13 | When grocery shopping, which foods should I prioritize to support my muscle mass, and which should I avoid? | 4.65 | 5.0 (2.0–5.0) | 96.32 |
| Q14 | Can dietary supplements (e.g., creatine, BCAAs, collagen) contribute to the management of sarcopenia, or should I be cautious about them? | 4.58 | 5.0 (2.0–5.0) | 91.91 |
| Q15 | As a person with sarcopenia, should I seek help from a dietitian or specialist when planning my nutrition, or are general recommendations sufficient? | 4.72 | 5.0 (2.0–5.0) | 96.32 |
| Q16 | Are dietary approaches like intermittent fasting effective in preventing or treating sarcopenia? | 4.65 | 5.0 (2.0–5.0) | 94.85 |
| Q17 | Which vitamins and minerals support muscle health and are important in preventing sarcopenia (e.g., magnesium, zinc, vitamin D)? | 4.65 | 5.0 (3.0–5.0) | 94.85 |
| Q18 | How does appetite loss in elderly individuals affect sarcopenia, and what nutritional strategies can be applied to increase appetite? | 4.68 | 5.0 (3.0–5.0) | 99.26 |
| Q19 | Does limiting carbohydrate intake increase the risk of sarcopenia, or is it more important to focus on protein? | 4.58 | 5.0 (2.0–5.0) | 90.44 |
| Q20 | What types of foods should I consume before or after exercise, and how does this make a difference in the treatment of sarcopenia? | 4.64 | 5.0 (2.0–5.0) | 94.12 |
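The three summary columns in the table above (average score, median with range, and share of ratings of 4 or higher) can be derived from raw Likert ratings along the following lines. This is a minimal sketch: the function name `summarize_ratings`, the `threshold` parameter, and the example ratings are all hypothetical, and the sample values are not data from the study.

```python
from statistics import mean, median


def summarize_ratings(ratings, threshold=4):
    """Summarize a list of 1-5 Likert ratings for one question.

    Returns the mean, median, min, max, and the percentage of ratings
    that are greater than or equal to `threshold`.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    share = 100 * sum(r >= threshold for r in ratings) / len(ratings)
    return {
        "mean": round(mean(ratings), 2),
        "median": float(median(ratings)),
        "min": min(ratings),
        "max": max(ratings),
        "pct_ge_threshold": round(share, 2),
    }


# Hypothetical ratings for illustration only (not taken from the study).
example = [5, 5, 4, 5, 3, 5, 4, 5, 5, 2]
print(summarize_ratings(example))
```

In the table, each question's row pools ratings across all four criteria, so the input list for one question would contain every rater's score on every criterion.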

| | Relevance * | Accuracy * | Clarity * | Completeness * | p ** (Cohen’s d) |
|---|---|---|---|---|---|
| All Questions | | | | | |
| Mean ± SD | 4.72 ± 0.06 | 4.60 ± 0.09 | 4.55 ± 0.08 | 4.56 ± 0.15 | Relevance vs. Accuracy < 0.001 (1.56); Relevance vs. Clarity < 0.001 (2.40); Relevance vs. Completeness = 0.001 (1.40); Accuracy vs. Clarity = 0.054 (0.58); Accuracy vs. Completeness = 0.642 (0.32); Clarity vs. Completeness = 0.586 (0.08) |
| Median (min–max) | 4.71 (4.65–4.82) | 4.58 (4.41–4.76) | 4.55 (4.35–4.68) | 4.58 (4.24–4.79) | |
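The effect sizes in the table above can be approximated from the reported means and standard deviations. This section does not say which variant of Cohen's d was used, so the sketch below assumes the equal-group-size pooled-SD form, d = |m_a − m_b| / sqrt((sd_a² + sd_b²) / 2). Under that assumption the results agree with the tabulated values to within rounding of the inputs. The function names are illustrative.

```python
from math import sqrt

# Mean and SD per criterion, copied from the table above.
REPORTED = {
    "Relevance": (4.72, 0.06),
    "Accuracy": (4.60, 0.09),
    "Clarity": (4.55, 0.08),
    "Completeness": (4.56, 0.15),
}


def cohens_d_pooled(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d using the pooled SD for two groups of equal size.

    Assumption: this is one common variant; the source does not state
    which formula the authors applied.
    """
    pooled_sd = sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return abs(mean_a - mean_b) / pooled_sd


def pairwise_d(stats):
    """Return {(name_a, name_b): d} for every unordered pair of criteria."""
    names = list(stats)
    result = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            result[(a, b)] = cohens_d_pooled(*stats[a], *stats[b])
    return result


for (a, b), d in pairwise_d(REPORTED).items():
    print(f"{a} vs. {b}: d = {d:.2f}")
```

Because the inputs are rounded to two decimals, small differences in the last digit (for example 1.57 against the reported 1.56) are expected.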

| Criterion | ICC Value | 95% CI | p |
|---|---|---|---|
| Relevance | −0.104 | −0.391; −0.287 | 0.684 |
| Accuracy | 0.127 | −0.195; 0.493 | 0.208 |
| Clarity | 0.022 | −0.350; 0.437 | 0.417 |
| Completeness | 0.569 | 0.324; 0.780 | <0.001 |
| Total | 0.416 | 0.261; 0.562 | <0.001 |
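For readers unfamiliar with how an intraclass correlation coefficient is obtained, the sketch below computes one common form from a ratings matrix using two-way ANOVA mean squares: the two-way random-effects, absolute-agreement, single-rater coefficient, often written ICC(2,1). Which ICC model the study used, and how its rows and columns were laid out, is not stated in this section, so both are assumptions here. The function name `icc_2_1` and the example matrix are illustrative and are not the study's data.

```python
def icc_2_1(ratings):
    """Two-way random-effects, absolute-agreement, single-rater ICC.

    `ratings` is a list of rows, one per rated item, each holding one
    score per rater. Assumes a complete matrix with no missing scores.
    """
    n = len(ratings)                      # number of rated items (rows)
    k = len(ratings[0]) if n else 0       # number of raters (columns)
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete matrix with >= 2 rows and >= 2 columns")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for the two-way layout without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Illustrative matrix: 6 rated items (rows) scored by 4 raters (columns).
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(example), 2))  # 0.29
```

In this formula the estimate becomes negative whenever the between-item mean square is smaller than the error mean square, which is one way a value such as the −0.104 reported for Relevance can arise.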

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karataş, Ö.; Demirci, S.; Pota, K.; Tuna, S. Assessing ChatGPT’s Role in Sarcopenia and Nutrition: Insights from a Descriptive Study on AI-Driven Solutions. J. Clin. Med. 2025, 14, 1747. https://doi.org/10.3390/jcm14051747
