Assessing the Recognition of Social Interactions Through Body Motion in the Routine Care of Patients with Post-Lingual Sensorineural Hearing Loss

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

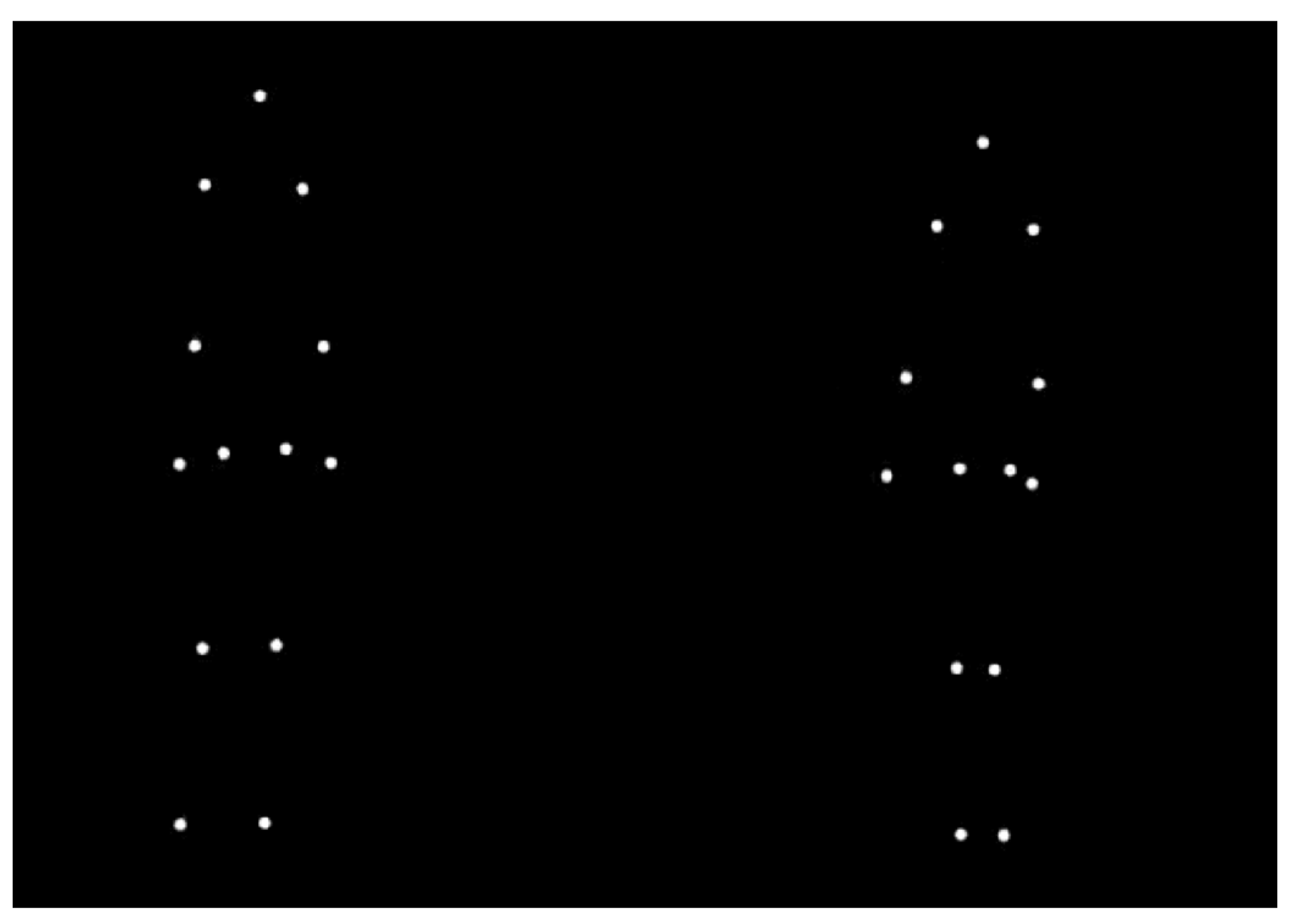

2.2. Materials and Procedure

2.3. Data Analysis

3. Results

3.1. Participants

3.2. Results, Task A

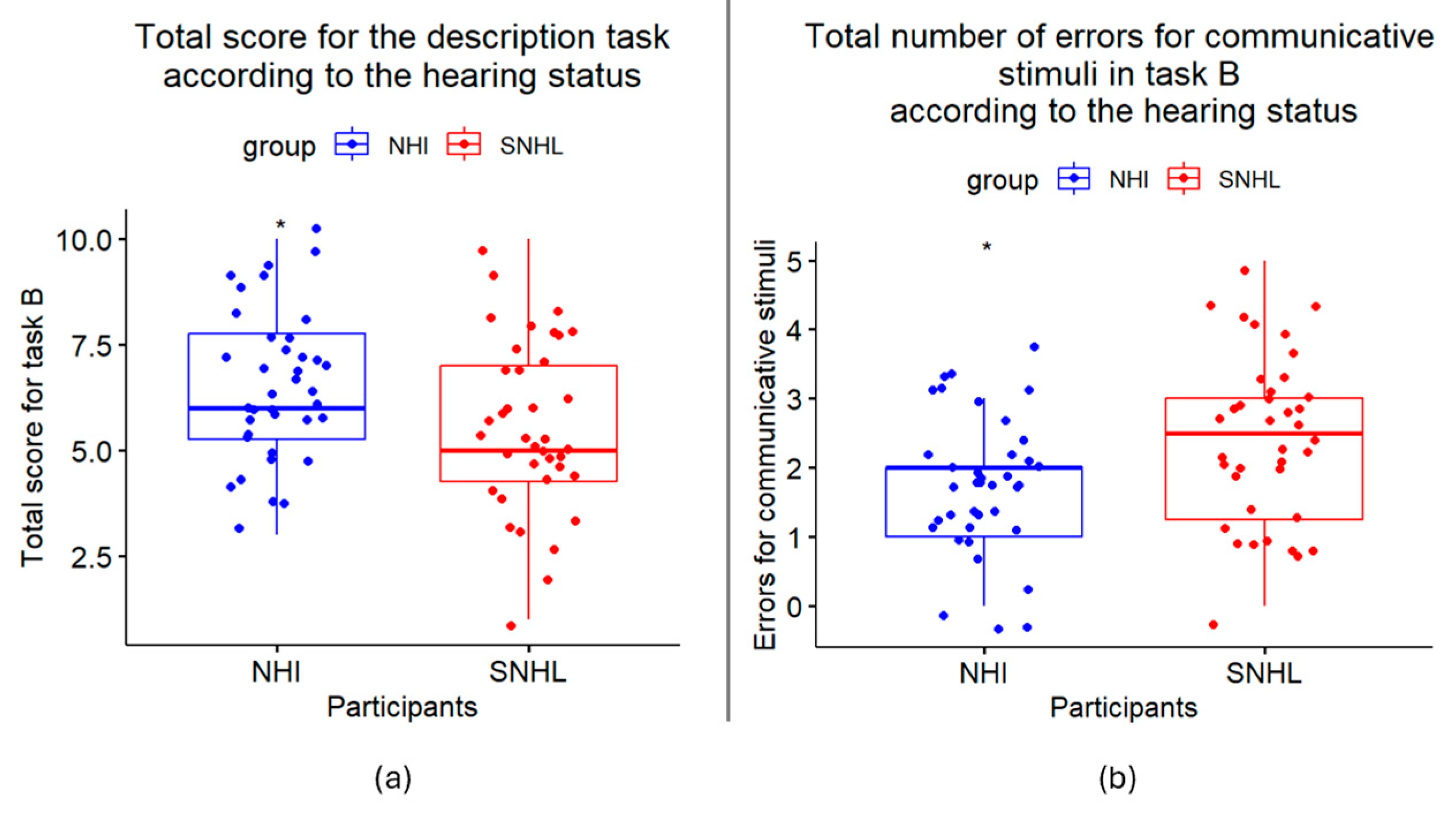

3.3. Results, Task B

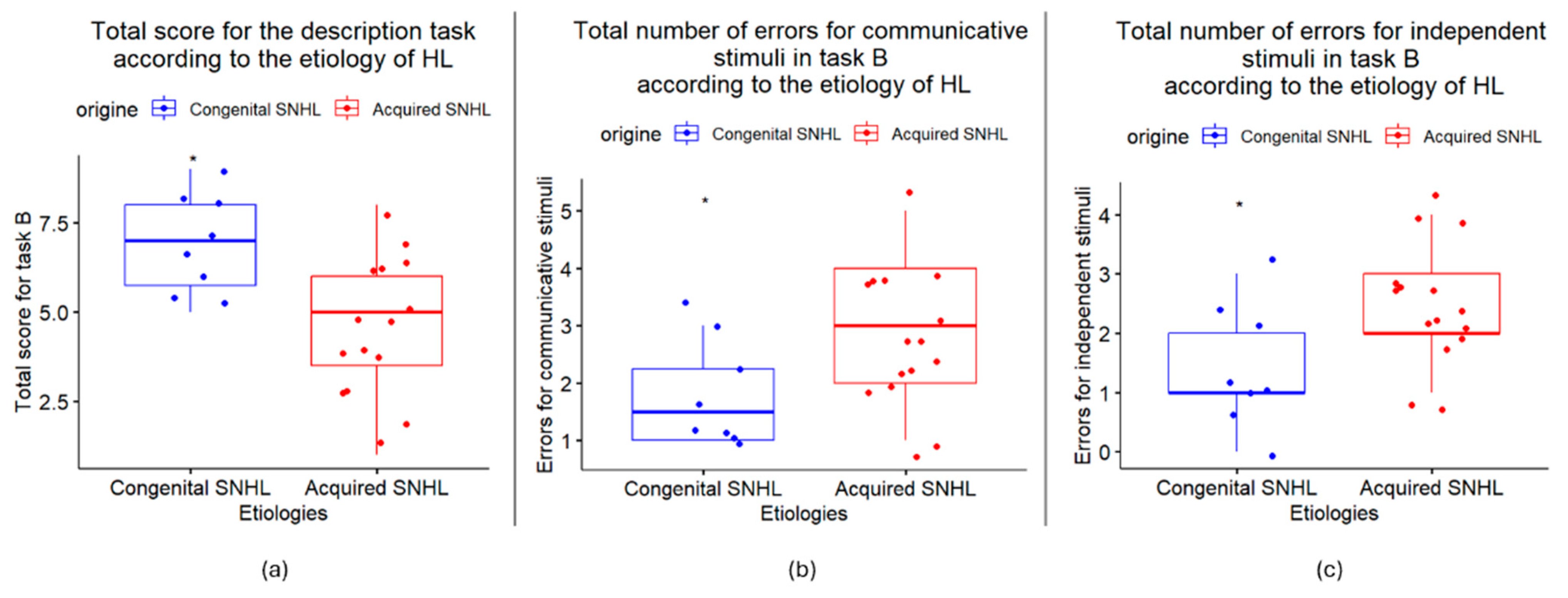

3.4. Exploration of Differences in HL

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristic | | SNHL (n = 38) | NHIs (n = 38) | Total (N = 76) |
|---|---|---|---|---|
| Sex | Male | 12 | 12 | 24 |
| | Female | 26 | 26 | 52 |
| Age (years) | Median (IQR) | 56.5 (30) | 57 (28.75) | 56.5 (30.5) |
| | Q1–Q3 | 39.5–69.5 | 41–69.75 | 39.5–70 |
| | Min.–Max. | 19–82 | 20–82 | 19–82 |
| Education | Primary | 8 | 4 | 12 |
| | Secondary | 8 | 7 | 15 |
| | Higher | 22 | 27 | 49 |

| Characteristic | | Congenital (n = 8) | Acquired (n = 15) |
|---|---|---|---|
| Sex | Male | 1 | 7 |
| | Female | 7 | 8 |
| Age (years) | Median (IQR) | 30 (10) | 67 (19.5) |
| | Q1–Q3 | 28–38 | 54.5–74 |
| | Min.–Max. | 19–52 | 38–82 |
| Education | Primary | 1 | 3 |
| | Secondary | 3 | 0 |
| | Higher | 4 | 12 |
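In the demographic tables, the "Median (IQR)" rows give the interquartile range as a single width, which should equal Q3 − Q1 in the row beneath it, and each category block (sex, education) should sum to the group size n. The Python sketch below checks both properties using only the values reported in the tables; the names `AGE_SUMMARIES`, `COUNTS`, `iqr` and `check_tables` are hypothetical helpers introduced for illustration and are not part of the study's analysis.

```python
import math

# Hypothetical helper data: age summaries copied from the demographic tables,
# stored as (Q1, Q3, reported IQR) per group.
AGE_SUMMARIES = {
    "SNHL": (39.5, 69.5, 30.0),
    "NHIs": (41.0, 69.75, 28.75),
    "Total": (39.5, 70.0, 30.5),
    "Congenital": (28.0, 38.0, 10.0),
    "Acquired": (54.5, 74.0, 19.5),
}

# Group size n and per-category counts from the same tables:
# sex is (male, female); education is (primary, secondary, higher).
COUNTS = {
    "SNHL": (38, {"sex": (12, 26), "education": (8, 8, 22)}),
    "NHIs": (38, {"sex": (12, 26), "education": (4, 7, 27)}),
    "Total": (76, {"sex": (24, 52), "education": (12, 15, 49)}),
    "Congenital": (8, {"sex": (1, 7), "education": (1, 3, 4)}),
    "Acquired": (15, {"sex": (7, 8), "education": (3, 0, 12)}),
}


def iqr(q1: float, q3: float) -> float:
    """Interquartile range: the width between the first and third quartiles."""
    return q3 - q1


def check_tables() -> list[str]:
    """Return a list of inconsistencies; an empty list means the tables agree."""
    problems = []
    # The reported IQR should equal Q3 - Q1 for every group.
    for group, (q1, q3, reported) in AGE_SUMMARIES.items():
        if not math.isclose(iqr(q1, q3), reported):
            problems.append(f"{group}: Q3 - Q1 = {iqr(q1, q3)}, reported IQR {reported}")
    # Each categorical variable's counts should add up to the group size.
    for group, (n, variables) in COUNTS.items():
        for variable, category_counts in variables.items():
            if sum(category_counts) != n:
                problems.append(
                    f"{group}/{variable}: counts sum to {sum(category_counts)}, n = {n}"
                )
    return problems


if __name__ == "__main__":
    issues = check_tables()
    print("all consistent" if not issues else "\n".join(issues))
```

Run as a script, it prints `all consistent` for the reported values. Because it works only from summary statistics, it cannot recompute the quartiles themselves; that would require the participants' raw ages.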

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fauvet, C.; Cantini, L.; Chaudoreille, A.-E.; Cancian, E.; Bonnel, B.; Sérignac, C.; Derreumaux, A.; Robert, P.; Guevara, N.; Gros, A.; et al. Assessing the Recognition of Social Interactions Through Body Motion in the Routine Care of Patients with Post-Lingual Sensorineural Hearing Loss. J. Clin. Med. 2025, 14, 1604. https://doi.org/10.3390/jcm14051604


Chicago/Turabian Style: Fauvet, Cordélia, Léa Cantini, Aude-Eva Chaudoreille, Elisa Cancian, Barbara Bonnel, Chloé Sérignac, Alexandre Derreumaux, Philippe Robert, Nicolas Guevara, Auriane Gros, et al. 2025. "Assessing the Recognition of Social Interactions Through Body Motion in the Routine Care of Patients with Post-Lingual Sensorineural Hearing Loss" Journal of Clinical Medicine 14, no. 5: 1604. https://doi.org/10.3390/jcm14051604

APA Style: Fauvet, C., Cantini, L., Chaudoreille, A.-E., Cancian, E., Bonnel, B., Sérignac, C., Derreumaux, A., Robert, P., Guevara, N., Gros, A., & Manera, V. (2025). Assessing the Recognition of Social Interactions Through Body Motion in the Routine Care of Patients with Post-Lingual Sensorineural Hearing Loss. Journal of Clinical Medicine, 14(5), 1604. https://doi.org/10.3390/jcm14051604