Validated Microsurgical Training Programmes: A Systematic Review of the Current Literature

Abstract

1. Introduction

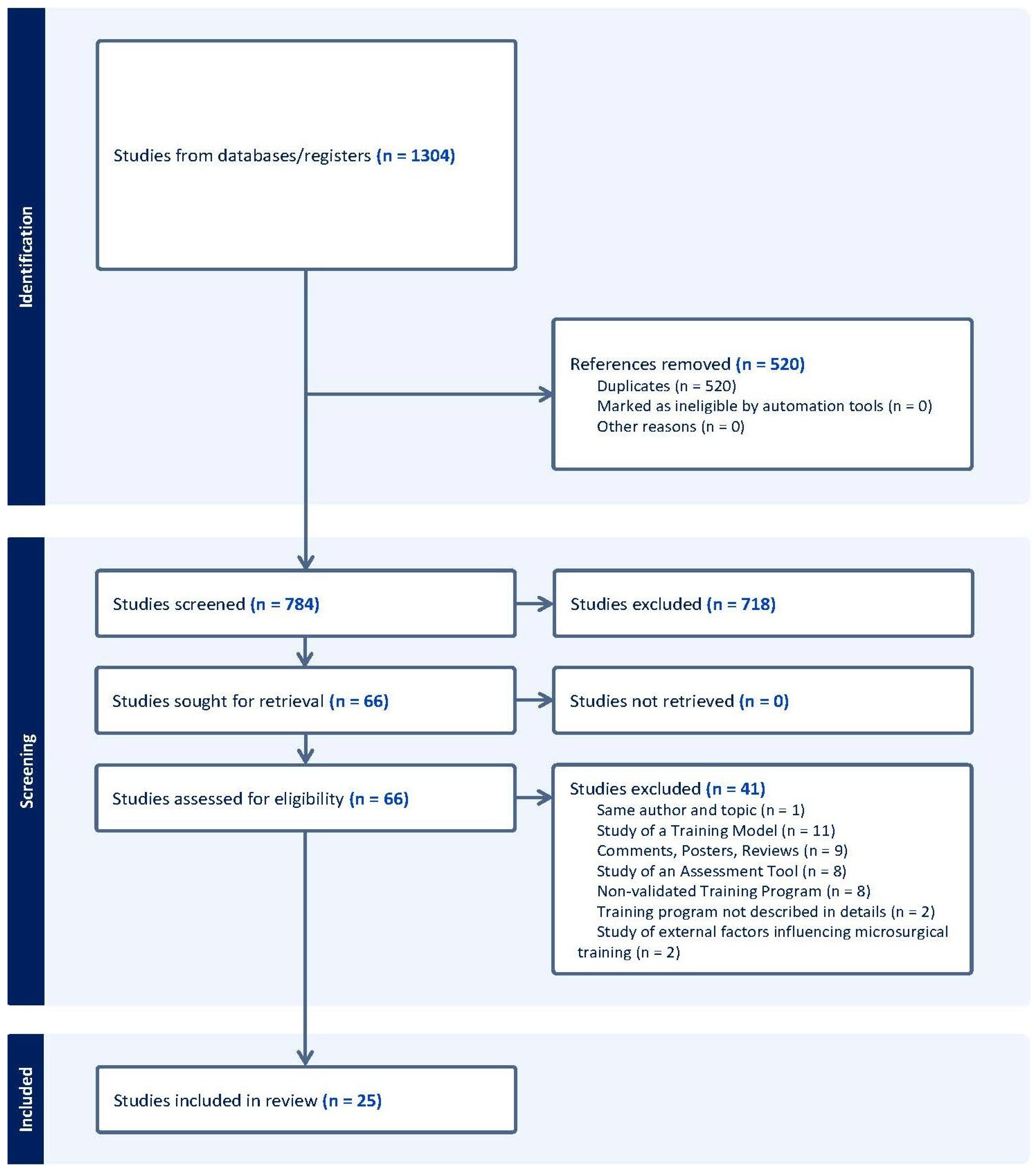

2. Materials and Methods

2.1. Search Strategy

2.2. Selection and Eligibility Criteria (PICOS)

2.3. Data Extraction and Analysis

2.4. Quality Assessment (Risk of Bias) and Validity

3. Results

3.1. Duration and Trainees

3.2. Training Programme Characteristics

3.3. Limitations and Evidence

3.4. Quality Assessment

3.5. Narrative Synthesis (SWiM)

4. Discussion

1. Structured Progression: training should begin with validated bench-top or synthetic models for basic microsurgical suturing, microscope accommodation, and instrument manipulation. It should then progress to ex vivo models as the mainstay of training, with minimal, strategic use of live models at predefined timepoints for skill verification.

2. Validated, Multi-faceted Assessment: a variety of standardised, objective measures (OSATS, GRS, SMaRT scale) should be implemented to capture technical proficiency over time and across the different facets of the learning curve. Ideally, the programme should also confirm long-term skill retention.

3. Longitudinal Format: multi-session, spaced curricula rather than a single, time-limited course.

4. Controlled Learning Environment and Expert Feedback: both are essential prerequisites for ensuring that progression takes place in a controlled setting. Each participant should follow a tailored training regimen.

5. Integration of Criterion/Predictive Validity: measurable outcomes in clinical cases should be incorporated as the ultimate objectives, both to monitor progression and to provide closed-loop feedback for the tailored training regimen.

6. An Objective Assessment Tool: a tool that reliably assesses progression across the stages of the programme, with predefined checkpoints that determine the optimal moment for advancement. This tool should be used in conjunction with continuous expert feedback.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALI | Anastomosis Lapse Index |
| CoTeMi | Cours de Techniques Microchirurgicales instrument |
| CT | Chicken thigh |
| E-to-E (EtoE) | End-to-End (anastomosis) |
| E-to-S (EtoS) | End-to-Side (anastomosis) |
| GRADE | Grading of Recommendations, Assessment, Development and Evaluations |
| GRS | Global Rating Scale |
| IQR | Interquartile Range |
| LoE | Level of Evidence |
| LoR | Level of Recommendation |
| MRCP | Microvascular Research Center Training Program |
| NASA TLX | National Aeronautics and Space Administration Task Load Index |
| OCEBM | Oxford Centre for Evidence-Based Medicine (Levels of Evidence) |
| OSATS | Objective Structured Assessment of Technical Skills |
| PGY | Postgraduate Year (e.g., PGY-1, PGY-2, PGY-4, PGY-5, PGY-6) |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PTFE | Polytetrafluoroethylene |
| SAMS | Structured Assessment of Microsurgery Skills |
| SD | Standard Deviation |
| SIEA | Superficial Inferior Epigastric Artery |
| SMaRT | Stanford Microsurgery and Resident Training (scale) |
| SOFI | Swedish Occupational Fatigue Inventory |
| STAI-6 | Six-item State-Trait Anxiety Inventory |
| SURGTLX (SURG-TLX) | Surgery Task Load Index |
| SWiM | Synthesis Without Meta-analysis |
| TSS | Task-Specific Score |
| UWOMSA | University of Western Ontario Microsurgical Skills Acquisition/Assessment |

References

1. Lascar, I.; Totir, D.; Cinca, A.; Cortan, S.; Stefanescu, A.; Bratianu, R.; Udrescu, G.; Calcaianu, N.; Zamfirescu, D.G. Training Program and Learning Curve in Experimental Microsurgery During the Residency in Plastic Surgery. Microsurgery 2007, 27, 263–267.
2. Khachatryan, A.; Arakelyan, G.; Tevosyan, A.; Nazarian, D.; Kovaleva, D.; Arutyunyan, G.; Gabriyanchik, M.S.; Dzhuganova, V.S.; Yushkevich, A.S. How to Organize Affordable Microsurgical Training Laboratory: Optimal Price-Quality Solution. Plast. Reconstr. Surg. Glob. Open 2021, 9, e3791.
3. European Union. Directive 2003/88/EC of the European Parliament and of the Council of 4 November 2003 Concerning Certain Aspects of the Organisation of Working Time. Off. J. Eur. Union 2003, L299, 9–19.
4. Hamdorf, J.M.; Hall, J.C. Acquiring Surgical Skills. Br. J. Surg. 2000, 87, 28–37.
5. Wanzel, K.R.; Ward, M.; Reznick, R.K. Teaching the Surgical Craft: From Selection to Certification. Curr. Probl. Surg. 2002, 39, 583–659.
6. Vajsbaher, T.; Schultheis, H.; Francis, N.K. Spatial Cognition in Minimally Invasive Surgery: A Systematic Review. BMC Surg. 2018, 18, 94.
7. Chan, W.; Matteucci, P.; Southern, S.J. Validation of Microsurgical Models in Microsurgery Training and Competence: A Review. Microsurgery 2007, 27, 494–499.
8. Ilie, V.G.; Ilie, V.I.; Dobreanu, C.; Ghetu, N.; Luchian, S.; Pieptu, D. Training of Microsurgical Skills on Nonliving Models. Microsurgery 2008, 28, 571–577.
9. Volovici, V.; Dammers, R.; Lawton, M.T.; Dirven, C.M.F.; Ketelaar, T.; Lanzino, G.; Zamfirescu, D.G. The Flower Petal Training System in Microsurgery: Validation of a Training Model Using a Randomized Controlled Trial. Ann. Plast. Surg. 2019, 83, 697–701.
10. Lahiri, A.; Muttath, S.S.; Yusoff, S.K.; Chong, A.K.S. Maintaining Effective Microsurgery Training with Reduced Utilisation of Live Rats. J. Hand Surg. Asian Pac. Vol. 2020, 25, 206–213.
11. Schöffl, H.; Froschauer, S.M.; Dunst, K.M.; Hager, D.; Kwasny, O.; Huemer, G.M. Strategies for the Reduction of Live Animal Use in Microsurgical Training and Education. Altern. Lab. Anim. 2008, 36, 153–160.
12. Meier, A.H.; Rawn, C.L.; Krummel, T.M. Virtual Reality: Surgical Application—Challenge for the New Millennium. J. Am. Coll. Surg. 2001, 192, 372–384.
13. Davis, G.L.; Abebe, M.W.; Vyas, R.M.; Rohde, C.H.; Coriddi, M.R.; Pusic, A.L.; Gosman, A.A. Results of a Pilot Virtual Microsurgery Course for Plastic Surgeons in LMICs. Plast. Reconstr. Surg. Glob. Open 2024, 12, e5582.
14. El Ahmadieh, T.Y.; Aoun, S.G.; El Tecle, N.E.; Nanney, A.D.; Daou, M.R.; Harrop, J.; Batjer, H.H.; Bendok, B.R. A Didactic and Hands-On Module Enhances Resident Microsurgical Knowledge and Technical Skill. Neurosurgery 2013, 73 (Suppl. S1), S51–S56.
15. Satterwhite, T.; Son, J.; Carey, J.; Zeidler, K.; Bari, S.; Gurtner, G.; Chang, J.; Lee, G.K. Microsurgery Education in Residency Training: Validating an Online Curriculum. Ann. Plast. Surg. 2012, 68, 410–414.
16. Kern, D.E. Curriculum Development for Medical Education: A Six-Step Approach, 2nd ed.; Johns Hopkins University Press: Baltimore, MD, USA, 2009; ISBN 978-0801893667.
17. Sakamoto, Y.; Okamoto, S.; Shimizu, K.; Araki, Y.; Hirakawa, A.; Wakabayashi, T. Hands-On Simulation Versus Traditional Video-Learning in Teaching Microsurgery Technique. Neurol. Med. Chir. 2017, 57, 238–245.
18. Rajan, S.; Sathyan, R.; Sreelesh, L.S.; Kallerey, A.A.; Antharjanam, A.; Sumitha, R.; Sundar, J.; John, R.J.; Soumya, S. Objective Assessment of Microsurgery Competency—In Search of a Validated Tool. Indian J. Plast. Surg. 2019, 52, 216–221.
19. OCEBM Levels of Evidence Working Group. The Oxford 2011 Levels of Evidence. Oxford Centre for Evidence-Based Medicine. 2011. Available online: http://www.cebm.net/index.aspx?o=5653 (accessed on 8 September 2025).
20. Reed, D.A.; Cook, D.A.; Beckman, T.J.; Levine, R.B.; Kern, D.E.; Wright, S.M. Association Between Funding and Quality of Published Medical Education Research. JAMA 2007, 298, 1002–1009.
21. Carmines, E.G.; Zeller, R.A. Reliability and Validity Assessment; Quantitative Applications in the Social Sciences, 17; Sage Publications: Beverly Hills, CA, USA, 1979.
22. Campbell, M.; McKenzie, J.E.; Sowden, A.; Katikireddi, S.V.; Brennan, S.E.; Ellis, S.; Hartmann-Boyce, J.; Ryan, R.; Shepperd, S.; Thomas, J.; et al. Synthesis without meta-analysis (SWiM) in systematic reviews: Reporting guideline. BMJ 2020, 368, l6890.
23. Berretti, G.; Colletti, G.; Parrinello, G.; Iavarone, A.; Vannucchi, P.; Deganello, A. Pilot Study on Microvascular Anastomosis: Performance and Future Educational Prospects. Acta Otorhinolaryngol. Ital. 2018, 38, 304–309.
24. Bigorre, N.; Saint-Cast, Y.; Cambon-Binder, A.; Gomez, M.; Petit, A.; Jeudy, J.; Fournier, H.D. Fast-Track Teaching in Microsurgery. Orthop. Traumatol. Surg. Res. 2020, 106, 725–729.
25. Juratli, M.A.; Becker, F.; Palmes, D.; Stöppeler, S.; Bahde, R.; Kebschull, L.; Spiegel, H.-U.; Hölzen, J.P. Microsurgical Training Course for Clinicians and Scientists: A 10-Year Experience at the Münster University Hospital. BMC Med. Educ. 2021, 21, 295.
26. Le Hanneur, M.; Bouché, P.A.; Vignes, J.L.; Poitevin, N.; Legagneux, J.; Fitoussi, F. Nonliving Versus Living Animal Models for Microvascular Surgery Training: A Randomized Comparative Study. Plast. Reconstr. Surg. 2024, 153, 853–860.
27. Luther, G.; Blazar, P.; Dyer, G. Achieving Microsurgical Competency in Orthopaedic Residents Utilizing a Self-Directed Microvascular Training Curriculum. J. Bone Jt. Surg. Am. 2019, 101, e10.
28. Perez-Abadia, G.; Janko, M.; Pindur, L.; Sauerbier, M.; Barker, J.H.; Joshua, I.; Marzi, I.; Frank, J. Frankfurt Microsurgery Course: The First 175 Trainees. Eur. J. Trauma Emerg. Surg. 2017, 43, 377–386.
29. Perez-Abadia, G.; Pindur, L.; Frank, J.; Marzi, I.; Sauerbier, M.; Carroll, S.M.; Schnapp, L.; Mendez, M.; Sepulveda, S.; Werker, P.; et al. Intensive Hands-On Microsurgery Course Provides a Solid Foundation for Performing Clinical Microvascular Surgery. Eur. J. Trauma Emerg. Surg. 2023, 49, 115–123.
30. Trignano, E.; Fallico, N.; Zingone, G.; Dessy, L.; Campus, G. Microsurgical Training with the Three-Step Approach. J. Reconstr. Microsurg. 2017, 33, 87–91.
31. Chacon, M.A.; Myers, P.L.; Patel, A.U.; Mitchell, D.C.; Langstein, H.N.; Leckenby, J.I. Pretest and Posttest Evaluation of a Longitudinal, Residency-Integrated Microsurgery Course. Ann. Plast. Surg. 2020, 85 (Suppl. S1), S122–S126.
32. Chauhan, R.; Ingersol, C.; Wooden, W.A.; Gordillo, G.M.; Stefanidis, D.; Hassanein, A.H.; Lester, M.E. Fundamentals of Microsurgery: A Novel Simulation Curriculum Based on Validated Laparoscopic Education Approaches. J. Reconstr. Microsurg. 2023, 39, 517–525.
33. Cui, L.; Han, Y.; Liu, X.; Jiao, B.L.; Su, H.G.; Chai, M.; Chen, M.; Shu, J.; Pu, W.W.; He, L.R.; et al. Innovative Clinical Scenario Simulator for Step-by-Step Microsurgical Training. J. Reconstr. Microsurg. 2024, 40, 542–550.
34. Eșanu, V.; Stoia, A.I.; Dindelegan, G.C.; Colosi, H.A.; Dindelegan, M.G.; Volovici, V. Reduction of the Number of Live Animals Used for Microsurgical Skill Acquisition: An Experimental Randomized Noninferiority Trial. J. Reconstr. Microsurg. 2022, 38, 604–612.
35. Geoghegan, L.; Papadopoulos, D.; Petrie, N.; Teo, I.; Papavasiliou, T. Utilization of a 3D Printed Simulation Training Model to Improve Microsurgical Training. Plast. Reconstr. Surg. Glob. Open 2023, 11, e4898.
36. Guerreschi, P.; Qassemyar, A.; Thevenet, J.; Hubert, T.; Fontaine, C.; Duquennoy-Martinot, V. Reducing the Number of Animals Used for Microsurgery Training Programs by Using a Task-Trainer Simulator. Lab. Anim. 2014, 48, 72–77.
37. Jensen, M.A.; Bhandarkar, A.R.; Bauman, M.M.J.; Riviere-Cazaux, C.; Wang, K.; Carlstrom, L.P.; Graffeo, C.S.; Spinner, R.J.; Jensen, M.A. The LazyBox Educational Intervention Trial: Can Longitudinal Practice on a Low-Fidelity Microsurgery Simulator Improve Microsurgical Skills? Cureus 2023, 15, e49675.
38. Ko, J.W.K.; Lorzano, A.; Mirarchi, A.J. Effectiveness of a Microvascular Surgery Training Curriculum for Orthopaedic Surgery Residents. J. Bone Jt. Surg. Am. 2015, 97, 950–955.
39. Komatsu, S.; Yamada, K.; Yamashita, S.; Sugiyama, N.; Tokuyama, E.; Matsumoto, K.; Takara, A.; Kimata, Y. Evaluation of the Microvascular Research Center Training Program for Assessing Microsurgical Skills in Trainee Surgeons. Arch. Plast. Surg. 2013, 40, 214–219.
40. Masud, D.; Haram, N.; Moustaki, M.; Chow, W.; Saour, S.; Mohanna, P.N. Microsurgery Simulation Training System and Set Up: An Essential System to Complement Every Training Programme. J. Plast. Reconstr. Aesthetic Surg. 2017, 70, 893–900.
41. Mattar, T.G.D.M.; Santos, G.B.D.; Telles, J.P.M.; Rezende, M.R.D.; Wei, T.H.; Mattar, R. Structured Evaluation of a Comprehensive Microsurgical Training Program. Clinics 2021, 76, e3194.
42. Onoda, S.; Kimata, Y.; Sugiyama, N.; Tokuyama, E.; Matsumoto, K.; Ota, T.; Thuzar, M.; Onoda, S. Analysis of 10-Year Training Results of Medical Students Using the Microvascular Research Center Training Program. J. Reconstr. Microsurg. 2016, 32, 336–341.
43. Rodriguez, J.R.; Yañez, R.; Cifuentes, I.; Varas, J.; Dagnino, B. Microsurgery Workout: A Novel Simulation Training Curriculum Based on Nonliving Models. Plast. Reconstr. Surg. 2016, 138, 739e–747e.
44. Santyr, B.; Abbass, M.; Chalil, A.; Vivekanandan, A.; Krivosheya, D.; Denning, L.M.; Mattingly, T.K.; Haji, F.A.; Lownie, S.P. High-Fidelity, Simulation-Based Microsurgical Training for Neurosurgical Residents. Neurosurg. Focus 2022, 53, E3.
45. Zambrano-Jerez, L.C.; Ramírez-Blanco, M.A.; Alarcón-Ariza, D.F.; Meléndez-Flórez, G.L.; Pinzón-Mantilla, D.; Rodríguez-Santos, M.A.; Arias-Valero, C.L. Novel and Easy Curriculum with Simulated Models for Microsurgery for Plastic Surgery Residents: Reducing Animal Use. Eur. J. Plast. Surg. 2024, 47, 36.
46. Zyluk, A.; Szlosser, Z.; Puchalski, P. Undergraduate Microsurgical Training: A Preliminary Experience. Handchir. Mikrochir. Plast. Chir. 2019, 51, 477–483.
47. Chan, W.; Niranjan, N.; Ramakrishnan, V. Structured Assessment of Microsurgery Skills in the Clinical Setting. J. Plast. Reconstr. Aesthetic Surg. 2010, 63, 1329–1334.
48. Martin, J.A.; Regehr, G.; Reznick, R.; MacRae, H.; Murnaghan, J.; Hutchison, C.; Brown, M. Objective Structured Assessment of Technical Skill (OSATS) for Surgical Residents. Br. J. Surg. 1997, 84, 273–278.
49. Applebaum, M.A.; Doren, E.L.; Ghanem, A.M.; Myers, S.R.; Harrington, M.; Smith, D.J. Microsurgery Competency During Plastic Surgery Residency: An Objective Skills Assessment of an Integrated Residency Training Program. Eplasty 2018, 18, e25.
50. Moulton, C.A.E.; Dubrowski, A.; MacRae, H.; Graham, B.; Grober, E.; Reznick, R. Teaching Surgical Skills: What Kind of Practice Makes Perfect? A Randomized, Controlled Trial. Ann. Surg. 2006, 244, 400–409.
51. Teo, W.Z.W.; Dong, X.; Yusoff, S.K.B.M.; Das De, S.; Chong, A.K.S. Randomized Controlled Trial Comparing the Effectiveness of Mass and Spaced Learning in Microsurgical Procedures Using Computer-Aided Assessment. Sci. Rep. 2021, 11, 2810.
52. Schoeff, S.; Hernandez, B.; Robinson, D.J.; Jameson, M.J.; Shonka, D.C. Microvascular Anastomosis Simulation Using a Chicken Thigh Model: Interval Versus Massed Training. Laryngoscope 2017, 127, 2490–2494.
53. Mohan, A.T.; Abdelrahman, A.M.; Anding, W.J.; Lowndes, B.R.; Blocker, R.C.; Hallbeck, M.S.; Bakri, K.; Moran, S.L.; Mardini, S. Microsurgical Skills Training Course and Impact on Trainee Confidence and Workload. J. Plast. Reconstr. Aesthetic Surg. 2022, 75, 2135–2142.

| Author | No. | Participant Type | Experience* | Duration & Frequency | Validation Type | Assessment Methods | Training Outcomes | Study Limitations | OCEBM LoE | OCEBM LoR |
|---|---|---|---|---|---|---|---|---|---|---|
| Berretti et al. (2018) [23] | 30 | 10MS, 10SR, 10SS | None | 3–5 h | Face, Content | | | | 4 | C |
| Bigorre et al. (2020) [24] | 10 | 9SR, 1SS | None | 2 weeks, 36 h total | Face, Content, Construct | | | | 4 | C |
| Juratli et al. (2021) [25] | 120 | 96R, 20SS | 90% none | 2.5 days (20 h) | Face, Content, Construct | | | | 4 | C |
| Lahiri et al. (2020) [10] | 58 | 58SR | Minimal | 5 days | Face, Construct, Content | | | | 3 | B |
| Le Hanneur et al. (2024) [26] | 93 | 93SR | None | 3 days, 6 sessions, 3 h/session | Face, Content, Construct | | | | 2 | B |
| Luther et al. (2019) [27] | 25 | 25SR | Clinical only | 5.5 ± 1.4 h | Face, Construct | | | | 2 | B |
| Perez-Abadia et al. (2017, 2023) [28,29] | 624 | 595SS, 29O | 40.5% none, 59.5% + | 5 days, 8 h/day | Face, Content, Construct | | | | 4 | C |
| Trignano et al. (2017) [30] | 20 | 20SR | None | 5 days (~35–40 h total) | Face, Construct, Predictive | | | | 2 | B |

| Author | No. | Participant Type | Experience* | Duration & Frequency | Validation Type | Assessment Methods | Training Outcomes | Study Limitations | OCEBM LoE | OCEBM LoR |
|---|---|---|---|---|---|---|---|---|---|---|
| Chacon et al. (2020) [31] | 5 | 5SR | 4+, 1 none | 7 weeks, 3 h/week | Face, Content, Construct | | | | 4 | C |
| Chauhan et al. (2023) [32] | 7 | 7SR | None | Self-paced | Face, Construct, Content, Predictive | | | | 4 | C |
| Cui et al. (2024) [33] | 20 | 20SR | None | 4 weeks, 40 h/week | Face, Content, Construct, Predictive | | | | 1 | A |
| Esanu et al. (2022) [34] | 9 | 9MS | Minimal | 24 weeks, weekly | Face, Content, Construct | | | | 2 | B |
| Geoghegan et al. (2023) [35] | 10 | 10SR | 4 none, 6+ | 15 h (over 1 month) | Face, Construct | | | | 2 | B |
| Guerreschi et al. (2014) [36] | 14 | 14SR | None | 6.3 h of simulation, 30 half-days overall | Face, Content | | | | 2 | B |
| Jensen et al. (2023) [37] | 24 | 24MS | 3 none, 21+ | 4 weeks (12 sessions, 3/week) | Face | | | | 2 | B |
| Ko et al. (2015) [38] | 12 | 12SR | None | 8 weeks, weekly, 3 h/week | Face, Content, Construct | | | | 3 | C |
| Komatsu et al. (2013) [39] | 22 | 22MS | None | 3 months | Face, Content, Construct | | | | 3 | B |
| Masud et al. (2017) [40] | 37 | 37SR | None | 3 months, weekly | Face, Construct, Content | | | | 2 | B |
| Mattar et al. (2021) [41] | 89 | 13SR, 76SS | None | 16 sessions, 4 h each | Face, Content, Construct | | | | 3 | B |
| Onoda et al. (2016) [42] | 29 | 29MS | None | 3 weeks, 15 days total (7–8 h/day) | Face, Content, Construct | | | | 3 | C |
| Rodriguez et al. (2016) [43] | 10 | 10SS | None | 17 sessions, ~90 min each (7 months total) | Face, Content, Construct | | | | 3 | B |
| Santyr et al. (2022) [44] | 18 | 18SR | Minimal | 17 sessions (2 half-day sessions/month) | Face, Content, Construct, Predictive | | | | 2 | B |
| Zambrano-Jerez et al. (2024) [45] | 11 | 11SR | None | 40 h (13 sessions, 3 h each) | Face, Content, Construct | | | | 3 | B |
| Zyluk et al. (2019) [46] | 12 | 12MS | None | 30 h (15 weeks, 2 h/week) | Face, Content | | | | 4 | C |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Esanu, V.; Carciumaru, T.Z.; Ilie-Ene, A.; Stoia, A.I.; Dindelegan, G.; Dirven, C.M.F.; Meling, T.; Vasilic, D.; Volovici, V. Validated Microsurgical Training Programmes: A Systematic Review of the Current Literature. J. Clin. Med. 2025, 14, 7452. https://doi.org/10.3390/jcm14217452
