Age Estimation from Lateral Cephalograms Using Deep Learning: A Pilot Study from Early Childhood to Older Adults

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Overview and Ethical Approval

2.2. Dataset and Experimental Design

2.3. Network Architecture and Deep Learning

2.4. Performance Metrics

2.5. Statistical Analysis

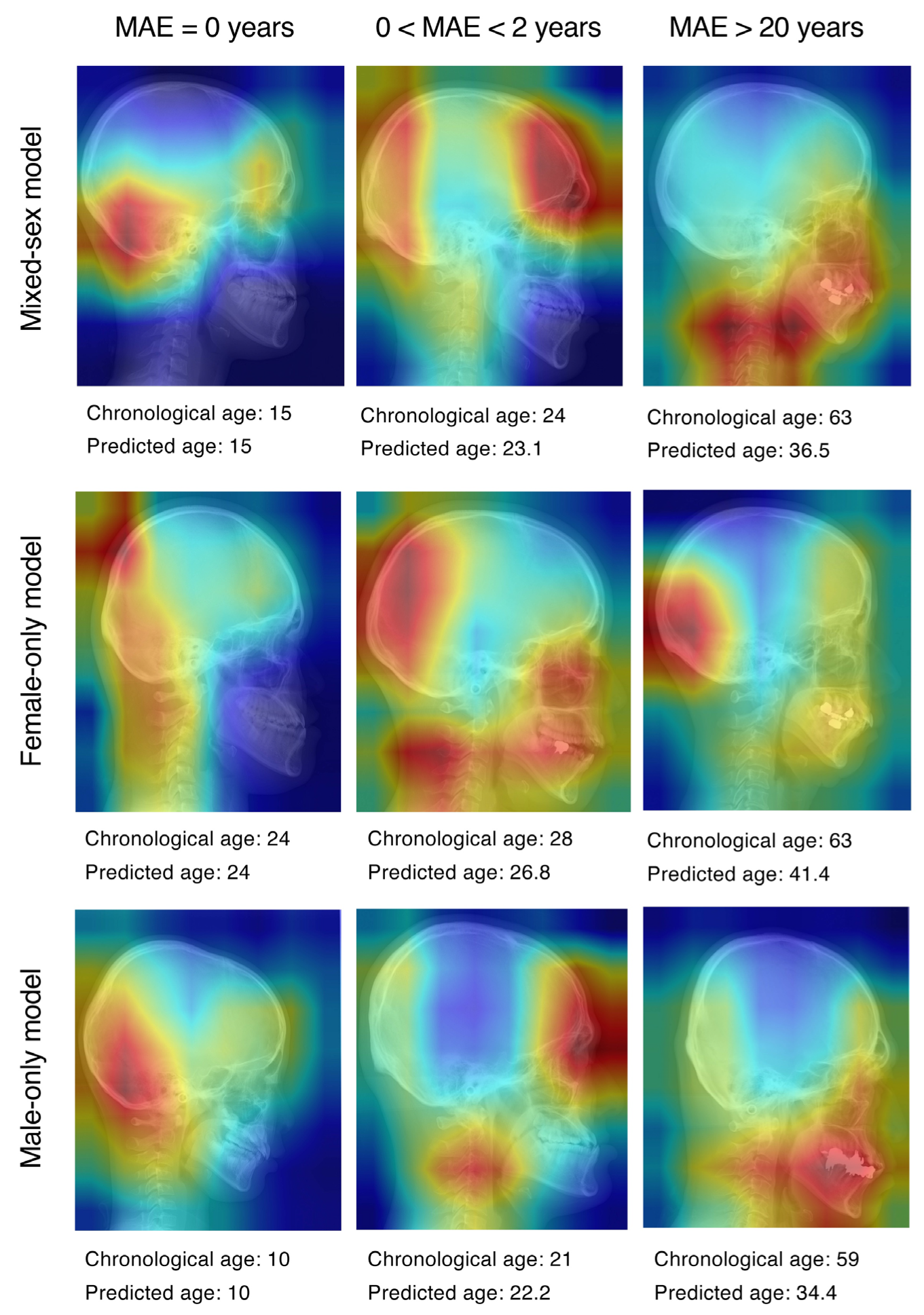

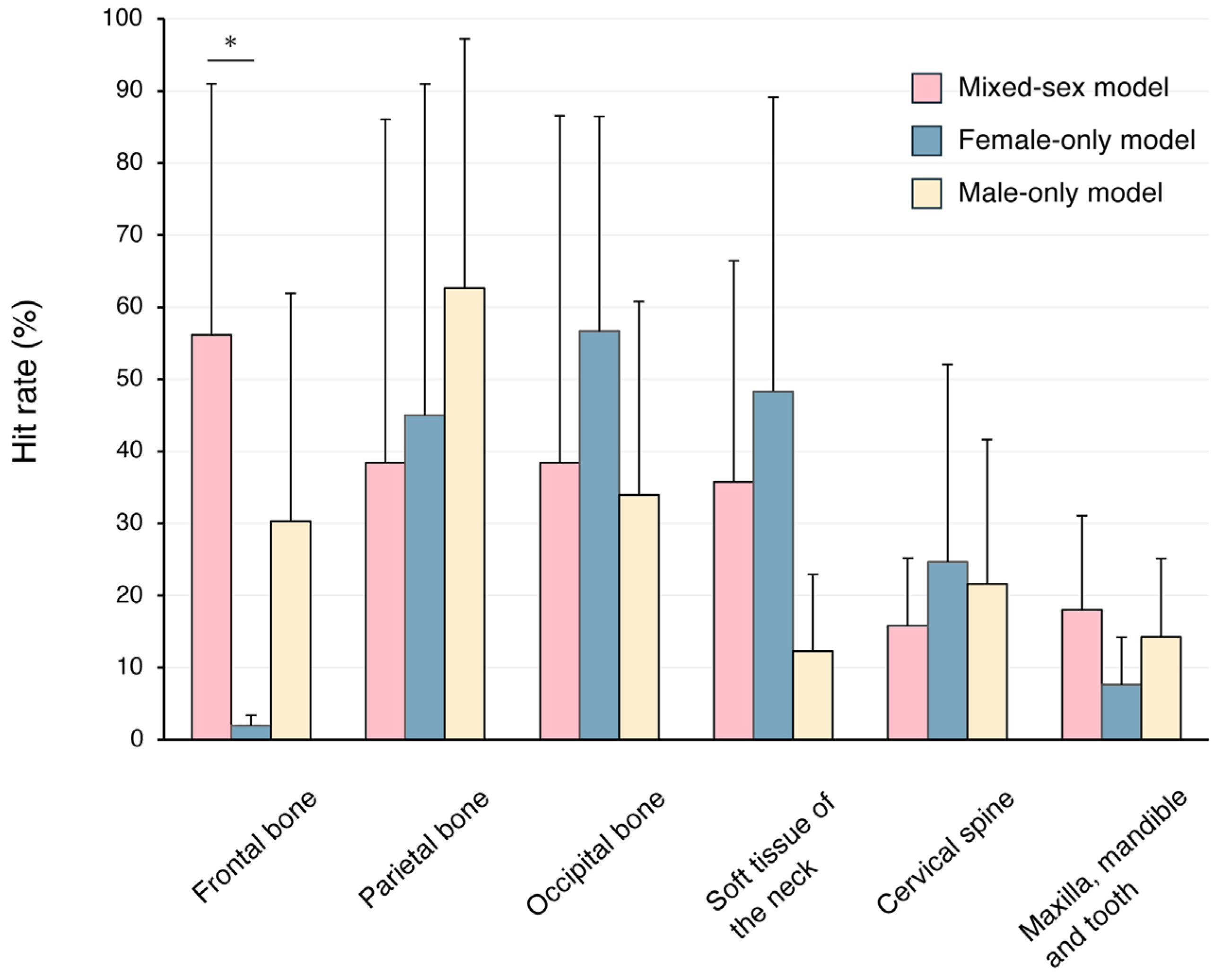

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MAE | mean absolute error |
| Grad-CAM | gradient-weighted class activation mapping |
| CNN | convolutional neural network |


| Sex | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–65 | Total |
|---|---|---|---|---|---|---|---|---|
| Female | 68 | 145 | 50 | 18 | 9 | 7 | 3 | 300 |
| Male | 84 | 121 | 74 | 11 | 3 | 6 | 1 | 300 |
| Total | 152 | 266 | 124 | 29 | 12 | 13 | 4 | 600 |

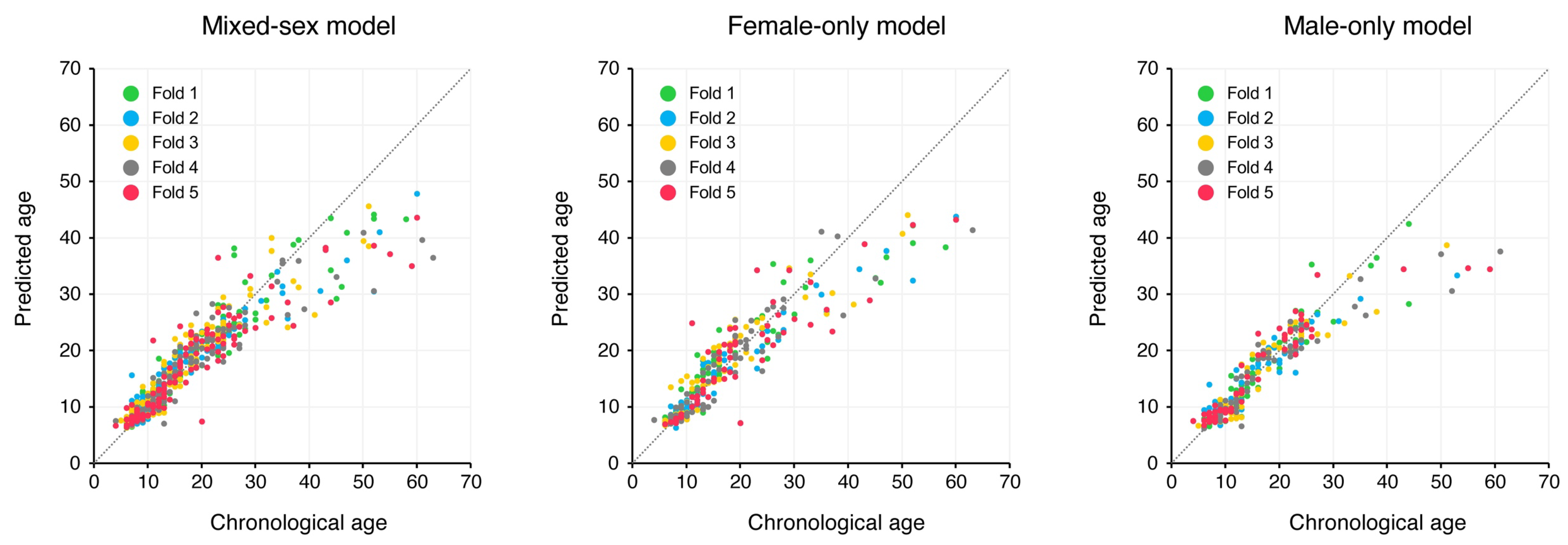

| Metric | Mixed-Sex Model | Female-Only Model | Male-Only Model |
|---|---|---|---|
| MAE | 2.50 ± 0.27 | 3.04 ± 0.37 | 2.29 ± 0.27 |
| R² | 0.84 ± 0.04 | 0.82 ± 0.04 | 0.83 ± 0.04 |
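For readers unfamiliar with the two metrics in the table above, the following is a minimal sketch of their standard definitions: MAE as the mean of absolute differences between true and predicted ages, and R² as one minus the ratio of the residual to the total sum of squares. It is not the authors' evaluation code. The function names and the example ages are hypothetical and chosen only for illustration.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between true and predicted ages."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical ages in years (invented for this example, not study data).
true_ages = [8.0, 12.0, 15.0, 23.0, 41.0]
pred_ages = [9.0, 11.0, 17.0, 20.0, 44.0]

mae = mean_absolute_error(true_ages, pred_ages)
r2 = r_squared(true_ages, pred_ages)
print(f"MAE = {mae:.2f}, R2 = {r2:.3f}")
```

A lower MAE means predictions lie closer to the true ages on average, while an R² nearer to 1 means the model explains more of the variance in age.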


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tokinaga, R.; Mine, Y.; Yoshimi, Y.; Okazaki, S.; Ito, S.; Takeda, S.; Ogawa, S.; Peng, T.-Y.; Kakimoto, N.; Tanimoto, K.; Murayama, T. Age Estimation from Lateral Cephalograms Using Deep Learning: A Pilot Study from Early Childhood to Older Adults. J. Clin. Med. 2025, 14, 7084. https://doi.org/10.3390/jcm14197084
