Development and Validation of Echocardiography Artificial Intelligence Models: A Narrative Review

Abstract

1. Introduction

2. Development of AI Models Within Echocardiography

2.1. Choice of Echocardiography Artificial Intelligence Model

2.2. Echocardiography Datasets

2.3. Dataset Preprocessing

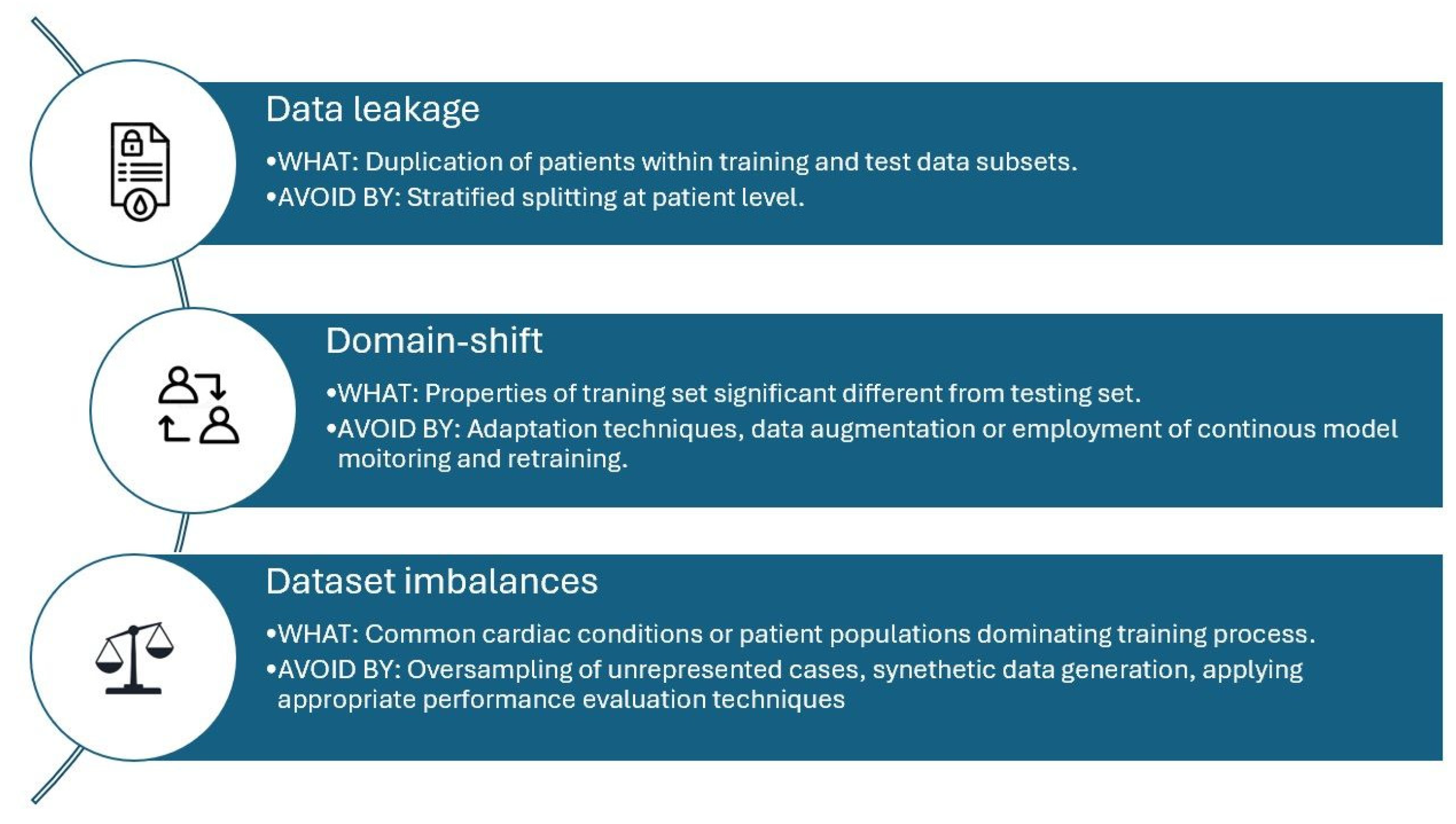

3. Training of Echocardiography Artificial Intelligence Models

3.1. Training

3.2. Development

3.3. Performance Evaluation

4. Validation of Echocardiography Artificial Intelligence Models

4.1. Internal Validation

4.2. External Validation

4.3. Clinical Validation

4.4. Regulatory and Medical Device Approval

4.5. Post-Marketing Surveillance

5. Future Considerations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| DICOM | Digital Imaging and Communications in Medicine |
| FDA | Food and Drug Administration |
| IT | Information technology |
| ML | Machine learning |
| NCT | National Clinical Trial (number) |
| PACS | Picture Archiving and Communication Systems |
| RCT | Randomised controlled trial |

References

- Azarmehr, N.; Ye, X.; Howard, J.P.; Lane, E.S.; Labs, R.; Shun-Shin, M.J.; Cole, G.D.; Bidaut, L.; Francis, D.P.; Zolgharni, M. Neural Architecture Search of Echocardiography View Classifiers. J. Med. Imaging 2021, 8, 034002.

- Lancellotti, P.; Price, S.; Edvardsen, T.; Cosyns, B.; Neskovic, A.N.; Dulgheru, R.; Flachskampf, F.A.; Hassager, C.; Pasquet, A.; Gargani, L.; et al. The Use of Echocardiography in Acute Cardiovascular Care: Recommendations of the European Association of Cardiovascular Imaging and the Acute Cardiovascular Care Association. Eur. Heart J. Acute Cardiovasc. Care 2015, 4, 3–5.

- Liu, Y.; Zhao, L.; Tu, B.; Wang, J.; He, Y.; Jiang, R.; Wu, X.; Wen, W.; Liu, J. Application of Artificial Intelligence in Echocardiography from 2009 to 2024: A Bibliometric Analysis. Front. Med. 2025, 12, 1587364.

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial Intelligence in Healthcare: Transforming the Practice of Medicine. Future Heal. J. 2021, 8, e188–e194.

- Jordan, M.I.; Mitchell, T.M. Machine Learning: Trends, Perspectives, and Prospects. Science 2015, 349, 255–260.

- Dey, D.; Slomka, P.J.; Leeson, P.; Comaniciu, D.; Shrestha, S.; Sengupta, P.P.; Marwick, T.H. Artificial Intelligence in Cardiovascular Imaging. JACC 2019, 73, 1317–1335.

- Narang, A.; Bae, R.; Hong, H.; Thomas, Y.; Surette, S.; Cadieu, C.; Chaudhry, A.; Martin, R.P.; McCarthy, P.M.; Rubenson, D.S.; et al. Utility of a Deep-Learning Algorithm to Guide Novices to Acquire Echocardiograms for Limited Diagnostic Use. JAMA Cardiol. 2021, 6, 624–632.

- Meucci, M.C.; Delgado, V. Artificial Intelligence to Speed Up Training in Echocardiography: The Next Frontier. Circ. Cardiovasc. Imaging 2023, 16, e016148.

- Upton, R.; Mumith, A.; Beqiri, A.; Parker, A.; Hawkes, W.; Gao, S.; Porumb, M.; Sarwar, R.; Marques, P.; Markham, D.; et al. Automated Echocardiographic Detection of Severe Coronary Artery Disease Using Artificial Intelligence. JACC Cardiovasc. Imaging 2022, 15, 715–727.

- Akerman, A.P.; Porumb, M.; Scott, C.G.; Beqiri, A.; Chartsias, A.; Ryu, A.J.; Hawkes, W.; Huntley, G.D.; Arystan, A.Z.; Kane, G.C.; et al. Automated Echocardiographic Detection of Heart Failure With Preserved Ejection Fraction Using Artificial Intelligence. JACC Adv. 2023, 2, 100452.

- Moghaddasi, H.; Nourian, S. Automatic Assessment of Mitral Regurgitation Severity Based on Extensive Textural Features on 2D Echocardiography Videos. Comput. Biol. Med. 2016, 73, 47–55.

- Salte, I.M.; Østvik, A.; Smistad, E.; Melichova, D.; Nguyen, T.M.; Karlsen, S.; Brunvand, H.; Haugaa, K.H.; Edvardsen, T.; Lovstakken, L.; et al. Artificial Intelligence for Automatic Measurement of Left Ventricular Strain in Echocardiography. JACC Cardiovasc. Imaging 2021, 14, 1918–1928.

- Howard, J.P.; Stowell, C.C.; Cole, G.D.; Ananthan, K.; Demetrescu, C.D.; Pearce, K.; Rajani, R.; Sehmi, J.; Vimalesvaran, K.; Kanaganayagam, G.S.; et al. Automated Left Ventricular Dimension Assessment Using Artificial Intelligence Developed and Validated by a UK-Wide Collaborative. Circ. Cardiovasc. Imaging 2021, 14, e011951.

- He, B.; Kwan, A.C.; Cho, J.H.; Yuan, N.; Pollick, C.; Shiota, T.; Ebinger, J.; Bello, N.A.; Wei, J.; Josan, K.; et al. Blinded, Randomized Trial of Sonographer versus AI Cardiac Function Assessment. Nature 2023, 616, 520–524.

- Barry, T.; Farina, J.M.; Chao, C.-J.; Ayoub, C.; Jeong, J.; Patel, B.N.; Banerjee, I.; Arsanjani, R. The Role of Artificial Intelligence in Echocardiography. J. Imaging 2023, 9, 50.

- Ferraz, S.; Coimbra, M.; Pedrosa, J. Assisted Probe Guidance in Cardiac Ultrasound: A Review. Front. Cardiovasc. Med. 2023, 10, 1056055.

- Alsharqi, M.; Lapidaire, W.; Iturria-Medina, Y.; Xiong, Z.; Williamson, W.; Mohamed, A.; Tan, C.M.J.; Kitt, J.; Burchert, H.; Fletcher, A.; et al. A Machine Learning-Based Score for Precise Echocardiographic Assessment of Cardiac Remodelling in Hypertensive Young Adults. Eur. Heart J.-Imaging Methods Pract. 2023, 1, qyad029.

- Davis, A.; Billick, K.; Horton, K.; Jankowski, M.; Knoll, P.; Marshall, J.E.; Paloma, A.; Palma, R.; Adams, D.B. Artificial Intelligence and Echocardiography: A Primer for Cardiac Sonographers. J. Am. Soc. Echocardiogr. 2020, 33, 1061–1066.

- Day, T.G.; Kainz, B.; Hajnal, J.; Razavi, R.; Simpson, J.M. Artificial Intelligence, Fetal Echocardiography, and Congenital Heart Disease. Prenat. Diagn. 2021, 41, 733–742.

- An, Q.; Rahman, S.; Zhou, J.; Kang, J.J. A Comprehensive Review on Machine Learning in Healthcare Industry: Classification, Restrictions, Opportunities and Challenges. Sensors 2023, 23, 4178.

- Al-Zaiti, S.S.; Alghwiri, A.A.; Hu, X.; Clermont, G.; Peace, A.; Macfarlane, P.; Bond, R. A Clinician’s Guide to Understanding and Critically Appraising Machine Learning Studies: A Checklist for Ruling Out Bias Using Standard Tools in Machine Learning (ROBUST-ML). Eur. Heart J. Digit. Health 2022, 3, 125–140.

- Jone, P.-N.; Gearhart, A.; Lei, H.; Xing, F.; Nahar, J.; Lopez-Jimenez, F.; Diller, G.-P.; Marelli, A.; Wilson, L.; Saidi, A.; et al. Artificial Intelligence in Congenital Heart Disease. JACC Adv. 2022, 1, 100153.

- Pham, T.M.; Pandis, N.; White, I.R. Missing Data: Issues, Concepts, Methods. Semin. Orthod. 2024, 30, 37–44.

- Liu, M.; Li, S.; Yuan, H.; Ong, M.E.H.; Ning, Y.; Xie, F.; Saffari, S.E.; Shang, Y.; Volovici, V.; Chakraborty, B.; et al. Handling Missing Values in Healthcare Data: A Systematic Review of Deep Learning-Based Imputation Techniques. Artif. Intell. Med. 2023, 142, 102587.

- Nijman, S.; Leeuwenberg, A.; Beekers, I.; Verkouter, I.; Jacobs, J.; Bots, M.; Asselbergs, F.; Moons, K.; Debray, T. Missing Data Is Poorly Handled and Reported in Prediction Model Studies Using Machine Learning: A Literature Review. J. Clin. Epidemiol. 2022, 142, 218–229.

- Gupta, K.; Attri, J.; Singh, A.; Kaur, H.; Kaur, G. Basic Concepts for Sample Size Calculation: Critical Step for Any Clinical Trials! Saudi J. Anaesth. 2016, 10, 328–331.

- Collins, G.S.; Moons, K.G.M.; Dhiman, P.; Riley, R.D.; Beam, A.L.; Van Calster, B.; Ghassemi, M.; Liu, X.; Reitsma, J.B.; van Smeden, M.; et al. TRIPOD+AI Statement: Updated Guidance for Reporting Clinical Prediction Models That Use Regression or Machine Learning Methods. BMJ 2024, 385, e078378.

- U.S. Food and Drug Administration; Medicines and Healthcare products Regulatory Agency. Good Machine Learning Practice for Medical Device Development: Guiding Principles. Available online: https://www.gov.uk/government/publications/good-machine-learning-practice-for-medical-device-development-guiding-principles (accessed on 26 July 2025).

- Liu, X.; Rivera, S.C.; Moher, D.; Calvert, M.J.; Denniston, A.K. Reporting Guidelines for Clinical Trial Reports for Interventions Involving Artificial Intelligence: The CONSORT-AI Extension. BMJ 2020, 370, m3164.

- Raissi-Dehkordi, N.; Raissi-Dehkordi, N.; Xu, B. Contemporary Applications of Artificial Intelligence and Machine Learning in Echocardiography. npj Cardiovasc. Health 2025, 2, 30.

- Foody, G.M. Ground Truth in Classification Accuracy Assessment: Myth and Reality. Geomatics 2024, 4, 81–90.

- Luijten, B.; Chennakeshava, N.; Eldar, Y.C.; Mischi, M.; van Sloun, R.J.G. Ultrasound Signal Processing: From Models to Deep Learning. Ultrasound Med. Biol. 2023, 49, 677–698.

- Ostvik, A.; Smistad, E.; Aase, S.A.; Haugen, B.O.; Lovstakken, L. Real-Time Standard View Classification in Transthoracic Echocardiography Using Convolutional Neural Networks. Ultrasound Med. Biol. 2019, 45, 374–384.

- Ferrão, J.C.; Oliveira, M.D.; Janela, F.; Martins, H.M.G. Preprocessing Structured Clinical Data for Predictive Modeling and Decision Support. Appl. Clin. Inf. 2016, 7, 1135–1153.

- Diaz, O.; Kushibar, K.; Osuala, R.; Linardos, A.; Garrucho, L.; Igual, L.; Radeva, P.; Prior, F.; Gkontra, P.; Lekadir, K. Data Preparation for Artificial Intelligence in Medical Imaging: A Comprehensive Guide to Open-Access Platforms and Tools. Phys. Medica 2021, 83, 25–37.

- Sylolypavan, A.; Sleeman, D.; Wu, H.; Sim, M. The Impact of Inconsistent Human Annotations on AI Driven Clinical Decision Making. npj Digit. Med. 2023, 6, 26.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Oikonomou, E.K.; Vaid, A.; Holste, G.; Coppi, A.; McNamara, R.L.; Baloescu, C.; Krumholz, H.M.; Wang, Z.; Apakama, D.J.; Nadkarni, G.N.; et al. Artificial Intelligence-Guided Detection of under-Recognised Cardiomyopathies on Point-of-Care Cardiac Ultrasonography: A Multicentre Study. Lancet Digit. Health 2025, 7, e113–e123.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A Comparison of Deep Learning Performance against Health-Care Professionals in Detecting Diseases from Medical Imaging: A Systematic Review and Meta-Analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Tromp, J.; Seekings, P.J.; Hung, C.-L.; Iversen, M.B.; Frost, M.J.; Ouwerkerk, W.; Jiang, Z.; Eisenhaber, F.; Goh, R.S.M.; Zhao, H.; et al. Automated Interpretation of Systolic and Diastolic Function on the Echocardiogram: A Multicohort Study. Lancet Digit. Health 2022, 4, e46–e54.

- Chao, C.-J.; Kato, N.; Scott, C.G.; Lopez-Jimenez, F.; Lin, G.; Kane, G.C.; Pellikka, P.A. Unsupervised Machine Learning for Assessment of Left Ventricular Diastolic Function and Risk Stratification. J. Am. Soc. Echocardiogr. 2022, 35, 1214–1225.e8.

- Ouyang, D.; He, B.; Ghorbani, A.; Yuan, N.; Ebinger, J.; Langlotz, C.P.; Heidenreich, P.A.; Harrington, R.A.; Liang, D.H.; Ashley, E.A.; et al. Video-Based AI for Beat-to-Beat Assessment of Cardiac Function. Nature 2020, 580, 252–256.

- Nagata, Y.; Kado, Y.; Onoue, T.; Otani, K.; Nakazono, A.; Otsuji, Y.; Takeuchi, M. Impact of Image Quality on Reliability of the Measurements of Left Ventricular Systolic Function and Global Longitudinal Strain in 2D Echocardiography. Echo Res. Pr. 2018, 5, 27–39.

- Tsang, W.; Salgo, I.S.; Medvedofsky, D.; Takeuchi, M.; Prater, D.; Weinert, L.; Yamat, M.; Mor-Avi, V.; Patel, A.R.; Lang, R.M. Transthoracic 3D Echocardiographic Left Heart Chamber Quantification Using an Automated Adaptive Analytics Algorithm. JACC Cardiovasc. Imaging 2016, 9, 769–782.

- Samad, M.D.; Ulloa, A.; Wehner, G.J.; Jing, L.; Hartzel, D.; Good, C.W.; Williams, B.A.; Haggerty, C.M.; Fornwalt, B.K. Predicting Survival From Large Echocardiography and Electronic Health Record Datasets: Optimization With Machine Learning. JACC Cardiovasc. Imaging 2019, 12, 681–689.

- Zhou, M.; Deng, Y.; Liu, Y.; Su, X.; Zeng, X. Echocardiography-Based Machine Learning Algorithm for Distinguishing Ischemic Cardiomyopathy from Dilated Cardiomyopathy. BMC Cardiovasc. Disord. 2023, 23, 476.

- Valsaraj, A.; Kalmady, S.V.; Sharma, V.; Frost, M.; Sun, W.; Sepehrvand, N.; Ong, M.; Equilbec, C.; Dyck, J.R.B.; Anderson, T.; et al. Development and Validation of Echocardiography-Based Machine-Learning Models to Predict Mortality. eBioMedicine 2023, 90, 104479.

- Ho, S.Y.; Phua, K.; Wong, L.; Bin Goh, W.W. Extensions of the External Validation for Checking Learned Model Interpretability and Generalizability. Patterns 2020, 1, 100129.

- Fletcher, A.J.; Lapidaire, W.; Leeson, P. Machine Learning Augmented Echocardiography for Diastolic Function Assessment. Front. Cardiovasc. Med. 2021, 8, 711611.

- Singh, H.; Mhasawade, V.; Chunara, R. Generalizability Challenges of Mortality Risk Prediction Models: A Retrospective Analysis on a Multi-Center Database. PLoS Digit. Health 2022, 1, e0000023.

- Morbach, C.; Gelbrich, G.; Schreckenberg, M.; Hedemann, M.; Pelin, D.; Scholz, N.; Miljukov, O.; Wagner, A.; Theisen, F.; Hitschrich, N.; et al. Population Data-Based Federated Machine Learning Improves Automated Echocardiographic Quantification of Cardiac Structure and Function: The Automatisierte Vermessung Der Echokardiographie Project. Eur. Heart J. Digit. Health 2024, 5, 77–88.

- Myhre, P.L.; Hung, C.-L.; Frost, M.J.; Jiang, Z.; Ouwerkerk, W.; Teramoto, K.; Svedlund, S.; Saraste, A.; Hage, C.; Tan, R.-S.; et al. External Validation of a Deep Learning Algorithm for Automated Echocardiographic Strain Measurements. Eur. Heart J. Digit. Health 2024, 5, 60–68.

- Upton, R.; Akerman, A.P.; Marwick, T.H.; Johnson, C.L.; Piotrowska, H.; Bajre, M.; Breen, M.; Dawes, H.; Dehbi, H.-M.; Descamps, T.; et al. PROTEUS: A Prospective RCT Evaluating Use of AI in Stress Echocardiography. NEJM AI 2024, 1, AIoa2400865.

- Narula, S.; Shameer, K.; Salem, O.A.M.; Dudley, J.T.; Sengupta, P.P. Machine-Learning Algorithms to Automate Morphological and Functional Assessments in 2D Echocardiography. JACC 2016, 68, 2287–2295.

- Shah, S.J.; Lam, C.S.P.; Svedlund, S.; Saraste, A.; Hage, C.; Tan, R.-S.; Beussink-Nelson, L.; Ljung Faxén, U.; Fermer, M.L.; Broberg, M.A.; et al. Prevalence and Correlates of Coronary Microvascular Dysfunction in Heart Failure with Preserved Ejection Fraction: PROMIS-HFpEF. Eur. Heart J. 2018, 39, 3439–3450.

- Long, A.; Finer, J.; Hartman, H.; Hartzel, D.; Jing, L.; Kelsey, C.; Rocha, D.; Ruhl, J.; vanMaanen, D.; Elnabawi, Y.; et al. Deep Learning for Echocardiographic Assessment and Risk Stratification of Aortic, Mitral, and Tricuspid Regurgitation: The DELINEATE-Regurgitation Study. Eur. Heart J. 2025, 46, 2780–2791.

- Sahashi, Y.; Ouyang, D.; Okura, H.; Kagiyama, N. AI-Echocardiography: Current Status and Future Direction. J. Cardiol. 2025, 85, 458–464.

- Malins, J.G.; Anisuzzaman, D.M.; Jackson, J.I.; Lee, E.; Naser, J.A.; Rostami, B.; Bird, J.G.; Spiegelstein, D.; Amar, T.; Oh, J.K.; et al. Snapshot Artificial Intelligence-Determination of Ejection Fraction from a Single Frame Still Image: A Multi-Institutional, Retrospective Model Development and Validation Study. Lancet Digit. Health 2025, 7, e255–e263.

- Roberts, M.; Driggs, D.; Thorpe, M.; Gilbey, J.; Yeung, M.; Ursprung, S.; Aviles-Rivero, A.I.; Etmann, C.; McCague, C.; Beer, L.; et al. Common Pitfalls and Recommendations for Using Machine Learning to Detect and Prognosticate for COVID-19 Using Chest Radiographs and CT Scans. Nat. Mach. Intell. 2021, 3, 199–217.

- Sounderajah, V.; Ashrafian, H.; Rose, S.; Shah, N.H.; Ghassemi, M.; Golub, R.; Kahn, C.E.; Esteva, A.; Karthikesalingam, A.; Mateen, B.; et al. A Quality Assessment Tool for Artificial Intelligence-Centered Diagnostic Test Accuracy Studies: QUADAS-AI. Nat. Med. 2021, 27, 1663–1665.

- U.S. Food and Drug Administration. Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/artificial-intelligence-enabled-device-software-functions-lifecycle-management-and-marketing (accessed on 29 August 2025).

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56.

- Park, S.H.; Choi, J.-I.; Fournier, L.; Vasey, B. Randomized Clinical Trials of Artificial Intelligence in Medicine: Why, When, and How? Korean J. Radiol. 2022, 23, 1119–1125.

- Roope, L.S.J.; Walsh, J.; Welland, M.; Samuel, G.; Johansen-Berg, H.; Nobre, A.C.; Clare, S.; Higham, H.; Campbell, J.; Denison, T.; et al. Reducing Inequalities through Greater Diversity in Clinical Trials—As Important for Medical Devices as for Drugs and Therapeutics. Contemp. Clin. Trials Commun. 2025, 45, 101467.

- Babic, B.; Cohen, I.G.; Stern, A.D.; Li, Y.; Ouellet, M. A General Framework for Governing Marketed AI/ML Medical Devices. npj Digit. Med. 2025, 8, 328.

- Dolin, P.; Li, W.; Dasarathy, G.; Berisha, V. Statistically Valid Post-Deployment Monitoring Should Be Standard for AI-Based Digital Health. arXiv 2025, arXiv:2506.05701v1.

- Kim, D.W.; Jang, H.Y.; Kim, K.W.; Shin, Y.; Park, S.H. Design Characteristics of Studies Reporting the Performance of Artificial Intelligence Algorithms for Diagnostic Analysis of Medical Images: Results from Recently Published Papers. Korean J. Radiol. 2019, 20, 405.

- Yusuf, M.; Atal, I.; Li, J.; Smith, P.; Ravaud, P.; Fergie, M.; Callaghan, M.; Selfe, J. Reporting Quality of Studies Using Machine Learning Models for Medical Diagnosis: A Systematic Review. BMJ Open 2020, 10, e034568.

- Sengupta, P.P.; Shrestha, S.; Berthon, B.; Messas, E.; Donal, E.; Tison, G.H.; Min, J.K.; D’hooge, J.; Voigt, J.-U.; Dudley, J.; et al. Proposed Requirements for Cardiovascular Imaging-Related Machine Learning Evaluation (PRIME): A Checklist. JACC Cardiovasc. Imaging 2020, 13, 2017–2035.

- Theriault-Lauzier, P.; Corbin, D.; Tastet, O.; Langlais, E.L.; Taji, B.; Kang, G.; Chong, A.-Y.; So, D.; Tang, A.; Gichoya, J.W.; et al. A Responsible Framework for Applying Artificial Intelligence on Medical Images and Signals at the Point of Care: The PACS-AI Platform. Can. J. Cardiol. 2024, 40, 1828–1840.

- Lafitte, S.; Lafitte, L.; Jonveaux, M.; Pascual, Z.; Ternacle, J.; Dijos, M.; Bonnet, G.; Reant, P.; Bernard, A. Integrating Artificial Intelligence into an Echocardiography Department: Feasibility and Comparative Study of Automated versus Human Measurements in a High-Volume Clinical Setting. Arch. Cardiovasc. Dis. 2025, 118, 477–488.

- Mollura, D.J.; Culp, M.P.; Pollack, E.; Battino, G.; Scheel, J.R.; Mango, V.L.; Elahi, A.; Schweitzer, A.; Dako, F. Artificial Intelligence in Low- and Middle-Income Countries: Innovating Global Health Radiology. Radiology 2020, 297, 513–520.

- Price, W.N., II.; Gerke, S.; Cohen, I.G. Potential Liability for Physicians Using Artificial Intelligence. JAMA 2019, 322, 1765–1766.

- Whicher, D.; Ahmed, M.; Israni, S.T.; Matheny, M. Artificial Intelligence Model Development and Validation. In Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril; National Academies Press: New York, NY, USA, 2023.

- Hollitt, K.J.; Milanese, S.; Joseph, M.; Perry, R. Can Automation and Artificial Intelligence Reduce Echocardiography Scan Time and Ultrasound System Interaction? Echo Res. Pr. 2025, 12, 1–9.

- Krishna, H.; Desai, K.; Slostad, B.; Bhayani, S.; Arnold, J.H.; Ouwerkerk, W.; Hummel, Y.; Lam, C.S.P.; Ezekowitz, J.; Frost, M.; et al. Fully Automated Artificial Intelligence Assessment of Aortic Stenosis by Echocardiography. J. Am. Soc. Echocardiogr. 2023, 36, 769–777.

- Ioannou, A.; Patel, R.K.; Razvi, Y.; Hanger, M.; Martinez-Naharro, A.; Venneri, L.; Chi Lim, S.; Yoran, H.; Frost, M.; Lam, C.; et al. Automated Analysis of Echocardiograms at Diagnosis Is Able to Predict Prognosis in ATTR Cardiomyopathy. Eur. Heart J. Cardiovasc. Imaging 2023, 24, jead119.387.

- Rajkomar, A.; Dean, J.; Kohane, I. Machine Learning in Medicine. N. Engl. J. Med. 2019, 380, 1347–1358.

| Preprocessing Stage | Summary | Considerations/Challenges |
|---|---|---|
| De-identification | Patient-identifiable data must be removed prior to analysis, whether this is identifying information displayed on an image or patient-specific structures/anatomy that could lead to identification. | Manual removal can be time-consuming, and automated processes need to be verified against appropriate data protection standards. Essential anatomical data may be unintentionally removed. |
| Missing data | Images or tabular data absent from the dataset. This can occur because data were not recorded or are unavailable, or because known errors or outliers have been removed. | Missing data can introduce bias, especially when data are missing not at random. There is a trade-off between cleaning the dataset and preserving enough data for appropriate model performance. Computational imputation methods can help to address missing data. |
| Format standardisation | Echocardiographic images used in clinical practice are typically acquired in DICOM format; however, they may need to be converted to a simpler file format for analysis. | Available data conversion tools can allow the user to inspect data visually during standardisation, so that image inconsistencies are identified before analysis. |
| Noise reduction | Removal of artefactual image noise, for example with a low-pass filter or data smoothing, to enhance image quality. | Applying inappropriate filters risks unintentionally removing true cardiac structures. |
| Normalisation | Standardising the frame rate (frames per second, FPS) across echocardiographic images so that they can be analysed consistently. | Images with a lower FPS than desired could introduce unintended bias if inappropriately augmented; conversely, removing frames from higher-FPS images could lead to asynchronous image analysis. |
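To make three of the preprocessing stages in the table above more concrete, the following is a minimal, standard-library-only Python sketch: median imputation of missing tabular values, a centred moving-average (low-pass) filter applied along time, and frame-rate normalisation by linear interpolation between frames. It assumes each frame has already been decoded from DICOM into a flat list of greyscale intensities. The function names are hypothetical, and this is not the pipeline of any study cited here; production pipelines would normally rely on validated imaging and array libraries.

```python
import math
import statistics
from typing import List, Optional

# Assumption: each frame is a flat list of greyscale pixel intensities
# already decoded from the DICOM file.
Frame = List[float]


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the observed values.

    Single median imputation is shown for illustration only; it assumes values
    are missing at random and understates uncertainty.
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def smooth_temporal(frames: List[Frame], window: int = 3) -> List[Frame]:
    """Centred moving average over time for each pixel (a simple low-pass filter)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    out = []
    for t in range(len(frames)):
        # Truncate the window at the start and end of the clip.
        span = frames[max(0, t - half): t + half + 1]
        out.append([sum(px) / len(span) for px in zip(*span)])
    return out


def resample_frames(frames: List[Frame], src_fps: float, dst_fps: float) -> List[Frame]:
    """Resample a clip to a target frame rate by linear interpolation in time."""
    n = len(frames)
    if n < 2:
        return [list(f) for f in frames]
    duration = (n - 1) / src_fps                 # seconds spanned by the clip
    n_out = int(round(duration * dst_fps)) + 1
    out = []
    for k in range(n_out):
        # Fractional index of output frame k on the source time axis.
        pos = min(k / dst_fps * src_fps, n - 1)
        i = int(math.floor(pos))
        j = min(i + 1, n - 1)
        w = pos - i                              # weight given to the later frame
        out.append([(1 - w) * a + w * b for a, b in zip(frames[i], frames[j])])
    return out
```

Upsampling a three-frame clip from 2 to 4 FPS with `resample_frames` returns five frames, the new ones lying midway between the originals. Those frames are synthesised rather than acquired, which illustrates why the table cautions against inappropriately augmenting low-FPS images.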

| Performance Metric | Definition | Common AI Model Applications |
|---|---|---|
| Accuracy | Percentage of correct predictions made by an AI model. For instance, the diagnostic accuracy of contrast echocardiography for detecting left ventricular thrombus. | Classification and diagnostic models |
| Sensitivity | True positive rate: how well an AI model detects positive cases. For instance, detecting the presence of aortic stenosis when it is truly present. | Disease detection and screening classification tasks |
| Specificity | True negative rate: how well an AI model correctly identifies normal cases. For instance, patients without obstructive ischemic heart disease having a normal stress echocardiogram. | Rule-out classification tasks |
| AUC-ROC | Area under the receiver operating characteristic curve: how well an AI model distinguishes between classes across all decision thresholds. For instance, distinguishing between patients who have severe left ventricular systolic impairment, impaired left ventricular systolic function, and normal left ventricular systolic function. | Binary and multi-class classification tasks |
| Precision and Recall | The proportion of the AI model’s positive predictions that are correct (precision) and the proportion of truly positive cases that the AI model identifies (recall). | Imbalanced classification tasks |
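The metric definitions above reduce to simple counts over a confusion matrix. As an illustrative sketch, not code from any cited study, the Python below computes accuracy, sensitivity, specificity and precision from binary labels. It estimates binary AUC-ROC through its rank (Mann-Whitney) interpretation and adds a Wilson score interval as one common way of attaching an approximate 95% CI to a proportion such as sensitivity. The function names are hypothetical, and labels are assumed to be coded 1 for condition present and 0 for absent. The multi-class AUC extension, for example one-vs-rest averaging, is not shown. Published studies, including those in the following table, may have used other interval methods.

```python
import math
from typing import Dict, Sequence, Tuple


def _ratio(num: float, den: float) -> float:
    """Return num/den, or NaN when the denominator is zero (metric undefined)."""
    return num / den if den else float("nan")


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Count (TP, FP, TN, FN) for binary labels coded 1 = condition present, 0 = absent."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Threshold-dependent metrics computed from the confusion matrix."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": _ratio(tp, tp + fn),  # recall / true positive rate
        "specificity": _ratio(tn, tn + fp),  # true negative rate
        "precision": _ratio(tp, tp + fp),    # positive predictive value
    }


def auc_roc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Binary AUC-ROC as the probability that a randomly chosen positive case
    scores higher than a randomly chosen negative case (ties count as 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs at least one positive and one negative case")
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)
```

The threshold-free AUC-ROC and the threshold-dependent metrics answer different questions. Sensitivity and specificity change with the operating point chosen for deployment, whereas AUC-ROC summarises ranking performance across all thresholds.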

| Study/AI Model/Author | AI Task | Internal Training Dataset | External Validation Dataset | Performance |
|---|---|---|---|---|
| PROTEUS [9,52] | Detection of coronary artery disease on stress echocardiography and appropriate referral to invasive coronary angiography | EVAREST, multiple UK NHS Trusts (NCT03674255) | Rainier study (Oregon Health & Science University, Portland, OR, USA) | Internal training dataset: AUROC 0.934; specificity 85.7% (95% CI: 82.7, 88.9%); sensitivity 86.7% (95% CI: 80.2, 94.3%). External validation dataset: AUROC 0.927; specificity 92.7% (95% CI: 87.8, 97.6%); sensitivity 84.4% (95% CI: 73.9, 95.0%). |
| EchoNet-Dynamic [42] | LVEF estimation | EchoNet-Dynamic (Stanford University) | Cedars-Sinai Medical Centre | AUROC 0.97 for classifying LVEF thresholds of <40% and >60% in both the internal training and external validation datasets. |
| ML algorithm to automate morphological and functional assessments in 2D echocardiography [53] | HCM vs. athlete’s heart classification | Mount Sinai Hospital (New York) | Independent external cohort | AUROC >0.93 in both the internal training and external validation datasets. |
| EchoGo Heart Failure [10] | Classification of HFpEF, no HFpEF, or non-diagnostic | Mayo Clinic, Minnesota, USA, and St George’s Hospital, London, UK | Mayo Clinic, Minnesota, USA (patients from a geographically distinct area from those included in the internal training set) | Internal training dataset: AUROC 0.97 (95% CI: 0.96, 0.97). External validation dataset: AUROC 0.95 (95% CI: 0.93, 0.96). |
| DL algorithm for automated global longitudinal strain [51] | Automated global longitudinal strain | Mackay Memorial Hospital, Taipei | Prospective multinational observational study PROMIS-HFpEF [54] | Internal training dataset: automated and manual measurements showed good agreement: −18.9 ± 4.5% vs. −18.2 ± 4.4%; bias 0.68 ± 2.52%; MAD 2.0 ± 1.67; RMSE 2.61; R = 0.84. External validation dataset: automated and manual measurements showed good agreement: −15.4 ± 4.1% vs. −15.9 ± 3.6%; bias −0.65 ± 2.71%; MAD 2.19 ± 1.71; RMSE 2.78; R = 0.76. |
| DELINEATE-regurgitation study [55] | Deep learning algorithm for the assessment and risk stratification of aortic, mitral, and tricuspid regurgitation | | | Internal training dataset: weighted kappa for regurgitation classification: aortic regurgitation 0.81; mitral regurgitation 0.76; tricuspid regurgitation 0.73. External validation dataset: weighted kappa for regurgitation classification: aortic regurgitation 0.76; mitral regurgitation 0.72; tricuspid regurgitation 0.64. |
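The agreement and reliability statistics quoted in the table above (bias, MAD, RMSE, R and weighted kappa) are all computed from paired measurements. The Python sketch below illustrates them under stated assumptions and is not the analysis code of the cited studies. Differences are taken as the first argument minus the second. "MAD" is assumed to mean mean absolute difference. Weighted kappa is offered with either linear or quadratic disagreement weights, because the table does not state which weighting was used. The function names are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence


def agreement(a: Sequence[float], b: Sequence[float]) -> Dict[str, float]:
    """Paired agreement between two methods (e.g. automated vs. manual strain).

    Differences are a - b, so the sign of the bias depends on argument order.
    'mad' is the mean absolute difference (an assumed reading of the abbreviation).
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    d = [x - y for x, y in zip(a, b)]
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((y - mean_b) ** 2 for y in b)
    return {
        "bias": statistics.fmean(d),                     # mean paired difference
        "sd_diff": statistics.stdev(d),                  # SD of the differences
        "mad": statistics.fmean(abs(x) for x in d),      # mean absolute difference
        "rmse": math.sqrt(statistics.fmean(x * x for x in d)),
        "r": cov / math.sqrt(ss_a * ss_b),               # Pearson correlation
    }


def weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                   n_categories: int, weighting: str = "linear") -> float:
    """Cohen's weighted kappa for ordinal grades coded 0..n_categories-1
    (e.g. none/mild/moderate/severe regurgitation)."""
    if weighting not in ("linear", "quadratic"):
        raise ValueError("weighting must be 'linear' or 'quadratic'")
    if n_categories < 2 or len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need >= 2 categories and equal-length, non-empty ratings")
    n, k = len(rater_a), n_categories
    obs = [[0.0] * k for _ in range(k)]                  # observed joint proportions
    for x, y in zip(rater_a, rater_b):
        obs[x][y] += 1.0 / n
    row = [sum(obs[i]) for i in range(k)]                # marginal of rater A
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]  # marginal of rater B
    observed_dis = expected_dis = 0.0
    for i in range(k):
        for j in range(k):
            dist = abs(i - j) / (k - 1)                  # 0 on the diagonal, 1 at the extremes
            w = dist if weighting == "linear" else dist * dist
            observed_dis += w * obs[i][j]
            expected_dis += w * row[i] * col[j]          # disagreement expected by chance
    return 1.0 - observed_dis / expected_dis
```

Because the sign of the bias flips with argument order, stating which method was subtracted from which matters as much as the number itself. Likewise, linear and quadratic weights can give noticeably different kappa values on the same data.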

| Prospective Trial | Study Description | Estimated Patient Enrolment | Estimated Year of Study Completion |
|---|---|---|---|
| AGILE-ECHO: Use of artificial intelligence-guided echocardiography to assist cardiovascular patient management (NCT05558605) | Evaluation of the effectiveness of AI-guided echocardiography acquisition for triage and management of patients with suspected heart failure and valvular heart disease in rural and remote Australia | 612 | 2025 |
| MAIQUEE: A multi-centre study on artificial intelligence-based quantitative evaluation of echocardiography (NCT07133516) | Comparison of automated AI measurements with manual measurements by physicians of varying experience levels | 1600 | 2025 |
| EchoNet-Screening: Artificial intelligence-guided echocardiographic screening of rare diseases (NCT05139797) | Evaluation of the ability of the EchoNet-LVH algorithm to detect cardiac hypertrophy accurately and to identify patients who require additional screening for cardiac amyloidosis | 300 | 2027 |
| AISEARHF: Artificial intelligence versus sonographer echocardiogram analysis and reporting in patients with heart failure (NCT07021599) | Multicentre RCT comparing echocardiographic analysis by AI versus experienced sonographers | 514 | 2028 |
| AI-SEE: Artificial intelligence stress echo (sub-study of Stress Echo 2030) (NCT05081115) | AI-SEE images: operator-independent interpretation of stress echocardiography, including assessment of coronary flow reserve, diastolic function, and right ventricular function. AI-SEE data: use of DL algorithms to create personalised patient risk prediction models. | 10,000 | 2030 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bennett, S.; Johnson, C.L.; Fisher, G.; Erskine, F.; Krasner, S.; Fletcher, A.J.; Leeson, P. Development and Validation of Echocardiography Artificial Intelligence Models: A Narrative Review. J. Clin. Med. 2025, 14, 7066. https://doi.org/10.3390/jcm14197066
