1. Introduction

Disorders of the cornea and anterior chamber, and their treatments, are complex, and ophthalmology relies on specialized terminology and precise anatomy that patients can find confusing. Clear and effective patient education materials (PEMs) are essential for enhancing understanding, supporting informed decision-making, and improving adherence to treatment plans. In ophthalmology, limited health literacy can directly contribute to nonadherence to medications and eye drops and to delayed recognition of urgent symptoms that signal potentially devastating disease processes or complications. Thus, improving the readability of PEMs has direct implications for patient safety and vision outcomes. PEMs must prioritize accessibility, readability, and comprehension so that patients can fully benefit from the information provided and feel empowered in their own care.

The average American adult is estimated to read at an eighth-grade level, with 47% struggling to synthesize information from complex texts and nearly 20% unable to understand fourth-grade-level text [1]. Based on this, the American Medical Association and National Institutes of Health recommend that PEMs be written below a 7th-grade reading level [2]. Organizations like the American Academy of Ophthalmology (AAO) and specialty-specific organizations have improved the accessibility of high-quality online resources. While PEMs written by the AAO are relatively easy to read, the range of topics is limited. PEMs published by other ophthalmology associations have been found to be too difficult to read [3]. For example, Eid et al. found that 16 brochures from the American Society of Ophthalmic Plastic and Reconstructive Surgery averaged an 11.2-grade reading level [4], Cheng et al. [5] reported glaucoma PEMs at a 10.3-grade level, and pediatric ophthalmology online PEMs had an average grade level of 11.8 [6]. As such, there is a need for easily accessible PEMs that cover a broader range of topics in ophthalmology, especially conditions affecting the cornea and anterior segment. AI chatbots may help clinicians optimize PEMs for better understanding.

In recent years, large language models (LLMs) and artificial intelligence (AI) have risen to prominence and have been extensively explored as tools in healthcare. One interface to these LLMs is the chatbot, which allows the user to message the model in a conversational manner. After many years of development, OpenAI released ChatGPT (San Francisco, CA, USA, http://chatgpt.com) to the public in November 2022, with Microsoft following with Bing Chat (Copilot) (Redmond, WA, USA, https://copilot.microsoft.com) in February 2023. Meta’s Meta-Llama, an open-source LLM, also debuted in February 2023 (Menlo Park, CA, USA, https://www.meta.ai/).

In ophthalmology, AI chatbots have been shown to be useful in assisting with charting and scientific writing, and are even capable of accurately answering board questions [7,8,9,10]. Through prompting, they can generate PEMs on demand, though the quality and readability of AI-generated ophthalmic PEMs remain uncertain, as AI models can hallucinate.

Our study uniquely contributes to the field by being the first, to our knowledge, to evaluate the use of LLMs in generating PEMs specifically for corneal and anterior chamber diagnoses. Unlike previous research, we included an open-source LLM, Meta-Llama-3.1-70B-Instruct, alongside ChatGPT-4o and Microsoft Copilot, thereby broadening the scope of AI tools assessed.

This study aims to compare the readability of PEMs generated by ChatGPT-4o, Microsoft Copilot, and Meta-Llama-3.1-70B-Instruct (using the software versions available online when accessed on 21 September 2024) with those on the AAO website, https://www.aao.org/eye-health/a-z (accessed on 7 January 2025) [11]. We hypothesize that by using prompt modifiers to target a 6th-grade reading level, we can produce PEMs that are more readable than the existing ones. In addition to assessing readability, our goal is to explore the feasibility of integrating AI-generated, clinician-edited PEMs into clinical workflows, where they could supplement existing resources, fill gaps in underserved topics, and be adapted in real time to meet real-world needs.

2. Materials and Methods

The research was conducted in accordance with the principles of the Declaration of Helsinki, and no Health Insurance Portability and Accountability Act (HIPAA)-related information was used throughout the study. The IRB/Ethics Committee ruled that approval was not required for this study.

The latest available editions of ChatGPT-4o, Microsoft Bing Chat/Copilot (Copilot), and the open-source Meta-Llama-3.1-70B-Instruct were prompted in late September 2024 on the following 15 common diagnoses relating to cornea and anterior chamber: corneal abrasion, cataract, keratitis, keratoconus, Fuchs dystrophy, conjunctivitis, corneal laceration, dry eye, iridocorneal endothelial syndrome, myopia, hyperopia, astigmatism, pinguecula and pterygium, scleritis, and trichiasis. ChatGPT’s temporary chat feature was utilized to discard any user memories and prevent the conversation from becoming training data. To emulate the temporary chat feature, a new chat was used each time in Copilot and Llama. To minimize confounding variables, web-search was disabled for all models.

The initial prompt was as follows: Act as a board-certified and fellowship-trained cornea, external diseases and refractive surgery ophthalmologist. For the following questions about an ophthalmic diagnosis, generate a 675-word patient education document (plus or minus 40 words) that is easily readable, comprehensible, and logical for patients of all educational backgrounds.

The topic is “[Topic]”.

The document must address the following questions:

What is the topic?

What are diagnostics involved, if any?

Why is treatment or procedure needed?

What are the risks and complications associated with the topic?

The target output length was based on existing PEMs from the AAO. We chose AAO online PEMs because they are free, publicly available, and already highly readable on several topics relevant to a cornea specialist.

Immediately after this, the following follow-up readability-optimized (FRO) prompt was used: “Please reword your PEM output answer above at a 6th-grade reading level. Please try to keep word output as similar as possible”.
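The two-step prompting procedure can be sketched as a pair of templates. This is a minimal illustration only: the prompt wording is taken from the text above, while the names `INITIAL_PROMPT`, `FRO_PROMPT`, `TOPICS`, and `build_conversation` are hypothetical, and no chatbot API is called because the study used the chat interfaces directly.

```python
# Hypothetical helper names; the prompt wording is quoted from the Methods text.
INITIAL_PROMPT = (
    "Act as a board-certified and fellowship-trained cornea, external diseases "
    "and refractive surgery ophthalmologist. For the following questions about "
    "an ophthalmic diagnosis, generate a 675-word patient education document "
    "(plus or minus 40 words) that is easily readable, comprehensible, and "
    "logical for patients of all educational backgrounds.\n"
    'The topic is "{topic}".\n'
    "The document must address the following questions:\n"
    "What is the topic?\n"
    "What are diagnostics involved, if any?\n"
    "Why is treatment or procedure needed?\n"
    "What are the risks and complications associated with the topic?"
)

FRO_PROMPT = (
    "Please reword your PEM output answer above at a 6th-grade reading level. "
    "Please try to keep word output as similar as possible"
)

# The 15 diagnoses listed in the Methods.
TOPICS = [
    "corneal abrasion", "cataract", "keratitis", "keratoconus",
    "Fuchs dystrophy", "conjunctivitis", "corneal laceration", "dry eye",
    "iridocorneal endothelial syndrome", "myopia", "hyperopia", "astigmatism",
    "pinguecula and pterygium", "scleritis", "trichiasis",
]


def build_conversation(topic: str) -> list[str]:
    """Return the two user turns, in order, for one fresh chat session."""
    return [INITIAL_PROMPT.format(topic=topic), FRO_PROMPT]
```

Calling `build_conversation(topic)` once per entry in `TOPICS`, each time in a new chat, would mirror the session isolation described above.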

The outputs were saved as plain-text files and assessed using the open-source program py-readability-metrics by cdimascio (https://github.com/cdimascio/py-readability-metrics, accessed on 22 September 2024) [12], which evaluates text through the following nine readability metrics: Flesch–Kincaid Grade Level, Flesch Reading Ease Score, Dale–Chall Readability Score, Automated Readability Index (ARI), Coleman–Liau Index Grade Level, Gunning Fog Grade Level, Simple Measure of Gobbledygook (SMOG) Grade Level, Spache Readability Grade Level, and the Linsear Write Grade Level. An average of all Grade Level scores was used to create a combined grade level score. These metrics use various formulas based on factors such as character count, syllable count, and sentence length.
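To make concrete how such formulas turn counts into scores, the sketch below implements three of the published formulas (Flesch–Kincaid Grade Level, Flesch Reading Ease, and ARI) and the averaging step in plain Python. It is not the py-readability-metrics implementation used in the study: the syllable counter is a rough vowel-group heuristic, the tokenization is simplified, and all function names are assumptions made for illustration, so its scores will differ somewhat from the library's.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count vowel groups, drop a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> tuple[int, int, int, int]:
    """Return (sentences, words, syllables, letters) using simple regex tokenization."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    letters = sum(len(w.replace("'", "")) for w in words)
    return len(sentences), len(words), syllables, letters


def flesch_kincaid_grade(text: str) -> float:
    s, w, syl, _ = text_stats(text)
    return 0.39 * w / s + 11.8 * syl / w - 15.59


def flesch_reading_ease(text: str) -> float:
    s, w, syl, _ = text_stats(text)
    return 206.835 - 1.015 * w / s - 84.6 * syl / w


def automated_readability_index(text: str) -> float:
    s, w, _, letters = text_stats(text)
    return 4.71 * letters / w + 0.5 * w / s - 21.43


def combined_grade_level(grades: list[float]) -> float:
    """Average several grade-level scores into one combined score."""
    return sum(grades) / len(grades)
```

Note that Flesch Reading Ease runs in the opposite direction from the grade-level metrics (higher is easier), which is why it is not part of the combined grade level average.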

2.1. Statistical Methods

Jamovi (Jamovi Project, Sydney, Australia, version 2.6.2), Python (Python Software Foundation, Beaverton, OR, USA, version 3.11.3), and R (The R Foundation, Online, version 4.5) were used to conduct statistical analyses and generate figures. The Shapiro–Wilk test and F-test were used to assess normality and equality of variance, respectively. Welch’s analysis of variance (ANOVA) was conducted to compare the groups overall, followed by Games–Howell (unequal variances) post hoc analysis for pairwise comparisons. A threshold p-value of 0.05 was used to determine statistical significance. For a flowsheet detailing our methods, see Figure 1.
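For readers unfamiliar with Welch's ANOVA, the sketch below computes its test statistic and degrees of freedom from the standard formula, using only the Python standard library. It is an illustration rather than the analysis code used in this study (which was run in Jamovi, Python, and R): the function name `welch_anova` is hypothetical, the example scores in the usage note are made up, and the p-value step (the upper tail of an F distribution with the returned degrees of freedom) is left to a statistics package.

```python
from statistics import mean, variance


def welch_anova(*groups: list[float]) -> tuple[float, int, float]:
    """Return (F, df1, df2) for Welch's one-way ANOVA (unequal variances).

    Each group needs at least two values and a non-zero sample variance.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Weight each group by n_i / s_i^2 so noisier groups count less.
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    weight_sum = sum(weights)
    grand_mean = sum(w * m for w, m in zip(weights, means)) / weight_sum

    between = sum(w * (m - grand_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / weight_sum) ** 2 / (n - 1) for w, n in zip(weights, sizes))

    f_stat = between / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2
```

As a usage example with invented grade-level scores, `welch_anova([1, 2, 3], [2, 3, 4], [3, 4, 5])` compares three groups of three observations each; a significant overall result would then be followed by Games–Howell pairwise comparisons.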

2.2. AI Assistance

During the preparation of this work, the authors used ChatGPT, Copilot, and Llama in order to create sample PEMs that were used in this analysis. Additionally, ChatGPT-4o was used in some areas to improve language and readability, such as spelling and grammar. After using this service, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

3. Results

For all 15 topics, ChatGPT, Copilot, and Llama were able to create PEMs successfully based on the prompting process previously described.

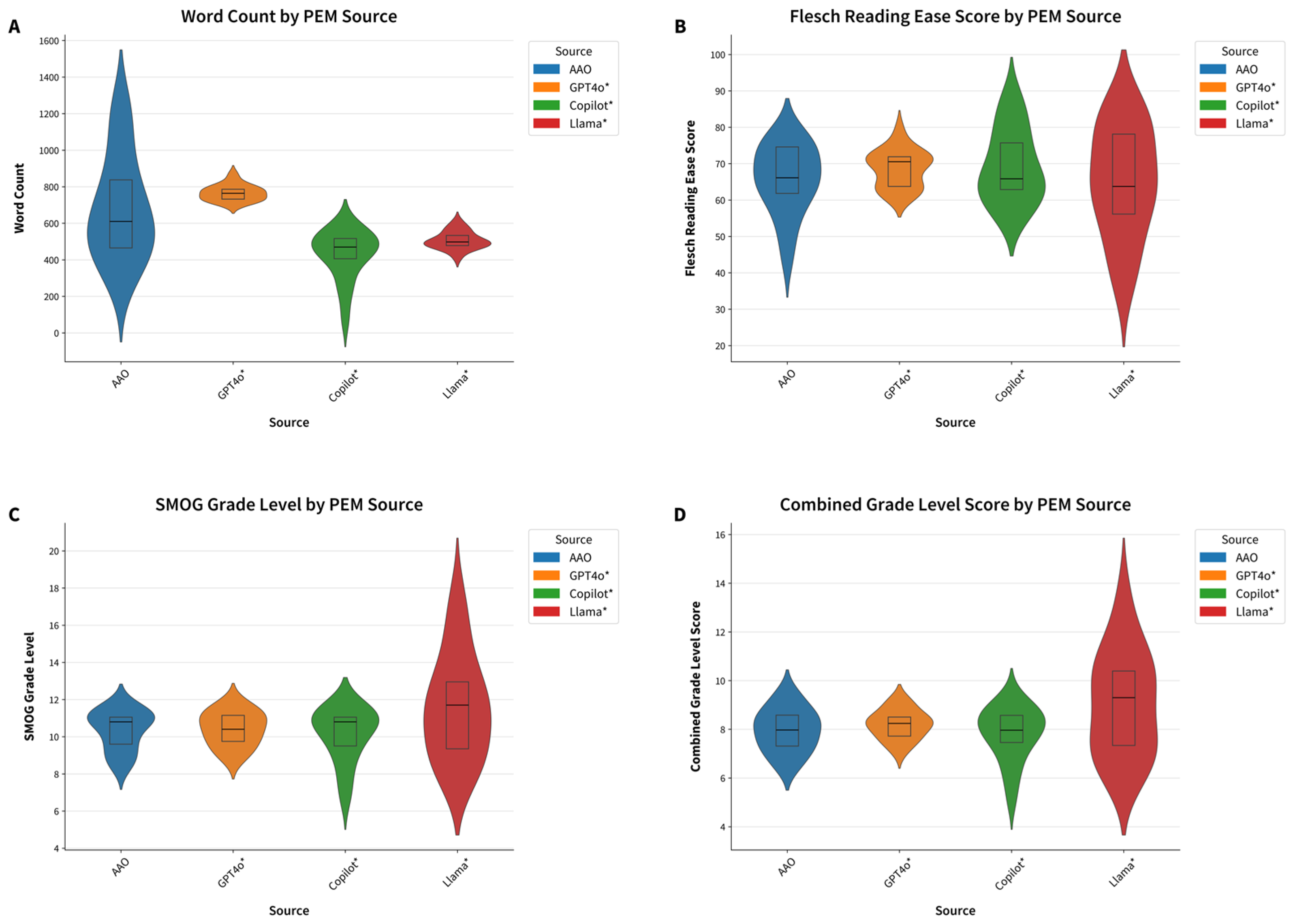

Across all indices, the readability of all PEMs was, on average, at a higher grade level than the recommended 6th-grade level. The average of all grade-level readability indices for AAO PEMs was 8.0 ± 1.5 (Table 1).

In comparison, initially prompted GPT-4o, Copilot, and Llama had higher average readability grade levels of 10.8 ± 0.9, 12.2 ± 1.9, and 13.2 ± 3.1, respectively. When the FRO prompt was used to produce PEMs at a 6th-grade reading level, all AI models significantly improved their average readability grade levels: GPT-4o to 8.3 ± 1.0, Copilot to 11.2 ± 1.9, and Llama to 9.3 ± 2.7 (p < 0.001 for all models when comparing the initial prompt with the FRO prompt; visualized in Figure 1). Similarly, word count decreased after the FRO prompt for all models: GPT-4o from 858 ± 30.4 to 766 ± 43.5, Copilot from 558 ± 68.6 to 428 ± 135, and Llama from 557 ± 39.9 to 507 ± 48.3 words, with the latter two being significantly shorter than AAO materials at 681 ± 286 words (p = 0.0006 and 0.034, respectively). However, while follow-up prompting significantly improved readability scores compared to the initial prompt, the AI-generated PEMs were not statistically easier to read than AAO materials (Figure 2 and Figure 3).

The initial outputs from all three AI chatbots were harder to read than AAO materials across all metrics. Among the initial AI responses, Llama was the most difficult to read, with the highest Flesch–Kincaid Grade Level at 14.4 ± 5.0 and the lowest Flesch Reading Ease Score at 35.8 ± 16.0. In contrast, GPT-4o had, on average, the lowest Flesch–Kincaid Grade Level at 10.9 ± 1.0 and the highest, most readable Flesch Reading Ease Score at 48.7 ± 6.9, but its output was still harder to read than AAO materials, which scored 7.6 ± 1.4 and 65.7 ± 9.5, respectively.

A per-topic sub-analysis was also performed to further explore the data. FRO-prompted LLMs generated PEMs with word counts similar to AAO materials, though this varied by topic. It should be noted that Copilot outputs were much shorter for most topics, even after FRO prompting (Supplementary Materials Figure S1A). Regarding length, only AAO had PEMs with over 1000 words, for cataract, dry eye, and myopia.

The FRO prompts produced more readable PEMs for some topics when comparing Flesch Reading Ease Scores (Supplementary Materials Figure S1B). A Flesch Reading Ease Score > 80, indicating a 6th-grade reading level, was achieved by Copilot for cataract (87.5), corneal abrasion (85.6), and dry eye (81.0), as well as by Llama for cataract (82.5), corneal abrasion (83.7), hyperopia (83.8), and myopia (81.7). For astigmatism, all FRO-prompted LLMs scored higher than AAO, with Copilot, Llama, and GPT-4o at 74.8, 73.2, and 72.6, respectively, compared with AAO’s 64.5. However, AAO had a higher Flesch Reading Ease Score than all LLM chatbots for conjunctivitis (66.1), Fuchs dystrophy (75.0), iridocorneal endothelial syndrome (76.9), and pinguecula and pterygium (70.0).
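Flesch Reading Ease scores are conventionally read against approximate school-grade bands. The sketch below encodes the commonly cited Flesch interpretation table; the cut-offs other than the > 80 threshold stated above are an assumption brought in for illustration, and `FLESCH_BANDS` and `flesch_band` are hypothetical names.

```python
# Lower bound of each band and its approximate reading level,
# following the commonly cited Flesch interpretation table (an assumption here).
FLESCH_BANDS = [
    (90.0, "5th grade"),
    (80.0, "6th grade"),
    (70.0, "7th grade"),
    (60.0, "8th to 9th grade"),
    (50.0, "10th to 12th grade"),
    (30.0, "college"),
]


def flesch_band(score: float) -> str:
    """Map a Flesch Reading Ease score to its approximate reading-level band."""
    for lower_bound, label in FLESCH_BANDS:
        if score >= lower_bound:
            return label
    return "college graduate"
```

Under these bands, the FRO-prompted Copilot cataract score of 87.5 falls in the 6th-grade band, while AAO's astigmatism score of 64.5 falls in the 8th to 9th grade band.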

For the other topics, the most readable PEM varied, and the differences were relatively minimal.

A qualitative comparison was performed between AAO and FRO LLM outputs for a representative topic, iridocorneal endothelial (ICE) syndrome. The AAO resource uniquely provided links to supplementary materials, a feature potentially reproducible by LLMs when web-search capability is enabled, although verification of source quality remains essential. This functionality was disabled during the present study to minimize confounding variables, preventing full application of the DISCERN criteria; however, several of its elements were incorporated into the subsequent analysis. Among the evaluated PEMs, AAO was the only source to acknowledge uncertainties and controversies in the pathogenesis of ICE, satisfying a key DISCERN criterion [13]. Both AAO and ChatGPT-4o addressed demographic risk factors, whereas only ChatGPT-4o delineated the three distinct ICE subtypes; in contrast, the AAO patient-facing article presented ICE as a collective group of disorders, a simplification that may be easier to understand. ChatGPT-4o more explicitly linked diagnostic modalities to the underlying pathophysiology compared with Copilot and Llama, which provided only general descriptions of the tests. The AAO resource offered a concise explanation of expected ocular examination findings in plain language without naming specific tests. All FRO-prompted LLMs correctly indicated that management is directed toward the treatment of complications; however, only AAO explicitly emphasized that disease progression itself cannot be slowed by treatment. Copilot, the briefest of all PEMs, did not mention specific treatment strategies, but all other PEMs did. Llama uniquely discussed treatment-associated risks, thereby offering a more comprehensive risk–benefit perspective, and its concision stemmed mainly from the use of bullet points instead of complete sentences.

These findings suggest that, with appropriate prompts and careful human review for nuance, accuracy, and completeness, AI language models can help physicians more quickly create PEMs that are comparable in length and readability to AAO content, which could aid in patient understanding. However, on average, both AI and AAO materials still exceeded the recommended 6th-grade reading level.

4. Discussion

This study indicates that prompted LLM chatbots can be a useful tool for creating easy-to-read PEMs. By prompting, we were able to generate PEMs with the LLMs that were at the 7–8th-grade reading level, much improved from the initially prompted versions, but many topics were still above the NIH and AMA recommendation of a 6th-grade level. Even when directly prompted to write at a 6th-grade reading level, the chatbots produced outputs that were, on average, above the 6th-grade reading level and did not outperform existing human-written materials (AAO). However, only one follow-up prompt was used, and more research is needed to explore prompt engineering to best optimize the creation of accurate and readable PEMs. It is of interest to investigate iterative prompting strategies and test differences in temperature or tone control, which may further enhance readability and preserve content integrity. For example, iterative prompting (where prompts are refined across several rounds with feedback or clarification) has been shown to improve evidence recall, self-correction, and adaptability in LLM outputs, outperforming static prompting methods [14]. Another potential investigation would be to directly incorporate the readability formulas, as well as the DISCERN or PEMAT criteria, into the prompts. The use of chain-of-thought prompting to simplify complex terms could also be studied, though Jeon and Kim found that it did not significantly improve performance compared to simpler approaches in medical question answering [15]. Advanced prompt engineering parameters should also be studied, as temperature can control the randomness of the outputs. Specifically, lower temperatures (e.g., ≤0.3) produce more deterministic, predictable outputs, while higher temperatures (e.g., ≥0.8) encourage creativity and linguistic variety but may reduce precision and factual fidelity [16]. Explicitly defining tone (e.g., empathetic, plain-language, narrative, etc.) represents another promising variable for future investigations, as instructing the model to adopt a conversational, empathetic, or instructive tone can influence readability and engagement without sacrificing content accuracy. Investigating whether specific tones (e.g., plain-language, narrative, or clinical) yield greater patient comprehension could inform best practices for tailoring PEMs to diverse literacy levels.
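The effect of temperature on output randomness can be illustrated with the standard temperature-scaled softmax, in which a model's raw scores (logits) for candidate next tokens are divided by the temperature before being converted to probabilities. This is a generic sketch, not the internals of any chatbot evaluated here; the function name and the example logits are assumptions.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for three candidate tokens.
example_logits = [2.0, 1.0, 0.5]
low_temp = softmax_with_temperature(example_logits, 0.3)   # sharply peaked
high_temp = softmax_with_temperature(example_logits, 0.8)  # flatter, more varied
```

At the lower temperature, nearly all probability mass sits on the top-scoring token, which is why outputs become more deterministic; at the higher temperature, the alternatives are sampled more often.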

The AAO PEMs were, on average, more readable than the specialty-specific brochures assessed in similar studies, which reported reading levels above the 10th grade. This investigation shows that prompted LLMs can be used to write readable PEMs that are on average around a 7th-grade reading level when asked to output at a 6th-grade reading level. Given that the average American is estimated to read at an eighth-grade level [1], integrating LLMs into a hybrid AI-ophthalmologist workflow could facilitate shared decision-making by increasing the number of available PEMs, helping patients better comprehend the risks, benefits, and alternatives of surgical or medical interventions.

Performance varied by topic, and in some cases LLM outputs were more readable than AAO materials. Clinically, this means that AI may help reduce health literacy barriers for patients with anterior segment conditions, where poor understanding of treatment adherence directly impacts outcomes. Given the limited number of topics that AAO’s website covers, AI could be a potential tool to assist in the creation of more PEMs, though physician review is essential to ensure accuracy and completeness. As our qualitative analysis shows, LLMs may omit nuance, particularly regarding areas of uncertainty or controversy. Nevertheless, ophthalmologists could employ AI as a structural scaffold to rapidly generate customized PEMs, with workflows that can be further tailored to individual ophthalmologists and patients. For example, a human–AI hybrid workflow for pre- and postoperative care instructions, adjusted to the literacy level of the target population, has the potential to reduce severe complications such as endophthalmitis or corneal melt. Integration of such systems into electronic medical records could further streamline PEM creation, though physician editing and co-signature would remain essential to minimize harm and mitigate medicolegal liability from potential inaccuracies. Ethical considerations must be addressed, as certain tools lack source citations or may extract information out of context. Consequently, only audited and revised AI-generated materials should be used as final patient education resources due to the dynamic nature of model outputs and the aforementioned limitations.

A key strength of this study is its novel exploration of AI chatbots to enhance the readability of ophthalmology PEMs. However, limitations include the focus solely on readability rather than overall quality, such as content comprehensiveness and accuracy. Additionally, only one follow-up prompt was utilized, and variations in chatbot updates may affect reproducibility. The brevity of outputs from models such as Copilot raises concern for potential omission of essential content; this may reflect a stylistic preference for bullet points over full sentences rather than a true content deficiency, but a more comprehensive analysis is necessary. Additionally, readability algorithms may not fully capture advanced comprehension strategies like analogies or metaphors. Most importantly, human oversight and editing are essential, as oversimplification may risk omitting important safety information.

The average readability of AAO PEMs in this study aligns with previous findings, showing they are more accessible than specialty-specific brochures, which often exceed a 10th-grade reading level. Unlike prior studies focusing solely on AAO materials, this investigation highlights the potential for LLMs to achieve comparable readability (though not at the 6th-grade gold standard) with broader customization and scalability. Such scalability is clinically relevant for various ophthalmic subspecialties, where existing PEMs are scarce, leaving patients with few reliable resources.

The higher-than-desired grade levels in AI-generated PEMs, even with direct prompting, may stem from limitations in prompt engineering and the intrinsic complexity of ophthalmologic topics. Despite these challenges, targeted prompting improved readability. LLMs are also helpful in brainstorming analogies, and these literary devices may enhance understanding in ways not measured by the readability formulas. Additionally, AI models are becoming increasingly accessible to the public, with frequent new models and upgrades. Variability in readability across topics suggests that AI-generated content could be optimized further with additional iterations or customized datasets. Clinicians could use this iterative refinement and prompt engineering to balance readability with accuracy, ensuring critical details (e.g., clinician-specific nuances in clinical diagnosis and management, medication tapering schedules, and signs of urgent complications) are retained.

Even with their limitations, AI chatbot tools are a great starting point for physicians to increase the number of accessible and readable PEMs for patients. Careful prompting can reduce hallucinations, especially if the model is instructed to use specific reference material only and cite sources properly. The authors have created a simple tool, accessible at https://akufta.pythonanywhere.com (Chicago, IL, USA, Version 1, created 22 September 2024), to assess the readability of any body of text and make the creation and evaluation of PEMs easier (Figure 4). This tool could be integrated into clinical workflow, allowing physicians or other healthcare personnel to rapidly evaluate whether discharge instructions or informed consent documents meet literacy benchmarks before distribution.

Future research should evaluate AI-generated PEMs through subjective expert review and validated tools, such as DISCERN or PEMAT, to ensure both readability and quality. Exploring other models and how to correctly optimize prompts for readability would be of interest as well. Ultimately, artificial intelligence can be leveraged to more efficiently create PEMs that meet literacy standards, and therefore facilitate improved adherence, reduced anxiety, and increased patient safety.

AI chatbots show great potential for increasing the availability of easy-to-understand PEMs. While they are ever-evolving, can hallucinate, and their outputs require manual review, they can provide an excellent, efficient starting point for clinicians to create effective and accessible PEMs.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm14196968/s1, Figure S1: Comparison of LLMs by PEM Ophthalmology Topic and Source. (a) Word Count; (b) Flesch Reading Ease Score. * denotes statistical significance (p-value < 0.001).

Author Contributions

Conceptualization, A.Y.K.; methodology, A.Y.K. and A.R.D.; software, A.Y.K.; validation, A.Y.K. and A.R.D.; formal analysis, A.Y.K. and A.R.D.; investigation, A.Y.K.; resources, A.Y.K. and A.R.D.; data curation, A.Y.K.; writing—original draft preparation, A.Y.K.; writing—review and editing, A.Y.K. and A.R.D.; visualization, A.Y.K.; supervision, A.R.D.; project administration, A.Y.K. and A.R.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study, as this retrospective study qualifies for exemption under the U.S. Common Rule (45 CFR 46.104(d)(4)). The project involved secondary analysis of existing medical records; data were abstracted and recorded in de-identified form with no direct identifiers or re-identification keys retained; there was no interaction or intervention with patients and no impact on clinical care. Accordingly, the research posed no more than minimal risk and met criteria for exemption.

Informed Consent Statement

Informed consent was not required for this study due to its retrospective design and the use of anonymized patient data.

Data Availability Statement

Dataset available on request from the authors due to legal reasons regarding proprietary AI models, though raw AI outputs can be closely reproduced with the prompts included in the paper. Apart from the generated patient education materials, no other data were created. Data analysis libraries used are in the public domain, and the Python package used is available at https://github.com/cdimascio/py-readability-metrics (accessed on 22 September 2024).

Acknowledgments

The authors thank Anubhav Pradeep for his biostatistics consultation and verification of our methods. During the preparation of this work, the authors used ChatGPT, Copilot, and Llama in order to create sample PEMs that were used in this analysis. Additionally, ChatGPT was used in some areas to improve language and readability. After using this service, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| PEMs | Patient Education Materials |
| LLM | Large Language Model |
| AAO | American Academy of Ophthalmology |
| SMOG | Simple Measure of Gobbledygook |
| AI | Artificial Intelligence |
| ANOVA | Analysis of Variance |

References

1. Doak, L.G.; Doak, C.C. Lowering the Silent Barriers to Compliance for Patients with Low Literacy Skills. Promot. Health 1987, 8, 6–8.

2. Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs, American Medical Association. Health Literacy: Report of the Council on Scientific Affairs. JAMA 1999, 281, 552–557.

3. Huang, G.; Fang, C.H.; Agarwal, N.; Bhagat, N.; Eloy, J.A.; Langer, P.D. Assessment of Online Patient Education Materials from Major Ophthalmologic Associations. JAMA Ophthalmol. 2015, 133, 449–454.

4. Eid, K.; Eid, A.; Wang, D.; Raiker, R.S.; Chen, S.; Nguyen, J. Optimizing Ophthalmology Patient Education via ChatBot-Generated Materials: Readability Analysis of AI-Generated Patient Education Materials and The American Society of Ophthalmic Plastic and Reconstructive Surgery Patient Brochures. Ophthalmic Plast. Reconstr. Surg. 2023, 40, 212–216.

5. Cheng, B.T.; Kim, A.B.; Tanna, A.P. Readability of Online Patient Education Materials for Glaucoma. J. Glaucoma 2022, 31, 438–442.

6. John, A.M.; John, E.S.; Hansberry, D.R.; Thomas, P.J.; Guo, S. Analysis of Online Patient Education Materials in Pediatric Ophthalmology. J. Am. Assoc. Pediatr. Ophthalmol. Strabismus 2015, 19, 430–434.

7. Dossantos, J.; An, J.; Javan, R. Eyes on AI: ChatGPT’s Transformative Potential Impact on Ophthalmology. Cureus 2023, 15, e40765.

8. Ali, M.J.; Singh, S. ChatGPT and Scientific Abstract Writing: Pitfalls and Caution. Graefes Arch. Clin. Exp. Ophthalmol. 2023, 261, 3205–3206.

9. Teebagy, S.; Colwell, L.; Wood, E.; Yaghy, A.; Faustina, M. Improved Performance of ChatGPT-4 on the OKAP Examination: A Comparative Study with ChatGPT-3.5. J. Acad. Ophthalmol. 2023, 15, e184–e187.

10. Singh, S.; Djalilian, A.; Ali, M.J. ChatGPT and Ophthalmology: Exploring Its Potential with Discharge Summaries and Operative Notes. Semin. Ophthalmol. 2023, 38, 503–507.

11. American Academy of Ophthalmology. Eye Health A–Z. Available online: https://www.aao.org/eye-health/a-z (accessed on 25 September 2024).

12. DiMascio, C. py-readability-metrics. Available online: https://github.com/cdimascio/py-readability-metrics (accessed on 22 September 2024).

13. Charnock, D.; Shepperd, S.; Needham, G.; Gann, R. DISCERN: An Instrument for Judging the Quality of Written Consumer Health Information on Treatment Choices. J. Epidemiol. Community Health 1999, 53, 105–111.

14. Krishna, S.; Agarwal, C.; Lakkaraju, H. Understanding the Effects of Iterative Prompting on Truthfulness. arXiv 2024, arXiv:2402.06625.

15. Jeon, S.; Kim, H.-G. A Comparative Evaluation of Chain-of-Thought-Based Prompt Engineering Techniques for Medical Question Answering. Comput. Biol. Med. 2025, 196, 110614.

16. Windisch, P.; Dennstädt, F.; Koechli, C.; Schröder, C.; Aebersold, D.M.; Förster, R.; Zwahlen, D.R. The Impact of Temperature on Extracting Information from Clinical Trial Publications Using Large Language Models. Cureus 2024, 16, e75748.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).