Artificial Intelligence in Predictive Healthcare: A Systematic Review

Abstract

1. Introduction

2. Methodology

2.1. Selection Criteria and Search Strategy

2.2. Study Review Process and Data Extraction

3. Results

3.1. Healthcare Domains

3.2. Applied Datasets

3.3. Feature Extraction Methods

3.4. Machine Learning Models

3.5. Evaluation Metrics

4. Discussion

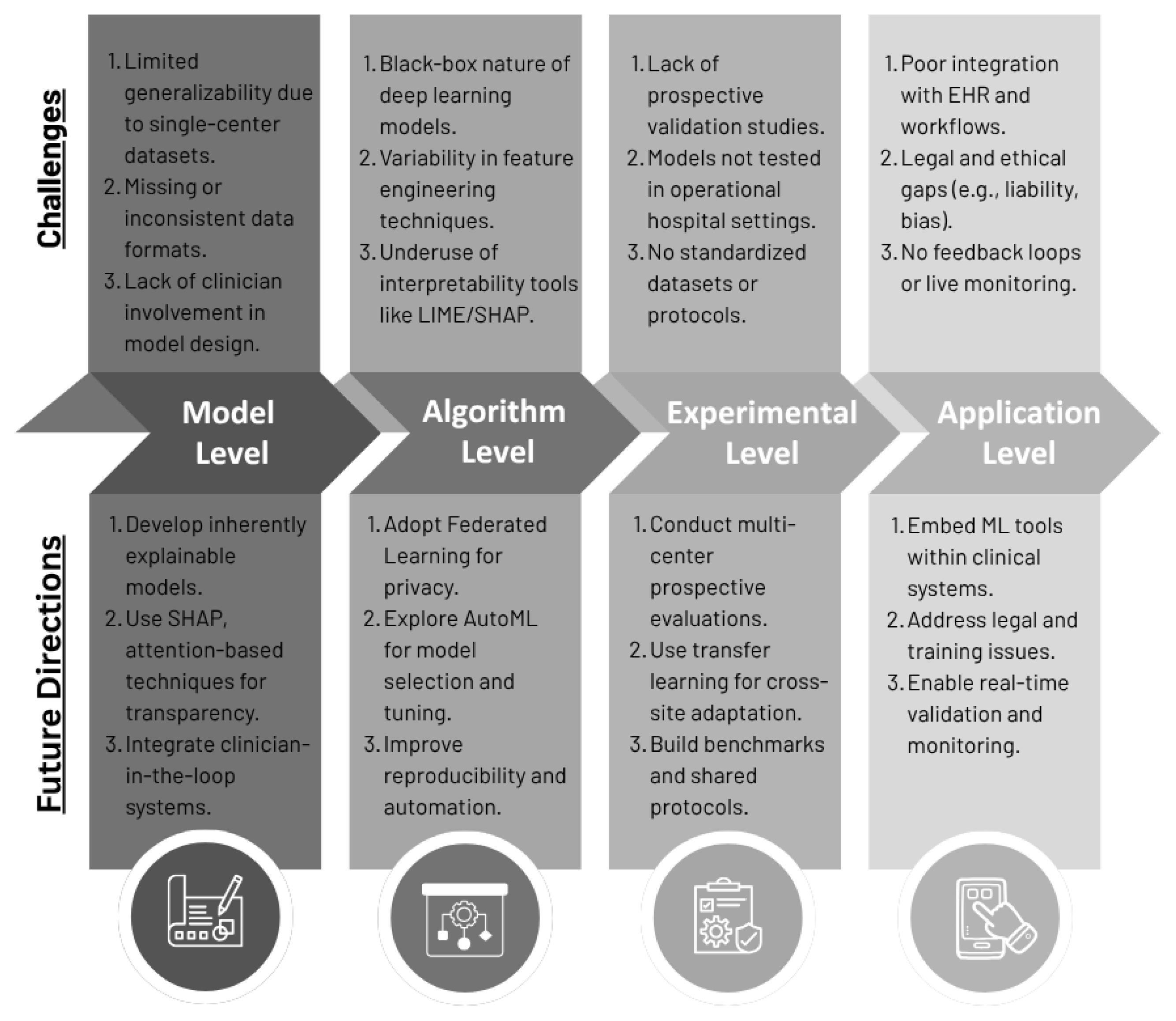

4.1. Challenges and Issues

4.1.1. Data and Generalizability

4.1.2. Algorithm and Interpretability

4.1.3. Clinical Integration and Real-World Use

4.1.4. Privacy, Ethics, and Regulatory Issues

4.2. Future Research Directions

4.2.1. Model Level

4.2.2. Algorithm Level

4.2.3. Experimental Research Level

4.2.4. Application Level

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chang, V.; Bhavani, V.R.; Xu, A.Q.; Hossain, M. An Artificial Intelligence Model for Heart Disease Detection Using Machine Learning Algorithms. Healthc. Anal. 2022, 2, 100016.

2. Hennebelle, A.; Materwala, H.; Ismail, L. HealthEdge: A Machine Learning-Based Smart Healthcare Framework for Prediction of Type 2 Diabetes in an Integrated IoT, Edge, and Cloud Computing System. Procedia Comput. Sci. 2023, 220, 331–338.

3. Kabiru, G.; Zainab, S.; Kingsley, G.; Abubakar, W.; Zayyanu, S.; Auwal Adam, B.; Safiya Bala, B.; Sa’adatu, M.J.; Mustapha, M. Harnessing Machine Learning for Predictive Healthcare: A Path to Efficient Health Systems in Africa. Health Inf. Inf. Manag. 2025, 1, 1–10.

4. Gao, J.; Lu, Y.; Ashrafi, N.; Domingo, I.; Alaei, K.; Pishgar, M. Prediction of Sepsis Mortality in ICU Patients Using Machine Learning Methods. BMC Med. Inf. Decis. Mak. 2024, 24, 228.

5. Li, J.; Xi, F.; Yu, W.; Sun, C.; Wang, X. Real-Time Prediction of Sepsis in Critical Trauma Patients: Machine Learning–Based Modeling Study. JMIR Form. Res. 2023, 7, e42452.

6. Wang, D.; Li, J.; Sun, Y.; Ding, X.; Zhang, X.; Liu, S.; Han, B.; Wang, H.; Duan, X.; Sun, T. A Machine Learning Model for Accurate Prediction of Sepsis in ICU Patients. Front. Public Health 2021, 9, 754348.

7. Yue, S.; Li, S.; Huang, X.; Liu, J.; Hou, X.; Zhao, Y.; Niu, D.; Wang, Y.; Tan, W.; Wu, J. Machine Learning for the Prediction of Acute Kidney Injury in Patients with Sepsis. J. Transl. Med. 2022, 20, 215.

8. Camacho-Cogollo, J.E.; Bonet, I.; Gil, B.; Iadanza, E. Machine Learning Models for Early Prediction of Sepsis on Large Healthcare Datasets. Electronics 2022, 11, 1507.

9. Ghias, N.; Haq, S.U.; Arshad, H.; Sultan, H.; Bashir, F.; Ghaznavi, S.A.; Shabbir, M.; Badshah, Y.; Rafiq, M. Explainable Machine Learning Models to Predict Early Risk of Mortality in ICU: A Multicenter Study. medRxiv 2022.

10. Tang, L.; Li, Y.; Zhang, J.; Zhang, F.; Tang, Q.; Zhang, X.; Wang, S.; Zhang, Y.; Ma, S.; Liu, R.; et al. Machine Learning Model to Predict Sepsis in ICU Patients with Intracerebral Hemorrhage. Sci. Rep. 2025, 15, 16326.

11. Ekundayo, F.; Nyavor, H. AI-Driven Predictive Analytics in Cardiovascular Diseases: Integrating Big Data and Machine Learning for Early Diagnosis and Risk Prediction. Int. J. Res. Publ. Rev. 2024, 5, 1240–1256.

12. Liu, Z.; Shu, W.; Li, T.; Zhang, X.; Chong, W. Interpretable Machine Learning for Predicting Sepsis Risk in Emergency Triage Patients. Sci. Rep. 2025, 15, 887.

13. Komolafe, O.O.; Mei, Z.; Zarate, D.M.; Spangenberg, G.W. Early Prediction of Sepsis: Feature-Aligned Transfer Learning. arXiv 2025, arXiv:2505.02889.

14. Shashikumar, S.P.; Mohammadi, S.; Krishnamoorthy, R.; Patel, A.; Wardi, G.; Ahn, J.C.; Singh, K.; Aronoff-Spencer, E.; Nemati, S. Development and Prospective Implementation of a Large Language Model Based System for Early Sepsis Prediction. npj Digit. Med. 2025, 8, 290.

15. Rakers, M.M.; Van Buchem, M.M.; Kucenko, S.; De Hond, A.; Kant, I.; Van Smeden, M.; Moons, K.G.M.; Leeuwenberg, A.M.; Chavannes, N.; Villalobos-Quesada, M.; et al. Availability of Evidence for Predictive Machine Learning Algorithms in Primary Care: A Systematic Review. JAMA Netw. Open 2024, 7, e2432990.

16. Liew, C.-H.; Ong, S.-Q.; Ng, D.C.-E. Utilizing Machine Learning to Predict Hospital Admissions for Pediatric COVID-19 Patients (PrepCOVID-Machine). Sci. Rep. 2025, 15, 3131.

17. Tran, N.D.T.; Leung, C.K.; Madill, E.W.R.; Binh, P.T. A Deep Learning Based Predictive Model for Healthcare Analytics. In Proceedings of the 2022 IEEE 10th International Conference on Healthcare Informatics (ICHI), Rochester, MN, USA, 11–14 June 2022; pp. 547–549.

18. Badawy, M.; Ramadan, N.; Hefny, H.A. Healthcare Predictive Analytics Using Machine Learning and Deep Learning Techniques: A Survey. J. Electr. Syst. Inf. Technol. 2023, 10, 40.

19. Ben Khalfallah, H.; Jelassi, M.; Demongeot, J.; Bellamine Ben Saoud, N. Advancements in Predictive Analytics: Machine Learning Approaches to Estimating Length of Stay and Mortality in Sepsis. Computation 2025, 13, 8.

20. Thatha, V.N.; Chalichalamala, S.; Pamula, U.; Krishna, D.P.; Chinthakunta, M.; Mantena, S.V.; Vahiduddin, S.; Vatambeti, R. Optimized Machine Learning Mechanism for Big Data Healthcare System to Predict Disease Risk Factor. Sci. Rep. 2025, 15, 14327.

21. Baniecki, H.; Sobieski, B.; Szatkowski, P.; Bombinski, P.; Biecek, P. Interpretable Machine Learning for Time-to-Event Prediction in Medicine and Healthcare. Artif. Intell. Med. 2025, 159, 103026.

22. Imrie, F.; Cebere, B.; McKinney, E.F.; Van Der Schaar, M. AutoPrognosis 2.0: Democratizing Diagnostic and Prognostic Modeling in Healthcare with Automated Machine Learning. PLoS Digit. Health 2023, 2, e0000276.

23. Orhan, F.; Kurutkan, M.N. Predicting Total Healthcare Demand Using Machine Learning: Separate and Combined Analysis of Predisposing, Enabling, and Need Factors. BMC Health Serv. Res. 2025, 25, 366.

24. Mondrejevski, L.; Miliou, I.; Montanino, A.; Pitts, D.; Hollmén, J.; Papapetrou, P. FLICU: A Federated Learning Workflow for Intensive Care Unit Mortality Prediction. arXiv 2022, arXiv:2205.15104.

| Healthcare Domain | References |
|---|---|
| ICU and Critical Care | [4,6,8,10,19] |
| Emergency Department (ED) | [5,12,14] |
| Cardiovascular Diseases | [11,20] |
| Oncology | [17,21] |
| Diabetes and Chronic Diseases | [2,22] |
| COVID-19 | [16,17] |
| General Healthcare/Primary Care | [15,18,23] |
| Other Specific Conditions | [2,7,19] |

| Dataset | Description | References |
|---|---|---|
| MIMIC-III | Medical Information Mart for Intensive Care (MIMIC) is an openly available database comprising de-identified health-related data for over 40,000 patients admitted to critical care units at the Beth Israel Deaconess Medical Center between 2001 and 2012. It includes demographics, bedside vital signs (recorded approximately hourly), lab results, procedures, medications, clinical notes, imaging reports, and mortality outcomes. Available at https://mimic.physionet.org/ (accessed on 19 September 2025) | [7,24] |
| MIMIC-IV | An updated version of MIMIC-III, this openly available database contains ICU records from the same hospital between 2008 and 2019. It reflects a more modern healthcare practice and integrates structured clinical data with improved coding. Available at https://mimic.physionet.org/ (accessed on 19 September 2025) | [4,5,8,10,12] |
| PhysioNet | This dataset was developed for the PhysioNet 2019 Challenge on early prediction of sepsis. It includes time-series data from over 40,000 ICU patients across various hospital systems. Each record contains hourly vital signs (HR, MAP, Resp, O2Sat, Temp), demographics, and timestamps. The goal was to predict sepsis onset within a 6-hour window. Available at https://physionet.org/content/challenge-2019/1.0.0/ (accessed on 19 September 2025) | [9] |
| eICU | This dataset includes over 200,000 ICU admissions from 208 U.S. hospitals between 2014 and 2015. It is used alongside MIMIC-IV for external validation in some studies and provides rich clinical data for predictive model development. Available at https://eicu-crd.mit.edu/ (accessed on 19 September 2025) | [10] |

| Feature Extraction Method | Feature Type | References |
|---|---|---|
| Manual Statistical Aggregation (mean, std, etc.) | Time-series features (e.g., vitals, lab data) | [4,8,19] |
| Recursive Feature Elimination (RFE) | Mixed clinical features (structured data) | [2,11,13] |
| LASSO | High-dimensional EHR features | [10,16] |
| Boruta | Structured EHR data (relevant variables) | [7,16] |
| Principal Component Analysis (PCA) | Imaging or physiological features (dimensionality reduction) | [11] |
| Autoencoders | Signal-based or imaging features (latent representations) | [11,17] |
| SHAP-based Feature Importance | Model-agnostic feature importance across various types | [5,10,22] |
| Filter Methods/Information Gain | Discrete clinical and categorical features | [8] |
| Expert/Clinically Selected Features | Clinical scores, demographic and risk factors | [4,12,14] |
| Feature-Aligned Transfer Learning (FATL) | Harmonized features from heterogeneous datasets | [13] |
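
As an illustration of the first row (manual statistical aggregation), the following is a minimal sketch of how a variable-length series of hourly measurements might be collapsed into fixed-length features. The function name `aggregate_vitals`, the example values, and the choice of population standard deviation are assumptions made for this example and are not taken from any of the reviewed studies.

```python
from statistics import mean, pstdev


def aggregate_vitals(series):
    """Summarize one patient's hourly measurements into fixed-length features.

    `series` maps a signal name (e.g. "HR") to a list of hourly values,
    where None marks an hour with no recorded measurement.
    """
    features = {}
    for name, values in series.items():
        observed = [v for v in values if v is not None]  # drop missing hours
        if not observed:
            continue  # no usable data for this signal
        features[f"{name}_mean"] = mean(observed)
        features[f"{name}_std"] = pstdev(observed)  # population std (assumption)
        features[f"{name}_min"] = min(observed)
        features[f"{name}_max"] = max(observed)
    return features


# Hypothetical patient record: heart rate and temperature over a few hours.
patient = {"HR": [80, 90, None, 100], "Temp": [37.0, 38.0, 39.0]}
features = aggregate_vitals(patient)
```

Each signal contributes the same four features regardless of how many hours were recorded, which is what lets tabular models such as Random Forest or XGBoost consume time-series data.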

| ML Model | Description | References |
|---|---|---|
| Random Forest (RF) | Ensemble of decision trees combined to enhance prediction accuracy. | [4,6,8,10] |
| Logistic Regression (LR) | Predicts probability of binary outcomes. | [8,23] |
| Support Vector Machine (SVM) | Finds the optimal hyperplane separating two classes using support vectors. | [8,12] |
| XGBoost | Gradient-boosted decision tree algorithm known for efficiency and high performance on large datasets. | [4,8] |
| Neural Networks (generic) | Deep-learning models effective for complex, high-dimensional data. | [11,24] |
| Decision Tree | Simple supervised models that split data by feature values using tree-like decision rules. | [4,8] |
| Gradient Boosting | Sequential ensemble method for classification and regression that minimizes prediction errors. | [4,8] |
| Naive Bayes | Probabilistic classifier effective when features are conditionally independent. | [8,19] |
| K-Nearest Neighbors (k-NN) | Assigns a label based on the majority class of the nearest neighbors. | [8] |
| Multi-Layer Perceptron (MLP) | Feedforward neural network with input, hidden, and output layers. | [4,8] |
| LightGBM | Optimized gradient-boosting algorithm with leaf-wise tree growth for faster training and improved accuracy. | [4] |
| Convolutional Neural Networks (CNNs) | Deep learning models for processing spatial data. | [11] |
| Recurrent Neural Networks (RNNs) | Process sequential data to capture temporal dependencies. | [24] |
| Long Short-Term Memory (LSTM) | RNN variant that captures long-term dependencies over time. | [24] |
| Deep Belief Network (DBN) | Extracts hierarchical features from complex datasets. | [20] |
| Autoencoder | Unsupervised models for dimensionality reduction and feature extraction. | [17] |
| Ensemble Methods (Custom) | Generally high accuracy and robust predictions due to combining multiple models; reduces overfitting compared to single models. | [22] |
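
To make one of the descriptions above concrete, the k-NN rule (label by majority class of the nearest neighbors) can be sketched in a few lines. This is an illustrative toy, not the implementation used in any reviewed study: the function `knn_predict`, the two features (heart rate and lactate), and all values are hypothetical, and the features are left unscaled for brevity even though scaling normally matters for distance-based models.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to `query`."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    top_k_labels = [label for _, label in distances[:k]]
    return Counter(top_k_labels).most_common(1)[0][0]


# Hypothetical training data: (heart rate, lactate) with 1 = sepsis, 0 = no sepsis.
points = [(70, 1.0), (75, 1.2), (110, 4.0), (120, 3.5), (115, 4.2)]
labels = [0, 0, 1, 1, 1]

high_risk = knn_predict(points, labels, (112, 3.8))
low_risk = knn_predict(points, labels, (72, 1.1))
```

With `k=3`, the first query sits among the three positive examples and the second has two negative neighbors out of three, so the votes go to class 1 and class 0 respectively.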

| Domain | ML Models | Best Model(s) | No. of Studies | References |
|---|---|---|---|---|
| ICU and Critical Care | RF, XGBoost, LR, Ensemble | XGBoost, Ensemble | 5 | [4,6,8,10,19] |
| Emergency Department (ED) | Deep Learning, RF | Deep Learning | 3 | [5,12,14] |
| Cardiovascular Diseases | LR, DL (imaging), RF | DL (imaging) | 2 | [11,20] |
| Oncology | ML + Genomic, Survival models | Integrated genomic ML | 2 | [17,21] |
| Diabetes and Chronic Diseases | IoT + ML pipelines, RF, SVM | RF/IoT–ML hybrid | 2 | [2,22] |
| COVID-19 | RF, DL, Ensemble | DL-based classifiers | 2 | [16,17] |
| General Healthcare/Primary Care | Risk scoring models, RF, LR | Risk scoring + RF | 3 | [15,18,23] |
| Other Specific Conditions | GBM, RF | GBM | 3 | [2,7,19] |

| Evaluation Metric | Description | No. of Studies | References |
|---|---|---|---|
| AUC/AUROC/ROC | It evaluates a model’s ability to differentiate between positive and negative cases, including on imbalanced data. | 15 | [2,3,4,5,6,8,9,10,11,14,16,17,19,20,23] |
| F1-score | It balances precision and recall, making it ideal for scenarios where both false positives and false negatives can have serious clinical consequences. | 13 | [2,3,4,5,6,8,9,10,11,14,17,20,23] |
| Accuracy | It is commonly reported but can be misleading in healthcare with imbalanced data; it may appear high even when critical positive cases are missed. | 12 | [2,3,4,5,9,14,16,17,19,20,21,23] |
| Recall/Sensitivity | It measures the model’s ability to correctly identify true positive cases, which helps ensure that high-risk patients are not missed. | 11 | [2,3,4,6,8,9,11,14,20,21,23] |
| Precision/PPV | It measures how many of the predicted positive cases are actually true positives, helping to reduce false alarms and avoid unnecessary treatments. | 10 | [2,3,9,10,11,14,16,19,20,23] |
| Specificity | It measures the model’s ability to correctly identify negative cases, helping to prevent unnecessary worry for patients who do not have the condition. | 4 | [6,14,21,22] |
| Negative Predictive Value | NPV represents the proportion of negative predictions that are correct. | 3 | [6,14,22] |
| Brier Score | It measures the accuracy of predicted probabilities. | 3 | [14,22,24] |
| C-index | It is suitable for time-to-event or survival prediction models. | 3 | [21,22,24] |
| AUPRC | It focuses on the precision-recall tradeoff, especially useful for imbalanced datasets. | 3 | [5,8,21] |
| Calibration Curves | They assess whether predicted probabilities match observed outcomes. | 2 | [14,24] |
| Error Rate | It is the overall proportion of incorrect predictions. | 1 | [20] |
| Confidence Interval | CI indicates the uncertainty range around metric estimates. | 1 | [20] |
| p-value | It is used for hypothesis testing to determine statistical significance. | 1 | [20] |
| Decision Curve Analysis | DCA evaluates the clinical benefit of predictive models across different threshold probabilities. | 1 | [14] |
| Integrated Brier Score | IBS is an integrated measure of prediction accuracy over time. | 1 | [22] |
| Integrated AUC | iAUC is the time-integrated area under the ROC curve, assessing model performance longitudinally. | 1 | [22] |
| Absolute Calibration Error | ACE measures the absolute difference between predicted probabilities and observed outcomes. | 1 | [8] |
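
The relationships among the most frequently reported metrics can be illustrated with a short worked example. The sketch below uses only the Python standard library and hypothetical labels and probabilities; the function names, the 0.5 decision threshold, and the data are assumptions for illustration and do not reproduce results from any reviewed study. It also assumes both classes and both predicted classes are present, so no denominator is zero.

```python
def classification_metrics(y_true, y_pred):
    """Threshold-based metrics derived from the 2x2 confusion matrix."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    sensitivity = tp / (tp + fn)  # recall
    ppv = tp / (tp + fp)          # precision
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "ppv": ppv,
        "npv": tn / (tn + fn),
        "f1": 2 * ppv * sensitivity / (ppv + sensitivity),
    }


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


def auroc(y_true, y_prob):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical imbalanced cohort: 3 positive cases out of 10 patients.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_prob = [0.9, 0.6, 0.4, 0.7, 0.3, 0.2, 0.2, 0.1, 0.1, 0.05]
y_pred = [1 if p >= 0.5 else 0 for p in y_prob]  # 0.5 threshold (assumption)

metrics = classification_metrics(y_true, y_pred)
brier = brier_score(y_true, y_prob)
auc = auroc(y_true, y_prob)
```

In this toy cohort accuracy is 0.8 while sensitivity is only 2/3, which mirrors the table's caution that accuracy can look acceptable on imbalanced data even when positive cases are missed.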

| RQ | Addressed In | Summary of Findings |
|---|---|---|
| RQ1 | Healthcare Domains (Section 3.1) | Predictive ML has been applied in various healthcare domains such as ICU (sepsis, mortality), oncology, cardiology, diabetes management, and COVID-19. ICU and chronic disease studies [4,6,8,10,19] were the most frequent focus. |
| RQ2 | Machine Learning Models (Section 3.4) | The most commonly used algorithm was Random Forest (RF) [4,6,8,10]. Deep learning models such as CNNs and LSTMs were increasingly applied in imaging and time-series tasks. |
| RQ3 | Evaluation Metrics (Section 3.5) | More than half of the studies used metrics such as AUC, accuracy, and F1-score [2,3,4,5,6,8,9,10,11,14,16,17,19,20,23]. Some studies also reported discrimination and calibration metrics such as the C-index and Brier score [22,24], particularly in survival analysis. |
| RQ4 | Challenges and Issues (Section 4.1) | Key challenges included limited and imbalanced datasets, lack of generalizability across populations, poor interpretability of models, integration difficulties into clinical practice, and privacy concerns. |
| RQ5 | Future Research Directions (Section 4.2) | Future directions highlighted the need for federated learning to address privacy, AutoML for easier adoption, and integration with clinical workflows for real-world deployment. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Nafjan, A.; Aljuhani, A.; Alshebel, A.; Alharbi, A.; Alshehri, A. Artificial Intelligence in Predictive Healthcare: A Systematic Review. J. Clin. Med. 2025, 14, 6752. https://doi.org/10.3390/jcm14196752
