Building a Machine Learning Model to Predict Postpartum Depression from Electronic Health Records in a Tertiary Care Setting

Abstract

1. Introduction

2. Materials and Methods

2.1. Cohort Selection

(a)

| Variable | PPD (N = 1986; 22.1%) | Non-PPD (N = 7008; 77.9%) | p-Value |
|---|---|---|---|
| Mother age | 28.2 ± 5.9 | 28.9 ± 5.9 | p < 0.001 |
| Gestation age at delivery (in days) | 268.0 ± 13.0 | 269.5 ± 10.2 | p < 0.001 |
| Parity | 1 (0–2) | 1 (0–2) | p = 0.002 |
| BMI | 32.1 ± 8.0 | 31.5 ± 12.4 | p = 0.005 |
| Mother height | 64.2 ± 2.8 | 63.9 ± 2.8 | p < 0.001 |
| Prenatal care visit | | | |
| Counts of visits | 36.6 ± 16.3 | 32.5 ± 14.3 | p < 0.001 |
| Counts of Ambulatory Visit (AV) | 17.8 ± 7.8 | 16.3 ± 6.9 | p < 0.001 |
| Counts of Inpatient Hospital Stay (IP) | 0.7 ± 0.7 | 0.6 ± 0.7 | p < 0.001 |
| Counts of Emergency Department (ED) visits | 0.6 ± 1.3 | 0.4 ± 0.9 | p < 0.001 |
| Counts of Telehealth (TH) visits | 0.2 ± 0.9 | 0.1 ± 0.6 | p < 0.001 |
| Counts of Other (OT) visits | 17.3 ± 8.6 | 15.1 ± 7.8 | p < 0.001 |
| PHQ * score max | | | |
| phq_21012948 | 0.4 ± 0.9 | 0.1 ± 0.5 | p < 0.001 |
| phq_21012949 | 0.5 ± 0.9 | 0.1 ± 0.5 | p < 0.001 |
| phq_21012950 | 1.8 ± 1.2 | 1.3 ± 1.2 | p < 0.001 |
| phq_21012951 | 2.0 ± 1.1 | 1.5 ± 1.2 | p < 0.001 |
| phq_21012953 | 1.5 ± 1.2 | 1.0 ± 1.2 | p < 0.001 |
| phq_21012954 | 1.4 ± 1.2 | 0.9 ± 1.1 | p < 0.001 |
| phq_21012955 | 1.3 ± 1.2 | 0.9 ± 1.1 | p < 0.001 |
| phq_21012956 | 0.9 ± 1.1 | 0.6 ± 1.0 | p < 0.001 |
| phq_21012958 | 0.3 ± 0.7 | 0.2 ± 0.6 | p = 0.041 |
| phq_21012959 | 11.9 ± 6.8 | 8.6 ± 6.7 | p < 0.001 |
| Edinburgh Postnatal Depression Screen total score max ** | | | |
| EPDS_99046_max | 7.9 ± 7.0 | 3.7 ± 4.4 | p < 0.001 |
| EPDS_71354_max | 5.1 ± 5.8 | 1.9 ± 3.1 | p < 0.001 |

(b)

| Variable | PPD (N = 1986; 22.1%) | Non-PPD (N = 7008; 77.9%) | OR (CI) |
|---|---|---|---|
| Mother self-reported race/ethnicity | | | |
| Black | 463 (23.3) | 1497 (21.4) | 1.12 (0.99, 1.26) |
| Hispanic | 196 (9.9) | 1287 (18.4) | 0.49 (0.42, 0.57) |
| White | 1153 (58.1) | 3447 (49.2) | 1.43 (1.29, 1.58) |
| Other/Unknown | 190 (9.6) | 891 (12.7) | 0.73 (0.62, 0.86) |
| Insurance type | | | |
| Medicaid | 971 (48.9) | 3340 (47.7) | 1.05 (0.95, 1.16) |
| Managed Care (Private) | 1075 (54.1) | 3627 (51.8) | 1.10 (1.00, 1.22) |
| Self-Pay | 972 (48.9) | 3718 (53.1) | 0.85 (0.77, 0.94) |
| Legal Liability/Liability Insurance | 13 (0.7) | 54 (0.8) | 0.85 (0.46, 1.56) |
| Medicare | 21 (1.1) | 38 (0.5) | 1.96 (1.15, 3.35) |
| Private health insurance, other commercial indemnity | 41 (2.1) | 142 (2.0) | 1.02 (0.72, 1.45) |
| TRICARE (CHAMPUS) | 18 (0.9) | 64 (0.9) | 0.99 (0.59, 1.68) |
| Other Government (Federal, State, Local not specified) | 26 (1.3) | 96 (1.4) | 0.96 (0.62, 1.48) |
| Worker’s Compensation | 6 (0.3) | 19 (0.3) | 1.11 (0.44, 2.79) |
| Medicaid Applicant | 17 (0.9) | 123 (1.8) | 0.48 (0.29, 0.80) |
| Delivery mode | | | |
| Vaginal, Spontaneous | 1086 (54.7) | 4140 (59.1) | 0.84 (0.76, 0.92) |
| C-Section, Low Transverse | 764 (38.5) | 2413 (34.4) | 1.19 (1.07, 1.32) |
| Vaginal, Vacuum (Extractor) | 31 (1.6) | 145 (2.1) | 0.75 (0.51, 1.11) |
| VBAC, Spontaneous | 25 (1.3) | 105 (1.5) | 0.84 (0.54, 1.30) |
| Vaginal, Forceps | 27 (1.4) | 65 (0.9) | 1.47 (0.94, 2.31) |
| C-Section, Classical | 16 (0.8) | 26 (0.4) | 2.18 (1.17, 4.07) |
| C-Section, Low Vertical | 10 (0.5) | 31 (0.4) | 1.14 (0.56, 2.33) |
| Vaginal, Breech | 14 (0.7) | 23 (0.3) | 2.16 (1.11, 4.20) |
| C-Section, Other Specified Type | 4 (0.2) | 21 (0.3) | 0.67 (0.23, 1.96) |
| C-Section, Unspecified | 3 (0.2) | 13 (0.2) | 0.81 (0.23, 2.86) |
| Smoking status | | | |
| smoking | 772 (38.9) | 2036 (29.1) | 1.55 (1.40, 1.72) |
| tobacco | 16 (0.8) | 48 (0.7) | 1.18 (0.67, 2.08) |
| Obesity from diagnosis code | | | |
| morbid (E66.01) | 374 (18.8) | 1006 (14.4) | 1.38 (1.21, 1.58) |
| obese (E66.09, E66.8, E66.9) | 610 (30.7) | 1904 (27.2) | 1.19 (1.07, 1.33) |
| overweight (E66.3) | 27 (1.4) | 76 (1.1) | 1.26 (0.81, 1.96) |
| Obesity complicating pregnancy, childbirth, and the puerperium (O99.21) | 843 (42.4) | 2665 (38.0) | 1.20 (1.09, 1.33) |
| Delivery year | | | |
| 2018–2019 | 357 (18.0) | 1159 (16.5) | 1.11 (0.97, 1.26) |
| 2020 | 489 (24.6) | 1619 (23.1) | 1.09 (0.97, 1.22) |
| 2021 | 654 (32.9) | 2314 (33.0) | 1.00 (0.90, 1.11) |
| 2022 | 344 (17.3) | 1326 (18.9) | 0.90 (0.79, 1.02) |
| 2023 | 142 (7.2) | 590 (8.4) | 0.84 (0.69, 1.01) |
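The unadjusted odds ratios in panel (b) can be recomputed from the counts in each row. The following is a minimal sketch, assuming a standard 2×2 table and a Wald 95% confidence interval on the log-odds scale; the table does not state which interval method was used, and the function name `odds_ratio_wald` is illustrative only.

```python
import math


def odds_ratio_wald(exposed_cases, total_cases, exposed_controls, total_controls, z=1.96):
    """Unadjusted odds ratio with a Wald confidence interval (assumed method)."""
    a = exposed_cases                      # PPD, characteristic present
    b = total_cases - exposed_cases        # PPD, characteristic absent
    c = exposed_controls                   # non-PPD, characteristic present
    d = total_controls - exposed_controls  # non-PPD, characteristic absent
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hispanic row of panel (b): 196 of 1986 PPD vs. 1287 of 7008 non-PPD
hispanic = odds_ratio_wald(196, 1986, 1287, 7008)
print("OR = %.2f (%.2f, %.2f)" % hispanic)
```

For the Hispanic row this yields an odds ratio of about 0.49, in line with the tabulated value. Rows with very small counts may call for an exact method instead of the Wald approximation.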

| Method for Constructing the Target Variable | N (pos%) | AUC | Specificity | Sensitivity |
|---|---|---|---|---|
| 1. F53 only | 12,284 (9.9%) | 0.699 ± 0.011 | 0.930 ± 0.047 | 0.236 ± 0.090 |
| 2. EPDS ≥ 10 only | 8809 (15.6%) | 0.728 ± 0.020 | 0.886 ± 0.036 | 0.358 ± 0.094 |
| 3. PHQ9 ≥ 10 only | 942 (45.1%) | 0.661 ± 0.022 | 0.666 ± 0.071 | 0.541 ± 0.054 |
| 4. EPDS ≥ 10 or PHQ9 ≥ 10 | 8994 (18.3%) | 0.739 ± 0.014 | 0.893 ± 0.033 | 0.380 ± 0.074 |
| 5. F53 or EPDS ≥ 10 or PHQ9 ≥ 10 | 8994 (22.1%) | 0.733 ± 0.008 | 0.858 ± 0.030 | 0.446 ± 0.053 |
| 6. Same as 5, except removing patients with F32, F33, F34.1 or F53.0, or EPDS ≥ 10 or PHQ9 ≥ 10 during pregnancy | 7105 (15.8%) | 0.659 ± 0.019 | 0.890 ± 0.033 | 0.265 ± 0.063 |
| 7. F53 and other diagnosis code for depression (F32, F33, F34.1) | 12,284 (15.9%) | 0.754 ± 0.010 | 0.881 ± 0.024 | 0.450 ± 0.046 |
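The labeling rules in rows 1 to 5 of the table above combine a diagnosis code with screening-score thresholds. The sketch below shows one way these rules could be expressed as Boolean logic. It assumes each patient is a dictionary with the hypothetical fields `icd_codes`, `epds_max`, and `phq9_max` covering the postpartum window; none of these names come from the source. It treats a missing score as negative and does not reproduce the cohort restrictions implied by the differing N values.

```python
def has_f53(icd_codes):
    # Prefix match, so a subcode such as "F53.0" also counts as F53
    return any(code.startswith("F53") for code in icd_codes)


def ppd_label(record, method):
    """Return 1 (PPD) or 0 (non-PPD) under labeling methods 1-5 of the table."""
    f53 = has_f53(record.get("icd_codes", []))
    epds = record.get("epds_max")
    phq9 = record.get("phq9_max")
    # A missing screening score is treated as negative (an assumption of this sketch)
    epds_pos = epds is not None and epds >= 10
    phq9_pos = phq9 is not None and phq9 >= 10
    rules = {
        1: f53,                           # F53 only
        2: epds_pos,                      # EPDS >= 10 only
        3: phq9_pos,                      # PHQ9 >= 10 only
        4: epds_pos or phq9_pos,          # either screen positive
        5: f53 or epds_pos or phq9_pos,   # diagnosis code or either screen
    }
    return int(rules[method])


# Hypothetical patient: no F53 code, EPDS of 12, no PHQ-9 on file
example = {"icd_codes": ["O99.21"], "epds_max": 12, "phq9_max": None}
labels = {m: ppd_label(example, m) for m in range(1, 6)}
```

Under these assumptions the example patient is positive for methods 2, 4, and 5 and negative for methods 1 and 3, which illustrates why the positive rate changes with the definition.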

2.2. Constructing the Primary Data Source

2.3. Machine Learning Model

2.3.1. Target Variable for Predictive Modeling

- (a)

- (b)

- (c)

- (d)

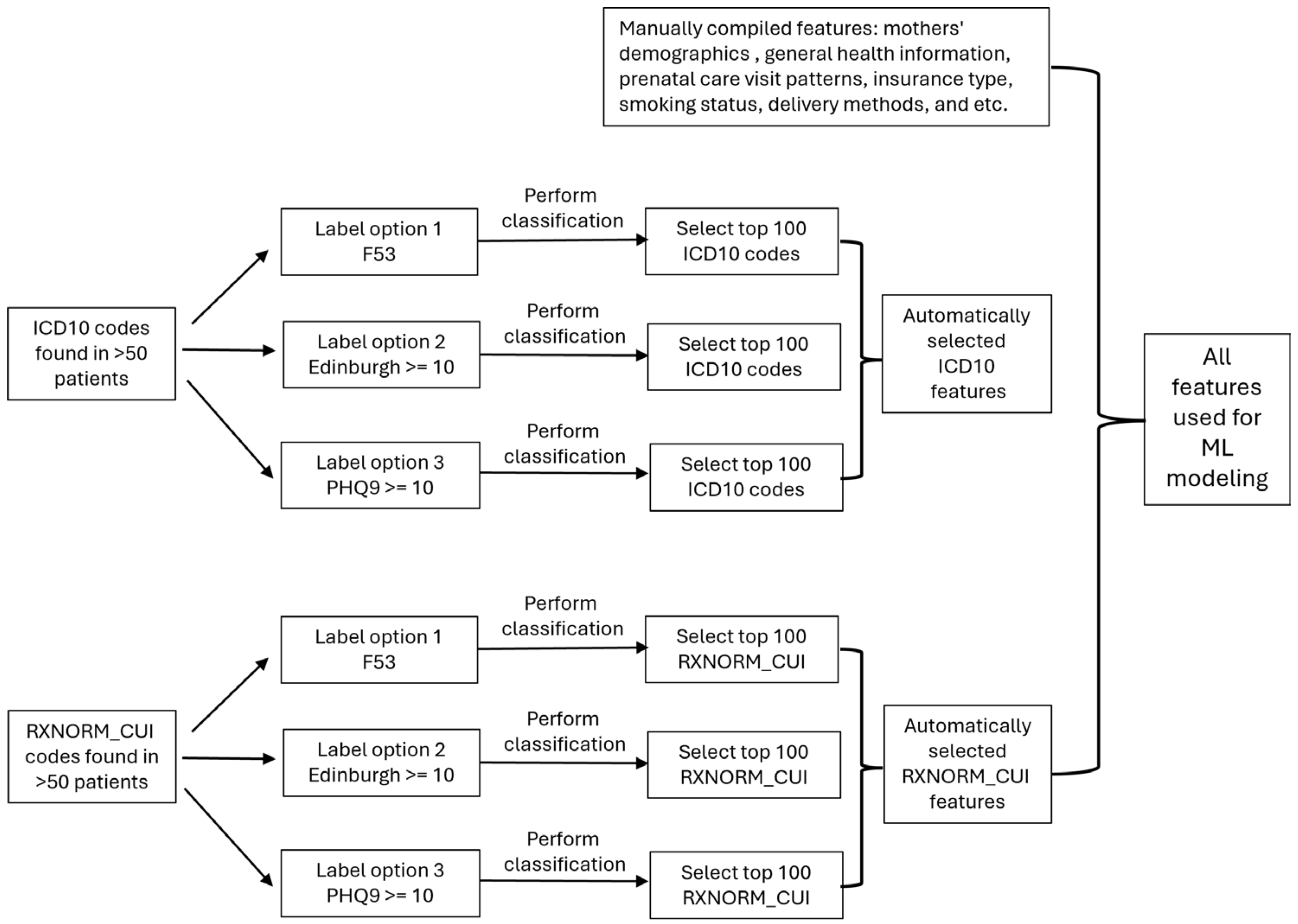

2.3.2. Feature Extraction and Selection

2.3.3. Classification Modeling

2.3.4. Evaluation of Model

2.4. External Data: Census Tract Data

2.5. External Data: Pregnancy Risk Assessment Monitoring System (PRAMS)

3. Results

3.1. Different Methods for Creating the Target Variables

3.2. Feature Importance

3.3. Comparison of Model Performance with and Without Census Tract Data

3.4. Using PRAMS to Build a Model to Make a Prediction on the WFU Dataset

3.5. Model Performance Among Different Races

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PPD | Postpartum depression |
| ML | Machine Learning |
| EHR | Electronic Health Record |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| PRAMS | Pregnancy Risk Assessment Monitoring System |
| PCORnet | Patient-Centered Clinical Research Network |
| ICD | International Classification of Diseases |
| EPDS | Edinburgh Postnatal Depression Scale |
| PHQ | Patient Health Questionnaire |
| SHAP | Shapley Additive Explanations |
| WFU | Wake Forest University |
| SVI | Social Vulnerability Index |
| SDOH | Social Determinants of Health Database |
| OR | Unadjusted Odds Ratio |
| SMOTE | Synthetic Minority Over-sampling Technique |


| Dataset | N (pos%) | AUC | Specificity | Sensitivity |
|---|---|---|---|---|
| A: Baseline | 7946 (22.8%) | 0.725 ± 0.011 | 0.845 ± 0.024 | 0.453 ± 0.034 |
| B: Baseline with tract ID | 5153 (23.4%) | 0.714 ± 0.022 | 0.823 ± 0.044 | 0.456 ± 0.046 |
| C: Baseline with tract ID + census tract | 5153 (23.4%) | 0.707 ± 0.019 | 0.835 ± 0.052 | 0.430 ± 0.083 |
| D: Baseline + census tract (filled with mean) | 7946 (22.8%) | 0.721 ± 0.008 | 0.830 ± 0.039 | 0.476 ± 0.061 |
| E: Baseline + census tract (filled with zip) | 7946 (22.8%) | 0.719 ± 0.008 | 0.826 ± 0.024 | 0.486 ± 0.049 |
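Dataset D in the table above keeps all patients by filling missing census tract features with a mean. A minimal sketch of column-wise mean imputation follows; the column name `median_income` and the helper `fill_with_column_mean` are hypothetical, and the source does not say whether the mean was computed on the full dataset or on training folds only.

```python
def fill_with_column_mean(rows, columns):
    """Return copies of rows with None in the given columns replaced by the column mean."""
    means = {}
    for col in columns:
        observed = [row[col] for row in rows if row[col] is not None]
        # If a column has no observed values, leave it as None
        means[col] = sum(observed) / len(observed) if observed else None
    filled = []
    for row in rows:
        new_row = dict(row)  # copy so the input rows are not modified
        for col in columns:
            if new_row[col] is None:
                new_row[col] = means[col]
        filled.append(new_row)
    return filled


# Hypothetical patients; the second has no census tract match
patients = [
    {"id": 1, "median_income": 40000.0},
    {"id": 2, "median_income": None},
    {"id": 3, "median_income": 60000.0},
]
imputed = fill_with_column_mean(patients, ["median_income"])
```

In a cross-validated evaluation, the mean would usually be estimated on the training portion of each fold and then applied to the held-out portion, so that no information leaks from the test data.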

| Dataset | N (pos%) | AUC | Specificity | Sensitivity |
|---|---|---|---|---|
| PRAMS with all features | 41,948 (11.0%) | 0.730 ± 0.008 | 0.836 ± 0.040 | 0.458 ± 0.059 |
| PRAMS with top 50 features | 41,948 (11.0%) | 0.729 ± 0.009 | 0.750 ± 0.018 | 0.584 ± 0.030 |
| PRAMS with common features | 85,259 (12.7%) | 0.673 ± 0.004 | 0.653 ± 0.009 | 0.594 ± 0.008 |
| WFU with common features | 3063 (7.4%) | 0.646 ± 0.002 | 0.554 ± 0.011 | 0.643 ± 0.020 |
| PRAMS with common features + INCOME7 | 80,193 (12.8%) | 0.682 ± 0.005 | 0.650 ± 0.009 | 0.610 ± 0.015 |
| WFU with common features + INCOME7 | 2748 (7.8%) | 0.630 ± 0.002 | 0.617 ± 0.011 | 0.571 ± 0.016 |
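The AUC, specificity, and sensitivity columns in these tables are reported as a value ± a spread. The sketch below shows how such figures could be computed, assuming the ± values are the mean and sample standard deviation across cross-validation folds, which the tables do not state. All function names and the toy data are illustrative.

```python
from statistics import mean, stdev


def sensitivity_specificity(y_true, y_pred):
    """Sensitivity (true positive rate) and specificity (true negative rate)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def summarize(fold_values):
    """Mean and sample standard deviation over folds (assumed reporting convention)."""
    return mean(fold_values), stdev(fold_values)


# Toy fold: true labels and predicted risk scores, thresholded at 0.5
y_true = [1, 0, 1, 0, 0]
scores = [0.9, 0.2, 0.4, 0.5, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

fold_auc = auc(y_true, scores)
fold_sens, fold_spec = sensitivity_specificity(y_true, y_pred)
auc_mean, auc_sd = summarize([0.72, 0.74, 0.73])  # hypothetical per-fold AUCs
```

Sensitivity and specificity depend on the chosen threshold while AUC does not, which is why rows with similar AUC in the tables can show quite different sensitivity and specificity.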

| Dataset | Subset for Prediction | N (pos%) | AUC | p Value |
|---|---|---|---|---|
| WFU | Hispanic | 1483 (13.2%) | 0.713 ± 0.040 | 0.170 |
| WFU | Black | 1960 (23.6%) | 0.726 ± 0.029 | 0.400 |
| WFU | White | 4600 (25.1%) | 0.722 ± 0.016 | reference |
| WFU downsampled | Hispanic | 1200 (13.2%) | 0.703 ± 0.047 | 0.275 |
| WFU downsampled | Black | 1200 (23.6%) | 0.706 ± 0.039 | 0.538 |
| WFU downsampled | White | 1200 (24.5%) | 0.712 ± 0.041 | reference |
| PRAMS selected race/ethnicity only | Hispanic | 6539 (11.1%) | 0.699 ± 0.019 | <0.01 |
| PRAMS selected race/ethnicity only | Black | 7740 (15.6%) | 0.682 ± 0.016 | <0.01 |
| PRAMS selected race/ethnicity only | White | 33,307 (10.0%) | 0.735 ± 0.010 | reference |
| PRAMS selected race/ethnicity only downsampled | Hispanic | 6000 (11.0%) | 0.697 ± 0.022 | <0.01 |
| PRAMS selected race/ethnicity only downsampled | Black | 6000 (15.5%) | 0.677 ± 0.024 | <0.01 |
| PRAMS selected race/ethnicity only downsampled | White | 6000 (10.7%) | 0.714 ± 0.024 | reference |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, Z.; Horvath, M.; Stamilio, D.M.; Sekyere, K.; Gurcan, M.N. Building a Machine Learning Model to Predict Postpartum Depression from Electronic Health Records in a Tertiary Care Setting. J. Clin. Med. 2025, 14, 6644. https://doi.org/10.3390/jcm14186644
