Evaluating the Concordance Between ChatGPT and Multidisciplinary Teams in Breast Cancer Treatment Planning: A Study from Bosnia and Herzegovina

Abstract

1. Introduction

2. Materials and Methods

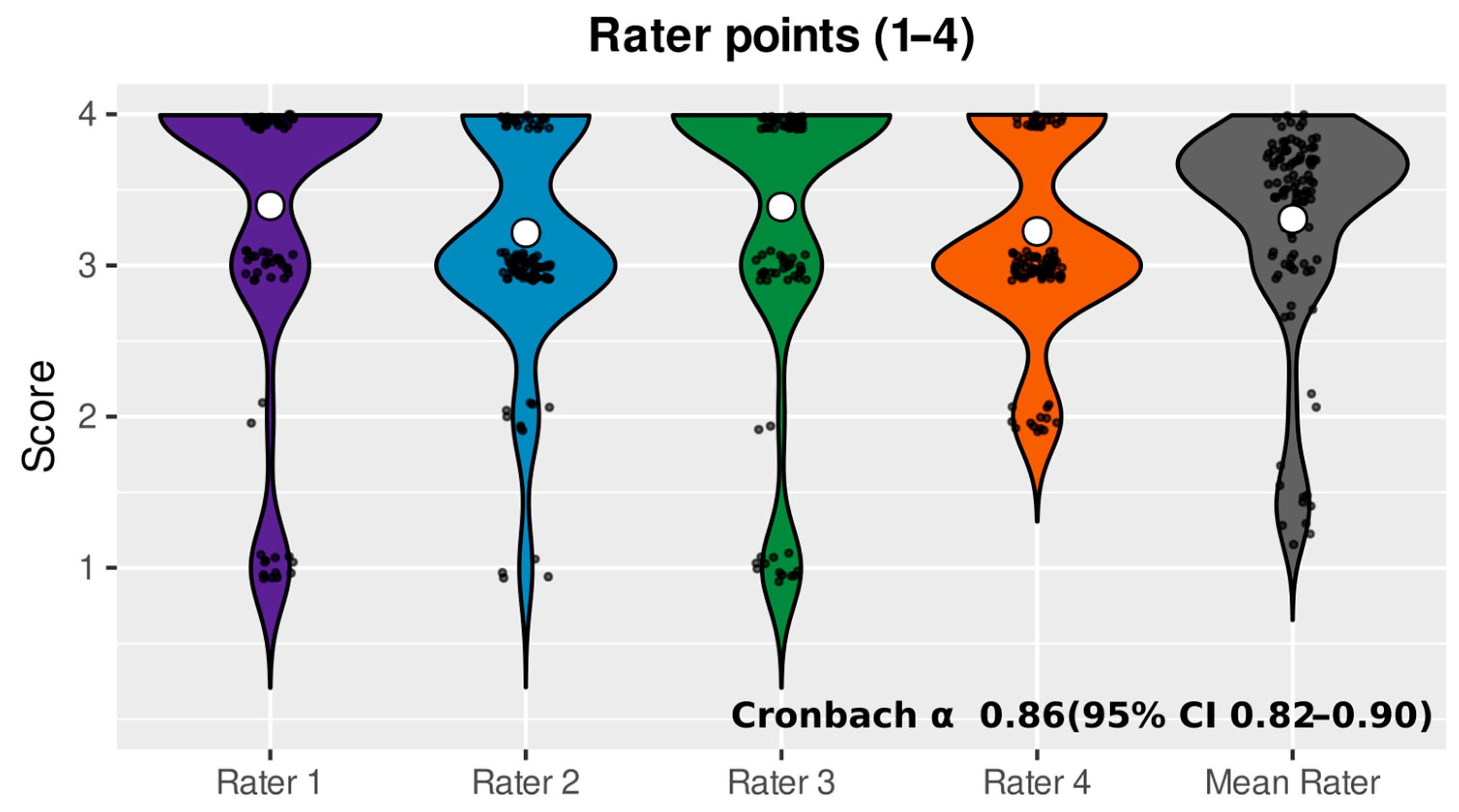

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| MDT | Multidisciplinary Team |
| LMIC | Low- and Middle-Income Country |
| ER | Estrogen Receptor |
| PR | Progesterone Receptor |
| HER2 | Human Epidermal Growth Factor Receptor 2 |
| AC-T | Doxorubicin (Adriamycin), Cyclophosphamide, followed by Taxane |
| TCHP | Docetaxel, Carboplatin, Trastuzumab, and Pertuzumab |
| FISH | Fluorescence In Situ Hybridization |


| Variable | Category | N (%) |
|---|---|---|
| Age (years), median (IQR) | | 60 (50–70) |
| Marital status | Married | 71 (78.0) |
| | Widowed | 19 (20.9) |
| | Divorced | 1 (1.1) |
| Menopausal status | Premenopausal | 6 (6.6) |
| | Postmenopausal | 76 (83.5) |
| | Unknown | 9 (9.9) |
| Partus | Yes | 42 (46.2) |
| | No | 18 (19.8) |
| | Unknown | 31 (34.0) |
| Comorbidities | 0 | 37 (40.7) |
| | 1 | 30 (33.0) |
| | 2 | 13 (14.3) |
| | 3+ | 11 (12.0) |
| Tumor type | Ductal | 66 (72.5) |
| | Lobular | 11 (12.1) |
| | Other | 14 (15.4) |
| Histological grading (NG) | In situ | 1 (1.1) |
| | 1 | 3 (3.3) |
| | 2 | 61 (67.0) |
| | 3 | 23 (25.3) |
| | Unknown | 3 (3.3) |
| ER receptor | Positive | 70 (76.9) |
| | Negative | 21 (23.1) |
| PR receptor | Positive | 63 (69.2) |
| | Negative | 28 (30.8) |
| HER2 receptor | Positive | 13 (14.3) |
| | Negative | 78 (85.7) |
| Ki-67 index (%) | ≤20 | 52 (57.1) |
| | >20 | 39 (42.9) |
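The N (%) column in the table above reports each count as a share of the cohort. The following is a minimal Python sketch of that arithmetic, assuming a cohort of 91 patients (inferred from the category counts, which sum to 91 for each variable; the total is not stated in the table itself) and rounding to one decimal place. The names `N_PATIENTS` and `percent` are illustrative only.

```python
# Assumed cohort size: inferred from the table, where the category counts
# of each variable sum to 91 (e.g. 71 + 19 + 1 for marital status).
N_PATIENTS = 91


def percent(count: int, total: int = N_PATIENTS) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Counts copied from the marital status rows of the table.
marital_status = {"Married": 71, "Widowed": 19, "Divorced": 1}

for label, count in marital_status.items():
    print(f"{label}: {count} ({percent(count)})")
```

Direct rounding gives 34.1 for 31/91 and 12.1 for 11/91, whereas the table prints 34.0 and 12.0; those two entries may have been adjusted so that each variable sums to 100%.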

| Category | Group | Number of Patients | Mean Score | SD | Rate ≤ 2 (%) | Rate > 2 (%) |
|---|---|---|---|---|---|---|
| Partus | Yes | 42 | 3.45 | 0.18 | 3.0 | 97.0 |
| | No | 18 | 3.49 | 0.27 | 4.2 | 95.8 |
| | Unknown | 31 | 2.94 | 0.12 | 32.3 | 67.7 |
| Tumor type | Ductal | 66 | 3.22 | 0.06 | 15.5 | 84.5 |
| | Lobular | 11 | 3.52 | 0.18 | 4.5 | 95.5 |
| | Other | 14 | 3.38 | 0.20 | 8.9 | 91.1 |
| Histological grading | In situ | 1 | 2.75 | 0.33 | 25 | 75 |
| | 1 | 3 | 2.84 | 0.12 | 33.3 | 66.7 |
| | 2 | 61 | 3.26 | 0.09 | 12.7 | 87.3 |
| | 3 | 23 | 3.45 | 0.15 | 8.7 | 91.3 |
| | Unknown | 3 | 3.00 | 0.19 | 33.3 | 66.7 |
| ER receptor | Positive | 70 | 3.22 | 0.05 | 13.1 | 86.9 |
| | Negative | 21 | 3.52 | 0.18 | 2.5 | 97.5 |
| PR receptor | Positive | 63 | 3.14 | 0.04 | 16.7 | 83.3 |
| | Negative | 28 | 3.59 | 0.22 | 5.6 | 94.4 |
| HER2 receptor | Positive | 13 | 3.50 | 0.32 | 9.6 | 90.4 |
| | Negative | 78 | 3.24 | 0.05 | 13.8 | 86.2 |
| Ki-67 | ≤20 | 52 | 3.29 | 0.07 | 12.5 | 87.5 |
| | >20 | 39 | 3.29 | 0.12 | 14.1 | 85.9 |
| Neoadjuvant AC-T + carboplatin | Yes | 2 | 3.68 | 0.48 | 3.6 | 96.4 |
| | No | 55 | 3.22 | 0.13 | 15.9 | 84.1 |
| | Indecisive | 44 | 3.20 | 0.06 | 14.2 | 85.8 |
| Neoadjuvant AC-T + platinum | Yes | 2 | 3.12 | 0.48 | 12.5 | 87.5 |
| | No | 46 | 3.32 | 0.12 | 13.0 | 87.0 |
| | Indecisive | 43 | 3.25 | 0.04 | 12.2 | 87.8 |
| Surgery | Yes | 76 | 3.32 | 0.11 | 12.5 | 87.5 |
| | No | 7 | 3.36 | 0.14 | 7.1 | 92.9 |
| | Indecisive | 8 | 2.91 | 0.24 | 25 | 75 |
| Radiotherapy | Yes | 47 | 3.38 | 0.13 | 10.6 | 89.4 |
| | No | 6 | 3.58 | 0.29 | 0 | 100 |
| | Indecisive | 38 | 3.12 | 0.04 | 18.4 | 81.6 |
| Endocrine therapy | Yes | 63 | 3.36 | 0.09 | 7.5 | 92.5 |
| | No | 23 | 3.18 | 0.11 | 21.7 | 78.3 |
| | Indecisive | 5 | 2.75 | 0.25 | 45 | 55 |
| Palliative care | Yes | 6 | 3.42 | 0.29 | 4.2 | 95.8 |
| | No | 85 | 3.28 | 0.07 | 13.8 | 86.2 |
| FISH | Yes | 4 | 3.38 | 0.75 | 18.9 | 81.1 |
| | No | 87 | 3.28 | 0.07 | 12.9 | 87.1 |
| Neoadjuvant TCHP | Yes | 2 | 3.88 | 0.25 | 0 | 100 |
| | No | 50 | 3.27 | 0.12 | 15 | 85 |
| | Indecisive | 39 | 3.26 | 0.05 | 11.5 | 88.5 |
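As a rough consistency check on the table above, the subgroup mean scores can be pooled into a patient-weighted mean. The sketch below is illustrative only: `weighted_mean` is a hypothetical helper, and it assumes that the Mean Score column is a per-patient average within each group, which the table does not state explicitly.

```python
def weighted_mean(groups):
    """Pool (n_patients, mean_score) pairs into one patient-weighted mean."""
    total = sum(n for n, _ in groups)
    return sum(n * mean for n, mean in groups) / total


# (Number of Patients, Mean Score) pairs copied from the table rows.
er_receptor = [(70, 3.22), (21, 3.52)]   # Positive, Negative
ki_67 = [(52, 3.29), (39, 3.29)]         # <=20, >20

print(f"Pooled mean by ER receptor: {weighted_mean(er_receptor):.2f}")
print(f"Pooled mean by Ki-67:       {weighted_mean(ki_67):.2f}")
```

Under that assumption, both splits pool to about 3.29, as expected when the same 91 patients are partitioned in two different ways.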

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Umihanic, S.; Osmanovic, H.; Selak, N.; Kopric, D.; Huseinbasic, A.; Sehic-Kozica, E.; Babic, B.; Umihanic, F. Evaluating the Concordance Between ChatGPT and Multidisciplinary Teams in Breast Cancer Treatment Planning: A Study from Bosnia and Herzegovina. J. Clin. Med. 2025, 14, 6460. https://doi.org/10.3390/jcm14186460
