1. Introduction

The human body’s musculoskeletal and visual systems share intricate biomechanical, vascular, and neural pathways that have historically been underexplored in clinical diagnostics. Emerging studies have demonstrated notable correlations between visual disturbances and musculoskeletal dysfunction, particularly in the musculature of the neck and shoulder regions [1,2]. For instance, individuals with age-related macular degeneration (AMD) tend to experience more musculoskeletal discomfort, likely stemming from compensatory postural adjustments and increased strain during visually demanding tasks [1]. One study found strong correlations between visual complaints and musculoskeletal discomfort in both AMD patients and age-matched controls (ρ = 0.60 and 0.59, respectively; p < 0.005) [1]. Supporting these findings, a meta-analysis by Sánchez-González et al. (2019) synthesized 21 studies on the relationship between the visual system and musculoskeletal complaints, showing consistent associations between accommodative and non-strabismic binocular dysfunctions and chronic neck pain and shoulder discomfort [2]. The authors suggest that visual disturbances may alter head posture and visuomotor behavior, exacerbating neck strain. However, they also noted that the lack of standardized assessment methods across studies reduced statistical power and hindered the development of unified clinical protocols [2].

Building on these foundations, recent advances in AI, particularly convolutional neural networks (CNNs), have begun to uncover latent connections between ocular biomarkers and systemic musculoskeletal pathologies. These technologies offer a powerful means of identifying non-invasive indicators of systemic pathology, which aligns with the aim of this review: to explore and evaluate the diagnostic potential of AI-driven ocular imaging in the context of musculoskeletal disease. For instance, retinal microvascular changes detected via optical coherence tomography (OCT) may be linked to early-stage osteoporosis [3], while spinal misalignment has been reported to correlate with optic nerve compression and glaucoma progression [2].

Integrating AI with high-resolution imaging modalities, such as OCT, fundus photography, and MRI, is driving the development of predictive models that combine biomechanical, genetic, and proteomic data. These models can enhance diagnostic accuracy and guide personalized surgical planning and long-term postoperative rehabilitation. This review evaluates the transformative potential of AI in musculoskeletal and ocular medicine, focusing on validated applications in diagnostics, surgical robotics, and long-term prognostication [1,2,3].

2. Methodology

This narrative review was conducted to evaluate the application of artificial intelligence (AI) in linking ocular biomarkers with musculoskeletal disorders. A comprehensive literature search was performed across four major databases: PubMed, IEEE Xplore, Scopus, and ClinicalTrials.gov, covering publications from January 2000 to March 2025. Search terms were developed to reflect the multidisciplinary nature of the topic and included combinations of “artificial intelligence”, “machine learning”, “deep learning”, “convolutional neural networks”, “transformer models”, “ocular imaging”, “retinal biomarkers”, “choroidal thickness”, “optic nerve head morphology”, “osteoporosis”, “cervical spine instability”, “inflammatory arthritis”, “OCT”, “fundus photography”, “MRI”, “digital twins”, “surgical robotics”, and “multi-modal diagnostics”.

To ensure rigor, two independent reviewers conducted the screening process. Titles and abstracts were first screened for relevance, followed by a full-text review. Inclusion criteria were as follows: (1) peer-reviewed studies involving AI applications in ocular or musculoskeletal diagnostics, (2) studies demonstrating clinical validation or translational potential, and (3) studies published in English. Exclusion criteria included non-peer-reviewed literature, animal-only studies without human data relevance, duplicate entries, and articles lacking adequate methodological detail. Discrepancies between reviewers were resolved through consensus or consultation with a third reviewer.

In addition to original research articles, systematic reviews, regulatory white papers, and consensus guidelines were reviewed to contextualize clinical adoption challenges, ethical implications, and model interpretability. Standardized quality assessment tools, including the SANRA (Scale for the Assessment of Narrative Review Articles), were applied to ensure transparency and methodological rigor throughout the review process.

3. AI Integration in Musculoskeletal and Ocular Diagnostics as Independent Fields

Integrating AI into musculoskeletal and ocular diagnostics follows a shared methodological framework involving high-resolution data acquisition, algorithmic training, and clinical validation. However, comparative differences in tissue properties, imaging modalities, and disease manifestations necessitate domain-specific strategies for effective implementation. This section outlines the parallels and distinctions in how AI technologies advance each field, while previewing the role of newer AI architectures and learning paradigms that unify both specialties.

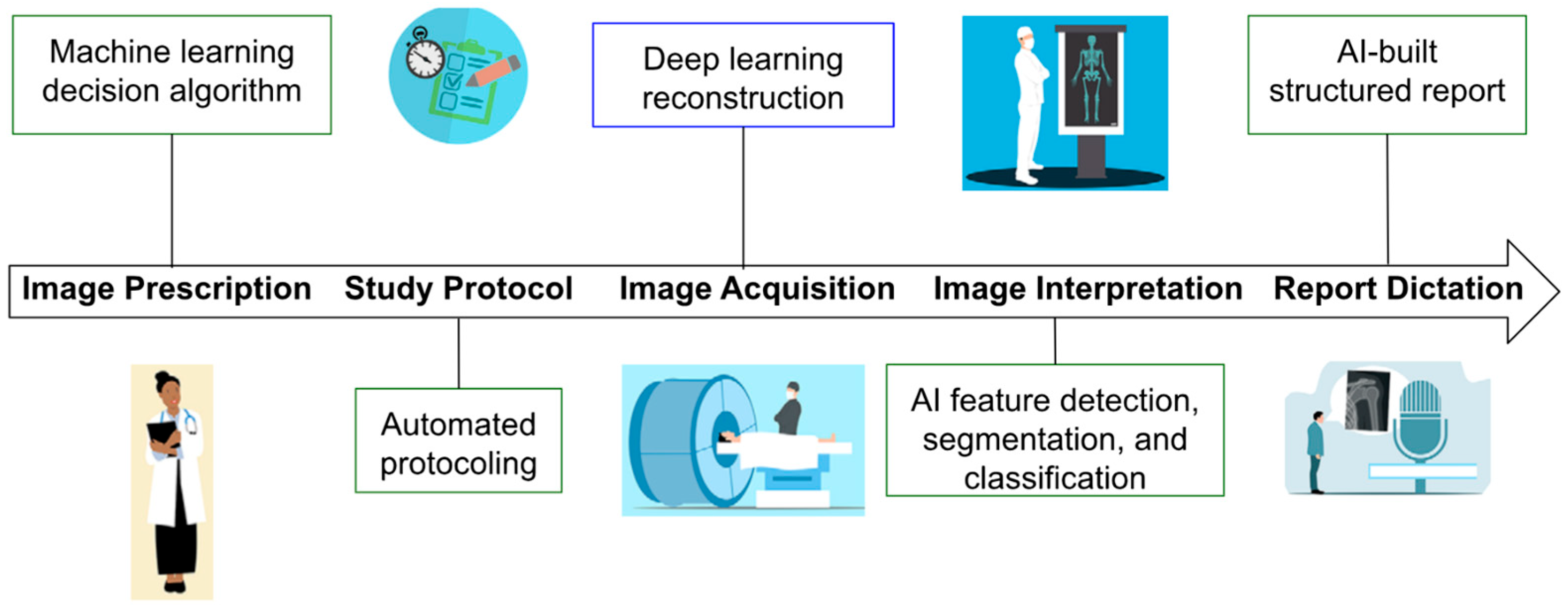

3.1. Data Acquisition

AI-driven diagnostic workflows in musculoskeletal and ocular care depend on high-resolution imaging modalities, including spectral-domain OCT (SD-OCT), 3-tesla MRI, and musculoskeletal ultrasound (MSK-US) (Figure 1). These technologies generate complex datasets with the granularity needed to train CNNs to detect subtle patterns indicative of systemic pathologies [4,5,6,7].

In ocular imaging, SD-OCT and swept-source OCT (SS-OCT) provide layered retinal scans critical for detecting early degenerative and vascular eye disease. Bellemo et al. demonstrated that CNNs trained on SD-OCT images could detect micrometer-scale deviations in choroidal thickness, which have been associated with musculoskeletal issues such as lumbar disc degeneration [7,8]. Meanwhile, synthetic enhancement of SD-OCT images using generative models has been shown to replicate SS-OCT clarity, improving choroidal visibility in low-resource settings [7,8,9,10,11].

In contrast, musculoskeletal imaging relies more heavily on ultrasound and MRI for dynamic and structural assessment. For example, Clarius MSK AI employs U-Net-based models for real-time tendon segmentation, achieving a Dice coefficient of 0.89 and reducing inter-observer variability in grading synovitis and soft tissue abnormalities [9,10,12].
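The Dice coefficient cited for tendon segmentation measures the overlap between a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (perfect agreement). The minimal NumPy sketch below computes it for binary masks; the function name and toy masks are illustrative assumptions, not part of the Clarius MSK AI software.

```python
import numpy as np


def dice_coefficient(pred_mask: np.ndarray, ref_mask: np.ndarray) -> float:
    """Dice = 2 * |A ∩ B| / (|A| + |B|) for two binary segmentation masks."""
    a = np.asarray(pred_mask, dtype=bool)
    b = np.asarray(ref_mask, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    total = a.sum() + b.sum()
    if total == 0:
        # Both masks are empty: count this as perfect agreement by convention.
        return 1.0
    intersection = np.logical_and(a, b).sum()
    return float(2.0 * intersection / total)


# Toy 2 x 3 masks: each marks 3 pixels as tendon, and they share 2 of them.
predicted = np.array([[0, 1, 1], [0, 1, 0]])
reference = np.array([[0, 1, 0], [0, 1, 1]])
print(round(dice_coefficient(predicted, reference), 3))  # 0.667
```

Because Dice equals the overlap divided by the mean size of the two masks, a value of 0.89 means the shared region is 89% as large as the average of the predicted and reference segmentations.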

However, despite the widespread use of SD-OCT in clinical ophthalmology, its limited penetration depth hampers detailed visualization of the choroid, a vascular layer critical for diagnosing chorioretinal diseases. SS-OCT (PLEX Elite 9000, Carl Zeiss Meditec AG, Jena, Germany) overcomes this limitation with enhanced depth imaging but remains costly and less accessible. Bellemo et al. also developed a generative deep learning model that synthetically enhances SD-OCT images (Spectralis OCT, Heidelberg Engineering GmbH, Heidelberg, Germany) to mimic the choroidal clarity of SS-OCT scans. The model was trained on 150,784 paired SD-OCT and SS-OCT images from 735 eyes that were either healthy or diagnosed with glaucoma or diabetic retinopathy. It learned to replicate deep anatomical features, enabling improved visualization of the choroid using standard SD-OCT devices [7,8,10,11].

Performance evaluation on an external test dataset of 37,376 images revealed that clinicians could not reliably distinguish real SS-OCT images from AI-enhanced SD-OCT scans: their accuracy of 47.5% was close to the 50% expected from random guessing, suggesting high realism in the synthetic outputs. Quantitative comparisons further validated the approach: choroidal thickness, area, volume, and vascularity index measurements derived from the AI-enhanced SD-OCT scans showed strong concordance with those from SS-OCT, with Pearson correlation coefficients up to 0.97 and intra-class correlation coefficients as high as 0.99. These findings suggest that deep learning can democratize access to high-quality choroidal imaging, particularly in low-resource settings where SS-OCT is not readily available [7,8,10].

These advancements collectively demonstrate how AI can enhance the quality and accessibility of diagnostic imaging across ophthalmology and orthopedics. While both domains benefit from enhanced spatial resolution and reduced operator variability, ocular imaging is often limited by depth penetration, whereas musculoskeletal applications must account for motion artifacts and heterogeneous soft tissue density. These differences guide the choice of imaging modalities and affect AI model design. Advances in synthetic image generation and segmentation now set the stage for more advanced model training strategies, including transformer-based architectures and contrastive learning approaches [11].
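Pearson's r and the intra-class correlation coefficient (ICC) answer different questions about the SD-OCT versus SS-OCT comparison above: r measures how linearly two measurement methods co-vary, while an absolute-agreement ICC also penalizes systematic offsets between them. The sketch below assumes the two-way random-effects, absolute-agreement, single-measure form ICC(2,1) of Shrout and Fleiss (the source does not state which ICC form was used) and applies it to hypothetical choroidal thickness values.

```python
import numpy as np


def pearson_r(x, y) -> float:
    """Pearson correlation: strength of the linear relationship between x and y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc * yc).sum() / np.sqrt((xc ** 2).sum() * (yc ** 2).sum()))


def icc_2_1(ratings) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    `ratings` has shape (n_subjects, k_methods), e.g. one column per imaging device.
    """
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    row_means = x.mean(axis=1)  # per-eye mean across methods
    col_means = x.mean(axis=0)  # per-method mean across eyes
    ms_rows = k * ((row_means - grand) ** 2).sum() / (n - 1)
    ms_cols = n * ((col_means - grand) ** 2).sum() / (k - 1)
    residuals = x - row_means[:, None] - col_means[None, :] + grand
    ms_error = (residuals ** 2).sum() / ((n - 1) * (k - 1))
    return float((ms_rows - ms_error)
                 / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n))


# Hypothetical subfoveal choroidal thickness (micrometres) for four eyes.
ss_oct_um = [200.0, 220.0, 240.0, 260.0]
ai_sd_oct_um = [210.0, 230.0, 250.0, 270.0]  # same ranking, constant +10 um bias

ratings = np.column_stack([ss_oct_um, ai_sd_oct_um])
print(round(pearson_r(ss_oct_um, ai_sd_oct_um), 3))  # 1.0: perfectly linear
print(round(icc_2_1(ratings), 3))                    # 0.93: the bias lowers agreement
```

Reporting both statistics is therefore informative: a high r alone would not reveal a systematic thickness bias between the synthetic and reference scans.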

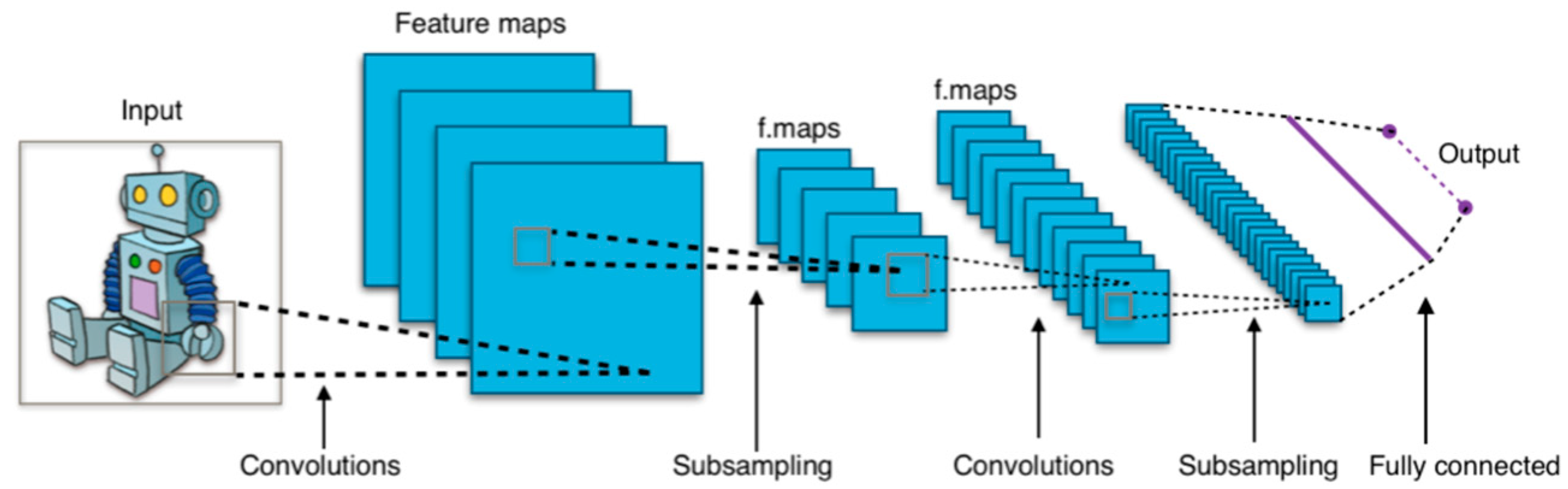

3.2. Algorithm Training

AI systems in ophthalmic and musculoskeletal imaging rely on increasingly sophisticated machine learning (ML) and deep learning (DL) algorithms for tasks such as classification, regression, clustering, and feature extraction [3,13]. Early applications leveraged conventional ML methods, such as logistic regression, decision trees, and nearest-neighbor searches, to identify patterns in radiographic data, including fracture classification and unsupervised lesion clustering [3,11,14]. However, these traditional approaches lacked scalability and spatial awareness, which led to the widespread adoption of CNNs [3,11,14].

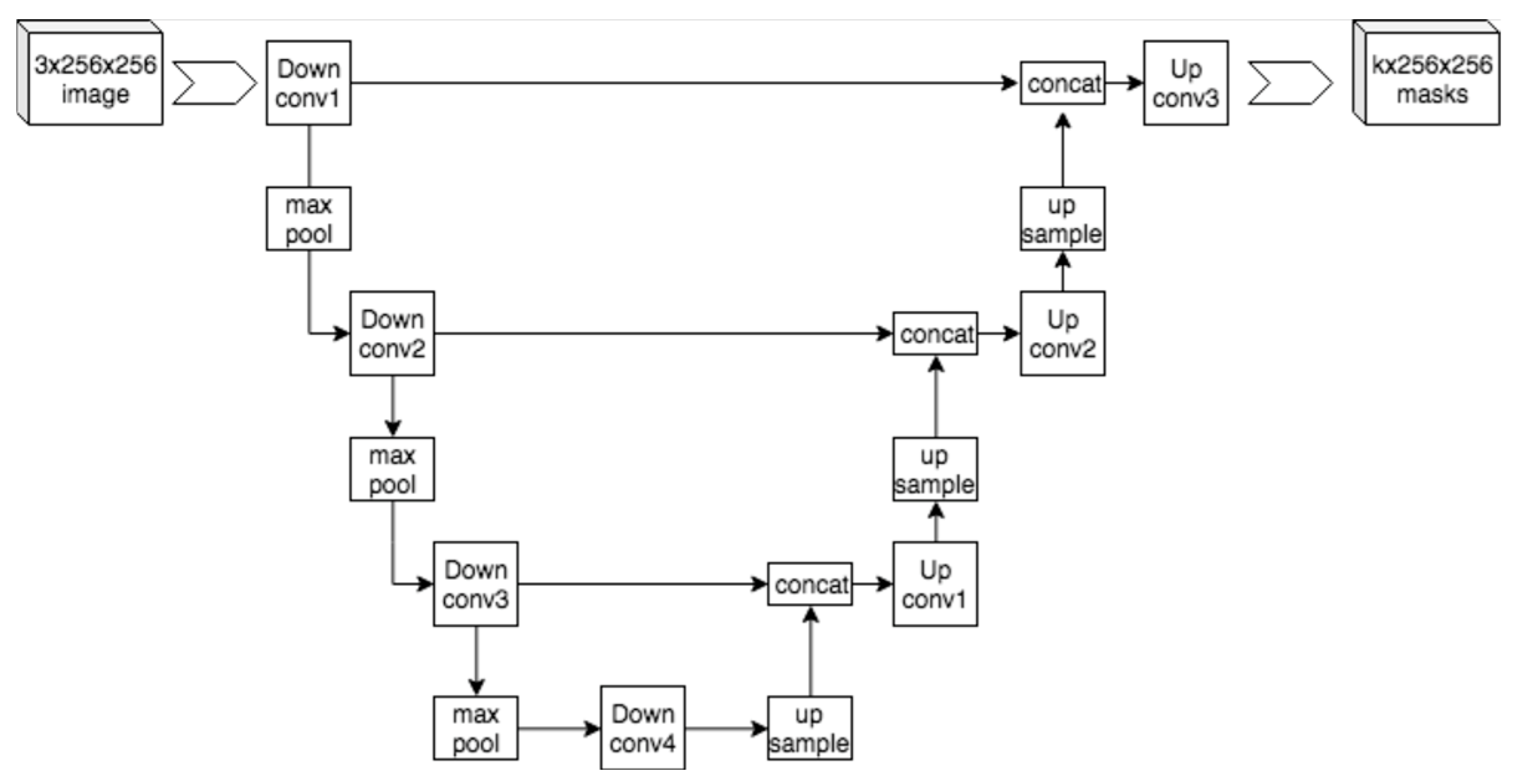

CNNs have become foundational in medical image analysis for their ability to extract hierarchical spatial features and to achieve approximate translational invariance: a convolutional filter responds to a pattern wherever it appears, and pooling makes the resulting features largely insensitive to the pattern's position [3,11,14]. In both domains, CNNs are used to segment anatomical structures, detect pathologies, and predict outcomes. A notable advancement in segmentation came with U-Net architectures, which achieve high accuracy even with limited annotated data (Figure 2) [11,14,15,16]. Despite their success, CNNs are limited by high data demands, overfitting risk, and interpretability challenges [11,14].
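The distinction between translation equivariance (a convolution's output shifts when its input shifts) and translation invariance (a pooled feature does not change at all) can be checked numerically. The NumPy sketch below uses a circular 2D cross-correlation, an assumption made so that shifts wrap around without boundary effects, followed by a ReLU and global max pooling; the random image and kernel are placeholders for an OCT or radiograph patch and a learned filter.

```python
import numpy as np


def circular_cross_correlate(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D cross-correlation with wrap-around borders (what CNN 'convolutions' compute).

    out[y, x] = sum_{i, j} kernel[i, j] * image[(y + i) % H, (x + j) % W]
    """
    out = np.zeros(image.shape, dtype=float)
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            out += kernel[i, j] * np.roll(image, shift=(-i, -j), axis=(0, 1))
    return out


def feature_map(image, kernel):
    """One convolutional layer followed by a ReLU nonlinearity."""
    return np.maximum(circular_cross_correlate(image, kernel), 0.0)


rng = np.random.default_rng(0)
image = rng.normal(size=(16, 16))
kernel = rng.normal(size=(3, 3))
shifted = np.roll(image, shift=(4, 7), axis=(0, 1))  # move the whole pattern

# Equivariance: shifting the input shifts the feature map by the same amount.
equivariant = np.allclose(feature_map(shifted, kernel),
                          np.roll(feature_map(image, kernel), shift=(4, 7), axis=(0, 1)))
# Invariance: global max pooling discards position, so the pooled value is unchanged.
invariant = np.isclose(feature_map(shifted, kernel).max(), feature_map(image, kernel).max())
print(equivariant, invariant)  # True True
```

Practical CNNs use zero padding and strided pooling rather than wrap-around borders, which is why their invariance is only approximate.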

Newer approaches have emerged to address these limitations. Generative adversarial networks (GANs) have been applied to medical image synthesis, domain translation, and resolution enhancement, particularly for synthesizing high-quality musculoskeletal MRI data and enhancing ocular OCT images. However, training instability and mode collapse remain persistent problems.
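For reference, the original GAN formulation (Goodfellow et al., 2014) trains a generator G, which maps random noise z to synthetic images, against a discriminator D, which estimates the probability that an image is real, through the minimax objective below. It is shown as the generic formulation, not as the specific loss used in any of the cited MRI or OCT studies.

```latex
\min_{G}\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
```

Because G and D are optimized against each other rather than toward a fixed target, training can oscillate (instability), and G can satisfy D by producing only a few realistic image types instead of covering the full data distribution (mode collapse).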

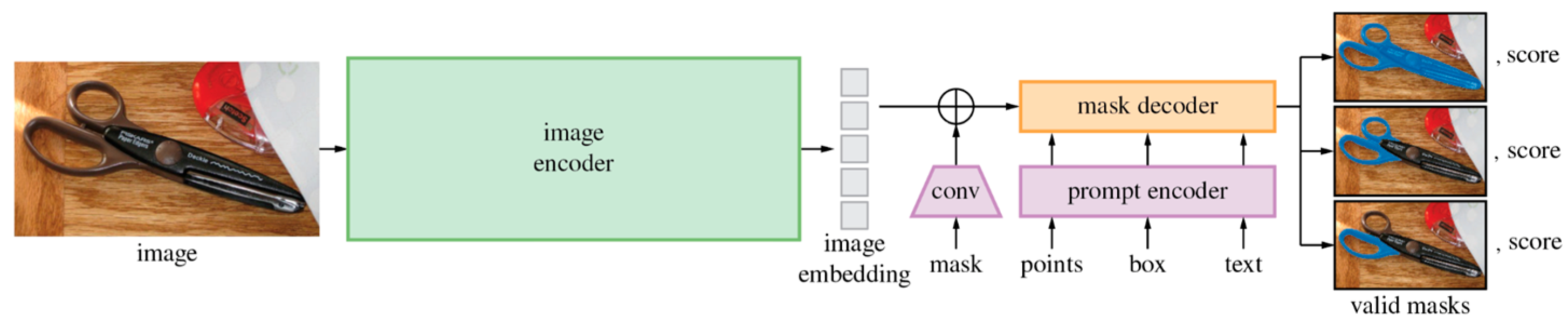

Meanwhile, transformer-based architectures offer a compelling alternative by modeling long-range dependencies in data. Vision transformers (ViTs) process images as sequences of patch tokens, capturing global context more effectively than CNNs (Figure 3) [11,14,17,18]. Swin Transformers extend this capability with hierarchical attention computed within shifted local windows, making them well suited for volumetric musculoskeletal imaging tasks such as spinal segmentation [11,14,17]. Similarly, the Swin-Poly Transformer, inspired by the Swin Transformer, is specifically designed for OCT image classification, achieving high accuracy and outperforming previous CNN- and ViT-based methods in retinal disease diagnosis [19].
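To make the idea of images as token sequences concrete, the NumPy sketch below splits an image into non-overlapping patches, linearly projects each flattened patch into an embedding, and applies one head of scaled dot-product self-attention, in which every patch attends to every other patch. The 16-pixel patch size and 224 × 224 input follow the common ViT-Base convention; the random projection and attention weights are placeholders for learned parameters, and positional embeddings and the class token are omitted for brevity.

```python
import numpy as np


def image_to_patch_tokens(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch x patch tiles and
    flatten each tile, returning an array of shape (num_patches, patch*patch*C)."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (tile_row, tile_col, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)  # raster order: left-to-right, top-to-bottom


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over a (tokens, dim) sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
tokens = image_to_patch_tokens(image, patch=16)           # (196, 768)
embed = tokens @ rng.normal(scale=0.02, size=(768, 64))   # placeholder patch embedding
w_q, w_k, w_v = (rng.normal(scale=0.1, size=(64, 64)) for _ in range(3))
out, attn = self_attention(embed, w_q, w_k, w_v)
print(tokens.shape, attn.shape)  # (196, 768) (196, 196)
```

The full (196, 196) attention matrix is what lets a single ViT layer relate distant image regions, whereas a CNN needs many stacked layers to reach a comparable receptive field.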

Incorporating textual and anatomical information into training, foundation models such as Contrastive Language–Image Pretraining (CLIP) (Figure 4) and the Segment Anything Model (SAM) (Figure 5) reduce the need for task-specific retraining [20,21,22,23,24,25]. CLIP aligns image and text embeddings through contrastive learning, whereas SAM produces segmentation masks in response to prompts such as points or bounding boxes. These models enable zero-shot inference and improved generalization, which is particularly beneficial in ophthalmology, where descriptors like “optic disc pallor” or “macular edema” align closely with visual patterns [22,23,26].
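CLIP-style contrastive pretraining can be summarized as a symmetric cross-entropy over a batch similarity matrix: each image should score highest against its own caption, and each caption against its own image. The NumPy sketch below implements that loss and the resulting zero-shot classification rule. The random vectors stand in for the outputs of image and text encoders (assumed, not shown), the 0.07 temperature is a commonly used initial value, and the prompt labels are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss; row i of each input is a matching image-text pair."""
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    diag = np.arange(logits.shape[0])
    loss_image_to_text = -log_softmax(logits, axis=1)[diag, diag].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[diag, diag].mean()
    return float((loss_image_to_text + loss_text_to_image) / 2)


def zero_shot_predict(image_emb, prompt_embs, labels):
    """Pick the text prompt whose embedding is most similar to the image."""
    similarities = l2_normalize(prompt_embs) @ l2_normalize(image_emb)
    return labels[int(np.argmax(similarities))]


rng = np.random.default_rng(0)
images = rng.normal(size=(8, 32))
matched_text = images + 0.05 * rng.normal(size=(8, 32))  # captions aligned with images
mismatched_text = np.roll(matched_text, 1, axis=0)        # every caption paired wrongly
print(clip_contrastive_loss(images, matched_text)
      < clip_contrastive_loss(images, mismatched_text))  # True

labels = ["optic disc pallor", "macular edema", "normal fundus"]
prompts = rng.normal(size=(3, 32))
query = prompts[1] + 0.05 * rng.normal(size=32)
print(zero_shot_predict(query, prompts, labels))  # macular edema
```

Zero-shot classification requires no labeled training images for the new task: the class names themselves, once encoded as text, serve as the classifier weights.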

Additionally, diffusion models are gaining traction for their ability to generate high-fidelity synthetic medical images [27]. In musculoskeletal radiology, these models augment datasets for rare pathologies like avascular necrosis, while in ophthalmology, they allow precise simulation of retinal layer abnormalities [27]. Multi-modal learning strategies that fuse imaging, genomic data, and clinical notes further improve model robustness and diagnostic value across both domains [26].
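Most diffusion models used for medical image synthesis build on the denoising diffusion probabilistic model (DDPM) of Ho et al. (2020): a fixed forward process gradually adds Gaussian noise to a training image, and a network is trained to predict that noise so that the process can be reversed at sampling time. The sketch below implements only the closed-form forward step, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, and the noise-prediction loss, assuming the linear β schedule from that paper; `zero_denoiser` is a hypothetical placeholder, not a trained model from the cited work.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly (DDPM defaults)."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bars, noise):
    """Closed-form forward step: sample x_t ~ N(sqrt(a_bar_t) x0, (1 - a_bar_t) I)."""
    a_bar = alpha_bars[t]
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise


def noise_prediction_loss(denoiser, x0, t, alpha_bars, rng):
    """Simplified DDPM training objective: MSE between true and predicted noise."""
    noise = rng.normal(size=x0.shape)
    x_t = q_sample(x0, t, alpha_bars, noise)
    return float(np.mean((denoiser(x_t, t) - noise) ** 2))


def zero_denoiser(x_t, t):
    """Hypothetical placeholder network that always predicts zero noise."""
    return np.zeros_like(x_t)


betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)  # a_bar_t = prod_{s<=t} (1 - beta_s)

rng = np.random.default_rng(0)
x0 = np.ones((64, 64))  # stand-in for a normalized image patch

# Early steps barely perturb the image; by the last step almost no signal remains.
print(f"signal weight at t=0: {np.sqrt(alpha_bars[0]):.4f}, "
      f"at t=999: {np.sqrt(alpha_bars[-1]):.4f}")
print(noise_prediction_loss(zero_denoiser, x0, t=500, alpha_bars=alpha_bars, rng=rng))
```

A trained denoiser would drive this loss well below the value of about 1 obtained by predicting zero noise; generation then runs the learned reverse process from pure noise back to an image.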

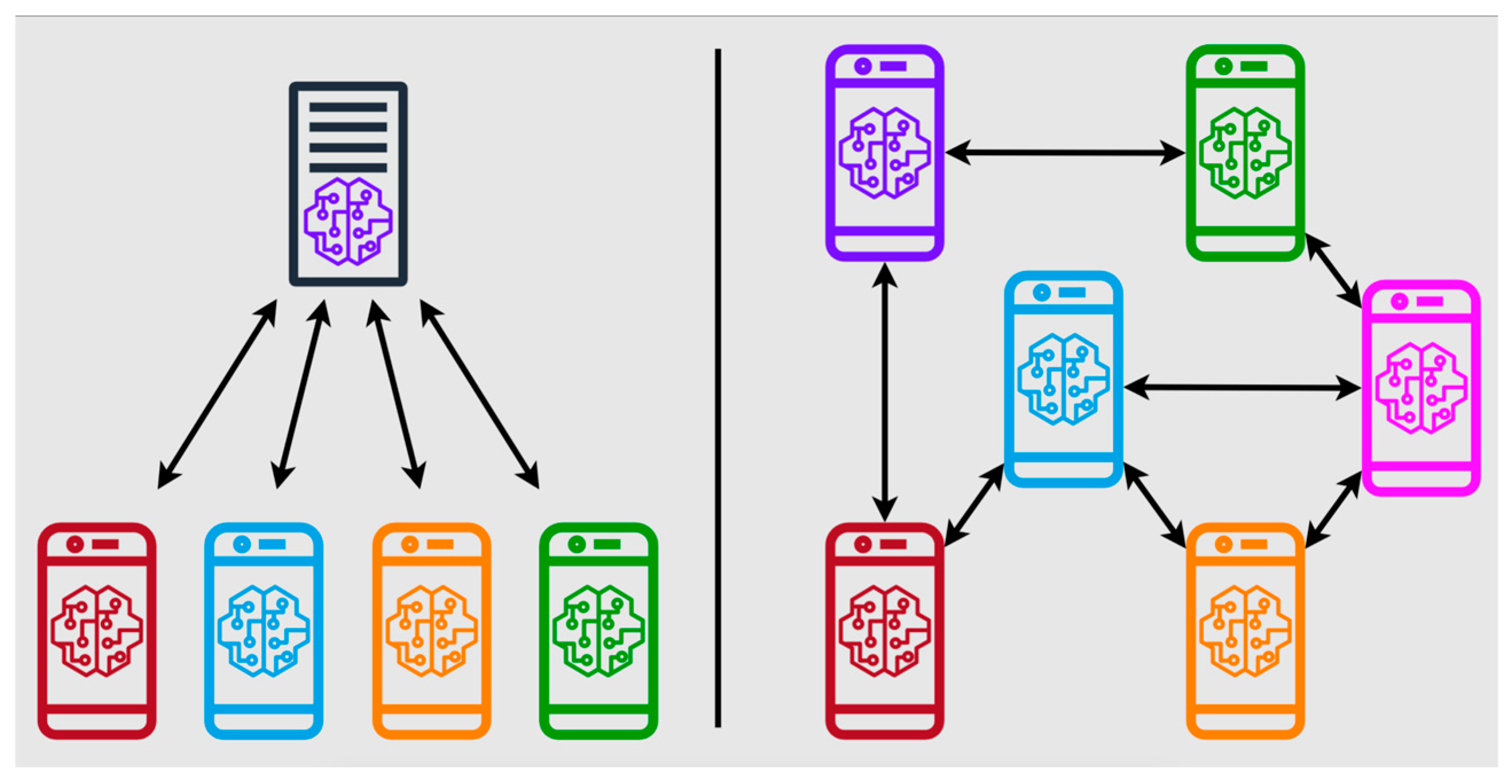

To overcome the challenges of data scarcity and privacy concerns, federated learning frameworks have emerged as key enablers, allowing decentralized training across heterogeneous datasets while preserving patient confidentiality, because institutions exchange model parameters rather than raw patient data (Figure 6) [11,14,19,28,29]. Quantum machine learning (QML) has developed alongside these trends, with the aim of accelerating model training on quantum processors. One QML prototype reduced training time for musculoskeletal radiomics models by 72% using 54-qubit processors, enabling rapid analysis of T2-weighted MRI scans to predict lumbar disc herniation recurrence [11,30].
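Federated averaging (FedAvg; McMahan et al., 2017) is the canonical scheme behind many such frameworks: each site trains a copy of the shared model on its own data, and a coordinating server averages only the updated parameters, weighted by local sample size. The sketch below assumes three hypothetical hospital sites and uses logistic regression as a stand-in for an imaging model; the functions `local_update` and `fed_avg` are illustrative names, and in a real deployment each site's features and labels would never leave that institution.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def local_update(global_weights, X, y, lr=0.5, epochs=10):
    """Run a few epochs of gradient descent on one site's private data."""
    w = global_weights.copy()
    for _ in range(epochs):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)  # logistic-loss gradient
        w -= lr * grad
    return w


def fed_avg(global_weights, sites, rounds=20):
    """Each round: sites train locally, the server averages weights by sample size."""
    for _ in range(rounds):
        local_weights = [local_update(global_weights, X, y) for X, y in sites]
        sizes = np.array([len(y) for _, y in sites], dtype=float)
        global_weights = np.average(local_weights, axis=0, weights=sizes)
    return global_weights


rng = np.random.default_rng(42)
true_w = np.array([2.0, -1.0, 0.5])


def make_site(n):
    """Hypothetical site: n patients with 3 features and a binary label."""
    X = rng.normal(size=(n, 3))
    y = (X @ true_w + 0.3 * rng.normal(size=n) > 0).astype(float)
    return X, y


sites = [make_site(n) for n in (60, 150, 90)]
w = fed_avg(np.zeros(3), sites)

# Evaluation on pooled data is done here only to check the sketch.
X_all = np.vstack([X for X, _ in sites])
y_all = np.concatenate([y for _, y in sites])
accuracy = np.mean((sigmoid(X_all @ w) > 0.5) == y_all)
print(f"pooled accuracy of the federated model: {accuracy:.2f}")
```

Weighting by sample size keeps larger sites from being under-represented; handling sites with very different data distributions usually requires extensions beyond plain FedAvg.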

Together, these algorithmic innovations drive a shift from static imaging interpretation to intelligent, adaptive diagnostic platforms. Their clinical relevance now hinges on rigorous validation against expert standards—a transition explored in the following section on clinical validation.

3.3. Clinical Validation

Comparative clinical validation efforts reveal that AI tools in musculoskeletal diagnostics generally focus on detecting structural pathology, such as fractures, soft tissue injuries, and degenerative disc disease. In contrast, ocular AI applications prioritize vascular and neurodegenerative features, such as diabetic macular edema or glaucoma [3,11,14,32].

In musculoskeletal diagnostics, a meta-analysis by Droppelmann et al. reported that 13 AI models achieved 92.6% sensitivity and 90.8% specificity for upper extremity pathologies, outperforming radiologist assessments in multi-center trials [3,11,30]. Similarly, AI models have matched expert-level accuracy in fracture detection [3,11,14,31].
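Sensitivity and specificity are properties of a test, but the positive predictive value (PPV) that a clinician experiences also depends on disease prevalence. The pure-Python sketch below computes the first two from confusion-matrix counts and then applies Bayes' theorem to the sensitivity and specificity reported by Droppelmann et al.; the toy labels and the prevalence values are illustrative assumptions, not figures from that meta-analysis.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 = disease present."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def sensitivity_specificity(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn), tn / (tn + fp)


def ppv_from_prevalence(sensitivity, specificity, prevalence):
    """Bayes' theorem: P(disease | positive result)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Toy labels: 4 diseased and 4 healthy cases.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(sensitivity_specificity(y_true, y_pred))  # (0.75, 0.75)

# Reported performance (92.6% sensitivity, 90.8% specificity) at two assumed prevalences:
# PPV is about 0.91 when half of the patients are affected but about 0.53 at 10%.
for prevalence in (0.5, 0.1):
    print(prevalence, round(ppv_from_prevalence(0.926, 0.908, prevalence), 3))
```

The same arithmetic applies to the ocular predictors discussed in Section 4.2: high sensitivity and specificity alone do not guarantee a high PPV in low-prevalence screening populations.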

In ocular diagnostics, AI models trained on fundus photographs and OCT images have successfully identified diabetic macular edema [32]. Other innovations include DL-based ultrasound computer-aided detection and diagnosis (CADe/CADx) systems, which benefit from large-scale ophthalmic image repositories to deliver real-time disease screening in community settings [32].

While musculoskeletal models increasingly integrate radiomic and biomechanical data for prognostication, ocular AI tools are expanding into functional prediction, such as forecasting visual field deterioration or intraocular pressure fluctuations. Both domains now benefit from AI-assisted treatment planning and surgical robotics, underscoring the convergence of diagnostic and interventional AI [3,11,14,31].

This convergence sets the stage for the next section, which explores cross-disciplinary applications and future directions, including AI-driven surgical planning, postoperative outcome prediction, and ethical frameworks for integration in real-world settings.

4. AI-Driven Diagnostic Synergies Between Ocular and Musculoskeletal Systems

4.1. Convolutional Neural Networks in Multi-Modal Imaging

CNNs have become pivotal tools for analyzing spatially structured imaging data across ocular and musculoskeletal modalities (Figure 7). For example, a CNN trained on 8260 knee radiographs achieved 92.3% accuracy in classifying osteoarthritis [33,34,35]. In ophthalmology, these networks leverage hierarchical feature extraction to identify subtle patterns, such as microaneurysms in diabetic retinopathy, which are hypothesized to correlate with accelerated cartilage degradation in osteoarthritis through shared collagen dysregulation pathways [3,11,36]. Similarly, optical coherence tomography angiography (OCT-A) shows promise in detecting vascular abnormalities associated with musculoskeletal decline [35,36,37].

GANs further enhance diagnostic precision by synthesizing cross-modality images. For example, Spine-GAN segments vertebrae and neural foramina with 94% spatial consistency [3,14,39], while AI-driven OCT analysis quantifies choroidal thickness with submicron resolution, revealing associations with lumbar disc degeneration that may be mediated by impaired glymphatic drainage [6]. Advanced techniques such as StarGAN enable synthetic MRI reconstruction from single scans, improving quantitative assessments of musculoskeletal disorders [37,40]. Transfer learning techniques, such as fine-tuning pre-trained ResNet-50 models on retinal fundus images, improve fracture detection sensitivity in upper extremity radiographs [30].

4.2. Non-Invasive Ocular Biomarkers for Musculoskeletal Pathologies

Ocular imaging provides a window into systemic health, with AI identifying subtle associations between ocular biomarkers and systemic musculoskeletal conditions with high positive predictive value. Retinal nerve fiber layer (RNFL) thinning, quantified via SD-OCT, predicts cervical spine instability after whiplash injury with 91.3% sensitivity and 87.6% specificity [35,37]. Similarly, choroidal thickness variance, measured using enhanced-depth imaging OCT, correlates with lumbar disc degeneration (AUC = 0.82), likely due to shared disruptions in cerebrospinal fluid dynamics [33,37]. In rheumatology, retinal vein tortuosity is lower in patients with ankylosing spondylitis than in controls (β = 0.1 vs. β = 0.5) [11,41]. Likewise, Türkcü et al. used OCT-A to reveal reduced vessel density in both the superficial and deep capillary plexuses in patients with psoriasis compared to controls, demonstrating systemic inflammatory effects on the ocular vasculature [42]. Together, these studies point to distinct patterns of retinal vessel tortuosity and density across inflammatory musculoskeletal pathologies.
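An AUC such as the 0.82 reported for choroidal thickness variance has a direct probabilistic reading: it is the probability that a randomly chosen patient with the condition receives a higher score than a randomly chosen patient without it, with ties counted as half. The pure-Python sketch below computes the AUC from that pairwise (Mann-Whitney) definition; the scores are made-up values, not data from the cited studies.

```python
from itertools import product


def roc_auc(scores, labels):
    """AUC as P(score of a positive case > score of a negative case), ties = 0.5.

    Equivalent to the area under the ROC curve; O(n_pos * n_neg), fine for small sets.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for pos, neg in product(positives, negatives):
        if pos > neg:
            wins += 1.0
        elif pos == neg:
            wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical biomarker scores; label 1 = disc degeneration present.
scores = [0.90, 0.80, 0.40, 0.35, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 0]
print(round(roc_auc(scores, labels), 3))  # 0.889 (8 of 9 positive-negative pairs ranked correctly)
```

An AUC of 0.82 therefore means that the biomarker ranks an affected patient above an unaffected one in roughly 82% of such pairs; it does not by itself fix a clinical decision threshold.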

Further supporting the link between ocular imaging and systemic health, Jiang et al. found a significant association between reduced bone mineral density (BMD) and decreased choroidal thickness as assessed by SS-OCT. In this large study, patients with low BMD had significantly thinner choroidal layers than controls (215.50 μm vs. 229.73 μm, p = 0.003), supporting hypotheses of shared vitamin D-related and vascular mechanisms [43,44].

Further evidence links ocular findings to neurodegeneration and musculoskeletal decline. In Parkinson’s disease (PD), reduced choroidal thickness correlates with greater motor symptom severity, higher dopaminergic therapy usage, and increased MRI pathology in the substantia nigra pars compacta (SNc) [45,46].

In rheumatoid arthritis (RA), CNN-assisted fluorescence optical imaging (FOI) identifies synovitis patterns with 95% concordance with radiologists, while concurrent OCT scans reveal choroidal thickening in 68% of patients, implicating shared inflammatory pathways [36,44]. Additionally, a study published in Rheumatology (2022) introduced the FOIE-GRAS (Fluorescence Optical Imaging Enhancement-Generated RA Score) system, which supports these findings and demonstrates high reliability in detecting synovitis in RA patients, with intra-class correlation coefficients ranging from 0.76 to 0.98 [47]. By contrast, a 2024 systematic review by Fekrazad et al. found that RA patients exhibited significantly lower choroidal thickness at certain sites than healthy controls; although the direction of change differs between these reports, both support a role for ocular imaging in rheumatic disease monitoring [48].

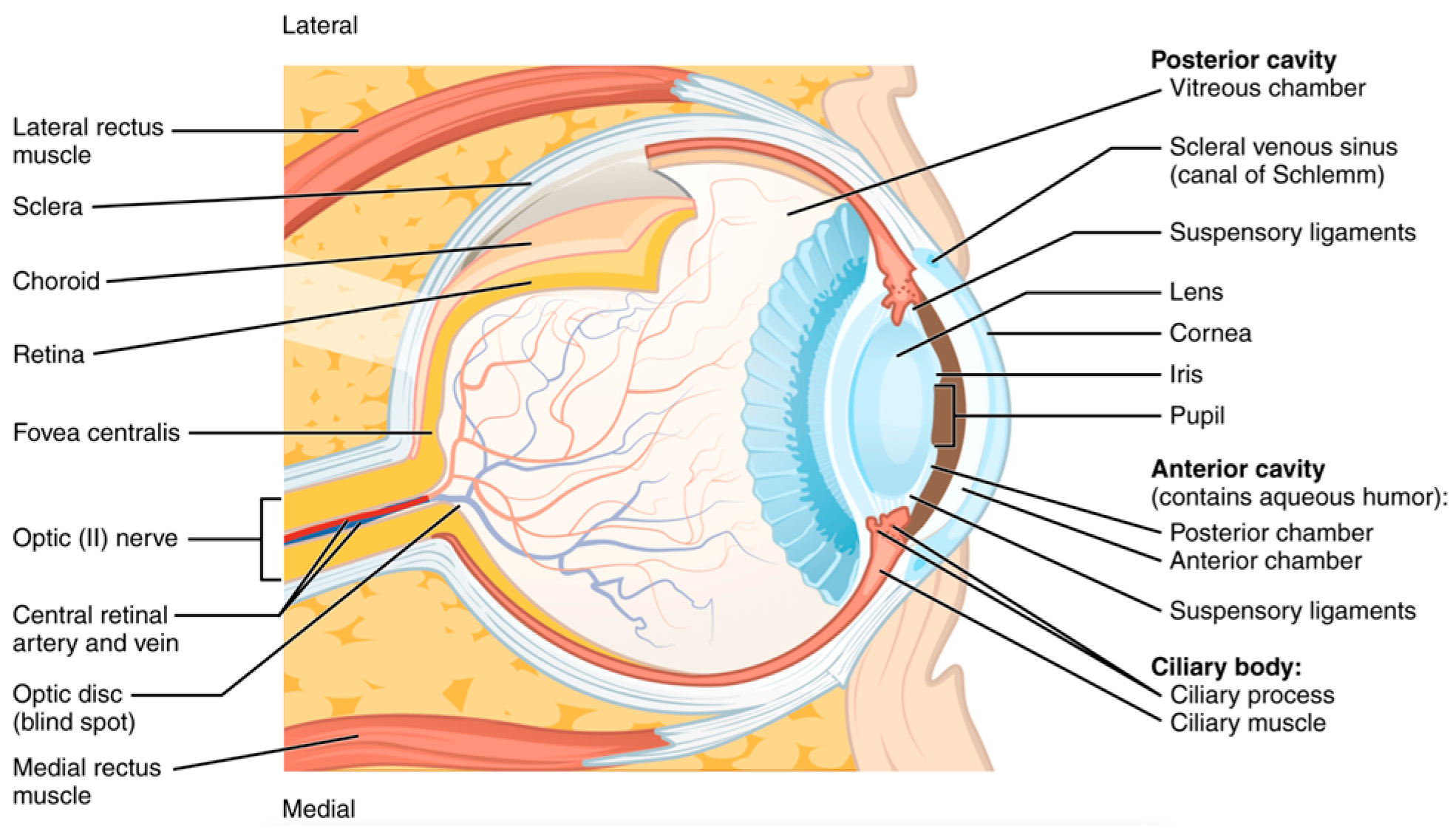

The retina’s structural and vascular integrity has long been considered a surrogate marker for central nervous system (CNS) health (Figure 8). RNFL thinning, ganglion cell complex (GCC) loss, and microvascular anomalies in the optic nerve head have been linked to cognitive impairment and Parkinsonian motor dysfunction, which often coexist with musculoskeletal frailty and increased fall risk [49,50,51,52]. AI models trained on OCT data have shown sensitivity exceeding 87% in distinguishing patients with Alzheimer’s disease from healthy controls based on RNFL and macular thickness maps [52,53].

In aging populations, musculoskeletal degeneration is frequently paralleled by declining cognitive and visual function, a phenomenon that AI is helping to quantify through multi-modal diagnostics [55,56]. For instance, combined analysis of OCT-derived ganglion cell–inner plexiform layer (GCIPL) thickness and MRI-based cortical atrophy can improve risk stratification for falls and vertebral fractures in elderly adults [51,52,53,55].

Moreover, ocular motor dysfunctions such as altered saccadic eye movements, measurable via AI-enhanced infrared tracking systems, have emerged as early indicators of cerebellar degeneration, which affects gait and postural control [51,56]. These biomarkers could be key to identifying, before symptoms appear, patients at risk of musculoskeletal complications secondary to neurodegeneration [49,50,55].

Collagen profiling via retinal imaging has identified subtype-specific structural vulnerabilities in patients with Ehlers–Danlos syndrome (EDS), a connective tissue disorder with both musculoskeletal and ocular manifestations. Gharbiya et al. reported that approximately 16% of patients with hypermobile EDS (hEDS) exhibited significant retinal atrophy and choroidal thickening, and that high myopia in hEDS patients was associated with changes in the vitreous extracellular matrix and scleral composition [55,57,58,59]. Another study found that patients with musculocontractural EDS (mcEDS), caused by dermatan sulfate epimerase deficiency, presented with ocular complications such as high refractive errors and retinal detachment [60]. These findings help explain patterns such as early-onset joint hypermobility co-occurring with myopic degeneration or retinal detachment [55,56].

Multi-omics integration is expanding this synergy [52,53,55]. RNA-seq of synovial fluid and retinal biopsies reveals overlapping inflammatory signatures in autoimmune diseases such as RA and uveitis [55,57]. This evidence supports the concept of shared proteomic fingerprints across organ systems, enabling cross-disciplinary diagnosis and treatment optimization [52,57]. In RA, AI-driven transcriptomic profiling of synovial tissue has predicted response to standard biologic therapies [61,62]. Similarly, single-cell RNA sequencing (scRNA-seq) has been used in ocular disease to characterize intraocular leukocytes in anterior uveitis, identifying unique intraocular immune profiles associated with disease activity [63].

Quantum-enhanced models such as Structural Analysis of Gene and protein Expression Signatures (SAGES) combine sequence-based predictions and 3D structural models to analyze expression data, enhancing the understanding of protein evolution and function [61]. Although the application of quantum-enhanced models in this context is still at an early stage, integrating multi-omics data with imaging holds promise for identifying patients at risk of drug-induced musculoskeletal toxicity and for predicting progression from ocular findings to systemic disease. A summary of the AI-driven diagnostic findings linking ocular biomarkers to musculoskeletal disease is provided in Table 1.

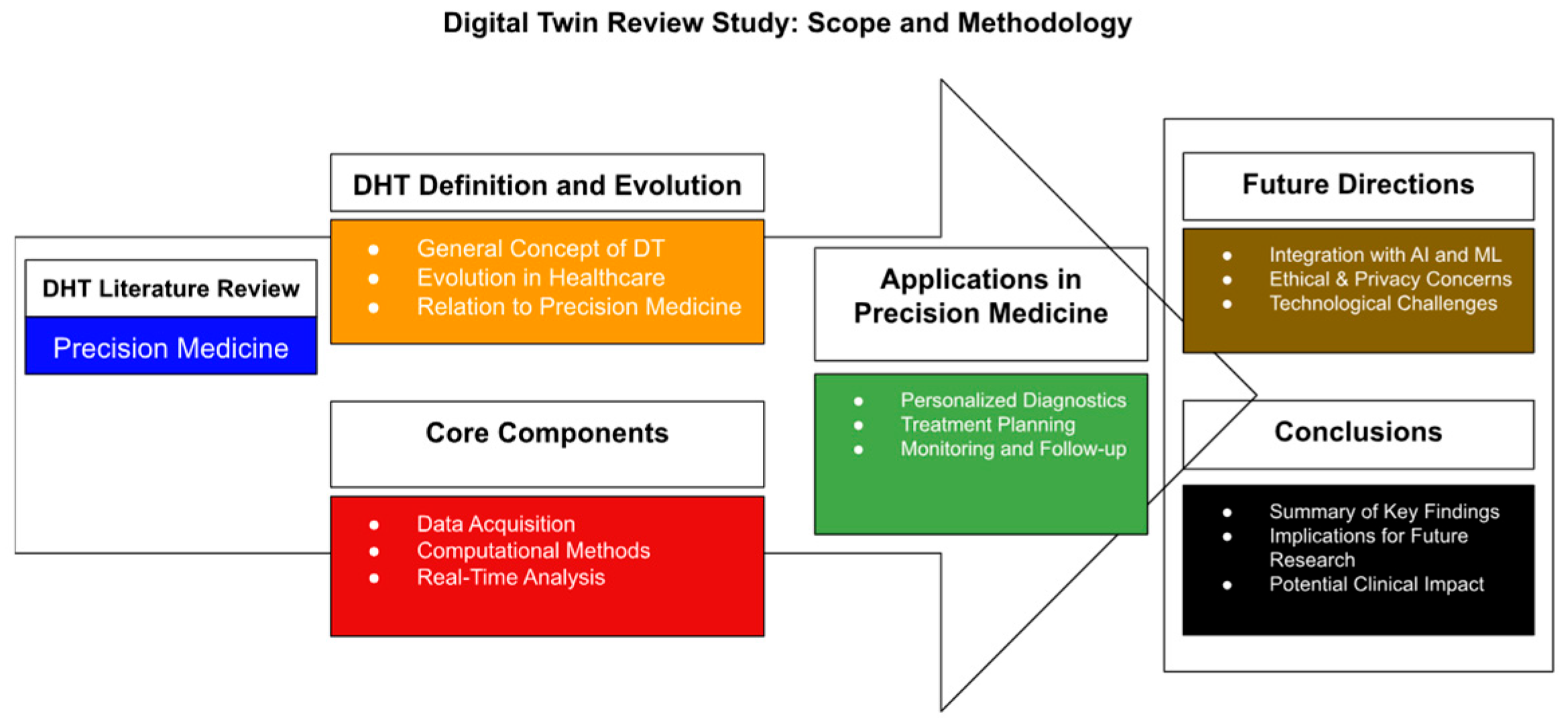

4.3. AI-Enhanced Surgical Interventions

Robotic-Assisted Orthopedic and Ophthalmic Systems

These diagnostic insights extend into AI-enhanced interventions. Digital twin technology, which creates virtual, AI-enhanced replicas of individual patients, is emerging as a transformative tool in precision medicine (Figure 9). By modeling patient-specific anatomical and biomechanical data, digital twins can simulate disease progression and surgical outcomes in real time [64,65]. In musculoskeletal care, digital twins have been used to replicate lumbar spine dynamics, predicting the outcomes of spinal decompression surgery with 92.1% accuracy [64,65,66,67,68]. These twins integrate MRI, CT, and intraoperative OCT data to refine implant sizing, trajectory planning, and postoperative rehabilitation strategies [68].

Ophthalmology applications are also evolving. Corneal and retinal digital twins are being developed using OCT and adaptive optics data to simulate disease progression in glaucoma and AMD. These platforms allow virtual interventions, such as intraocular pressure (IOP)-lowering surgery or the timing of anti-VEGF injections, to be tested before real-world deployment [68]. The Siemens Healthineers Cardiac Digital Twin program is now being extended to orthopedic and ophthalmic use cases, offering predictive analytics that incorporate genetic, proteomic, and radiologic profiles [64,68]. Such simulations enable clinicians to test multiple interventions in silico before entering the operating room [64,65,66,67,68].

AI-driven robotic platforms such as Stryker’s Mako system enhance surgical precision by integrating real-time imaging feedback. The Mako system uses preoperative CT scans to create patient-specific 3D anatomical models, enabling surgeons to plan and execute procedures accurately. Although Mako is designed primarily for orthopedic procedures, advances in intraoperative imaging, including optical coherence tomography (OCT), have shown promise for improving surgical outcomes across specialties. For instance, intraoperative 3D imaging has been shown to reduce pedicle screw-related complications and reoperations in spinal fusion surgery [69,70]. Additionally, OCT angiography (OCT-A) has emerged as a valuable tool for visualizing choroidal neovascularization in diabetic patients, aiding the assessment and management of diabetic retinopathy [71]. When integrated with AI algorithms, these technologies could dynamically adjust surgical trajectories based on live imaging metrics, further enhancing the precision and safety of surgical interventions.

In joint arthroplasty, AI-enhanced navigation systems achieve submillimeter implant alignment accuracy, with femoral and tibial cut angles within 2° and 3°, respectively, of CT-based measurements [65,72,73]. Autonomous surgical robots have shown high accuracy in pedicle screw placement in spine surgery, with studies reporting accuracy rates of up to 99%, alongside smaller incisions, reduced blood loss, and shorter hospital stays [74,75]. Real-time feedback loops incorporating intraoperative OCT and inertial measurement units (IMUs) enable adaptive control of robotic end-effectors, minimizing iatrogenic nerve damage during complex spinal revisions [68,69,70,71,72,73,74,75,76].

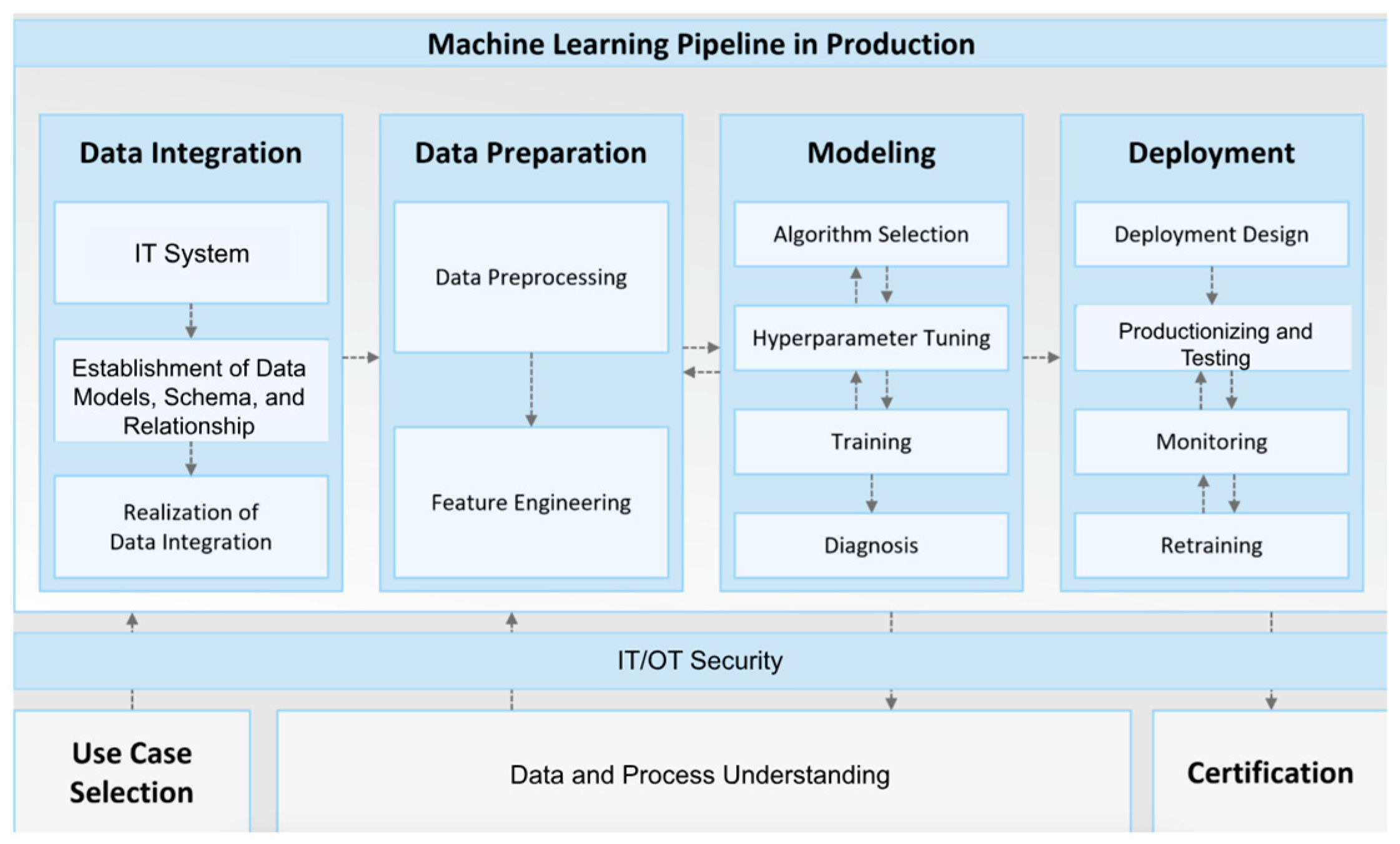

4.4. Postoperative Outcome Prediction

CNNs trained on electronic health records (EHRs) and postoperative imaging accurately predict complications. Studies have shown that DL models outperform traditional machine learning methods in predicting postoperative outcomes, using intraoperative physiological data for enhanced prognostication (Figure 10) [77,78,85]. OCT-A has been used to visualize retinal vasculature in conditions such as diabetic retinopathy and retinal vascular occlusions, offering quantitative metrics that could potentially correlate with systemic health indicators [79,80].

Predictive models analyzing saccadic eye movements and tear composition accurately forecast delirium risk after orthopedic surgery, outperforming traditional clinical scores [81,82,83,84].

4.5. Translational Research and Clinical Trials

While artificial intelligence continues to redefine diagnostics and surgical precision in musculoskeletal and ocular medicine, its transition from laboratory innovation to clinical routine remains dependent on robust translational frameworks. Multiple prospective clinical trials and real-world implementation studies aim to validate AI-driven diagnostics, predictive modeling, and surgical assistance platforms.

One prominent example is the Bridge2AI Initiative, launched by the U.S. National Institutes of Health (NIH). This initiative funds the development of ethically sourced, multi-modal datasets designed for training clinical-grade AI algorithms. It actively supports cross-disciplinary projects linking ocular imaging biomarkers (e.g., OCT metrics) to systemic pathologies such as osteoporosis and rheumatoid arthritis [80,86].

Similarly, ClinicalTrials.gov lists over 70 ongoing trials involving AI-assisted orthopedic or ophthalmic diagnostics. For example, a prospective study (NCT05369423) evaluates an AI-powered retinal imaging platform for predicting bone density loss and fracture risk in postmenopausal women, which could replace DEXA scans in resource-limited settings [87].

Another study (NCT05921345) investigates the use of AI-enhanced portable OCT devices in mobile musculoskeletal clinics, measuring the effectiveness of RNFL-based biomarkers in predicting spinal degeneration across ethnically diverse populations [88].

In orthopedic surgery, AI-powered navigation tools and robotic arms, such as those used in Stryker’s Mako platform, are being clinically evaluated for postoperative complication reduction and precision enhancement [89]. The AI-ROBOT trial, a multi-center initiative in the European Union, compares standard spinal fusion outcomes with those assisted by AI-guided robotic systems (ClinicalTrials.gov ID: NCT05844498) [80,84].

These translational studies are essential for regulatory approval, clinician adoption, patient trust, and evidence-based reimbursement policies. Embedding explainable AI (XAI) and human factors analysis into these trials will further ensure that these systems are not only accurate but also usable, interpretable, and scalable [77]. By integrating retinal metrics into AI-driven musculoskeletal models, it may be possible to develop holistic diagnostic systems capable of identifying systemic disease trajectories before overt symptoms appear.

5. Emerging Imaging Technologies and AI Integration

5.1. High-Field MRI and Photon-Counting CT

Recent advances in imaging technology have significantly enhanced musculoskeletal diagnostics. The advent of 3-tesla MRI scanners, such as those in use at Englewood Health, has improved spatial resolution by 40% over conventional 1.5 T systems, enabling visualization of small joint structures such as those of the wrist and ankle in unprecedented detail [77,90,91,92]. Complementing this, photon-counting detector CT (PCCT) reduces radiation dose while improving tissue contrast, facilitating early detection of osteolytic lesions in multiple myeloma [92,93,94]. These advances synergize with AI algorithms for automated bone age estimation, reducing inter-observer variability from 14.3% to 2.1% in pediatric cohorts [35,80,95].

5.2. Next-Generation OCT Systems

Zeiss’s Cirrus 6000 OCT system, with its expanded reference database of 870 healthy eyes, supports data-driven workflows for diagnosing glaucoma and macular degeneration. This database supports individualized diagnosis by accounting for variation in optic disc size and age [96]. Portable OCT devices, such as the Gen 3 low-cost system, employ balanced detection and quantum-optimized spectrometers to achieve dynamic ranges of 120 dB, rivaling commercial systems [97]. These devices integrate AI for real-time choroidal thickness mapping, detecting osteoporosis risk with 89% accuracy in rural clinics [87,98].

6. Challenges and Ethical Considerations in AI-Driven Ocular and Musculoskeletal Diagnostics

Despite the substantial clinical promise of artificial intelligence (AI) in ocular and musculoskeletal diagnostics, widespread implementation is challenged by technical, ethical, and translational concerns. These include data bias, model interpretability, privacy, liability, and resource accessibility, all of which must be addressed to ensure safe, equitable, and effective deployment.

6.1. Algorithmic Bias and Data Interoperability

AI model performance is highly dependent on the quality and diversity of training data. Many large-scale ocular imaging datasets disproportionately represent individuals of European ancestry while underrepresenting African, South Asian, and Indigenous populations. This imbalance introduces systematic bias, particularly for diseases such as glaucoma and diabetic retinopathy, whose presentation differs across ethnic groups [6,99,100]. Similar disparities exist in musculoskeletal imaging, which underrepresents older adults, people with disabilities, and patients from low-resource settings.
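One practical way to surface this kind of representation bias is to report performance for each demographic subgroup rather than only in aggregate. The minimal sketch below computes per-group sensitivity and specificity from labeled predictions. The group labels and records are hypothetical placeholders, not data from the cited studies.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """Per-group sensitivity and specificity.

    `records` is an iterable of (group, y_true, y_pred) tuples with binary
    labels (1 = disease present). Group names are hypothetical placeholders.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["tp" if y_pred == 1 else "fn"] += 1
        else:
            c["tn" if y_pred == 0 else "fp"] += 1

    results = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[group] = {
            # None signals that the group has no cases of that class.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
            "n": positives + negatives,
        }
    return results


records = [
    ("group_A", 1, 1), ("group_A", 1, 1), ("group_A", 0, 0), ("group_A", 0, 0),
    ("group_B", 1, 0), ("group_B", 1, 1), ("group_B", 0, 1), ("group_B", 0, 0),
]
for group, m in sorted(subgroup_metrics(records).items()):
    print(group, m)
```

The two toy groups show a large gap between them. Aggregate accuracy alone can hide exactly this kind of signal.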

Additionally, imaging datasets span heterogeneous formats, such as DICOM, JPEG, and proprietary vendor-specific encodings, which complicates their integration. Standardization efforts, such as the DICOM ophthalmology extensions developed by DICOM Working Group 9 (WG-09), facilitate AI training across modalities [35,99]. Without such harmonization, format variability can cause AI models to misinterpret or misclassify inputs.
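A small illustration shows why harmonization matters. The same anatomy stored as a 12-bit DICOM frame and as an 8-bit JPEG occupies very different raw intensity ranges, so a model trained on one may misread the other. The sketch below rescales pixel values to a common [0, 1] range using each source's nominal bit depth. It assumes the pixel arrays have already been decoded, for example by a DICOM reader. It is not a substitute for full DICOM-aware preprocessing such as rescale slope/intercept or windowing.

```python
import numpy as np


def to_unit_range(pixels: np.ndarray, bits_stored: int) -> np.ndarray:
    """Map unsigned integer pixels with a given bit depth onto [0.0, 1.0]."""
    max_value = (1 << bits_stored) - 1  # e.g. 255 for 8-bit, 4095 for 12-bit
    return np.clip(pixels.astype(np.float32) / max_value, 0.0, 1.0)


# The "same" mid-gray pixel encoded at two bit depths.
jpeg_like = np.array([[128]], dtype=np.uint8)      # 8-bit source
dicom_like = np.array([[2048]], dtype=np.uint16)   # 12-bit source stored in 16 bits
print(to_unit_range(jpeg_like, 8), to_unit_range(dicom_like, 12))
```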

6.2. Model Interpretability and Clinical Trust

Despite the promise of AI in diagnostics and surgical planning, a key hurdle to widespread clinical adoption remains the trust of clinicians and patients. A 2025 ISAKOS survey found that 47.9% of orthopedic surgeons distrust AI because of limited real-world validation [101]. A critical barrier is the “black box” nature of DL models, particularly convolutional neural networks (CNNs) and transformer architectures. Explainable AI (XAI) methods integrated into clinical platforms can make algorithmic decisions more transparent to clinicians. Grad-CAM has been applied to CNNs analyzing OCT and MRI images; it produces a heatmap by weighting a convolutional layer’s feature maps with the spatially averaged gradients of the target class score. Saliency maps have been applied in the same way. Both methods let clinicians verify which image features drove a prediction. For example, Grad-CAM applied to fracture classification models has highlighted periosteal contours and trabecular disruptions, aiding clinician validation [102,103].
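The Grad-CAM weighting described above can be written compactly. Each feature map of the last convolutional layer is weighted by the spatial average of the gradient of the class score with respect to that map. The weighted sum is then passed through a ReLU. The NumPy sketch below implements only that combination step. It assumes the activations and gradients have already been extracted from a trained CNN (for example via framework hooks); the random arrays stand in for real OCT or MRI feature maps.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine precomputed activations and gradients into a Grad-CAM map.

    activations, gradients: arrays of shape (K, H, W) holding the K feature
    maps of the target layer and d(class score)/d(feature map).
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # One weight per feature map: the global-average-pooled gradient.
    weights = gradients.mean(axis=(1, 2))                  # shape (K,)
    # Weighted sum over channels; ReLU keeps positively contributing regions.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


rng = np.random.default_rng(0)
acts = rng.random((4, 8, 8))         # hypothetical feature maps
grads = rng.normal(size=(4, 8, 8))   # hypothetical gradients
heatmap = grad_cam(acts, grads)
print(heatmap.shape, float(heatmap.min()), float(heatmap.max()))
```

In practice the heatmap is upsampled to the input image size and overlaid on the scan for clinician review.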

These visualization tools also enhance patient–provider communication by tangibly illustrating disease progression. Nonetheless, interpretability methods vary in robustness and should be evaluated against standardized benchmarks and within clinician-in-the-loop review workflows. Transparency must also be balanced against privacy. In ophthalmology, differential privacy (DP) techniques, such as calibrated pixel-level noise added to retinal images, have kept diagnostic accuracy within 3% of models trained on non-anonymized images while protecting patient identity [104,105].
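Pixel-level differential privacy, as mentioned above, is commonly realized with the Laplace mechanism. Independent noise with scale Δ/ε is added to each pixel, where ε is the privacy budget and Δ the sensitivity. The sketch below applies this to an image normalized to [0, 1]. The sensitivity Δ = 1 and the ε values are illustrative assumptions, and this is not necessarily the mechanism used in the cited studies. Real pipelines must also account for how the privacy budget composes across pixels and repeated releases.

```python
from typing import Optional

import numpy as np


def laplace_privatize(image: np.ndarray, epsilon: float, sensitivity: float = 1.0,
                      seed: Optional[int] = None) -> np.ndarray:
    """Add Laplace noise of scale sensitivity/epsilon to every pixel.

    Assumes `image` is scaled to [0, 1]. The output is clipped back to that
    range; clipping is post-processing and does not weaken the DP guarantee.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = np.random.default_rng(seed)
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon, size=image.shape)
    return np.clip(image + noise, 0.0, 1.0)


retina = np.full((64, 64), 0.5)   # hypothetical normalized fundus patch
for eps in (0.5, 5.0):
    noisy = laplace_privatize(retina, eps, seed=42)
    print(eps, round(float(np.abs(noisy - retina).mean()), 3))
```

A smaller ε gives stronger privacy but noisier images. The 3% accuracy figure reported above reflects one point on that trade-off.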

6.3. Privacy and Computational Resource Constraints

To address privacy concerns, federated learning frameworks keep data at each participating institution and share only model updates, enabling collaborative training without exchanging raw data. For example, hospitals in a network can each train a disease detection model locally and contribute their updates to a shared model. However, the computational demands of training 3D CNNs on high-dimensional inputs such as OCT volumes remain prohibitive in resource-limited settings where GPU infrastructure may be unavailable [19,81].
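The core aggregation step of federated learning can be illustrated with federated averaging (FedAvg), one common scheme. Each site trains locally and sends only its model parameters. The server then averages those parameters, weighting each site by its number of local samples. The sketch below shows only that server-side step. The site names, parameter vectors, and sample counts are hypothetical, and local training is omitted.

```python
import numpy as np


def federated_average(updates: dict[str, tuple[np.ndarray, int]]) -> np.ndarray:
    """Sample-weighted average of client parameter vectors (FedAvg server step).

    `updates` maps a site name to (parameters, number_of_local_training_samples).
    Raw images never appear here: only parameters and counts are shared.
    """
    total = sum(n for _, n in updates.values())
    if total == 0:
        raise ValueError("no training samples reported")
    return sum(params * (n / total) for params, n in updates.values())


updates = {
    "hospital_a": (np.array([0.2, -0.1, 0.5]), 800),
    "hospital_b": (np.array([0.4, 0.1, 0.3]), 200),
}
print(federated_average(updates))   # weighted toward hospital_a
```

Weighting by sample count keeps a small site from having outsized influence on the shared model. It does not, by itself, reduce the computational cost of local 3D CNN training.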

6.4. Liability and Cross-System Misdiagnosis

As AI increasingly generates diagnostic insights that influence clinical decisions, clear protocols for accountability are essential [106,107]. In cross-system applications, such as predicting spinal degeneration from retinal thinning, misclassification can result in unnecessary imaging, patient anxiety, or inappropriate interventions [108]. When such errors occur, it remains unclear whether responsibility lies with the algorithm developers, the health institution, or the interpreting physician [106,107,108].
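The cost of cross-system misclassification depends heavily on disease prevalence in the screened population. By Bayes' theorem, the positive predictive value (PPV) is given by:

PPV = sens·prev / (sens·prev + (1 − spec)·(1 − prev))

The sketch below evaluates this for a hypothetical retinal screening model. The 90% sensitivity, 90% specificity, and 5% prevalence are illustrative assumptions, not figures from the cited studies.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """Probability that a positive screen is a true positive (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


def expected_false_positives(n_screened: int, specificity: float,
                             prevalence: float) -> float:
    """Expected number of people without the condition flagged for follow-up."""
    return n_screened * (1.0 - prevalence) * (1.0 - specificity)


ppv = positive_predictive_value(0.90, 0.90, 0.05)
fp = expected_false_positives(10_000, 0.90, 0.05)
print(f"PPV: {ppv:.1%}, false positives per 10,000 screened: {fp:.0f}")
```

Under these assumptions, about two of every three positive flags would be false alarms. Each one could trigger the unnecessary imaging and anxiety described above.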

To mitigate these risks, governance frameworks must include model audit trails, transparent risk disclosure, and informed consent procedures that acknowledge AI-generated input [107,109]. Regulatory pathways should prioritize clinical validation and involve multidisciplinary oversight [109].
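One way to make a model audit trail tamper-evident is to hash-chain its entries. Each entry stores the hash of the previous one, so altering any past record invalidates every later hash. The sketch below is a minimal illustration using SHA-256 from Python's standard library. The record fields (model version, input identifier, output, clinician action) are hypothetical. A production system would also need secure storage, access control, and trusted timestamps.

```python
import hashlib
import json


class AuditTrail:
    """Append-only log in which each entry stores the hash of its predecessor."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record: dict, prev_hash: str) -> str:
        # sort_keys gives a canonical serialization, so equal records hash equally.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry_hash = self._digest(record, prev_hash)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            expected = self._digest(entry["record"], prev_hash)
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"model": "retina-msk-v1", "input_id": "case-001",
              "output": "high risk", "clinician_action": "ordered MRI"})
trail.append({"model": "retina-msk-v1", "input_id": "case-002",
              "output": "low risk", "clinician_action": "no follow-up"})
print(trail.verify())                               # True
trail.entries[0]["record"]["output"] = "low risk"   # simulated tampering
print(trail.verify())                               # False
```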

6.5. Ethical Frameworks and Global Standards

International organizations have published ethical guidelines for responsible AI use in healthcare. The World Health Organization (WHO, 2021) outlines key principles including inclusiveness, accountability, explainability, and data protection [110,111]. Similarly, the European Commission’s Ethics Guidelines for Trustworthy AI emphasize robustness, transparency, and human oversight in clinical applications [112].

Embedding these principles into institutional AI adoption strategies will be vital [111,112]. Moreover, evaluations must go beyond accuracy metrics to consider social impact, particularly equity, usability, and scalability across diverse healthcare environments [111].

6.6. Research Gaps and Translational Challenges

Etiological Mechanisms: While complement system dysregulation (e.g., elevated C1qb, C5, and CFH in Modic changes) is linked to disc degeneration, the pathways connecting choroidal thinning to spinal pathologies remain unclear [6,113].

Non-Invasive ICP Monitoring: Current OCT-based ICP estimation lacks validation against invasive measurements, limiting its utility in managing SANS [81,114].

Long-Term AI Efficacy: Prospective trials are needed to validate AI’s role in preventing implant loosening, as current studies rely on retrospective cohorts [101].
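For the ICP validation gap noted above, comparing OCT-based estimates with invasive measurements would typically call for an agreement analysis rather than correlation alone. The sketch below computes the Bland–Altman bias and 95% limits of agreement, defined as the mean difference ± 1.96 SD of the paired differences. The paired values are hypothetical, in mmHg, and are not drawn from the cited studies.

```python
from statistics import mean, stdev


def bland_altman(estimates: list[float],
                 reference: list[float]) -> tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) of agreement between two methods."""
    if len(estimates) != len(reference) or len(estimates) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [e - r for e, r in zip(estimates, reference)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)   # sample SD of the paired differences
    return bias, bias - spread, bias + spread


# Hypothetical paired ICP values (mmHg): OCT-derived estimate vs invasive probe.
oct_icp = [12.0, 15.5, 18.0, 22.5, 9.5, 14.0]
invasive_icp = [11.0, 16.0, 20.0, 21.0, 10.0, 13.5]
bias, lower, upper = bland_altman(oct_icp, invasive_icp)
print(f"bias {bias:+.2f} mmHg, 95% limits of agreement {lower:.2f} to {upper:.2f} mmHg")
```

Agreement is judged clinically. Limits wider than the ICP margin that changes management would argue against replacing invasive monitoring, even if the two methods correlate strongly.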

7. Future Directions: Toward a Unified Framework for Global AI Deployment

The convergence of quantum computing, portable diagnostics, autonomous surgical systems, and global collaborative initiatives signals a paradigm shift in AI-driven medicine, one that is not only technologically advanced but also ethically grounded, equitable, and scalable across health systems. These technologies are best viewed as complementary components rather than isolated innovations; integrated, they can form the foundation of a cohesive ecosystem for next-generation diagnostics and therapeutics.

7.1. Quantum Computing and Quantum Machine Learning

Quantum computing and quantum machine learning (ML), which integrates quantum computing with AI, are expected to enhance the processing of large-scale, high-dimensional datasets such as multi-omics data and 3D imaging. These technologies could accelerate model training and improve diagnostic accuracy by identifying subtle associations in complex biological systems [115]. For instance, Ehlers–Danlos syndrome manifests in both ocular and musculoskeletal tissues [58,59,60]. Quantum-enabled analytics could help identify correlations between ocular collagen ratios and tendon elasticity in affected patients. By uncovering such associations, these methods may advance early diagnosis and yield novel biomarkers that support targeted and preventive clinical strategies [116,117]. In surgical applications, quantum computing could support autonomous robotic systems by enabling real-time optimization of intraoperative decisions, particularly for high-precision tasks in orthopedics and ophthalmology. As surgical robots learn from large procedural histories, quantum-enhanced feedback loops may narrow error margins, reduce recovery times, and improve outcomes through adaptive control.

7.2. Portable AI-Powered Diagnostic Devices

Portable diagnostic devices represent another frontier in healthcare innovation, extending clinical capabilities into underserved regions. Handheld OCT-CNN platforms such as the Optovue iWellness device exemplify this advance and may support early detection of systemic diseases such as osteoporosis. When coupled with federated learning frameworks, these compact devices can train local AI models while protecting patient privacy by transmitting only encrypted model updates [117]. For example, portable OCT devices deployed in rural clinics across East Africa or South Asia could contribute to global retinal biomarker models without raw patient data leaving the country of origin, preserving data sovereignty.
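Transmitting only protected model updates is often achieved with secure aggregation. The toy sketch below shows the pairwise-masking idea behind such protocols. Every pair of sites shares a random mask; one site adds it and the other subtracts it. As a result, each individual upload looks random, while the masks cancel in the server's sum. The sketch uses integer-quantized updates modulo a fixed modulus. It omits key agreement, dropout handling, and cryptographic randomness, all of which real protocols require. The site names and values are hypothetical.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo this value


def masked_uploads(updates: dict[str, list[int]], seed: int = 0) -> dict[str, list[int]]:
    """Return each site's update with pairwise masks applied (mod MODULUS).

    A shared seeded RNG stands in for pairwise key agreement. This is for
    illustration only and is NOT cryptographically secure.
    """
    rng = random.Random(seed)
    sites = sorted(updates)
    length = len(next(iter(updates.values())))
    masked = {s: [v % MODULUS for v in updates[s]] for s in sites}
    for i, a in enumerate(sites):
        for b in sites[i + 1:]:
            mask = [rng.randrange(MODULUS) for _ in range(length)]
            # Site a adds the shared mask; site b subtracts it.
            masked[a] = [(x + m) % MODULUS for x, m in zip(masked[a], mask)]
            masked[b] = [(x - m) % MODULUS for x, m in zip(masked[b], mask)]
    return masked


def aggregate(uploads: dict[str, list[int]]) -> list[int]:
    """Server-side sum: pairwise masks cancel, revealing only the total."""
    return [sum(column) % MODULUS for column in zip(*uploads.values())]


updates = {"clinic_a": [5, 17, 3], "clinic_b": [2, 4, 9], "clinic_c": [1, 0, 6]}
uploads = masked_uploads(updates)
print(aggregate(uploads))   # [8, 21, 18]
```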

7.3. Autonomous Surgical Systems

Next-generation autonomous robots are reported to offer substantial gains in surgical precision and patient recovery. Trained on more than 45 million procedure histories, these systems can perform intricate surgical tasks with an accuracy of 0.2 mm. In procedures such as AC reconstruction, they have reduced recovery times by an average of 4.3 weeks. This precision translates into less tissue trauma, shorter recovery, and higher patient satisfaction [117].

Furthermore, AI–human hybrid surgical platforms are emerging that combine autonomous robotics with real-time intraoperative OCT guidance and the surgeon’s clinical judgment. In spinal fusion surgery, such platforms have reduced complications by approximately 29% compared with traditional methods. By pairing robotic precision with human decision-making at critical intraoperative moments, these hybrid systems may set new standards for minimally invasive surgery and deliver safer clinical outcomes [117].

7.4. Global Collaborative Initiatives

To support this evolution, international cooperation is required to address dataset variability and facilitate global access. The World Health Organization (WHO), in its 2021 report Ethics and Governance of Artificial Intelligence for Health, emphasizes inclusive, transparent, and equitable AI deployment, particularly in low-resource settings [118,119,120]. These principles are increasingly reflected in practice: AI-powered portable imaging devices are being deployed in underserved regions to improve healthcare access. In Rwanda, for example, AI-enabled handheld fundus cameras have successfully screened for diabetic retinopathy, improving referral uptake and diagnostic efficiency in remote clinics [121,122].

Data on OCT-A-based osteoporosis screening in rural South Asia remain limited. However, AI-based screening tools have demonstrated high accuracy in identifying bone loss in patients with chronic obstructive pulmonary disease, suggesting broader applicability in similar settings [121,122].

7.5. Data Harmonization

The NIH’s Bridge2AI program and the EU’s AI-ROBOT trials rely on data harmonization: aligning imaging protocols, annotation standards, and metadata structures to ensure interoperability across regions and institutions [123,124]. Open-source frameworks such as OpenMRS (Version 3.2.1) and the Medical Open Network for AI (MONAI, Version 1.2.0) can be adapted to local clinical needs without significant financial burden, supporting sustainable and scalable innovation [121,122]. Their flexibility allows customization, making them particularly valuable in regions where proprietary solutions are unaffordable or impractical. These tools and initiatives illustrate how ethical, collaborative, and open-source approaches can help bridge global healthcare disparities through AI [121,122].
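At its simplest, metadata harmonization means mapping each site's field names and units onto one shared schema before data are pooled. The sketch below illustrates this with a hypothetical two-vendor mapping. The field names, vendor labels, and target schema are invented for illustration and are not taken from Bridge2AI, AI-ROBOT, OpenMRS, or MONAI.

```python
# Hypothetical vendor-specific field names mapped to a shared schema.
FIELD_MAP = {
    "vendor_x": {"PatAge": "age_years", "ScanType": "modality",
                 "ChoroidThk_um": "choroid_thickness_mm"},
    "vendor_y": {"age": "age_years", "modality": "modality",
                 "choroid_mm": "choroid_thickness_mm"},
}
# Unit conversions keyed by (vendor, source field), applied during renaming.
UNIT_CONVERSIONS = {("vendor_x", "ChoroidThk_um"): lambda v: v / 1000.0}  # um -> mm
REQUIRED = {"age_years", "modality", "choroid_thickness_mm"}


def harmonize(record: dict, vendor: str) -> dict:
    """Rename fields and convert units so records from any vendor share one schema."""
    mapping = FIELD_MAP[vendor]
    out = {}
    for src, value in record.items():
        if src not in mapping:
            continue  # drop fields the shared schema does not define
        convert = UNIT_CONVERSIONS.get((vendor, src), lambda v: v)
        out[mapping[src]] = convert(value)
    missing = REQUIRED - set(out)
    if missing:
        raise ValueError(f"missing required fields: {sorted(missing)}")
    return out


a = harmonize({"PatAge": 64, "ScanType": "OCT", "ChoroidThk_um": 250}, "vendor_x")
b = harmonize({"age": 64, "modality": "OCT", "choroid_mm": 0.25}, "vendor_y")
print(a == b)  # True
```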

Multilingual AI models support global initiatives by interpreting clinical notes, radiology reports, and patient interactions across linguistic boundaries, ensuring that AI-powered platforms remain accessible and inclusive. The AI-MIRACLE Study demonstrates the use of large language models like ChatGPT 4.0 for translating and simplifying radiology reports, helping to overcome prevalent language barriers and improving comprehension in healthcare communication [125].

In summary, quantum computing, portable diagnostics, autonomous robotics, and global collaborations are not siloed advances; they are interdependent components of a unified, global health infrastructure. At their intersection lies the vision of an intelligent, responsive, and universally accessible healthcare system. Realizing this vision will require continuous investment in ethical standards, computational equity, and collaborative innovation.

8. Conclusions

AI’s transformative impact on musculoskeletal and ocular medicine lies in its ability to decode complex interdependencies between seemingly disparate clinical systems. In ophthalmology, AI algorithms enhance surgical planning and precision in cataract and retinal procedures by analyzing preoperative data and images. They also streamline documentation for ocular surface diseases [126]. Similarly, AI-powered image generation is reshaping clinical ophthalmic practice by optimizing screening workflows and generating synthetic ophthalmic images, although its adoption requires careful validation to mitigate risks of misuse [127].

In parallel, musculoskeletal medicine has seen significant advances in AI-driven triage, detecting fractures (including pediatric cases) and segmenting vertebral and meniscal pathologies with high sensitivity [3]. AI personalizes treatment plans for conditions like osteoarthritis by leveraging ML to recommend tailored interventions [128]. Advanced applications include radiomics for tumor differentiation and prognostication, though clinical translation remains challenging for multi-tissue assessments (e.g., joint MRI) [3,128].

From non-invasive biomarker detection to autonomous surgical robots, AI bridges clinical specialties. In diagnostics, robotic ultrasound systems (RUSSs) standardize image acquisition and use AI to adapt to patient anatomy, reducing dependence on operator skill [129]. In surgery, autonomous systems such as the Smart Tissue Autonomous Robot (STAR) have outperformed human surgeons in precision tasks such as bowel anastomosis [130]. Integration with augmented reality (AR) and 3D modeling further refines tumor resection margins in robotic breast surgery, as shown in studies combining MRI-derived models with real-time navigation [131].

Importantly, high-resolution ocular imaging yields diagnostic insights into systemic diseases. Studies show the following associations:

- choroidal thinning correlates with lumbar disc degeneration [6,33];
- RNFL thinning predicts cervical spine instability with over 90% sensitivity [35,37];
- OCT-A-detected microvascular anomalies reflect inflammatory changes in conditions such as rheumatoid arthritis and ankylosing spondylitis [41,42,43,44,45,46,47,48].

These findings underscore a paradigm shift: the retina may serve not only as a window to the brain but also as a biomarker-rich portal into bone and spine.

As AI continues to integrate with emerging technologies such as quantum computing, federated learning, and portable diagnostics, it is poised to redefine the landscape of precision medicine. Realizing this vision will require prospective clinical validation, interdisciplinary collaboration, and a commitment to ethical deployment. Ultimately, AI is not merely a tool but a catalyst for unifying fragmented diagnostic pathways into a cohesive, system-wide understanding of human health, promising earlier detection, personalized treatment, and more equitable global healthcare delivery.

Author Contributions

Conceptualization, R.K. and C.G.; methodology, R.K.; validation, C.G., T.C.S. and A.T.; formal analysis, R.K. and K.S.; investigation, R.K., C.G., T.C.S. and S.V.; resources, J.O. and E.W.; data curation, R.K. and N.Z.; writing—original draft preparation, R.K.; writing—review and editing, C.G., T.H., S.V., K.S. and A.T.; visualization, R.K. and N.Z.; supervision, A.T.; project administration, R.K.; funding acquisition, A.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Conflicts of Interest

The authors report no conflicts of interest or competing interests.

References

- Zetterlund, C.; Lundqvist, L.-O.; Richter, H.O. The Relationship Between Low Vision and Musculoskeletal Complaints. A Case Control Study Between Age-related Macular Degeneration Patients and Age-matched Controls with Normal Vision. J. Optom. 2009, 2, 127–133.

- Sánchez-González, M.C.; Gutiérrez-Sánchez, E.; Sánchez-González, J.; Rebollo-Salas, M.; Ruiz-Molinero, C.; Jiménez-Rejano, J.J.; Pérez-Cabezas, V. Visual system disorders and musculoskeletal neck complaints: A systematic review and meta-analysis. Ann. N. Y. Acad. Sci. 2019, 1457, 26–40.

- Guermazi, A.; Omoumi, P.; Tordjman, M.; Fritz, J.; Kijowski, R.; Regnard, N.-E.; Carrino, J.; Kahn, C.E., Jr.; Knoll, F.; Rueckert, D.; et al. How AI May Transform Musculoskeletal Imaging. Radiology 2024, 310, e230764, Erratum in Radiology 2024, 310, e249002.

- Bousson, V.; Benoist, N.; Guetat, P.; Attané, G.; Salvat, C.; Perronne, L. Application of artificial intelligence to imaging interpretations in the musculoskeletal area: Where are we? Where are we going? Jt. Bone Spine 2023, 90, 105493.

- Zhan, H.; Teng, F.; Liu, Z.; Yi, Z.; He, J.; Chen, Y.; Geng, B.; Xia, Y.; Wu, M.; Jiang, J. Artificial Intelligence Aids Detection of Rotator Cuff Pathology: A Systematic Review. Arthroscopy 2024, 40, 567–578.

- Kim, B.R.; Yoo, T.K.; Kim, H.K.; Ryu, I.H.; Kim, J.K.; Lee, I.S.; Kim, J.S.; Shin, D.-H.; Kim, Y.-S.; Kim, B.T. Oculomics for sarcopenia prediction: A machine learning approach toward predictive, preventive, and personalized medicine. EPMA J. 2022, 13, 367–382.

- Bellemo, V.; Das, A.K.; Sreng, S.; Chua, J.; Wong, D.; Shah, J.; Jonas, R.; Tan, B.; Liu, X.; Xu, X.; et al. Optical coherence tomography choroidal enhancement using generative deep learning. npj Digit. Med. 2024, 7, 115.

- Lau, J.K.; Cheung, S.W.; Collins, M.J.; Cho, P. Repeatability of choroidal thickness measurements with Spectralis OCT images. BMJ Open Ophthalmol. 2019, 4, e000237.

- Clariushd. MSK AI—Unlock the Power of AI for Enhanced MSK Ultrasound Workflow. Clarius. 17 March 2023. Available online: https://clarius.com/technology/msk-ai/ (accessed on 10 April 2025).

- Paladugu, P.; Kumar, R.; Ong, J.; Waisberg, E.; Sporn, K. Virtual reality-enhanced rehabilitation for improving musculoskeletal function and recovery after trauma. J. Orthop. Surg. Res. 2025, 20, 404.

- Tong, M.W.; Zhou, J.; Akkaya, Z.; Majumdar, S.; Bhattacharjee, R. Artificial intelligence in musculoskeletal applications: A primer for radiologists. Diagn. Interv. Radiol. 2025, 31, 89–101.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic accuracy of deep learning in medical imaging: A systematic review and meta-analysis. npj Digit. Med. 2021, 4, 65.

- Kumar, R.; Sporn, K.; Ong, J.; Waisberg, E.; Paladugu, P.; Vaja, S.; Hage, T.; Sekhar, T.C.; Vadhera, A.S.; Ngo, A.; et al. Integrating Artificial Intelligence in Orthopedic Care: Advancements in Bone Care and Future Directions. Bioengineering 2025, 12, 513.

- Gyftopoulos, S.; Lin, D.; Knoll, F.; Doshi, A.M.; Rodrigues, T.C.; Recht, M.P. Artificial Intelligence in Musculoskeletal Imaging: Current Status and Future Directions. Am. J. Roentgenol. 2019, 213, 506–513.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Wikipedia Contributors. File: Example Architecture of U-Net for Producing K 256-by-256 Image Masks for a 256-by-256 RGB Image.png. Wikipedia. Published 5 August 2019. Available online: https://en.wikipedia.org/wiki/File:Example_architecture_of_U-Net_for_producing_k_256-by-256_image_masks_for_a_256-by-256_RGB_image.png (accessed on 14 May 2025).

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2021, arXiv:2010.11929.

- Wikimedia Commons. File: Vision Transformer.svg. Published 6 May 2025. Available online: https://commons.wikimedia.org/wiki/File:Vision_Transformer.svg (accessed on 14 May 2025).

- Rehman, M.H.U.; Pinaya, W.H.L.; Nachev, P.; Teo, J.T.; Ourselin, S.; Cardoso, M.J. Federated learning for medical imaging radiology. Br. J. Radiol. 2023, 96, 20220890.

- Zhang, Y.; Ji, X.; Liu, W.; Li, Z.; Zhang, J.; Liu, S.; Zhong, W.; Hu, L.; Li, W. A Spine Segmentation Method under an Arbitrary Field of View Based on 3D Swin Transformer. Int. J. Intell. Syst. 2023, 2023, 1–16.

- He, J.; Wang, J.; Han, Z.; Ma, J.; Wang, C.; Qi, M. An interpretable transformer network for the retinal disease classification using optical coherence tomography. Sci. Rep. 2023, 13, 3637.

- Sammani, F.; Deligiannis, N. Interpreting and Analysing CLIP’s Zero-Shot Image Classification via Mutual Knowledge. arXiv 2024, arXiv:2410.13016v3.

- Zhang, Y.; Shen, Z.; Jiao, R. Segment Anything Model for Medical Image Segmentation: Current Applications and Future Directions. arXiv 2024, arXiv:2401.03495v1.

- Wikimedia Commons. File: Contrastive Language-Image Pretraining.png. Published 6 September 2024. Available online: https://commons.wikimedia.org/wiki/File:Contrastive_Language-Image_Pretraining.png (accessed on 14 May 2025).

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643.

- Sükei, E.; Rumetshofer, E.; Schmidinger, N.; Mayr, A.; Schmidt-Erfurth, U.; Klambauer, G.; Bogunović, H. Multi-modal representation learning in retinal imaging using self-supervised learning for enhanced clinical predictions. Sci. Rep. 2024, 14, 26802.

- Kunze, K.N. Generative Artificial Intelligence and Musculoskeletal Health Care. HSS J. 2025. Epub ahead of print.

- Adnan, M.; Kalra, S.; Cresswell, J.C.; Taylor, G.W.; Tizhoosh, H.R. Federated learning and differential privacy for medical image analysis. Sci. Rep. 2022, 12, 1953.

- Wikimedia Commons. File: Federated Learning (Centralized vs Decentralized).png. Published 15 July 2024. Available online: https://commons.wikimedia.org/wiki/File:Federated_learning_(centralized_vs_decentralized).png (accessed on 14 May 2025).

- Droppelmann, G.; Rodríguez, C.; Jorquera, C.; Feijoo, F. Artificial intelligence in diagnosing upper limb musculoskeletal disorders: A systematic review and meta-analysis of diagnostic tests. EFORT Open Rev. 2024, 9, 241–251.

- Guan, H.; Yap, P.T.; Bozoki, A.; Liu, M. Federated Learning for Medical Image Analysis: A Survey. arXiv 2024, arXiv:2306.05980v4.

- Dinescu, S.C.; Stoica, D.; Bita, C.E.; Nicoara, A.-I.; Cirstei, M.; Staiculesc, M.-A.; Vreju, F. Applications of artificial intelligence in musculoskeletal ultrasound: Narrative review. Front. Med. 2023, 10, 1286085.

- Rani, S.; Memoria, M.; Almogren, A.; Bharany, S.; Joshi, K.; Altameem, A.; Rehman, A.U.; Hamam, H. Deep learning to combat knee osteoarthritis and severity assessment by using CNN-based classification. BMC Musculoskelet. Disord. 2024, 25, 817.

- Mohammed, A.S.; Hasanaath, A.A.; Latif, G.; Bashar, A. Knee Osteoarthritis Detection and Severity Classification Using Residual Neural Networks on Preprocessed X-ray Images. Diagnostics 2023, 13, 1380.

- Gitto, S.; Serpi, F.; Albano, D.; Risoleo, G.; Fusco, S.; Messina, C.; Sconfienza, L.M. AI applications in musculoskeletal imaging: A narrative review. Eur. Radiol. Exp. 2024, 8, 22.

- Gilvaz, V.J.; Reginato, A.M. Artificial intelligence in rheumatoid arthritis: Potential applications and future implications. Front. Med. 2023, 10, 1280312.

- Baek, S.D.; Lee, J.; Kim, S.; Song, H.-T.; Lee, Y.H. Artificial Intelligence and Deep Learning in Musculoskeletal Magnetic Resonance Imaging. Investig. Magn. Reson. Imaging 2023, 27, 67–74.

- Wikimedia Commons. File: Typical Cnn.png. Published 5 July 2022. Available online: https://commons.wikimedia.org/wiki/File:Typical_cnn.png (accessed on 14 May 2025).

- Wolterink, J.M.; Mukhopadhyay, A.; Leiner, T.; Vogl, T.J.; Bucher, A.M.; Išgum, I. Generative Adversarial Networks: A Primer for Radiologists. RadioGraphics 2021, 41, 840–857.

- Debs, P.; Fayad, L.M. The promise and limitations of artificial intelligence in musculoskeletal imaging. Front. Radiol. 2023, 3, 1242902.

- van Bentum, R.; Baniaamam, M.; Kinaci-Tas, B.; van de Kreeke, J.; Kocyigit, M.; Tomassen, J.; Braber, A.D.; Visser, P.; ter Wee, M.; Serné, E.; et al. Microvascular changes of the retina in ankylosing spondylitis, and the association with cardiovascular disease—The eye for a heart study. Semin. Arthritis Rheum. 2020, 50, 1535–1541.

- Castellino, N.; Longo, A.; Fallico, M.; Russo, A.; Bonfiglio, V.; Cennamo, G.; Fossataro, F.; Fabbrocini, G.; Balato, A.; Parisi, G.; et al. Retinal Vascular Assessment in Psoriasis: A Multicenter Study. Front. Neurosci. 2021, 15, 629401.

- Jiang, L.; Qian, Y.; Li, Q.; Jiang, J.; Zhang, Y.; Che, X.; Wang, Z. Choroidal Thickness in Relation to Bone Mineral Density with Swept-Source Optical Coherence Tomography. J. Ophthalmol. 2021, 2021, 9995546.

- Ohrndorf, S.; Glimm, A.-M.; Ammitzbøll-Danielsen, M.; Ostergaard, M.; Burmester, G.R. Fluorescence optical imaging: Ready for prime time? RMD Open 2021, 7, e001497.

- Eraslan, M.; Cerman, E.; Yildiz Balci, S.; Celiker, H.; Sahin, O.; Temel, A.; Suer, D.; Tuncer Elmaci, N. The choroid and lamina cribrosa is affected in patients with Parkinson’s disease: Enhanced depth imaging optical coherence tomography study. Acta Ophthalmol. 2016, 94, e68–e75.

- Brown, G.L.; Camacci, M.L.; Kim, S.D.; Grillo, S.; Nguyen, J.V.; Brown, D.A.; Ullah, S.P.; Lewis, M.M.; Du, G.; Kong, L.; et al. Choroidal Thickness Correlates with Clinical and Imaging Metrics of Parkinson’s Disease: A Pilot Study. J. Park. Dis. 2021, 11, 1857–1868.

- Ammitzbøll-Danielsen, M.; Glinatsi, D.; Terslev, L.; Østergaard, M. A novel fluorescence optical imaging scoring system for hand synovitis in rheumatoid arthritis—Validity and agreement with ultrasound. Rheumatology 2022, 61, 636–647.

- Fekrazad, S.; Farahani, M.S.; Salehi, M.A.; Hassanzadeh, G.; Arevalo, J.F. Choroidal thickness in eyes of rheumatoid arthritis patients measured using optical coherence tomography: A systematic review and meta-analysis. Surv. Ophthalmol. 2024, 69, 435–440.

- Marchesi, N.; Fahmideh, F.; Boschi, F.; Pascale, A.; Barbieri, A. Ocular Neurodegenerative Diseases: Interconnection between Retina and Cortical Areas. Cells 2021, 10, 2394.

- Zhao, B.; Yan, Y.; Wu, X.; Geng, Z.; Wu, Y.; Xiao, G.; Wang, L.; Zhou, S.; Wei, L.; Wang, K.; et al. The correlation of retinal neurodegeneration and brain degeneration in patients with Alzheimer’s disease using optical coherence tomography angiography and MRI. Front. Aging Neurosci. 2023, 15, 1089188.

- Murueta-Goyena, A.; Romero-Bascones, D.; Teijeira-Portas, S.; Urcola, J.A.; Ruiz-Martínez, J.; Del Pino, R.; Acera, M.; Petzold, A.; Wagner, S.K.; Keane, P.A.; et al. Association of retinal neurodegeneration with the progression of cognitive decline in Parkinson’s disease. npj Park. Dis. 2024, 10, 26.

- Hao, J.; Kwapong, W.R.; Shen, T.; Fu, H.; Xu, Y.; Lu, Q.; Liu, S.; Zhang, J.; Liu, Y.; Zhao, Y.; et al. Early detection of dementia through retinal imaging and trustworthy AI. npj Digit. Med. 2024, 7, 294.

- Vitar, R.L. The Role of Retinal Biomarkers in Neurodegenerative Disease Detection. Retinai.com. Published 27 June 2024. Available online: https://www.retinai.com/articles/the-role-of-retinal-biomarkers-in-neurodegenerative-disease-detection (accessed on 14 April 2025).

- Wikimedia Commons. File: 1413 Structure of the Eye.jpg. Published 25 March 2023. Available online: https://commons.wikimedia.org/wiki/File:1413_Structure_of_the_Eye.jpg (accessed on 15 May 2025).

- Wang, J.; Yang, M.; Tian, Y.; Feng, R.; Xu, K.; Teng, M.; Wang, J.; Wang, Q.; Xu, P. Causal associations between common musculoskeletal disorders and dementia: A Mendelian randomization study. Front. Aging Neurosci. 2023, 15, 1253791.

- Xu, S.; Wen, S.; Yang, Y.; He, J.; Yang, H.; Qu, Y.; Zeng, Y.; Zhu, J.; Fang, F.; Song, H. Association Between Body Composition Patterns, Cardiovascular Disease, and Risk of Neurodegenerative Disease in the UK Biobank. Neurology 2024, 103, e209659.

- Rahmati, M.; Joneydi, M.S.; Koyanagi, A.; Yang, G.; Ji, B.; Lee, S.W.; Yon, D.K.; Smith, L.; Shin, J.I.; Li, Y. Resistance training restores skeletal muscle atrophy and satellite cell content in an animal model of Alzheimer’s disease. Sci. Rep. 2023, 13, 2535.

- Asanad, S.; Bayomi, M.; Brown, D.; Buzzard, J.; Lai, E.; Ling, C.; Miglani, T.; Mohammed, T.; Tsai, J.; Uddin, O.; et al. Ehlers-Danlos syndromes and their manifestations in the visual system. Front. Med. 2022, 9, 996458.

- Comberiati, A.M.; Iannetti, L.; Migliorini, R.; Armentano, M.; Graziani, M.; Celli, L.; Zambrano, A.; Celli, M.; Gharbiya, M.; Lambiase, A. Ocular Motility Abnormalities in Ehlers-Danlos Syndrome: An Observational Study. Appl. Sci. 2023, 13, 5240.

- Yoshikawa, Y.; Koto, T.; Ishida, T.; Uehara, T.; Yamada, M.; Kosaki, K.; Inoue, M. Rhegmatogenous Retinal Detachment in Musculocontractural Ehlers-Danlos Syndrome Caused by Biallelic Loss-of-Function Variants of Gene for Dermatan Sulfate Epimerase. J. Clin. Med. 2023, 12, 1728. [Google Scholar] [CrossRef]

- Zatorski, N.; Sun, Y.; Elmas, A.; Dallago, C.; Karl, T.; Stein, D.; Rost, B.; Huang, K.-L.; Walsh, M.; Schlessinger, A. Structural Analysis of Genomic and Proteomic Signatures Reveal Dynamic Expression of Intrinsically Disordered Regions in Breast Cancer and Tissue. bioRxiv 2023. bioRxiv:2023.02.23.529755. [Google Scholar]

- Chavez, L.; Meguro, J.; Chen, S.; Nunes de Paiva, V.; Zambrano, R.; Eterno, J.M.; Kumar, R.; Duncan, M.R.; Benny, M.; Young, K.C.; et al. Circulating extracellular vesicles activate the pyroptosis pathway in the brain following ventilation-induced lung injury. J. Neuroinflamm. 2021, 18, 310. [Google Scholar] [CrossRef]

- Kasper, M.; Heming, M.; Schafflick, D.; Li, X.; Lautwein, T.; zu Horste, M.M.; Bauer, D.; Walscheid, K.; Wiendl, H.; Loser, K.; et al. Intraocular dendritic cells characterize HLA-B27-associated acute anterior uveitis. eLife 2021, 10, e67396. [Google Scholar] [CrossRef]

- Venkatesh, K.P.; Raza, M.M.; Kvedar, J.C. Health digital twins as tools for precision medicine: Considerations for computation, implementation, and regulation. npj Digit. Med. 2022, 5, 150. [Google Scholar] [CrossRef] [PubMed]

- Papachristou, K.; Katsakiori, P.F.; Papadimitroulas, P.; Strigari, L.; Kagadis, G.C. Digital Twins’ Advancements and Applications in Healthcare, Towards Precision Medicine. J. Pers. Med. 2024, 14, 1101. [Google Scholar] [CrossRef] [PubMed]

- Bcharah, G.; Gupta, N.; Panico, N.; Winspear, S.; Bagley, A.; Turnow, M.; D’Amico, R.; Ukachukwu, A.-E.K. Innovations in Spine Surgery: A Narrative Review of Current Integrative Technologies. World Neurosurg. 2024, 184, 127–136.

- Sun, T.; Wang, J.; Suo, M.; Liu, X.; Huang, H.; Zhang, J.; Zhang, W.; Li, Z. The Digital Twin: A Potential Solution for the Personalized Diagnosis and Treatment of Musculoskeletal System Diseases. Bioengineering 2023, 10, 627.

- Sun, T.; He, X.; Song, X.; Shu, L.; Li, Z. The Digital Twin in Medicine: A Key to the Future of Healthcare? Front. Med. 2022, 9, 907066.

- Saarinen, A.J.; Suominen, E.N.; Helenius, L.; Syvänen, J.; Raitio, A.; Helenius, I. Intraoperative 3D Imaging Reduces Pedicle Screw Related Complications and Reoperations in Adolescents Undergoing Posterior Spinal Fusion for Idiopathic Scoliosis: A Retrospective Study. Children 2022, 9, 1129.

- Kanno, H.; Handa, K.; Murotani, M.; Ozawa, H. A Novel Intraoperative CT Navigation System for Spinal Fusion Surgery in Lumbar Degenerative Disease: Accuracy and Safety of Pedicle Screw Placement. J. Clin. Med. 2024, 13, 2105.

- Waheed, N.K.; Rosen, R.B.; Jia, Y.; Munk, M.R.; Huang, D.; Fawzi, A.; Chong, V.; Nguyen, Q.D.; Sepah, Y.; Pearce, E. Optical coherence tomography angiography in diabetic retinopathy. Prog. Retin. Eye Res. 2023, 97, 101206.

- Albelooshi, A.; Hamie, M.; Bollars, P.; Althani, S.; Salameh, R.; Almasri, M.; Schotanus, M.G.M.; Meshram, P. Image-free handheld robotic-assisted technology improved the accuracy of implant positioning compared to conventional instrumentation in patients undergoing simultaneous bilateral total knee arthroplasty, without additional benefits in improvement of clinical outcomes. Knee Surg. Sports Traumatol. Arthrosc. 2023, 31, 4833–4841.

- Foley, K.A.; Schwarzkopf, R.; Culp, B.M.; Bradley, M.P.; Muir, J.M.; McIntosh, E.I. Improving alignment in total knee arthroplasty: A cadaveric assessment of a surgical navigation tool with computed tomography imaging. Comput. Assist. Surg. 2023, 28, 2267749.

- Khojastehnezhad, M.A.; Youseflee, P.; Moradi, A.; Ebrahimzadeh, M.H.; Jirofti, N. Artificial Intelligence and the State of the Art of Orthopedic Surgery. Arch. Bone Jt. Surg. 2025, 13, 17–22.

- Reddy, K.; Gharde, P.; Tayade, H.; Patil, M.; Reddy, L.S.; Surya, D. Advancements in Robotic Surgery: A Comprehensive Overview of Current Utilizations and Upcoming Frontiers. Cureus 2023, 15, e50415.

- Fisher, C.; Harty, J.; Yee, A.J.M.; Li, C.L.; Komolibus, K.; Grygoryev, K.; Lu, H.; Burke, R.; Wilson, B.C.; Andersson-Engels, S. Perspective on the integration of optical sensing into orthopedic surgical devices. J. Biomed. Opt. 2022, 27, 010601.

- Shickel, B.; Loftus, T.J.; Ruppert, M.; Upchurch, G.R.; Ozrazgat-Baslanti, T.; Rashidi, P.; Bihorac, A. Dynamic predictions of postoperative complications from explainable, uncertainty-aware, and multi-task deep neural networks. Sci. Rep. 2023, 13, 1224.

- Balch, J.A.; Ruppert, M.M.; Shickel, B.; Ozrazgat-Baslanti, T.; Tighe, P.J.; Efron, P.A.; Upchurch, G.R.; Rashidi, P.; Bihorac, A.; Loftus, T.J. Building an automated, machine learning-enabled platform for predicting post-operative complications. Physiol. Meas. 2023, 44, 024001.

- Ong, C.J.T.; Wong, M.Y.Z.; Cheong, K.X.; Zhao, J.; Teo, K.Y.C.; Tan, T.-E. Optical Coherence Tomography Angiography in Retinal Vascular Disorders. Diagnostics 2023, 13, 1620.

- McLean, K.A.; Sgrò, A.; Brown, L.R.; Buijs, L.F.; Mountain, K.E.; Shaw, C.A.; Drake, T.M.; Pius, R.; Knight, S.R.; Fairfield, C.J.; et al. Multimodal machine learning to predict surgical site infection with healthcare workload impact assessment. npj Digit. Med. 2025, 8, 121.

- Price, H.B.; Song, G.; Wang, W.; O’Kane, E.; Lu, K.; Jelly, E.; Miller, D.A.; Wax, A. Development of next generation low-cost OCT towards improved point-of-care retinal imaging. Biomed. Opt. Express 2025, 16, 748–759.

- van der Kooi, A.W.; Rots, M.L.; Huiskamp, G.; Klijn, F.A.; Koek, H.L.; Kluin, J.; Leijten, F.S.; Slooter, A.J. Delirium detection based on monitoring of blinks and eye movements. Am. J. Geriatr. Psychiatry 2014, 22, 1575–1582.

- Noah, A.M.; Spendlove, J.; Tu, Z.; Proudlock, F.; Constantinescu, C.S.; Gottlob, I.; Auer, D.P.; Dineen, R.A.; Moppett, I.K. Retinal imaging with hand-held optical coherence tomography in older people with or without postoperative delirium after hip fracture surgery: A feasibility study. PLoS ONE 2024, 19, e0305964.

- Mohammadi, I.; Rajai Firouzabadi, S.; Hosseinpour, M.; Akhlaghpasand, M.; Hajikarimloo, B.; Zeraatian-Nejad, S.; Nia, P.S. Using artificial intelligence to predict post-operative outcomes in congenital heart surgeries: A systematic review. BMC Cardiovasc. Disord. 2024, 24, 718, Erratum in BMC Cardiovasc. Disord. 2025, 25, 14.

- Wikimedia Commons. File: Machine Learning Pipeline in Production.png. Published 4 May 2024. Available online: https://commons.wikimedia.org/wiki/File:Machine_Learning_Pipeline_in_Production.png (accessed on 15 May 2025).

- Green, E.; Gilchrist, D.; Sen, S.; Di Francesco, V.; Kaufman, D.; Morris, S. Bridge to Artificial Intelligence (Bridge2AI). Genome.gov. Published 11 March 2025. Available online: https://www.genome.gov/Funded-Programs-Projects/Bridge-to-Artificial-Intelligence (accessed on 14 April 2025).

- Shapey, I.M.; Sultan, M. Machine learning for prediction of postoperative complications after hepato-biliary and pancreatic surgery. Artif. Intell. Surg. 2023, 3, 1–13.

- Feng, X.; Gu, J.; Zhou, Y. Primary total hip arthroplasty failure: Aseptic loosening remains the most common cause of revision. Am. J. Transl. Res. 2022, 14, 7080–7089.

- Rasouli, J.J.; Shao, J.; Neifert, S.; Gibbs, W.N.; Habboub, G.; Steinmetz, M.P.; Benzel, E.; Mroz, T.E. Artificial Intelligence and Robotics in Spine Surgery. Glob. Spine J. 2021, 11, 556–564.

- Latest MRI Technology Enables Faster, More Accurate Results. Englewood Health. Available online: https://www.englewoodhealth.org/news-and-stories/latest-mri-technology-enables-faster-more-accurate-results (accessed on 11 April 2025).

- Barr, C.; Bauer, J.S.; Malfair, D.; Ma, B.; Henning, T.D.; Steinbach, L.; Link, T.M. MR imaging of the ankle at 3 Tesla and 1.5 Tesla: Protocol optimization and application to cartilage, ligament and tendon pathology in cadaver specimens. Eur. Radiol. 2006, 17, 1518–1528.

- Amrami, K.; Felmlee, J. 3-Tesla imaging of the wrist and hand: Techniques and applications. Semin. Musculoskelet. Radiol. 2008, 12, 223–237.

- Bhatnagar, A.; Kekatpure, A.L.; Velagala, V.R.; Kekatpure, A. A Review on the Use of Artificial Intelligence in Fracture Detection. Cureus 2024, 16, e58364.

- Baffour, F.I.; Huber, N.R.; Ferrero, A.; Rajendran, K.; Glazebrook, K.N.; Larson, N.B.; Kumar, S.; Cook, J.M.; Leng, S.; Shanblatt, E.R.; et al. Photon-counting Detector CT with Deep Learning Noise Reduction to Detect Multiple Myeloma. Radiology 2023, 306, 229–236.

- Zhao, K.; Ma, S.; Sun, Z.; Liu, X.; Zhu, Y.; Xu, Y.; Wang, X. Effect of AI-assisted software on inter- and intra-observer variability for the X-ray bone age assessment of preschool children. BMC Pediatr. 2022, 22, 644.

- Zeiss. ZEISS Announces OCT Technology Enhancements to Better Support Growing Era of Data-Driven Patient Care. Ophthalmologyweb.com. Published 4 June 2024. Available online: https://www.ophthalmologyweb.com/Specialty/Retina/1315-News/613374-ZEISS-Announces-OCT-Technology-Enhancements-to-Better-Support-Growing-Era-of-Data-Driven-Patient-Care/ (accessed on 14 April 2025).

- Hutton, D. Zeiss Announces OCT Technology Enhancements to Better Support Growing Era of Data-Driven Patient Care. Modern Retina. Published 5 June 2024. Available online: https://www.modernretina.com/view/zeiss-announces-oct-technology-enhancements-to-better-support-growing-era-of-data-driven-patient-care (accessed on 10 April 2025).

- Mjöberg, B. Hip prosthetic loosening: A very personal review. World J. Orthop. 2021, 12, 629–639.

- Lum, F.; Lee, A.Y. WG-09 Ophthalmology. DICOM. Published 23 October 2023. Available online: https://www.dicomstandard.org/activity/wgs/wg-09 (accessed on 14 April 2025).

- Bafiq, R.; Mathew, R.; Pearce, E.; Abdel-Hey, A.; Richardson, M.; Bailey, T.; Sivaprasad, S. Age, Sex, and Ethnic Variations in Inner and Outer Retinal and Choroidal Thickness on Spectral-Domain Optical Coherence Tomography. Am. J. Ophthalmol. 2015, 160, 1034–1043.e1.

- Familiari, F.; Saithna, A.; Martinez-Cano, J.P.; Chahla, J.; Del Castillo, J.M.; DePhillipo, N.N.; Moatshe, G.; Monaco, E.; Lucio, J.P.; D’Hooghe, P.; et al. Exploring artificial intelligence in orthopaedics: A collaborative survey from the ISAKOS Young Professional Task Force. J. Exp. Orthop. 2025, 12, e70181.

- O’Sullivan, C. Grad-CAM for Explaining Computer Vision Models. A Data Odyssey. Published 31 January 2025. Available online: https://adataodyssey.com/grad-cam/ (accessed on 14 April 2025).

- Papastratis, I. Explainable AI (XAI): A Survey of Recents Methods, Applications and Frameworks. AI Summer. Published 4 March 2021. Available online: https://theaisummer.com/xai/ (accessed on 14 April 2025).

- Nakayama, L.F.; Choi, J.; Cui, H.; Gilkes, E.G.; Wu, C.; Yang, X.; Pan, W.; Celi, L.A. Pixel Snow and Differential Privacy in Retinal fundus photos de-identification. Investig. Ophthalmol. Vis. Sci. 2023, 64, 2399.

- Arasteh, S.T.; Ziller, A.; Kuhl, C.; Makowski, M.; Nebelung, S.; Braren, R.; Rueckert, D.; Truhn, D.; Kaissis, G. Preserving fairness and diagnostic accuracy in private large-scale AI models for medical imaging. Commun. Med. 2024, 4, 46.

- Habli, I.; Lawton, T.; Porter, Z. Artificial intelligence in health care: Accountability and safety. Bull. World Health Organ. 2020, 98, 251–256.

- Digital Health Insights. The Rise of Healthcare AI Governance: Building Ethical and Sustainable Data Strategies. Digital Health Insights. Published 14 April 2025. Available online: https://dhinsights.org/news/the-rise-of-healthcare-ai-governance-building-ethical-and-sustainable-data-strategies (accessed on 12 May 2025).

- Cross, J.L.; Choma, M.A.; Onofrey, J.A. Bias in medical AI: Implications for clinical decision-making. PLoS Digit. Health 2024, 3, e0000651.

- Palaniappan, K.; Lin, E.Y.T.; Vogel, S. Global Regulatory Frameworks for the Use of Artificial Intelligence (AI) in the Healthcare Services Sector. Healthcare 2024, 12, 562.

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health. www.who.int. Published 28 June 2021. Available online: https://www.who.int/publications/i/item/9789240029200 (accessed on 12 May 2025).

- Bouderhem, R. Shaping the future of AI in healthcare through ethics and governance. Humanit. Soc. Sci. Commun. 2024, 11, 416.

- MedTech Europe. Trustworthy Artificial Intelligence (AI) in Healthcare: MedTech Europe’s Response to the Pilot on the Trustworthy AI Assessment List 2.0. 2019. Available online: https://www.medtecheurope.org/wp-content/uploads/2019/11/MTE_Nov19_Trustworthy-Artificial-Intelligence-in-healthcare-1.pdf (accessed on 12 May 2025).

- Heggli, I.; Teixeira, G.Q.; Iatridis, J.C.; Neidlinger-Wilke, C.; Dudli, S. The role of the complement system in disc degeneration and Modic changes. JOR Spine 2024, 7, e1312.

- Dong, J.; Li, Q.; Wang, X.; Fan, Y. A Review of the Methods of Non-Invasive Assessment of Intracranial Pressure through Ocular Measurement. Bioengineering 2022, 9, 304.

- Kaur, S.M.; Bhatia, A.S.; Isaiah, M.; Gowher, H.; Kais, S. Multi-Omic and Quantum Machine Learning Integration for Lung Subtypes Classification. arXiv 2024, arXiv:2410.02085.

- Athreya, A.P.; Lazaridis, K.N. Discovery and Opportunities With Integrative Analytics Using Multiple-Omics Data. Hepatology 2021, 74, 1081–1087.