Preclinical Application of Augmented Reality in Pediatric Craniofacial Surgery: An Accuracy Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Development Phase

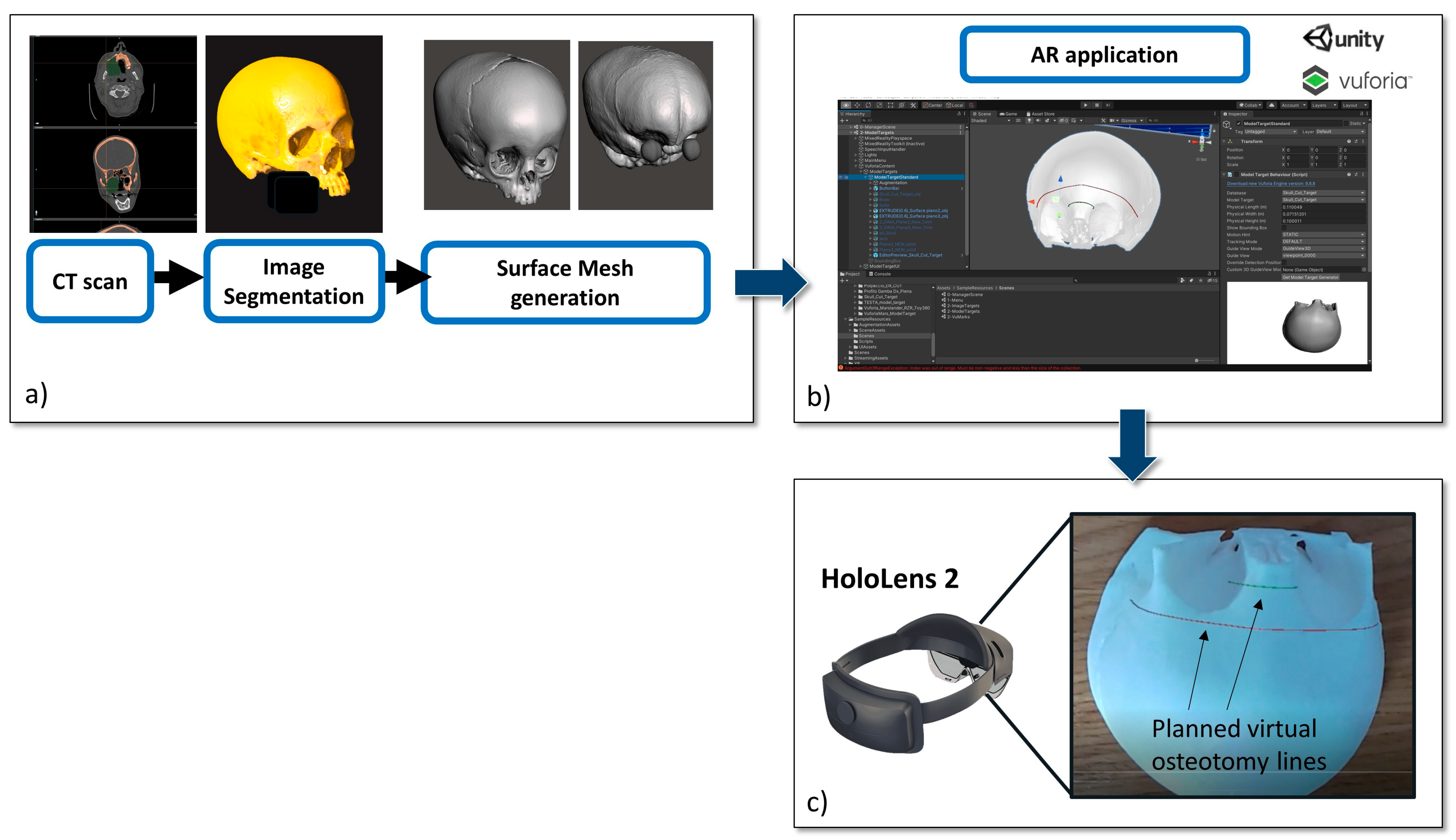

2.1.1. Virtual Content Preparation

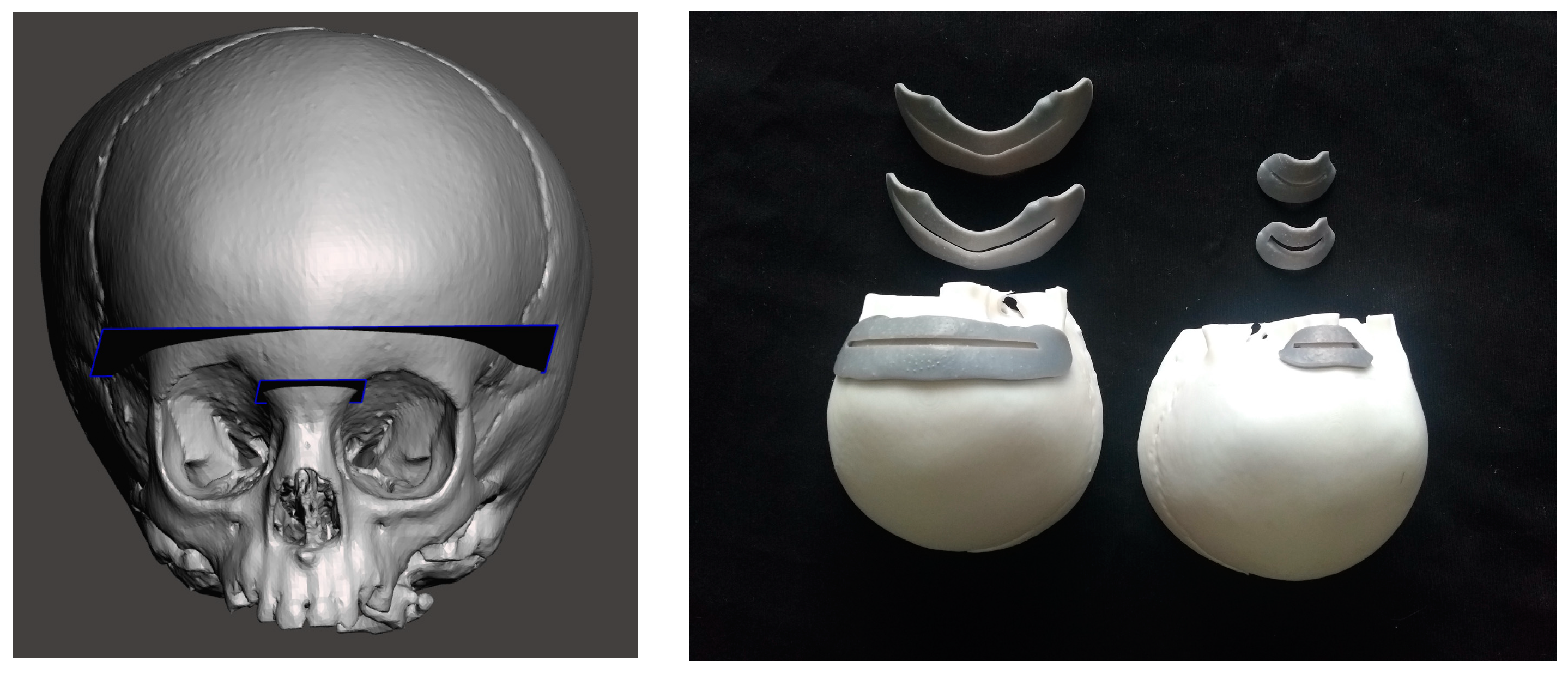

2.1.2. 3D Printing of Skull Phantom and CAD/CAM Templates for Testing Accuracy

2.1.3. The AR Application

2.2. Experimental Phase

2.3. Statistics

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


All values are in mm.

| PT | No. | Nose CG 3 mm | Nose CG 2 mm | Nose CG 1 mm | Frontal CG 3 mm | Frontal CG 2 mm | Frontal CG 1 mm |
|---|---|---|---|---|---|---|---|
| PT 1 | 1 | 27 | 27 | 24 | 75 | 70 | 40 |
| PT 1 | 2 | 27 | 27 | 27 | 75 | 75 | 55 |
| PT 1 | 3 | 27 | 25 | 23 | 75 | 75 | 60 |
| PT 1 | 4 | 27 | 24 | 22 | 75 | 72 | 65 |
| PT 1 | 5 | 27 | 27 | 27 | 70 | 70 | 60 |
| PT 1 | 6 | 27 | 26 | 25 | 75 | 75 | 75 |
| PT 2 | 1 | 27 | 26 | 23 | 75 | 55 | 35 |
| PT 2 | 2 | 27 | 27 | 26 | 75 | 75 | 75 |
| PT 2 | 3 | 27 | 27 | 27 | 75 | 73 | 55 |
| PT 2 | 4 | 27 | 27 | 27 | 75 | 75 | 74 |
| PT 2 | 5 | 27 | 27 | 25 | 75 | 75 | 75 |
| PT 2 | 6 | 27 | 27 | 27 | 75 | 75 | 35 |
| PT 3 | 1 | 27 | 25 | 20 | 75 | 75 | 70 |
| PT 3 | 2 | 27 | 27 | 27 | 75 | 71 | 75 |
| PT 3 | 3 | 27 | 27 | 27 | 75 | 75 | 70 |
| PT 3 | 4 | 27 | 27 | 27 | 75 | 75 | 75 |
| PT 3 | 5 | 27 | 27 | 27 | 75 | 70 | 60 |
| PT 3 | 6 | 27 | 27 | 27 | 75 | 70 | 35 |
| PT 4 | 1 | 27 | 27 | 27 | 75 | 75 | 65 |
| PT 4 | 2 | 27 | 15 | 10 | 75 | 75 | 75 |
| PT 4 | 3 | 27 | 27 | 26 | 75 | 75 | 75 |
| PT 4 | 4 | 27 | 27 | 22 | 75 | 70 | 55 |
| PT 4 | 5 | 27 | 27 | 27 | 73 | 71 | 65 |
| PT 4 | 6 | 27 | 27 | 27 | 70 | 60 | 60 |
| PT 5 | 1 | 27 | 27 | 27 | 70 | 50 | 35 |
| PT 5 | 2 | 27 | 27 | 27 | 70 | 65 | 55 |
| PT 5 | 3 | 20 | 12 | 11 | 65 | 45 | 35 |
| PT 5 | 4 | 27 | 25 | 25 | 75 | 59 | 54 |
| PT 5 | 5 | 27 | 27 | 27 | 75 | 75 | 45 |
| PT 5 | 6 | 27 | 27 | 27 | 75 | 57 | 45 |
| PT 6 | 1 | 27 | 27 | 27 | 75 | 75 | 75 |
| PT 6 | 2 | 27 | 27 | 27 | 65 | 60 | 55 |
| PT 6 | 3 | 27 | 27 | 27 | 75 | 75 | 75 |
| PT 6 | 4 | 27 | 27 | 27 | 75 | 75 | 75 |
| PT 6 | 5 | 27 | 27 | 27 | 75 | 75 | 75 |
| PT 6 | 6 | 27 | 27 | 27 | 75 | 75 | 75 |

| | Frontal CG 3 mm | Frontal CG 2 mm | Frontal CG 1 mm | Nose CG 3 mm | Nose CG 2 mm | Nose CG 1 mm |
|---|---|---|---|---|---|---|
| Kruskal–Wallis H | 6.992 | 9.579 | 13.083 | 5.000 | 4.883 | 6.521 |
| df | 5 | 5 | 5 | 5 | 5 | 5 |
| Asymp. Sig. | 0.221 | 0.088 | 0.023 | 0.416 | 0.430 | 0.259 |
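The Kruskal–Wallis values above can be checked with a short, dependency-free sketch. It rests on an assumption not stated in the surviving text: each PT block of the accuracy table is treated as one of the six groups, which gives df = 5. It applies the standard tie correction and an asymptotic chi-square p-value. Under that assumption it should reproduce H = 13.083 (p = 0.023) for frontal CG 1 mm and H = 5.000 (p = 0.416) for nose CG 3 mm. The helper names (`average_ranks`, `tie_term`, `chi2_sf`, `kruskal_wallis`) are illustrative; in practice `scipy.stats.kruskal` runs the same tie-corrected test.

```python
import math
from itertools import chain


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def tie_term(values):
    """Sum of t**3 - t over each group of t tied values."""
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    return sum(t ** 3 - t for t in counts.values())


def chi2_sf(x, df):
    """Upper-tail chi-square probability for a positive integer df (closed form)."""
    if df % 2 == 0:
        term = total = 1.0
        for j in range(1, df // 2):
            term *= (x / 2) / j
            total += term
        return math.exp(-x / 2) * total
    z = math.sqrt(x)
    two_phi = 2 * math.exp(-x / 2) / math.sqrt(2 * math.pi)  # 2 * normal pdf at z
    total = math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))
    term = z
    for j in range(1, (df - 1) // 2 + 1):
        total += two_phi * term
        term *= x / (2 * j + 1)
    return total


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H, degrees of freedom and asymptotic p-value."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    sum_sq, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        sum_sq += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * sum_sq - 3 * (n + 1)
    correction = 1 - tie_term(pooled) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are tied; H is undefined")
    h /= correction
    df = len(groups) - 1
    return h, df, chi2_sf(h, df)


# Assumption: one group per PT block, listing rows 1-6 of that block.
FRONTAL_1MM = [
    [40, 55, 60, 65, 60, 75],  # PT 1
    [35, 75, 55, 74, 75, 35],  # PT 2
    [70, 75, 70, 75, 60, 35],  # PT 3
    [65, 75, 75, 55, 65, 60],  # PT 4
    [35, 55, 35, 54, 45, 45],  # PT 5
    [75, 55, 75, 75, 75, 75],  # PT 6
]
NOSE_3MM = [[27] * 6, [27] * 6, [27] * 6, [27] * 6, [27, 27, 20, 27, 27, 27], [27] * 6]

if __name__ == "__main__":
    for name, data in [("frontal CG 1 mm", FRONTAL_1MM), ("nose CG 3 mm", NOSE_3MM)]:
        h, df, p = kruskal_wallis(data)
        print(f"{name}: H = {h:.3f}, df = {df}, p = {p:.3f}")
```

The chi-square tail uses the closed-form series for integer degrees of freedom. This avoids a SciPy dependency but covers only whole-number df.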

| | op2–op1 | op3–op1 | op4–op1 | op5–op1 | op6–op1 | op3–op2 | op4–op2 | op5–op2 | op6–op2 | op4–op3 | op5–op3 | op6–op3 | op5–op4 | op6–op4 | op6–op5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Z | −0.315 | −0.677 | −1.051 | −2.023 | −1.841 | 0.921 | −1.214 | −1.786 | −1.361 | −0.412 | −1.997 | −0.984 | −2.207 | −0.816 | −2.041 |
| Asymp. Sig. (2-tailed) | 0.752 | 0.498 | 0.293 | 0.043 | 0.066 | 0.357 | 0.225 | 0.074 | 0.174 | 0.680 | 0.046 | 0.343 | 0.027 | 0.414 | 0.041 |
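The Z and Asymp. Sig. (2-tailed) labels resemble SPSS Wilcoxon signed-rank output. The sketch below rests on a hypothesis not confirmed by the surviving text: each comparison is a signed-rank test on the frontal CG 1 mm values, with the two PT blocks paired by row number. Under that assumption it should give Z = −0.315 for op2–op1, −2.207 for op5–op4 and −2.041 for op6–op5. Zero differences are dropped, tied absolute differences share average ranks, and the variance carries the usual tie correction. Z is computed from the smaller of the two rank sums, so it is never positive. The function names are illustrative; `scipy.stats.wilcoxon` is the usual library route.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def tie_term(values):
    """Sum of t**3 - t over each group of t tied values."""
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    return sum(t ** 3 - t for t in counts.values())


def wilcoxon_signed_rank(after, before):
    """Normal-approximation Wilcoxon signed-rank test for paired samples.

    Returns (Z, two-tailed p). Z uses the smaller rank sum, so Z <= 0.
    """
    diffs = [a - b for a, b in zip(after, before) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_d = [abs(d) for d in diffs]
    ranks = average_ranks(abs_d)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term(abs_d) / 48  # tie-corrected
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    return z, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical pairing: rows 1-6 of each PT block are matched across blocks.
FRONTAL_1MM = {
    1: [40, 55, 60, 65, 60, 75],
    2: [35, 75, 55, 74, 75, 35],
    3: [70, 75, 70, 75, 60, 35],
    4: [65, 75, 75, 55, 65, 60],
    5: [35, 55, 35, 54, 45, 45],
    6: [75, 55, 75, 75, 75, 75],
}

if __name__ == "__main__":
    for later, earlier in [(2, 1), (5, 4), (6, 5)]:
        z, p = wilcoxon_signed_rank(FRONTAL_1MM[later], FRONTAL_1MM[earlier])
        print(f"op{later}-op{earlier}: Z = {z:.3f}, p = {p:.3f}")
```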

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ruggiero, F.; Cercenelli, L.; Emiliani, N.; Badiali, G.; Bevini, M.; Zucchelli, M.; Marcelli, E.; Tarsitano, A. Preclinical Application of Augmented Reality in Pediatric Craniofacial Surgery: An Accuracy Study. J. Clin. Med. 2023, 12, 2693. https://doi.org/10.3390/jcm12072693
