Artificial Intelligence for Personalised Ophthalmology Residency Training

Abstract

1. Introduction

2. Classifying Color Fundus Photographs with Deep Learning

2.1. Building the Resident Dataset

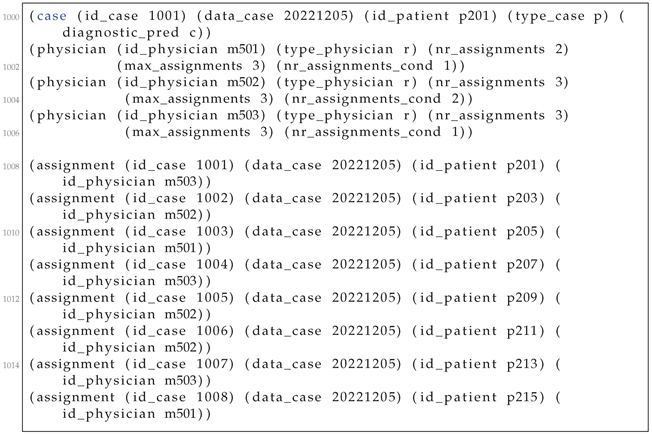

2.2. Applying Contrastive Learning on the Resident Dataset

2.3. Assessing Model Performance

2.4. Automatically Assessing Difficult Cases

3. Case Allocation Algorithm

3.1. Problem Statement

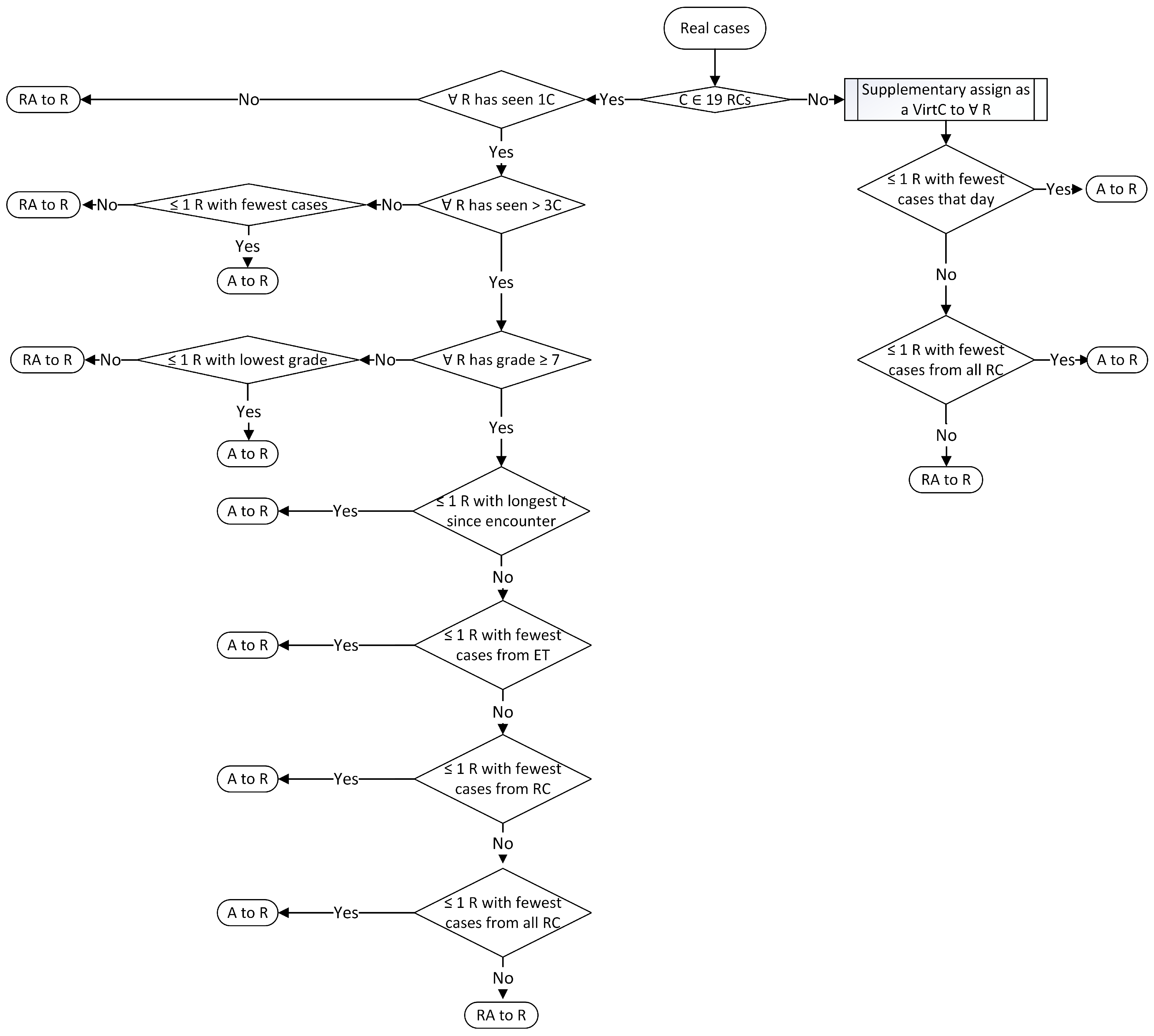

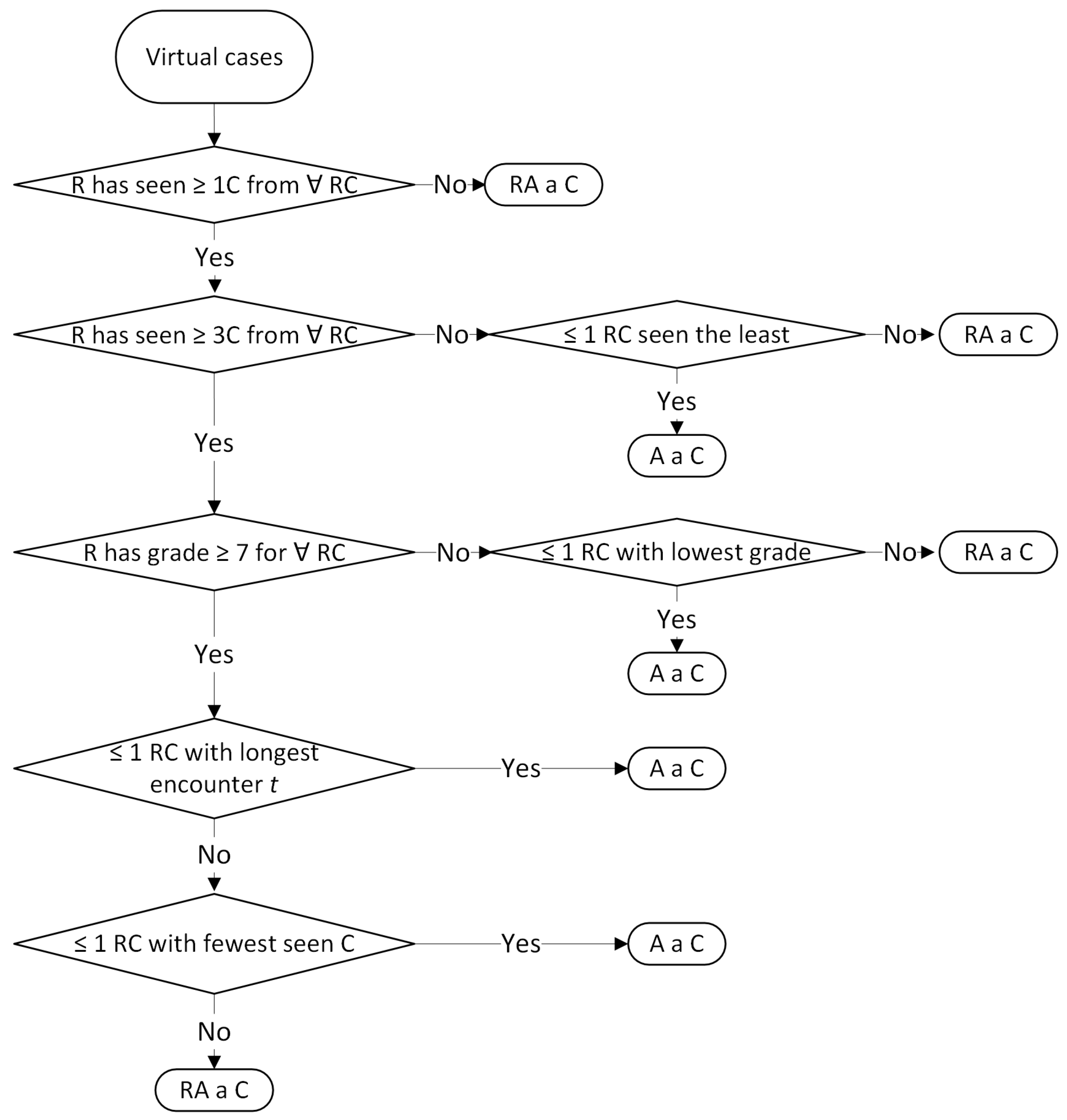

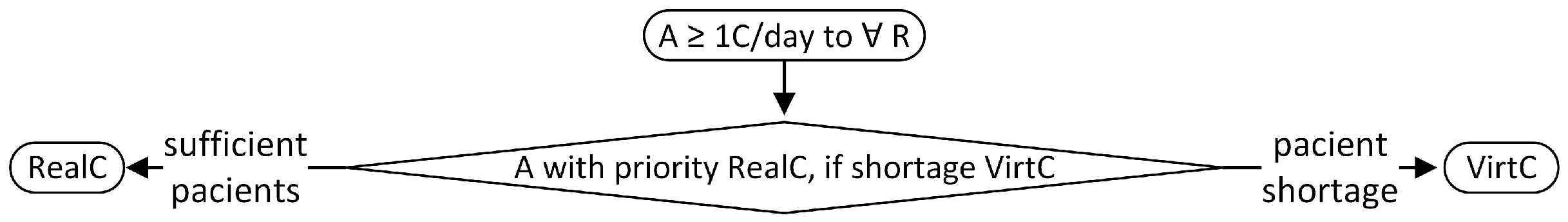

3.2. The Assignment Flow

- Patients arriving at the retina clinic are examined by a technician, who takes a colour fundus photograph (CFP).

- The resulting image is analysed by the deep learning algorithm, which generates a presumptive diagnosis and a difficulty score.

- The diagnosis is validated by the expert physician.

- The case is sent to the allocation algorithm, which is implemented as an expert system and assigns the case to a resident.

- If too few patients present to the retina clinic, the allocation algorithm selects a CFP from the test set of the pedagogical Resident dataset, so that every resident is assigned at least one patient or case per day.

- The resident examines the patient and, based on the clinical signs, formulates the diagnosis, differential diagnosis, and therapeutic plan.

- The resident’s performance is evaluated and scored by the expert physician.

- Residents’ performances are recorded in their teaching file for the specific retinal condition.

- The pedagogical Resident dataset is extended with the case.
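
The daily part of this flow can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the names `Case`, `fewest_today`, and `daily_assignment` are hypothetical, and the allocator used here is a simple placeholder rather than the rule-based allocation described in Section 3.3.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Case:
    case_id: str
    diagnosis: str         # presumptive diagnosis produced by the classifier
    difficulty: float      # difficulty score produced by the classifier
    validated: bool        # True once the expert physician confirmed the diagnosis
    virtual: bool = False  # True for CFPs drawn from the Resident dataset test set


Assignments = Dict[str, List[Case]]


def fewest_today(case: Case, assignments: Assignments) -> str:
    """Placeholder allocator (assumption): resident with the fewest cases so far today."""
    return min(assignments, key=lambda name: len(assignments[name]))


def daily_assignment(
    clinic_cases: List[Case],
    virtual_pool: List[Case],
    residents: List[str],
    allocate: Callable[[Case, Assignments], str] = fewest_today,
) -> Assignments:
    """Assign validated clinic cases first, then top up with virtual cases."""
    assignments: Assignments = {name: [] for name in residents}
    for case in clinic_cases:
        if case.validated:  # only expert-validated cases are allocated
            assignments[allocate(case, assignments)].append(case)
    pool = list(virtual_pool)
    for name in residents:
        # Patient shortage: residents without a case receive one virtual case.
        if not assignments[name] and pool:
            assignments[name].append(pool.pop(0))
    return assignments
```

Clinic patients are handled before virtual cases, which mirrors the priority given to real patients in the flow above.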

3.3. Allocation Rules

3.4. Running Scenario

4. Discussion and Related Work

4.1. Case Allocation

4.2. Resident’s Evaluation and Teaching File

4.3. AI for Education

- (i) Can AI (e.g., machine learning or Bayesian networks) identify the most similar cases?

- (ii) Can data augmentation generate more cases to train the resident?

- (iii) Can AI identify clinical features?

- (iv) Can AI provide a differential diagnosis (e.g., ranking diagnoses by their probability)?

- (v) Can AI assess how incomplete input (e.g., missing features) may lead to erroneous interpretations?

4.4. Towards Compliance with the Artificial Intelligence Act

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

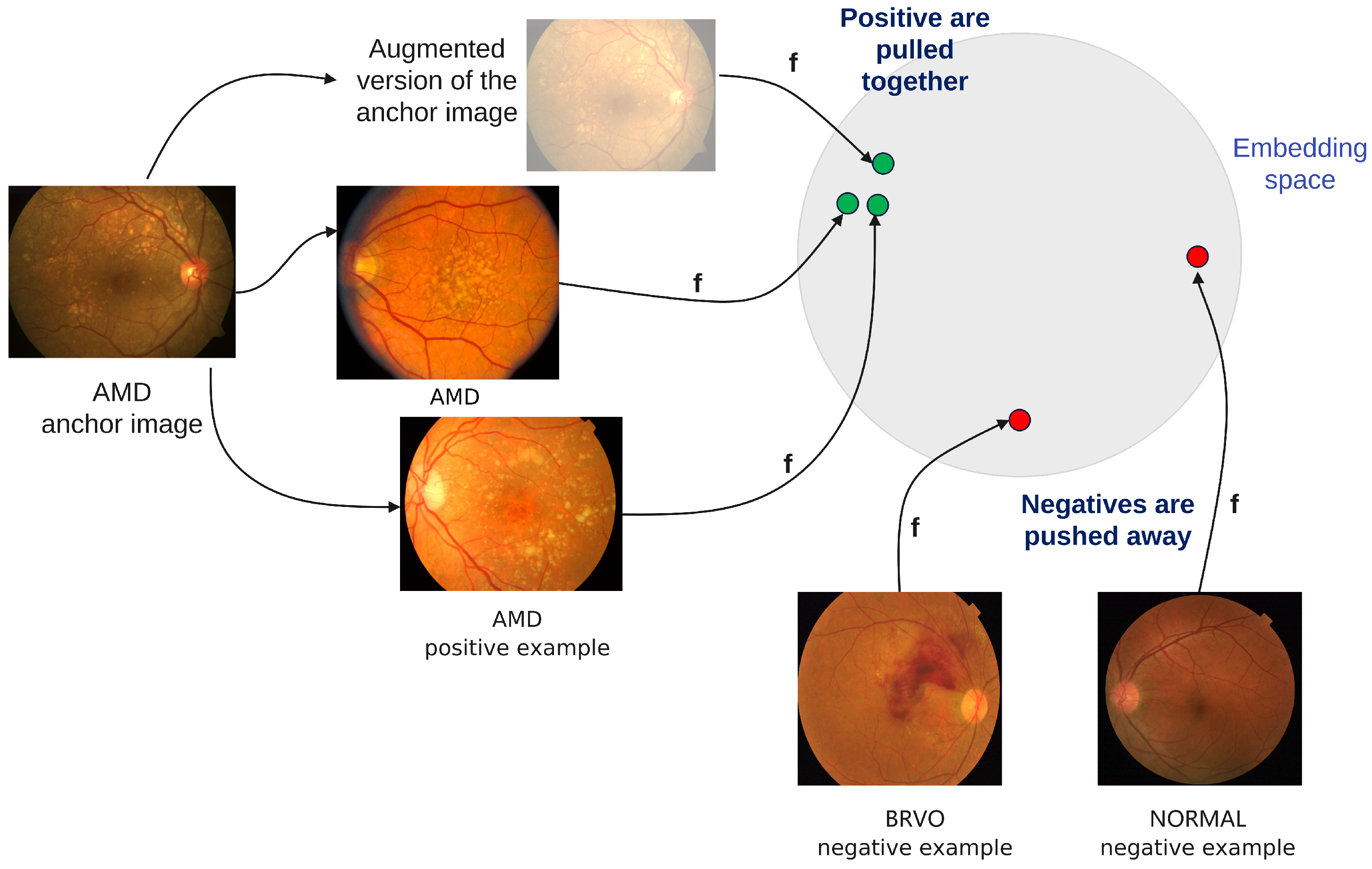

Appendix A. Case Allocation Algorithm

Appendix A.1. Case Allocation Rules

Appendix A.2. Case Allocation Algorithm

Algorithm A1: Assigning at least one case/day to each resident

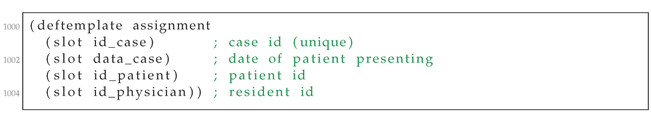

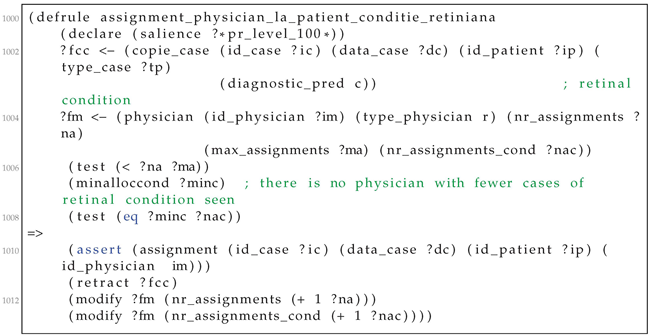

Appendix A.3. Case Allocation Listings

Listing A1. Templates for storing data on available cases and residents.

Listing A2. Storing data about the current assignment.

Listing A3. Example of a CLIPS rule for assigning cases to residents.

Listing A4. Running example.

Appendix B. Histogram of Keywords from ODIR Dataset

| C0 | Normal fundus: 2271, lens dust: 46, |

| C1 | Dry age-related macular degeneration: 58, wet age-related macular degeneration: 30, glaucoma: 5, mild nonproliferative retinopathy: 2, myopia retinopathy: 2, diffuse chorioretinal atrophy: 1, |

| C2 | Mild nonproliferative retinopathy: 444, glaucoma: 2, macular pigmentation disorder: 1, macular epiretinal membrane: 17, drusen: 6, vitreous degeneration: 6, myelinated nerve fibers: 4, epiretinal membrane: 8, dry age-related macular degeneration: 2, hypertensive retinopathy: 11, cataract: 2, lens dust: 2, laser spot: 1, retinal pigmentation: 1, |

| C3 | Moderate non proliferative retinopathy: 758, laser spot: 27, lens dust: 7, macular epiretinal membrane: 16, cataract: 9, epiretinal membrane: 25, hypertensive retinopathy: 29, drusen: 7, spotted membranous change: 2, glaucoma: 5, myelinated nerve fibers: 11, abnormal pigment: 1, chorioretinal atrophy: 1, epiretinal membrane over the macula: 2, retinitis pigmentosa: 1, pathological myopia: 4, tessellated fundus: 1, white vessel: 1, branch retinal vein occlusion: 2, retina fold: 1, old branch retinal vein occlusion: 1, vitreous degeneration: 1, refractive media opacity: 1, optic disc edema: 1, atrophic change: 2, retinal pigment epithelial hypertrophy: 1, age-related macular degeneration: 1, central retinal artery occlusion: 1, post laser photocoagulation: 1, |

| C4 | Severe nonproliferative retinopathy: 129, hypertensive retinopathy: 9, white vessel: 2, macular epiretinal membrane: 1, |

| C5 | Proliferative diabetic retinopathy: 16, hypertensive retinopathy: 4, severe proliferative diabetic retinopathy: 9, laser spot: 2, epiretinal membrane: 1, |

| C6 | Glaucoma: 209, mild nonproliferative retinopathy: 2, macular pigmentation disorder: 1, hypertensive retinopathy: 4, central retinal vein occlusion: 1, wet age-related macular degeneration: 2, dry age-related macular degeneration: 8, myopia retinopathy: 7, myelinated nerve fibers: 1, moderate non proliferative retinopathy: 5, lens dust: 2, optic nerve atrophy: 2, old central retinal vein occlusion: 1, diffuse retinal atrophy: 1, macular epiretinal membrane: 7, intraretinal hemorrhage: 2, vitreous degeneration: 1, laser spot: 1, |

| C7 | Hypertensive retinopathy: 157, moderate non proliferative retinopathy: 29, glaucoma: 4, proliferative diabetic retinopathy: 4, severe nonproliferative retinopathy: 9, branch retinal vein occlusion: 2, vitreous opacity: 1, age-related macular degeneration: 2, mild nonproliferative retinopathy: 11, cataract: 3, macular epiretinal membrane: 1, suspected diabetic retinopathy: 1, optic nerve atrophy: 1, |

| C8 | Pathological myopia: 195, suspected moderate non proliferative retinopathy: 1, moderate non proliferative retinopathy: 4, refractive media opacity: 4, lens dust: 3, suspected macular epimacular membrane: 1, |

| C9 | Moderate non proliferative retinopathy: 1, tessellated fundus: 1, |

| C10 | Cataract: 1, vitreous degeneration: 55, mild nonproliferative retinopathy: 6, glaucoma: 1, moderate non proliferative retinopathy: 1, lens dust: 1, |

| C11 | Branch retinal vein occlusion: 21, lens dust: 1, hypertensive retinopathy: 2, vitreous opacity: 1, moderate non proliferative retinopathy: 2, drusen: 1, |

| C13 | |

| C14 | Dry age-related macular degeneration: 104, drusen: 140, lens dust: 9, macular epiretinal membrane: 1, glaucoma: 5, moderate non proliferative retinopathy: 8, mild nonproliferative retinopathy: 8, hypertensive retinopathy: 2, age-related macular degeneration: 3, epiretinal membrane: 3, myelinated nerve fibers: 2, branch retinal vein occlusion: 1, wet age-related macular degeneration: 1, atrophic change: 1, cataract: 1, |

| C15 | Macular epiretinal membrane: 147, moderate non proliferative retinopathy: 43, laser spot: 6, epiretinal membrane: 135, drusen: 4, mild nonproliferative retinopathy: 25, epiretinal membrane over the macula: 13, lens dust: 14, atrophic change: 1, glaucoma: 7, hypertensive retinopathy: 1, chorioretinal atrophy: 1, severe nonproliferative retinopathy: 1, white vessel: 1, myelinated nerve fibers: 2, post laser photocoagulation: 1, severe proliferative diabetic retinopathy: 1, vessel tortuosity: 1, |

| C18 | Moderate non proliferative retinopathy: 1, optic disc edema: 1, |

| C19 | Myelinated nerve fibers: 77, lens dust: 2, glaucoma: 1, moderate non proliferative retinopathy: 11, mild nonproliferative retinopathy: 4, old branch retinal vein occlusion: 1, drusen: 2, laser spot: 1, macular epiretinal membrane: 1, epiretinal membrane: 1, |

| C22 | |

| C25 | Cataract: 239, moderate non proliferative retinopathy: 10, refractive media opacity: 49, lens dust: 12, vitreous degeneration: 1, laser spot: 2, mild nonproliferative retinopathy: 2, pathological myopia: 4, hypertensive retinopathy: 3, suspected cataract: 1, drusen: 1, |

| C27 | |

| C29 | Moderate non proliferative retinopathy: 27, laser spot: 31, lens dust: 2, macular epiretinal membrane: 4, epiretinal membrane: 3, cataract: 2, myelinated nerve fibers: 1, severe proliferative diabetic retinopathy: 2, glaucoma: 1, mild nonproliferative retinopathy: 1, post laser photocoagulation: 2, |

| C32 | Glaucoma: 1, central retinal vein occlusion: 1, |

| Age-related macular degeneration (ARMD) | Diabetic retinopathy (DR) | Media haze (MH) |

| Drusens (DN) | Branch retinal vein occlusion (BRVO) | Asteroid hyalosis (AH) |

| Myopia (MYA) | Central retinal vein occlusion (CRVO) | |

| Tessellation (TSLN) | Central retinal artery occlusion (CRAO) | Retinitis (RS) |

| Epiretinal membrane (ERM) | Branch retinal artery occlusion (BRAO) | Chorioretinitis (CRS) |

| Macular hole (MHL) | Hemorrhagic retinopathy (HR) | Vasculitis (VS) |

| Central serous retinopathy (CSR) | Macroaneurysm (MCA) | |

| Cystoid macular edema (CME) | Tortuous vessels (TV) | Vitreous hemorrhage (VH) |

| Parafoveal telangiectasia (PT) | Collateral (CL) | Preretinal hemorrhage (PRH) |

| Macular scar (MS) | Plaque (PLQ) | |

| Post-traumatic choroidal rupture (PTCR) | Optic disc cupping (ODC) | Retinal traction (RT) |

| Choroidal folds (CF) | Optic disc pallor (ODP) | Myelinated nerve fibers (MNF) |

| Optic disc edema (ODE) | Laser scars (LS) | |

| Retinal pigment epithelium changes (RPEC) | Anterior ischemic optic neuropathy (AION) | Cotton-wool spots (CWS) |

| Retinitis pigmentosa (RP) | Optic disc pit maculopathy (ODPM) | Coloboma (CB) |

| Hemorrhagic pigment epithelial detachment (HPED) | Optociliary shunt (ST) | Exudation (EDN) |

| Tilted disc (TD) |

| Dataset | CFPs | Device | Conditions |

|---|---|---|---|

| ODIR | 10,000 | Canon, Zeiss, Kowa | 8 |

| RFMID | 3200 | TOPCON 3D OCT-2000, TOPCON TRC-NW300 and Kowa VX-10 | 45 |

| JSIEC | 1000 | ZEISS FF450 Plus IR Fundus, Topcon TRC-50DX Mydriatic Retinal Camera | 39 |

| Resident | 9693 | | 19 |

| New Class | ODIR Keywords | RFMID Class | JSIEC Class |

|---|---|---|---|

| C0 | Normal fundus | | 0.0.Normal |

| C1 | Age-related macular degeneration, dry age-related macular degeneration, wet age-related macular degeneration | ARMD | 6.Maculopathy |

| C2 | Mild nonproliferative retinopathy | | 0.3.DR1 |

| C3 | Moderate non proliferative retinopathy | | 1.0.DR2 |

| C4 | Severe nonproliferative retinopathy | | |

| C5 | Proliferative diabetic retinopathy, severe proliferative diabetic retinopathy | | 1.1.DR3 |

| C6 | Glaucoma | | |

| C7 | Hypertensive retinopathy | | |

| C8 | Pathological myopia | MYA | 9.Pathological myopia |

| C9 | Tessellated fundus | TSLN | 0.1.Tessellated fundus |

| C10 | Vitreous degeneration | AH | 18.Vitreous particles |

| C11 | Branch retinal vein occlusion | BRVO | 2.0.BRVO |

| C12 | | | 5.1.VKH disease |

| C13 | | ODC | 0.2.Large optic cup |

| C14 | Drusen | DN | |

| C15 | Epiretinal membrane, epiretinal membrane over the macula, macular epiretinal membrane | ERM | 7.ERM |

| C16 | | TD | 13.Dragged Disc |

| C17 | | | 14.Congenital disc abnormality |

| C18 | Optic disc edema | ODE | 12.Disc swelling and elevation |

| C19 | Myelinated nerve fibers | MNF | 17.Myelinated nerve fiber |

| C20 | | | 15.1.Bietti crystalline dystrophy |

| C21 | | | 16.Peripheral retinal degeneration and break |

| C22 | Rhegmatogenous retinal detachment | | 4.Rhegmatogenous RD |

| C23 | Macular hole | MHL | 8.MH |

| C24 | Chorioretinal atrophy | CB | 24.Chorioretinal atrophy-coloboma |

| C25 | Cataract, refractive media opacity | MH | 29.0.Blur fundus without PDR |

| C26 | | | 29.1.Blur fundus with suspected PDR |

| C27 | Central serous chorioretinopathy | CSR | 5.0.CSCR |

| C28 | | | 19.Fundus neoplasm |

| C29 | Post laser photocoagulation, laser spot | LS | 27.Laser Spots |

| C30 | | | 28.Silicon oil in eye |

| C31 | Central retinal artery occlusion, branch retinal artery occlusion (BRAO) | CRAO, BRAO | 3.RAO |

| C32 | Central retinal vein occlusion | CRVO | 2.1.CRVO |

| C33 | Retinitis pigmentosa | RP | 15.0.Retinitis pigmentosa |

| C34 | | PRH | 25.Preretinal hemorrhage |

| C35 | | EDN | 20.Massive hard exudates |

| C36 | | | 21.Yellow-white spots-flecks |

| C37 | | CWS | 22.Cotton-wool spots |

| C38 | | TV | 23.Vessel tortuosity |

| # | Label | Train: ODIR | Train: RFMID | Train: JSIEC | Train: Total | Test: ODIR | Test: RFMID | Test: JSIEC | Test: Total |

|---|---|---|---|---|---|---|---|---|---|

| 1 | C0 | 2270 | 0 | 28 | 2298 | 601 | 0 | 10 | 611 |

| 2 | C1 | 88 | 138 | 0 | 226 | 23 | 31 | 0 | 54 |

| 3 | C2 | 441 | 0 | 0 | 441 | 95 | 0 | 0 | 95 |

| 4 | C3 | 756 | 0 | 38 | 794 | 180 | 0 | 11 | 191 |

| 5 | C4 | 129 | 0 | 0 | 129 | 28 | 0 | 0 | 28 |

| 6 | C5 | 25 | 0 | 29 | 54 | 4 | 0 | 10 | 14 |

| 7 | C6 | 209 | 0 | 0 | 209 | 48 | 0 | 0 | 48 |

| 8 | C7 | 157 | 0 | 0 | 157 | 30 | 0 | 0 | 30 |

| 9 | C8 | 195 | 126 | 40 | 361 | 48 | 35 | 14 | 97 |

| 10 | C9 | 1 | 204 | 9 | 214 | 0 | 47 | 4 | 51 |

| 11 | C10 | 53 | 18 | 11 | 82 | 14 | 1 | 3 | 18 |

| 12 | C11 | 21 | 89 | 29 | 139 | 4 | 27 | 15 | 46 |

| 13 | C13 | 0 | 304 | 36 | 340 | 0 | 69 | 14 | 83 |

| 14 | C14 | 248 | 174 | 0 | 422 | 51 | 53 | 0 | 104 |

| 15 | C15 | 294 | 19 | 22 | 335 | 74 | 5 | 4 | 83 |

| 16 | C18 | 1 | 76 | 12 | 89 | 1 | 20 | 1 | 22 |

| 17 | C19 | 77 | 3 | 10 | 90 | 14 | 0 | 1 | 15 |

| 18 | C22 | 0 | 0 | 44 | 44 | 0 | 0 | 13 | 13 |

| 19 | C25 | 288 | 382 | 84 | 754 | 57 | 104 | 27 | 188 |

| 20 | C27 | 0 | 42 | 9 | 51 | 0 | 19 | 5 | 24 |

| 21 | C29 | 33 | 17 | 16 | 66 | 8 | 4 | 4 | 16 |

| 22 | C32 | 1 | 34 | 15 | 50 | 0 | 10 | 7 | 17 |

| Label | Precision | Recall | F1-Score | Support |

|---|---|---|---|---|

| C0 | 0.75 | 0.79 | 0.77 | 611 |

| C1 | 0.67 | 0.74 | 0.70 | 54 |

| C6 | 0.41 | 0.29 | 0.34 | 48 |

| C7 | 0.38 | 0.10 | 0.16 | 30 |

| C8 | 0.87 | 0.85 | 0.86 | 97 |

| C9 | 0.51 | 0.55 | 0.53 | 51 |

| C10 | 0.86 | 0.67 | 0.75 | 18 |

| C11 | 0.90 | 0.78 | 0.84 | 46 |

| C13 | 0.69 | 0.86 | 0.76 | 83 |

| C14 | 0.60 | 0.50 | 0.54 | 104 |

| C15 | 0.63 | 0.40 | 0.49 | 83 |

| C18 | 0.75 | 0.68 | 0.71 | 22 |

| C19 | 0.75 | 0.60 | 0.67 | 15 |

| C22 | 1.00 | 0.92 | 0.96 | 13 |

| C25 | 0.84 | 0.84 | 0.84 | 188 |

| C27 | 0.86 | 0.79 | 0.83 | 24 |

| C29 | 0.43 | 0.75 | 0.55 | 16 |

| C32 | 1.00 | 1.00 | 1.00 | 17 |

| DR | 0.73 | 0.67 | 0.70 | 328 |
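
As a reading aid for the table above, the F1-score column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short sketch below recomputes it for a few rows. The helper name `f1_score` is introduced here for illustration only, and because the tabulated precision and recall are already rounded to two decimals, a recomputed value may in general differ from the published one in the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the table rows above.
rows = {"C6": (0.41, 0.29), "C7": (0.38, 0.10), "C8": (0.87, 0.85), "DR": (0.73, 0.67)}

for label, (p, r) in rows.items():
    print(label, round(f1_score(p, r), 2))
```

The low F1-score of C7 follows directly from its recall of 0.10: the harmonic mean is dominated by the smaller of the two values.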

| # | Educational Topic | Retinal Condition |

|---|---|---|

| | Normal | Normal (C0), tessellated fundus (C9) |

| | Macular conditions | Age-related macular degeneration (C1), pathological myopia (C8), drusen (C14), epiretinal membrane (C15), central serous chorioretinopathy (C27) |

| | Vascular conditions | Diabetic retinopathy (DR), hypertensive retinopathy (C7), branch retinal vein occlusion (C11), central retinal vein occlusion (C32) |

| | Optic nerve conditions | Glaucoma (C6), large optic cup (C13), optic disc edema (C18), myelinated nerve fibers (C19) |

| | Peripheral retina conditions | Rhegmatogenous retinal detachment (C22), laser spots (C29) |

| | Transparent media conditions | Vitreous degeneration (C10), refractive media opacity (C25) |

| Resident: | Date: | ||

|---|---|---|---|

| OSCE DR | |||

| Correct Diagnosis Pass (Calculate Score) | ☑ | Wrong Diagnosis Fail (0 Points) | ☐ |

| Clinical fundus signs | (each box = 1 point) | ||

| Microaneurysms | ☑ | Neovascularisation of the disc | ☑ |

| Dot-blot hemorrhages | ☑ | Neovascularisation elsewhere | ☐ |

| Hard exudates | ☑ | Preretinal hemorrhage | ☐ |

| Cotton-wool spots | ☑ | Vitreous hemorrhage | ☐ |

| Venous beading | ☑ | Tractional retinal detachment | ☐ |

| Intraretinal microvascular anomalies | ☑ | Laser spots | ☐ |

| Differential diagnosis of macular edema | (each box = 1 point) | ||

| Hypertensive retinopathy | ☑ | Macular edema secondary to epiretinal membrane | ☐ |

| Central retinal vein occlusion | ☐ | Ruptured microaneurysm | ☐ |

| Branch retinal vein occlusion | ☑ | Irvine-Gass syndrome | ☑ |

| Choroidal neovascular membrane | ☐ | Post uveitic macular edema | ☑ |

| Differential diagnosis of retinopathy | (each box = 1 point) | ||

| Central retinal vein occlusion | ☑ | Valsalva retinopathy | ☐ |

| Hemiretinal vein occlusion | ☑ | Sickle cell retinopathy | ☐ |

| Branch retinal vein occlusion | ☑ | Post-traumatic retinal bleed | ☑ |

| Hypertensive retinopathy | ☑ | Retinal macroaneurysm | ☐ |

| Ocular ischemic syndrome | ☑ | Retinopathy in thalassemia | ☐ |

| Terson syndrome | ☑ | ||

| Management of macular edema | (each box = 1 point) | ||

| Observation | ☐ | Intravitreal anti-VEGF | ☑ |

| Management of retinopathy | (each box = 1 point) | ||

| Observation | ☐ | Intravitreal anti-VEGF | ☑ |

| Panfundus laser photocoagulation | ☑ | Vitrectomy | ☐ |

| Resident scored (29) points out of a total of 37 | |||

| Physician: | Score: (4) | ||

| # | Rule |

|---|---|

| | Assign at least one case/day to each resident |

| | Give priority to patients presenting to the retina clinic; in case of shortage, assign CFPs from the Resident dataset |

| | Assign one case from each of the 19 retinal conditions to each resident |

| | Assign the case to the resident who has seen the fewest cases of this retinal condition, up to 3 cases |

| | Assign the case to the resident with the lowest grade (performance score + difficulty score) until all residents obtain a grade ≥ 7 for every retinal condition |

| | Assign the case to the resident with the oldest encounter for that specific condition |

| | Assign the case to the resident with the lowest number of cases from that specific educational topic |

| | Assign the case to the resident with the lowest number of cases from that specific retinal condition |

| | Assign the case to the resident with the lowest number of cases from all the 19 retinal conditions |
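
One way to read these rules is as a priority ordering over residents. The sketch below approximates that ordering with a lexicographic sort key. This is an assumption made for illustration: the paper implements the rules in the CLIPS expert system, and names such as `ResidentRecord`, `priority_key`, and `assign_case` are hypothetical. The sketch covers only a subset of the rules (daily minimum, fewest cases of the condition capped at 3, lowest grade, oldest encounter, fewest cases overall).

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Tuple


@dataclass
class ResidentRecord:
    name: str
    cases_today: int = 0
    seen: Dict[str, int] = field(default_factory=dict)        # condition -> cases seen
    grade: Dict[str, float] = field(default_factory=dict)     # condition -> latest grade
    last_seen: Dict[str, date] = field(default_factory=dict)  # condition -> last encounter


def priority_key(r: ResidentRecord, condition: str, today: date) -> Tuple:
    """Smaller tuples win; each element approximates one allocation rule."""
    n_seen = r.seen.get(condition, 0)
    days_since = (today - r.last_seen.get(condition, date.min)).days
    return (
        r.cases_today > 0,             # residents without a case today come first
        min(n_seen, 3),                # fewest cases of this condition, capped at 3
        r.grade.get(condition, 0.0),   # lowest grade for this condition
        -days_since,                   # oldest encounter with this condition
        sum(r.seen.values()),          # fewest cases across all conditions
    )


def assign_case(condition: str, residents: List[ResidentRecord], today: date) -> ResidentRecord:
    """Pick the highest-priority resident and update their teaching record."""
    chosen = min(residents, key=lambda r: priority_key(r, condition, today))
    chosen.cases_today += 1
    chosen.seen[condition] = chosen.seen.get(condition, 0) + 1
    chosen.last_seen[condition] = today
    return chosen
```

A lexicographic key applies a later rule only to break ties left by earlier ones, which is a simplification of how prioritised rules fire in an expert system.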

| # | Rule |

|---|---|

| | Assign the case to the resident who has seen the fewest cases overall that day; at the same time, add the case to the virtual cases and additionally assign it as a virtual case to each resident |

| | Assign the case to the resident with the lowest number of cases from all retinal conditions |

| # | Rule |

|---|---|

| | Assign one case from each of the 19 retinal conditions (RCs) |

| | Assign the resident a case from the RCs with fewer encountered cases, up to 3 cases |

| | Assign the resident a case from the RC with the lowest grade (performance score + difficulty score) until a grade ≥ 7 is reached for all retinal conditions |

| | Assign the resident a case from the RC with the oldest encounter |

| | Assign the resident a case from the RC with the fewest cases seen |

| # | Rule |

|---|---|

| | If, after month 5, there are still residents who have not seen 1 case from each of the 19 retinal conditions, start additionally assigning 1 virtual case each day to every resident until the criterion is met |

| | If, after month 7, there are still residents who have not seen 3 cases from each of the 19 retinal conditions, start additionally assigning 1 virtual case each day to every resident until the criterion is met |

| | If, after month 9, there are still residents who have not achieved a grade of 7 or higher on every one of the 19 retinal conditions, start additionally assigning 1 virtual case each day to every resident until the criterion is met |
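
The three time-based rules above amount to a milestone check per resident. The sketch below is a minimal illustration under stated assumptions: "after month N" is read as a month index strictly greater than N, the 19 condition labels are taken from the classification table (with C2 to C5 merged as DR), and the function names and the dictionary layout of a resident record are hypothetical.

```python
from typing import Dict, List

# The 19 retinal conditions, using the labels from the classification table.
CONDITIONS: List[str] = [
    "C0", "C1", "DR", "C6", "C7", "C8", "C9", "C10", "C11", "C13",
    "C14", "C15", "C18", "C19", "C22", "C25", "C27", "C29", "C32",
]


def milestone_unmet(month: int, seen: Dict[str, int], grade: Dict[str, float]) -> bool:
    """True if one resident misses a milestone that is already due in `month`."""
    if month > 5 and any(seen.get(c, 0) < 1 for c in CONDITIONS):
        return True   # has not yet seen 1 case of every condition
    if month > 7 and any(seen.get(c, 0) < 3 for c in CONDITIONS):
        return True   # has not yet seen 3 cases of every condition
    if month > 9 and any(grade.get(c, 0.0) < 7 for c in CONDITIONS):
        return True   # has not yet reached grade 7 on every condition
    return False


def assign_virtual_today(month: int, residents: Dict[str, dict]) -> bool:
    """True if every resident should receive one extra virtual case today.

    `residents` maps a name to {"seen": {...}, "grade": {...}} (assumed layout).
    """
    return any(
        milestone_unmet(month, rec["seen"], rec["grade"]) for rec in residents.values()
    )
```

As in the rules, a single resident who misses a due milestone triggers the extra virtual case for every resident.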

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muntean, G.A.; Groza, A.; Marginean, A.; Slavescu, R.R.; Steiu, M.G.; Muntean, V.; Nicoara, S.D. Artificial Intelligence for Personalised Ophthalmology Residency Training. J. Clin. Med. 2023, 12, 1825. https://doi.org/10.3390/jcm12051825
