Chest X-ray Foreign Objects Detection Using Artificial Intelligence

Abstract

1. Introduction

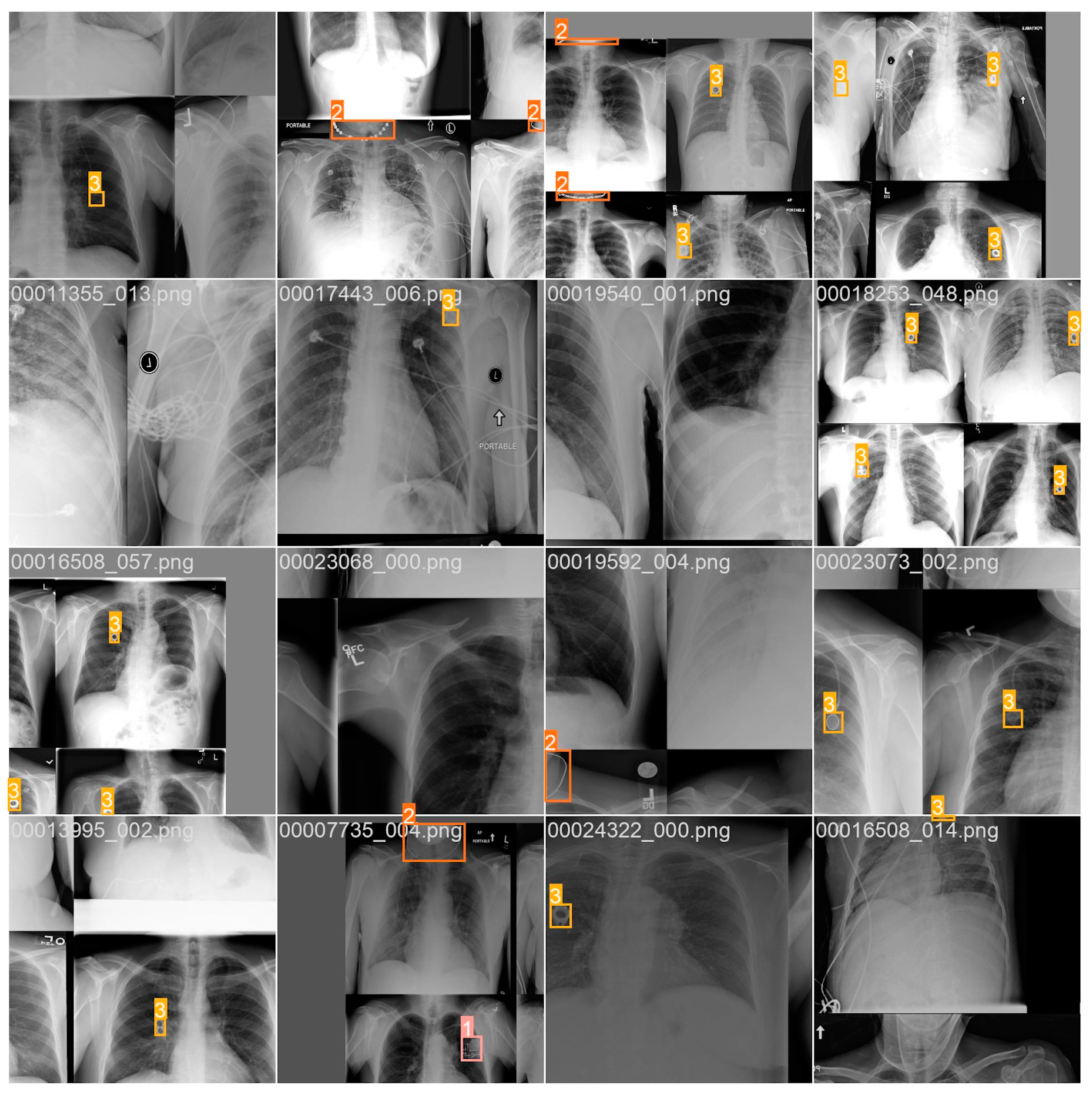

2. Materials and Methods

2.1. Radiological Part

2.2. Technical Part

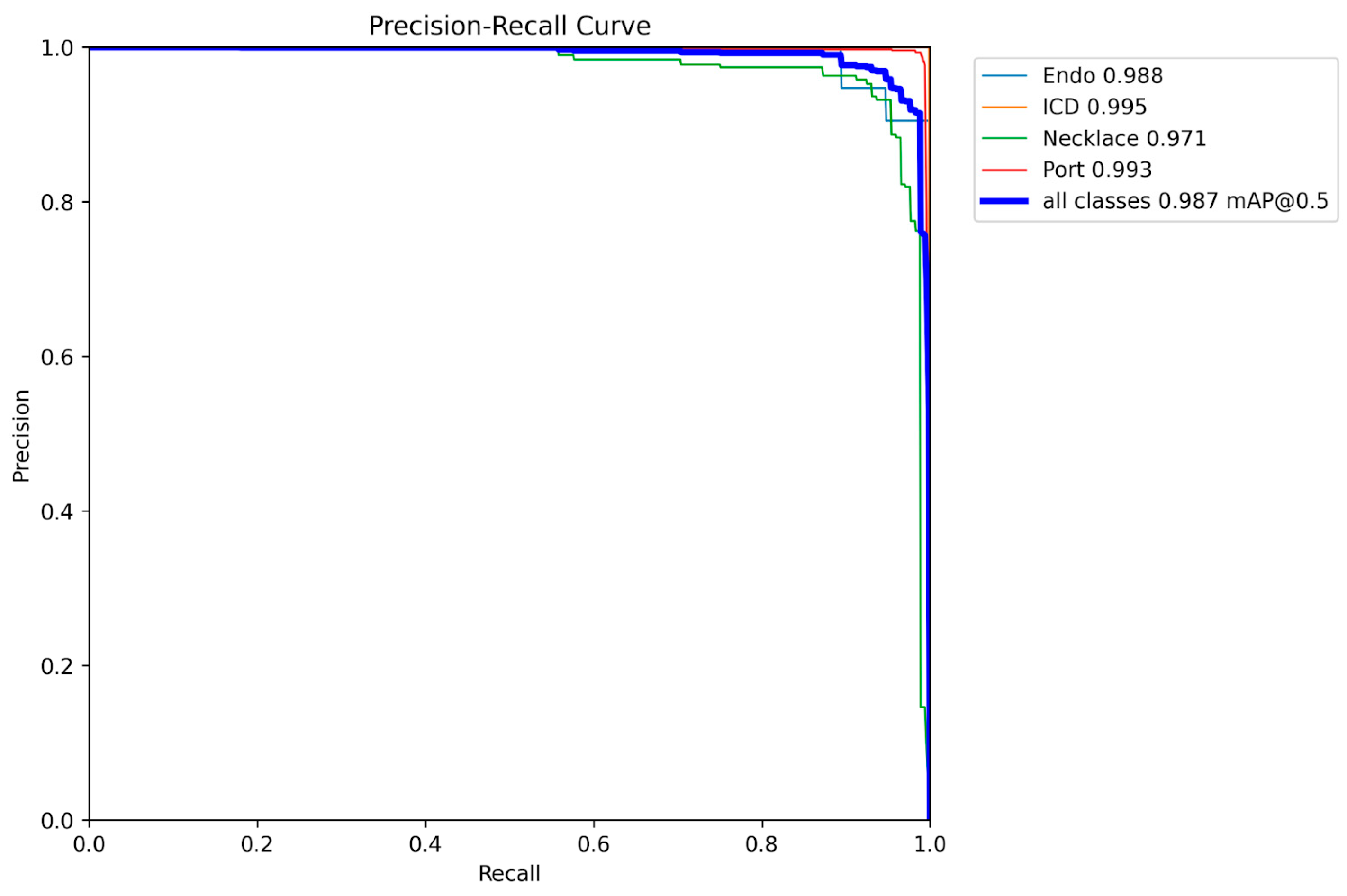

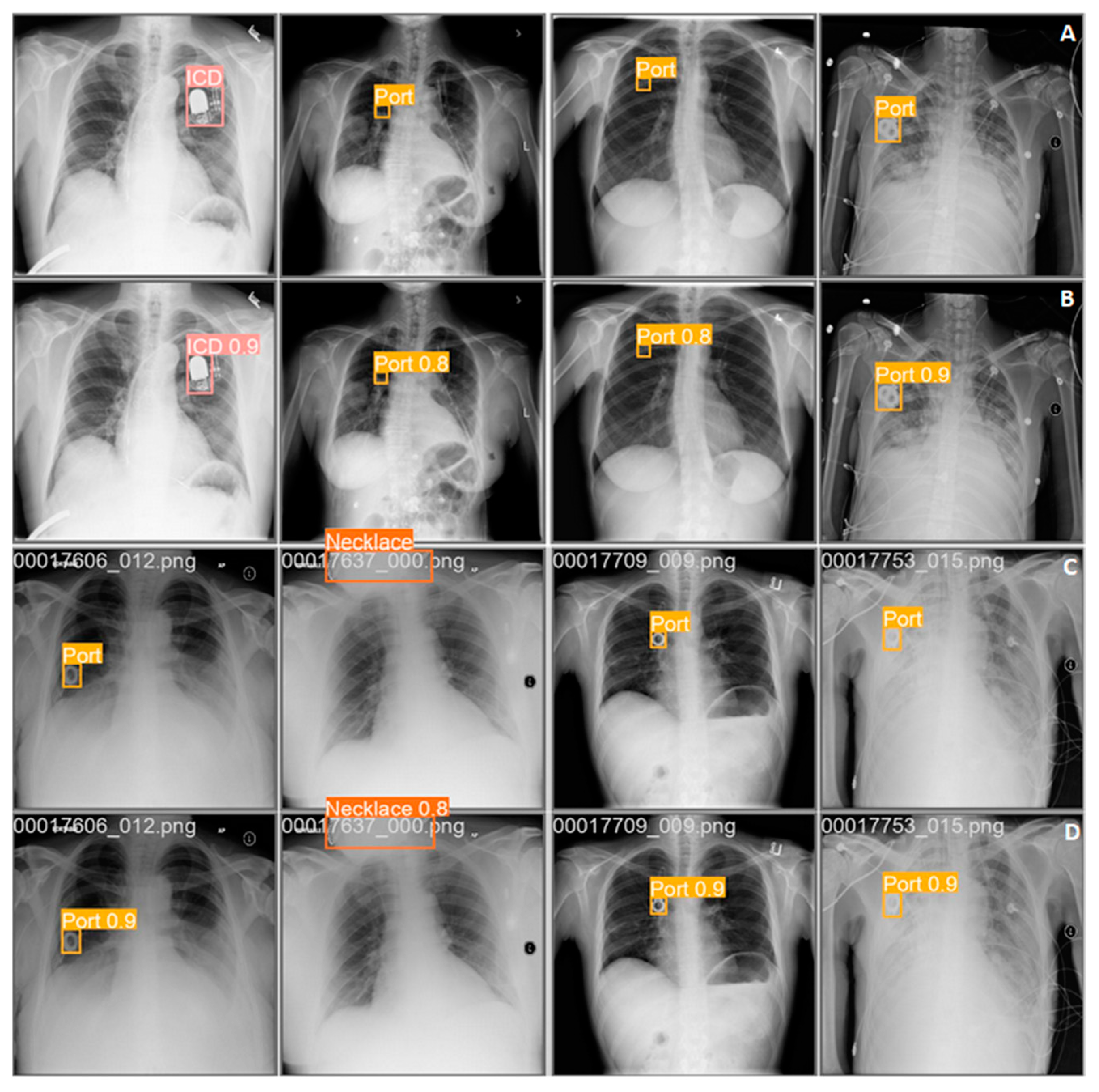

3. Results

4. Related Works

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Kufel, J.; Bargieł-Łączek, K.; Koźlik, M.; Czogalik, Ł.; Dudek, P.; Magiera, M.; Bartnikowska, W.; Lis, A.; Paszkiewicz, I.; Kocot, S.; Cebula, M.; Gruszczyńska, K.; Nawrat, Z. Chest X-ray Foreign Objects Detection Using Artificial Intelligence. J. Clin. Med. 2023, 12, 5841. https://doi.org/10.3390/jcm12185841