Digital Alternative Communication for Individuals with Amyotrophic Lateral Sclerosis: What We Have

Abstract

1. Introduction

2. Materials and Methods

- SS01: (eye) AND (track OR gaze OR blink OR localization) AND (camera OR webcam) AND (“amyotrophic lateral sclerosis” OR als);

- SS02: (eye) AND (track OR gaze OR blink OR localization) AND (camera OR webcam) AND (“neuromuscular disease” OR “motor neuron disease”);

- SS03: see Appendix A.1.
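
The search strings above share a common structure: groups of alternative terms joined by OR, with the groups themselves joined by AND. As an illustration only, the following Python sketch assembles SS01 and SS02 from their term groups. The helper names `any_of` and `all_of` are hypothetical and are not part of the review protocol, and straight double quotes are assumed for multi-word phrases; individual databases may require a different quoting or field syntax.

```python
def any_of(*terms):
    """Build one parenthesised OR-group; multi-word phrases are quoted."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def all_of(*groups):
    """Join already-built groups with AND."""
    return " AND ".join(groups)


# Term groups shared by SS01 and SS02 (copied from the search strings above).
eye_terms = all_of(
    any_of("eye"),
    any_of("track", "gaze", "blink", "localization"),
    any_of("camera", "webcam"),
)

ss01 = all_of(eye_terms, any_of("amyotrophic lateral sclerosis", "als"))
ss02 = all_of(eye_terms, any_of("neuromuscular disease", "motor neuron disease"))

print(ss01)
print(ss02)
```

Keeping the shared eye-related groups in a single variable makes it harder for the two strings to drift apart when a term is added or removed.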

- : variable used to represent the total number of Quality Assessment Criteria;

- : variable holding the weight w assigned to the Quality Assessment Criterion under analysis (see the possible values in Equation (2)).
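
The score formula and the weight values of Equation (2) are not reproduced in this section, so the following Python sketch should be read as one plausible reading rather than as the method actually used. It assumes that the score is the mean of the weights w over all Quality Assessment Criteria and that each weight takes one of three values (1 for "yes", 0.5 for "partially", 0 for "no"). Both the aggregation and the weight scale are assumptions, as are the names `ASSUMED_WEIGHTS` and `quality_score`.

```python
# ASSUMPTION: the permitted weight values are defined in Equation (2) of the
# source, which is not available here; this three-level scale is hypothetical.
ASSUMED_WEIGHTS = {"yes": 1.0, "partially": 0.5, "no": 0.0}


def quality_score(answers):
    """Return the mean weight over all Quality Assessment Criteria.

    ASSUMPTION: averaging is an illustrative aggregation, not a formula
    confirmed by the source text.
    """
    if not answers:
        raise ValueError("at least one criterion is required")
    total_criteria = len(answers)  # total number of criteria
    return sum(ASSUMED_WEIGHTS[a] for a in answers) / total_criteria


# Hypothetical study answering five criteria.
score = quality_score(["yes", "yes", "yes", "partially", "partially"])
print(round(score, 2))
```

Under these assumptions a study evaluated on five criteria can only obtain scores in steps of 0.1.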

3. Results

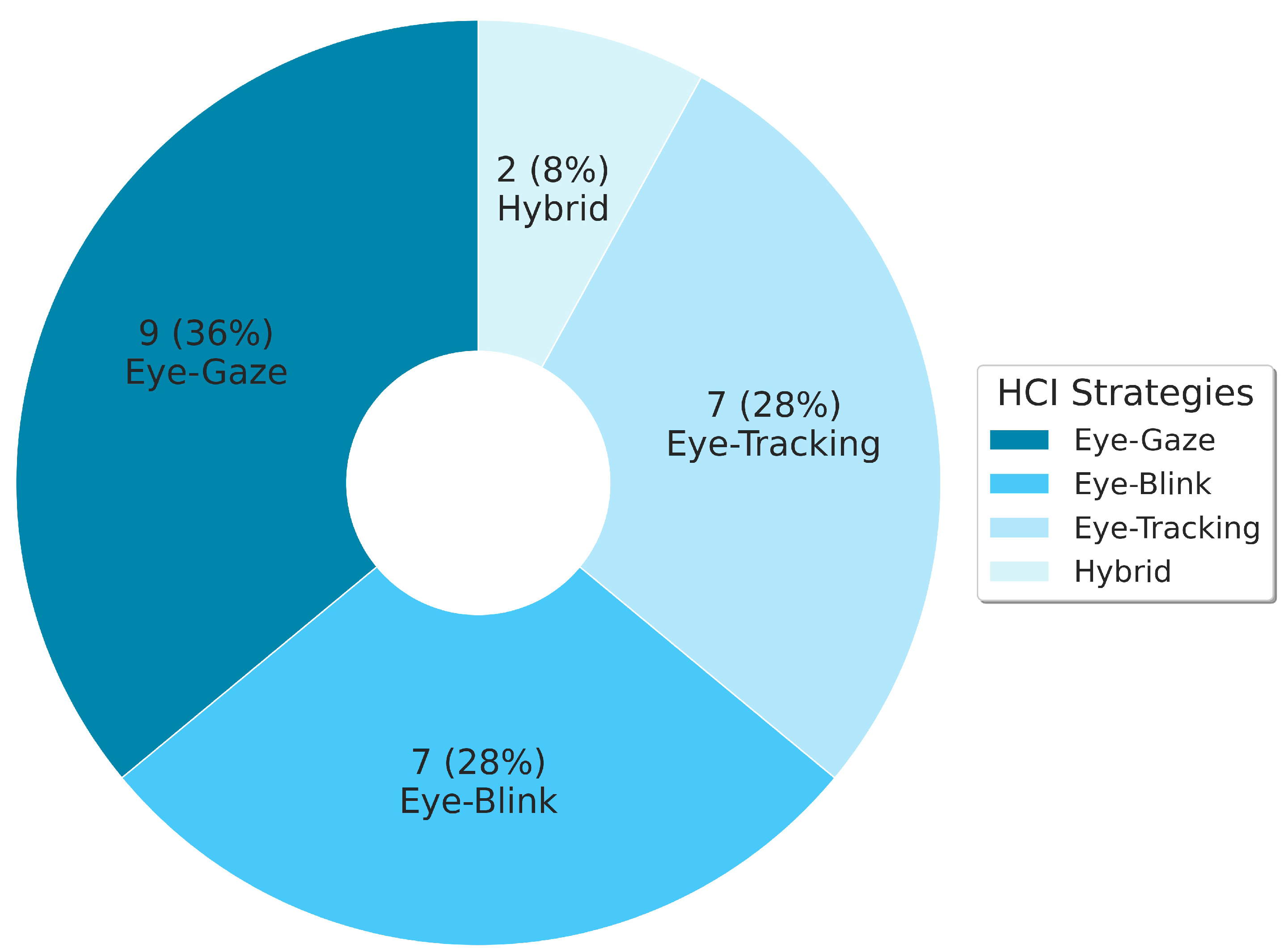

3.1. Research Question 01

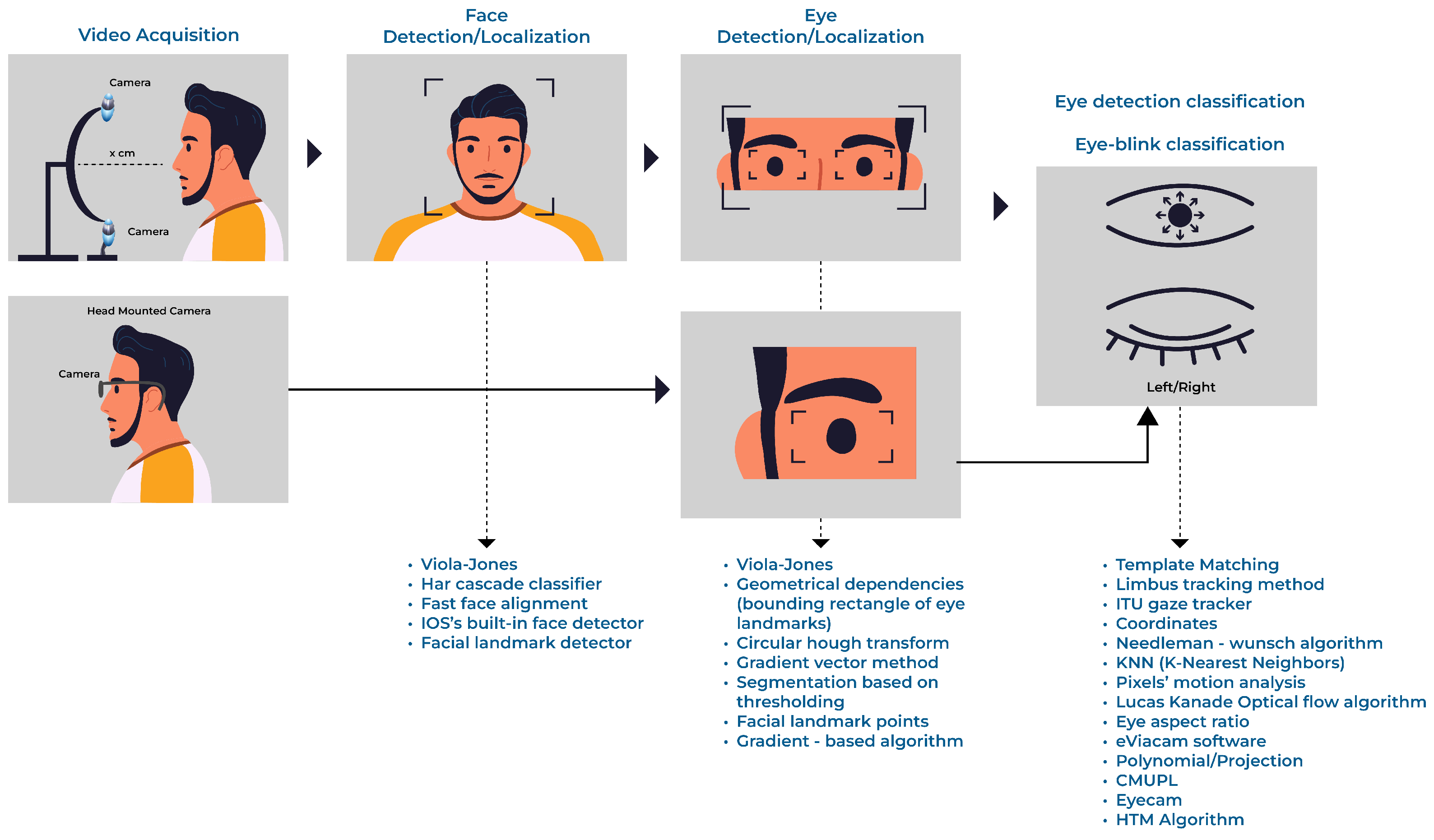

3.2. Research Question 02

3.3. Research Question 03

3.4. Research Question 04

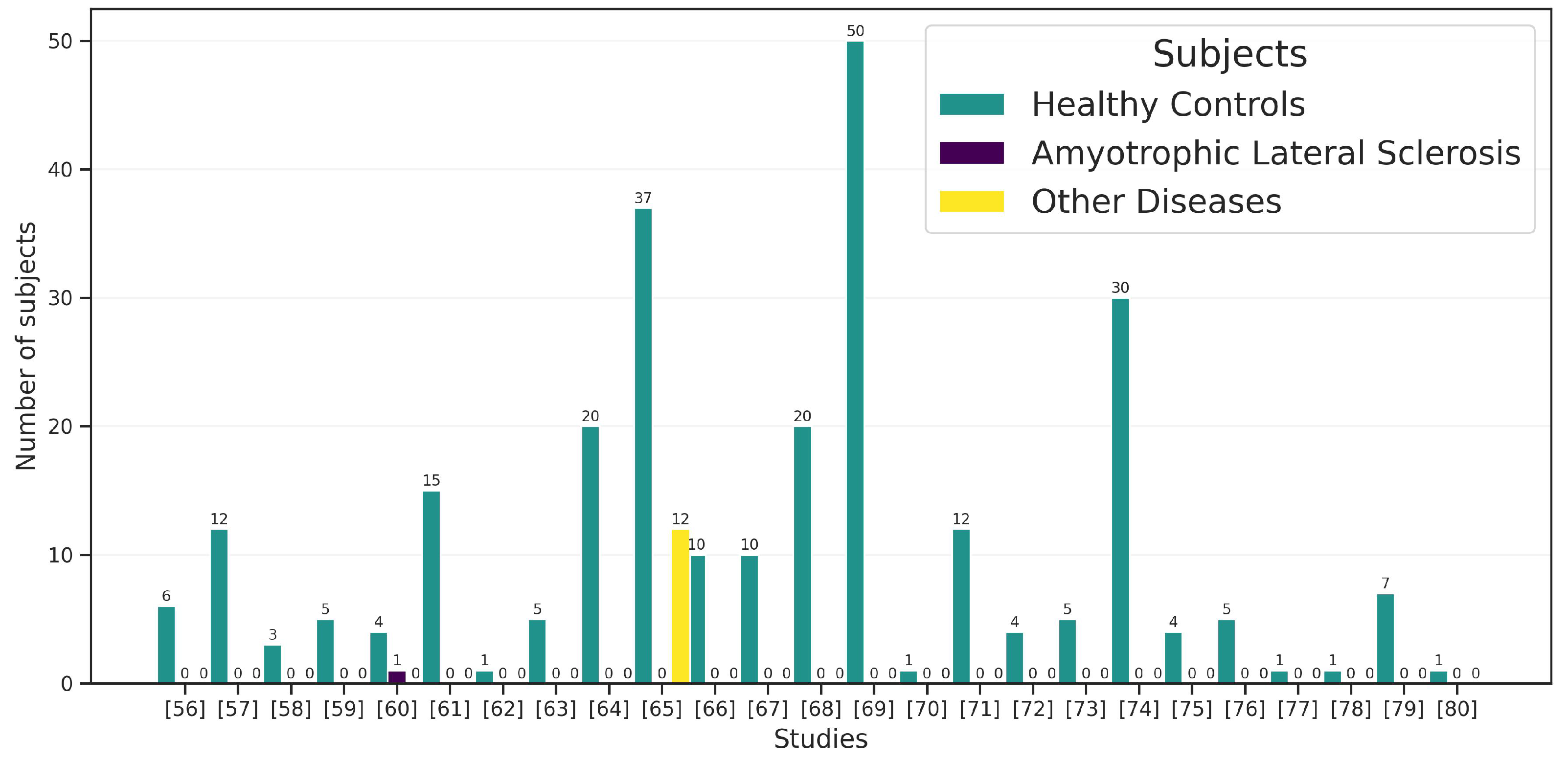

3.5. Research Question 05

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

References

- Goutman, S.A.; Hardiman, O.; Al-Chalabi, A.; Chió, A.; Savelieff, M.G.; Kiernan, M.C.; Feldman, E.L. Recent advances in the diagnosis and prognosis of amyotrophic lateral sclerosis. Lancet Neurol. 2022, 21, 480–493. [Google Scholar] [CrossRef]

- Goutman, S.A.; Hardiman, O.; Al-Chalabi, A.; Chió, A.; Savelieff, M.G.; Kiernan, M.C.; Feldman, E.L. Emerging insights into the complex genetics and pathophysiology of amyotrophic lateral sclerosis. Lancet Neurol. 2022, 21, 465–479. [Google Scholar] [CrossRef]

- Saadeh, W.; Altaf, M.A.B.; Butt, S.A. A wearable neuro-degenerative diseases detection system based on gait dynamics. In Proceedings of the 2017 IFIP/IEEE International Conference on Very Large Scale Integration (VLSI-SoC), Abu Dhabi, United Arab Emirates, 23–25 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Hardiman, O.; Al-Chalabi, A.; Chio, A.; Corr, E.M.; Logroscino, G.; Robberecht, W.; Shaw, P.J.; Simmons, Z.; van den Berg, L.H. Amyotrophic lateral sclerosis. Nat. Rev. Dis. Prim. 2017, 3, 17071. [Google Scholar] [CrossRef] [PubMed]

- van Es, M.A.; Hardiman, O.; Chio, A.; Al-Chalabi, A.; Pasterkamp, R.J.; Veldink, J.H.; van den Berg, L.H. Amyotrophic lateral sclerosis. Lancet 2017, 390, 2084–2098. [Google Scholar] [CrossRef] [PubMed]

- Londral, A.; Pinto, A.; Pinto, S.; Azevedo, L.; De Carvalho, M. Quality of life in amyotrophic lateral sclerosis patients and caregivers: Impact of assistive communication from early stages. Muscle Nerve 2015, 52, 933–941. [Google Scholar] [CrossRef] [PubMed]

- Linse, K.; Aust, E.; Joos, M.; Hermann, A. Communication Matters—Pitfalls and Promise of Hightech Communication Devices in Palliative Care of Severely Physically Disabled Patients With Amyotrophic Lateral Sclerosis. Front. Neurol. 2018, 9, 603. [Google Scholar] [CrossRef] [PubMed]

- Linse, K.; Rüger, W.; Joos, M.; Schmitz-Peiffer, H.; Storch, A.; Hermann, A. Usability of eyetracking computer systems and impact on psychological wellbeing in patients with advanced amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. Front. Degener. 2018, 19, 212–219. [Google Scholar] [CrossRef] [PubMed]

- Rosa Silva, J.P.; Santiago Júnior, J.B.; dos Santos, E.L.; de Carvalho, F.O.; de França Costa, I.M.P.; de Mendonça, D.M.F. Quality of life and functional independence in amyotrophic lateral sclerosis: A systematic review. Neurosci. Biobehav. Rev. 2020, 111, 1–11. [Google Scholar] [CrossRef]

- Gillespie, J.; Przybylak-Brouillard, A.; Watt, C.L. The Palliative Care Information Needs of Patients with Amyotrophic Lateral Sclerosis and their Informal Caregivers: A Scoping Review. J. Pain Symptom Manag. 2021, 62, 848–862. [Google Scholar] [CrossRef]

- Howard, I.M.; Burgess, K. Telehealth for Amyotrophic Lateral Sclerosis and Multiple Sclerosis. Phys. Med. Rehabil. Clin. N. Am. 2021, 32, 239–251. [Google Scholar] [CrossRef]

- Fernandes, F.; Barbalho, I.; Barros, D.; Valentim, R.; Teixeira, C.; Henriques, J.; Gil, P.; Dourado Júnior, M. Biomedical signals and machine learning in amyotrophic lateral sclerosis: A systematic review. Biomed. Eng. Online 2021, 20, 61. [Google Scholar] [CrossRef]

- de Lima Medeiros, P.A.; da Silva, G.V.S.; dos Santos Fernandes, F.R.; Sánchez-Gendriz, I.; Lins, H.W.C.; da Silva Barros, D.M.; Nagem, D.A.P.; de Medeiros Valentim, R.A. Efficient machine learning approach for volunteer eye-blink detection in real-time using webcam. Expert Syst. Appl. 2022, 188, 116073. [Google Scholar] [CrossRef]

- Caligari, M.; Godi, M.; Guglielmetti, S.; Franchignoni, F.; Nardone, A. Eye tracking communication devices in amyotrophic lateral sclerosis: Impact on disability and quality of life. Amyotroph. Lateral Scler. Front. Degener. 2013, 14, 546–552. [Google Scholar] [CrossRef] [PubMed]

- Hwang, C.S.; Weng, H.H.; Wang, L.F.; Tsai, C.H.; Chang, H.T. An Eye-Tracking Assistive Device Improves the Quality of Life for ALS Patients and Reduces the Caregivers’ Burden. J. Mot. Behav. 2014, 46, 233–238. [Google Scholar] [CrossRef] [PubMed]

- Shravani, T.; Sai, R.; Vani Shree, M.; Amudha, J.; Jyotsna, C. Assistive Communication Application for Amyotrophic Lateral Sclerosis Patients. In Computational Vision and Bio-Inspired Computing; Smys, S., Tavares, J.M.R.S., Balas, V.E., Iliyasu, A.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 1397–1408. [Google Scholar]

- Eicher, C.; Kiselev, J.; Brukamp, K.; Kiemel, D.; Spittel, S.; Maier, A.; Oleimeulen, U.; Greuèl, M. Expectations and Concerns Emerging from Experiences with Assistive Technology for ALS Patients. In Universal Access in Human-Computer Interaction. Theory, Methods and Tools; Antona, M., Stephanidis, C., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 57–68. [Google Scholar]

- Sigafoos, J.; Schlosser, R.W.; Lancioni, G.E.; O’Reilly, M.F.; Green, V.A.; Singh, N.N. Assistive Technology for People with Communication Disorders. In Assistive Technologies for People with Diverse Abilities. Autism and Child Psychopathology Series; Lancioni, G.E., Singh, N.N., Eds.; Springer: New York, NY, USA, 2014; Chapter 4; pp. 77–112. [Google Scholar]

- Bona, S.; Donvito, G.; Cozza, F.; Malberti, I.; Vaccari, P.; Lizio, A.; Greco, L.; Carraro, E.; Sansone, V.A.; Lunetta, C. The development of an augmented reality device for the autonomous management of the electric bed and the electric wheelchair for patients with amyotrophic lateral sclerosis: A pilot study. Disabil. Rehabil. Assist. Technol. 2019, 16, 513–519. [Google Scholar] [CrossRef] [PubMed]

- Santana G., A.; Ortiz C, O.; Acosta, J.F.; Andaluz, V.H. Autonomous Assistance System for People with Amyotrophic Lateral Sclerosis. In IT Convergence and Security 2017; Kim, K.J., Kim, H., Baek, N., Eds.; Springer: Singapore, 2018; pp. 267–277. [Google Scholar]

- Elliott, M.A.; Malvar, H.; Maassel, L.L.; Campbell, J.; Kulkarni, H.; Spiridonova, I.; Sophy, N.; Beavers, J.; Paradiso, A.; Needham, C.; et al. Eye-controlled, power wheelchair performs well for ALS patients. Muscle Nerve 2019, 60, 513–519. [Google Scholar] [CrossRef]

- Ramakrishnan, J.; Mavaluru, D.; Sakthivel, R.S.; Alqahtani, A.S.; Mubarakali, A.; Retnadhas, M. Brain–computer interface for amyotrophic lateral sclerosis patients using deep learning network. Neural Comput. Appl. 2022, 34, 13439–13453. [Google Scholar] [CrossRef]

- Miao, Y.; Yin, E.; Allison, B.Z.; Zhang, Y.; Chen, Y.; Dong, Y.; Wang, X.; Hu, D.; Cichocki, A.; Jin, J. An ERP-based BCI with peripheral stimuli: Validation with ALS patients. Cogn. Neurodynam. 2020, 14, 21–33. [Google Scholar] [CrossRef] [PubMed]

- Sorbello, R.; Tramonte, S.; Giardina, M.E.; La Bella, V.; Spataro, R.; Allison, B.; Guger, C.; Chella, A. A Human–Humanoid Interaction Through the Use of BCI for Locked-In ALS Patients Using Neuro-Biological Feedback Fusion. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 487–497. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.H.; Huang, S.; Huang, Y.D. Motor imagery EEG classification for patients with amyotrophic lateral sclerosis using fractal dimension and Fisher’s criterion-based channel selection. Sensors 2017, 17, 1557. [Google Scholar] [CrossRef] [PubMed]

- Vansteensel, M.J.; Pels, E.G.; Bleichner, M.G.; Branco, M.P.; Denison, T.; Freudenburg, Z.V.; Gosselaar, P.; Leinders, S.; Ottens, T.H.; Van Den Boom, M.A.; et al. Fully Implanted Brain–Computer Interface in a Locked-In Patient with ALS. N. Engl. J. Med. 2016, 375, 2060–2066. [Google Scholar] [CrossRef]

- Mainsah, B.O.; Collins, L.M.; Colwell, K.A.; Sellers, E.W.; Ryan, D.B.; Caves, K.; Throckmorton, C.S. Increasing BCI communication rates with dynamic stopping towards more practical use: An ALS study. J. Neural Eng. 2015, 12, 016013. [Google Scholar] [CrossRef] [PubMed]

- McCane, L.M.; Sellers, E.W.; McFarland, D.J.; Mak, J.N.; Carmack, C.S.; Zeitlin, D.; Wolpaw, J.R.; Vaughan, T.M. Brain-computer interface (BCI) evaluation in people with amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. Front. Degener. 2014, 15, 207–215. [Google Scholar] [CrossRef] [PubMed]

- Jaramillo-Yánez, A.; Benalcázar, M.E.; Mena-Maldonado, E. Real-Time Hand Gesture Recognition Using Surface Electromyography and Machine Learning: A Systematic Literature Review. Sensors 2020, 20, 2467. [Google Scholar] [CrossRef]

- Tonin, A.; Jaramillo-Gonzalez, A.; Rana, A.; Khalili-Ardali, M.; Birbaumer, N.; Chaudhary, U. Auditory Electrooculogram-based Communication System for ALS Patients in Transition from Locked-in to Complete Locked-in State. Sci. Rep. 2020, 10, 8452. [Google Scholar] [CrossRef] [PubMed]

- Zhang, R.; He, S.; Yang, X.; Wang, X.; Li, K.; Huang, Q.; Yu, Z.; Zhang, X.; Tang, D.; Li, Y. An EOG-Based Human-Machine Interface to Control a Smart Home Environment for Patients with Severe Spinal Cord Injuries. IEEE Trans. Biomed. Eng. 2019, 66, 89–100. [Google Scholar] [CrossRef] [PubMed]

- Chang, W.D.; Cha, H.S.; Kim, D.Y.; Kim, S.H.; Im, C.H. Development of an electrooculogram-based eye-computer interface for communication of individuals with amyotrophic lateral sclerosis. J. Neuroeng. Rehabil. 2017, 14, 89. [Google Scholar] [CrossRef]

- Larson, A.; Herrera, J.; George, K.; Matthews, A. Electrooculography based electronic communication device for individuals with ALS. In Proceedings of the 2017 IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, USA, 13–15 March 2017; pp. 1–5. [Google Scholar] [CrossRef]

- Lingegowda, D.R.; Amrutesh, K.; Ramanujam, S. Electrooculography based assistive technology for ALS patients. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Bengaluru, India, 5–7 October 2017; pp. 36–40. [Google Scholar] [CrossRef]

- Pinheiro, C.G.; Naves, E.L.; Pino, P.; Losson, E.; Andrade, A.O.; Bourhis, G. Alternative communication systems for people with severe motor disabilities: A survey. Biomed. Eng. Online 2011, 10, 31. [Google Scholar] [CrossRef]

- Chaudhary, U.; Vlachos, I.; Zimmermann, J.B.; Espinosa, A.; Tonin, A.; Jaramillo-Gonzalez, A.; Khalili-Ardali, M.; Topka, H.; Lehmberg, J.; Friehs, G.M.; et al. Spelling interface using intracortical signals in a completely locked-in patient enabled via auditory neurofeedback training. Nat. Commun. 2022, 13, 1236. [Google Scholar] [CrossRef]

- Singh, H.; Singh, J. Object Acquisition and Selection in Human Computer Interaction Systems: A Review. Int. J. Intell. Syst. Appl. Eng. 2019, 7, 19–29. [Google Scholar] [CrossRef] [Green Version]

- Chaudhary, U.; Birbaumer, N.; Ramos-Murguialday, A. Brain—Computer interfaces for communication and rehabilitation. Nat. Rev. Neurol. 2016, 12, 513–525. [Google Scholar] [CrossRef] [PubMed]

- Kayadibi, I.; Güraksın, G.E.; Ergün, U.; Özmen Süzme, N. An Eye State Recognition System Using Transfer Learning: AlexNet-Based Deep Convolutional Neural Network. Int. J. Comput. Intell. Syst. 2022, 15, 49. [Google Scholar] [CrossRef]

- Fathi, A.; Abdali-Mohammadi, F. Camera-based eye blinks pattern detection for intelligent mouse. Signal Image Video Process. 2015, 9, 1907–1916. [Google Scholar] [CrossRef]

- Mu, S.; Shibata, S.; Chun Chiu, K.; Yamamoto, T.; kuan Liu, T. Study on eye-gaze input interface based on deep learning using images obtained by multiple cameras. Comput. Electr. Eng. 2022, 101, 108040. [Google Scholar] [CrossRef]

- Hwang, I.S.; Tsai, Y.Y.; Zeng, B.H.; Lin, C.M.; Shiue, H.S.; Chang, G.C. Integration of eye tracking and lip motion for hands-free computer access. Univers. Access Inf. Soc. 2021, 20, 405–416. [Google Scholar] [CrossRef]

- Blignaut, P. Development of a gaze-controlled support system for a person in an advanced stage of multiple sclerosis: A case study. Univers. Access Inf. Soc. 2017, 16, 1003–1016. [Google Scholar] [CrossRef]

- Chareonsuk, W.; Kanhaun, S.; Khawkam, K.; Wongsawang, D. Face and Eyes mouse for ALS Patients. In Proceedings of the 2016 Fifth ICT International Student Project Conference (ICT-ISPC), Nakhonpathom, Thailand, 27–28 May 2016; pp. 77–80. [Google Scholar] [CrossRef]

- Liu, S.S.; Rawicz, A.; Ma, T.; Zhang, C.; Lin, K.; Rezaei, S.; Wu, E. An Eye-Gaze Tracking and Human Computer Interface System for People with ALS and Other Locked-in Diseases. J. Med. Biol. Eng. 2012, 32, 1–3. [Google Scholar]

- Liu, Y.; Lee, B.S.; Rajan, D.; Sluzek, A.; McKeown, M.J. CamType: Assistive text entry using gaze with an off-the-shelf webcam. Mach. Vis. Appl. 2019, 30, 407–421. [Google Scholar] [CrossRef]

- Holmqvist, K.; Örbom, S.L.; Hooge, I.T.C.; Niehorster, D.C.; Alexander, R.G.; Andersson, R.; Benjamins, J.S.; Blignaut, P.; Brouwer, A.M.; Chuang, L.L.; et al. Eye tracking: Empirical foundations for a minimal reporting guideline. Behav. Res. Methods 2023, 55, 364–416. [Google Scholar] [CrossRef]

- Kitchenham, B. Procedures for Performing Systematic Reviews; Technical Report; Keele University, Department of Computer Science, Software Engineering Group and Empirical Software Engineering National ICT Australia Ltd.: Keele, UK, 2004. [Google Scholar]

- Brereton, P.; Kitchenham, B.A.; Budgen, D.; Turner, M.; Khalil, M. Lessons from applying the systematic literature review process within the software engineering domain. J. Syst. Softw. 2007, 80, 571–583. [Google Scholar] [CrossRef]

- Kitchenham, B.A.; Budgen, D.; Brereton, P. Evidence-Based Software Engineering and Systematic Reviews, 1st ed.; Chapman and Hall/CRC: New York, NY, USA, 2016. [Google Scholar]

- Snyder, H. Literature review as a research methodology: An overview and guidelines. J. Bus. Res. 2019, 104, 333–339. [Google Scholar] [CrossRef]

- Keele, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report; Keele University and University of Durham: Staffs, UK, 2007. [Google Scholar]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71. [Google Scholar] [CrossRef] [PubMed]

- Fernandes, F.; Barbalho, I. Camera-Based Eye Interaction Techniques for Amyotrophic Lateral Sclerosis Individuals: A Systematic Review. PROSPERO. 2021. CRD42021230721. Available online: https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=230721 (accessed on 29 March 2021).

- Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210. [Google Scholar] [CrossRef] [PubMed]

- Eom, Y.; Mu, S.; Satoru, S.; Liu, T. A Method to Estimate Eye Gaze Direction When Wearing Glasses. In Proceedings of the 2019 International Conference on Technologies and Applications of Artificial Intelligence (TAAI), Kaohsiung, Taiwan, 21–23 November 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Zhang, X.; Kulkarni, H.; Morris, M.R. Smartphone-Based Gaze Gesture Communication for People with Motor Disabilities. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI ’17, New York, NY, USA, 6–11 May 2017; pp. 2878–2889. [Google Scholar] [CrossRef]

- Aslam, Z.; Junejo, A.Z.; Memon, A.; Raza, A.; Aslam, J.; Thebo, L.A. Optical Assistance for Motor Neuron Disease (MND) Patients Using Real-time Eye Tracking. In Proceedings of the 2019 8th International Conference on Information and Communication Technologies (ICICT), Karachi, Pakistan, 16–17 November 2019; pp. 61–65. [Google Scholar] [CrossRef]

- Abe, K.; Ohi, S.; Ohyama, M. Eye-gaze Detection by Image Analysis under Natural Light. In Human-Computer Interaction. Interaction Techniques and Environments; Jacko, J.A., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 176–184. [Google Scholar]

- Rahnama-ye Moqaddam, R.; Vahdat-Nejad, H. Designing a pervasive eye movement-based system for ALS and paralyzed patients. In Proceedings of the 2015 5th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 29 October 2015; pp. 218–221. [Google Scholar] [CrossRef]

- Rozado, D.; Rodriguez, F.B.; Varona, P. Low cost remote gaze gesture recognition in real time. Appl. Soft Comput. 2012, 12, 2072–2084. [Google Scholar] [CrossRef]

- Yildiz, M.; Yorulmaz, M. Gaze-Controlled Turkish Virtual Keyboard Application with Webcam. In Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO), Izmir, Turkey, 3–5 October 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Nakazawa, N.; Aikawa, S.; Matsui, T. Development of Communication Aid Device for Disabled Persons Using Corneal Surface Reflection Image. In Proceedings of the 2nd International Conference on Graphics and Signal Processing, ICGSP’18, New York, NY, USA, 6–8 October 2018; pp. 16–20. [Google Scholar] [CrossRef]

- Rozado, D.; Agustin, J.S.; Rodriguez, F.B.; Varona, P. Gliding and Saccadic Gaze Gesture Recognition in Real Time. ACM Trans. Interact. Intell. Syst. 2012, 1, 1–27. [Google Scholar] [CrossRef]

- Królak, A.; Strumiłło, P. Eye-blink detection system for human–computer interaction. Univers. Access Inf. Soc. 2012, 11, 409–419. [Google Scholar] [CrossRef]

- Singh, H.; Singh, J. Object acquisition and selection using automatic scanning and eye blinks in an HCI system. J. Multimodal User Interfaces 2019, 13, 405–417. [Google Scholar] [CrossRef]

- Singh, H.; Singh, J. Real-time eye blink and wink detection for object selection in HCI systems. J. Multimodal User Interfaces 2018, 12, 55–65. [Google Scholar] [CrossRef]

- Missimer, E.; Betke, M. Blink and Wink Detection for Mouse Pointer Control. In Proceedings of the 3rd International Conference on PErvasive Technologies Related to Assistive Environments, PETRA ’10, New York, NY, USA, 23–25 June 2010. [Google Scholar] [CrossRef]

- Rupanagudi, S.R.; Bhat, V.G.; Ranjani, B.S.; Srisai, A.; Gurikar, S.K.; Pranay, M.R.; Chandana, S. A simplified approach to assist motor neuron disease patients to communicate through video oculography. In Proceedings of the 2018 International Conference on Communication information and Computing Technology (ICCICT), Mumbai, India, 2–3 February 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Rakshita, R. Communication Through Real-Time Video Oculography Using Face Landmark Detection. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 1094–1098. [Google Scholar] [CrossRef]

- Krapic, L.; Lenac, K.; Ljubic, S. Integrating Blink Click interaction into a head tracking system: Implementation and usability issues. Univers. Access Inf. Soc. 2015, 14, 247–264. [Google Scholar] [CrossRef]

- Park, J.H.; Park, J.B. A novel approach to the low cost real time eye mouse. Comput. Stand. Interfaces 2016, 44, 169–176. [Google Scholar] [CrossRef]

- Saleh, N.; Tarek, A. Vision-Based Communication System for Patients with Amyotrophic Lateral Sclerosis. In Proceedings of the 2021 3rd Novel Intelligent and Leading Emerging Sciences Conference (NILES), Giza, Egypt, 23–25 October 2021; pp. 19–22. [Google Scholar] [CrossRef]

- Atasoy, N.A.; Çavuşoğlu, A.; Atasoy, F. Real-Time motorized electrical hospital bed control with eye-gaze tracking. Turk. J. Electr. Eng. Comput. Sci. 2016, 24, 5162–5172. [Google Scholar] [CrossRef]

- Aharonson, V.; Coopoo, V.Y.; Govender, K.L.; Postema, M. Automatic pupil detection and gaze estimation using the vestibulo-ocular reflex in a low-cost eye-tracking setup. SAIEE Afr. Res. J. 2020, 111, 120–124. [Google Scholar] [CrossRef]

- Oyabu, Y.; Takano, H.; Nakamura, K. Development of the eye input device using eye movement obtained by measuring the center position of the pupil. In Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Seoul, Republic of Korea, 14–17 October 2012; pp. 2948–2952. [Google Scholar] [CrossRef]

- Kaushik, R.; Arora, T.; Tripathi, R. Design of Eyewriter for ALS Patients through Eyecan. In Proceedings of the 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida, India, 12–13 October 2018; pp. 991–995. [Google Scholar] [CrossRef]

- Kavale, K.; Kokambe, K.; Jadhav, S. taskEYE: “A Novel Approach to Help People Interact with Their Surrounding Through Their Eyes”. In Proceedings of the 2018 IEEE 18th International Conference on Advanced Learning Technologies (ICALT), Mumbai, India, 9–13 July 2018; pp. 311–313. [Google Scholar] [CrossRef]

- Zhao, Q.; Yuan, X.; Tu, D.; Lu, J. Eye moving behaviors identification for gaze tracking interaction. J. Multimodal User Interfaces 2015, 9, 89–104. [Google Scholar] [CrossRef]

- Xu, C.L.; Lin, C.Y. Eye-motion detection system for mnd patients. In Proceedings of the 2017 IEEE 4th International Conference on Soft Computing & Machine Intelligence (ISCMI), Port Louis, Mauritius, 23–24 November 2017; pp. 99–103. [Google Scholar] [CrossRef]

- Bradski, G.R. Computer vision face tracking for use in a perceptual user interface. Intel Technol. J. 1998, 2, 1–15. [Google Scholar]

- King, D.E. Dlib-ml: A Machine Learning Toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758. [Google Scholar]

- Grauman, K.; Betke, M.; Gips, J.; Bradski, G. Communication via eye blinks - detection and duration analysis in real time. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 1. pp. I–I. [Google Scholar] [CrossRef]

- Chau, M.; Betke, M. Real Time Eye Tracking and Blink Detection with USB Cameras. Technical Report, Boston University. CAS: Computer Science: Technical Reports. OpenBU: Boston, MA, USA, 2005. OpenBU. Available online: https://open.bu.edu/handle/2144/1839 (accessed on 20 May 2023).

- Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 1932, 22, 55. [Google Scholar]

- United Nations. Take Action for the Sustainable Development Goals. New York, NY, USA. Available online: https://www.un.org/sustainabledevelopment/sustainable-development-goals/ (accessed on 10 April 2023).

- Papaiz, F.; Dourado, M.E.T.; Valentim, R.A.d.M.; de Morais, A.H.F.; Arrais, J.P. Machine Learning Solutions Applied to Amyotrophic Lateral Sclerosis Prognosis: A Review. Front. Comput. Sci. 2022, 4, 869140. [Google Scholar] [CrossRef]

- Gromicho, M.; Leão, T.; Oliveira Santos, M.; Pinto, S.; Carvalho, A.M.; Madeira, S.C.; De Carvalho, M. Dynamic Bayesian networks for stratification of disease progression in amyotrophic lateral sclerosis. Eur. J. Neurol. 2022, 29, 2201–2210. [Google Scholar] [CrossRef] [PubMed]

- Tavazzi, E.; Daberdaku, S.; Zandonà, A.; Vasta, R.; Nefussy, B.; Lunetta, C.; Mora, G.; Mandrioli, J.; Grisan, E.; Tarlarini, C.; et al. Predicting functional impairment trajectories in amyotrophic lateral sclerosis: A probabilistic, multifactorial model of disease progression. J. Neurol. 2022, 269, 3858–3878. [Google Scholar] [CrossRef]

- Gordon, J.; Lerner, B. Insights into Amyotrophic Lateral Sclerosis from a Machine Learning Perspective. J. Clin. Med. 2019, 8, 1578. [Google Scholar] [CrossRef]

- Ahangaran, M.; Chiò, A. AIM in Amyotrophic Lateral Sclerosis. In Artificial Intelligence in Medicine; Lidströmer, N., Ashrafian, H., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–13. [Google Scholar] [CrossRef]

- Dalgıç, Ö.O.; Wu, H.; Safa Erenay, F.; Sir, M.Y.; Özaltın, O.Y.; Crum, B.A.; Pasupathy, K.S. Mapping of critical events in disease progression through binary classification: Application to amyotrophic lateral sclerosis. J. Biomed. Inform. 2021, 123, 103895. [Google Scholar] [CrossRef] [PubMed]

- Ahangaran, M.; Chiò, A.; D’Ovidio, F.; Manera, U.; Vasta, R.; Canosa, A.; Moglia, C.; Calvo, A.; Minaei-Bidgoli, B.; Jahed-Motlagh, M.R. Causal associations of genetic factors with clinical progression in amyotrophic lateral sclerosis. Comput. Methods Programs Biomed. 2022, 216, 106681. [Google Scholar] [CrossRef] [PubMed]

- Bede, P.; Murad, A.; Hardiman, O. Pathological neural networks and artificial neural networks in ALS: Diagnostic classification based on pathognomonic neuroimaging features. J. Neurol. 2022, 269, 2440–2452. [Google Scholar] [CrossRef] [PubMed]

- Iadanza, E.; Fabbri, R.; Goretti, F.; Nardo, G.; Niccolai, E.; Bendotti, C.; Amedei, A. Machine learning for analysis of gene expression data in fast- and slow-progressing amyotrophic lateral sclerosis murine models. Biocybern. Biomed. Eng. 2022, 42, 273–284. [Google Scholar] [CrossRef]

- Thome, J.; Steinbach, R.; Grosskreutz, J.; Durstewitz, D.; Koppe, G. Classification of amyotrophic lateral sclerosis by brain volume, connectivity, and network dynamics. Hum. Brain Mapp. 2022, 43, 681–699. [Google Scholar] [CrossRef]

- Greco, A.; Chiesa, M.R.; Da Prato, I.; Romanelli, A.M.; Dolciotti, C.; Cavallini, G.; Masciandaro, S.M.; Scilingo, E.P.; Del Carratore, R.; Bongioanni, P. Using blood data for the differential diagnosis and prognosis of motor neuron diseases: A new dataset for machine learning applications. Sci. Rep. 2021, 11, 3371. [Google Scholar] [CrossRef]

- Kocar, T.D.; Behler, A.; Ludolph, A.C.; Müller, H.P.; Kassubek, J. Multiparametric Microstructural MRI and Machine Learning Classification Yields High Diagnostic Accuracy in Amyotrophic Lateral Sclerosis: Proof of Concept. Front. Neurol. 2021, 12, 745475. [Google Scholar] [CrossRef]

- Leão, T.; Madeira, S.C.; Gromicho, M.; de Carvalho, M.; Carvalho, A.M. Learning dynamic Bayesian networks from time-dependent and time-independent data: Unraveling disease progression in Amyotrophic Lateral Sclerosis. J. Biomed. Inform. 2021, 117, 103730. [Google Scholar] [CrossRef]

- Grollemund, V.; Le Chat, G.; Secchi-Buhour, M.S.; Delbot, F.; Pradat-Peyre, J.F.; Bede, P.; Pradat, P.F. Manifold learning for amyotrophic lateral sclerosis functional loss assessment. J. Neurol. 2021, 268, 825–850. [Google Scholar] [CrossRef] [PubMed]

- Kocar, T.D.; Müller, H.P.; Ludolph, A.C.; Kassubek, J. Feature selection from magnetic resonance imaging data in ALS: A systematic review. Ther. Adv. Chronic Dis. 2021, 12, 20406223211051002. [Google Scholar] [CrossRef]

- Grollemund, V.; Chat, G.L.; Secchi-Buhour, M.S.; Delbot, F.; Pradat-Peyre, J.F.; Bede, P.; Pradat, P.F. Development and validation of a 1-year survival prognosis estimation model for Amyotrophic Lateral Sclerosis using manifold learning algorithm UMAP. Sci. Rep. 2020, 10, 13378. [Google Scholar] [CrossRef] [PubMed]

- Myszczynska, M.A.; Ojamies, P.N.; Lacoste, A.M.B.; Neil, D.; Saffari, A.; Mead, R.; Hautbergue, G.M.; Holbrook, J.D.; Ferraiuolo, L. Applications of machine learning to diagnosis and treatment of neurodegenerative diseases. Nat. Rev. Neurol. 2020, 16, 440–456. [Google Scholar] [CrossRef]

- Chen, Q.F.; Zhang, X.H.; Huang, N.X.; Chen, H.J. Identification of Amyotrophic Lateral Sclerosis Based on Diffusion Tensor Imaging and Support Vector Machine. Front. Neurol. 2020, 11, 275. [Google Scholar] [CrossRef] [PubMed]

- Grollemund, V.; Pradat, P.F.; Querin, G.; Delbot, F.; Le Chat, G.; Pradat-Peyre, J.F.; Bede, P. Machine Learning in Amyotrophic Lateral Sclerosis: Achievements, Pitfalls, and Future Directions. Front. Neurosci. 2019, 13, 135. [Google Scholar] [CrossRef]

- Pinto, S.; Quintarelli, S.; Silani, V. New technologies and Amyotrophic Lateral Sclerosis – Which step forward rushed by the COVID-19 pandemic? J. Neurol. Sci. 2020, 418, 117081. [Google Scholar] [CrossRef] [PubMed]

- Barbalho, I.; Valentim, R.; Júnior, M.D.; Barros, D.; Júnior, H.P.; Fernandes, F.; Teixeira, C.; Lima, T.; Paiva, J.; Nagem, D. National registry for amyotrophic lateral sclerosis: A systematic review for structuring population registries of motor neuron diseases. BMC Neurol. 2021, 21, 269. [Google Scholar] [CrossRef]

- Dietrich-Neto, F.; Callegaro, D.; Dias-Tosta, E.; Silva, H.A.; Ferraz, M.E.; Lima, J.M.B.D.; Oliveira, A.S.B. Amyotrophic lateral sclerosis in Brazil: 1998 national survey. Arq. Neuro-Psiquiatr. 2000, 58, 607–615. [Google Scholar] [CrossRef]

- Moura, M.C.; Casulari, L.A.; Novaes, M.R.C.G. Ethnic and demographic incidence of amyotrophic lateral sclerosis (ALS) in Brazil: A population based study. Amyotroph. Lateral Scler. Front. Degener. 2016, 17, 275–281. [Google Scholar] [CrossRef]

- Barbalho, I.M.P.; Fernandes, F.; Barros, D.M.S.; Paiva, J.C.; Henriques, J.; Morais, A.H.F.; Coutinho, K.D.; Coelho Neto, G.C.; Chioro, A.; Valentim, R.A.M. Electronic health records in Brazil: Prospects and technological challenges. Front. Public Health 2022, 10, 963841. [Google Scholar] [CrossRef]

- Barbalho, I.M.P.; Fonseca, A.; Fernandes, F.; Henriques, J.; Gil, P.; Nagem, D.; Lindquist, R.; Santos-Lima, T.; Santos, J.P.Q.; Paiva, J.C.; et al. Digital Health Solution for Monitoring and Surveillance of Amyotrophic Lateral Sclerosis in Brazil. Front. Public Health 2023, 11, 1209633. [Google Scholar] [CrossRef]

- Brasil. Projeto de Lei N° 4691, De 2019. Atividade Legislativa. Senado Federal. Brasília, DF. Available online: https://www25.senado.leg.br/web/atividade/materias/-/materia/138326 (accessed on 10 July 2023).

- Brasil. Lei N° 10924, De 10 de Junho de 2021. Diário Oficial do Rio Grande do Norte. Available online: http://diariooficial.rn.gov.br/dei/dorn3/docview.aspx?id_jor=00000001&data=20210611&id_doc=726286 (accessed on 10 July 2023).

| RQ | Description |
|---|---|
| 01 | What strategy is used to establish Human–Computer Interaction based on eye images? |
| 02 | What computational technique is used for processing and classifying eye images (e.g., Computer Vision or Machine Learning)? |
| 03 | What is the performance of the computational techniques explored (evaluated through accuracy, precision, sensitivity, specificity, error)? |
| 04 | What is the hardware support for image acquisition? |
| 05 | What is the profile of the individuals who took part in the experimental tests of the study (healthy controls, ALS, or other diseases)? |
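
The performance measures named in RQ 03 can all be derived from the four cells of a binary confusion matrix. The following minimal Python sketch illustrates the standard definitions. The function name `classification_metrics` and the example counts are hypothetical, and "error" is assumed here to mean the misclassification rate (1 − accuracy); individual studies may report error in other units, such as gaze-estimation error.

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary-classification measures from confusion counts."""
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")

    def ratio(numerator, denominator):
        # Undefined ratios (zero denominator) are reported as NaN.
        return numerator / denominator if denominator else math.nan

    accuracy = (tp + tn) / total
    return {
        "accuracy": accuracy,
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),  # also called recall
        "specificity": ratio(tn, tn + fp),
        "error": 1.0 - accuracy,  # ASSUMPTION: error = misclassification rate
    }


# Illustrative counts only; they are not taken from any reviewed study.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Reporting the underlying counts alongside the derived percentages would allow readers to recompute any measure that a study omits.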

| ID | Inclusion Criteria | Exclusion Criteria |
|---|---|---|
| 01 | Articles published between 2010 and 18 November 2021. | Duplicate articles. |
| 02 | Original and complete research articles published in journals or conferences. | Review articles. |
| 03 | Articles in the areas of technology, engineering, or computer science. | Articles not related to communication strategies through the eyes for Human–Computer Interaction based on generic cameras. |

| QA | Description | Eliminator |
|---|---|---|
| 01 | Is the research object of the study a Human–Computer Interaction approach based on eye images for people with ALS or Motor Neurone disease? | Yes |
| 02 | Does the study describe the approach to image processing? | No |
| 03 | Does the study describe the algorithmic technique’s performance (accuracy, precision, sensitivity, specificity, error)? | No |
| 04 | Does the study describe the hardware used for image acquisition? | No |
| 05 | Does the study perform experiments on control groups (healthy people), people with ALS, or other diseases? | No |
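
The quality assessment criteria above can be combined into a single score per study. The following is a minimal sketch, assuming the score is the mean of the weights assigned to each criterion and that the weights are 1.0 (yes), 0.5 (partially), and 0.0 (no). The weight scale, the reading of the "Eliminator" column as "a study failing QA 01 is discarded", and the names `WEIGHTS`, `ELIMINATORY`, and `quality_score` are illustrative assumptions and are not taken from the article's equations.

```python
from typing import Dict, Optional

# Assumed weight scale (hypothetical, for illustration only).
WEIGHTS = {"yes": 1.0, "partial": 0.5, "no": 0.0}

# Per the table, only QA 01 is marked as an eliminator.
ELIMINATORY = {"QA01"}


def quality_score(answers: Dict[str, str]) -> Optional[float]:
    """Return the mean weight over all criteria.

    Returns None when an eliminatory criterion is answered "no",
    which this sketch interprets as excluding the study.
    """
    for qa in ELIMINATORY:
        if answers.get(qa) == "no":
            return None
    total = sum(WEIGHTS[answer] for answer in answers.values())
    return total / len(answers)


# Hypothetical study: three criteria fully met, two partially met.
example = {
    "QA01": "yes",
    "QA02": "yes",
    "QA03": "partial",
    "QA04": "yes",
    "QA05": "partial",
}
score = quality_score(example)
print(score)
```

Under these assumptions, a study with five criteria can only receive scores in steps of 0.1, which is consistent with the granularity of the Score column reported for the included studies.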

| Study | Year | Score | HCI | Hardware | Subjects (HC/ALS/OD) | Techniques (Keywords) | Acc (%) | Recall (%) | Precision (%) | Error (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Eom et al. [56] | 2019 | 0.8 | Eye-Gaze | Camera | 6/0/0 | Haar-like/binarization/grayscale/NN | A different approach | | | |
| Zhang et al. [57] | 2017 | 0.8 | Eye-Gaze | iPhone and iPad | 12/0/0 | Fast face alignment/GD/TM | 86% | - | - | - |
| Aslam et al. [58] | 2019 | 0.7 | Eye-Gaze | Camera | 3/0/0 | Haar-like/CHT | 100% | - | - | - |
| Abe et al. [59] | 2011 | 0.7 | Eye-Gaze | Camera | 5/0/0 | Limbus Tracking Method | - | - | - | 0.56/1.09 |
| Rahnama-ye-Moqaddam and Vahdat-Nejad [60] | 2015 | 0.6 | Eye-Gaze | Camera | 4/1/0 | Haar cascade/GVM/TM | - | - | - | 5.68% |
| Rozado et al. [61] | 2012 | 0.6 | Eye-Gaze | Camera with IR | 15/0/0 | ITU Gaze Tracker/E-HTM | 98% | - | - | - |
| Yildiz et al. [62] | 2019 | 0.5 | Eye-Gaze | HMC | 1/0/0 | CHT/KNN | - | - | - | 0.98% |
| Nakazawa et al. [63] | 2018 | 0.5 | Eye-Gaze | HMC with IR | 5/0/0 | CHT | 93.32% | - | - | - |
| Rozado et al. [64] | 2012 | 0.5 | Eye-Gaze | HMC with IR | 20/0/0 | HTM/Needleman–Wunsch | 95% | - | - | - |
| Królak and Strumiłło [65] | 2012 | 0.8 | Eye-Blink | Camera | 37/0/12 | Viola–Jones/GD/TM | 95.17% | 96.91% | 98.13% | - |
| Singh and Singh [66] | 2019 | 0.7 | Eye-Blink | Camera with light source | 10/0/0 | Viola–Jones/PMA | 90% | - | - | - |
| Singh and Singh [67] | 2018 | 0.7 | Eye-Blink | Camera with light source | 10/0/0 | Viola–Jones/PMA | 91.2% | - | 94.11% | - |
| Missimer and Betke [68] | 2010 | 0.7 | Eye-Blink | Camera | 20/0/0 | TM/Optical flow algorithm | 96.6% | - | - | - |
| Rupanagudi et al. [69] | 2018 | 0.6 | Eye-Blink | Camera with IR | 50/0/0 | grayscale/SBT/2PVM | A different approach | | | |
| Rakshita [70] | 2018 | 0.5 | Eye-Blink | Camera | 1/0/0 | grayscale/FLD/EAR | A different approach | | | |
| Krapic et al. [71] | 2015 | 0.5 | Eye-Blink | Camera | 12/0/0 | eViacam software | A different approach | | | |
| Park and Park [72] | 2016 | 0.8 | Eye-Tracking | Camera with IR | 4/0/0 | Pupil Center Corneal Reflection | 1–2 | - | - | - |
| Saleh and Tarek [73] | 2021 | 0.7 | Eye-Tracking | HMC with IR | 5/0/0 | grayscale/CHT/GD | A different approach | | | |
| Atasoy et al. [74] | 2016 | 0.7 | Eye-Tracking | Camera | 30/0/0 | Viola–Jones/grayscale/CHT/GD | 90% | - | - | - |
| Aharonson et al. [75] | 2020 | 0.6 | Eye-Tracking | HMC | 4/0/0 | OpenCV/Polynomial/Projection | A different approach | | | |
| Oyabu et al. [76] | 2012 | 0.6 | Eye-Tracking | Camera with IR | 5/0/0 | Binarization/CMUPL | A different approach | | | |
| Kaushik et al. [77] | 2018 | 0.5 | Eye-Tracking | HMC with IR | 1/0/0 | EyeScan software | 95% | - | - | - |
| Kavale et al. [78] | 2018 | 0.5 | Eye-Tracking | Camera with IR | 1/0/0 | Binarization/GD | A different approach | | | |
| Zhao et al. [79] | 2015 | 0.8 | Hybrid | Camera with IR | 7/0/0 | Binarization/GD | 92.69% | - | - | - |
| Xu and Lin [80] | 2017 | 0.7 | Hybrid | Camera with IR | 1/0/0 | FLD/GD | 100% | - | - | - |
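
The Acc, Recall, and Precision columns above are usually derived from confusion-matrix counts. As a reminder of the standard definitions, here is a minimal sketch; the function name `classification_metrics` and the counts in the example are hypothetical and do not come from any of the listed studies. Individual studies may define their reported Error differently (for instance, as a gaze estimation error rather than a misclassification rate), so that column is not modeled here.

```python
from typing import Dict


def _ratio(numerator: int, denominator: int) -> float:
    """Divide safely; return NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary classification metrics from confusion-matrix counts.

    tp/fp/tn/fn are true positives, false positives,
    true negatives, and false negatives.
    """
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "recall": _ratio(tp, tp + fn),        # also called sensitivity
        "precision": _ratio(tp, tp + fp),
        "specificity": _ratio(tn, tn + fp),
    }


# Hypothetical blink-detection run: 100 real blinks and 100 non-blink events.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Reporting all four values together, as Królak and Strumiłło [65] do for three of them, makes results easier to compare than accuracy alone, since accuracy can look high when one class dominates the test data.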

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fernandes, F.; Barbalho, I.; Bispo Júnior, A.; Alves, L.; Nagem, D.; Lins, H.; Arrais Júnior, E.; Coutinho, K.D.; Morais, A.H.F.; Santos, J.P.Q.; et al. Digital Alternative Communication for Individuals with Amyotrophic Lateral Sclerosis: What We Have. J. Clin. Med. 2023, 12, 5235. https://doi.org/10.3390/jcm12165235
