Abstract

Hearing loss is a prevalent health issue that affects individuals worldwide. Binaural hearing refers to the ability to integrate information received simultaneously from both ears, allowing individuals to identify, locate, and separate sound sources. Auditory evoked potentials (AEPs) are the electrical responses generated within any part of the auditory system in response to externally presented auditory stimuli. Electroencephalography (EEG) is a non-invasive technology used for the monitoring of AEPs. This research aims to investigate the use of audiometric EEGs as an objective method to detect specific features of binaural hearing with frequency and time domain analysis techniques. Thirty-five subjects with normal hearing and a mean age of 27.35 participated in the research. The stimuli used in the current study were designed to investigate the impact of binaural phase shifts of the auditory stimuli in the presence of noise. The study utilized Blackman-windowed 18 ms and 48 ms pure tones as stimuli, embedded in noise maskers, at frequencies of 125 Hz, 250 Hz, 500 Hz, 750 Hz and 1000 Hz in homophasic (the same phase in both ears) and antiphasic (180-degree phase difference between the two ears) conditions. The study focuses on the effect of phase reversal of auditory stimuli in noise on the middle latency response (MLR) and late latency response (LLR) regions of the AEPs. Both the frequency domain and the time domain analyses yielded statistically significant findings. The frequency domain analysis revealed a significant difference between responses to antiphasic and homophasic stimuli in the frequency bands of 20 to 25 Hz and 25 to 30 Hz, for 500 Hz stimuli in the MLRs and for 500 Hz and 250 Hz stimuli in the LLRs. The time domain analysis identified the Na peak of the MLR for 500 Hz stimuli, the N1 peak of the LLR for 500 Hz stimuli and the P300 peak of the LLR for 250 Hz stimuli as potential markers for detecting binaural processing in the brain.

1. Background

Hearing loss is a prevalent health issue that affects individuals from various cultures, races, and age groups. The negative impact on a person’s quality of life can be considerable, particularly if the condition goes undiagnosed [1]. For children, undetected hearing loss can impede development, and early identification and intervention are critical for them to acquire essential skills at a similar pace to their peers. Thus, it is imperative to detect any form of hearing loss as early as possible [2]. Research has revealed that hearing loss can range from mild to profound, and that a broad spectrum of contributing factors exists [3,4,5,6].

Hearing loss can be categorized as either sensorineural or conductive. Sensorineural hearing loss affects the inner ear and nervous system, while conductive hearing loss can be caused by malformations or diseases of the outer ear, the ear canal, or the middle ear structure. Both types can interfere with cognitive development by disrupting auditory processing and the development of binaural hearing [7,8]. Binaural hearing is essential for sound localization, sound segregation, and understanding speech in noisy environments. Any dysfunction in one or both ears can disrupt the mechanism of binaural processing, resulting in difficulties in sound perception [9].

Binaural hearing is the ability to integrate information received simultaneously from both ears, enabling individuals to identify, locate, and separate sound sources. This ability is crucial for understanding speech in noisy environments, a phenomenon known as the “cocktail party effect” [10]. Any dysfunction in one or both ears, including unilateral hearing loss (UHL) and bilateral hearing loss (BHL), can disrupt the binaural processing mechanisms [11]. Previous research has shown that untreated binaural hearing impairment can significantly impede a child’s development, including verbal cognition skills [12]. Binaural hearing and other aspects of auditory functioning develop before adolescence. Adults can also suffer from binaural hearing impediments, which can affect their quality of life and limit further cognitive development. As age increases, neural synchrony in the central auditory system deteriorates, making it harder for older people to perceive the temporal cues of sound [13,14].

Auditory processing begins in the brainstem and mesencephalon, where responses such as auditory reflexes are coordinated, followed by processing in the auditory cortex of the temporal lobe [15]. Binaural hearing processing in the auditory cortex contributes to sound localization, differentiation, and delineation of important auditory sources and noise. Binaural hearing and other aspects of auditory functioning mature throughout adolescence and require auditory stimuli for full development. Without this input, auditory functioning, and therefore cognitive development, is at risk [16].

Testing for hearing impediments is diverse and well documented. While psychometric methods are commonly used, measures such as electroencephalograms (EEGs) have proven their ability to detect hearing disorders in infants [17]. The EEG method eliminates reliance on participant literacy and communication skills and is relatively easy to perform [18], making it highly suitable for young children [19]. Although collecting an EEG can be more challenging than a traditional hearing assessment, using the correct stimulus parameters, recording standards, and processing techniques can make this method suitable and feasible for binaural hearing assessment [20,21].

Auditory evoked potentials (AEPs) are the electrical potentials from any part of the auditory system, from the cochlea to the cerebral cortex, that are evoked by externally presented auditory stimuli. AEPs can be used to assess neurological integrity and auditory function [22], and they are an essential tool for diagnosing hearing disorders [23,24]. AEPs are non-invasive and can provide valuable information about the function and integrity of the auditory system [24]. AEPs are categorized based on the time interval between the onset of the auditory stimulus and the peak of the evoked response [25]. The major AEP components are the auditory brainstem response (ABR), the middle latency response (MLR), and the late latency response (LLR) [26].

The ABR is the earliest AEP component, occurring within the first 10 ms after the presentation of an auditory stimulus. It reflects the activity of the auditory nerve and the brainstem and is commonly used to assess hearing sensitivity and diagnose hearing disorders [27]. The MLR is a later AEP component, occurring between 10 and 80 ms after the presentation of an auditory stimulus [28,29]. It reflects the activity of the auditory cortex and is thought to be involved in the processing of complex auditory stimuli, including speech. A recent study [30] investigated the use of the MLR to evaluate auditory processing in children with autism spectrum disorders (ASD). They found that the MLR was reduced in children with ASD compared to typically developing children, suggesting that the MLR can be a useful tool for identifying auditory processing deficits in individuals with ASD. The LLR is the latest AEP component, occurring between 50 and 500 ms after the presentation of an auditory stimulus. It reflects the activity of higher-order auditory processing areas, including the temporal and frontal lobes, and is thought to be involved in cognitive and attentional processing of auditory stimuli. In recent years the use of LLRs to investigate various hearing disorders has been explored [31,32]. AEPs have also been used to investigate the effects of different types of noise on auditory processing and to evaluate the neural mechanisms underlying sensory integration. Overall, AEPs are a valuable tool for evaluating auditory function and diagnosing hearing disorders [33].

The ABR, MLR, and LLR are the major AEP components, each reflecting different parts of the auditory pathway’s electrical activity. AEPs have the potential to provide important information for the management and treatment of hearing disorders, as well as to advance our understanding of the neural mechanisms underlying auditory perception and cognition [34,35,36]. Further research is needed to fully understand the neural mechanisms underlying AEPs and their clinical and scientific implications.

The use of auditory evoked potentials (AEPs) in investigating binaural hearing has led to the identification of the MLR and LLR as the two main components of interest. Research related to the MLR has primarily focused on its sensitivity to binaural processing. Studies have shown that the MLR is modulated by differences in interaural time and intensity cues, which are important for sound localization and binaural fusion [29]. Furthermore, the MLR has been shown to be associated with the perceptual grouping of sounds, such as the segregation of speech from background noise [37,38]. However, the literature on the MLR in binaural hearing is limited, and more research is required to understand its role. The LLR has also been studied in the context of binaural hearing [39]. Studies have shown that the LLR is modulated by binaural cues, including interaural time and intensity differences, and is sensitive to the spatial location of sounds [40]. Its sensitivity to changes in binaural cues over time suggests a potential role in auditory scene analysis [41]. However, the literature on the LLR in binaural hearing is also limited, and further research is needed to fully understand its contribution to binaural processing.

The literature on the EEG and AEPs is diverse, but there is a lack of relevant literature regarding binaural hearing assessment and testing stimuli for AEP responses, indicating an avenue for future studies. A review of the literature reveals a gap in knowledge regarding the use of the EEG for binaural assessment. Specifically, research pertaining to the Auditory MLR and LLR indicates a need for further investigation and contribution to the existing body of knowledge. This study aims to fill this gap by exploring the potential of AEPs for binaural hearing assessment through MLR and LLR analysis.

ERP signals from the brain can be analysed in different ways, including time domain analysis, which examines the AEPs directly, and frequency domain analysis, which uses methods such as the FFT (Fast Fourier Transform) or Welch’s periodogram (Pwelch) [42,43,44]. The Pwelch method, a spectral decomposition technique, calculates the Power Spectral Density (PSD) of EEG data, providing valuable information about the spectral content and power across different frequency bands. It reduces the variance of the estimate and allows accurate PSD estimation, making it useful for ERP data with low signal-to-noise ratios [45]. The Pwelch method can handle signals with non-uniform sampling rates and offers insights into the underlying neural mechanisms of cognitive and sensory processing [44]. AEPs can be analysed in the time domain through averaging techniques, allowing for the examination of peak amplitudes, latencies, and interpeak latency differences under various conditions [46,47]. Overall, time domain AEP analysis and frequency domain analysis with the Pwelch method are complementary tools for understanding brain function and neurological disorders.

This study aims to investigate the use of audiometric EEGs as an objective method to detect binaural hearing. Limited research on the MLR and LLR with binaural hearing stimuli creates an opportunity for novel contributions to the existing knowledge. For this study, the BMLD (Binaural Masking Level Difference) test, which has been recommended for measuring binaural hearing loss, has been taken as a starting point. The stimuli used in the BMLD trials are employed in the current Audiometric EEG study to investigate the impact of binaural phase shifts of the auditory stimuli in the presence of noise.

2. Materials and Methods

This study was conducted at Charles Darwin University in Australia, and the experimental protocols were approved by the Human Ethics Committee of the University under H18014 (Detecting Binaural Processing in the Audiometric EEG). Prior to the experiment, written consent was obtained from all volunteers indicating their willingness to participate in the various hearing tests, including EEG measurements. A plain language statement (PLS) was provided that outlined the details of the study and gave an overview of the experimental process. A questionnaire was completed by each subject to ensure a healthy otological history. The experimental setup and hardware were the same as described in [20].

2.1. Participants

The sample for the present study included thirty-five participants (23 males and 12 females) between the ages of 18 and 33 years (mean age 27.36). Pure-tone audiometry was conducted initially to measure the hearing threshold levels of the participants at different frequencies, in accordance with the relevant Australian Standards, and to determine whether they were acceptable [20]. All thirty-five selected subjects had normal audiograms, with a hearing threshold between 0 and 20 dB hearing level (HL) in both ears for the frequency range of 125 Hz to 8 kHz [20].

Participants were also required to read and understand the PLS. Additionally, they were asked to complete a questionnaire to confirm that they had no noticeable otological issues in the past or present. Participants were excluded if they were under 18, had severe hearing loss conditions or cognitive impairment, had a cochlear implant or other implantable hearing device, or had severe or debilitating tinnitus. Pregnant women were also excluded due to potential risk to the foetus. The inclusion and exclusion criteria were carefully considered to ensure that the trial results were valid and could be applied to the target population. The subjects were prepared for the experimental process as explained in the preliminary study [20].

2.2. Auditory Stimuli

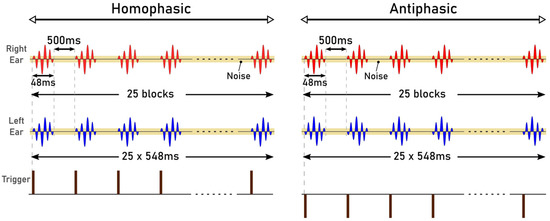

The stimuli used in the study were Blackman-windowed pure tones of different frequencies: 125 Hz, 250 Hz, 500 Hz, 750 Hz and 1000 Hz. Blackman windowing was applied to ensure a smooth acoustic transition at the start and the end of the stimulus and thus to reduce spectral splatter of the signal. The tones were embedded in a 10 Hz bandwidth Gaussian noise masker that was present throughout. The centre frequency of the masker and the frequency of the tone were the same for these trials. The stimuli were generated in MATLAB R2017b and were 518 ms and 548 ms in duration, containing a tone signal of 18 ms and 48 ms, respectively. The tone durations were chosen to correspond to the duration of signals that can be used for the generation of AEPs: 18 ms for the Middle Latency Response (MLR) and 48 ms for the Late Latency Response (LLR). The level of the masker was set at 20 dB, whereas the tone was at 40 dB. The sampling frequency was set to 19.2 kHz. The generated stimuli of 18 ms and 48 ms, for the MLR and LLR respectively, are illustrated in Figure 1 and Figure 2. The stimuli were presented in a predefined sequence: blocks of 25 homophasic stimuli followed by 25 antiphasic stimuli. A total of 1000 trials were carried out per subject, resulting in 500 antiphasic and 500 homophasic ERPs for each subject. The total time for 1000 trials was 8.633 min and 9.133 min for the 18 ms and 48 ms stimuli, respectively. On average, healthy adults have an attention span in the range of 10 to 20 min, so a short trial reduces the risk of hearing fatigue and adaptation during the experimental trial [48]. Subjects were asked to relax before and between the experiments in order to ensure quality data acquisition. The stimuli were delivered to the ER.2 insert earphones via an external sound card (Creative Sound Blaster Omni Surround 5.1) at a 60 dB sound pressure level. The pure tone stimuli consisted of a sinusoidal signal, S0, and its phase-inverted counterpart, Sπ [20].

Figure 1.

Auditory Stimuli of 18 ms.

Figure 2.

Auditory Stimuli of 48 ms.
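The tone construction and the session timing described in this section can be illustrated with a minimal sketch. It is not the authors' MATLAB code: the function names are hypothetical, the noise masker and level calibration are omitted, and the antiphasic condition is modelled simply as a sign inversion of the tone in one ear.

```python
import math

FS = 19200  # sampling frequency in Hz, as stated in the text


def blackman(n_samples):
    """Symmetric Blackman window with n_samples points."""
    if n_samples == 1:
        return [1.0]
    m = n_samples - 1
    return [0.42 - 0.5 * math.cos(2 * math.pi * n / m)
            + 0.08 * math.cos(4 * math.pi * n / m)
            for n in range(n_samples)]


def windowed_tone(freq_hz, duration_s, fs=FS):
    """Pure tone of the given frequency, shaped by a Blackman window."""
    n_samples = round(duration_s * fs)
    win = blackman(n_samples)
    return [win[n] * math.sin(2 * math.pi * freq_hz * n / fs)
            for n in range(n_samples)]


def binaural_pair(freq_hz, duration_s, antiphasic, fs=FS):
    """Return (left, right) tone signals without the noise masker.

    Homophasic: both ears receive the same tone.
    Antiphasic: the right-ear tone is inverted (180-degree phase shift).
    """
    left = windowed_tone(freq_hz, duration_s, fs)
    right = [-s for s in left] if antiphasic else list(left)
    return left, right


def session_minutes(trial_s, n_trials=1000):
    """Total presentation time in minutes for n_trials back-to-back trials."""
    return trial_s * n_trials / 60
```

Under these assumptions, 1000 trials of 0.518 s and 0.548 s give about 8.633 min and 9.133 min, which agrees with the session durations reported above.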

2.3. Electrode Placement

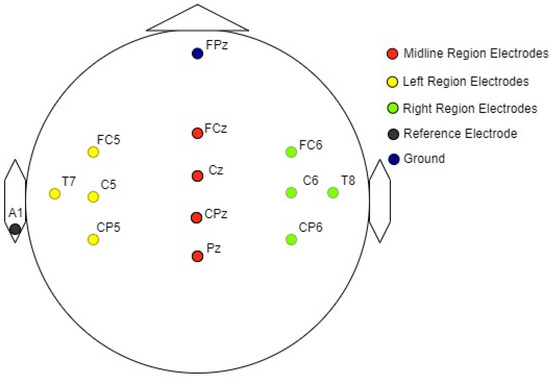

Electrical activity in response to auditory stimuli was recorded from 12 electrode sites on the scalp. The arrangement of specific electrode sites used for the recording of auditory evoked potentials (AEP) is shown in Figure 3 [49,50]. The reference electrode was positioned on the left earlobe, while the ground electrode was placed in the lower position on the forehead (FPz), following the 10–20 electrode placement system [51,52].

Figure 3.

Graphical representation of the electrode arrangement for EEG measurements.

3. Results and Analysis

3.1. Signal Processing and Analysis

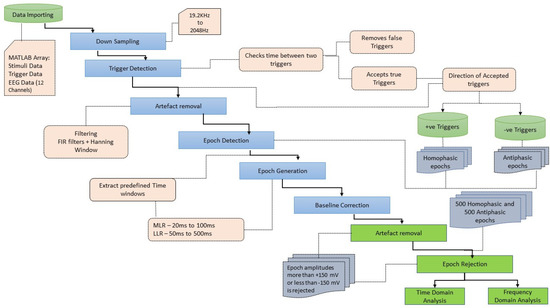

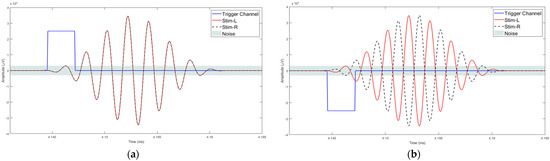

After the data acquisition process was completed, data processing and analysis were carried out offline. Figure 4 illustrates the workflow followed for data processing and analysis. The data were imported as an array into MATLAB. This array contained the data of the captured EEG channels, the trigger channels and the stimulus channels. Further processing of the signals was carried out in MATLAB R2017b using the EEGLAB v2019.1 toolbox. The sampling rate for EEG data acquisition was 19.2 kHz, giving a Nyquist frequency of 9.6 kHz, which is much higher than required for analysing the frequency ranges in human AEPs. In the pre-processing stage, the EEG data were therefore downsampled to 2048 Hz [53]. Downsampling makes the subsequent filtering computationally more efficient and improves the roll-off of the filters. The next step in data pre-processing was to extract the accurate trigger times by removing false triggers and checking whether 1000 triggers were found in total. The triggers were validated by checking the time between two consecutive triggers (0.518 s or 0.548 s). If a shorter time delay was found, the corresponding trigger was rejected. The trigger channel data captured by the amplifier were used to synchronize the averaging process. The 18 ms input stimuli, masked in noise in both the homophasic and the antiphasic conditions, were used to evoke the Middle Latency Response (MLR), and the 48 ms input stimuli, masked in noise in both conditions, were used to evoke the Late Latency Response (LLR). As shown in Figure 5, the actual triggers were detected by analysing the right ear stimuli (Stim-R) and left ear stimuli (Stim-L) together with the trigger timing. Figure 5a,b shows that the triggers at the start of each stimulus were detected accurately.

Once the triggers were detected, artefacts and noise were removed from the EEG signals to obtain clear evoked responses for further processing and analysis [54]. The downsampled data were filtered using an FIR filter with a Hamming window. A lower cut-off frequency of 1 Hz was applied to remove slow drift and DC components from the signal. The next stage of data processing was epoch generation. The start of each epoch was determined using the trigger signal, which was delivered synchronously with the stimulus [20]. The duration of the epoch was based on the duration of the signal and the interval between the signals. Using the trigger signal, the responses to homophasic and antiphasic stimuli were identified, and the recording was split into epochs (pre-defined short time segments) suitable for analysis [55]. In the present study, the time windows of interest are the MLR, which ranges from 20 ms to 100 ms, and the LLR, which ranges from 50 ms to 500 ms after the start of the input stimuli. Epochs with amplitudes larger than 150 µV were rejected. Baseline correction was conducted for the remaining trials [56].
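The epoching, amplitude-based rejection, baseline correction and averaging steps can be sketched as follows. This is an illustrative outline rather than the EEGLAB pipeline used in the study. All function names are hypothetical, the baseline is assumed to be the mean of the first few samples of each epoch (the text does not specify the baseline interval), and the rejection threshold is expressed in whatever unit the data use.

```python
def extract_epochs(signal, trigger_samples, start, stop):
    """Cut signal[t + start : t + stop] for every trigger sample index t.

    Epochs that would run past either end of the recording are skipped.
    """
    epochs = []
    for t in trigger_samples:
        lo, hi = t + start, t + stop
        if lo >= 0 and hi <= len(signal):
            epochs.append(signal[lo:hi])
    return epochs


def reject_large(epochs, threshold):
    """Keep only epochs whose absolute amplitude never exceeds threshold."""
    return [e for e in epochs if max(abs(v) for v in e) <= threshold]


def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline samples (assumed baseline)."""
    mean = sum(epoch[:n_baseline]) / n_baseline
    return [v - mean for v in epoch]


def average_epochs(epochs):
    """Sample-by-sample mean across epochs of equal length."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]
```

The order follows the text: epochs are cut, large-amplitude epochs are rejected, the remaining epochs are baseline corrected, and the average is then formed separately for each electrode and condition.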

Figure 4.

Workflow of data processing and analysis.

Figure 5.

Trigger detection process: (a) Homophasic signal; (b) Antiphasic.
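The trigger validation rule (reject a trigger that follows the previous one too closely) and the block-wise assignment of conditions can be sketched as below. The function names and the timing tolerance are hypothetical, and the sketch assumes that the sequence starts with a homophasic block.

```python
def clean_triggers(trigger_times, expected_interval, tolerance=0.005):
    """Drop triggers that arrive too soon after the last accepted trigger.

    trigger_times     -- detected trigger times in seconds
    expected_interval -- nominal trial length in seconds (0.518 or 0.548)
    tolerance         -- allowed timing jitter in seconds (assumed value)
    """
    accepted = []
    for t in sorted(trigger_times):
        if not accepted or t - accepted[-1] >= expected_interval - tolerance:
            accepted.append(t)
    return accepted


def condition_for_trial(index, block_size=25):
    """Condition label for a zero-based trial index.

    Blocks of block_size homophasic trials alternate with blocks of
    block_size antiphasic trials, starting with homophasic (assumption).
    """
    return "homophasic" if (index // block_size) % 2 == 0 else "antiphasic"
```

With 1000 accepted triggers, this labelling yields 500 homophasic and 500 antiphasic trials, which matches the counts given in the stimulus description.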

The remaining epochs were analysed in the time domain and in the frequency domain. As an initial step for the time domain analysis, epoch averaging was carried out separately for each of the twelve electrode locations. The averaged AEP signals from the accepted trials were then further analysed in the time domain. The frequency domain analysis, in contrast, was first carried out on the individual epochs, and the resulting spectra were averaged at a later stage.

3.2. Frequency Domain Analysis

Frequency domain analysis is a widely used method for EEG analysis, and it is often conducted first because it is the most common way to understand the EEG data as a whole [57,58]. It is regarded as the most powerful and standard method for EEG analysis compared to other methods [57]. It gives insight into the information contained in the frequency content of EEG waveforms by adopting statistical and Fourier transform methods [57]. Power spectral analysis is the most commonly used spectral method, since the power spectrum reflects the ‘frequency content’ of the signal, that is, the distribution of signal power over frequency [57]. Frequency-domain feature analysis examines the spectrum of an EEG segment of a given length in order to obtain this distribution [59]. Hence, frequency domain analysis was used here to analyse the EEG responses to auditory stimuli. By transforming time-domain signals into the frequency domain, it may be possible to extract information about the underlying neural processes that generate the signals in response to auditory stimuli [44,60]. In EEG signal analysis, frequency domain analysis typically involves Fourier transform techniques such as the Fast Fourier Transform (FFT) or modified methods such as Welch’s periodogram (Pwelch) to estimate the spectral components of recorded EEG signals [45]. These techniques are based on the mathematical principles of Fourier transforms and statistical analysis [42].

The Pwelch method is a common method of spectral decomposition of EEG signals used in ERP data analysis. The PSD calculated by the Pwelch method is computed by dividing the time signal into successive blocks, forming the periodogram for each block, and then averaging the periodogram results.

The epoched AEP data were then transformed into power spectral density (PSD) using Welch’s periodogram method with a Hanning window. The Welch periodogram is calculated using Equation (1),

where w(n) is the Hanning window function, defined as:

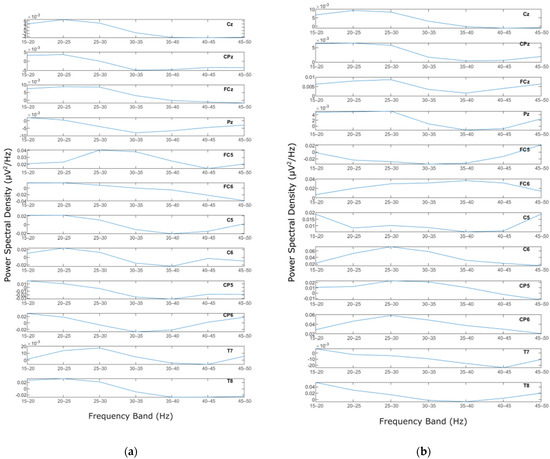
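For reference, a commonly used textbook form of Welch's estimate and of the Hanning window is sketched below. The symbols x_k (the k-th data block), L (block length), K (number of blocks), U (window power normalization) and f (frequency in cycles per sample) are assumptions introduced here, and the notation may differ from that of Equation (1) in the original.

```latex
% Modified periodogram of the k-th block x_k(n), n = 0, ..., L-1
\hat{P}_k(f) = \frac{1}{L\,U}\left|\sum_{n=0}^{L-1} w(n)\,x_k(n)\,e^{-j 2\pi f n}\right|^{2},
\qquad U = \frac{1}{L}\sum_{n=0}^{L-1} w^{2}(n)

% Welch estimate: average of the K block periodograms
\hat{P}_{\mathrm{Welch}}(f) = \frac{1}{K}\sum_{k=0}^{K-1}\hat{P}_k(f)

% Hanning window
w(n) = 0.5\left(1-\cos\!\left(\frac{2\pi n}{L-1}\right)\right), \qquad 0 \le n \le L-1
```

This matches the verbal description given earlier in this section: the signal is divided into successive blocks, a windowed periodogram is formed for each block, and the periodograms are averaged.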

Welch’s method was applied to the MLR and LLR epochs of the EEG signals for each electrode. In other words, Welch’s calculation was conducted on all the extracted MLR and LLR epochs for each channel and each subject. Welch’s method generated a PSD value at every calculated frequency for each epoch. The PSDs from all trials were then averaged. Once the averaged PSD of every subject was calculated, further analysis was conducted on more specific frequency bands. In the current study, the frequency range of interest was 15–50 Hz [20,50]. The PSDs of every channel in all subjects were therefore trimmed to 15–50 Hz. For further analysis, the trimmed range was separated into 5 Hz bands, resulting in 7 bands: 15–20 Hz, 20–25 Hz, 25–30 Hz, 30–35 Hz, 35–40 Hz, 40–45 Hz, and 45–50 Hz. The PSD within every band was then averaged, with the upper boundary of the band excluded, i.e., for 15–20 Hz, the averaged PSD data do not include 20 Hz (15 ≤ f < 20). The result is seven mean PSD values for every channel and every subject, for two input stimulus conditions (homophasic and antiphasic) at five different frequencies. Figure 6a,b shows the mean PSD values for every EEG channel of one representative subject in the homophasic and antiphasic conditions, respectively.

Figure 6.

PSD for a representative subject: (a) Homophase; (b) Antiphase.
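The band averaging with half-open intervals can be sketched as follows. The function name and the input layout (parallel lists of frequencies and PSD values) are assumptions made for illustration.

```python
BAND_EDGES = list(range(15, 55, 5))  # 15, 20, ..., 50 Hz -> seven 5 Hz bands


def band_means(freqs, psd, edges=BAND_EDGES):
    """Mean PSD per band, using half-open bands lo <= f < hi.

    freqs -- frequency of each PSD bin in Hz
    psd   -- PSD value of each bin (same length as freqs)
    Returns a dict mapping (lo, hi) to the mean PSD in that band.
    """
    means = {}
    for lo, hi in zip(edges[:-1], edges[1:]):
        values = [p for f, p in zip(freqs, psd) if lo <= f < hi]
        means[(lo, hi)] = sum(values) / len(values) if values else float("nan")
    return means
```

Because the upper edge of each band is excluded, a bin at exactly 20 Hz is counted in the 20–25 Hz band only, and a bin at exactly 50 Hz falls outside the last band.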

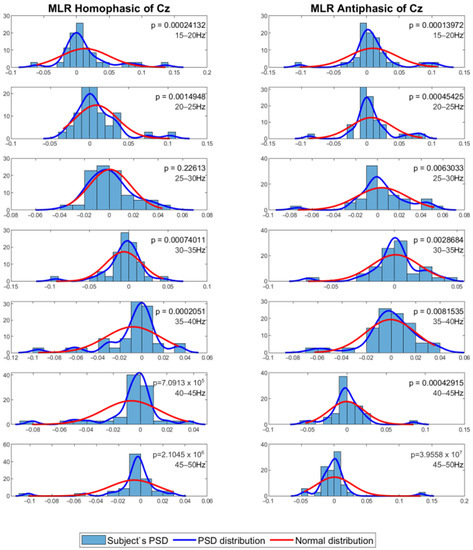

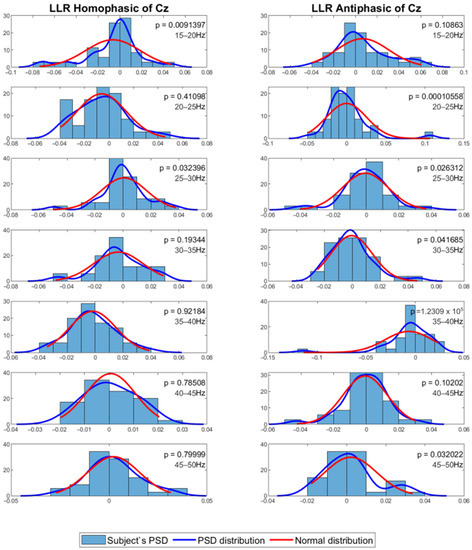

The mean PSD values were first tested for normality. For this process, the Shapiro–Wilk test with alpha = 0.05 was employed to evaluate the normality of the distribution among subjects for the same channel and frequency band. The results show that the data in general have p-values less than 0.05, which means that the distribution of PSD values among the subjects for each channel and frequency band is not normal. Figure 7 and Figure 8 show the MLR and LLR plots in response to the 500 Hz stimulus, for seven different bands for the Cz channel. Since parametric tests require normally distributed data, a non-parametric test was chosen for further evaluation. The Wilcoxon signed-rank test was chosen to evaluate the significance of the differences between responses to the antiphasic and homophasic stimulus conditions among subjects for the same channel and frequency band. The Wilcoxon signed-rank test uses two matched samples and is based on the ranks of the absolute differences between the paired values. It is known to be more robust for a small sample size, usually under 50 samples. The test uses an alpha value as the criterion to accept or reject the null hypothesis that there is no significant difference between the homophasic and the antiphasic conditions. The most common alpha value for the Wilcoxon test is 0.05, which is also used in this study. If the p-value is less than 0.05, the null hypothesis is rejected, which means that there is a significant difference between the homophasic and the antiphasic conditions. If the p-value is greater than 0.05, the null hypothesis cannot be rejected, which means that no significant difference between the two conditions was detected. Tables 1–10 show the Wilcoxon signed-rank significance test results for the MLR and LLR data for five different frequencies for all twelve channels.
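To make the paired comparison concrete, a minimal sketch of a two-sided Wilcoxon signed-rank test is given below. It is an assumption-laden illustration, not the implementation used in the study: it drops zero differences, assigns average ranks to ties, and computes the p-value from a normal approximation without continuity or tie correction. The function name is hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples x and y.

    Returns (w, p): w is the smaller of the positive and negative rank
    sums, p is a normal-approximation p-value (no tie or continuity
    correction). Pairs with zero difference are dropped.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, p
```

In this setting, x and y would hold the mean PSD values of all subjects in one channel and frequency band for the antiphasic and homophasic conditions, and the null hypothesis would be rejected when p is below 0.05.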

Figure 7.

Normal distribution for seven different frequency bands for 500 Hz MLR Cz channel.

Figure 8.

Normal distribution for seven different frequency bands for 500 Hz LLR Cz channel.

Table 1.

Wilcoxon signed-rank significance results for the MLR with 125 Hz stimuli.

Table 2.

Wilcoxon signed-rank significance results for the MLR with 250 Hz stimuli.

Table 3.

Wilcoxon signed-rank significance results for the MLR with 500 Hz stimuli.

Table 4.

Wilcoxon signed-rank significance results for the MLR with 750 Hz stimuli.

Table 5.

Wilcoxon signed-rank significance results for the MLR with 1000 Hz stimuli.

Table 6.

Wilcoxon signed-rank significance results for the LLR with 125 Hz stimuli.

Table 7.

Wilcoxon signed-rank significance results for the LLR with 250 Hz stimuli.

Table 8.

Wilcoxon signed-rank significance results for the LLR with 500 Hz stimuli.

Table 9.

Wilcoxon signed-rank significance results for the LLR with 750 Hz stimuli.

Table 10.

Wilcoxon signed-rank significance results for the LLR with 1000 Hz stimuli.

The results of the statistical analysis in Tables 1–10 indicate that there is a significant difference between the antiphasic and homophasic PSD data among subjects for the midline channels of the MLR for 500 Hz stimuli. The difference is significant for the 20 Hz to 25 Hz and 25 Hz to 30 Hz frequency bands. The LLR shows significant differences between the homophasic and antiphasic conditions in various channels for the 500 Hz stimuli. In addition, for the 250 Hz stimuli, the midline and left channels recorded a noticeable difference between the two conditions. Most of the significant differences are in the 20 Hz to 25 Hz and 25 Hz to 30 Hz frequency bands.

The highlighted cells indicate that the p-values are less than alpha, which means that statistically significant differences exist in the PSD bands between the two conditions. Frequency band analysis is a useful tool for identifying the frequency range where the response to the binaural cue of phase reversal is most pronounced. The significant differences observed between the antiphasic and homophasic conditions support the notion that the interaural phase difference (IPD) is important for localizing sound sources. These findings suggest that the brain is particularly sensitive to IPD cues in certain frequency bands, which are used to determine the position of a sound source.

3.3. Time Domain Analysis

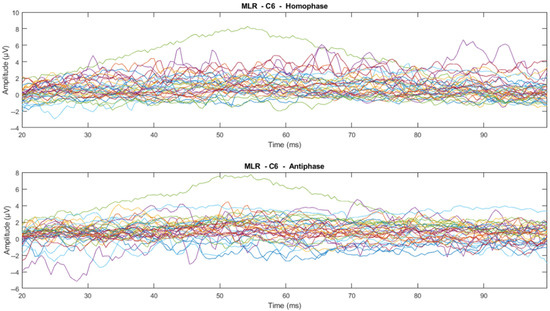

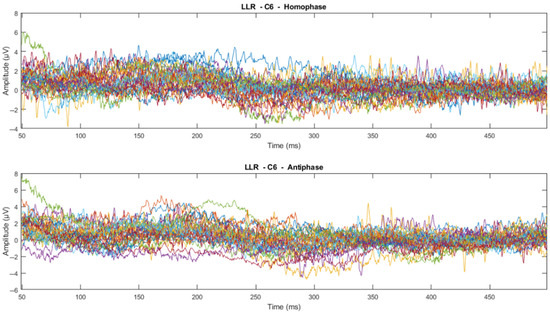

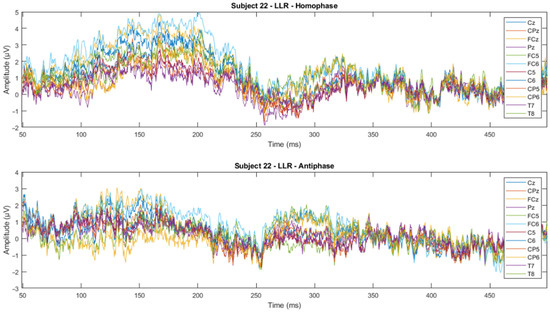

The time domain analysis aims to: (1) determine the effects of phase-shifted pure tone stimuli of different frequencies, masked in noise, on the MLRs and LLRs of normal hearing subjects; (2) compare the effects on ERP components with previously reported effects; and (3) determine which ERP components of the MLR and LLR are dominant and differ between the two conditions. The ERP data were processed using time-series analysis. In the first step, the epochs accepted after epoch rejection in preprocessing were averaged. Next, a visual inspection was conducted to see whether there was a trend or pattern in the signal. This visual inspection was conducted by channel-wise and subject-wise plotting of the MLRs and LLRs (see Figures 9–12). For the channel-wise plotting, data for every electrode from all subjects were gathered and grouped by electrode. Each electrode group was then plotted and analysed visually. For the subject-wise plotting, all electrodes for the same subject were plotted in the same figure and analysed visually.

Figure 9.

Channel-wise 500 Hz MLR ERP wave for all subjects at C6 electrode (Each line represents each subject).

Figure 10.

Channel-wise 500 Hz LLR ERP wave for all subjects at C6 electrode (Each line represents each subject).

Figure 11.

Subject-wise 500 Hz MLR ERP wave for all channels of Subject 22.

Figure 12.

Subject-wise 500 Hz LLR ERP wave for all channels of Subject 22.

From visual inspection, it was found that the ERPs look similar among channels for each subject, as shown in Figure 11 and Figure 12. In contrast, the ERPs differ considerably between subjects when they are plotted for the same channel, as shown in Figure 9 and Figure 10. This suggests that there are subject-specific differences in the ERPs [63,64], which is consistent with previous studies that have reported individual differences in topography, latency, and morphology of ERP components [61,62]. For instance, one study [62] analysed ERP traces from 238 scalp channels averaged over 500 EEG epochs in a single subject and found that the ERPs were subject specific.

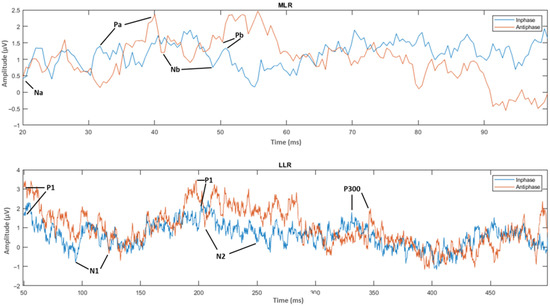

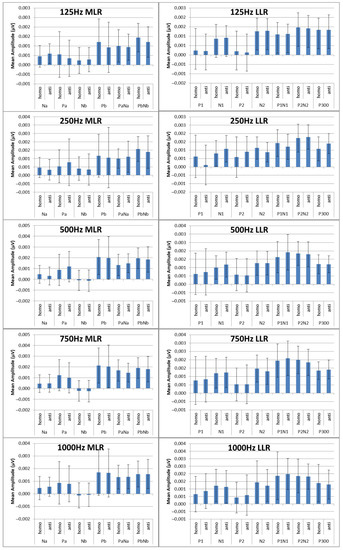

The next step was the extraction of peaks and peak-to-peak values of the MLR and LLR from every channel and subject. For the MLR, the ERP components to be extracted are Na, Pa, Nb, and Pb, while for the LLR, the ERP components are N1, P1, N2, P2 and P300. A representation of the peak components in the MLR and LLR waves is shown in Figure 13. The results were categorized into 10 MLR variables and 11 LLR variables for homophasic and antiphasic data as shown in Table 11. The mean and standard deviation values of all extracted MLR and LLR peaks are shown in Figure 14. Differences between the homophasic and antiphasic results were not immediately apparent. Nonetheless, some insight can be drawn from the results depicted in Figure 14.
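Peak and peak-to-peak extraction of this kind can be sketched as a search for the most negative or most positive sample inside a latency window. The sketch below is hypothetical: the function names are introduced here, the ERP is assumed to start at stimulus onset, and the latency windows for each component must be supplied by the caller because they are not specified in the text.

```python
def peak_in_window(erp, fs, start_ms, stop_ms, polarity):
    """Find the extreme sample of an averaged ERP in [start_ms, stop_ms).

    erp      -- averaged response, with erp[0] at stimulus onset (assumption)
    fs       -- sampling rate in Hz
    polarity -- "neg" for a negative peak (e.g. Na, N1), "pos" for a
                positive peak (e.g. Pa, P300)
    Returns (latency_ms, amplitude).
    """
    lo = round(start_ms * fs / 1000)
    hi = round(stop_ms * fs / 1000)
    segment = erp[lo:hi]
    if not segment:
        raise ValueError("empty latency window")
    pick = min if polarity == "neg" else max
    idx = pick(range(len(segment)), key=segment.__getitem__)
    return (lo + idx) * 1000 / fs, segment[idx]


def peak_to_peak(first_peak, second_peak):
    """Absolute amplitude difference between two (latency, amplitude) peaks."""
    return abs(second_peak[1] - first_peak[1])
```

Applied to each channel and subject, such a routine would yield one amplitude per component and condition, from which the homophasic and antiphasic values can be compared.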

Figure 13.

ERP wave peak components of homophase and antiphase for MLR and LLR.

Table 11.

Variable categories extracted from the ERPs.

Figure 14.

Mean peak amplitudes (in µV) with standard deviations for the ERP components for MLR.

Firstly, the Na peak of the MLR for the 500 Hz stimulus shows the largest difference in mean values between the antiphasic and homophasic conditions, with a low standard deviation. This suggests that the Na peak of the MLR for the 500 Hz stimulus may be sensitive to phase differences in the stimuli. Secondly, for the LLR, the largest average differences between the homophasic and the antiphasic conditions are found for the N1, N2, and P300 peaks for the 250 Hz stimuli and for the N1 peak for the 500 Hz stimuli, which indicates that these peaks may be more sensitive to phase differences. These findings suggest that the differences in mean ERP peak values between the antiphasic and homophasic conditions vary with stimulus frequency and may depend on the type of AEP. Previous studies on auditory middle latency responses (MLRs) have shown that the amplitudes of most MLR peaks increase and their latencies decrease with increasing stimulus intensity [65]. To evaluate whether the differences in ERP peak amplitudes are significant for stimuli of different frequencies, a statistical test is required [61].

The Shapiro–Wilk test was conducted to examine the normality of the results. Since the visual inspection showed similarity across channels for the same subject, the data were grouped subject-wise for the tests, i.e., it was checked whether the distribution across channels was normal for each subject. The results show that all peak categories are normally distributed for almost all subjects. Since the peak categories follow a normal distribution, they may be averaged across subjects for each category separately. The peaks were first averaged across channels for each subject, and these per-subject averages were converted into absolute values before the peak values of all subjects were averaged in each category. The use of absolute values when analysing peak amplitudes is consistent with the literature because it allows peak amplitudes to be compared across different conditions and subjects [66]. In the averaged results, the peaks of the MLR and LLR varied with stimulus frequency.
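The subject-wise normality check and the subsequent averaging can be sketched with `scipy.stats.shapiro`. The example below is a hedged illustration on synthetic data: the number of channels (64), the significance level (0.05), and the function names are assumptions, and only the number of subjects (35) is taken from the study.

```python
import numpy as np
from scipy import stats

def normal_across_channels(peaks, alpha=0.05):
    """Shapiro-Wilk test per subject on the peak values across channels.

    peaks: array of shape (n_subjects, n_channels) for one peak category.
    Returns a boolean array, True where normality is NOT rejected at alpha.
    """
    peaks = np.asarray(peaks, dtype=float)
    p_values = []
    for row in peaks:
        _, p_value = stats.shapiro(row)
        p_values.append(p_value)
    return np.array(p_values) > alpha

def subject_abs_means(peaks):
    """Average each subject's peak over channels, then take the absolute value."""
    return np.abs(np.asarray(peaks, dtype=float).mean(axis=1))

# Synthetic example: 35 subjects, 64 channels (channel count is hypothetical).
rng = np.random.default_rng(1)
na_peaks = rng.normal(loc=-1.2, scale=0.3, size=(35, 64))
is_normal = normal_across_channels(na_peaks)
per_subject = subject_abs_means(na_peaks)
category_mean = per_subject.mean()
```

Here `is_normal` flags the subjects whose channel-wise distribution passes the test, and `category_mean` is the across-subject average of the absolute per-subject values for one peak category.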

It was then evaluated whether there is a significant difference in the MLR and LLR components between the homophasic and antiphasic conditions for the different stimulus frequencies. A two-sample t-test was conducted on the mean peaks of the subjects in the antiphasic and homophasic conditions. The results show that there are significant differences in the Na and N1 peaks between the antiphasic and the homophasic condition for 500 Hz stimuli. In addition, the P300 peak of the LLR showed significant differences between the antiphasic and the homophasic condition for 250 Hz stimuli. The results are listed in Table 12 and Table 13.
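A two-sample t-test of this kind can be run with `scipy.stats.ttest_ind`. The sketch below uses synthetic per-subject peak values; the means, spreads, significance level, and the default equal-variance setting are assumptions for illustration and are not taken from Table 12 or Table 13.

```python
import numpy as np
from scipy import stats

def compare_conditions(antiphasic, homophasic, alpha=0.05):
    """Two-sample t-test between per-subject absolute peak values.

    Uses SciPy's default equal-variance (Student) test; whether the study
    assumed equal variances is not stated, so this is an assumption.
    """
    t_stat, p_value = stats.ttest_ind(antiphasic, homophasic)
    return {
        "t": float(t_stat),
        "p": float(p_value),
        "significant": bool(p_value < alpha),
    }

# Synthetic per-subject absolute peak amplitudes in µV (hypothetical values).
rng = np.random.default_rng(2)
antiphasic = rng.normal(loc=1.5, scale=0.3, size=35)
homophasic = rng.normal(loc=1.0, scale=0.3, size=35)
result = compare_conditions(antiphasic, homophasic)
same = compare_conditions(homophasic, homophasic)
```

Because the same subjects contribute to both conditions, a paired test (`scipy.stats.ttest_rel`) would be a natural alternative to consider; the independent-samples call is shown here because the text describes a two-sample test.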

Table 12.

Significance test for absolute peaks between antiphase and homophase for MLR.

Table 13.

Significance test for absolute peaks between antiphase and homophase for LLR.

4. Discussion

The findings of the current study suggest that for signals masked in noise, phase changes can have a significant effect on binaural processing in the human brain, as measured by auditory evoked potentials (AEPs). Our results showed statistically significant differences between the AEP signals generated by antiphasic and homophasic stimuli, in both time and frequency domain features. The differences suggest that the brain can detect and process interaural phase differences, which may be important for spatial localization of sound sources and other aspects of auditory processing. The results are consistent with previous studies [67] showing that binaural stimulation results in larger cortical responses than monaural stimulation, and that the amplitude and latency of AEPs depend on the binaural difference. However, our study is unique in its focus on phase changes and its use of noise-masked stimuli at several frequencies. The findings may improve our understanding of binaural processing in the human brain and lead to applications in the development of new objective hearing tests in the future. Our results indicate that the detection of phase differences may be an important factor in the “cocktail party” effect, whereby listeners are able to focus on a particular sound source in a noisy environment [68].

In the frequency domain study, we used Welch's method (pwelch) to calculate the power spectral density values of the MLR and LLR signals in various frequency bands to investigate the significance of phase differences in binaural processing [69]. Our results showed that the 20–25 Hz and 25–30 Hz frequency bands of the MLR and LLR signals differed significantly between antiphasic and homophasic stimuli. These frequency bands correspond to the high beta and low gamma frequency range of the EEG [70]. The finding is consistent with previous research suggesting that sensory integration results in frequencies in the high beta and low gamma range, which may indicate conscious and accurate phase detection of auditory stimuli [70,71]. Further analysis revealed that the stimuli which resulted in statistically significant differences were 500 Hz for the MLR signals, and 250 Hz and 500 Hz for the LLR signals, mainly in the 20–25 Hz and 25–30 Hz frequency bands. These findings suggest that optimal binaural processing occurs at 500 Hz, in line with previous literature predicting that lower frequencies result in larger binaural masking level differences (BMLDs) [72,73].
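The band-wise power computation can be sketched with `scipy.signal.welch`, the SciPy counterpart of the pwelch routine. This is an illustrative example on a synthetic signal only: the sampling rate (1000 Hz), signal length, segment length, and the helper name `band_power` are assumptions and do not reflect the recording parameters of the study.

```python
import numpy as np
from scipy.signal import welch

def band_power(x, fs, band, nperseg=None):
    """Integrate the Welch power spectral density over one frequency band.

    x: 1-D signal, fs: sampling rate in Hz, band: (low, high) in Hz,
    with the band taken as low <= f < high.
    """
    x = np.asarray(x, dtype=float)
    if nperseg is None:
        # About one-second segments give roughly 1 Hz resolution (assumed choice).
        nperseg = min(len(x), int(fs))
    freqs, psd = welch(x, fs=fs, nperseg=nperseg)
    low, high = band
    mask = (freqs >= low) & (freqs < high)
    if not mask.any():
        raise ValueError("frequency resolution too coarse for this band")
    df = freqs[1] - freqs[0]
    return float(psd[mask].sum() * df)

# Synthetic signal: a 22 Hz oscillation plus weak noise (hypothetical values).
fs = 1000.0
t = np.arange(0.0, 4.0, 1.0 / fs)
rng = np.random.default_rng(3)
x = np.sin(2 * np.pi * 22.0 * t) + 0.1 * rng.normal(size=t.size)
power_20_25 = band_power(x, fs, (20.0, 25.0))
power_25_30 = band_power(x, fs, (25.0, 30.0))
```

For short responses such as the MLR, the achievable frequency resolution is limited by the epoch length, so the segment length would need to be chosen so that each 5 Hz band still contains at least one frequency bin.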

We also analysed which electrode locations provided more significant differences between the homophasic and antiphasic conditions. Our results show that the midline electrodes provided more significant differences in the MLR [74], while both the midline and left electrodes provided significant differences for the LLR signals [75]. This finding may indicate that midline electrodes are more suitable for investigating the processing of pure tone stimuli in binaural hearing [76]. The left hemisphere of the brain, which is known to be important for processing the temporal aspects of sound, may also be involved in this processing [77]. This is in agreement with the finding of Okamoto et al. [78], who report left-hemispheric dominance in the processing of auditory stimuli in noisy environments.

The present study also investigated phase-sensitive binaural hearing using time domain ERP peak analysis. In the subject-wise analysis, the Na peak of the MLR for 500 Hz stimuli, the N1 peak of the LLR for 500 Hz stimuli, and the P300 peak of the LLR for 250 Hz stimuli showed a statistically significant difference between the antiphasic and the homophasic condition, indicating the importance of phase differences in binaural hearing. It has been suggested that neural functioning at the thalamo-cortical level (bottom up) and neurocognitive functions (top down) are related to the processing of phase-sensitive stimuli masked in noise in binaural hearing [79,80]. Furthermore, our study highlights the importance of the N1 peak and the P300 peak of the LLR in the analysis of binaural hearing and their potential use as measures of cortical processing of interaural phase differences (IPD) [80,81]. Together with the Na peak of the MLR, these peaks are believed to reflect the processing of IPD at different levels of the auditory system, starting in the midbrain regions, continuing in the auditory cortex, and finally reaching the attentional and working memory systems.

The Na peak of the MLR is believed to represent the processing of IPD in the midbrain regions, specifically in the superior olivary complex and lateral lemniscus [82]. This peak is sensitive to small differences in IPD, making it an important measure for studying spatial hearing in binaural hearing tasks [83,84].

The N1 peak of the LLR reflects cortical processing of IPD, particularly in the auditory cortex. This peak may represent the neural processing of the differences in the timing of the sound wave between the two ears at a higher level of the auditory system. Reduced N1 peak amplitudes may suggest a possible deficit in cortical processing of IPD, which may contribute to difficulties in discriminating tones and non-speech sounds from noise [85]. In the literature, the N1 peak has been identified as a physiological index of the ability to “tune in” one’s attention to a single sound source when there are several competing sources in a noisy environment, again referring to the “cocktail party” effect [86,87]. Enhancement of the N1 component for tasks that require selective attention has also been described in the literature [46] and is in line with the current study’s findings.

The P300 peak of the LLR may reflect cognitive processing of IPD, particularly in attentional and working memory systems. Several studies have stated the importance of the P300 component in analysing binaural hearing in normal adults as well as in adults with central processing disorders [88,89]. Reduced P300 peak amplitudes may suggest a possible deficit in cognitive processing of IPD and impaired attentional and working memory functions.

The frequency domain analysis results suggest that the brain is capable of detecting and processing phase differences in binaural hearing, particularly in the high beta and low gamma frequency range, and that optimal binaural processing occurs for 500 Hz stimuli, based on the MLR results. Additionally, our results provide guidance on the selection of electrode locations for future binaural hearing studies. In the time domain analysis, the Na peak of the MLR and the N1 peak of the LLR for 500 Hz stimuli can be used as markers in objective studies of binaural hearing. The P300 peak of the LLR for 250 Hz stimuli may also contribute to objective measures of binaural hearing.

5. Conclusions

In conclusion, the current study explored the role of interaural phase differences in binaural processing in noise and their neural correlates in the human brain. The results demonstrated significant differences between auditory evoked potentials (AEPs) generated by antiphasic and homophasic stimuli in both the time and frequency domains. These findings highlight the brain’s ability to detect and process interaural phase differences, which is crucial for sound source localization and other aspects of auditory processing.

Frequency domain analysis revealed significant differences in the middle latency response (MLR) signals for 500 Hz stimuli, while both 250 Hz and 500 Hz stimuli showed significant differences in the late latency response (LLR) signals, particularly in the 20–25 Hz and 25–30 Hz frequency bands. This suggests optimal binaural processing at 500 Hz, specifically in the high beta–low gamma frequency range, known for sensory integration. Additionally, midline electrodes proved more effective for investigating binaural processing of pure tone stimuli, yielding significant differences in MLR signals, while both the midline and left electrodes showed significant differences in LLR signals.

Furthermore, time domain analysis identified the Na peak of the MLR and the N1 peak of the LLR for 500 Hz stimuli as significant markers for distinguishing responses to homophasic and antiphasic stimuli, with potential applications in objective studies of binaural hearing. The P300 peak of the LLR for 250 Hz stimuli also differed significantly between responses to homophasic and antiphasic stimuli, suggesting that it might be considered as an objective measure of binaural hearing.

Future research can expand on these findings to explore the clinical implications of binaural processing in hearing disorders and related conditions.

6. Limitations

It is important to note, however, that the present study has some limitations. For instance, the sample size was thirty-five, and the current study mainly focused on healthy young adults, so the results may not be generalizable to other populations. Additionally, the study used a limited set of auditory stimuli, so future research could explore the effects of different types of stimuli on binaural processing in more depth.

Author Contributions

Conceptualization, E.I., S.A., M.J. and F.D.B.; methodology, E.I.; software, E.I.; validation, S.A., M.J. and F.D.B.; formal analysis, E.I.; investigation, E.I.; resources, E.I., S.A., M.J. and F.D.B.; data curation, E.I.; writing—original draft preparation, E.I.; writing—review and editing, S.A., M.J. and F.D.B.; visualisation, E.I.; supervision, S.A., M.J. and F.D.B.; project administration, F.D.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was approved by the Charles Darwin University Human Research Ethics Committee (CDU-HREC) (approval number H18014, approved on 9 June 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tharpe, A.M. Unilateral and mild bilateral hearing loss in children: Past and current perspectives. Trends Amplif. 2008, 12, 7–15.

- Fortnum, H.M.; Davis, A.; Summerfield, A.Q.; Marshall, D.H.; Davis, A.C.; Bamford, J.M.; Yoshinaga-Itano, C.; Hind, S. Prevalence of permanent childhood hearing impairment in the United Kingdom and implications for universal neonatal hearing screening: Questionnaire based ascertainment study Commentary: Universal newborn hearing screening: Implications for coordinating and developing services for deaf and hearing impaired children. BMJ 2001, 323, 536.

- Isaacson, J.; Vora, N.M. Differential diagnosis and treatment of hearing loss. Am. Fam. Physician 2003, 68, 1125–1132.

- Perez, P.; Bao, J. Why do hair cells and spiral ganglion neurons in the cochlea die during aging? Aging Dis. 2011, 2, 231.

- Jamal, A.; Alsabea, A.; Tarakmeh, M. Effect of ear infections on hearing ability: A narrative review on the complications of otitis media. Cureus 2022, 14, e27400.

- Sliwinska-Kowalska, M. New trends in the prevention of occupational noise-induced hearing loss. Int. J. Occup. Med. Environ. Health 2020, 33, 841–848.

- Michels, T.C.; Duffy, M.T.; Rogers, D.J. Hearing loss in adults: Differential diagnosis and treatment. Am. Fam. Physician 2019, 100, 98–108.

- Okely, J.A.; Akeroyd, M.A.; Deary, I.J. Associations Between Hearing and Cognitive Abilities from Childhood to Middle Age: The National Child Development Study 1958. Trends Hear. 2021, 25, 23312165211053707.

- Lavandier, M.; Best, V. Modeling binaural speech understanding in complex situations. In The Technology of Binaural Understanding; Springer: Cham, Switzerland, 2020; pp. 547–578.

- Yahav, P.H.-S.; Golumbic, E.Z. Linguistic processing of task-irrelevant speech at a cocktail party. eLife 2021, 10, e65096.

- van Wieringen, A.; Boudewyns, A.; Sangen, A.; Wouters, J.; Desloovere, C. Unilateral congenital hearing loss in children: Challenges and potentials. Hear. Res. 2019, 372, 29–41.

- Cole, E.B.; Flexer, C. Children with Hearing Loss: Developing Listening and Talking, Birth to Six; Plural Publishing: San Diego, CA, USA, 2019.

- Profant, O.; Jilek, M.; Bures, Z.; Vencovsky, V.; Kucharova, D.; Svobodova, V.; Korynta, J.; Syka, J. Functional age-related changes within the human auditory system studied by audiometric examination. Front. Aging Neurosci. 2019, 11, 26.

- Harris, K. The Aging Auditory System: Electrophysiology. In Aging and Hearing, Causes and Consequences; Springer: Cham, Switzerland, 2020; pp. 117–141.

- Bellis, T.J. Assessment and Management of Central Auditory Processing Disorders in the Educational Setting: From Science to Practice; Plural Publishing: San Diego, CA, USA, 2011.

- Polley, D.B.; Thompson, J.H.; Guo, W. Brief hearing loss disrupts binaural integration during two early critical periods of auditory cortex development. Nat. Commun. 2013, 4, 2547.

- Mason, J.A.; Herrmann, K.R. Universal infant hearing screening by automated auditory brainstem response measurement. Pediatrics 1998, 101, 221–228.

- Ibarra-Zarate, D.; Alonso-Valerdi, L.M. Acoustic therapies for tinnitus: The basis and the electroencephalographic evaluation. Biomed. Signal Process. Control. 2020, 59, 101900.

- Engström, E. Neurophysiological Conditions for Hearing in Children Using Hearing Aids or Cochlear Implants: An Intervention and Follow-Up Study; Karolinska Institutet: Solna, Sweden, 2021.

- Ignatious, E.; Azam, S.; Jonkman, M.; De Boer, F. Study of Correlation Between EEG Electrodes for the Analysis of Cortical Responses Related to Binaural Hearing. IEEE Access 2021, 9, 66282–66308.

- Christensen, C.B.; Hietkamp, R.K.; Harte, J.M.; Lunner, T.; Kidmose, P. Toward EEG-Assisted Hearing Aids: Objective Threshold Estimation Based on Ear-EEG in Subjects with Sensorineural Hearing Loss. Trends Hear. 2018, 22, 2331216518816203.

- Cone-Wesson, B.; Wunderlich, J. Auditory evoked potentials from the cortex: Audiology applications. Curr. Opin. Otolaryngol. Head Neck Surg. 2003, 11, 372–377.

- Luo, J.J.; Khurana, D.S.; Kothare, S. Brainstem auditory evoked potentials and middle latency auditory evoked potentials in young children. J. Clin. Neurosci. 2013, 20, 383–388.

- Paulraj, M.P.; Subramaniam, K.; Bin Yaccob, S.; Bin Adom, A.H.; Hema, C.R. Auditory evoked potential response and hearing loss: A review. Open Biomed. Eng. J. 2015, 9, 17–24.

- Burkard, R.F.; Eggermont, J.J.; Don, M. (Eds.) Auditory Evoked Potentials: Basic Principles and Clinical Application; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2007.

- Radeloff, A.; Cebulla, M.; Shehata-Dieler, W. Auditory evoked potentials: Basics and clinical applications. Laryngo-Rhino-Otol. 2014, 93, 625–637.

- Behmen, M.B.; Guler, N.; Kuru, E.; Bal, N.; Toker, O.G. Speech auditory brainstem response in audiological practice: A systematic review. Eur. Arch. Oto-Rhino-Laryngol. 2023, 280, 2099–2118.

- Leigh-Paffenroth, E.D.; Roup, C.M.; Noe, C.M. Behavioral and electrophysiologic binaural processing in persons with symmetric hearing loss. J. Am. Acad. Audiol. 2011, 22, 181–193.

- Abdollahi, F.Z.; Lotfi, Y.; Moosavi, A.; Bakhshi, E. Binaural interaction component of middle latency response in children suspected to central auditory processing disorder. Indian J. Otolaryngol. Head Neck Surg. 2019, 71, 182–185.

- Ono, Y.; Kudoh, K.; Ikeda, T.; Takahashi, T.; Yoshimura, Y.; Minabe, Y.; Kikuchi, M. Auditory steady-state response at 20 Hz and 40 Hz in young typically developing children and children with autism spectrum disorder. Psychiatry Clin. Neurosci. 2020, 74, 354–361.

- Macaskill, M.; Omidvar, S.; Koravand, A. Long Latency Auditory Evoked Responses in the Identification of Children with Central Auditory Processing Disorders: A Scoping Review. J. Speech Lang Hear. Res. 2022, 65, 3595–3619.

- Xiong, S.; Jiang, L.; Wang, Y.; Pan, T.; Ma, F. The Role of the P1 Latency in Auditory and Speech Performance Evaluation in Cochlear Implanted Children. Neural Plast. 2022, 2022, 6894794.

- Islam, N.; Sulaiman, N.; Rashid, M.; Bari, B.S.; Mustafa, M. Hearing disorder detection using auditory evoked potential (AEP) signals. In Proceedings of the 2020 Emerging Technology in Computing, Communication and Electronics (ETCCE), Dhaka, Bangladesh, 21–22 December 2020; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 1–6.

- Kähkönen, S.; Yamashita, H.; Rytsälä, H.; Suominen, K.; Ahveninen, J.; Isometsä, E. Dysfunction in early auditory processing in major depressive disorder revealed by combined MEG and EEG. J. Psychiatry Neurosci. 2007, 32, 316–322.

- Musacchia, G.; Schroeder, C.E. Neuronal mechanisms, response dynamics and perceptual functions of multisensory interactions in auditory cortex. Hear. Res. 2009, 258, 72–79.

- Vaughan, H.G.J.; Ritter, W.; Simson, R. Topographic analysis of auditory event-related potentials. Prog. Brain Res. 1980, 54, 279–285.

- Lotfi, Y.; Moosavi, A.; Abdollahi, F.Z.; Bakhshi, E. Auditory lateralization training effects on binaural interaction component of middle latency response in children suspected to central auditory processing disorder. Indian J. Otolaryngol. Head Neck Surg. 2019, 71, 104–108.

- Malavolta, V.C.; Sanfins, M.D.; Soares, L.d.S.; Skarzynski, P.H.; Moreira, H.G.; Nascimento, V.D.O.C.; Schumacher, C.G.; Moura, A.F.; de Lima, S.S.; Mundt, A.A.; et al. Frequency-Following Response and Auditory Middle Latency Response: An analysis of central auditory processing in young adults. Rev. CEFAC 2022, 24, e5622.

- Colella-Santos, M.F.; Donadon, C.; Sanfins, M.D.; Borges, L.R. Otitis media: Long-term effect on central auditory nervous system. BioMed Res. Int. 2019, 2019, 8930904.

- Atılgan, A.; Cesur, S.; Çiprut, A. A longitudinal study of cortical auditory maturation and implications of the short inter-implant delay in children with bilateral sequential cochlear implants. Int. J. Pediatr. Otorhinolaryngol. 2023, 166, 111472.

- Borges, L.R.; Donadon, C.; Sanfins, M.D.; Valente, J.P.; Paschoal, J.R.; Colella-Santos, M.F. The effects of otitis media with effusion on the measurement of auditory evoked potentials. Int. J. Pediatr. Otorhinolaryngol. 2020, 133, 109978.

- Acharya, U.R.; Sree, S.V.; Swapna, G.; Martis, R.J.; Suri, J.S. Automated EEG analysis of epilepsy: A review. Knowl.-Based Syst. 2013, 45, 147–165.

- Paulraj, M.P.; Subramaniam, K.; Yaccob, S.B.; Hamid, A.; Hema, C.R. EEG based detection of conductive and sensorineural hearing loss using artificial neural networks. J. Next Gener. Inf. Technol. 2013, 4, 204.

- Zhang, Z. Spectral and time-frequency analysis. In EEG Signal Processing and Feature Extraction; Hu, L., Zhang, Z., Eds.; Springer: Singapore, 2019; pp. 89–116.

- Bansal, D.; Mahajan, R. (Eds.) Chapter 7—Conclusion. In EEG-Based Brain-Computer Interfaces; Academic Press: Cambridge, MA, USA, 2019; pp. 195–203.

- Hillyard, S.A.; Hink, R.F.; Schwent, V.L.; Picton, T.W. Electrical signs of selective attention in the human brain. Science 1973, 182, 177–180.

- Kumar, A.; Anand, S.; Yaddanapudi, L.N. Comparison of auditory evoked potential parameters for predicting clinically anaesthetized state. Acta Anaesthesiol. Scand. 2006, 50, 1139–1144.

- Absalom, A.R.; Sutcliffe, N.; Kenny, G.N.C. Effects of the auditory stimuli of an auditory evoked potential system on levels of consciousness, and on the bispectral index. Br. J. Anaesth. 2001, 87, 778–780.

- Alain, C.; Arnott, S.R.; Hevenor, S.; Graham, S.; Grady, C.L. “What” and “where” in the human auditory system. Proc. Natl. Acad. Sci. USA 2001, 98, 12301–12306.

- Miles, T.; Ignatious, E.; Azam, S.; Jonkman, M.; De Boer, F. Mathematically modelling the brain response to auditory stimulus. In Proceedings of the TENCON 2021–2021 IEEE Region 10 Conference (TENCON), Auckland, New Zealand, 7–10 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 151–156.

- Saia, R.; Carta, S.; Fenu, G.; Pompianu, L. Influencing brain waves by evoked potentials as biometric approach: Taking stock of the last six years of research. Neural Comput. Appl. 2023, 35, 11625–11651.

- Sun, K.-T.; Hsieh, K.-L.; Lee, S.-Y. Using Mental Shadowing Tasks to Improve the Sound-Evoked Potential of EEG in the Design of an Auditory Brain–Computer Interface. Appl. Sci. 2023, 13, 856. Available online: https://www.mdpi.com/2076-3417/13/2/856 (accessed on 24 April 2023).

- Zavala-Fernandez, H.; Orglmeister, R.; Trahms, L.; Sander, T. Identification enhancement of auditory evoked potentials in EEG by epoch concatenation and temporal decorrelation. Comput. Methods Programs Biomed. 2012, 108, 1097–1105.

- van Driel, J.; Olivers, C.N.; Fahrenfort, J.J. High-pass filtering artifacts in multivariate classification of neural time series data. J. Neurosci. Methods 2021, 352, 109080.

- Prado-Gutierrez, P.; Martínez-Montes, E.; Weinstein, A.; Zañartu, M. Estimation of auditory steady-state responses based on the averaging of independent EEG epochs. PLoS ONE 2019, 14, e0206018.

- Jiang, X.; Bian, G.-B.; Tian, Z. Removal of artifacts from EEG signals: A review. Sensors 2019, 19, 987.

- Al-Fahoum, A.S.; Al-Fraihat, A.A. Methods of EEG signal features extraction using linear analysis in frequency and time-frequency domains. ISRN Neurosci. 2014, 2014, 730218.

- Das, R.K.; Martin, A.; Zurales, T.; Dowling, D.; Khan, A. A Survey on EEG Data Analysis Software. Sci 2023, 5, 23. Available online: https://www.mdpi.com/2413-4155/5/2/23 (accessed on 8 March 2023).

- Huang, Z.; Wang, M. A review of electroencephalogram signal processing methods for brain-controlled robots. Cogn. Robot. 2021, 1, 111–124.

- Barlaam, F.; Descoins, M.; Bertrand, O.; Hasbroucq, T.; Vidal, F.; Assaiante, C.; Schmitz, C. Time–Frequency and ERP Analyses of EEG to Characterize Anticipatory Postural Adjustments in a Bimanual Load-Lifting Task. Front. Hum. Neurosci. 2011, 5, 163.

- Kallionpää, R.E.; Pesonen, H.; Scheinin, A.; Sandman, N.; Laitio, R.; Scheinin, H.; Revonsuo, A.; Valli, K. Single-subject analysis of N400 event-related potential component with five different methods. Int. J. Psychophysiol. 2019, 144, 14–24.

- Makeig, S.; Onton, J. ERP features and EEG dynamics: An ICA perspective. In Oxford Handbook of Event-Related Potential Components; Oxford University Press: Oxford, UK, 2011; pp. 51–86.

- Groen, I.I.A.; Ghebreab, S.; Lamme, V.A.F.; Scholte, H.S. Spatially Pooled Contrast Responses Predict Neural and Perceptual Similarity of Naturalistic Image Categories. PLoS Comput. Biol. 2012, 8, e1002726.

- Rousselet, N.; Rousselet, G.A.; Gaspar, C.M.; Wieczorek, K.P.; Pernet, C.R. Modelling single-trial ERP reveals modulation of bottom-up face visual processing by top-down task constraints (in some subjects). Front. Psychol. 2011, 2, 137.

- Borgmann, C.; Roß, B.; Draganova, R.; Pantev, C. Human auditory middle latency responses: Influence of stimulus type and intensity. Hear. Res. 2001, 158, 57–64.

- Luck, S.J. An Introduction to the Event-Related Potential Technique; MIT Press: Cambridge, MA, USA, 2014.

- Chait, M.; Poeppel, D.; de Cheveigné, A.; Simon, J.Z. Human auditory cortical processing of changes in interaural correlation. J. Neurosci. 2005, 25, 8518–8527.

- Arons, B. A review of the cocktail party effect. J. Am. Voice I/O Soc. 1992, 12, 35–50.

- Hong, L.E.; Buchanan, R.W.; Thaker, G.K.; Shepard, P.D.; Summerfelt, A. Beta (~16 Hz) frequency neural oscillations mediate auditory sensory gating in humans. Psychophysiology 2008, 45, 197–204.

- Jirakittayakorn, N.; Wongsawat, Y. Brain responses to 40-Hz binaural beat and effects on emotion and memory. Int. J. Psychophysiol. 2017, 120, 96–107.

- Gao, X.; Cao, H.; Ming, D.; Qi, H.; Wang, X.; Wang, X.; Chen, R.; Zhou, P. Analysis of EEG activity in response to binaural beats with different frequencies. Int. J. Psychophysiol. 2014, 94, 399–406.

- Kortlang, S.; Mauermann, M.; Ewert, S.D. Suprathreshold auditory processing deficits in noise: Effects of hearing loss and age. Hear. Res. 2016, 331, 27–40.

- Pillsbury, H.C.; Grose, J.H.; Hall, J.W. Otitis media with effusion in children: Binaural hearing before and after corrective surgery. Arch. Otolaryngol.–Head Neck Surg. 1991, 117, 718–723.

- Kelly-Ballweber, D.; Dobie, R.A. Binaural interaction measured behaviorally and electrophysiologically in young and old adults. Audiology 1984, 23, 181–194.

- Johnson, B.W.; Hautus, M.; Clapp, W.C. Neural activity associated with binaural processes for the perceptual segregation of pitch. Clin. Neurophysiol. 2003, 114, 2245–2250.

- Rao, A.; Zhang, Y.; Miller, S. Selective listening of concurrent auditory stimuli: An event-related potential study. Hear. Res. 2010, 268, 123–132.

- Zatorre, R.J.; Belin, P. Spectral and temporal processing in human auditory cortex. Cereb. Cortex 2001, 11, 946–953.

- Okamoto, H.; Stracke, H.; Ross, B.; Kakigi, R.; Pantev, C. Left hemispheric dominance during auditory processing in a noisy environment. BMC Biol. 2007, 5, 52.

- Busch, N.A.; Schadow, J.; Fründ, I.; Herrmann, C.S. Time-frequency analysis of target detection reveals an early interface between bottom–up and top–down processes in the gamma-band. NeuroImage 2006, 29, 1106–1116.

- Epp, B.; Yasin, I.; Verhey, J.L. Objective measures of binaural masking level differences and comodulation masking release based on late auditory evoked potentials. Hear. Res. 2013, 306, 21–28.

- van Dinteren, R.; Arns, M.; Jongsma, M.L.A.; Kessels, R.P.C. P300 Development across the Lifespan: A Systematic Review and Meta-Analysis. PLoS ONE 2014, 9, e87347.

- Neves, I.F.; Gonçalves, I.C.; Leite, R.A.; Magliaro, F.C.L.; Matas, C.G. Middle latency response study of auditory evoked potentials amplitudes and lantencies audiologically normal individuals. Braz. J. Otorhinolaryngol. 2007, 73, 69–74.

- Jang, H.; Yoon, T.S.; Shin, J.S. Study of the Factors Affecting the Middle Latency Response. J. Korean Acad. Rehabil. Med. 1990, 14, 13. Available online: http://www.e-arm.org/journal/view.php?number=3545 (accessed on 24 April 2023).

- Bell, S.L.; Smith, D.C.; Allen, R.; Lutman, M.E. The auditory middle latency response, evoked using maximum length sequences and chirps, as an indicator of adequacy of anesthesia. Anesth. Analg. 2006, 102, 495–498.

- Papesh, M.A.; Folmer, R.L.; Gallun, F.J. Cortical measures of binaural processing predict spatial release from masking performance. Front. Hum. Neurosci. 2017, 11, 124.

- Schwent, V.L.; Hillyard, S.A. Evoked potential correlates of selective attention with multi-channel auditory inputs. Electroencephalogr. Clin. Neurophysiol. 1975, 38, 131–138.

- Schwent, V.L.; Hillyard, S.A.; Galambos, R. Selective attention and the auditory vertex potential. II. Effects of signal intensity and masking noise. Electroencephalogr. Clin. Neurophysiol. 1976, 40, 615–622.

- Jiang, Y. Hearing in Noise Ability Measured with P300 in Normal Hearing Adults. Master’s Thesis, Missouri State University, Springfield, MO, USA, 2010.

- Krishnamurti, S. P300 auditory event-related potentials in binaural and competing noise conditions in adults with central auditory processing disorders. Contemp. Issues Commun. Sci. Disord. 2001, 28, 40–47.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).