1. Introduction

Mental stress is an unavoidable problem faced by human beings irrespective of age, religion, ethnicity, region, and gender. Mental stress limits an individual’s ability to carry out daily routines [1]. In psychology, stress combines the perception of a stressor or situation with the body’s response to it. Stress is generally triggered when an individual encounters adverse conditions, such as mental, physical, or emotional stressors. Stressors are grouped into internal and external. Internal stressors depend on individual perceptions, thoughts, and personalities. External stressors include relationship problems; financial difficulties; work pressure; and professional, political, and religious pressures [2]. Mental stressors include mental arithmetic tests, picture perception tests, and rapidly changing tasks. Physical stressors include exercise, physical activity, painful stimuli, and sleep deprivation. Emotional stressors include videos and songs [3].

Mental stress is classified as either acute or chronic. Acute stress occurs when an individual is exposed to short-duration stressors such as public speaking or job interviews. Long-term and frequent exposure to stressors, such as poor sleep habits, stressful jobs, and poor relationships, leads to chronic stress. Various physiological changes occur in an individual’s body to deal with stress [4]. Stress may cause the release of cortisol, noradrenaline, and adrenaline, thereby providing instant energy to the body. Afterwards, the parasympathetic nervous system returns the body to its normal condition (homeostasis) without any significant harm. Continuous or long-term exposure to stress, however, affects an individual’s mental and physical health and can lead to health issues such as stroke, hypertension, cardiac arrest, coronary artery disease, persistent pain, anxiety, muscle exhaustion, and depression [5].

Psychiatrists and clinicians analyze stress using self-report questionnaires such as the daily stress inventory, perceived stress scale, and relative stress scale. However, the trustworthiness and efficiency of questionnaires are highly subjective, and they are prone to incorrect or invalid answers. Questionnaire-based stress analysis has a high error rate owing to social response and desirability biases. Additionally, behavior analysis based on vocal and non-verbal indications (rapid eye movement and body gestures) and visual responses has been used for stress analysis [6]. However, behavioral indications can vary with the subject’s conscious state. Questionnaire reports and behavioral analysis are also subject to expert error due to fatigue, inadequate knowledge, and bias. Stress also manifests as physiological changes governed by the autonomic nervous system. These changes are observed through physiological modalities such as eye gaze, skin temperature, pupil diameter, voice, blood volume pressure, heart rate variability (HRV), and electrodermal conductance. However, physiological signals are significantly affected by environmental conditions and health. Skin diseases and environmental parameters, such as temperature and humidity, strongly influence electrodermal conductance [7].

Researchers have recently focused on various neuro-signals and neuroimaging techniques for stress analysis. These modalities include EEG, near-infrared spectroscopy, positron emission tomography, and functional magnetic resonance imaging [8]. EEG has shown greater reliability, robustness, and accuracy than the other neuroimaging techniques. EEG-based stress analysis is inexpensive and offers a high temporal resolution [9]. EEG is a noninvasive technique that captures oscillations produced by electrical brain activity through electrodes mounted on the scalp. EEG signals have amplitudes of up to 200 µV. EEG comprises different frequency bands that represent distinct mental states: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (>30 Hz). Details of the EEG signals are provided in Table 1.
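As a rough illustration of how the frequency bands listed above can be quantified, the following sketch estimates the relative power of each band for a single-channel signal using a plain FFT periodogram. The function name `relative_band_power`, the 45 Hz ceiling for gamma, and the 256 Hz sampling rate in the usage lines are assumptions made for this example and are not taken from the study.

```python
import numpy as np

# Band edges in Hz as listed in the text; the 45 Hz gamma ceiling is an assumption.
EEG_BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}

def relative_band_power(signal, fs):
    """Return the fraction of 0.5-45 Hz power that falls in each EEG band."""
    signal = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    # Unnormalised periodogram of the mean-removed signal.
    psd = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    power = {
        name: psd[(freqs >= lo) & (freqs < hi)].sum()
        for name, (lo, hi) in EEG_BANDS.items()
    }
    total = sum(power.values())
    return {name: value / total for name, value in power.items()}

# Usage: a synthetic 10 Hz oscillation should fall in the alpha band.
fs = 256
t = np.arange(4 * fs) / fs
powers = relative_band_power(np.sin(2 * np.pi * 10 * t), fs)
dominant_band = max(powers, key=powers.get)
```

A periodogram is used here only because it needs no extra dependencies; an averaged estimator such as Welch's method would normally give a smoother spectrum on real recordings.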

AI-based stress detection systems are categorized into machine learning (ML) and deep learning (DL) based systems. Traditional ML-based systems involve preprocessing, feature extraction, and classification. Preprocessing consists of signal standardization, normalization, noise removal, artifact removal, data augmentation, signal cropping, and appending, and is essential for enhancing the quality of the EEG signals. EEG signals often suffer from artifacts generated by muscle activity, ocular signals, and body movements. These artifacts degrade EEG quality and lead to poor feature representation.

The next phase is feature extraction, which captures the distinctive characteristics of the EEG using computational algorithms. These features are important for separating normal EEGs from stressed EEGs, and the performance of an ML classifier depends largely on them: redundant and non-distinctive features reduce the model’s performance. Selecting a feature extraction algorithm is challenging owing to the lack of standard benchmarks. ML algorithms are also highly reliant on the training data. Its quantity, diversity, and quality affect performance, and biased or insufficient data can lead to inaccurate predictions. ML algorithms are less suitable for larger datasets, which require more learning time, yet they are also expected to provide good results with limited data. ML models have limited contextual understanding and may provide lower accuracy, and they show inferior feature representation capabilities. They are prone to overfitting and show a lower generalization capability for new data, and their outcomes are easily affected by noise and signal artifacts. Traditional systems also suffer from class imbalance owing to uneven training samples. Generating a dataset for the stress class is challenging because of the subtle nature of stress and the reliability issues of stressors [10].

This study presents a novel hybrid BDDNet for stress detection using EEG signals. The main contributions of this study are summarized as follows.

An efficient channel selection scheme using a novel improved CSA algorithm to select distinctive channels and reduce the computational complexity of the system.

Stress representation using multiple EEG features (MEFs) that provide time- and frequency-domain features.

Implementation of a novel BDDNet for stress detection, in which the DCNN provides the spatial and spectral domain features of the EEG, the BiLSTM captures its temporal and long-term dependencies, and the DBN provides multilevel hierarchical features.

Hyper-parameter optimization of the BDDNet using novel EOA to boost training performance.

The remainder of this paper is structured as follows. Section 2 provides a literature survey of recent stress detection schemes. Section 3 gives an overview of the proposed methodology. Section 4 details the proposed BDDNet. Section 5 describes the implementation of EOA-based hyper-parameter optimization. Section 6 presents the experimental results and discusses the analytical findings. Section 7 presents the conclusions, key findings, and directions for future work.

2. Related Work

Various deep learning-based schemes have been proposed in recent years to enhance the performance of stress-detection schemes. Roy et al. [11] presented CBGG, a hybrid combination of a CNN, a BiLSTM, and two gated recurrent unit (GRU) layers for EEG-based stress detection. It uses a discrete wavelet transform (DWT) representation to describe the spectral and temporal characteristics of EEGs; the DWT mitigates the nonlinearity and non-stationarity of EEGs. It offers an overall accuracy of 98.1% on the simultaneous task EEG workload (STEW) dataset, which includes 14-channel EEG signals. However, its effectiveness is limited by high network complexity, long recognition time, and extensive hyper-parameter tuning. In addition, selecting the DL algorithms to construct a hybrid classifier is a challenging task. Mane et al. [12] explored the combination of a 2D-CNN and an LSTM for stress detection, taking azimuthal projected images of the alpha, theta, and beta signals as input. The combination of CNN and LSTM boosts the spectral and temporal representations of the EEGs. This resulted in an overall stress detection rate of 97.8% for DEAP, 94.5% for SEED, and 97.8% for the combined DEAP+SEED data. The system required a long training time of 4.2 h and a recognition time of 12.5 s. Patel et al. [13] investigated a 1-D CNN and BiLSTM to enrich the spectral–temporal characteristics of EEGs. The stress detection model accepts time–frequency features to learn the local and global representations of the EEGs. It provides 88.03% accuracy on the DEAP dataset but suffers from poor feature representation and class imbalance problems.

Furthermore, Bhatnagar et al. [14] provided a CNN-based EEGNet that accepts the wavelet decomposition of the EEG into five spectral bands for stress detection. It offered 99.45% accuracy on an in-house dataset created by capturing EEGs while low- to high-pitched music was played. However, the dataset variability was limited owing to the small sample size (45 subjects aged 13–21). According to Hafeez et al. [15], timing has a significant influence on stress. Based on real-time experimental data, the researchers observed that stress levels are greater for untimed tests than for timed tests. The overall accuracy for EEG signals in picture format was 70.67% for the LSTM and 90.46% for the DCNN. The DCNN offers improved spatial correlation and connectivity among the various EEG bands. However, the temporal description of the signal and its long-term dependencies are absent from the 2D picture representation of the EEG. Geetha et al. [16] investigated an enhanced multilayer perceptron (EMLP) to identify stress from sleep patterns in EEG data. Owing to the extreme complexity of sleep patterns, the ability of the EMLP to extract complicated sleep pattern information from EEG signals is restricted for real-time analysis.

To identify epileptic seizures caused by stress and worry, Palanisamy et al. [17] used fuzzy c-means (FCM) features with an LSTM tuned using a particle swarm algorithm (LSTM-PSO). The FCM features include the Hjorth activity, variance, skewness, kurtosis, standard deviation, Shannon entropy, and mean. Location and random data augmentation create synthetic EEG samples to resolve the class imbalance issue. On the BONN EEG dataset, it achieved 97% accuracy with PSO-LSTM and an overall accuracy of 98.5% with FCM-PSO-LSTM. According to Bakare et al. [18], valence and arousal can provide stress information from different EEGs. KNN obtained better results on the smaller dataset, whereas the larger dataset did not provide encouraging results. Khan et al. [19] proposed recurrent neural networks (RNNs) and random forests (RFs) for cross-dataset mental stress detection to improve the generalization capacity of the stress detection scheme. The RNN and RF were trained on the SJTU Emotion EEG Dataset (SEED) and tested on the Game Emotion (GAMEEMO) dataset. In terms of accuracy, the RNN (87% for arousal and 83% for valence) performed better than the RF (83% for arousal and 75% for valence). Gonzalez-Vazquez et al. [20] proposed gated recurrent units (GRUs) with an 8-channel EEG for multilevel stress detection in serious gaming tasks. It achieved 94% accuracy, but its weak generalization limits its usefulness. Naren et al. [21] investigated a 1D CNN and Doppler characteristics for stress detection. The first part of the 1D CNN was trained using stress induced via mirror tasks, the Stroop test, and arithmetic tests. Features of low, medium, and high stress levels were used to train the second part of the 1D CNN. The SAM-40 dataset yielded an overall accuracy of 95.25%.

From an extensive survey of various stress-detection techniques, the following gaps were identified:

Poor feature representation of single-channel EEGs, low frequency resolution, limited spectral–temporal representation, and inferior modeling of long-term dependencies in EEG signals [22].

The class imbalance problem creates a disparity between the qualitative and quantitative stress attributes of EEGs [23].

Low accuracy for low arousal and valence EEG signals.

Stress detection systems suffer from a low generalization capability, which limits their effectiveness in real-time implementation, although DL-based systems have provided better results than ML-based stress-detection techniques [24].

DL algorithms work as black boxes with high abstraction levels and fail to justify different features adequately. Thus, their explainability and interpretability are inferior.

3. Methodology

Figure 1 shows a flow diagram of the proposed stress-detection framework, which encompasses EEG preprocessing, channel selection, feature extraction, and stress detection using a novel DL framework.

3.1. EEG Preprocessing

EEGs are often contaminated by noise and artifacts from electrooculogram (EOG), electromyogram (EMG), and electrocardiogram (ECG) signals, which reduces stress detection performance. Minimizing noise and artifacts is therefore essential for enhancing the EEG quality and improving mental stress detection. The EEG was passed through a finite impulse response (FIR) band-pass filter with a pass band of 0.75 Hz to 45 Hz to minimize noise. Furthermore, a wavelet packet transform-based soft thresholding scheme was used for EEG denoising, which minimizes noise and artifacts without degrading the actual information in the EEG [25]. The EEG signals were decomposed to three levels using a Daubechies filter (db3). The decomposed packets were compared with Donoho’s soft thresholding value and reconstructed to obtain an enhanced signal.
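The two preprocessing ingredients described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the windowed-sinc design, the Hamming window, and the 401-tap length are assumptions, and the threshold is taken to be the universal threshold σ√(2 ln N) with a median-based noise estimate, which is a common reading of "Donoho's soft thresholding value". The db3 wavelet packet decomposition and reconstruction themselves are omitted; the thresholding functions would be applied to the coefficients of each packet.

```python
import numpy as np

def fir_bandpass_taps(fs, low_hz=0.75, high_hz=45.0, numtaps=401):
    """Windowed-sinc band-pass FIR taps (Hamming window); numtaps should be odd."""
    n = np.arange(numtaps) - (numtaps - 1) / 2.0

    def lowpass(cutoff_hz):
        fc = cutoff_hz / fs                      # normalised cutoff (cycles/sample)
        return 2.0 * fc * np.sinc(2.0 * fc * n)  # ideal low-pass impulse response

    # Band-pass = difference of two low-pass responses, tapered by the window.
    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(numtaps)

def apply_fir(signal, taps):
    """Zero-delay filtering for symmetric taps; samples near the edges are distorted."""
    return np.convolve(signal, taps, mode="same")

def universal_threshold(coeffs):
    """sigma * sqrt(2 ln N), with sigma estimated from the median absolute value."""
    sigma = np.median(np.abs(coeffs)) / 0.6745
    return sigma * np.sqrt(2.0 * np.log(coeffs.size))

def soft_threshold(coeffs, thr):
    """Shrink coefficients towards zero by thr; smaller ones become zero."""
    return np.sign(coeffs) * np.maximum(np.abs(coeffs) - thr, 0.0)

# Usage: a 10 Hz component should pass and a 100 Hz component should be removed.
fs = 256
t = np.arange(4 * fs) / fs
clean = np.sin(2 * np.pi * 10 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 100 * t)
filtered = apply_fir(noisy, fir_bandpass_taps(fs))
```

With this tap count the transition band is roughly 2 Hz wide at a 256 Hz sampling rate, so the 0.75 Hz lower edge is only approximate; a longer filter would be needed for a sharper low cut-off.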

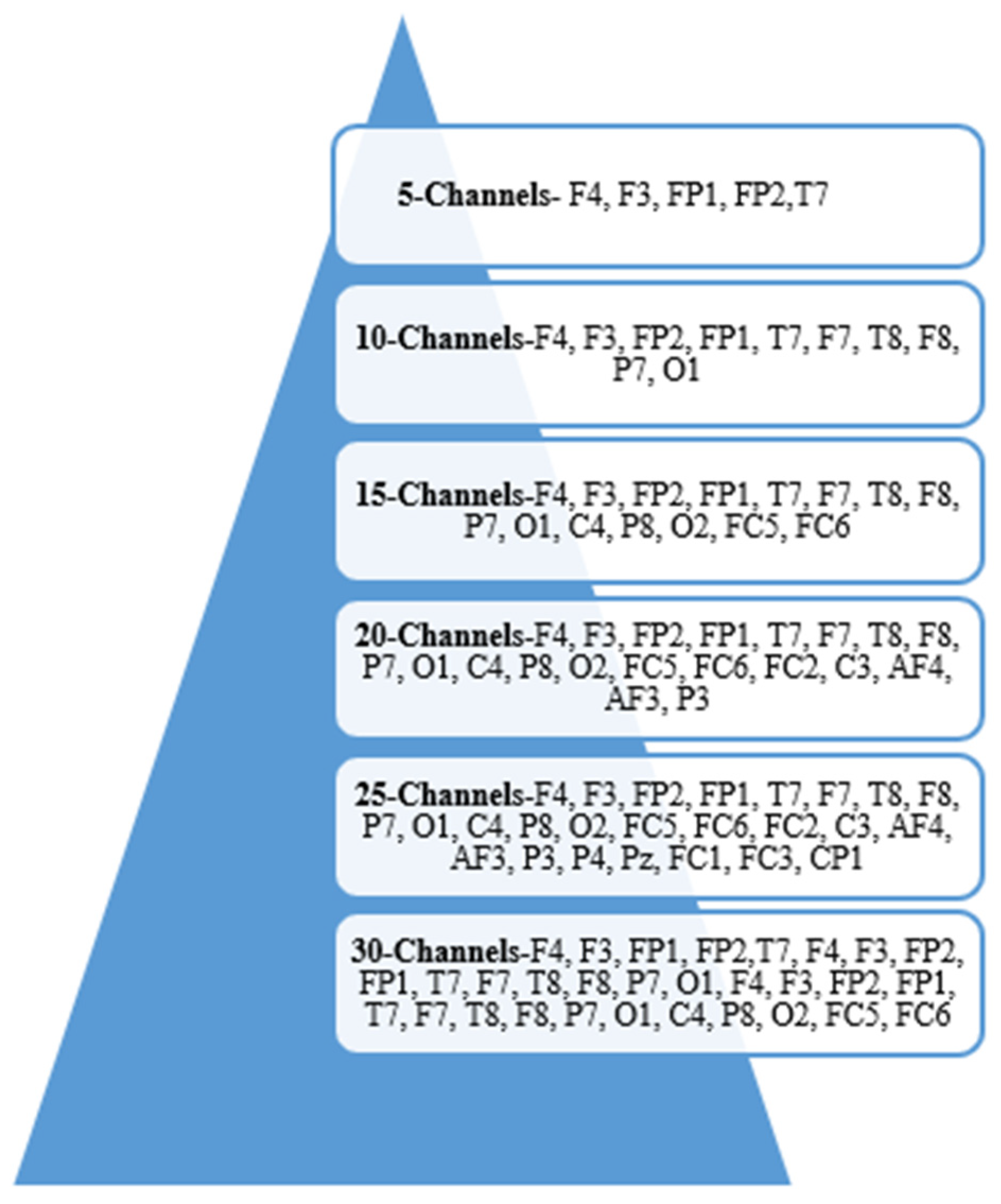

3.2. Channel Selection Using Improved Crow Search Algorithm

Crows are regarded as among the most intelligent bird species. They hide food and retrieve it when needed. They stay close to one another, observe where other crows keep their food, and take it after the owner has gone [26]. If crows believe that they are being followed, they change their hiding spot to protect their food. The algorithm comprises four fundamental principles, all of which are derived from the behavioral patterns of crows.

Crows tend to congregate in large numbers.

Crows have excellent memories and can recall exactly where food was hidden.

Crows are known to stick together in order to steal food.

Crows have the capacity to perceive their environment. When they become aware that they are being followed, they move the food they have hidden to protect it from being taken.

The Crow Search Algorithm (CSA) is a swarm intelligence optimization algorithm developed by modeling the intelligent behavior of crows while hiding and locating food. The method is characterized by its simple structure, small number of control parameters, and ease of application. Only two parameters need to be adjusted, which makes it appealing for use in a variety of technical domains [27]. However, the traditional CSA yields poor optimization results because of its low solution diversity and weak exploration and exploitation [28]. The proposed improved CSA (ICSA) uses elite learning with spiralized learning and a weak member replacement scheme to enhance the solution diversity, convergence, and balance between exploration and exploitation. The flow of the proposed ICSA-based channel selection process is illustrated in Figure 2.

The CSA imitates the behavior of crows, which store excess food and recover it when required. In optimization terms, the crow acts as the searcher, the environment around it serves as the search space, and each randomly chosen food-storage location is a candidate solution. The CSA follows the four principles listed above: (1) crows are gregarious; (2) crows can recall the position of concealed food; (3) crows follow each other and take food from each other; and (4) crows try their utmost to prevent other crows from stealing their food. The algorithm for the CSA is as follows [26,27,28]:

Step 1: Initialize the problem statement and algorithm parameters.

Step 2: Initialize the crow position and memory.

The flock is composed of N crows that are distributed randomly over a d-dimensional search space, where d denotes the total number of possible channels. The initial crow positions are represented by Equation (1).

Each crow has an initialized memory. Because the crows have no experience at this point, it is assumed that they have hidden their food at their initial positions. The memory of the crows is described by Equation (2).

Step 3: Evaluate the fitness of each crow.

The quality of each crow’s location is calculated by entering the values of its decision variables into the objective function. The objective function for channel selection considers the entropy (EN) and covariance (CV) of the channels, which helps to select salient channels with higher information content. Channel selection helps minimize the computational effort of the stress detection system. The objective function used for computing the fitness is provided in Equation (3), together with the condition that its two coefficients were selected to satisfy.

Step 4: Generate new crow position.

To update its position, crow i selects a flock member at random, such as crow j, and follows it to find the location of its concealed food. The new location of the crow is given by Equation (4).

The traditional CSA updates the population randomly, leading to poor solution diversity and convergence. Moreover, it neglects updating the best and worst solutions, which creates a poor balance between exploration and exploitation. Thus, the improved CSA provides two competitive learning schemes to enhance solution diversity, convergence, and the exploration–exploitation balance. The LFEL strategy updates the best solutions using the Levy step function to improve the exploration of the algorithm. It considers the two solutions with the highest fitness values, as given in Equation (5).

In Equation (5), the updated solution is obtained using the LFEL scheme, with a distribution index and a random number r1 between 0 and 1.

Furthermore, the RWM strategy is used to boost the exploitation of the algorithm. Every weak solution is updated towards the best solution to enhance the exploitation of the CSA. The crow position is updated using the RWM strategy, as shown in Equation (6).

In Equation (6), the updated crow is obtained using the RWM scheme from the solution with the worst fitness, where r2 is a random number between 0 and 1.
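The exact form of the LFEL update is not reproduced here, so the following sketch shows only how Levy-distributed step lengths are commonly generated, using Mantegna's algorithm. The function name `levy_steps` and the value β = 1.5 for the distribution index are assumptions for illustration; how the study scales and applies these steps to the elite solutions is not implied by this code.

```python
import math
import numpy as np

def levy_steps(size, beta=1.5, seed=None):
    """Draw Levy-distributed step lengths with Mantegna's algorithm."""
    rng = np.random.default_rng(seed)
    # Scale of the numerator Gaussian for a stability index beta in (0, 2].
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / beta)

# Usage: most steps are small, with occasional very long jumps.
steps = levy_steps(1000, beta=1.5, seed=0)
```

The heavy tail is what makes such steps attractive for exploration: occasional long jumps let a good solution escape its neighborhood, while the majority of short steps keep the search local.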

Step 5: Feasibility checking of new crow positions.

The new position of each crow is examined for feasibility. A crow moves only if its new location is feasible; otherwise, it remains at its existing location.

Step 6: Compute the fitness value for newer position.

Step 7: Update the crow memory using Equation (7).

Step 8: Check the termination criteria. Steps 4–7 are repeated until the maximum number of iterations (Iter_max) is reached. After the termination requirement is satisfied, the memory position with the best objective function value is returned as the solution to the optimization problem. The algorithm for the ICSA is given as follows (Algorithm 1):

| Algorithm 1: ICSA for EEG Channel Selection | |
| --- | --- |
| Input: | Random channel population |
| Output: | Optimized channels |
| Step 1: | Initialize the problem and parameters. Set flock size N, flight length Ft, maximum iterations Iter_max, and awareness probability AP. |
| Step 2: | Initialize crow positions and memory. (i) Randomly generate initial positions of N crows in a d-dimensional search space, as given in Equation (1). (ii) Initialize memory assuming each crow hides food at its initial location, as given in Equation (2). |
| Step 3: | Evaluate initial fitness. Compute the fitness of each crow using the objective function considering entropy (EN) and covariance (CV), as given in Equation (3). |
| Step 4: | Generate new crow positions. For each crow i: (i) randomly choose crow j; (ii) update the position based on Equation (4); (iii) apply the LFEL strategy to update the best solutions using Equation (5); (iv) apply the RWM strategy to guide weaker solutions using Equation (6). |
| Step 5: | Check feasibility of new positions. If the new position is feasible, update the crow’s position; otherwise, retain the old position. |
| Step 6: | Recalculate fitness for updated positions. |
| Step 7: | Update crow memory. (i) Compare current and previous fitness values. (ii) Update memory as per Equation (7). |
| Step 8: | Termination check. (i) Repeat Steps 4–7 until Iter_max is reached. (ii) Return the best memory position as the optimal solution. |
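The control flow of Algorithm 1 can be sketched with the standard crow search update. This is a simplified illustration under stated assumptions: it implements only the baseline CSA move (follow a random crow's memory, or jump randomly with probability AP), the LFEL and RWM refinements are omitted, and `toy_fitness` is a stand-in objective rather than the entropy and covariance function of Equation (3). The default parameter values and the rule of treating entries above 0.5 as selected channels are likewise hypothetical.

```python
import numpy as np

def crow_search(fitness, dim, n_crows=20, flight_len=2.0, awareness=0.1,
                max_iter=100, seed=0):
    """Baseline CSA maximising `fitness` over the unit hypercube [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    pos = rng.random((n_crows, dim))                 # Step 2: random positions
    mem = pos.copy()                                 # memory starts at the positions
    mem_fit = np.array([fitness(p) for p in mem])    # Step 3: initial fitness
    for _ in range(max_iter):                        # Step 8: iteration budget
        for i in range(n_crows):
            j = rng.integers(n_crows)                # Step 4: crow to follow
            if rng.random() >= awareness:            # crow j does not notice
                new = pos[i] + rng.random() * flight_len * (mem[j] - pos[i])
            else:                                    # crow j notices: random move
                new = rng.random(dim)
            if np.all((new >= 0.0) & (new <= 1.0)):  # Step 5: feasibility check
                pos[i] = new
                value = fitness(new)                 # Step 6: new fitness
                if value > mem_fit[i]:               # Step 7: memory update
                    mem[i] = new
                    mem_fit[i] = value
    best = int(np.argmax(mem_fit))
    return mem[best].copy(), float(mem_fit[best])

TARGET = np.array([1.0, 0.0, 1.0, 0.0, 1.0])         # hypothetical "ideal" channel mask

def toy_fitness(position):
    """Stand-in objective (not Equation (3)): highest when position equals TARGET."""
    return -float(np.sum((position - TARGET) ** 2))

best_pos, best_fit = crow_search(toy_fitness, dim=5)
selected_channels = best_pos > 0.5                   # hypothetical binarisation rule
```

Because each crow's memory is only overwritten by a strictly better position, the best remembered fitness never decreases across iterations.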

3.3. Multiple EEG Features

The features are classified into time-domain, spectral, and textural features of the EEG.

A. Time-Domain EEG Features

The mean and standard deviation (SD) capture time-domain variations in the EEG caused by stress. The variance indicates the consistency of the EEG patterns. The mean, standard deviation, and variance (vr) of an EEG signal E with N samples are given in Equations (8)–(10), respectively.

Hjorth’s parameters describe the activity, mobility, and complexity of the EEG signal. The activity is the variance of the signal over time [8]; higher brain activity due to stress yields a higher activity value than a normal mental state. The activity (act) is given by Equations (11) and (12) in terms of the individual EEG samples, the mean of the EEG, and the total number of samples. Mobility describes the frequency variations in the EEG and is the square root of the ratio of the variance of the first-order derivative of the EEG to the variance of the EEG, as given in Equation (13). Higher mobility indicates rapid variations in the EEG, representing higher brain activity or stress. Complexity is defined as the ratio of the mobility of the first derivative of the EEG to the mobility of the EEG, as given in Equation (14). A higher complexity value represents more complex variations in brain activity, which indicate higher stress.
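The Hjorth parameters described above can be computed in a few lines. The sketch follows the standard Hjorth definitions and approximates derivatives with first differences, so mobility is expressed per sample rather than per second; the function name `hjorth_parameters` and the test signal are assumptions made for this example.

```python
import numpy as np

def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) using first differences as derivatives."""
    x = np.asarray(signal, dtype=float)
    dx = np.diff(x)                      # first derivative (per sample)
    ddx = np.diff(dx)                    # second derivative (per sample)
    activity = np.var(x)                 # signal variance
    mobility = np.sqrt(np.var(dx) / activity)
    # Complexity: mobility of the derivative divided by mobility of the signal.
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity

# Usage: a pure sinusoid has complexity close to 1, the minimum for any waveform.
fs = 1000
t = np.arange(fs) / fs
activity, mobility, complexity = hjorth_parameters(np.sin(2 * np.pi * 10 * t))
```

For a pure sinusoid the complexity is close to one, so values well above one indicate a waveform that departs from a single oscillation.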

The median gives the central tendency of the EEG and is robust to outliers.

The zero-crossing rate (ZCR) reflects randomness and noise in the EEG and takes a higher value under stress. Stress causes instability in EEG patterns and produces more transitions in the EEG. The ZCR is computed using Equation (15), where the indicator 1{.} equals one when the sign of the current sample differs from that of the previous sample, which marks a zero crossing. The signs of the EEG samples are obtained using the sign function.

The root mean square (RMS) describes the overall signal power, and the entropy captures random or irregular EEG patterns. The RMS value is computed using Equation (16).

The line length (LL) gives the total vertical (curve) length of the EEG signal, which reflects the stress pattern in the signal. Equation (17) is used to calculate the LL.

Equation (18) determines the entropy (SnE), which quantifies the uncertainty in the EEG signal, where the weighting term is the frequency of each sample value in the EEG.

The NE provides information regarding the irregular and non-linear patterns of the EEG. The NE value increases with the amplitude and oscillation frequency of the signal. Equation (19) is used to calculate the NE.
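The remaining time-domain descriptors can be gathered in one helper, sketched below. Several choices are assumptions rather than the study's definitions: the ZCR is reported as the fraction of adjacent sample pairs whose sign differs, the entropy is a histogram-based Shannon estimate with a configurable number of bins, and NE is taken to be the mean Teager–Kaiser nonlinear energy x[n]² − x[n−1]·x[n+1].

```python
import numpy as np

def time_domain_features(signal, bins=16):
    """Compute simple time-domain descriptors of a 1-D signal."""
    x = np.asarray(signal, dtype=float)
    signs = np.sign(x)
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / x.size                  # relative frequency per bin
    return {
        "mean": x.mean(),
        "sd": x.std(),
        "variance": x.var(),
        "median": np.median(x),
        "zcr": np.mean(signs[1:] != signs[:-1]),     # fraction of sign changes
        "rms": np.sqrt(np.mean(x ** 2)),
        "line_length": np.sum(np.abs(np.diff(x))),   # summed absolute differences
        "shannon_entropy": -np.sum(p * np.log2(p)),  # bits, histogram estimate
        "nonlinear_energy": np.mean(x[1:-1] ** 2 - x[:-2] * x[2:]),
    }

# Usage on a tiny alternating sequence, where every value can be checked by hand.
features = time_domain_features([1.0, -1.0, 1.0, -1.0], bins=2)
```

The entropy estimate depends on the bin count, so the same setting should be used for every channel and recording that is to be compared.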

B. Frequency-Domain Features

The wavelet packet transform (WPT) captures stationary and transient EEG patterns in the time–frequency domain. The EEG signal is decomposed to five levels using a ‘db2’ filter, and the fifth-level decomposition provides 32 sub-bands. Seven statistical features (mean, median, energy, skewness, kurtosis, variance, and entropy) are computed for each sub-band, giving 32 × 7 = 224 WPT features.
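The feature count above (2^5 = 32 sub-bands, seven statistics each, 224 values) can be reproduced with a small NumPy-only wavelet packet sketch. This is an illustration under assumptions, not the study's code: boundaries are handled by periodic extension, the signal length must be a multiple of 32, sub-bands are returned in natural rather than frequency order, and the entropy is computed from normalized squared coefficients. A wavelet library would normally be used in practice.

```python
import numpy as np

_S3 = np.sqrt(3.0)
# Daubechies-2 (four-tap) low-pass filter and its quadrature-mirror high-pass.
DB2_LO = np.array([1 + _S3, 3 + _S3, 3 - _S3, 1 - _S3]) / (4 * np.sqrt(2.0))
DB2_HI = DB2_LO[::-1] * np.array([1.0, -1.0, 1.0, -1.0])

def split(node):
    """One analysis step with periodic extension: returns (approximation, detail)."""
    n = node.size
    idx = (np.arange(0, n, 2)[:, None] + np.arange(4)[None, :]) % n
    windows = node[idx]                      # each row: 4 samples starting at 2k
    return windows @ DB2_LO, windows @ DB2_HI

def wavelet_packet_leaves(signal, levels=5):
    """Split every node at every level, giving 2**levels leaf sub-bands."""
    nodes = [np.asarray(signal, dtype=float)]
    for _ in range(levels):
        nodes = [child for node in nodes for child in split(node)]
    return nodes

def subband_features(c, eps=1e-12):
    """Mean, median, energy, skewness, kurtosis, variance, entropy of one sub-band."""
    z = (c - c.mean()) / (c.std() + eps)
    p = c ** 2 / (np.sum(c ** 2) + eps)      # normalised coefficient energy
    return [c.mean(), np.median(c), np.sum(c ** 2), np.mean(z ** 3),
            np.mean(z ** 4), c.var(), -np.sum(p * np.log2(p + eps))]

def wpt_features(signal, levels=5):
    leaves = wavelet_packet_leaves(signal, levels)
    return np.array([f for leaf in leaves for f in subband_features(leaf)])

# Usage on a synthetic signal of 1024 samples.
x = np.random.default_rng(0).standard_normal(1024)
leaves = wavelet_packet_leaves(x)
feats = wpt_features(x)
```

Because the filter pair is orthonormal, the summed energy of the 32 leaves equals the energy of the input, which is a convenient sanity check for any WPT implementation.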

Energy gives the EEG strength in distinct frequency bands. It reflects the transition from the normal state to stress and is computed using Equation (20).

The intensity-weighted mean frequency (IWMF) captures dynamic variations in the EEG that describe micro-arousals due to stress. The IWMF is estimated using Equation (21), where E[k] is the normalized PSD at frequency f[k].

The intensity-weighted bandwidth (IWBF) gives the bandwidth associated with the stress levels, as given in Equation (22). It is vital for discriminating between brain activities due to stress. The power spectral density (PSD) offers a high value for normal activity.

SK describes the non-Gaussian nature of the EEG pattern, which reflects complex EEG patterns.
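A short sketch of the two spectral descriptors follows, using the usual definitions: the IWMF is the PSD-weighted mean of the frequency axis, and the IWBF is the PSD-weighted standard deviation around that mean. The periodogram-based PSD estimate and the function name `iwmf_iwbf` are assumptions for illustration, with `e` playing the role of E[k] and `freqs` the role of f[k].

```python
import numpy as np

def iwmf_iwbf(signal, fs):
    """Intensity-weighted mean frequency and bandwidth from a periodogram."""
    x = np.asarray(signal, dtype=float)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)          # f[k]
    psd = np.abs(np.fft.rfft(x - x.mean())) ** 2
    e = psd / psd.sum()                                   # normalised PSD, E[k]
    iwmf = np.sum(freqs * e)
    iwbf = np.sqrt(np.sum(e * (freqs - iwmf) ** 2))
    return iwmf, iwbf

# Usage: a pure 10 Hz tone has its mean frequency at 10 Hz and almost no bandwidth.
fs = 256
t = np.arange(4 * fs) / fs
iwmf, iwbf = iwmf_iwbf(np.sin(2 * np.pi * 10 * t), fs)
```

A broadband or noisy segment would show a larger IWBF, which is what makes the pair useful for separating narrow rhythmic activity from irregular activity.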

C. Texture Features

Local temporal changes in the EEG signal pattern are captured by the local binary pattern (LBP). Smaller amplitude fluctuations, micro-arousals, and transitory changes across the EEG due to stress are captured by the local neighborhood difference pattern (LNDP). The local gradient pattern (LGP) captures directional changes in the EEG to reveal prominent and subtle differences across complicated EEG patterns. The LGP encodes fluctuations in the EEG gradients (G), where c signifies the center value in the window around which the neighboring samples are taken. The LBP, LGP, and function f are given by Equations (23), (24), and (25), respectively. The details of all 527 EEG features for each channel are listed in Table 2.
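One common way to compute one-dimensional LBP and LGP codes is sketched below. The details are assumptions, since the study's equations are not reproduced here: each center sample is compared with four neighbors on either side (eight in total), the step function f returns 1 for non-negative arguments, bits are weighted by increasing powers of two from the leftmost neighbor, and the LGP thresholds each absolute gradient against the mean gradient of its window. The LNDP is omitted.

```python
import numpy as np

def _neighbourhoods(signal, half_width):
    """Return centre samples and, for each, its 2 * half_width neighbours."""
    x = np.asarray(signal, dtype=float)
    centres = np.arange(half_width, x.size - half_width)
    offsets = np.concatenate([np.arange(-half_width, 0),
                              np.arange(1, half_width + 1)])
    return x[centres], x[centres[:, None] + offsets[None, :]]

def _encode(bits):
    """Turn each row of 0/1 flags into an integer code."""
    weights = 2 ** np.arange(bits.shape[1])
    return (bits * weights).sum(axis=1)

def lbp_1d(signal, half_width=4):
    """Bit is 1 where a neighbour is greater than or equal to the centre sample."""
    centre, neigh = _neighbourhoods(signal, half_width)
    return _encode(neigh - centre[:, None] >= 0)

def lgp_1d(signal, half_width=4):
    """Bit is 1 where a neighbour's absolute gradient reaches the window mean."""
    centre, neigh = _neighbourhoods(signal, half_width)
    grad = np.abs(neigh - centre[:, None])
    return _encode(grad - grad.mean(axis=1, keepdims=True) >= 0)

def pattern_histogram(codes, half_width=4):
    """Histogram of codes, usable as a fixed-length texture feature vector."""
    return np.bincount(codes, minlength=2 ** (2 * half_width))

# Usage on a ramp, where every window looks the same.
ramp = np.arange(20.0)
lbp_codes = lbp_1d(ramp)
lgp_codes = lgp_1d(ramp)
hist = pattern_histogram(lbp_codes)
```

On a rising ramp only the four right-hand neighbors exceed the center, so every LBP code equals 16 + 32 + 64 + 128 = 240.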

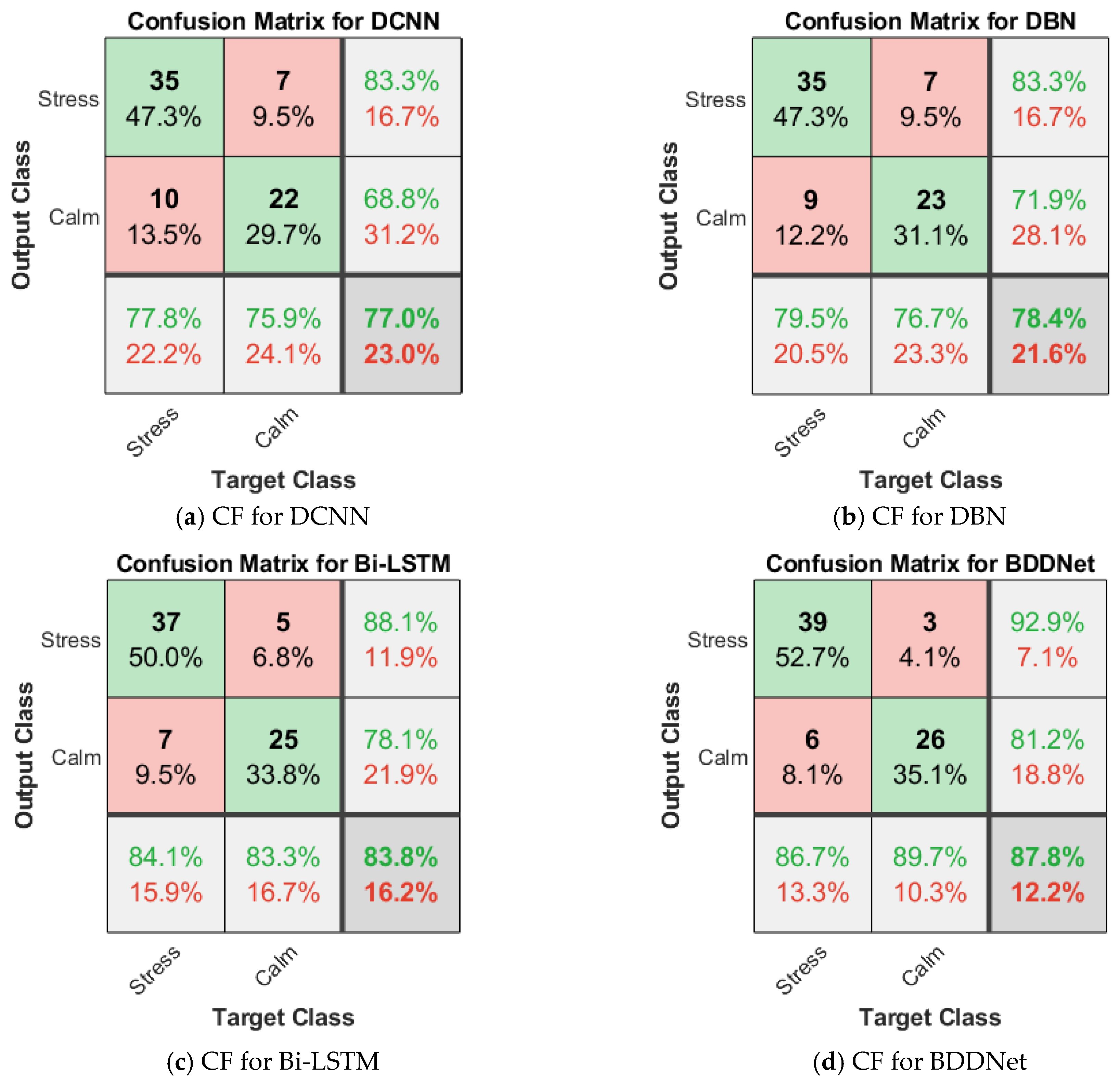

4. BDDNet for Stress Detection

The proposed BDD network combines Bi-LSTM, DCNN, and DBN to enhance feature representation. Bi-LSTM provides bidirectional long-term dependencies and a superior temporal representation of EEG features. The DCNN provides spatial detection and hierarchical abstract-level features that offer correlation and connectivity between the distinct local and global features of the EEG for stress detection. The DBN offers multilevel hierarchical features and representation of the complex patterns of the EEG signal for stress detection.

A. Deep Convolution Neural Network

CNNs have shown robustness in various signal processing applications. They learn the hierarchical and spatial features of EEG signals adaptively and automatically using back-propagation, and have been applied to pattern recognition for biomedical images, signals, audio, and time-series data [29,30,31,32]. The convolution layer is the chief building block of CNNs. It captures local correlations and connectivity in EEG features and yields hierarchical, abstract-level features that describe the variations in the EEG signal. In this layer, the input signal is convolved with multiple convolution kernels to provide deep features, as expressed in Equations (26) and (27).

Here, EEG denotes the original EEG signal, and the remaining terms denote the convolution filter and the convolution output.

Batch normalization (BN) converts the deep features into a normalized format to minimize outliers, which accelerates the training of the DCNN. The BN operation for a batch b is given by Equation (28) in terms of the mean and variance over batch b and the scale and offset parameters.

The ReLU layer enhances the non-linear characteristics of the features by replacing negative values with zero. This helps improve the classification accuracy and mitigate the vanishing gradient problem. The output of the ReLU layer is described by Equation (29).

The maximum pooling layer selects the maximum value from a local window. It chooses salient features and neglects non-salient or redundant features. Maximum pooling minimizes the feature dimensions and thus helps reduce the network’s trainable parameters.
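The four layer operations described above (convolution, batch normalization, ReLU, and maximum pooling) can be written as a forward pass in plain NumPy. This is a minimal sketch for intuition, not the DCNN used in the study: the kernel count, kernel length, pooling size, and random inputs are arbitrary assumptions, and the scale and offset of batch normalization are fixed scalars rather than learned parameters.

```python
import numpy as np

def conv1d(x, kernels, bias):
    """'Valid' sliding dot product of a 1-D signal with each kernel.

    Returns an array of shape (n_kernels, len(x) - kernel_len + 1).
    """
    return np.stack([np.correlate(x, k, mode="valid") + b
                     for k, b in zip(kernels, bias)])

def batch_norm(features, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalise (batch, channels, length) features per channel, then scale and shift."""
    mean = features.mean(axis=(0, 2), keepdims=True)
    var = features.var(axis=(0, 2), keepdims=True)
    return gamma * (features - mean) / np.sqrt(var + eps) + beta

def relu(x):
    """Replace negative values with zero."""
    return np.maximum(x, 0.0)

def max_pool1d(x, size=2):
    """Keep the maximum of each non-overlapping window along the last axis."""
    usable = x.shape[-1] - x.shape[-1] % size
    return x[..., :usable].reshape(*x.shape[:-1], -1, size).max(axis=-1)

# Usage: a batch of 8 random signals, 2 kernels of length 5.
rng = np.random.default_rng(0)
batch = rng.standard_normal((8, 64))
kernels = rng.standard_normal((2, 5))
bias = np.zeros(2)
feats = np.stack([conv1d(x, kernels, bias) for x in batch])   # (8, 2, 60)
normalized = batch_norm(feats)
out = max_pool1d(relu(normalized))                            # (8, 2, 30)
```

Pooling with a window of two halves the feature length, which is the dimensionality reduction the text attributes to the maximum pooling layer.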

The output of the last max-pooling layer is flattened into a 1-D vector. The flattened vector is provided to the fully connected layer (FCL), which links every neuron of one layer with all neurons of the next layer to enhance connectivity. The FCL learns the dependencies and relationships in EEG data. In the FCL, a linear transformation is applied to the input vector via the FCL weights, a bias is added, and a non-linear activation function is then applied, as given in Equation (30), in terms of the flattened input vector, the bias, and the non-linear activation function.

The softmax (SML) classifier computes the probability of each output class using Equation (31), and the class label with the highest probability is chosen as the output class, as given in Equation (32). The output-layer value used by the softmax is given by Equation (33).
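The fully connected layer and softmax decision described above reduce to a few matrix operations, sketched here. The layer sizes, the tanh activation, the two-class output, and the random weights are assumptions for illustration only.

```python
import numpy as np

def fully_connected(x, weights, bias, activation=np.tanh):
    """Linear transform of the flattened input followed by a non-linearity."""
    return activation(weights @ x + bias)

def softmax(z):
    """Numerically stable softmax: subtracting the maximum avoids overflow."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def predict(x, w_hidden, b_hidden, w_out, b_out):
    """Return class probabilities and the index of the most probable class."""
    hidden = fully_connected(x, w_hidden, b_hidden)
    probs = softmax(w_out @ hidden + b_out)
    return probs, int(np.argmax(probs))

# Usage with random weights: 16 flattened features, 8 hidden neurons, 2 classes.
rng = np.random.default_rng(0)
x = rng.standard_normal(16)
probs, label = predict(x,
                       rng.standard_normal((8, 16)), np.zeros(8),
                       rng.standard_normal((2, 8)), np.zeros(2))
```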

The mini-batch gradient descent algorithm (MBGDM), which combines batch gradient descent (BGD) and stochastic gradient descent (SGD) to achieve lower computational effort and robustness, is used for training the DL frameworks. MBGDM splits the training data into small batches to reduce the training time. The weights of the DL framework are modified using the error function described in Equation (34), where xi is the ith feature set of the training data. MBGDM uses an initial learning rate of μ = 0.001 to modify the weights, as described in Equation (35), which relates the modified weights to the older weights through the gradient of the error function.
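The weight update can be illustrated on a small linear model, where the gradient of a mean squared error is available in closed form. Only the learning rate of 0.001 comes from the text; the squared-error loss, the batch size of 32, the number of epochs, and the synthetic data are assumptions, and a deep network would obtain the gradient through back-propagation instead.

```python
import numpy as np

def minibatch_gd(X, y, lr=0.001, batch_size=32, epochs=100, seed=0):
    """Fit linear weights by mini-batch gradient descent on mean squared error."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    history = []
    for _ in range(epochs):
        order = rng.permutation(len(X))              # reshuffle every epoch
        for start in range(0, len(X), batch_size):
            idx = order[start:start + batch_size]
            err = X[idx] @ w - y[idx]
            grad = 2.0 * X[idx].T @ err / len(idx)   # gradient of the batch MSE
            w = w - lr * grad                        # new = old - lr * gradient
        history.append(float(np.mean((X @ w - y) ** 2)))
    return w, history

# Usage: recover a known weight vector from noiseless synthetic data.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 3))
y = X @ np.array([1.0, -2.0, 0.5])
w, history = minibatch_gd(X, y)
```

With such a small learning rate the loss falls steadily but slowly, which is why learning-rate choice is a natural target for hyper-parameter optimization.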

B. Bi-LSTM

Bi-LSTM provides a temporal representation of EEG features and long-range connectivity between the local and global features of the EEG. The proposed scheme uses two Bi-LSTM layers with 50 hidden units each to represent the features [33,34].

BiLSTM is an extension of the LSTM that captures forward and backward long-term dependencies in the EEG. Understanding the context of the sequence in both directions is essential for time-series stress analysis. The BiLSTM incorporates a forward LSTM that processes the input sequence from t = 1 to t = T to learn forward dependencies, and a backward LSTM that processes the sequence from t = T to t = 1 to learn backward dependencies. After flattening the PDCNN’s output, the BiLSTM determines the forward and backward states for the input sequence using Equations (36) and (37), respectively.

The output of the BiLSTM combines the backward and forward hidden states at time t, as given in Equation (38), which defines the final hidden state of the BiLSTM at time t as the concatenation of the backward and forward states.

The LSTM captures temporal structure and long-term dependencies in complex stress patterns. It regulates the flow of information inside the model through three gates: the forget gate, the input gate, and the output gate. The forget gate discards information stored in the cell state, as given in Equation (39). Here, $x_t$ represents the input state at time step $t$, $h_{t-1}$ represents the hidden state from the previous step, $W_f$ represents the weight matrix of the forget gate, $b_f$ represents the bias value, and $\sigma$ represents the sigmoid activation function.

The input gate $i_t$ and candidate values $\tilde{C}_t$ add new information to the cell state, as given in Equations (40) and (41), respectively. The cell state update $C_t$ combines the new information with the forget gate’s output, as given in Equation (42). The output gate $o_t$ produces the final output considering the hidden state $h_t$, as given in Equations (43) and (44), respectively.
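The gate computations can be made concrete with a minimal sketch of one LSTM time step in the standard textbook formulation, which is assumed here to match the gate definitions above. The parameter dictionary `p` and its key names are hypothetical, and each weight matrix acts on the concatenation of the previous hidden state and the current input.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step; p holds weights Wf, Wi, Wc, Wo and biases bf, bi, bc, bo."""
    z = h_prev + x_t                                          # [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(p["Wf"], p["bf"], z)]     # forget gate
    i = [sigmoid(a) for a in affine(p["Wi"], p["bi"], z)]     # input gate
    g = [math.tanh(a) for a in affine(p["Wc"], p["bc"], z)]   # candidate values
    # Cell state: keep part of the old state, add part of the candidate.
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    o = [sigmoid(a) for a in affine(p["Wo"], p["bo"], z)]     # output gate
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]          # new hidden state
    return h, c
```

With all weights and biases set to zero, every gate evaluates to 0.5 and the candidate to 0, so the cell state is simply halved, which gives a quick sanity check of the update.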

- C. Deep Belief Network

The DBN provides multilevel hierarchical features using multiple restricted Boltzmann machines (RBMs). RBMs act as hidden layers and learn the connectivity and correlation between the input and hidden layers. The RBM attempts to minimize the energy required to learn the underlying probability distribution of the EEG features, as given in Equation (45) [35,36].

The RBM computes the energy for every configuration of the input (visible) and hidden layers using Equation (45). Here, $v_i$ denotes a binary state of the input layer, $h_j$ signifies a binary state of the hidden layer, $a_i$ stands for bias values of the input layer, $b_j$ symbolizes bias values of the hidden layer, $n_v$ denotes the number of input (visible) units, $n_h$ stands for the number of hidden units, and $w_{ij}$ denotes weights linking the input and hidden layers. The RBM assigns probability $p(v)$ to the visible vector $v$ using Equation (46).

As there are no connections between the units within the hidden layer, the conditional distribution $p(h|v)$ is factorial and is given by Equation (47).

Similarly, there are no connections between the units within the visible layer, so the conditional distribution $p(v|h)$ is factorial and is given by Equation (48).

Here, $\sigma$ indicates the sigmoid function, which is defined in Equation (49).
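The RBM quantities described above can be sketched in a few lines, assuming the standard binary RBM formulation. The function names and the symbols `a`, `b`, and `W` (visible biases, hidden biases, and visible-to-hidden weights) are illustrative choices and not necessarily the notation of the original equations.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rbm_energy(v, h, a, b, W):
    """Energy of a joint configuration (v, h) of a binary RBM; W[i][j] links v_i to h_j."""
    visible_term = sum(ai * vi for ai, vi in zip(a, v))
    hidden_term = sum(bj * hj for bj, hj in zip(b, h))
    interaction = sum(v[i] * W[i][j] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + interaction)

def p_h_given_v(v, b, W):
    """Factorial conditional: P(h_j = 1 | v) for each hidden unit j."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]

def p_v_given_h(h, a, W):
    """Factorial conditional: P(v_i = 1 | h) for each visible unit i."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]
```

Because each conditional factorizes over units, all hidden units can be sampled in parallel given the visible layer and vice versa, which is what makes layer-wise RBM training tractable.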

The features of the last layer of the DCNN, BiLSTM, and DBN are concatenated and provided to the FC layer for connectivity improvement. Finally, a softmax classifier is used to classify the stress. The hyper-parameters of the BDDNet are tuned using the novel EOA.
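The fusion step can be illustrated with a minimal sketch that concatenates three feature vectors, applies one fully connected layer, and converts the logits to class probabilities with softmax. The function names, the single FC layer, and the two-class output are assumptions for illustration; the actual layer sizes of the BDDNet are not specified in this section.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_and_classify(f_dcnn, f_bilstm, f_dbn, W, b):
    """Concatenate branch features, apply an FC layer (W, b), return class probabilities."""
    fused = f_dcnn + f_bilstm + f_dbn          # list concatenation of the three branches
    logits = [sum(wk * xk for wk, xk in zip(row, fused)) + bk
              for row, bk in zip(W, b)]
    return softmax(logits)

# Hypothetical two-class example (e.g., calm vs. stress) with one feature per branch.
probs = fuse_and_classify([1.0], [0.5], [0.2],
                          W=[[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
                          b=[0.0, 0.0])
```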

5. EOA for Hyper-Parameter Optimization

Employee satisfaction is crucial in both the private and government sectors: it helps achieve the highest employee throughput, generate maximum profit, and fulfill employees’ personal needs. The novel EOA is inspired by the employee appraisal process in organizations, in which employees are motivated for good work, penalized or warned for mistakes, and trained or mentored to enhance their professional skills. Here, the EOA is used to tune the hyper-parameters of the BDDNet, such as learning rate, decay rate, and momentum, which are usually tuned manually, often leading to poor performance in hybrid DL frameworks.

In the EOA, an initial population of employees is created, with each employee’s variables representing the performance variables. Here, $N$ is analogous to the total number of possible solutions, and $n$ denotes the number of problem variables. The initial population of employees is set randomly in the range 0 to 1 using Equation (50), where $E_1, E_2, \ldots, E_N$ represent the employees of the organization.

The fitness of each employee is evaluated based on the training error of the BDDNet. As the reward policy, the employees providing better fitness in an iteration are passed directly to the next iteration, and the other employees are trained or mentored based on an exploration and exploitation strategy. During exploration, the employees are trained by providing external training using Equation (51), and during exploitation, the employees are optimized based on mentoring from the best employees of the organization using Equation (52).

Here, $E^{new}$ is the updated population, and $r_1$ and $r_2$ are arbitrary numbers in the range 0 to 1. The population is updated for 100 iterations, and the final optimized solution, which has the lowest error rate, is used for training the BDDNet.
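The overall loop can be sketched as follows. This is an illustrative skeleton only: the reward, exploration, and exploitation steps follow the description above, but the specific update rules, the elite fraction, and the 50/50 choice between exploration and exploitation are assumptions standing in for Equations (51) and (52), and a toy objective replaces the BDDNet training error.

```python
import random

def eoa_sketch(fitness, n_vars, n_employees=20, iterations=100,
               elite_frac=0.2, seed=0):
    """Population-based minimization in [0, 1]^n_vars (hypothetical EOA-style loop)."""
    rng = random.Random(seed)
    # Initial population: each employee is a random vector in [0, 1].
    pop = [[rng.random() for _ in range(n_vars)] for _ in range(n_employees)]
    n_elite = max(1, int(elite_frac * n_employees))
    for _ in range(iterations):
        pop.sort(key=fitness)                    # lower error = better fitness
        best = pop[0]
        new_pop = [list(e) for e in pop[:n_elite]]   # reward: elites pass unchanged
        for emp in pop[n_elite:]:
            r1, r2 = rng.random(), rng.random()
            if rng.random() < 0.5:
                # Exploration (assumed rule): move toward a random external target.
                cand = [x + r1 * (rng.random() - x) for x in emp]
            else:
                # Exploitation (assumed rule): mentoring pulls toward the best employee.
                cand = [x + r2 * (b - x) for x, b in zip(emp, best)]
            new_pop.append([min(1.0, max(0.0, c)) for c in cand])
        pop = new_pop
    return min(pop, key=fitness)

# Toy objective (assumption): squared distance from an arbitrary target setting.
toy_error = lambda e: sum((x - 0.3) ** 2 for x in e)
best_employee = eoa_sketch(toy_error, n_vars=3)
```

In a real tuning run, `fitness` would decode each employee into a learning rate, decay rate, and momentum, train the network, and return the training error, which makes each evaluation expensive and motivates the small population and fixed iteration budget.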

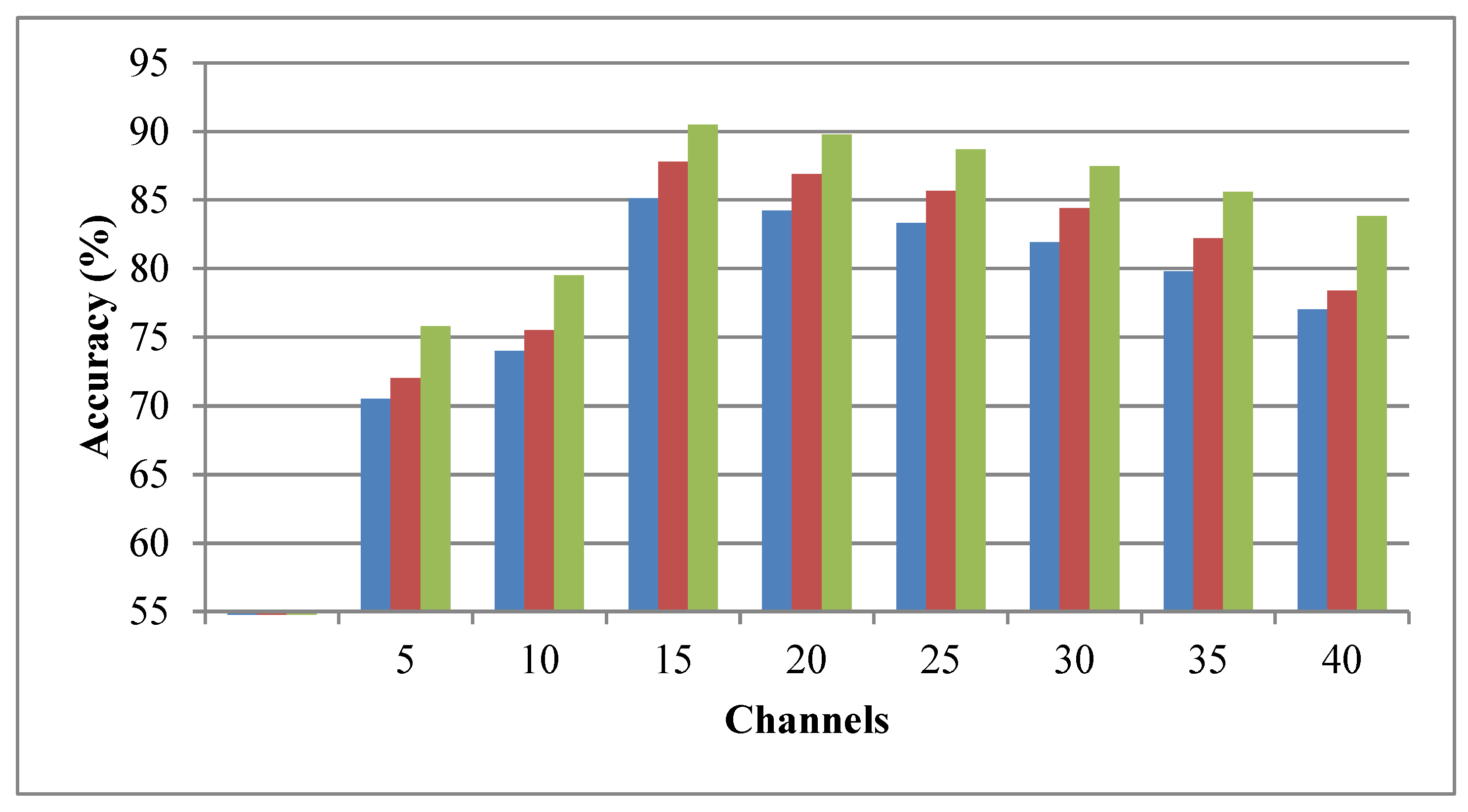

7. Conclusions

This article presents stress detection using a novel hybrid BDDNet that combines a DCNN, BiLSTM, and DBN. The combination improves feature distinctiveness, spectral–temporal depiction, long-term dependency modeling, and multilevel abstracted hierarchical features. The competitive improved CSA provides efficient channel selection and offers several benefits, including self-organization, simplicity, flexibility, robustness, and scalability. The novel EOA is used to optimize the hyper-parameters of the BDDNet, such as learning rate, decay rate, and momentum. The performance of the EOA-optimized BDDNet-ICSA was evaluated on the DEAP dataset, yielding recall, precision, F1-score, selectivity, NPV, and accuracy of 97.6%, 97.6%, 97.6%, 96.9%, 96.9%, and 97.3%, respectively, for the 15-channel EEG. The proposed BDDNet achieves an overall accuracy of 92.62% on the SEED dataset, which helps validate the generalization capability of the system. The complexity of the DL framework may limit deployment of the proposed system on resource-constrained standalone devices. As DL architectures are highly abstracted, the interpretability and explainability of the stress detection system are limited, which makes it harder to establish trust and reliability in real-time critical applications. The disparity between the calm and stress samples leads to a class imbalance problem. Future work should focus on improving the interpretability and explainability of the system. The effectiveness of the stress detection scheme can also be improved by generating synthetic samples using data augmentation to lessen the class imbalance issue, and by implementing an efficient feature selection scheme to minimize the computational complexity of the stress detection framework.