Predicting Cognitive Decline in Parkinson’s Disease Using Artificial Neural Networks: An Explainable AI Approach

Abstract

1. Introduction

1.1. Machine Learning Techniques in the Context of Parkinson’s Disease

1.2. Aims

2. Materials and Methods

2.1. Sample

2.2. Measures

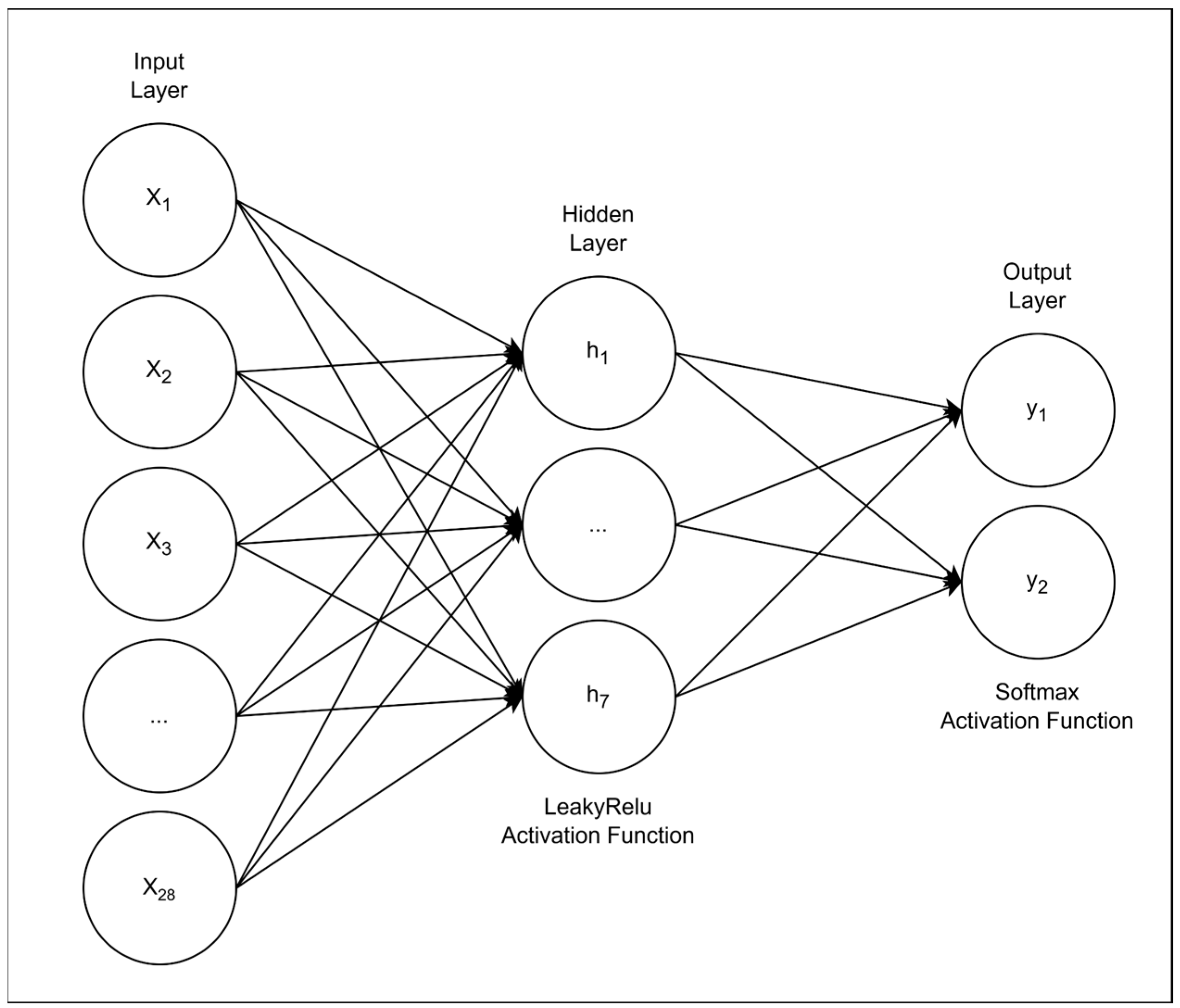

2.3. The Artificial Neural Network Model and Training

- α (alpha): weights assigned to each class to balance their influence;
- γ (gamma): a focusing term that amplifies the penalty for misclassifications.
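
The two parameters listed here match those of the focal loss, commonly written as FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the probability the model assigns to the true class. The following is a minimal pure-Python sketch of a binary version, not the authors' implementation. The function name `binary_focal_loss` and the default values `alpha=0.25` and `gamma=2.0` are illustrative assumptions; the values actually used in the study are not stated in this section.

```python
import math


def binary_focal_loss(y_true, p_pred, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss (illustrative sketch, hypothetical defaults).

    y_true: iterable of 0/1 labels.
    p_pred: iterable of predicted probabilities for class 1.
    alpha:  weight of class 1; class 0 receives 1 - alpha (assumed scheme).
    gamma:  focusing term; gamma = 0 reduces to weighted cross-entropy.
    """
    total = 0.0
    n = 0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)              # clip to avoid log(0)
        p_t = p if y == 1 else 1.0 - p               # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha   # class-balancing weight
        # (1 - p_t) ** gamma shrinks the loss of well-classified samples,
        # so hard or misclassified samples dominate the gradient.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
        n += 1
    return total / n


# A confident correct prediction is penalised far less than a confident error.
easy = binary_focal_loss([1], [0.9])
hard = binary_focal_loss([1], [0.1])
```

With `gamma=0` the modulating factor equals 1 and the expression falls back to class-weighted cross-entropy, which makes the role of γ easy to isolate when tuning.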

2.4. Model Explainability

2.4.1. SHAP Analysis

2.4.2. Group-Wise Feature Masking

2.4.3. Brute-Force Combinatorial Masking

3. Results

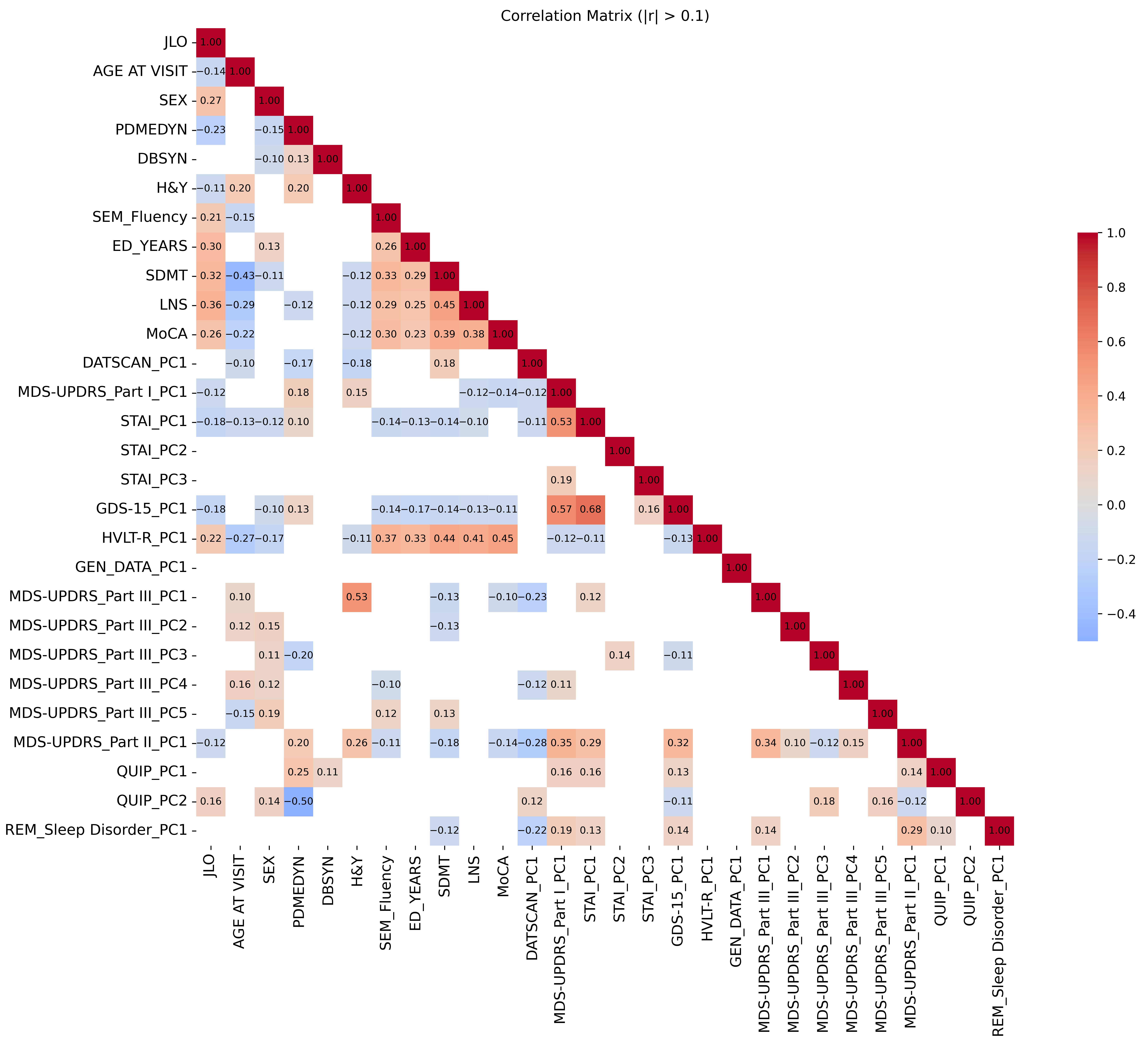

3.1. Descriptive Analysis and Data Preprocessing

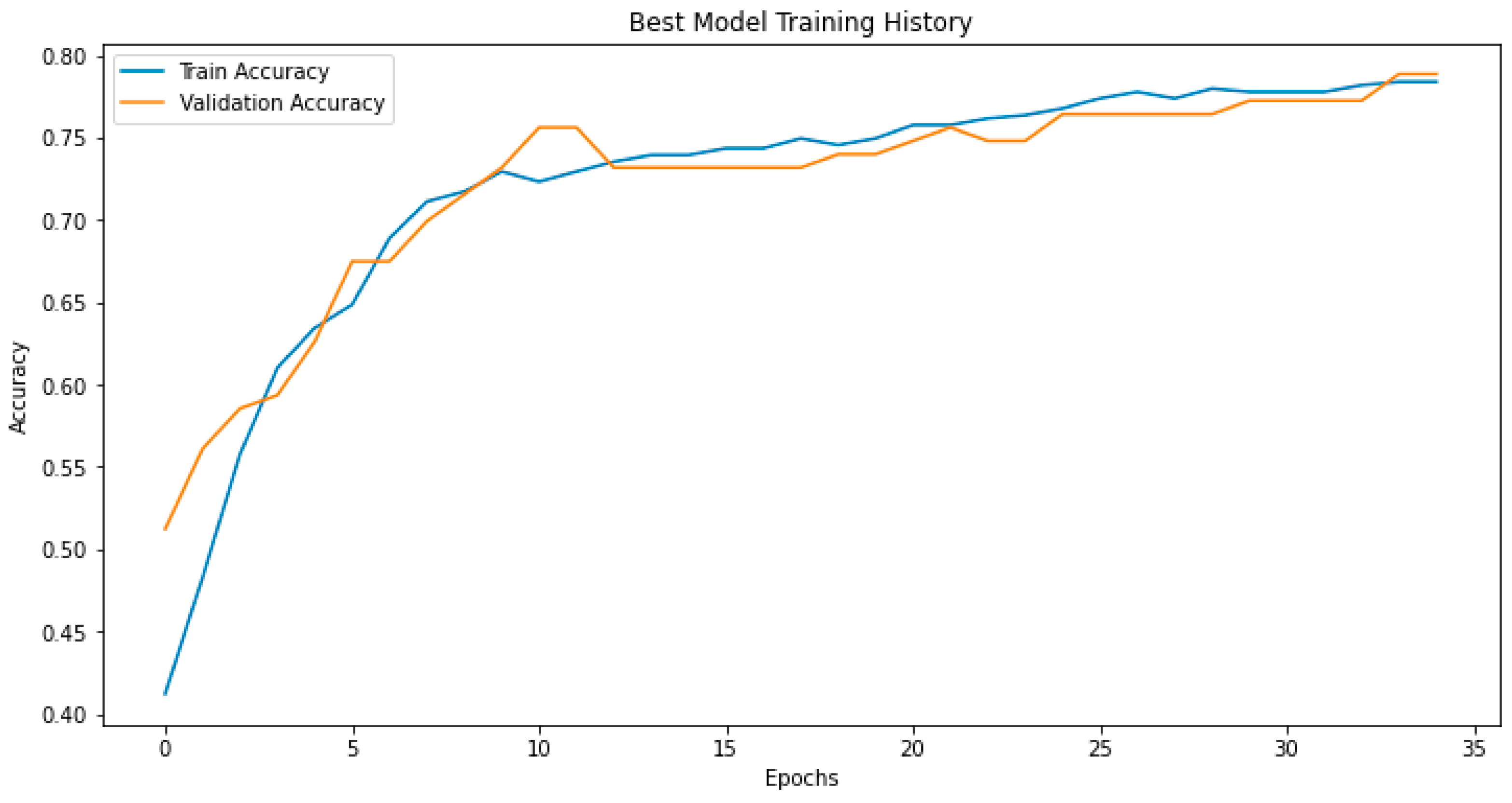

3.2. Model Performance

3.3. Model Explainability

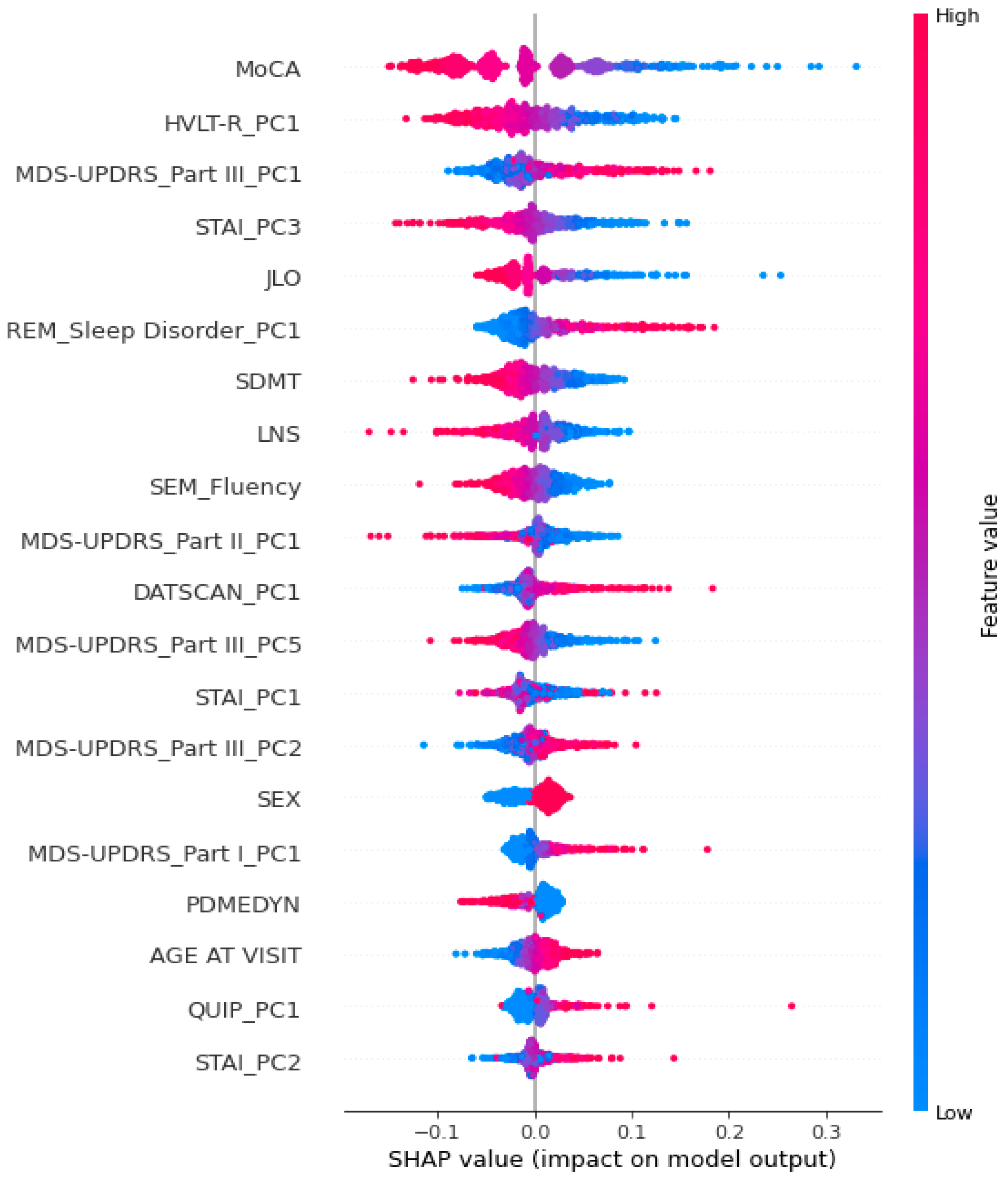

3.3.1. SHAP Analysis

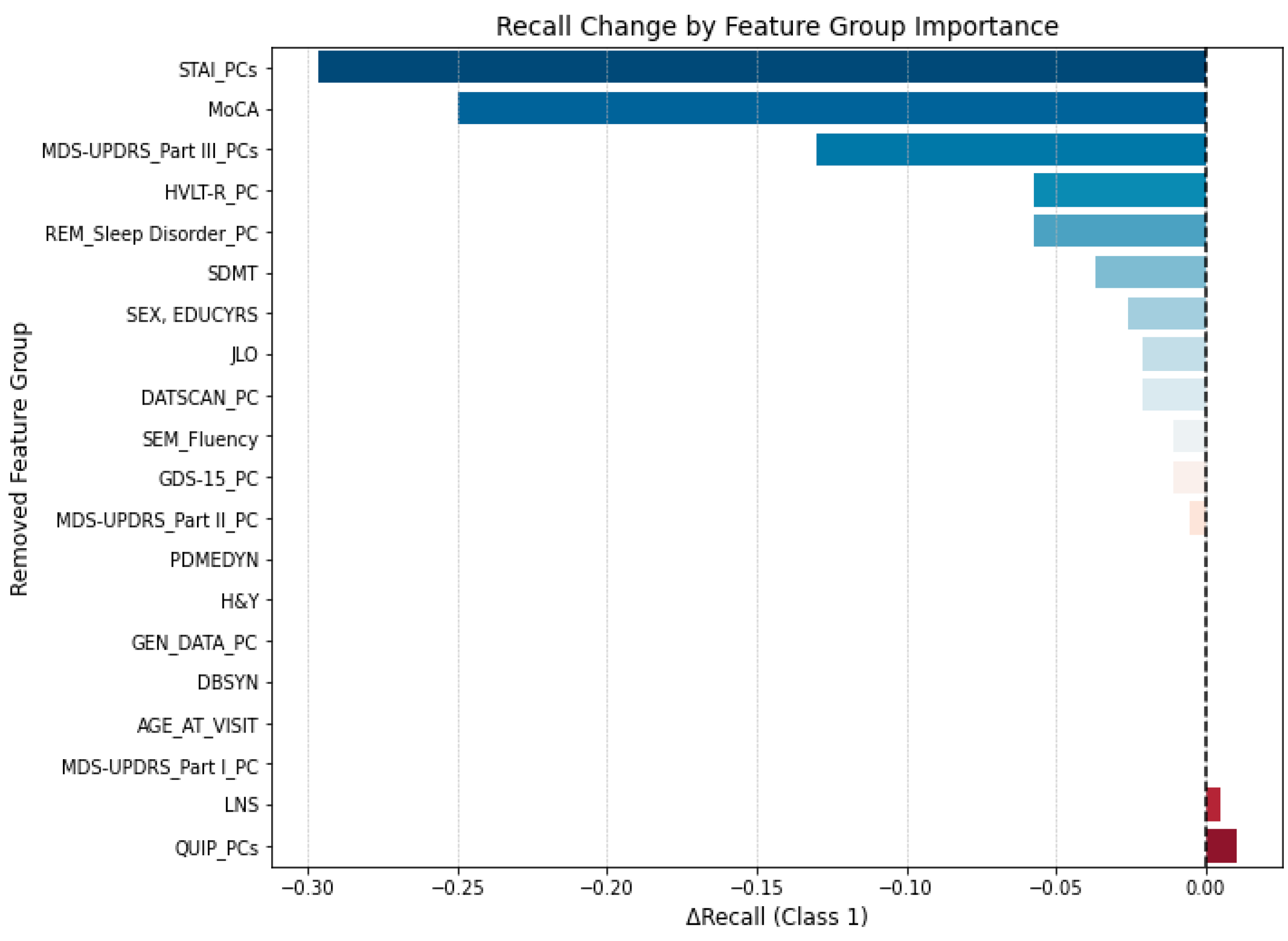

3.3.2. Group-Wise Feature Masking

3.3.3. Brute-Force Feature Elimination Procedure

4. Discussion

4.1. A Focus on the Crucial Variables Identified by the Model

4.2. Further Lines of Research to Deepen Cognitive Functioning in Parkinson’s Disease

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| APOE | Apolipoprotein E |
| DAT | Dopamine transporter |
| fMRI | Functional magnetic resonance imaging |
| GBA | Glucocerebrosidase |
| GDS-15 | Geriatric Depression Scale |
| H&Y | Hoehn and Yahr |
| HVLT-R | Hopkins Verbal Learning Test—Revised |
| JLO | Benton Judgment of Line Orientation |
| LNS | Letter Number Sequencing |
| MDS-UPDRS | Movement Disorder Society Unified Parkinson’s Disease Rating Scale |
| MoCA | Montreal Cognitive Assessment |
| MRI | Magnetic resonance imaging |
| PC | Principal component |
| PCA | Principal component analysis |
| PD | Parkinson’s disease |
| PET | Positron emission tomography |
| SDMT | Symbol-Digit Modalities Test |
| SHAP | Shapley Additive Explanations |
| SNCA | α-synuclein |
| STAI | State-Trait Anxiety Inventory |
| QUIP | Questionnaire for Impulsive-Compulsive Disorders in Parkinson’s Disease |

| Variable | Value |
|---|---|
| Sex: | |
| Men | 374 (60.5%) |
| Women | 244 (39.5%) |
| Age at visit | M = 61.84, SD = 9.65 |
| Years of education | M = 15.74, SD = 3.55 |
| Pharmacological treatment: | |
| On regular treatment | 193 (31.2%) |
| Without treatment | 425 (68.8%) |
| MoCA score (baseline) | M = 26.92, SD = 2.51 |
| MoCA score (3-year follow-up) | M = 26.41, SD = 3.29 |
| MDS-UPDRS Part III | M = 20.35, SD = 9.23 |
| Hoehn & Yahr staging: | |
| Stage 1 | 232 (37.5%) |
| Stage 2 | 377 (61.0%) |
| Stage 3 | 9 (1.5%) |

| Variable Groups | Original Number of Variables | Number of Components |
|---|---|---|
| Socio-demographic data: | | |
| SEX | 1 | - |
| ED_YEARS | 1 | - |
| AGE AT VISIT | 1 | - |
| Clinical data: | | |
| PDMEDYN | 1 | - |
| DBSYN | 1 | - |
| MDS-UPDRS_Part I | 6 | 1 |
| MDS-UPDRS_Part II | 13 | 1 |
| MDS-UPDRS_Part III | 33 | 5 |
| H&Y | 1 | - |
| Cognitive data: | | |
| MoCA | 1 | - |
| JLO | 1 | - |
| HVLT-R | 7 | 1 |
| SDMT | 1 | - |
| LNS | 1 | - |
| SEM_Fluency | 1 | - |
| Neuropsychiatric and affective data: | | |
| GDS-15 | 15 | 1 |
| STAI | 40 | 3 |
| QUIP | 13 | 2 |
| REM_Sleep Disorder | 12 | 1 |
| Neuroimaging data: | | |
| DATSCAN | 6 | 1 |
| Genetic data: | | |
| GEN_DATA | 10 | 1 |

| Class | Count |
|---|---|
| Cognitively Intact | 371 |
| Stable Impaired | 92 |
| Conversion to Impaired | 100 |
| Reversion to Cognitively Intact | 55 |

| Group | Count | Precision | Recall | F1 | Accuracy | AUC |
|---|---|---|---|---|---|---|
| Cognitively Intact | 426 | 0.94 | 0.72 | 0.82 | - | - |
| Cognitively Impaired | 192 | 0.59 | 0.91 | 0.72 | - | - |
| Overall | 618 | 0.78 | 0.81 | 0.79 | 0.78 | 0.91 |

| Class | Count | Misclassified |
|---|---|---|
| Cognitively Intact | 371 | 84 |
| Stable Impaired | 92 | 3 |
| Conversion to Impaired | 100 | 15 |
| Reversion to Cognitively Intact | 55 | 36 |
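
The per-class misclassification counts can be tied back to the binary performance table with a short calculation. The sketch below assumes that the "Cognitively Intact" and "Reversion to Cognitively Intact" classes form the intact group at follow-up and that "Stable Impaired" and "Conversion to Impaired" form the impaired group; this mapping is inferred from the group counts (371 + 55 = 426 and 92 + 100 = 192) rather than stated in this section. The names `per_class`, `binary_group`, and `binary_metrics` are hypothetical.

```python
# (count, misclassified) per class, copied from the misclassification table.
per_class = {
    "Cognitively Intact": (371, 84),
    "Stable Impaired": (92, 3),
    "Conversion to Impaired": (100, 15),
    "Reversion to Cognitively Intact": (55, 36),
}

# Assumed mapping of the four trajectories onto the binary follow-up outcome.
binary_group = {
    "Cognitively Intact": "intact",
    "Reversion to Cognitively Intact": "intact",
    "Stable Impaired": "impaired",
    "Conversion to Impaired": "impaired",
}


def binary_metrics(per_class, binary_group):
    """Return per-group precision, recall, F1 and the overall accuracy."""
    n = {"intact": 0, "impaired": 0}      # true group sizes
    wrong = {"intact": 0, "impaired": 0}  # cases predicted as the other group
    for name, (count, missed) in per_class.items():
        group = binary_group[name]
        n[group] += count
        wrong[group] += missed

    metrics = {}
    for group, other in (("intact", "impaired"), ("impaired", "intact")):
        tp = n[group] - wrong[group]   # correctly predicted as this group
        fp = wrong[other]              # other group predicted as this group
        fn = wrong[group]
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall)
        metrics[group] = {"precision": precision, "recall": recall, "f1": f1}

    total = sum(n.values())
    accuracy = (total - sum(wrong.values())) / total
    return metrics, accuracy


metrics, accuracy = binary_metrics(per_class, binary_group)
for group, values in metrics.items():
    print(group, {k: round(v, 2) for k, v in values.items()})
print("accuracy", round(accuracy, 2))
```

Under this assumed mapping the pooled counts give the same per-group precision, recall, and F1 as the performance table, and the same overall accuracy of 0.78. The "Overall" precision, recall, and F1 are not recomputed here because the averaging scheme behind them is not stated in this section.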


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Colautti, L.; Casella, M.; Robba, M.; Marocco, D.; Ponticorvo, M.; Iannello, P.; Antonietti, A.; Marra, C.; for the CPP Integrated Parkinson’s Database. Predicting Cognitive Decline in Parkinson’s Disease Using Artificial Neural Networks: An Explainable AI Approach. Brain Sci. 2025, 15, 782. https://doi.org/10.3390/brainsci15080782
