Evolutionary Trajectories of Consciousness: From Biological Foundations to Technological Horizons

Abstract

1. Introduction

- Defining “Consciousness”: Achieving a precise definition of consciousness, which may only be fully clarified with the development of a universally accepted theory—a goal that remains unmet.

- Identifying Neural Correlates: Pinpointing the neural correlates of consciousness, including the minimal neural mechanisms sufficient for conscious perception and behavior.

- Determining Levels of Consciousness: Assessing various levels of consciousness, encompassing medical aspects of disorders in humans, potential manifestations in animals, and explorations in computer science and artificial intelligence.

- Exploring Cognitive Relationships: Investigating the relationship between consciousness and various cognitive brain functions, including attention, perception, thinking, decision-making, and behavior.

- Developing Distinct Criteria: Establishing criteria for distinct properties of consciousness applicable to psychiatry, neurophysiology, psychology, sociology, and other biomedical and social sciences.

- Assessing Subjecthood and Qualia: Evaluating the degree of individual subjecthood in information processing and qualia (subjective sensory experiences) that shape perception of reality and subsequent behavior.

- Defining Consciousness and the Subconscious: Elucidating the relationship between consciousness and the subconscious, including the influence of acquired and instinctive behavioral programs, innate motivations, and their sublimations on conscious experience.

- Natural Evolutionary Stage: The emergence of consciousness is a natural stage in the evolution of animals endowed with nervous systems, aimed at ensuring their survival in changing environmental conditions.

- Subjectivity as Key Factor: The key systemic factor of consciousness is subjectivity, which involves the perception of the value of one’s existence and needs, and the distinction of the subjective “self” from the surrounding environment.

- Subjective “Self” as Mediator: The subjective “self” acts as a “censor” for incoming conscious information and as a “conductor” for the implementation of complex behavioral programs aimed at satisfying the subject’s perceived needs.

- Central Position of the “Self”: The subjective “self” occupies a central and distinct position in the subject’s worldview.

2. Consciousness as a Lawful Product of the Evolution of Information Systems

2.1. Definition of Consciousness

2.2. Specification of Definitions: System, Information, and Information System

2.2.1. Systematicity

2.2.2. Information and Information Systems

- Interaction and Adaptation: Subsystems exchange signals—chemical, electrical, or mechanical—to coordinate growth, metabolism, and behavior. This exchange enables homeostatic regulation and responsive adaptation when conditions change.

- Evolutionary Progression: Through mutation, selection, and developmental programs, biological ISs undergo gradual, irreversible transformations that enhance information processing capacity.
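The signal exchange and homeostatic regulation described above can be illustrated with a toy negative-feedback loop. This is only a minimal sketch and not a model taken from this article: the function name `regulate`, the proportional `gain`, the `set_point`, and the `disturbance` callback are all illustrative assumptions, and real biological regulation is far more complex.

```python
def regulate(value, set_point, gain=0.5, steps=20, disturbance=None):
    """Toy homeostatic loop (illustrative assumption, not the authors' model).

    Each step, a sensing subsystem reports the deviation from the set point
    and an effector subsystem applies a proportional correction.
    """
    history = [value]
    for step in range(steps):
        if disturbance is not None:
            value += disturbance(step)  # change in environmental conditions
        error = set_point - value       # signal sent by the sensing subsystem
        value += gain * error           # correction applied by the effector
        history.append(value)
    return history


if __name__ == "__main__":
    # Hypothetical numbers: start below the set point, then apply a single
    # downward perturbation at step 5 and watch the loop recover.
    trace = regulate(30.0, 37.0, disturbance=lambda s: -4.0 if s == 5 else 0.0)
    print([round(v, 2) for v in trace])
```

With a gain between 0 and 1, each step shrinks the remaining deviation by a fixed fraction, so the regulated value returns toward the set point after a perturbation. That is the sense in which signal exchange supports responsive adaptation in this sketch.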

3. Subjectivity as the Organizing Factor of Consciousness and Thought

3.1. Essential Conditions for the Emergence of Consciousness

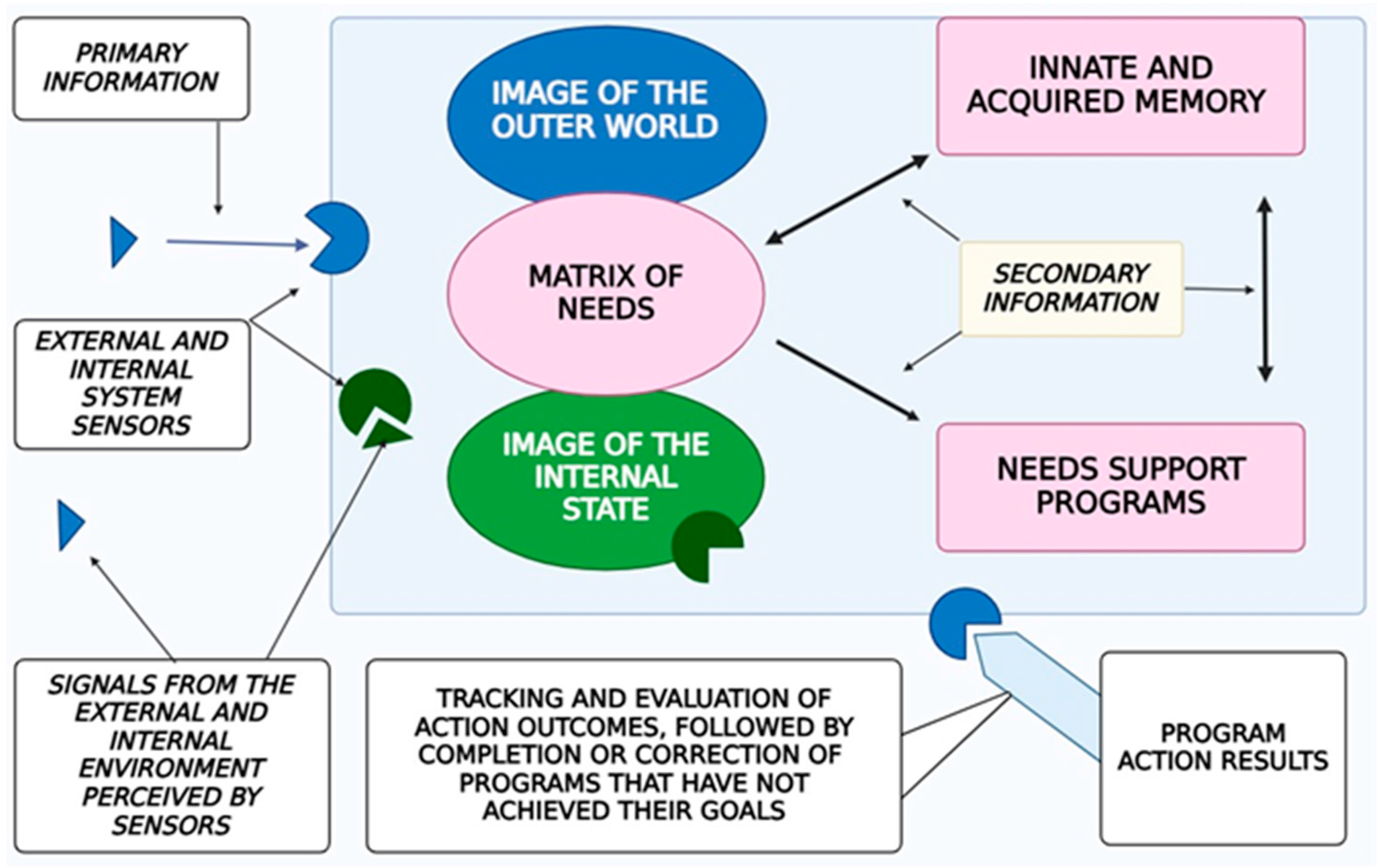

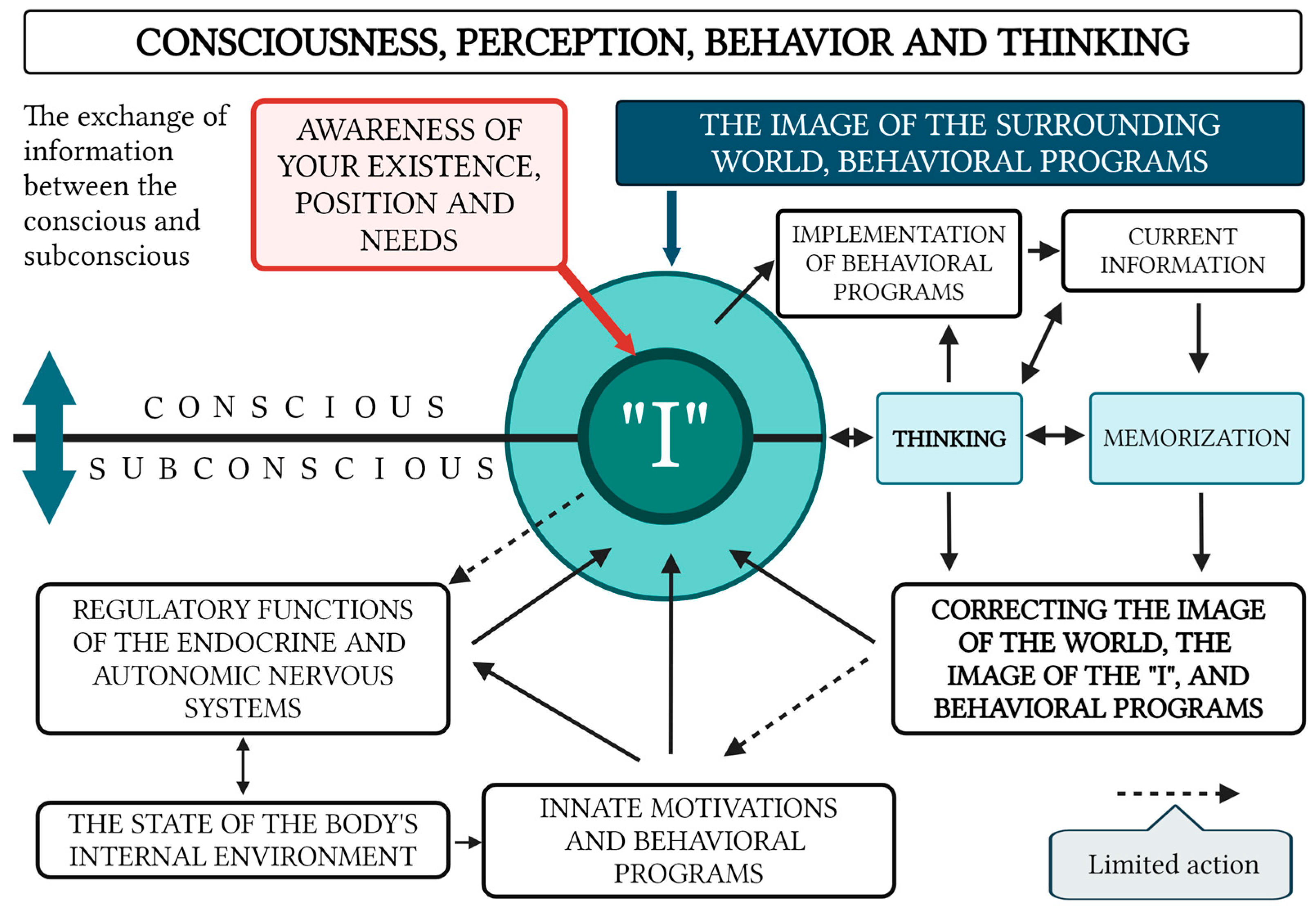

3.2. Fundamental Model of Human Consciousness

- The Subjective “Self”: This functions as the central organizing factor of consciousness.

- A Holistic Image of the External World and the Subject’s Own Body: Consciousness incorporates a comprehensive representation of the surrounding environment and the current state of the organism. However, this image of the surrounding world is not simply a reflection in consciousness (a “photograph” of the reality surrounding the subject); it is a complex, dynamically changing system in which cause-and-effect relationships between individual phenomena are constantly being established.

- Thought Processes: These enable the formulation of behavioral programs that are aligned with conscious needs and goals.

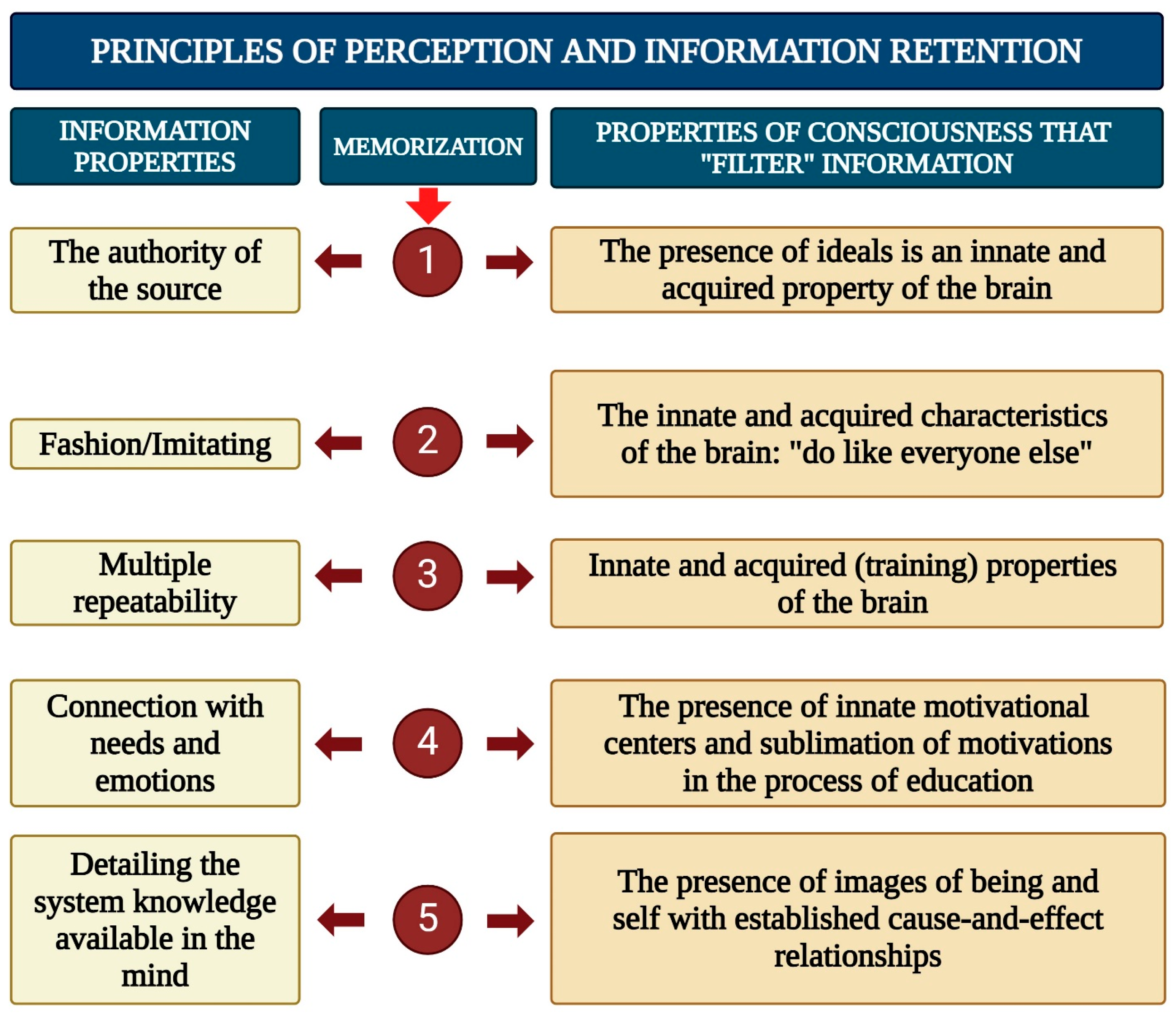

- Filtering and Organizing Sensory Information: Consciousness must systematize and filter the sensory input it receives in order to make it usable for higher-order processing.

- Integration of Information Through Thought: Thought processes integrate incoming data to form a cohesive understanding that drives behavior.

- Influence of Internal Bodily Changes: Consciousness is influenced by hormonal fluctuations, the autonomic nervous system, and motivational centers, although it has limited direct control over these processes (Figure 2).

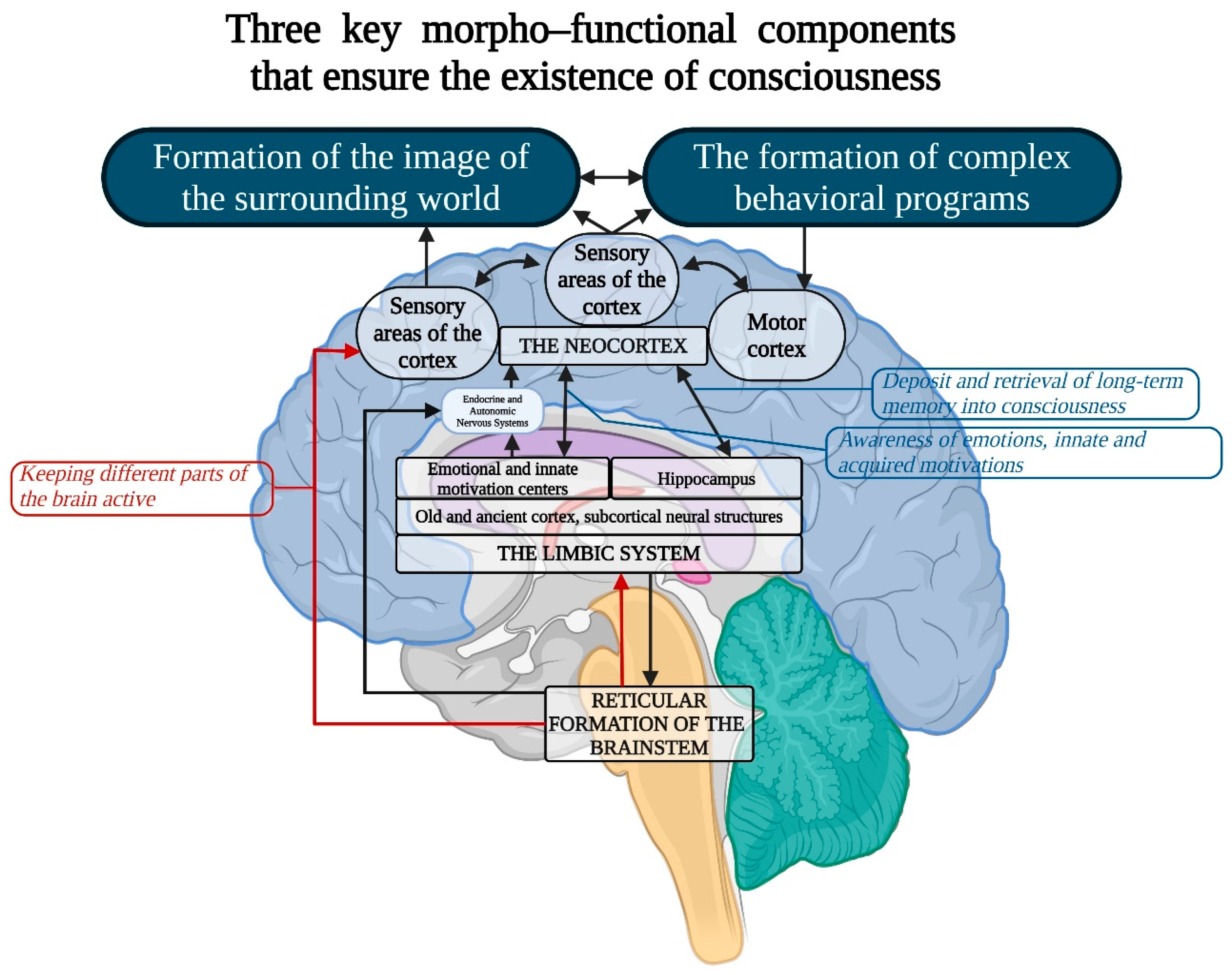

3.3. Morphofunctional Foundations of Consciousness

- Neocortex: Throughout mammalian evolution, distinct cortical regions have expanded at varying rates, leading to a complex mosaic of specialized areas within the neocortex [123]. A prime example is the prefrontal cortex (PFC), crucial for planning complex cognitive behaviors and showing significant evolutionary divergence across species. In rodents, the PFC is predominantly agranular, lacking the prominent layer 4 (granular layer) that defines the granular PFC in primates [124,125]. In humans, the granular PFC expanded further, not only increasing in size but also enhancing connectivity with other association areas. This expansion facilitated abilities such as abstract reasoning, language, and sophisticated social behaviors [126].


- Von Economo Neurons (VENs): Although consciousness is an integrative brain function, certain neuronal mechanisms may hold special significance in its realization. One example is von Economo neurons (VENs), large, spindle-shaped neurons of the fifth cortical layer located in the anterior insular cortex and anterior cingulate cortex (Figure 5), which play critical roles in self-awareness and the social facets of consciousness. These neurons are found primarily in humans but are also present in great apes and highly intelligent marine mammals [133,134]. Researchers agree that VENs are selectively vulnerable in certain pathologies in which social behavior and emotional capacities are impaired, including neuropsychiatric disorders such as schizophrenia [135,136]. It is hypothesized that VENs help direct neural signals from deep cortical areas to more distant parts of the brain, integrating the neocortex with older cortical and subcortical structures of the limbic system [137]. In humans, strong emotions activate the anterior cingulate cortex, which relays signals from the amygdala (the primary center for emotional processing) to the frontal cortex. This system may function like a lens, focusing complex neural signals to facilitate emotional and cognitive processing.


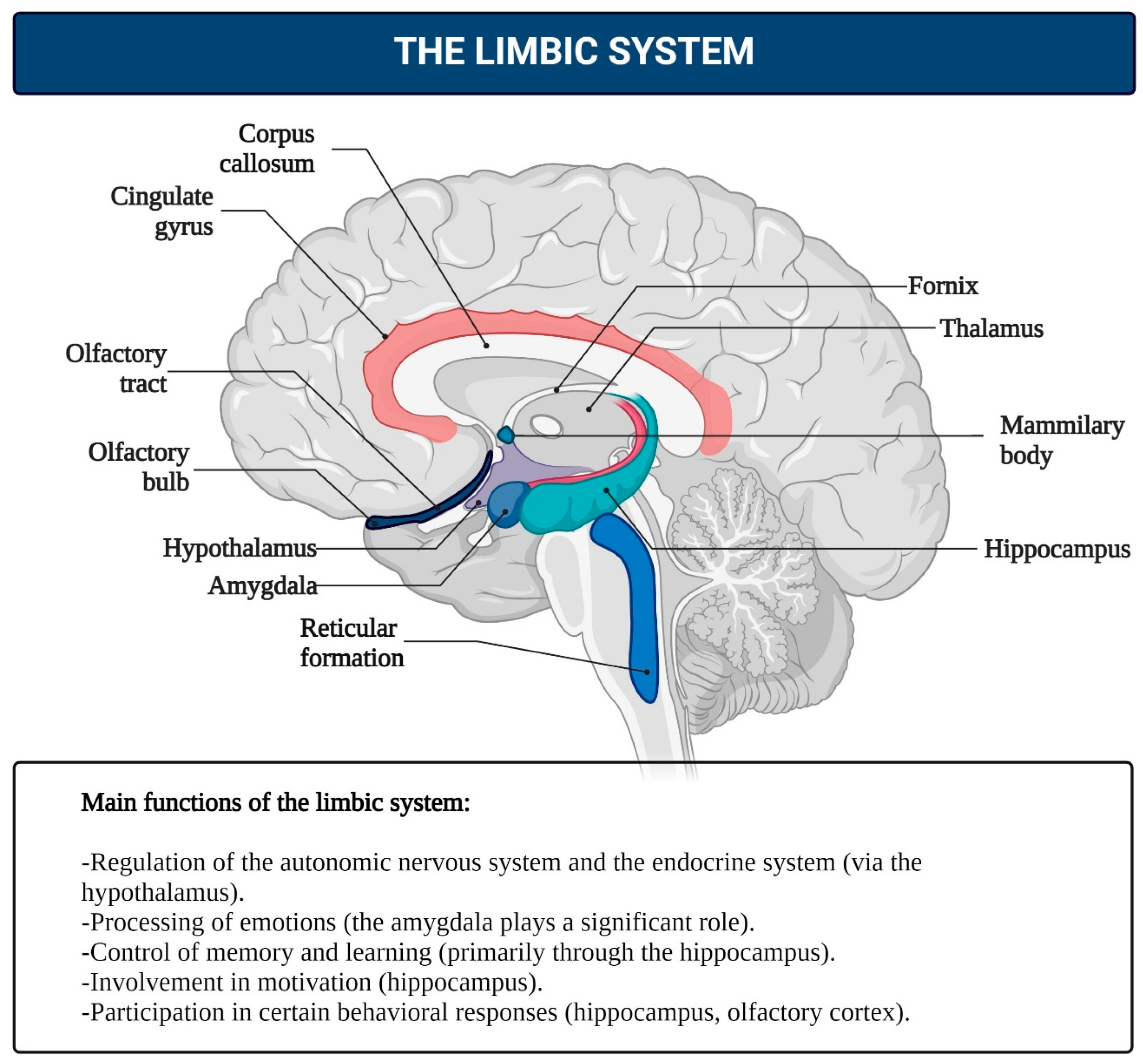

- Limbic System: This system functions as the center for emotions, as well as the organization of behavior, memory, sleep and wakefulness, stress response control, attention, and innate behavioral programs, including the execution of sexual instincts, feeding, aggression, and parental care [126,137,138,139,140]. The limbic system includes regions of the evolutionarily older cortex and subcortical (basal) nuclei, such as the olfactory bulb, anterior perforated substance, cingulate gyrus, parahippocampal gyrus, dentate gyrus, hippocampus, amygdala, hypothalamus, mammillary bodies, and reticular formation (Figure 5). The main functions of the limbic system are to regulate the autonomic nervous and endocrine systems (via the hypothalamus), process emotions (with a significant role played by the amygdala), and manage memory and learning (primarily through the hippocampus). It is also involved in motivation and some behavioral responses, including responses that involve the olfactory cortex in humans. It is hypothesized that in the early stages of animal evolution, complex reflex mechanisms in the limbic compartments of the basal brain, which serve metabolic and other homeostatic processes, along with elements of the reticular activating system, contributed to the emergence of consciousness as a primordial emotion signaling that the organism’s existence was under threat [141,142,143,144,145,146,147,148,149,150,151,152].


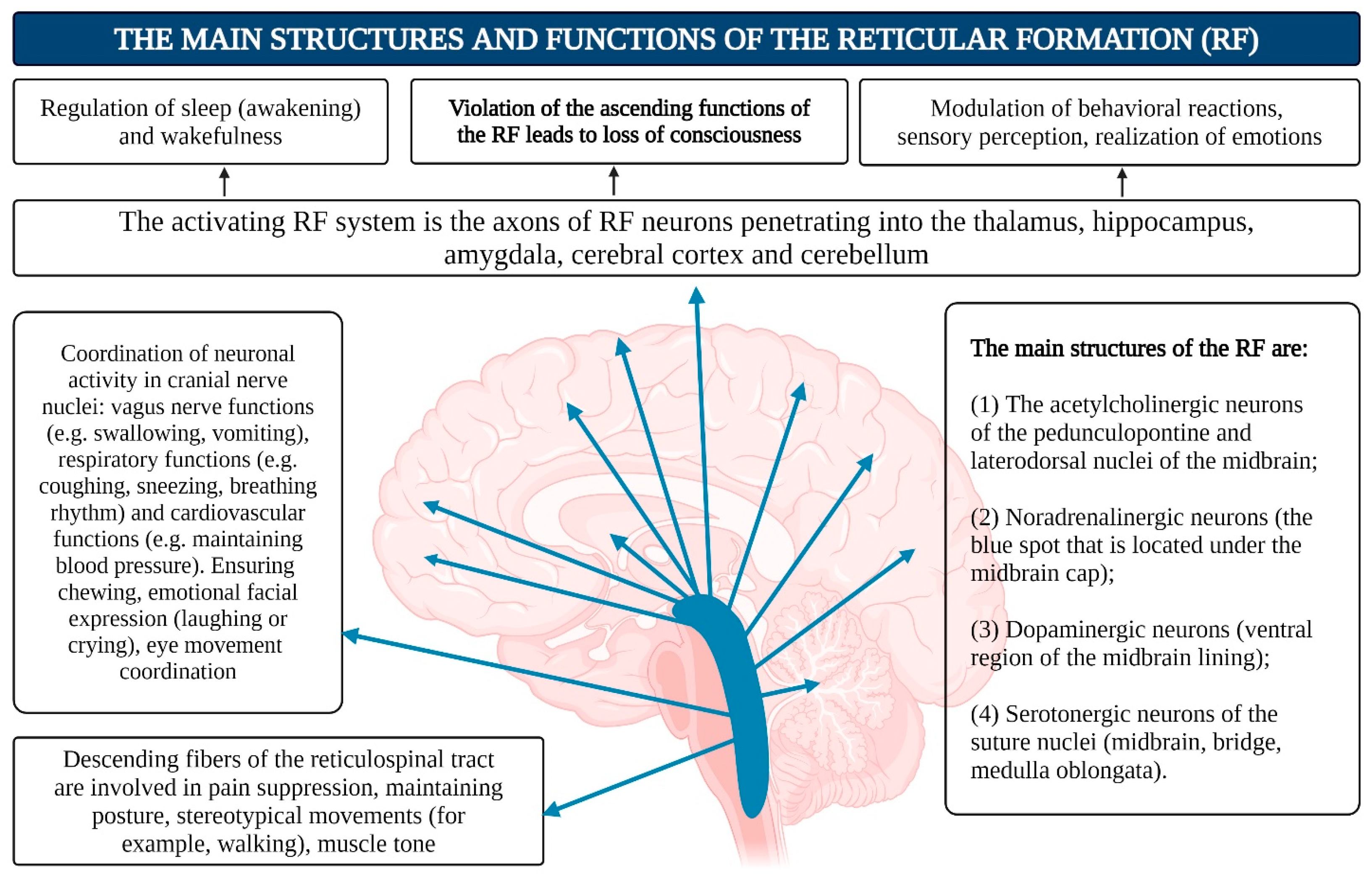

- Reticular Formation (RF): The reticular formation consists of nuclei and network structures that extend along the entire axis of the brainstem. The midbrain RF is closely connected with the limbic system, and some authors consider them to form a unified limbic–reticular complex. Projections from the RF (dendrites and axons) extend into most other brain areas, particularly the neocortex and limbic system, maintaining their active state. The RF is also responsible for regulating sleep–wake cycles, brain rhythms, vital centers (such as those controlling respiration and blood pressure), and cranial nerve nuclei in the brainstem. Damage to, or hyperactivation of, the RF results in loss of consciousness [142] (Figure 6).


- The thalamus.

3.4. Decortication Experiments in Rats

4. Evolutionary Aspects of Consciousness

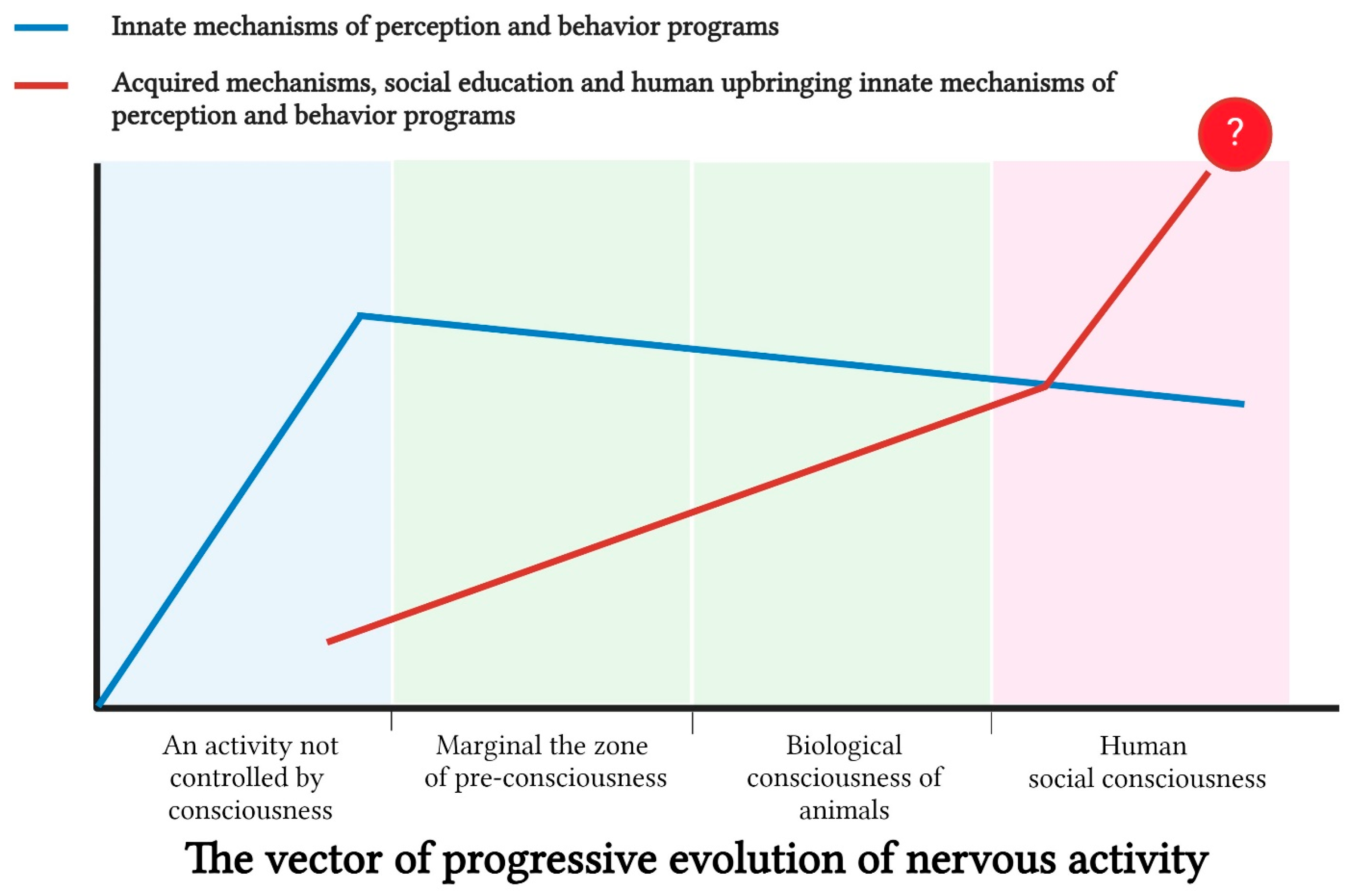

- Preconsciousness: This category refers to instances where the existence of consciousness as a unified phenomenon in certain animal species cannot be confirmed or denied with sufficient certainty. In humans, manifestations of preconsciousness are characterized by vague, subconscious memories that do not form into clear recollections [161]. In animals, an attribution of preconsciousness may reflect either insufficient scientific data to confirm the presence of fully developed subjective consciousness or the marginal state of their higher nervous activity.

- Biological (animal) consciousness: This form of consciousness is defined by the presence of a sufficient number of identifiable traits that allow its verification. Achieving absolute consensus among all specialists on this issue is an inherently unattainable goal.

- Culturally mediated or socialized human consciousness: This form of consciousness is shaped and influenced by the cultural environment of society.

4.1. The Origins of Consciousness or Preconsciousness in Animals

4.1.1. Some General Characteristics of Nervous System Evolution

4.1.2. Insects

4.1.3. Cephalopods, Octopuses

- Small Neurons: Cephalopods possess small neurons (3–5 μm in diameter), which allow for a higher density of neurons in the brain. This increased density may enhance the brain’s ability to process complex behavioral responses and cognitive abilities [212].

- Limited Somatotopy: Generally, cephalopods lack a somatotopic representation of their body in the brain, but recent data indicate somatotopy in the basal lobe of squid brains [233].

- Efferent Modulation of Sensory Receptors: The function of efferent modulation of sensory receptors allows cephalopods to modulate sensory information, which is closely related to attention and selective perception, contributing to the accumulation of acquired experience [234].

- Complex Neural Circuit Architecture: The intricate architecture of cephalopod neural circuits, particularly in the dorsal basal and subvertical lobes of the brain, is thought by some researchers to mirror certain cortical processes found in mammals, responsible for learning and memory, and may be functionally similar to the mammalian hippocampus [159,235,236].
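The link between small cell size and higher neuron density noted in the list above can be made concrete with a rough scaling estimate. The sketch below is an idealization rather than a measurement. It assumes spherical cell bodies and an identical packing fraction, so that the number of cells per unit volume scales with the inverse cube of the diameter. The function name `relative_packing_density` and the 20 μm comparison diameter are hypothetical choices for illustration only. Real tissue also contains neurites, glia, and vasculature, which this ignores.

```python
def relative_packing_density(d_small_um: float, d_large_um: float) -> float:
    """Ratio of cell bodies per unit volume for two soma diameters.

    Idealized assumption: spherical somata with the same packing fraction,
    so density scales as 1 / diameter**3.
    """
    if d_small_um <= 0 or d_large_um <= 0:
        raise ValueError("diameters must be positive")
    return (d_large_um / d_small_um) ** 3


if __name__ == "__main__":
    # 4 um lies inside the 3-5 um range quoted in the text; 20 um is a
    # hypothetical comparison value, not a figure taken from the article.
    print(relative_packing_density(4.0, 20.0))
```

Under these assumptions, even a modest reduction in soma diameter translates into a much larger gain in how many cell bodies fit into the same volume, which is the intuition behind the density argument.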

4.1.4. Vertebrates

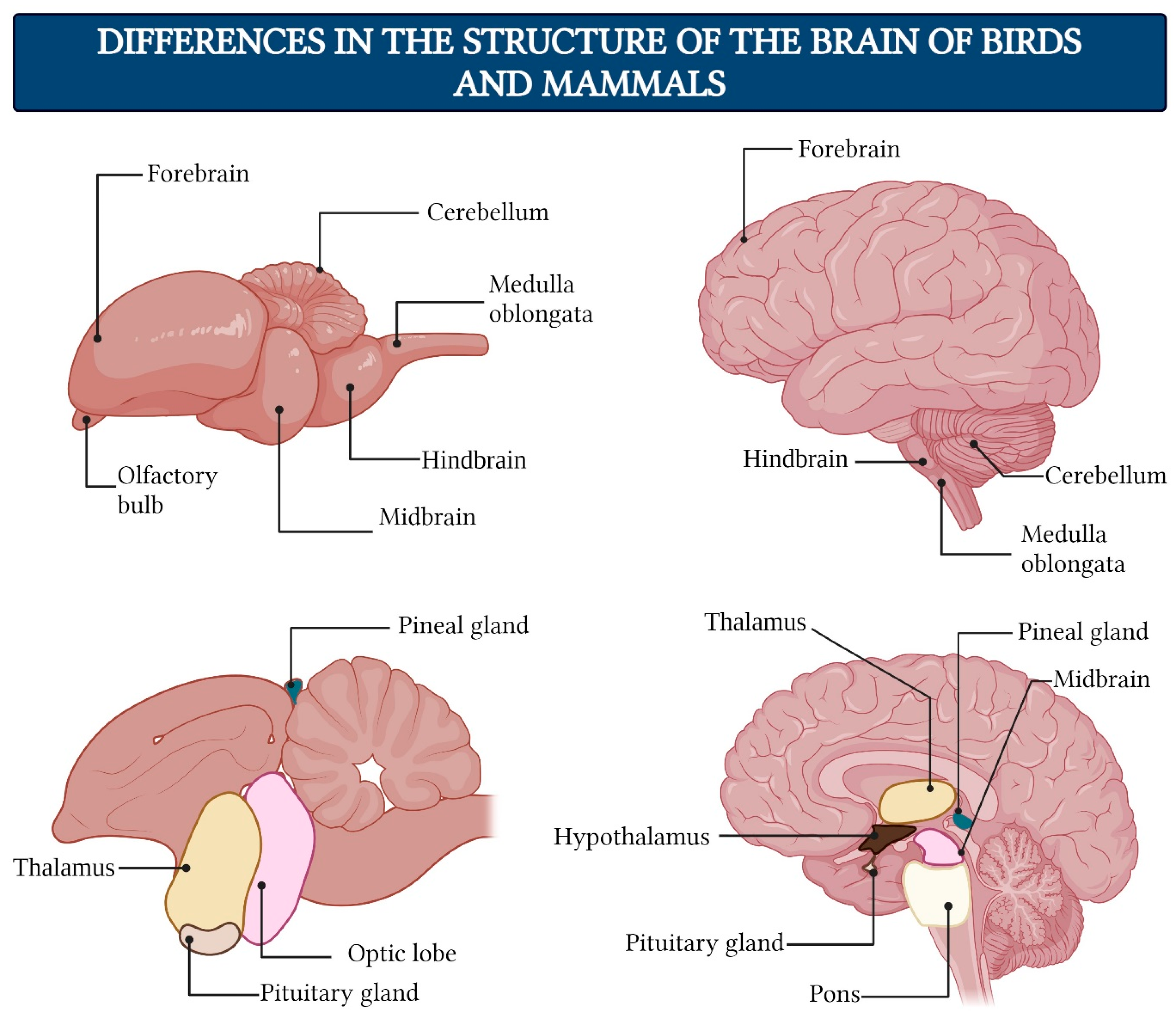

- The forebrain consists of the telencephalon and diencephalon. In humans, the telencephalon is the most cranial region of the central nervous system and matures into the cerebrum. The dorsal part of the telencephalon (the pallium) develops predominantly into the cerebral cortex in mammals, while the ventral telencephalon (the subpallium) forms the basal ganglia. Primitive cortical structures are already present in agnathan (jawless) vertebrates, indicating that these structures likely arose in the last common ancestor of jawed and jawless vertebrates approximately 460 million years ago [236]. The diencephalon includes the thalamus, metathalamus, hypothalamus, epithalamus, prethalamus (or subthalamus), and the pretectum.

- The midbrain is located centrally beneath the cerebral cortex. It consists of the tectum, the tegmentum of the midbrain, the cerebral aqueduct, the cerebral peduncles, as well as several nuclei and bundles of nerve fibers.

- The hindbrain serves as the transition to the spinal cord. In humans, it includes the pons, cerebellum, and medulla oblongata.

4.2. Biological Consciousness and Signs of Subjectivity in Animals

4.2.1. Selfhood in Animals

4.2.2. Learned Animal Communication

4.2.3. Creativity and Flexible Thinking in Animals

4.2.4. Sensory Emotions in Animals

4.2.5. Differentiated Roles of Cortical and Noncortical Structures in Bird and Mammal Consciousness

4.3. Evolution of Human Consciousness

5. What’s Next?

- Increasing urbanization, globalization, and unification of all key areas of human life, leading to a reduction in the diversity of individual subsystems within society, each with its own characteristics of collective consciousness.

- The creation of unmanned production and logistics systems based on robotics and the implementation of AI; the displacement of humans from socially useful spheres of activity [328].

- The rapid development of global data centers that use AI to accumulate and analyze information, for example to support language models such as ChatGPT, including in medicine [334].

- The self-development of computer programs based on artificial neural network technologies and their hybridization with other methods [335].

- The hybridization of AI approaches reflects both centralized (mammalian-like) and decentralized (cephalopod-like) neural processing, which could lead to hybrid intelligence models integrating the strengths of both approaches.

- The presence of computational thinking, that is, the ability to reformulate and solve problems in ways that can be executed by computers, which has been declared a foundational capability for the development of intelligent systems in the 21st century [340].

- The spread of trans-humanist ideology, aimed at altering and enhancing the natural characteristics of humans through biological, technological, and cognitive modifications, with the ultimate goal of transforming humans into a “new human” [341,342,343]. A related trend is post-humanist ideology, whose conceptual foundations include scientific discoveries that have blurred the boundaries between humans and other living beings; the development of artificial intelligence; changes in perceptions of humanity; and the hybridization of humans with other living organisms and machines [344,345]. While trans-humanism seeks to overcome human intellectual and physical limitations, post-humanism seeks to surpass humanism itself by creating the “post-human” [346,347].

5.1. Positioning Our Subjective System-Forming Model Among Evolutionary Naturalisms

5.2. Cultural Exaptation and Technogenic Frontiers

5.3. Emergence of Non-Human-Generated Cultural Substrates

6. Conclusions

- The Complexity Threshold: No current model predicts why octopuses (approximately 500 million neurons) exhibit goal-directed cognition while AI systems of similar complexity do not.

- The Subjectivity Gap: SFF mechanisms (e.g., conflict arbitration) are empirically tractable in vertebrates but lack computational formalization for artificial systems.

- The Technogenic Leap: Cultural scaffolding extends consciousness beyond biology, but whether AI can autonomously replicate SFF dynamics remains untested. Future research must quantify SFF dynamics across phylogenetic scales while developing cross-species assays for subjective arbitration.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Laberge, D.; Kasevich, R. The apical dendrite theory of consciousness. Neural. Netw. 2007, 20, 1004–1020. [Google Scholar] [CrossRef] [PubMed]

- Hobson, J.A. REM sleep and dreaming: Towards a theory of protoconsciousness. Nat. Rev. Neurosci. 2009, 10, 803–813. [Google Scholar] [CrossRef] [PubMed]

- Grossberg, S. Adaptive Resonance Theory: How a brain learns to consciously attend, learn, and recognize a changing world. Neural. Netw. 2013, 37, 1–47. [Google Scholar] [CrossRef]

- Graziano, M.S.A. Consciousness and the attention schema: Why it has to be right. Cogn. Neuropsychol. 2020, 37, 224–233. [Google Scholar] [CrossRef] [PubMed]

- Kriegel, U. A cross-order integration hypothesis for the neural correlate of consciousness. Conscious. Cogn. 2007, 16, 897–912. [Google Scholar] [CrossRef]

- Northoff, G.; Klar, P.; Bein, M.; Safron, A. As without, so within: How the brain’s temporo-spatial alignment to the environment shapes consciousness. Interface Focus 2023, 13, 20220076. [Google Scholar] [CrossRef]

- Merker, B. Consciousness without a cerebral cortex: A challenge for neuroscience and medicine. Behav. Brain Sci. 2007, 30, 63–81. [Google Scholar] [CrossRef]

- Berkovich-Ohana, A.; Glicksohn, J. The consciousness state space (CSS)-a unifying model for consciousness and self. Front. Psychol. 2014, 5, 341. [Google Scholar] [CrossRef]

- Ward, L.M. The thalamic dynamic core theory of conscious experience. Conscious. Cogn. 2011, 20, 464–486. [Google Scholar] [CrossRef]

- Gelepithis, P.A.M. A Novel Theory of Consciousness. Int. J. Mach. Conscious. 2014, 6, 125–139. [Google Scholar] [CrossRef]

- Farrell, J. Higher-order theories of consciousness and what-it-is-like-ness. Philos. Stud. 2018, 175, 2743–2761. [Google Scholar] [CrossRef] [PubMed]

- Barba, G.D.; Boisse, M.-F. Temporal consciousness and confabulation: Is the medial temporal lobe “temporal”? Cogn. Neuropsychiatry 2010, 15, 95–117. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J. The Nature of Primary Consciousness. A New Synthesis. Conscious. Cogn. 2016, 43, 113–127. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J. Phenomenal Consciousness and Emergence: Eliminating the Explanatory Gap. Front. Psychol. 2020, 11, 1041. [Google Scholar] [CrossRef]

- Irwin, L.N.; Chittka, L.; Jablonka, E.; Mallatt, J. Editorial: Comparative Animal Consciousness. Front. Syst. Neurosci. 2022, 16, 998421. [Google Scholar] [CrossRef]

- Mallatt, J.; Feinberg, T.E. Decapod Sentience: Promising Framework and Evidence. Anim. Sentience 2022, 32, 24. [Google Scholar] [CrossRef]

- Mallatt, J.; Feinberg, T.E. Multiple Routes to Animal Consciousness: Constrained Multiple Realizability Rather Than Modest Identity Theory. Front. Psychol. 2021, 12, 732336. [Google Scholar] [CrossRef] [PubMed]

- Schiff, N.D. Recovery of consciousness after brain injury: A mesocircuit hypothesis. Trends Neurosci. 2010, 33, 1–9. [Google Scholar] [CrossRef]

- Schiff, N.D. Mesocircuit mechanisms in the diagnosis and treatment of disorders of consciousness. Presse Med. 2023, 52, 104161. [Google Scholar] [CrossRef]

- Min, B.-K. A thalamic reticular networking model of consciousness. Theor. Biol. Med. Model. 2010, 7, 10. [Google Scholar] [CrossRef]

- Yu, L.; Blumenfeld, H. Theories of Impaired Consciousness in Epilepsy. Ann. N. Y. Acad. Sci. 2009, 1157, 48–60. [Google Scholar] [CrossRef]

- O’Doherty, F. A Contribution to Understanding Consciousness: Qualia as Phenotype. Biosemiotics 2012, 6, 191–203. [Google Scholar] [CrossRef]

- Morsella, E.; Godwin, C.A.; Jantz, T.K.; Krieger, S.C.; Gazzaley, A. Homing in on consciousness in the nervous system: An action-based synthesis. Behav. Brain Sci. 2015, 39, e168. [Google Scholar] [CrossRef]

- Shannon, B. A Psychological Theory of Consciousness. J. Conscious. Stud. 2008, 15, 5–47. [Google Scholar]

- Poznanski, R.R. The dynamic organicity theory of consciousness: How consciousness arises from the functionality of multiscale complexity in the material brain. J. Multiscale Neurosci. 2024, 3, 68–87. [Google Scholar] [CrossRef]

- Cleeremans, A.; Timmermans, B.; Pasquali, A. Consciousness and metarepresentation: A computational sketch. Neural Netw. 2007, 20, 1032–1039. [Google Scholar] [CrossRef] [PubMed]

- Thagard, P.; Stewart, T.C. Two theories of consciousness: Semantic pointer competition vs. information integration. Conscious. Cogn. 2014, 30, 73–90. [Google Scholar] [CrossRef] [PubMed]

- Hameroff, S. ‘Orch OR’ is the most complete, and most easily falsifiable theory of consciousness. Cogn. Neurosci. 2021, 12, 74–76. [Google Scholar] [CrossRef]

- Dehaene, S.; Changeux, J.P. Experimental and theoretical approaches to conscious processing. Neuron 2011, 70, 200–227. [Google Scholar] [CrossRef]

- Tononi, G.; Boly, M.; Massimini, M.; Koch, C. Integrated information theory: From consciousness to its physical substrate. Nat. Rev. Neurosci. 2016, 17, 450–461. [Google Scholar] [CrossRef]

- Lamme, V.A. Towards a true neural stance on consciousness. Trends Cogn. Sci. 2006, 10, 494–501. [Google Scholar] [CrossRef] [PubMed]

- Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 2013, 36, 181–204. [Google Scholar] [CrossRef]

- Pennartz, C.M.A. What is neurorepresentationalism? From neural activity and predictive processing to multi-level representations and consciousness. Behav. Brain Res. 2022, 432, 113969. [Google Scholar] [CrossRef]

- Aru, J.; Suzuki, M.; Larkum, M.E. Cellular Mechanisms of Conscious Processing. Trends Cogn. Sci. 2020, 24, 814–825. [Google Scholar] [CrossRef]

- Anokhin, K.V. Cognitom: In search of a fundamental neuroscientific theory of Consciousness. I.P. Pavlov. J. High. Nerv. Act. 2021, 71, 39–71. (In Russian) [Google Scholar] [CrossRef]

- Sattin, D.; Magnani, F.G.; Bartesaghi, L.; Caputo, M.; Fittipaldo, A.V.; Cacciatore, M.; Picozzi, M.; Leonardi, M. Theoretical Models of Consciousness: A Scoping Review. Brain Sci. 2021, 11, 535. [Google Scholar] [CrossRef] [PubMed]

- Storm, J.F.; Klink, P.C.; Aru, J.; Senn, W.; Goebel, R.; Pigorini, A.; Avanzini, P.; Vanduffel, W.; Roelfsema, P.R.; Massimini, M.; et al. An integrative, multiscale view on neural theories of consciousness. Neuron 2024, 112, 1531–1552. [Google Scholar] [CrossRef] [PubMed]

- Seth, A.K.; Bayne, T. Theories of consciousness. Nat. Rev. Neurosci. 2022, 23, 439–452. [Google Scholar] [CrossRef]

- Ryle, G. The Concept of Mind: 60th Anniversary Edition; Routledge: New York, NY, USA, 2009. [Google Scholar]

- Block, N. On a Confusion about a Function of Consciousness. Behav. Brain Sci. 1995, 18, 227–287. [Google Scholar] [CrossRef]

- Ashmore , R.D.; Jussim, L. (Eds.) Self and Identity: Fundamental Issues; Oxford University Press: New York, NY, USA, 1997; p. 256. ISBN 978-0-19-509826-6. [Google Scholar]

- Gooding, P.L.T.; Callan, M.J.; Hughes, G. The Association Between Believing in Free Will and Subjective Well-Being Is Confounded by a Sense of Personal Control. Front. Psychol. 2018, 9, 623. [Google Scholar] [CrossRef]

- Wisniewski, D.; Deutschländer, R.; Haynes, J.-D. Free Will Beliefs Are Better Predicted by Dualism than Determinism Beliefs across Different Cultures. PLoS ONE 2019, 14, e0221617. [Google Scholar] [CrossRef] [PubMed]

- Delnatte, C.; Roze, E.; Pouget, P.; Galléa, C.; Welniarz, Q. Can Neuroscience Enlighten the Philosophical Debate about Free Will? Neuropsychologia 2023, 188, 108632. [Google Scholar] [CrossRef] [PubMed]

- Braun, M.N.; Wessler, J.; Friese, M. A Meta-Analysis of Libet-Style Experiments. Neurosci. Biobehav. Rev. 2021, 128, 182–198. [Google Scholar] [CrossRef]

- Schlegel, A.; Alexander, P.; Sinnott-Armstrong, W.; Roskies, A.; Tse, P.U.; Wheatley, T. Hypnotizing Libet: Readiness Potentials with Non-Conscious Volition. Conscious. Cogn. 2015, 33, 196–203. [Google Scholar] [CrossRef]

- Brass, M.; Furstenberg, A.; Mele, A.R. Why Neuroscience Does Not Disprove Free Will. Neurosci. Biobehav. Rev. 2019, 102, 251–263. [Google Scholar] [CrossRef] [PubMed]

- Shum, Y.H.; Galang, C.M.; Brass, M. Using a Veto Paradigm to Investigate the Decision Models in Explaining Libet-Style Experiments. Conscious. Cogn. 2024, 124, 103732. [Google Scholar] [CrossRef]

- Zacks, O.; Jablonka, E. The evolutionary origins of the Global Neuronal Workspace in vertebrates. Neurosci. Conscious. 2023, 2023, niad020. [Google Scholar] [CrossRef]

- Irwin, L.N. Renewed Perspectives on the Deep Roots and Broad Distribution of Animal Consciousness. Front. Syst. Neurosci. 2020, 14, 57. [Google Scholar] [CrossRef]

- Hunter, P. The emergence of consciousness: Research on animals yields insights into how, when and why consciousness evolved. EMBO Rep. 2021, 22, e53199. [Google Scholar] [CrossRef]

- Kohda, M.; Bshary, R.; Kubo, N.; Awata, S.; Sowersby, W.; Kawasaka, K.; Kobayashi, T.; Sogawa, S. Cleaner fish recognize self in a mirror via self-face recognition like humans. Proc. Natl. Acad. Sci. USA 2023, 120, e2208420120. [Google Scholar] [CrossRef]

- Kohda, M.; Hotta, T.; Takeyama, T.; Awata, S.; Tanaka, H.; Asai, J.Y.; Jordan, A.L. If a fish can pass the mark test, what are the implications for consciousness and self-awareness testing in animals? PLoS Biol. 2019, 17, e3000021. [Google Scholar] [CrossRef] [PubMed]

- Von Bertalanffy, L. The History and Status of General Systems Theory. Acad. Manag. J. 1972, 15, 407–426. [Google Scholar] [CrossRef]

- Hofkirchner, W.; Schafranek, M. General System Theory. In Handbook of the Philosophy of Science, Philosophy of Complex Systems; Hooker, C., Ed.; North-Holland: Atlanta, GA, USA, 2011; Volume 10, pp. 177–194. [Google Scholar] [CrossRef]

- Kitto, K. A Contextualised General Systems Theory. Systems 2014, 2, 541–565. [Google Scholar] [CrossRef]

- Drack, M.; Schwarz, G. Recent developments in general system theory. Syst. Res. 2010, 27, 601–610. [Google Scholar] [CrossRef]

- Grames, E.M.; Schwartz, D.; Elphick, C.S. A systematic method for hypothesis synthesis and conceptual model development. Methods Ecol. Evol. 2022, 13, 2078–2087. [Google Scholar] [CrossRef]

- Lovejoy, J.C. Expanding our thought horizons in systems biology and medicine. Front. Syst. Biol. 2024, 4, 1385458. [Google Scholar] [CrossRef]

- Singh, D.; Shyam, P.; Verma, S.K.; Anjali, A. Medical Applications of Systems Biology. In Systems Biology Approaches: Prevention, Diagnosis, and Understanding Mechanisms of Complex Diseases; Joshi, S., Ray, R.R., Nag, M., Lahiri, D., Eds.; Springer: Singapore, 2024. [Google Scholar] [CrossRef]

- Katrakazas, P.; Grigoriadou, A.; Koutsouris, D. Applying a general systems theory framework in mental health treatment pathways: The case of the Hellenic Center of Mental Health and Research. Int. J. Ment. Health Syst. 2020, 14, 67. [Google Scholar] [CrossRef]

- Tramonti, F.; Giorgi, F.; Fanali, A. General system theory as a framework for biopsychosocial research and practice in mental health. Syst. Res. Behav. Sci. 2019, 36, 332–341. [Google Scholar] [CrossRef]

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Ben-Gal, I.; Kagan, E. Information Theory: Deep Ideas, Wide Perspectives, and Various Applications. Entropy 2021, 23, 232. [Google Scholar] [CrossRef]

- Kannan, M.; Bridgewater, G.; Zhang, M.; Blinov, M.L. Leveraging public AI tools to explore systems biology resources in mathematical modeling. npj Syst. Biol. Appl. 2025, 11, 15. [Google Scholar] [CrossRef] [PubMed]

- Tang, Y.; Adelaja, A.; Ye, F.X.; Deeds, E.; Wollman, R.; Hoffmann, A. Quantifying information accumulation encoded in the dynamics of biochemical signaling. Nat. Commun. 2021, 12, 1272. [Google Scholar] [CrossRef]

- Jensen, G.; Ward, R.D.; Balsam, P.D. Information: Theory, brain, and behavior. J. Exp. Anal. Behav. 2013, 100, 408–431. [Google Scholar] [CrossRef]

- Jost, J. Information Theory and Consciousness. Front. Appl. Math. Stat. 2021, 7, 641239. [Google Scholar] [CrossRef]

- Northoff, G.; Zilio, F. From Shorter to Longer Timescales: Converging Integrated Information Theory (IIT) with the Temporo-Spatial Theory of Consciousness (TTC). Entropy 2022, 24, 270. [Google Scholar] [CrossRef] [PubMed]

- Gómez-Márquez, J. What is life? Mol. Biol. Rep. 2021, 48, 6223–6230. [Google Scholar] [CrossRef]

- Sabater, B. Entropy Perspectives of Molecular and Evolutionary Biology. Int. J. Mol. Sci. 2022, 23, 4098. [Google Scholar] [CrossRef] [PubMed]

- Gómez-Márquez, J. Reflections upon a new definition of life. Sci. Nat. 2023, 110, 53. [Google Scholar] [CrossRef]

- Tetz, V.V.; Tetz, G.V. A new biological definition of life. Biomol. Concepts 2020, 11, 1–6. [Google Scholar] [CrossRef]

- Galimov, E.M. Phenomenon of life: Between equilibrium and non-linearity. Orig. Life Evol. Biosph. 2004, 34, 599–613. [Google Scholar] [CrossRef]

- Matsuno, K. Temporal cohesion as a candidate for negentropy in biological thermodynamics. Biosystems 2023, 230, 104957. [Google Scholar] [CrossRef]

- Gusev, E.; Sarapultsev, A.; Solomatina, L.; Chereshnev, V. SARS-CoV-2-Specific Immune Response and the Pathogenesis of COVID-19. Int. J. Mol. Sci. 2022, 23, 1716. [Google Scholar] [CrossRef]

- Bourret, R.B. Census of prokaryotic senses. J. Bacteriol. 2006, 188, 4165–4168. [Google Scholar] [CrossRef][Green Version]

- Zou, Z.; Qin, H.; Brenner, A.E.; Raghavan, R.; Millar, J.A.; Gu, Q.; Xie, Z.; Kreth, J.; Merritt, J. LytTR Regulatory Systems: A potential new class of prokaryotic sensory system. PLoS Genet. 2018, 14, e1007709. [Google Scholar] [CrossRef]

- Dlakić, M. Discovering unknown associations between prokaryotic receptors and their ligands. Proc. Natl. Acad. Sci. USA 2023, 120, e2316830120. [Google Scholar] [CrossRef] [PubMed]

- Monteagudo-Cascales, E.; Gavira, J.A.; Xing, J.; Velando, F.; Matilla, M.A.; Zhulin, I.B.; Krell, T. Bacterial sensor evolved by decreasing complexity. Proc. Natl. Acad. Sci. USA 2025, 122, e2409881122. [Google Scholar] [CrossRef] [PubMed]

- Baker, M.D.; Stock, J.B. Signal Transduction: Networks and Integrated Circuits in Bacterial Cognition. Curr. Biol. 2007, 17, R1021–R1024. [Google Scholar] [CrossRef] [PubMed]

- Fasnacht, M.; Polacek, N. Oxidative Stress in Bacteria and the Central Dogma of Molecular Biology. Front. Mol. Biosci. 2021, 8, 671037. [Google Scholar] [CrossRef]

- Misra, H.S.; Rajpurohit, Y.S. DNA damage response and cell cycle regulation in bacteria: A twist around the paradigm. Front. Microbiol. 2024, 15, 1389074. [Google Scholar] [CrossRef]

- Gusev, E.Y.; Zotova, N.V. Cellular Stress and General Pathological Processes. Curr. Pharm. Des. 2019, 25, 251–297. [Google Scholar] [CrossRef]

- Wadhwa, N.; Berg, H.C. Bacterial motility: Machinery and mechanisms. Nat. Rev. Microbiol. 2022, 20, 161–173. [Google Scholar] [CrossRef]

- Snoeck, S.; Johanndrees, O.; Nürnberger, T.; Zipfel, C. Plant Pattern Recognition Receptors: From Evolutionary Insight to Engineering. Nat. Rev. Genet. 2025, 26, 268–278. [Google Scholar] [CrossRef] [PubMed]

- Emery, M.A.; Dimos, B.A.; Mydlarz, L.D. Cnidarian Pattern Recognition Receptor Repertoires Reflect Both Phylogeny and Life History Traits. Front. Immunol. 2021, 12, 689463. [Google Scholar] [CrossRef]

- Alkhnbashi, O.S.; Meier, T.; Mitrofanov, A.; Backofen, R.; Voß, B. CRISPR-Cas bioinformatics. Methods 2020, 172, 3–11. [Google Scholar] [CrossRef] [PubMed]

- Veigl, S.J. Adaptive immunity or evolutionary adaptation? Transgenerational immune systems at the crossroads. Biol. Philos. 2022, 37, 41. [Google Scholar] [CrossRef]

- Wu, S.; Bu, X.; Chen, D.; Wu, X.; Wu, H.; Caiyin, Q.; Qiao, J. Molecules-mediated bidirectional interactions between microbes and human cells. NPJ Biofilms Microbiomes 2025, 11, 38. [Google Scholar] [CrossRef] [PubMed]

- Schertzer, J.W.; Whiteley, M. Microbial communication superhighways. Cell 2011, 144, 469–470. [Google Scholar] [CrossRef] [PubMed]

- Reguera, G. When microbial conversations get physical. Trends Microbiol. 2011, 19, 105–113. [Google Scholar] [CrossRef]

- Pacheco, A.R.; Pauvert, C.; Kishore, D.; Segrè, D. Toward FAIR Representations of Microbial Interactions. mSystems 2022, 7, e0065922. [Google Scholar] [CrossRef]

- Juszczuk-Kubiak, E. Molecular Aspects of the Functioning of Pathogenic Bacteria Biofilm Based on Quorum Sensing (QS) Signal-Response System and Innovative Non-Antibiotic Strategies for Their Elimination. Int. J. Mol. Sci. 2024, 25, 2655. [Google Scholar] [CrossRef]

- Almblad, H.; Randall, T.E.; Liu, F.; Leblanc, K.; Groves, R.A.; Kittichotirat, W.; Winsor, G.L.; Fournier, N.; Au, E.; Groizeleau, J.; et al. Bacterial Cyclic Diguanylate Signaling Networks Sense Temperature. Nat. Commun. 2021, 12, 1986. [Google Scholar] [CrossRef]

- Egiazaryan, G.G.; Sudakov, K.V. Theory of Functional Systems in the Scientific School of P.K. Anokhin. J. Hist. Neurosci. 2007, 16, 194–205. [Google Scholar] [CrossRef]

- Grant, S.G. The molecular evolution of the vertebrate behavioural repertoire. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2016, 371, 20150051. [Google Scholar] [CrossRef] [PubMed]

- Fountain, S.B.; Dyer, K.H.; Jackman, C.C. Simplicity From Complexity in Vertebrate Behavior: Macphail (1987) Revisited. Front. Psychol. 2020, 11, 581899. [Google Scholar] [CrossRef] [PubMed]

- Donahoe, J.W. Reflections on Behavior Analysis and Evolutionary Biology: A Selective Review of Evolution Since Darwin—The First 150 Years. Edited by M.A. Bell, D.J. Futuyma, W.F. Eanes, & J.S. Levinton. J. Exp. Anal. Behav. 2012, 97, 249–260. [Google Scholar] [CrossRef]

- Shumway, C.A. Habitat complexity, brain, and behavior. Brain Behav. Evol. 2008, 72, 123–134. [Google Scholar] [CrossRef]

- Larsson, M.; Abbott, B.W. Is the Capacity for Vocal Learning in Vertebrates Rooted in Fish Schooling Behavior? Evol. Biol. 2018, 45, 359–373. [Google Scholar] [CrossRef] [PubMed]

- Denver, R.J.; Middlemis-Maher, J. Lessons from evolution: Developmental plasticity in vertebrates with complex life cycles. J. Dev. Orig. Health Dis. 2010, 1, 282–291. [Google Scholar] [CrossRef]

- Hansen, M.J.; Domenici, P.; Bartashevich, P.; Burns, A.; Krause, J. Mechanisms of group-hunting in vertebrates. Biol. Rev. Camb. Philos. Soc. 2023, 98, 1687–1711. [Google Scholar] [CrossRef]

- Heldstab, S.A.; Isler, K.; Graber, S.M.; Schuppli, C.; van Schaik, C.P. The economics of brain size evolution in vertebrates. Curr. Biol. 2022, 32, R697–R708. [Google Scholar] [CrossRef]

- Fried, I. Neurons as will and representation. Nat. Rev. Neurosci. 2022, 23, 104–114. [Google Scholar] [CrossRef] [PubMed]

- Rochat, P. Developmental Roots of Human Self-consciousness. J. Cogn. Neurosci. 2024, 36, 1610–1619. [Google Scholar] [CrossRef]

- Vedor, J.E. Revisiting Carl Jung’s archetype theory a psychobiological approach. Biosystems 2023, 234, 105059. [Google Scholar] [CrossRef] [PubMed]

- Sun, H.; Kim, E. Archetype symbols and altered consciousness: A study of shamanic rituals in the context of Jungian psychology. Front. Psychol. 2024, 15, 1379391. [Google Scholar] [CrossRef]

- Chen, H.; Yang, J. Multiple Exposures Enhance Both Item Memory and Contextual Memory Over Time. Front. Psychol. 2020, 11, 565169. [Google Scholar] [CrossRef]

- Plater, L.; Nyman, S.; Joubran, S.; Al-Aidroos, N. Repetition enhances the effects of activated long-term memory. Q. J. Exp. Psychol. 2023, 76, 621–631. [Google Scholar] [CrossRef]

- Cross, L.; Atherton, G.; Sebanz, N. Intentional synchronisation affects automatic imitation and source memory. Sci. Rep. 2021, 11, 573. [Google Scholar] [CrossRef] [PubMed]

- Mahr, J.B.; Mascaro, O.; Mercier, H.; Csibra, G. The effect of disagreement on children’s source memory performance. PLoS ONE 2021, 16, e0249958. [Google Scholar] [CrossRef]

- Foy, J.E.; LoCasto, P.C.; Briner, S.W.; Dyar, S. “Would a madman have been so wise as this?” The effects of source credibility and message credibility on validation. Mem. Cognit. 2017, 45, 281–295. [Google Scholar] [CrossRef]

- Martijena, I.D.; Molina, V.A. The influence of stress on fear memory processes. Braz. J. Med. Biol. Res. 2012, 45, 308–313. [Google Scholar] [CrossRef]

- Yang, Y.; Wang, J.Z. From Structure to Behavior in Basolateral Amygdala-Hippocampus Circuits. Front. Neural Circuits 2017, 11, 86. [Google Scholar] [CrossRef] [PubMed]

- Brod, G.; Werkle-Bergner, M.; Shing, Y.L. The influence of prior knowledge on memory: A developmental cognitive neuroscience perspective. Front. Behav. Neurosci. 2013, 7, 139. [Google Scholar] [CrossRef]

- Bein, O.; Reggev, N.; Maril, A. Prior knowledge influences on hippocampus and medial prefrontal cortex interactions in subsequent memory. Neuropsychologia 2014, 64, 320–330. [Google Scholar] [CrossRef] [PubMed]

- Kahn, D.; Gover, T. Consciousness in dreams. Int. Rev. Neurobiol. 2010, 92, 181–195. [Google Scholar] [CrossRef]

- von Bartheld, C.S.; Bahney, J.; Herculano-Houzel, S. The search for true numbers of neurons and glial cells in the human brain: A review of 150 years of cell counting. J. Comp. Neurol. 2016, 524, 3865–3895. [Google Scholar] [CrossRef]

- Gusev, E.; Sarapultsev, A. Interplay of G-proteins and Serotonin in the Neuroimmunoinflammatory Model of Chronic Stress and Depression: A Narrative Review. Curr. Pharm. Des. 2024, 30, 180–214. [Google Scholar] [CrossRef]

- Azevedo, F.A.; Carvalho, L.R.; Grinberg, L.T.; Farfel, J.M.; Ferretti, R.E.; Leite, R.E.; Jacob Filho, W.; Lent, R.; Herculano-Houzel, S. Equal numbers of neuronal and nonneuronal cells make the human brain an isometrically scaled-up primate brain. J. Comp. Neurol. 2009, 513, 532–541. [Google Scholar] [CrossRef] [PubMed]

- Bélanger, M.; Allaman, I.; Magistretti, P.J. Brain energy metabolism: Focus on astrocyte-neuron metabolic cooperation. Cell Metab. 2011, 14, 724–738. [Google Scholar] [CrossRef]

- Wang, X.J. Macroscopic gradients of synaptic excitation and inhibition in the neocortex. Nat. Rev. Neurosci. 2020, 21, 169–178. [Google Scholar] [CrossRef]

- Rakic, P. Evolution of the neocortex: A perspective from developmental biology. Nat. Rev. Neurosci. 2009, 10, 724–735. [Google Scholar] [CrossRef]

- Preuss, T.M.; Wise, S.P. Evolution of prefrontal cortex. Neuropsychopharmacology 2022, 47, 3–19. [Google Scholar] [CrossRef] [PubMed]

- Brosch, T.; Scherer, K.R.; Grandjean, D.; Sander, D. The impact of emotion on perception, attention, memory, and decision-making. Swiss Med. Wkly. 2013, 143, w13786. [Google Scholar] [CrossRef]

- Raichle, M.E.; MacLeod, A.M.; Snyder, A.Z.; Powers, W.J.; Gusnard, D.A.; Shulman, G.L. A default mode of brain function. Proc. Natl. Acad. Sci. USA 2001, 98, 676–682. [Google Scholar] [CrossRef] [PubMed]

- Uylings, H.B.; Groenewegen, H.J.; Kolb, B. Do rats have a prefrontal cortex? Behav. Brain Res. 2003, 146, 3–17. [Google Scholar] [CrossRef]

- Concha-Miranda, M.; Hartmann, K.; Reinhold, A.; Brecht, M.; Sanguinetti-Scheck, J.I. Play, but not observing play, engages rat medial prefrontal cortex. Eur. J. Neurosci. 2020, 52, 4127–4138. [Google Scholar] [CrossRef]

- Dehay, C.; Huttner, W.B. Development and evolution of the primate neocortex from a progenitor cell perspective. Development 2024, 151, dev199797. [Google Scholar] [CrossRef]

- Huttner, W.B.; Heide, M.; Mora-Bermúdez, F.; Namba, T. Neocortical neurogenesis in development and evolution-Human-specific features. J. Comp. Neurol. 2024, 532, e25576. [Google Scholar] [CrossRef]

- Quiroga, R.Q.; Reddy, L.; Kreiman, G.; Koch, C.; Fried, I. Invariant visual representation by single neurons in the human brain. Nature 2005, 435, 1102–1107. [Google Scholar] [CrossRef] [PubMed]

- Evrard, H.C.; Forro, T.; Logothetis, N.K. Von Economo neurons in the anterior insula of the macaque monkey. Neuron 2012, 74, 482–489. [Google Scholar] [CrossRef]

- Banovac, I.; Sedmak, D.; Judaš, M.; Petanjek, Z. Von Economo Neurons—Primate-Specific or Commonplace in the Mammalian Brain? Front. Neural Circuits 2021, 15, 714611. [Google Scholar] [CrossRef]

- López-Ojeda, W.; Hurley, R.A. Von Economo Neuron Involvement in Social Cognitive and Emotional Impairments in Neuropsychiatric Disorders. J. Neuropsychiatry Clin. Neurosci. 2022, 34, 302–306. [Google Scholar] [CrossRef] [PubMed]

- Krause, M.; Theiss, C.; Brüne, M. Ultrastructural Alterations of Von Economo Neurons in the Anterior Cingulate Cortex in Schizophrenia. Anat. Rec. 2017, 300, 2017–2024. [Google Scholar] [CrossRef] [PubMed]

- González-Acosta, C.A.; Ortiz-Muñoz, D.; Becerra-Hernández, L.V.; Casanova, M.F.; Buriticá, E. Von Economo neurons: Cellular specialization of human limbic cortices? J. Anat. 2022, 241, 20–32. [Google Scholar] [CrossRef]

- Torrico, T.J.; Abdijadid, S. Neuroanatomy, Limbic System. In StatPearls [Internet]; StatPearls Publishing: Treasure Island, FL, USA, 2024. [Google Scholar] [PubMed]

- Cai, Z.J. The limbic-reticular coupling theory of memory processing in the brain and its greater compatibility over other theories. Dement. Neuropsychol. 2018, 12, 105–113. [Google Scholar] [CrossRef]

- Zha, X.; Xu, X. Dissecting the hypothalamic pathways that underlie innate behaviors. Neurosci. Bull. 2015, 31, 629–648. [Google Scholar] [CrossRef]

- Denton, D.A.; McKinley, M.J.; Farrell, M.; Egan, G.F. The role of primordial emotions in the evolutionary origin of consciousness. Conscious. Cogn. 2009, 18, 500–514. [Google Scholar] [CrossRef] [PubMed]

- Taran, S.; Gros, P.; Gofton, T.; Boyd, G.; Briard, J.N.; Chassé, M.; Singh, J.M. The reticular activating system: A narrative review of discovery, evolving understanding, and relevance to current formulations of brain death. Can. J. Anaesth. 2023, 70, 788–795. [Google Scholar] [CrossRef]

- Whyte, C.J.; Redinbaugh, M.J.; Shine, J.M.; Saalmann, Y.B. Thalamic contributions to the state and contents of consciousness. Neuron 2024, 112, 1611–1625. [Google Scholar] [CrossRef]

- Redinbaugh, M.J.; Phillips, J.M.; Kambi, N.A.; Mohanta, S.; Andryk, S.; Dooley, G.L.; Afrasiabi, M.; Raz, A.; Saalmann, Y.B. Thalamus Modulates Consciousness via Layer-Specific Control of Cortex. Neuron 2020, 106, 66–75.e12. [Google Scholar] [CrossRef]

- Aru, J.; Suzuki, M.; Rutiku, R.; Larkum, M.E.; Bachmann, T. Coupling the State and Contents of Consciousness. Front. Syst. Neurosci. 2019, 13, 43. [Google Scholar] [CrossRef]

- Shine, J.M.; Lewis, L.D.; Garrett, D.D.; Hwang, K. The impact of the human thalamus on brain-wide information processing. Nat. Rev. Neurosci. 2023, 24, 416–430. [Google Scholar] [CrossRef] [PubMed]

- Sgourdou, P. The Consciousness of Pain: A Thalamocortical Perspective. NeuroSci 2022, 3, 311–320. [Google Scholar] [CrossRef]

- Toker, D.; Müller, E.; Miyamoto, H.; Riga, M.S.; Lladó-Pelfort, L.; Yamakawa, K.; Artigas, F.; Shine, J.M.; Hudson, A.E.; Pouratian, N.; et al. Criticality supports cross-frequency cortical-thalamic information transfer during conscious states. eLife 2024, 13, e86547. [Google Scholar] [CrossRef]

- Strausfeld, N.J.; Hirth, F. Deep homology of arthropod central complex and vertebrate basal ganglia. Science 2013, 340, 157–161. [Google Scholar] [CrossRef] [PubMed]

- Butler, A.B. Evolution of the thalamus: A morphological and functional review. Thalamus Relat. Syst. 2008, 4, 35–58. [Google Scholar] [CrossRef]

- Tasserie, J.; Uhrig, L.; Sitt, J.D.; Manasova, D.; Dupont, M.; Dehaene, S.; Jarraya, B. Deep brain stimulation of the thalamus restores signatures of consciousness in a nonhuman primate model. Sci. Adv. 2022, 8, eabl5547. [Google Scholar] [CrossRef] [PubMed]

- Redinbaugh, M.J.; Afrasiabi, M.; Phillips, J.M.; Kambi, N.A.; Mohanta, S.; Raz, A.; Saalmann, Y.B. Thalamic deep brain stimulation paradigm to reduce consciousness: Cortico-striatal dynamics implicated in mechanisms of consciousness. PLoS Comput. Biol. 2022, 18, e1010294. [Google Scholar] [CrossRef]

- Kolb, B.; Whishaw, I.Q. Decortication of rats in infancy or adulthood produced comparable functional losses on learned and species-typical behaviors. J. Comp. Physiol. Psychol. 1981, 95, 468–483. [Google Scholar] [CrossRef]

- Vanderwolf, C.H.; Kolb, B.; Cooley, R.K. Behavior of the rat after removal of the neocortex and hippocampal formation. J. Comp. Physiol. Psychol. 1978, 92, 156–175. [Google Scholar] [CrossRef]

- Whishaw, I.Q.; Kolb, B. Can male decorticate rats copulate? Behav. Neurosci. 1983, 97, 270–279. [Google Scholar] [CrossRef]

- Whishaw, I.Q.; Kolb, B. Decortication abolishes place but not cue learning in rats. Behav. Brain Res. 1984, 11, 123–134. [Google Scholar] [CrossRef] [PubMed]

- Kolb, B.; Whishaw, I.Q.; van der Kooy, D. Brain development in the neonatally decorticated rat. Brain Res. 1986, 397, 315–326. [Google Scholar] [CrossRef] [PubMed]

- Cisek, P. Resynthesizing behavior through phylogenetic refinement. Atten. Percept. Psychophys. 2019, 81, 2265–2287. [Google Scholar] [CrossRef] [PubMed]

- Shigeno, S.; Andrews, P.L.R.; Ponte, G.; Fiorito, G. Cephalopod Brains: An Overview of Current Knowledge to Facilitate Comparison with Vertebrates. Front. Physiol. 2018, 9, 952. [Google Scholar] [CrossRef]

- Holland, L.Z.; Carvalho, J.E.; Escriva, H.; Laudet, V.; Schubert, M.; Shimeld, S.M.; Yu, J.K. Evolution of bilaterian central nervous systems: A single origin? EvoDevo 2013, 4, 27. [Google Scholar] [CrossRef]

- Fulgencio, L. Freud’s metapsychological speculations. Int. J. Psychoanal. 2005, 86 Pt 1, 99–123. [Google Scholar] [CrossRef]

- Briggs, D.E.G. The Cambrian Explosion. Curr. Biol. 2015, 25, R864–R868. [Google Scholar] [CrossRef]

- Zhuravlev, A.Y.; Wood, R.A. The Two Phases of the Cambrian Explosion. Sci. Rep. 2018, 8, 16656. [Google Scholar] [CrossRef]

- Sacerdot, C.; Louis, A.; Bon, C.; Berthelot, C.; Roest Crollius, H. Chromosome Evolution at the Origin of the Ancestral Vertebrate Genome. Genome Biol. 2018, 19, 166. [Google Scholar] [CrossRef]

- Simakov, O.; Marlétaz, F.; Yue, J.-X.; O’Connell, B.; Jenkins, J.; Brandt, A.; Calef, R.; Tung, C.-H.; Huang, T.-K.; Schmutz, J.; et al. Deeply Conserved Synteny Resolves Early Events in Vertebrate Evolution. Nat. Ecol. Evol. 2020, 4, 820–830. [Google Scholar] [CrossRef]

- Pombal, M.A.; Megías, M.; Bardet, S.M.; Puelles, L. New and Old Thoughts on the Segmental Organization of the Forebrain in Lampreys. Brain Behav. Evol. 2009, 74, 7–19. [Google Scholar] [CrossRef] [PubMed]

- Sower, S.A. Landmark Discoveries in Elucidating the Origins of the Hypothalamic-Pituitary System from the Perspective of a Basal Vertebrate, Sea Lamprey. Gen. Comp. Endocrinol. 2018, 264, 3–15. [Google Scholar] [CrossRef]

- Gusev, E.Y.; Zhuravleva, Y.A.; Zotova, N.V. Correlation of the Evolution of Immunity and Inflammation in Vertebrates. Biol. Bull. Rev. 2019, 9, 358–372. [Google Scholar] [CrossRef]

- Boehm, T. Evolution of Vertebrate Immunity. Curr. Biol. 2012, 22, R722–R732. [Google Scholar] [CrossRef] [PubMed]

- Tan, C.L.; Knight, Z.A. Regulation of Body Temperature by the Nervous System. Neuron 2018, 98, 31–48. [Google Scholar] [CrossRef]

- Birch, J. The search for invertebrate consciousness. Noûs 2022, 56, 133–153. [Google Scholar] [CrossRef]

- Lenharo, M. Do insects have an inner life? Animal consciousness needs a rethink. Nature 2024, 629, 14–15. [Google Scholar] [CrossRef]

- Overgaard, M. Insect Consciousness. Front. Behav. Neurosci. 2021, 15, 653041. [Google Scholar] [CrossRef]

- de Waal, F.B.M.; Andrews, K. The question of animal emotions. Science 2022, 375, 1351–1352. [Google Scholar] [CrossRef]

- Mendl, M.; Paul, E.S.; Chittka, L. Animal behaviour: Emotion in invertebrates? Curr. Biol. 2011, 21, R463–R465. [Google Scholar] [CrossRef]

- Barron, A.B.; Klein, C. What insects can tell us about the origins of consciousness. Proc. Natl. Acad. Sci. USA 2016, 113, 4900–4908. [Google Scholar] [CrossRef]

- Cruce, W.L.; Stuesse, S.L.; Newman, D.B. Evolution of the reticular formation. Acta Biol. Hung. 1988, 39, 327–333. [Google Scholar]

- Manger, P.R. Evolution of the Reticular Formation. In Encyclopedia of Neuroscience; Binder, M.D., Hirokawa, N., Windhorst, U., Eds.; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar] [CrossRef]

- Evenden, M.L.; Konopka, J.K. Recent history and future trends in the study of insect behavior in North America. Ann. Entomol. Soc. Am. 2024, 117, 150–159. [Google Scholar] [CrossRef]

- Bodlah, M.A.; Iqbal, J.; Ashiq, A.; Bodlah, I.; Jiang, S.; Mudassir, M.A.; Rasheed, M.T.; Fareen, A.G.E. Insect behavioral restraint and adaptation strategies under heat stress: An inclusive review. J. Saudi Soc. Agric. Sci. 2023, 22, 327–350. [Google Scholar] [CrossRef]

- Zhang, D.W.; Xiao, Z.J.; Zeng, B.P.; Li, K.; Tang, Y.L. Insect Behavior and Physiological Adaptation Mechanisms Under Starvation Stress. Front. Physiol. 2019, 10, 163. [Google Scholar] [CrossRef]

- Couzin-Fuchs, E.; Ayali, A. The social brain of ‘non-eusocial’ insects. Curr. Opin. Insect Sci. 2021, 48, 1–7. [Google Scholar] [CrossRef] [PubMed]

- Howard, S.R.; Avarguès-Weber, A.; Garcia, J.E.; Greentree, A.D.; Dyer, A.G. Numerical cognition in honeybees enables addition and subtraction. Sci. Adv. 2019, 5, eaav0961. [Google Scholar] [CrossRef]

- Dacke, M.; Srinivasan, M.V. Evidence for counting in insects. Anim. Cogn. 2008, 11, 683–689. [Google Scholar] [CrossRef]

- von Frisch, K. The Dance Language and Orientation of Bees; Harvard University Press: Cambridge, MA, USA, 1967. [Google Scholar]

- Menzel, R.; Greggers, U.; Smith, A. Honey bees navigate according to a map-like spatial memory. Proc. Natl. Acad. Sci. USA 2005, 102, 3040–3045. [Google Scholar] [CrossRef]

- Wehner, R. Desert ant navigation: How miniature brains solve complex tasks. J. Comp. Physiol. A 2003, 189, 579–588. [Google Scholar] [CrossRef]

- Hölldobler, B.; Wilson, E.O. The Ants; Harvard University Press: Cambridge, MA, USA, 1990. [Google Scholar]

- Liu, W.; Li, Q. Single-cell transcriptomics dissecting the development and evolution of nervous system in insects. Curr. Opin. Insect Sci. 2024, 63, 101201. [Google Scholar] [CrossRef] [PubMed]

- Rittschof, C.C.; Schirmeier, S. Insect models of central nervous system energy metabolism and its links to behavior. Glia 2018, 66, 1160–1175. [Google Scholar] [CrossRef] [PubMed]

- Hillyer, J.F. Insect immunology and hematopoiesis. Dev. Comp. Immunol. 2016, 58, 102–118. [Google Scholar] [CrossRef]

- Mangold, C.A.; Hughes, D.P. Insect Behavioral Change and the Potential Contributions of Neuroinflammation-A Call for Future Research. Genes 2021, 12, 465. [Google Scholar] [CrossRef]

- Wigglesworth, V.B. “Insect.” Encyclopedia Britannica. 2024. Available online: www.britannica.com/animal/insect (accessed on 30 January 2025).

- Hillyer, J.F.; Pass, G. The Insect Circulatory System: Structure, Function, and Evolution. Annu. Rev. Entomol. 2020, 65, 121–143. [Google Scholar] [CrossRef] [PubMed]

- Pendar, H.; Aviles, J.; Adjerid, K.; Schoenewald, C.; Socha, J.J. Functional compartmentalization in the hemocoel of insects. Sci. Rep. 2019, 9, 6075. [Google Scholar] [CrossRef]

- Monahan-Earley, R.; Dvorak, A.M.; Aird, W.C. Evolutionary origins of the blood vascular system and endothelium. J. Thromb. Haemost. 2013, 11 (Suppl. 1), 46–66. [Google Scholar] [CrossRef]

- Tong, K.J.; Duchêne, S.; Lo, N.; Ho, S.Y.W. The impacts of drift and selection on genomic evolution in insects. PeerJ 2017, 5, e3241. [Google Scholar] [CrossRef]

- Roderick, G.K. Geographic structure of insect populations: Gene flow, phylogeography, and their uses. Annu. Rev. Entomol. 1996, 41, 325–352. [Google Scholar] [CrossRef]

- Hill, T.; Unckless, R.L. Adaptation, ancestral variation and gene flow in a ‘Sky Island’ Drosophila species. Mol. Ecol. 2021, 30, 83–99. [Google Scholar] [CrossRef]

- Menzel, R. A short history of studies on intelligence and brain in honeybees. Apidologie 2021, 52, 23–34. [Google Scholar] [CrossRef]

- Oellermann, M.; Strugnell, J.M.; Lieb, B.; Mark, F.C. Positive selection in octopus haemocyanin indicates functional links to temperature adaptation. BMC Evol. Biol. 2015, 15, 133, Erratum in: BMC Evol. Biol. 2015, 15, 267. https://doi.org/10.1186/s12862-015-0536-5. [Google Scholar] [CrossRef]

- Neacsu, D.; Crook, R.J. Repeating ultrastructural motifs provide insight into the organization of the octopus arm nervous system. Curr. Biol. 2024, 34, 4767–4773.e2. [Google Scholar] [CrossRef]

- Bundgaard, M.; Abbott, N.J. Fine structure of the blood-brain interface in the cuttlefish Sepia officinalis (Mollusca, Cephalopoda). J. Neurocytol. 1992, 21, 260–275. [Google Scholar] [CrossRef] [PubMed]

- Hochner, B. Octopuses. Curr. Biol. 2008, 18, R897–R898. [Google Scholar] [CrossRef]

- Vizcaíno, R.; Guardiola, F.A.; Prado-Alvarez, M.; Machado, M.; Costas, B.; Gestal, C. Functional and molecular immune responses in Octopus vulgaris skin mucus and haemolymph under stressful conditions. Aquac. Rep. 2023, 29, 101484. [Google Scholar] [CrossRef]

- Gestal, C.; Castellanos-Martínez, S. Understanding the cephalopod immune system based on functional and molecular evidence. Fish Shellfish Immunol. 2015, 46, 120–130. [Google Scholar] [CrossRef]

- Schipp, R. The blood vessels of cephalopods. A comparative morphological and functional survey. Experientia 1987, 43, 525–537. [Google Scholar] [CrossRef]

- Mather, J. Octopus Consciousness: The Role of Perceptual Richness. NeuroSci 2021, 2, 276–290. [Google Scholar] [CrossRef]

- Mather, J. Why Are Octopuses Going to Be the ‘Poster Child’ for Invertebrate Welfare? J. Appl. Anim. Welf. Sci. 2022, 25, 31–40. [Google Scholar] [CrossRef]

- Scheel, D.; Godfrey-Smith, P.; Lawrence, M. Signal Use by Octopuses in Agonistic Interactions. Curr. Biol. 2016, 26, 377–382. [Google Scholar] [CrossRef]

- Chung, W.S.; Kurniawan, N.D.; Marshall, N.J. Comparative Brain Structure and Visual Processing in Octopus from Different Habitats. Curr. Biol. 2022, 32, 97–110.e4. [Google Scholar] [CrossRef]

- Ponte, G.; Chiandetti, C.; Edelman, D.B.; Imperadore, P.; Pieroni, E.M.; Fiorito, G. Cephalopod Behavior: From Neural Plasticity to Consciousness. Front. Syst. Neurosci. 2022, 15, 787139. [Google Scholar] [CrossRef] [PubMed]

- Gutnick, T.; Kuba, M.J.; Di Cosmo, A. Neuroecology: Forces That Shape the Octopus Brain. Curr. Biol. 2022, 32, R131–R135. [Google Scholar] [CrossRef]

- Suryanarayana, S.M.; Pérez-Fernández, J.; Robertson, B.; Grillner, S. The evolutionary origin of visual and somatosensory representation in the vertebrate pallium. Nat. Ecol. Evol. 2020, 4, 639–651. [Google Scholar] [CrossRef]

- Roxo, M.R.; Franceschini, P.R.; Zubaran, C.; Kleber, F.D.; Sander, J.W. The limbic system conception and its historical evolution. Sci. World J. 2011, 11, 2428–2441. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J. The evolutionary and genetic origins of consciousness in the Cambrian Period over 500 million years ago. Front. Psychol. 2013, 4, 667. [Google Scholar] [CrossRef]

- Nieder, A. In search for consciousness in animals: Using working memory and voluntary attention as behavioral indicators. Neurosci. Biobehav. Rev. 2022, 142, 104865. [Google Scholar] [CrossRef]

- Madeira, N.; Oliveira, R.F. Long-Term Social Recognition Memory in Zebrafish. Zebrafish 2017, 14, 305–310. [Google Scholar] [CrossRef] [PubMed]

- Levin, E.D. Zebrafish assessment of cognitive improvement and anxiolysis: Filling the gap between in vitro and rodent models for drug development. Rev. Neurosci. 2011, 22, 75–84. [Google Scholar] [CrossRef]

- Daniel, D.K.; Bhat, A. Bolder and Brighter? Exploring Correlations Between Personality and Cognitive Abilities Among Individuals Within a Population of Wild Zebrafish, Danio rerio. Front. Behav. Neurosci. 2020, 14, 138. [Google Scholar] [CrossRef]

- Learmonth, M.J. The Matter of Non-Avian Reptile Sentience, and Why It “Matters” to Them: A Conceptual, Ethical and Scientific Review. Animals 2020, 10, 901. [Google Scholar] [CrossRef] [PubMed]

- Janovcová, M.; Rádlová, S.; Polák, J.; Sedláčková, K.; Peléšková, Š.; Žampachová, B.; Frynta, D.; Landová, E. Human Attitude toward Reptiles: A Relationship between Fear, Disgust, and Aesthetic Preferences. Animals 2019, 9, 238. [Google Scholar] [CrossRef] [PubMed]

- Grinde, B. Consciousness makes sense in the light of evolution. Neurosci. Biobehav. Rev. 2024, 164, 105824. [Google Scholar] [CrossRef]

- Fabbro, F.; Aglioti, S.M.; Bergamasco, M.; Clarici, A.; Panksepp, J. Evolutionary aspects of self- and world consciousness in vertebrates. Front. Hum. Neurosci. 2015, 9, 157. [Google Scholar] [CrossRef]

- Young, J.Z. The Anatomy of the Nervous System of Octopus vulgaris; Clarendon Press: Oxford, UK, 1971. [Google Scholar]

- Zullo, L.; Hochner, B. A New Perspective on the Organization of an Invertebrate Brain. Commun. Integr. Biol. 2011, 4, 26–29. [Google Scholar] [CrossRef]

- Carls-Diamante, S. The Octopus and the Unity of Consciousness. Biol. Philos. 2017, 32, 1269–1287. [Google Scholar] [CrossRef]

- Albertin, C.B.; Simakov, O.; Mitros, T.; Wang, Z.Y.; Pungor, J.R.; Edsinger-Gonzales, E.; Brenner, S.; Ragsdale, C.W.; Rokhsar, D.S. The Octopus Genome and the Evolution of Cephalopod Neural and Morphological Novelties. Nature 2015, 524, 220–224. [Google Scholar] [CrossRef]

- Liscovitch-Brauer, N.; Alon, S.; Porath, H.T.; Elstein, B.; Unger, R.; Ziv, T.; Admon, A.; Levanon, E.Y.; Rosenthal, J.J.C.; Eisenberg, E. Trade-Off Between Transcriptome Plasticity and Genome Evolution in Cephalopods. Cell 2017, 169, 191–202.e11. [Google Scholar] [CrossRef] [PubMed]

- Deryckere, A.; Seuntjens, E. The Cephalopod Large Brain Enigma: Are Conserved Mechanisms of Stem Cell Expansion the Key? Front. Physiol. 2018, 9, 1160. [Google Scholar] [CrossRef]

- Amodio, P.; Fiorito, G. A Preliminary Attempt to Investigate Mirror Self-Recognition in Octopus vulgaris. Front. Physiol. 2022, 13, 951808. [Google Scholar] [CrossRef] [PubMed]

- Godfrey-Smith, P. Cephalopods and the Evolution of the Mind. Pac. Conserv. Biol. 2013, 19, 4–9. [Google Scholar] [CrossRef]

- Chung, W.S.; Kurniawan, N.D.; Marshall, N.J. Toward an MRI-Based Mesoscale Connectome of the Squid Brain. iScience 2020, 23, 100816. [Google Scholar] [CrossRef]

- Dunton, A.D.; Göpel, T.; Ho, D.H.; Burggren, W. Form and Function of the Vertebrate and Invertebrate Blood-Brain Barriers. Int. J. Mol. Sci. 2021, 22, 12111. [Google Scholar] [CrossRef]

- Brown, E.R.; Piscopo, S. Synaptic Plasticity in Cephalopods: More Than Just Learning and Memory? Invert. Neurosci. 2013, 13, 35–44. [Google Scholar] [CrossRef]

- Matzel, L.D.; Kolata, S. Selective attention, working memory, and animal intelligence. Neurosci. Biobehav. Rev. 2010, 34, 23–30. [Google Scholar] [CrossRef]

- Öztel, T.; Balcı, F. Surfacing of Latent Time Memories Supports the Representational Basis of Timing Behavior in Mice. Anim. Cogn. 2024, 27, 57. [Google Scholar] [CrossRef]

- Lage, C.A.; Wolmarans, W.; Mograbi, D.C. An evolutionary view of self-awareness. Behav. Processes 2022, 194, 104543. [Google Scholar] [CrossRef]

- Zamorano-Abramson, J.; Michon, M.; Hernández-Lloreda, M.V.; Aboitiz, F. Multimodal imitative learning and synchrony in cetaceans: A model for speech and singing evolution. Front. Psychol. 2023, 14, 1061381. [Google Scholar] [CrossRef] [PubMed]

- Lei, Y. Sociality and self-awareness in animals. Front. Psychol. 2023, 13, 1065638. [Google Scholar] [CrossRef] [PubMed]

- Horowitz, A. Smelling themselves: Dogs investigate their own odours longer when modified in an “olfactory mirror” test. Behav. Processes 2017, 143, 17–24. [Google Scholar] [CrossRef] [PubMed]

- Cazzolla Gatti, R.; Velichevskaya, A.; Gottesman, B.; Davis, K. Grey wolf may show signs of self-awareness with the sniff test of self-recognition. Ethol. Ecol. Evol. 2020, 33, 444–467. [Google Scholar] [CrossRef]

- Kohda, M.; Sogawa, S.; Jordan, A.L.; Kubo, N.; Awata, S.; Satoh, S.; Kobayashi, T.; Fujita, A.; Bshary, R. Further evidence for the capacity of mirror self-recognition in cleaner fish and the significance of ecologically relevant marks. PLoS Biol. 2022, 20, e3001529. [Google Scholar] [CrossRef]

- King, S.L.; Guarino, E.; Donegan, K.; McMullen, C.; Jaakkola, K. Evidence that bottlenose dolphins can communicate with vocal signals to solve a cooperative task. R. Soc. Open Sci. 2021, 8, 202073. [Google Scholar] [CrossRef]

- Melis, A.P.; Tomasello, M. Chimpanzees (Pan troglodytes) coordinate by communicating in a collaborative problem-solving task. Proc. Biol. Sci. 2019, 286, 20190408. [Google Scholar] [CrossRef]

- Schamberg, I.; Surbeck, M.; Townsend, S.W. Cross-population variation in usage of a call combination: Evidence of signal usage flexibility in wild bonobos. Anim. Cogn. 2024, 27, 58. [Google Scholar] [CrossRef]

- Torday, J.S. Cellular evolution of language. Prog. Biophys. Mol. Biol. 2021, 167, 140–146. [Google Scholar] [CrossRef]

- Abramson, J.Z.; Hernández-Lloreda, M.V.; García, L.; Colmenares, F.; Aboitiz, F.; Call, J. Imitation of novel conspecific and human speech sounds in the killer whale (Orcinus orca). Proc. Biol. Sci. 2018, 285, 20172171. [Google Scholar] [CrossRef]

- Riemer, S.; Bonorand, A.; Stolzlechner, L. Evidence for the communicative function of human-directed gazing in 6- to 7-week-old dog puppies. Anim. Cogn. 2024, 27, 61. [Google Scholar] [CrossRef]

- Chakrabarti, S.; Bump, J.K.; Jhala, Y.V.; Packer, C. Contrasting levels of social distancing between the sexes in lions. iScience 2021, 24, 102406. [Google Scholar] [CrossRef]

- Mech, L.D. Alpha status, dominance, and division of labor in wolf packs. Can. J. Zool. 1999, 77, 1196–1203. [Google Scholar] [CrossRef]

- Cafazzo, S.; Lazzaroni, M.; Marshall-Pescini, S. Dominance relationships in a family pack of captive arctic wolves (Canis lupus arctos): The influence of competition for food, age and sex. PeerJ 2016, 4, e2707. [Google Scholar] [CrossRef]

- Watts, H.E.; Tanner, J.B.; Lundrigan, B.L.; Holekamp, K.E. Post-weaning maternal effects and the evolution of female dominance in the spotted hyena. Proc. Biol. Sci. 2009, 276, 2291–2298. [Google Scholar] [CrossRef] [PubMed]

- Archie, E.A.; Morrison, T.A.; Foley, C.A.H.; Moss, C.J.; Alberts, S.C. Dominance rank relationships among wild female African elephants, Loxodonta africana. Anim. Behav. 2006, 71, 117–127. [Google Scholar] [CrossRef]

- Pusey, A.E.; Schroepfer-Walker, K. Female competition in chimpanzees. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2013, 368, 20130077. [Google Scholar] [CrossRef]

- Sugiyama, Y. My primate studies. Primates 2022, 63, 9–24. [Google Scholar] [CrossRef]

- Kaplan, G. The evolution of social play in songbirds, parrots and cockatoos—Emotional or highly complex cognitive behaviour or both? Neurosci. Biobehav. Rev. 2024, 161, 105621. [Google Scholar] [CrossRef]

- Clayton, N.S.; Emery, N.J. The social life of corvids. Curr. Biol. 2007, 17, R652–R656. [Google Scholar] [CrossRef]

- Miller, L.J.; Lauderdale, L.K.; Mellen, J.D.; Walsh, M.T.; Granger, D.A. Assessment of animal management and habitat characteristics associated with social behavior in bottlenose dolphins across zoological facilities. PLoS ONE 2021, 16, e0253732. [Google Scholar] [CrossRef]

- Connor, R.C. Dolphin social intelligence: Complex alliance relationships in bottlenose dolphins and a consideration of selective environments for extreme brain size evolution in mammals. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2007, 362, 587–602. [Google Scholar] [CrossRef] [PubMed]

- Bates, L.A.; Byrne, R.W. Creative or created: Using anecdotes to investigate animal cognition. Methods 2007, 42, 12–21. [Google Scholar] [CrossRef] [PubMed]

- Hill, H.M.; Weiss, M.; Brasseur, I.; Manibusan, A.; Sandoval, I.R.; Robeck, T.; Sigman, J.; Werner, K.; Dudzinski, K.M. Killer whale innovation: Teaching animals to use their creativity upon request. Anim. Cogn. 2022, 25, 1091–1108. [Google Scholar] [CrossRef] [PubMed]

- Roth, G.; Dicke, U. Evolution of the brain and intelligence in primates. Prog. Brain Res. 2012, 195, 413–430. [Google Scholar] [CrossRef]

- Kuczaj, S.; Tranel, K.; Trone, M.; Hill, H. Are Animals Capable of Deception or Empathy? Implications for Animal Consciousness and Animal Welfare. Anim. Welf. 2001, 10 (Suppl. S1), S161–S173. [Google Scholar] [CrossRef]

- Hall, K.; Brosnan, S.F. Cooperation and deception in primates. Infant Behav. Dev. 2017, 48, 28–44. [Google Scholar] [CrossRef]

- Oesch, N. Deception as a Derived Function of Language. Front. Psychol. 2016, 7, 1485. [Google Scholar] [CrossRef]

- Dunbar, R.I.M.; Shultz, S. Why are there so many explanations for primate brain evolution? Philos. Trans. R. Soc. Lond. B Biol. Sci. 2017, 372, 20160244. [Google Scholar] [CrossRef]

- Vernouillet, A.; Leonard, K.; Katz, J.S.; Magnotti, J.F.; Wright, A.; Kelly, D.M. Abstract-concept learning in two species of new world corvids, pinyon jays (Gymnorhinus cyanocephalus) and California scrub jays (Aphelocoma californica). J. Exp. Psychol. Anim. Learn. Cogn. 2021, 47, 384–392. [Google Scholar] [CrossRef] [PubMed]

- Wright, A.A.; Magnotti, J.F.; Katz, J.S.; Leonard, K.; Vernouillet, A.; Kelly, D.M. Corvids Outperform Pigeons and Primates in Learning a Basic Concept. Psychol. Sci. 2017, 28, 437–444. [Google Scholar] [CrossRef]

- Jarvis, E.D.; Güntürkün, O.; Bruce, L.; Csillag, A.; Karten, H.; Kuenzel, W.; Medina, L.; Paxinos, G.; Perkel, D.J.; Shimizu, T.; et al. Avian Brain Nomenclature Consortium. Avian brains and a new understanding of vertebrate brain evolution. Nat. Rev. Neurosci. 2005, 6, 151–159. [Google Scholar] [CrossRef] [PubMed]

- Katz, J.S.; Wright, A.A.; Bodily, K.D. Issues in the Comparative Cognition of Abstract-Concept Learning. Comp. Cogn. Behav. Rev. 2007, 2, 79–92. [Google Scholar] [CrossRef] [PubMed]

- Matsuzawa, T. Symbolic representation of number in chimpanzees. Curr. Opin. Neurobiol. 2009, 19, 92–98. [Google Scholar] [CrossRef]

- Felsche, E.; Völter, C.J.; Herrmann, E.; Seed, A.M.; Buchsbaum, D. How can I find what I want? Can children, chimpanzees and capuchin monkeys form abstract representations to guide their behavior in a sampling task? Cognition 2024, 245, 105721. [Google Scholar] [CrossRef]

- Fragaszy, D.M. Cummins-Sebree SE. Relational spatial reasoning by a nonhuman: The example of capuchin monkeys. Behav. Cogn. Neurosci. Rev. 2005, 4, 282–306. [Google Scholar] [CrossRef]

- Chung, H.K.; Alós-Ferrer, C.; Tobler, P.N. Conditional valuation for combinations of goods in primates. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2021, 376, 20190669. [Google Scholar] [CrossRef]

- Saez, A.; Rigotti, M.; Ostojic, S.; Fusi, S.; Salzman, C.D. Abstract Context Representations in Primate Amygdala and Prefrontal Cortex. Neuron 2015, 87, 869–881. [Google Scholar] [CrossRef]

- Miletto Petrazzini, M.E.; Brennan, C.H. Application of an abstract concept across magnitude dimensions by fish. Sci. Rep. 2020, 10, 16935. [Google Scholar] [CrossRef]

- Diaz, F.; O’Donoghue, E.M.; Wasserman, E.A. Two-item conditional same-different categorization in pigeons: Finding differences. J. Exp. Psychol. Anim. Learn. Cogn. 2021, 47, 455–463. [Google Scholar] [CrossRef] [PubMed]

- Urcelay, G.P.; Miller, R.R. On the generality and limits of abstraction in rats and humans. Anim. Cogn. 2010, 13, 21–32. [Google Scholar] [CrossRef]

- Mendl, M.; Neville, V.; Paul, E.S. Bridging the Gap: Human Emotions and Animal Emotions. Affect Sci. 2022, 3, 703–712. [Google Scholar] [CrossRef]

- Panksepp, J.; Panksepp, J.B. Toward a cross-species understanding of empathy. Trends Neurosci. 2013, 36, 489–496. [Google Scholar] [CrossRef]

- Briefer, E.F. Vocal contagion of emotions in non-human animals. Proc. Biol. Sci. 2018, 285, 20172783. [Google Scholar] [CrossRef] [PubMed]

- Quaranta, A.; d’Ingeo, S.; Amoruso, R.; Siniscalchi, M. Emotion Recognition in Cats. Animals 2020, 10, 1107. [Google Scholar] [CrossRef]

- Albuquerque, N.; Resende, B. Dogs functionally respond to and use emotional information from human expressions. Evol. Hum. Sci. 2022, 5, e2. [Google Scholar] [CrossRef] [PubMed]

- Maurício, L.S.; Leme, D.P.; Hötzel, M.J. How to Understand Them? A Review of Emotional Indicators in Horses. J. Equine. Vet. Sci. 2023, 126, 104249. [Google Scholar] [CrossRef]

- Trösch, M.; Cuzol, F.; Parias, C.; Calandreau, L.; Nowak, R.; Lansade, L. Horses Categorize Human Emotions Cross-Modally Based on Facial Expression and Non-Verbal Vocalizations. Animals 2019, 9, 862. [Google Scholar] [CrossRef]

- Riters, L.V.; Polzin, B.J.; Maksimoski, A.N.; Stevenson, S.A.; Alger, S.J. Birdsong and the Neural Regulation of Positive Emotion. Front. Psychol. 2022, 13, 903857. [Google Scholar] [CrossRef]

- Papini, M.R.; Penagos-Corzo, J.C.; Pérez-Acosta, A.M. Avian Emotions: Comparative Perspectives on Fear and Frustration. Front. Psychol. 2019, 9, 2707. [Google Scholar] [CrossRef] [PubMed]

- Webb, C.E.; Romero, T.; Franks, B.; de Waal, F.B.M. Long-term consistency in chimpanzee consolation behaviour reflects empathetic personalities. Nat. Commun. 2017, 8, 292. [Google Scholar] [CrossRef]

- Wilson, J.M. Examining Empathy Through Consolation Behavior in Prairie Voles. J. Undergrad. Neurosci. Educ. 2021, 19, R35–R38. [Google Scholar] [PubMed]

- de Waal, F.B.M.; Preston, S.D. Mammalian empathy: Behavioural manifestations and neural basis. Nat. Rev. Neurosci. 2017, 18, 498–509. [Google Scholar] [CrossRef] [PubMed]

- Adriaense, J.E.C.; Koski, S.E.; Huber, L.; Lamm, C. Challenges in the comparative study of empathy and related phenomena in animals. Neurosci. Biobehav. Rev. 2020, 112, 62–82. [Google Scholar] [CrossRef]

- Edgar, J.L.; Nicol, C.J.; Clark, C.C.A.; Paul, E.S. Measuring empathic responses in animals. Appl. Anim. Behav. Sci. 2012, 138, 182–193. [Google Scholar] [CrossRef]

- Güntürkün, O.; Pusch, R.; Rose, J. Why birds are smart. Trends. Cogn. Sci. 2024, 28, 197–209. [Google Scholar] [CrossRef]

- dos Reis, M.; Thawornwattana, Y.; Angelis, K.; Telford, M.J.; Donoghue, P.C.; Yang, Z. Uncertainty in the Timing of Origin of Animals and the Limits of Precision in Molecular Timescales. Curr. Biol. 2015, 25, 2939–2950. [Google Scholar] [CrossRef]

- Vidal, C. What is the noosphere? Planetary superorganism, majorevolutionary transition and emergence. Syst. Res. Behav. Sci. 2024, 41, 614–662. [Google Scholar] [CrossRef]

- Jasečková, G.; Konvit, M.; Vartiak, L. Vernadsky’s concept of the noosphere and its reflection in ethical and moral values of society. Hist. Sci. Technol. 2022, 12, 231–248. [Google Scholar] [CrossRef]

- Delio, I. Can Science Alone Advance the Noosphere? Understanding Teilhard’s Omega Principle. Theol. Sci. 2024, 23, 21–37. [Google Scholar] [CrossRef]

- Miier, T.I.; Holodiuk, L.S.; Rybalko, L.M.; Tkachenko, I.A. Chronic fatigue development of modern human in the context of v. vernadsky’s nosophere theory. Wiad. Lek. 2019, 72, 1012–1016. [Google Scholar] [CrossRef] [PubMed]

- Deacon, T.W. Conscious evolution of the noösphere: Hubris or necessity? Relig. Brain Behav. 2023, 13, 457–459. [Google Scholar] [CrossRef]

- Oldfield, J.D.; Shaw, D.J.B.V.I. Vernadsky and the noosphere concept: Russian understandings of society–nature interaction. Geoforum 2006, 37, 145–154. [Google Scholar] [CrossRef]

- Pylypchuk, O. Whether the biosphere will turn into the noosphere? Review of the monograph: L. A. Griffen. The last stage of the biosphere evolution: Monograph. Kyiv: Talkom, 2024. 200 p. ISBN 978-617-8352-32-5. Hist. Sci. Technol. 2024, 14, 284–291. [Google Scholar] [CrossRef]

- Schlesinger, W.H. A Noosphere. BioScience 2018, 68, 722–723. [Google Scholar] [CrossRef]

- Korobova, E.; Romanov, S. Ecogeochemical exploration of noosphere in light of ideas of V.I. Vernadsky. J. Geochem. Explor. 2014, 147, 58–64. [Google Scholar] [CrossRef]

- Palagin, A.V.; Kurgaev, A.F.; Shevchenko, A.I. The Noosphere Paradigm of the Development of Science and Artificial Intelligence. Cybern. Syst. Anal. 2017, 53, 503–511. [Google Scholar] [CrossRef]

- Lapaeva, V.V. The Law of a Technogenic Civilization to Face Technological Dehumanization Challenges. Law Digit. Age 2021, 2, 3–32. [Google Scholar] [CrossRef]

- Clark, K.B. Digital life, a theory of minds, and mapping human and machine cultural universals. Behav. Brain Sci. 2020, 43, e98. [Google Scholar] [CrossRef]

- Kulikov, S. Non-anthropogenic mind and complexes of cultural codes. Semiotica 2016, 2016, 63–73. [Google Scholar] [CrossRef]

- Cottam, R.; Vounckx, R. Intelligence: Natural, artificial, or what? Biosystems 2024, 246, 105343. [Google Scholar] [CrossRef]

- Bayne, T.; Frohlich, J.; Cusack, R.; Moser, J.; Naci, L. Consciousness in the cradle: On the emergence of infant experience. Trends Cogn. Sci. 2023, 27, 1135–1149. [Google Scholar] [CrossRef] [PubMed]

- Clune, J.; Pennock, R.T.; Ofria, C.; Lenski, R.E. Ontogeny tends to recapitulate phylogeny in digital organisms. Am. Nat. 2012, 180, E54–E63. [Google Scholar] [CrossRef] [PubMed]

- Richardson, M.K.; Hanken, J.; Gooneratne, M.L.; Pieau, C.; Raynaud, A.; Selwood, L.; Wright, G.M. There is no highly conserved embryonic stage in the vertebrates: Implications for current theories of evolution and development. Anat. Embryol. 1997, 196, 91–106. [Google Scholar] [CrossRef] [PubMed]

- Hopwood, N. Pictures of evolution and charges of fraud: Ernst Haeckel’s embryological illustrations. Isis 2006, 97, 260–301. [Google Scholar] [CrossRef]

- Raff, R.A. The Shape of Life: Genes, Development, and the Evolution of Animal Form; University of Chicago Press: Chicago, IL, USA, 1996. [Google Scholar]

- DiFrisco, J.; Jaeger, J. Homology of process: Developmental dynamics in comparative biology. Interface Focus 2021, 11, 20210007. [Google Scholar] [CrossRef]

- Mashour, G.A.; Alkire, M.T. Evolution of consciousness: Phylogeny, ontogeny, and emergence from general anesthesia. Proc. Natl. Acad. Sci. USA 2013, 110 (Suppl. 2), 10357–10364. [Google Scholar] [CrossRef]

- Decety, J.; Svetlova, M. Putting together phylogenetic and ontogenetic perspectives on empathy. Dev. Cogn. Neurosci. 2012, 2, 1–24. [Google Scholar] [CrossRef]

- Friederici, A.D. Language development and the ontogeny of the dorsal pathway. Front. Evol. Neurosci. 2012, 4, 3. [Google Scholar] [CrossRef]

- Kolk, S.M.; Rakic, P. Development of prefrontal cortex. Neuropsychopharmacology 2022, 47, 41–57. [Google Scholar] [CrossRef]

- Ravignani, A.; Dalla Bella, S.; Falk, S.; Kello, C.T.; Noriega, F.; Kotz, S.A. Rhythm in speech and animal vocalizations: A cross-species perspective. Ann. N. Y. Acad. Sci. 2019, 1453, 79–98. [Google Scholar] [CrossRef] [PubMed]

- Seed, A.; Byrne, R. Animal tool-use. Curr. Biol. 2010, 20, R1032–R1039. [Google Scholar] [CrossRef] [PubMed]

- Lazarowski, L.; Davila, A.; Krichbaum, S.; Cox, E.; Smith, J.G.; Waggoner, L.P.; Katz, J.S. Matching-to-sample abstract-concept learning by dogs (Canis familiaris). J. Exp. Psychol. Anim. Learn. Cogn. 2021, 47, 393–400. [Google Scholar] [CrossRef]

- Bajaffer, A.; Mineta, K.; Gojobori, T. Evolution of memory system-related genes. FEBS Open. Bio. 2021, 11, 3201–3210. [Google Scholar] [CrossRef]

- Hampton, R.R.; Schwartz, B.L. Episodic memory in nonhumans: What, and where, is when? Curr. Opin. Neurobiol. 2004, 14, 192–197. [Google Scholar] [CrossRef]

- Farnworth, M.S.; Montgomery, S.H. Evolution of neural circuitry and cognition. Biol. Lett. 2024, 20, 20230576. [Google Scholar] [CrossRef]

- Eyler, L.T.; Elman, J.A.; Hatton, S.N.; Gough, S.; Mischel, A.K.; Hagler, D.J.; Franz, C.E.; Docherty, A.; Fennema-Notestine, C.; Gillespie, N.; et al. Resting State Abnormalities of the Default Mode Network in Mild Cognitive Impairment: A Systematic Review and Meta-Analysis. J. Alzheimers Dis. 2019, 70, 107–120. [Google Scholar] [CrossRef]

- Huang, H.; Chen, C.; Rong, B.; Wan, Q.; Chen, J.; Liu, Z.; Zhou, Y.; Wang, G.; Wang, H. Resting-State Functional Connectivity of Salience Network in Schizophrenia and Depression. Sci. Rep. 2022, 12, 11204. [Google Scholar] [CrossRef] [PubMed]

- Tai, M.C. The impact of artificial intelligence on human society and bioethics. Tzu. Chi. Med. J. 2020, 32, 339–343. [Google Scholar] [CrossRef]

- Morgenstern, J.D.; Rosella, L.C.; Daley, M.J.; Goel, V.; Schünemann, H.J.; Piggott, T. “AI’s gonna have an impact on everything in society, so it has to have an impact on public health”: A fundamental qualitative descriptive study of the implications of artificial intelligence for public health. BMC Public Health 2021, 21, 40. [Google Scholar] [CrossRef] [PubMed]

- Alfeir, N.M. Dimensions of artificial intelligence on family communication. Front. Artif. Intell. 2024, 7, 1398960. [Google Scholar] [CrossRef]

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.-W.; et al. Artificial intelligence: A powerful paradigm for scientific research. Innovation 2021, 2, 100179. [Google Scholar] [CrossRef]

- Oksanen, A.; Cvetkovic, A.; Akin, N.; Latikka, R.; Bergdahl, J.; Chen, Y.; Savela, N. Artificial intelligence in fine arts: A systematic review of empirical research. Comput. Hum. Behav. Artif. Hum. 2023, 1, 100004. [Google Scholar] [CrossRef]

- Zylinska, J. Art in the age of artificial intelligence. Science 2023, 381, 139–140. [Google Scholar] [CrossRef]

- Dave, T.; Athaluri, S.A.; Singh, S. ChatGPT in medicine: An overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front. Artif. Intell. 2023, 6, 1169595. [Google Scholar] [CrossRef]

- Oludare, I.A.; Aman, J.A.E.O.; Kemi, V.D.; Nachaat, A.M.; Humaira, A. State-of-the-art in artificial neural network applications: A survey. Heliyon 2018, 4, e00938. [Google Scholar]

- Ivcevic, Z.; Grandinetti, M. Artificial intelligence as a tool for creativity. J. Creat. 2024, 34, 100079. [Google Scholar] [CrossRef]

- Cheng, M. The Creativity of Artificial Intelligence in Art. Proceedings 2022, 81, 110. [Google Scholar] [CrossRef]

- Grilli, L.; Pedota, M. Creativity and artificial intelligence: A multilevel perspective. Creat. Innov. Manag. 2024, 33, 234–247. [Google Scholar] [CrossRef]

- Magurean, I.D.; Brata, A.M.; Ismaie, A.; Barsan, M.; Czako, Z.; Pop, C.; Muresan, L.; Jurje, A.I.; Dumitrascu, D.I.; Brata, V.D.; et al. Artificial Intelligence as a Substitute for Human Creativity. J. Res. Philos. Hist. 2024, 7, p7. [Google Scholar] [CrossRef]