Abstract

Autism Spectrum Disorder (ASD) seriously affects social, communication, and behavioral functions, and early, accurate diagnosis is crucial to improving patient prognosis. Traditional diagnosis relies on clinicians making subjective judgments with rating scales, feature extraction in existing machine learning methods is inefficient, and existing deep learning methods are limited in capturing time-varying features and in jointly representing time–frequency features. To this end, this study proposes a time–frequency synergy network (TFSNet) to improve the accuracy of ASD EEG signal classification. The proposed Time-Domain Dynamic Residual Block (TDRB) enhances time-domain feature extraction; the Short-Time Fourier Transform (STFT), a convolutional attention mechanism, and a Transformer encoder are combined to capture frequency-domain information; and an adaptive cross-domain attention mechanism (ACDA) is designed to fuse time–frequency features efficiently. The experimental results show that the average accuracy of TFSNet on the University of Sheffield dataset (28 ASD patients and 28 healthy controls) and the KAU dataset (12 ASD patients and five healthy controls) reaches 98.68% and 97.14%, respectively, significantly better than the existing machine learning and deep learning methods used for comparison. In addition, analyzing the model’s decisions with interpretability techniques enhances its transparency and reliability.

1. Introduction

Autism Spectrum Disorder (ASD) is a complex neurodevelopmental condition characterized by challenges in social interaction, communication, and repetitive behaviors [1,2]. The World Health Organization estimates that approximately 1 in 100 children worldwide are diagnosed with ASD, with prevalence varying significantly by region and socioeconomic status, reflecting global differences in diagnostic capabilities and service provision [3]. The etiology of ASD remains unclear, though research suggests it results from complex interactions between genetic and environmental factors [4,5].

Currently, ASD diagnosis relies on clinical observations and behavioral assessments by medical professionals [6,7,8,9], a process that is time-consuming and lacks objective biological indicators, potentially leading to diagnostic variability [10]. In recent years, an increasing number of studies have focused on identifying ASD through biomarkers, especially the electroencephalogram (EEG) [11]. While magnetic resonance imaging (MRI) [12] and computed tomography (CT) [13] are also used in ASD research, EEG offers advantages, including cost-effectiveness, high temporal resolution, and noninvasiveness [14]. Although EEG signal analysis requires preprocessing for noise and artifact removal [15], constructing ASD diagnostic models using EEG signals remains crucial for clinical screening and diagnosis support.

Many researchers are applying machine learning approaches to ASD EEG signal analysis for feature extraction and classification [16]. However, traditional machine learning methods typically rely on manual feature extraction, requiring tedious feature engineering that depends heavily on researcher experience and domain knowledge [17,18]. To address these limitations, researchers have explored increasingly complex feature extraction methods to improve classification performance [19,20,21].

The development of deep learning has made it a prominent area of research in ASD EEG signal analysis [22,23,24,25]. Compared to traditional machine learning, deep learning automatically extracts complex nonlinear features from data, greatly reducing the need for manual feature engineering. Researchers are continuing to explore methods for automating feature extraction and minimizing manual intervention. Tawhid et al. [26] proposed an effective diagnostic framework based on EEG spectrogram images. The authors applied a Short-Time Fourier Transform to convert the processed signals into 2D spectrogram images, which were then analyzed using machine learning. Ari et al. [27] proposed a method combining the Douglas–Peucker algorithm, sparse coding-based feature mapping, and a convolutional neural network. The authors sparsely encode the EEG rhythm during feature extraction and input it into a CNN for training. Baygin et al. [28] proposed a method for automatically detecting ASD. They extracted one-dimensional Local Binary Pattern (1D-LBP) features from electroencephalogram (EEG) signals and generated spectrogram images using Short-Time Fourier Transform (STFT). Pretrained MobileNetV2, ShuffleNet, and SqueezeNet models were used to extract deep features. Feature selection was performed using a two-layer ReliefF algorithm, resulting in excellent performance. Ardakani et al. [29] divided the EEG signals from autistic and healthy individuals into nonoverlapping windows, treated them as images, and classified them using a 2D deep convolutional neural network (2D-DCNN). Wadhera et al. [30] employed transfer learning to classify autism using pretrained EEGNet and DeepConvNet models, achieving accuracies of 89.6% and 92.3%, respectively. Ullah et al. [31] proposed a deep learning-based weighted ensemble model for classifying Autism Spectrum Disorder (ASD) using electroencephalogram (EEG) data. 
They converted the original EEG signals into multi-channel two-dimensional spectrograms using Short-Time Fourier Transform (STFT). A grid search strategy was then employed to determine the optimal model weight combination, resulting in excellent performance.

Despite recent advances in feature extraction research for autism signal classification, traditional convolutional neural networks are still insufficient to capture temporal variations in EEG signals. Due to the use of a fixed convolutional kernel, it is difficult for these models to adapt to the dynamic changes of EEG signals. In addition, most current studies lack a strategy to jointly represent time- and frequency-domain EEG signals at the feature level, which limits the effective capture of integrated signal information. These limitations lead to a weak generalization ability of existing methods when faced with different datasets in subject-related experiments, making it difficult to maintain stable performance under different acquisition environments and participant characteristics. To address these issues, we propose a time–frequency synergy network (TFSNet) that fuses time- and frequency-domain features for the classification of EEG signals in autism. This network introduces dynamic convolution into the time-domain feature extraction, which can adaptively adjust the parameters of the convolution kernel and enhance the ability to capture the temporal variations of EEG signals. In addition, this paper proposes an attention mechanism that fuses time-domain and frequency-domain features, which reveals the dynamic changes and complex patterns of EEG signals in greater depth while effectively representing the time-domain and frequency-domain features jointly. The contributions of this paper are as follows:

- A time–frequency synergy network model is proposed in this paper for the feature extraction and classification of autism EEG signals. This model effectively extracts key information from EEG signals and significantly improves classification performance.

- This paper introduces dynamic convolution into EEG time-domain feature extraction for ASD. We propose the TDRB module, which adaptively adjusts convolution kernel parameters and enhances the model’s ability to capture time-varying features.

- This paper proposes an adaptive cross-domain attention mechanism to fuse time-domain and frequency-domain features. This mechanism extracts and integrates key information from the time and frequency features and realizes feature-level interaction and fusion.

- This paper compares the proposed model with other deep learning models and mainstream methods on the same datasets and uses the SHAP method to enhance the transparency and interpretability of the model, thus verifying its superior performance and application potential in autism EEG signal diagnosis.

2. Materials and Methods

2.1. Dataset

This paper utilized the King Abdulaziz University dataset and the University of Sheffield dataset to include diverse age groups and ensure sample variety.

2.1.1. King Abdulaziz University Dataset

The KAU dataset [32] used in this paper is public and can be obtained by researchers through the link (https://malhaddad.kau.edu.sa/Pages-BCI-Datasets.aspx (17 October 2024)) or by emailing a request to the dataset owner, Dr. Mohammed Jafar Alhaddad (malhaddad@kau.edu.sa). The dataset does not contain the participants’ personal identification information, which protects their privacy. The dataset includes 17 subjects: 12 ASD participants (boys and girls aged 6–20 years) and five controls (boys aged 9–13 years). For more information about the dataset, please refer to [28]. In addition, to keep the dataset balanced and prevent overfitting, we visually selected five ASD recordings that were largely free of artifacts so that the ASD group matched the control group in size.

2.1.2. The University of Sheffield Dataset

Another dataset used in this paper is the Sheffield University Public Dataset [33]. It includes data from 28 individuals diagnosed with Autism Spectrum Disorder (ASD) and 28 neurologically normal controls, with participants ranging in age from 18 to 68 years. The recordings were made during 2.5 min (150 s) of rest with eyes closed. The dataset authors collected the data at a sampling frequency of 512 Hz and re-referenced them to the average of all electrodes. Data collection and sharing were approved by the Health Research Authority under IRAS ID 212171 [33].

2.2. Preprocessing

The raw EEG signals went through several preprocessing stages. First, we downsampled the data to 256 Hz. Subsequently, a Butterworth bandpass filter (0.5–100 Hz) was used to remove noise and high-frequency components of nonbrain electrical activity from the EEG signals. We then decomposed the filtered EEG signals using independent component analysis (ICA) to identify and remove components related to non-neural artifacts such as eye movements, electrocardiograms, and electromyography. In addition, a whole-brain average reference was applied to standardize the signals across channels. Furthermore, we applied 50 Hz and 60 Hz notch filters to the KAU dataset and the University of Sheffield dataset, respectively, to remove line noise. Finally, we performed Z-score normalization on the preprocessed datasets. The preprocessed data were then divided into nonoverlapping 2 s segments, each of which served as one sample.
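The last two steps of this pipeline can be sketched as follows. This is a minimal illustration rather than the authors' code: the filtering, ICA, and re-referencing stages are omitted, the function names `zscore` and `segment` are hypothetical, the normalization is assumed to be per channel over the whole recording, and the input is a synthetic stand-in for a cleaned recording.

```python
import numpy as np

def zscore(eeg: np.ndarray) -> np.ndarray:
    """Z-score each channel of a (channels, samples) recording."""
    mean = eeg.mean(axis=1, keepdims=True)
    std = eeg.std(axis=1, keepdims=True)
    # Guard against flat channels to avoid division by zero.
    return (eeg - mean) / np.where(std == 0, 1.0, std)

def segment(eeg: np.ndarray, fs: int = 256, seconds: float = 2.0) -> np.ndarray:
    """Cut a (channels, samples) recording into nonoverlapping windows.

    Returns an array of shape (n_segments, channels, window). Trailing
    samples that do not fill a whole window are dropped.
    """
    window = int(fs * seconds)
    n_segments = eeg.shape[1] // window
    trimmed = eeg[:, : n_segments * window]
    return trimmed.reshape(eeg.shape[0], n_segments, window).transpose(1, 0, 2)

# Synthetic stand-in: 4 channels, 150 s at 256 Hz (not real EEG).
rng = np.random.default_rng(0)
recording = rng.normal(size=(4, 150 * 256))
segments = segment(zscore(recording))
print(segments.shape)  # (75, 4, 512)
```

At 256 Hz, each 2 s sample therefore contains 512 time points per channel, and a 150 s recording yields 75 samples.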

2.3. Overview of Time–Frequency Synergy Network Architecture

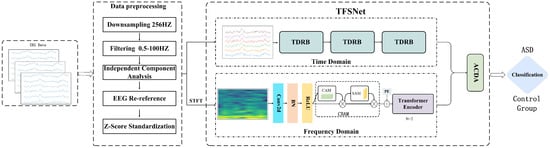

To more comprehensively characterize the feature information in EEG signals, this paper proposes a time–frequency synergy network—TFSNet—which integrates time-domain and frequency-domain features. The main process and architecture are shown in Figure 1. As shown, the TFSNet consists of two streams—a time-domain stream and a frequency-domain stream—which extract and fuse time-domain and frequency-domain features, respectively. The time-domain stream extracts the time characteristics of the signal through a dynamic residual network constructed by dynamic convolution, while the frequency-domain stream first uses Short-Time Fourier Transform (STFT) to obtain the frequency domain characteristics. To achieve efficient interaction and fusion between the two features, this paper also proposes an adaptive cross-domain attention mechanism to further improve the overall performance of the model. By combining time-domain and frequency-domain features, the model effectively captures multi-scale features and global patterns in EEG signals, enhancing the accuracy and robustness of Autism Spectrum Disorder (ASD) classification. The design and implementation of the model are described in detail below.

Figure 1.

Overall visualization of the time–frequency synergy network (TFSNet) architecture.

2.3.1. Temporal Feature Extraction with Dynamic Convolution and Residual Connection

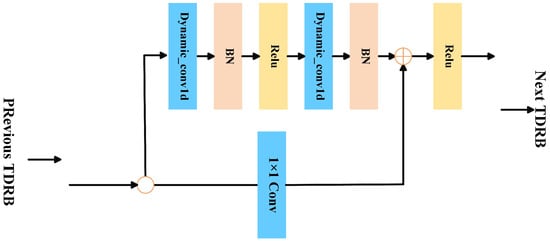

Dynamic convolution, unlike static convolution with fixed kernels, adaptively generates convolution kernels for each input, improving temporal feature extraction in EEG signals [34]. Therefore, we propose a Time-Domain Dynamic Residual Block (TDRB) that combines dynamic convolution and residual connection mechanisms [35]. The TDRB structure is illustrated in Figure 2. It adaptively adjusts convolution kernel parameters and prevents gradient vanishing, thereby enhancing the model’s sensitivity to different input signals. This improves the model’s ability to extract temporal variations in autism EEG.

Figure 2.

Structure of the Time-Domain Dynamic Residual Block (TDRB).

Each input feature map of the TDRB first passes through two dynamic convolutional layers, which are each followed by a batch normalization layer (BN) [36] to obtain normalized data. The input features are not only nonlinearly mapped by the dynamic convolution module but also directly connected to the output through identity mapping. The final output of the dynamic residual block is expressed as

where

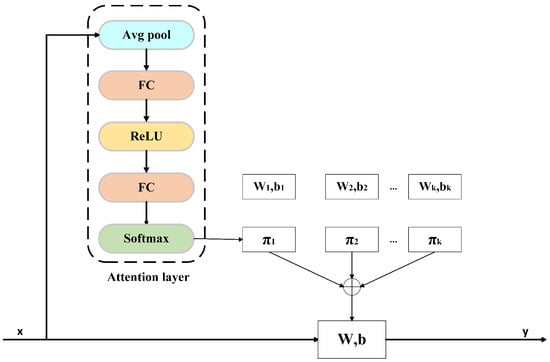

is defined based on channel consistency: If the input and output channels match, ; otherwise, , where and denote the weights and bias of the Conv. Here, indicates the i-th dynamic convolution. Dynamic convolution creates a convolution kernel tailored to the input sample by weighted summation of multiple kernels, enabling a single convolution operation. Compared to traditional convolution, dynamic convolution enhances both feature extraction and representation capabilities. The calculation process is shown in Figure 3, where the input is x, and the output is y, which are defined as

where

where represents the normalized attention weight for the k-th kernel (, ), reflecting its relative contribution to the input x. and denote the aggregated kernel weight and bias.

Figure 3.

Dynamic convolution calculation process.

The weight coefficient $\pi_k(\mathbf{x})$ in the dynamic convolution is not fixed but is adjusted accordingly as the input EEG signal changes dynamically. It is determined by an attention mechanism. This attention mechanism is similar to the Squeeze-and-Excitation attention mechanism (SE) [37], except that it uses softmax instead of sigmoid as the activation function, which can dynamically adjust the output sparsity. The calculation process can be expressed as

$$\mathbf{z} = \mathrm{FC}\big(\mathrm{GAP}(\mathbf{x})\big), \qquad \pi_k(\mathbf{x}) = \frac{\exp(z_k/\tau)}{\sum_{j=1}^{K} \exp(z_j/\tau)},$$

where $\mathrm{GAP}$ denotes the global average pooling operation and $\mathrm{FC}$ denotes the fully connected layers of the SE-style branch. $z_j$ is the unnormalized attention score of the j-th convolution kernel, which is used to calculate the final attention weight, and $z_k$ represents the unnormalized attention score of the k-th convolution kernel. A constant $\tau$ controls the smoothness of these weights; a large $\tau$ ensures that the early attention distribution remains nearly uniform.
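The dynamic convolution described in this section can be sketched in a few lines of NumPy. This is an illustrative simplification, not the authors' implementation: the attention branch is reduced to a single linear layer `w_att` after global average pooling (an SE-style branch would normally use two layers), the convolution is one-dimensional and unpadded, and all names and sizes are hypothetical.

```python
import numpy as np

def softmax_t(z, tau):
    """Softmax with temperature tau; a larger tau gives a flatter distribution."""
    z = np.asarray(z, dtype=float) / tau
    e = np.exp(z - z.max())
    return e / e.sum()

def dynamic_conv1d(x, kernels, biases, w_att, tau):
    """Input-dependent 1D convolution.

    x: (C_in, T); kernels: (K, C_out, C_in, L); biases: (K, C_out);
    w_att: (K, C_in). Returns the output (C_out, T - L + 1) and the
    attention weights over the K kernels.
    """
    pooled = x.mean(axis=1)                # global average pooling over time
    pi = softmax_t(w_att @ pooled, tau)    # one attention weight per kernel
    W = np.tensordot(pi, kernels, axes=1)  # aggregated kernel (C_out, C_in, L)
    b = pi @ biases                        # aggregated bias (C_out,)
    L = W.shape[2]
    T_out = x.shape[1] - L + 1
    y = np.empty((W.shape[0], T_out))
    for t in range(T_out):                 # a single convolution with the aggregated kernel
        y[:, t] = np.tensordot(W, x[:, t:t + L], axes=([1, 2], [0, 1])) + b
    return y, pi

rng = np.random.default_rng(0)
K, C_in, C_out, L = 4, 3, 2, 5
x = rng.normal(size=(C_in, 64))
kernels = rng.normal(size=(K, C_out, C_in, L))
biases = rng.normal(size=(K, C_out))
w_att = rng.normal(size=(K, C_in))

y_hot, pi_hot = dynamic_conv1d(x, kernels, biases, w_att, tau=30.0)
y_cold, pi_cold = dynamic_conv1d(x, kernels, biases, w_att, tau=1.0)
print(y_hot.shape)                          # (2, 60)
print(pi_hot.round(3), pi_cold.round(3))    # high temperature is closer to uniform
```

Because the kernels are aggregated before the convolution, the cost of the convolution itself does not grow with the number of kernels; only the small attention branch is added.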

2.3.2. Attention-Enhanced Frequency-Domain Feature Extraction

Frequency-domain features offer a unique perspective on neural activity and can reveal the specific functions of different frequency bands in EEG signal analysis. Furthermore, time-domain and frequency-domain features complement each other [38], and leveraging both can significantly enhance EEG signal decoding accuracy. Therefore, in addition to the time-domain feature extraction, we adopt a frequency-domain feature extraction branch and fuse its output with the time-domain features to improve the overall performance of the model. Frequency-domain features are also extensively applied in the research and diagnosis of neurological disorders, such as attention deficit hyperactivity disorder (ADHD) [39] and epilepsy [40].

Prior to feature extraction, we apply the Short-Time Fourier Transform (STFT) to the raw EEG signals to obtain their frequency-domain representation, using a Hanning window with a window length of 256 and an overlap of 50%. Next, a convolutional neural network (CNN) processes the frequency-domain representation to extract low-level local features, capturing local patterns and dynamic changes. To further enhance the frequency-domain features, extract key information, and suppress irrelevant features, a Convolutional Block Attention Module (CBAM) [41] is introduced in the frequency-domain branch. The CBAM adopts the standard configuration, a sequential combination of two sub-modules (channel attention and spatial attention), and adaptively assigns higher weights to important features, enhancing the model’s representational capacity.
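The STFT settings stated above (Hanning window, window length 256, 50% overlap) can be illustrated with a short NumPy sketch. It assumes a single-channel 2 s segment sampled at 256 Hz and applies no padding at the segment edges; library routines often pad by default, so frame counts may differ. The function name `stft_magnitude` is hypothetical.

```python
import numpy as np

def stft_magnitude(signal, n_window=256, overlap=0.5):
    """Magnitude STFT with a Hanning window; returns (freq_bins, frames)."""
    hop = int(n_window * (1 - overlap))
    window = np.hanning(n_window)
    n_frames = 1 + (len(signal) - n_window) // hop
    frames = np.stack([
        signal[i * hop : i * hop + n_window] * window
        for i in range(n_frames)
    ])
    return np.abs(np.fft.rfft(frames, axis=1)).T

fs = 256
t = np.arange(2 * fs) / fs                # one 2 s segment
segment = np.sin(2 * np.pi * 10 * t)      # synthetic 10 Hz (alpha-band) tone
spec = stft_magnitude(segment)
print(spec.shape)                         # (129, 3)
print(spec[:, 0].argmax())                # 10
```

With a 256-sample window at 256 Hz, each frequency bin is 1 Hz wide, so the synthetic 10 Hz tone peaks in bin 10, and a 512-sample segment yields three half-overlapping frames.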

Frequency-domain feature extraction must capture not only the local features of the signal but also long-term dependencies and global patterns between different periods. To this end, a Transformer encoder is introduced after the CBAM to further model the spectral features, thereby enhancing the model’s classification ability for ASD. We use only the encoder part of the Transformer, with four parallel attention heads, so that the CBAM output is modeled globally and the global dependencies of the EEG are captured.

2.3.3. Time-Frequency Feature Fusion of Adaptive Cross-Domain Attention Mechanism

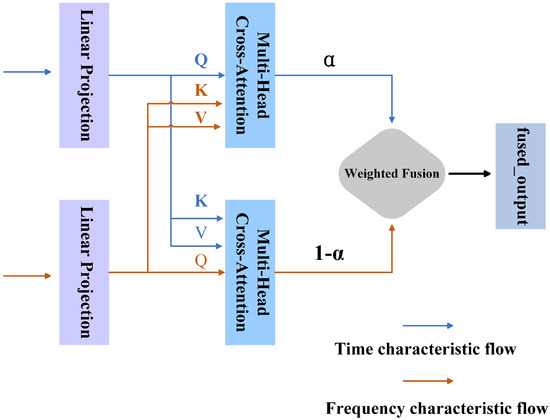

In autism EEG data, the features in the time and frequency domain are highly complementary. However, they are relatively independent in their feature representation. Simply concatenating the two features may introduce redundant or irrelevant information, hindering effective model learning [42,43]. Therefore, this paper proposes an Adaptive Cross-Domain Attention mechanism (ACDA), which facilitates interaction and fusion between time- and frequency-domain features and improves the decoding accuracy of EEG signals.

Unlike traditional multi-head attention mechanisms that typically focus on multi-view learning within the same feature domain, ACDA emphasizes cross-domain interactions between time-domain and frequency-domain representations. These attention heads operate in different directions (time-to-frequency and frequency-to-time) to capture complementary information and simultaneously suppress redundant signals. Among them, the multi-head attention mechanism can be understood as the process in which the model analyzes EEG data from multiple perspectives simultaneously, similar to how clinicians integrate different types of information (such as behavioral observations and neural signals) to form a comprehensive diagnosis. Each “head” in this mechanism focuses on a specific aspect of the time–frequency relationship, enabling the model to capture complex neural patterns related to ASD classification. Compared to more complex fusion techniques (e.g., bilinear pooling), ACDA avoids excessively increasing model parameters while still capturing higher-order correlations across domains. The specific structure is illustrated in Figure 4, where multiple attention heads adaptively learn to emphasize the most discriminative features for robust ASD classification.

Figure 4.

Adaptive Cross-Domain Attention mechanism structure.

The input features $F_t$ and $F_f$ are first linearly projected into their respective query, key, and value matrices: $Q_t$, $K_t$, $V_t$ for the time domain and $Q_f$, $K_f$, $V_f$ for the frequency domain. The cross-domain attention mechanism computes attention weights by interacting the projected matrices. Specifically, $Q_t$ from the time domain is matched with $K_f$ from the frequency domain to calculate $A_{t \to f}$, representing the attention flow from the time to the frequency domain. Conversely, $Q_f$ and $K_t$ compute $A_{f \to t}$, reflecting attention flow in the opposite direction. These computations effectively model the interplay between the time and frequency domains, as formalized below:

$$A_{t \to f} = \mathrm{softmax}\!\left(\frac{Q_t K_f^{\top}}{\sqrt{d_k}}\right), \qquad A_{f \to t} = \mathrm{softmax}\!\left(\frac{Q_f K_t^{\top}}{\sqrt{d_k}}\right),$$

where $A_{t \to f}$ and $A_{f \to t}$ denote the attention weights between the time and frequency domains in both directions, and $d_k$ is the dimension of the key vectors. To enhance adaptability, learnable weight parameters $\alpha$ and $\beta$ are introduced to adjust these weights, producing the weighted results $\alpha A_{t \to f}$ and $\beta A_{f \to t}$ dynamically.

After obtaining the weighted attention weights, we further calculate the output of the cross-domain features:

$$O_t = \alpha A_{t \to f} V_f, \qquad O_f = \beta A_{f \to t} V_t,$$

where $O_t$ represents the time domain feature output obtained by weighting the attention from the time domain to the frequency domain, and $O_f$ represents the frequency domain feature output obtained by weighting the attention from the frequency domain to the time domain.

To reduce the feature redundancy after fusion, we use an adaptive fusion factor $\lambda$ to perform a weighted fusion of the two features $O_t$ and $O_f$ to obtain the final fusion feature O. The formula is as follows:

$$\lambda = \sigma\big(W_g\,[O_t; O_f] + b_g\big), \qquad O = \lambda \odot O_t + (1 - \lambda) \odot O_f,$$

where $\sigma$ represents the sigmoid activation function, and $W_g$ and $b_g$ are learned parameters. Through this fusion strategy, the model can automatically adjust the fusion ratio of time domain and frequency domain features according to the specific distribution of the data, thereby optimizing the joint expression of features.
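The bidirectional attention and gated fusion described in this section can be sketched as below. This is a single-head NumPy illustration under several assumptions that the text does not specify: scaled dot-product attention, time and frequency features with the same number of tokens, scalar direction weights (`alpha`, `beta`), and a sigmoid gate computed from the concatenated cross-domain outputs. All parameter names and sizes are hypothetical.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def acda(F_t, F_f, p):
    """Single-head cross-domain attention with gated fusion.

    F_t, F_f: (N, d_in) time-domain and frequency-domain token features.
    Returns the fused features (N, d) and both attention maps.
    """
    d = p["Wq_t"].shape[1]
    Q_t, K_t, V_t = F_t @ p["Wq_t"], F_t @ p["Wk_t"], F_t @ p["Wv_t"]
    Q_f, K_f, V_f = F_f @ p["Wq_f"], F_f @ p["Wk_f"], F_f @ p["Wv_f"]

    A_tf = softmax(Q_t @ K_f.T / np.sqrt(d))   # time queries attend to frequency keys
    A_ft = softmax(Q_f @ K_t.T / np.sqrt(d))   # frequency queries attend to time keys

    O_t = (p["alpha"] * A_tf) @ V_f            # learnable scaling of each direction
    O_f = (p["beta"] * A_ft) @ V_t

    # Adaptive fusion factor: element-wise gate between the two outputs.
    lam = sigmoid(np.concatenate([O_t, O_f], axis=-1) @ p["W_g"] + p["b_g"])
    return lam * O_t + (1.0 - lam) * O_f, A_tf, A_ft

rng = np.random.default_rng(0)
N, d_in, d = 6, 8, 4
names = ["Wq_t", "Wk_t", "Wv_t", "Wq_f", "Wk_f", "Wv_f"]
params = {n: rng.normal(size=(d_in, d)) for n in names}
params.update(alpha=1.0, beta=1.0, W_g=rng.normal(size=(2 * d, d)), b_g=np.zeros(d))

fused, A_tf, A_ft = acda(rng.normal(size=(N, d_in)), rng.normal(size=(N, d_in)), params)
print(fused.shape)  # (6, 4)
```

Because the gate lies between 0 and 1, every fused element is a convex combination of the two cross-domain outputs, which is one simple way to let the data decide the fusion ratio.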

2.4. Performance Evaluation Parameters

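We use several evaluation metrics to assess the proposed model: accuracy, precision, recall, specificity, and F1-score, defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN},$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP}, \qquad \mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},$$

where TP and TN are the numbers of true positives and true negatives, respectively, and FP and FN are the numbers of false positives and false negatives, respectively.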
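The five metrics follow the usual confusion-matrix definitions and can be computed directly from the four counts. The sketch below uses arbitrary illustrative counts, not results from this study, and the function name is hypothetical.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

# Illustrative counts only.
m = classification_metrics(tp=45, tn=40, fp=10, fn=5)
print(m["accuracy"], m["recall"], m["specificity"])  # 0.85 0.9 0.8
```

In a screening context, recall measures how many ASD cases are detected, while specificity measures how many controls are correctly ruled out.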

3. Results

3.1. Model Parameters

To evaluate the performance of the model, we randomly divided the EEG data into five equal parts, used 5-fold cross-validation, and report the average of each evaluation index over the folds. During training, the batch size and the number of training epochs were set to 32 and 100, respectively, the initial learning rate was 0.01, and the Adam optimizer was used. The dynamic convolution has two key parameters: the number of kernels K and the temperature coefficient $\tau$. To determine the optimal values of these two parameters, we adopted a grid search strategy. For each parameter combination, the same training configuration was used, and the best combination was selected based on the minimum loss value on the test set. The experimental results show that the model performs best when K and $\tau$ are 10 and 25, respectively. Therefore, these settings were used in the final model training.
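A random 5-fold split of this kind can be sketched as follows. The generator name `k_fold_indices` and the seed are hypothetical, and the split is assumed to be made at the segment level, so segments from one subject may appear in both the training and test folds.

```python
import numpy as np

def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for a shuffled k-fold split."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]

for fold, (train_idx, test_idx) in enumerate(k_fold_indices(100)):
    # Each sample is used for testing exactly once across the five folds.
    print(fold, len(train_idx), len(test_idx))  # 80 training, 20 test samples per fold
```

A grid search over K and the temperature coefficient would wrap this loop, training one model per fold for every candidate pair and comparing the averaged losses.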

3.2. Experimental Results and Analysis

This section analyzes and summarizes the results of the classification experiments conducted on the University of Sheffield dataset and the KAU dataset. The experimental results show that TFSNet is highly effective at feature extraction, as shown in Table 1 and Table 2. On the University of Sheffield dataset, the accuracies of Random Forest (RF), K Nearest Neighbors (KNN), and Support Vector Machine (SVM) were all below 85% when used alone, reflecting the limitations of traditional methods in feature extraction. However, when combined with the features extracted by TFSNet, the accuracy of every classifier exceeded 97%, with the combination of RF and TFSNet achieving an accuracy of 99.27%. On the KAU dataset, the accuracies of RF, KNN, and SVM alone were all below 90%, whereas when combined with TFSNet, the accuracies of all combinations were above 97%. This indicates that the highly discriminative features extracted by TFSNet through dynamic convolution and time–frequency feature fusion effectively compensate for the limitations of traditional classifiers and significantly improve classification accuracy.

Table 1.

Performance comparison of traditional classifiers and TFSNet combination on the University of Sheffield dataset.

Table 2.

Performance comparison of traditional classifiers and TFSNet combination on the KAU dataset.

In addition, TFSNet also performed well compared to mainstream deep learning models on both datasets, as shown in Table 3 and Table 4. On the University of Sheffield dataset, the accuracies of EEGNet, DeepConvNet, and ShallowConvNet were below 95%, while TFSNet reached 98.68%. On the KAU dataset, the accuracies of EEGNet, DeepConvNet, and ShallowConvNet were all below 90%, and the methods of Tawhid et al. [26] and Ullah et al. [31] yielded 95.25% and 93.56%, respectively, while the accuracy of TFSNet was 97.14%, outperforming all the comparative models. This indicates that TFSNet has strong feature extraction and generalization ability in processing complex data patterns and can effectively capture the main features of EEG signals in different datasets, thus improving the classification performance. To ensure a comprehensive evaluation, we report the variance from the 5-fold cross-validation in this study. For the University of Sheffield dataset, the variance in accuracy is 0.55%, and for the KAU dataset, it is 0.7%. These low variances suggest that the model’s performance is stable across different folds, reinforcing the reliability of the reported results.

Table 3.

Performance comparison of mainstream deep learning models on the University of Sheffield dataset.

Table 4.

Performance comparison of mainstream deep learning models on the KAU dataset.

It can be seen from Table 2 and Table 4 that the performance of the model on the KAU dataset was lower than on the University of Sheffield dataset. This may stem from inherent differences in EEG signals between adolescents and adults, as the KAU dataset consists of adolescent subjects, while the Sheffield dataset consists mainly of adults. Adolescent brain activity patterns may exhibit greater variability or different frequency characteristics that are not fully captured by the model. In addition, differences in data collection methods or devices between the two datasets may have introduced subtle variations that affected performance.

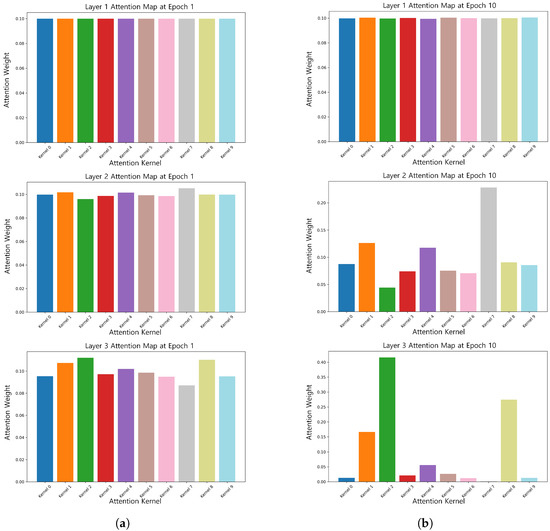

3.3. Dynamic Convolutional Attention Weight Visualization

To verify the effectiveness of dynamic convolution in dealing with the time-varying nature of EEG signals, we visualized its attention weights, as shown in Figure 5. Figure 5 shows that in the early stage of training (1st epoch), the attention weights of each convolutional kernel were more uniformly distributed due to the large temperature coefficient $\tau$, which allowed the model to fully explore the features in the early stage. As training progressed, $\tau$ gradually decreased, and the attention weights began to focus on key features. For example, by the 10th epoch, the attention of some of the convolutional kernels in the second layer decreased, while the attention of the third layer was focused on kernel 1, kernel 2, and kernel 8.

Figure 5.

Attention weights of the same subject at epoch 1 and epoch 10. (a) Attention weights at epoch 1. (b) Attention weights at epoch 10.

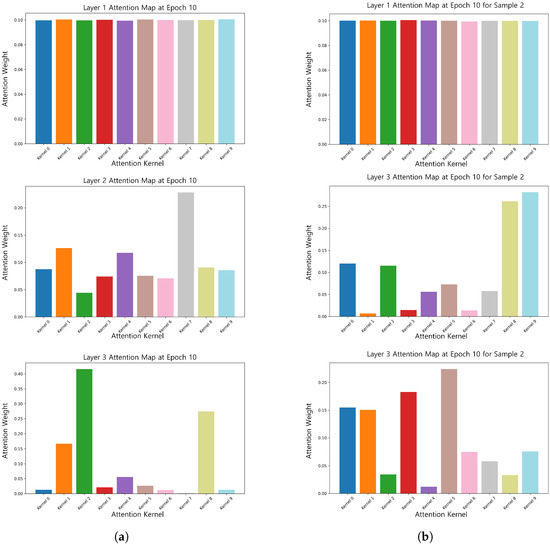

Furthermore, the attention weights that dynamic convolution produced for different samples at the 10th epoch were visualized, as illustrated in Figure 6. The results indicate that, at the same temperature coefficient, the attention weights of different samples differed. Specifically, one sample allocated more attention to the features of kernel 1, kernel 2, and kernel 8, while the other sample focused on other key features. This phenomenon suggests that dynamic convolution can adjust the attention weights according to the characteristics of the input samples, gradually shifting from global features to sample-specific key features, thus improving the model’s ability to extract complex signal features.

Figure 6.

Attention weights of the 10th epoch for different samples (epoch = 10). (a) Attention weight map of sample 1 at epoch = 10. (b) Attention weight map of sample 2 at epoch = 10.

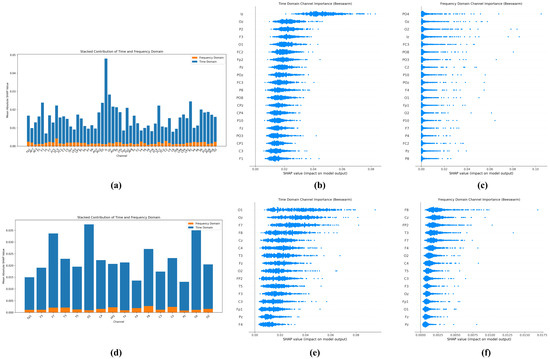

3.4. Interpretability Analysis Based on SHAP

Figure 7 shows the interpretability analysis of the TFSNet model performed with the SHapley Additive exPlanations (SHAP) method, which aims to reveal the contribution patterns of electrodes in different brain regions in the classification of Autism Spectrum Disorder (ASD). This analysis not only validates the model’s classification decisions but also provides important insights into the neurophysiological mechanisms of ASD. For the Sheffield dataset (adults), SHAP analysis showed that electrodes in the occipital region (such as Oz, O1, and O2) exhibited the highest SHAP contribution values in both the time and frequency domains, with the average contribution significantly exceeding that of the other regions. This result is highly consistent with the findings of clinical studies on abnormalities in visual processing and visual information integration in ASD [46,47]. In addition, the high contribution of electrodes in the parieto-occipital region, such as PO3, PO4, and POz, further highlights the critical role of visual–spatial processing networks in ASD classification. The beeswarm plot in Figure 7 clearly demonstrates the importance of these electrodes, while the contribution of the other electrodes is relatively low, indicating the dominant role of visually related brain areas in adult ASD classification.

Figure 7.

Explainable analysis results based on SHAP: (a–c) are the analysis results of the University of Sheffield dataset; (d–f) are the analysis results of the KAU dataset.

Unlike the Sheffield dataset, SHAP analysis of the KAU dataset (adolescents) showed a higher contribution from prefrontal electrodes (e.g., F7, F8, Fp1, and Fp2), which may reflect more significant executive function deficits in adolescents with ASD [48]. Furthermore, the involvement of electrodes in the central region, such as Cz, indicates the importance of sensorimotor processing in this population. The difference in the pattern of electrode importance between the two datasets suggests that ASD may present different neurophysiological features at different developmental stages. Overall, the analysis in Figure 7 not only supports the rationality and biological interpretability of the classification decisions made by the TFSNet model but also provides valuable insights for clinical practice. For example, the identified key electrode regions can be used to optimize EEG acquisition protocols and guide personalized diagnostic methods and potential intervention strategies, thereby improving the accuracy and efficiency of ASD diagnosis.
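Electrode-level rankings of this kind are commonly obtained by averaging the absolute SHAP values of each electrode over all samples. The sketch below assumes the SHAP values have already been computed and reduced to one value per sample and electrode; the function name, channel labels, and numbers are arbitrary placeholders, not values from this study.

```python
import numpy as np

def rank_electrodes(shap_values: np.ndarray, electrode_names: list) -> list:
    """Rank electrodes by mean absolute SHAP value.

    shap_values: (n_samples, n_electrodes) array of per-sample contributions.
    Returns (name, importance) pairs sorted from most to least important.
    """
    importance = np.abs(shap_values).mean(axis=0)
    order = np.argsort(importance)[::-1]
    return [(electrode_names[i], float(importance[i])) for i in order]

# Placeholder contributions for three hypothetical channels.
shap_values = np.array([
    [0.2, -0.5, 0.1],
    [-0.4, 0.7, 0.0],
    [0.3, -0.6, -0.2],
])
ranking = rank_electrodes(shap_values, ["ch_a", "ch_b", "ch_c"])
print([name for name, _ in ranking])  # ['ch_b', 'ch_a', 'ch_c']
```

Taking the absolute value before averaging matters because positive and negative contributions would otherwise cancel, hiding electrodes that strongly influence the decision in both directions.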

3.5. Ablation Experiment

To evaluate the contribution of each component in TFSNet to model performance, we first conducted single-branch ablation experiments on the University of Sheffield and KAU datasets; the results are shown in Table 5 and Table 6. On both datasets, each single branch performed worse than the full TFSNet. We also conducted ablation experiments on the individual components of TFSNet; the results are shown in Table 7 and Table 8. When only the dynamic convolution in the time domain was removed, the accuracy of the model on the two datasets fell by 1.17% and 0.9%, respectively, a small performance decline. Removing the Adaptive Cross-Domain Attention mechanism (ACDA) caused the largest drop in accuracy, 2.33% and 3.1%, respectively, which was especially notable on the KAU dataset. Removing the CBAM module caused the second-largest drop, 1.32% and 1.36%, respectively. Notably, in the “only time” experiment, the specificity of the model using only the temporal branch was higher than that of TFSNet on both datasets, but its recall was lower, which may increase the risk of missed diagnoses and hence delayed intervention.

Table 5. Single-branch ablation experiment on the University of Sheffield dataset.

Table 6. Single-branch ablation experiment on the KAU dataset.

Table 7. Ablation results using the University of Sheffield dataset.

Table 8. Ablation experiment results using the KAU dataset.

The above experimental results support our hypothesis that dynamic convolution can adaptively adjust the convolution kernel and thereby improve the ability to capture the time-varying characteristics of EEG signals. Notably, the Adaptive Cross-Domain Attention mechanism (ACDA) plays a key role in the interaction and fusion of time–frequency features, significantly enhancing the performance of the model. In addition, the CBAM module enhances key features in the frequency domain and suppresses non-critical ones, thereby further improving the robustness and generalization ability of the model.
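To illustrate what "adaptively adjusting the convolution kernel" means, the following is a minimal NumPy sketch of dynamic convolution in the sense of Chen et al. [34]: several candidate kernels are mixed by input-dependent attention weights before a single convolution is applied. It is not the authors' TDRB implementation; the function name `dynamic_conv1d`, the tensor shapes, and the linear attention head (`w_att`, `b_att`) are assumptions made for illustration.

```python
import numpy as np


def softmax(z):
    z = z - z.max()            # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def dynamic_conv1d(x, kernels, w_att, b_att):
    """Dynamic 1D convolution with a single output filter (illustrative).

    x:       (channels, time) input segment
    kernels: (K, channels, width) candidate kernels
    w_att:   (K, channels) and b_att: (K,) hypothetical linear attention head
    """
    # Global average pooling over time gives one descriptor per channel.
    descriptor = x.mean(axis=1)                          # (channels,)
    # Attention over the K candidate kernels, conditioned on this input.
    pi = softmax(w_att @ descriptor + b_att)             # (K,)
    # Aggregate the candidates into one input-specific kernel.
    kernel = np.tensordot(pi, kernels, axes=1)           # (channels, width)
    # Valid cross-correlation along time, summed over channels.
    width = kernel.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(x, width, axis=1)
    out = np.einsum("ctw,cw->t", windows, kernel)        # (time - width + 1,)
    return out, pi


# Random data standing in for an EEG segment (4 channels, 128 samples).
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 128))
kernels = rng.standard_normal((3, 4, 7))
w_att = rng.standard_normal((3, 4))
b_att = np.zeros(3)

y, pi = dynamic_conv1d(x, kernels, w_att, b_att)
print(y.shape, pi.round(3))
```

Because the mixing weights `pi` depend on the input, two different EEG segments are filtered with different effective kernels, whereas a static convolution applies the same kernel to every segment.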

4. Discussion

This paper proposes a time–frequency synergy network (TFSNet) for Autism Spectrum Disorder (ASD) classification using EEG signals, and it was validated on the University of Sheffield and KAU datasets.

TFSNet introduces an architecture that addresses the limitations of existing methods in capturing time-varying features and jointly representing time–frequency features by integrating time- and frequency-domain information. Using dynamic convolution, TFSNet adaptively adjusts convolution kernels to capture the time-varying characteristics of EEG signals, overcoming the limitations of static convolution in models like EEGNet. In the frequency domain, it employs Short-Time Fourier Transform (STFT) and a Convolutional Block Attention Module (CBAM) for robust feature extraction. The Adaptive Cross-Domain Attention mechanism (ACDA) enables bidirectional interaction and fusion of time- and frequency-domain features, enhancing classification performance and generalization. This time–frequency synergy makes TFSNet more robust and effective for ASD classification across varied datasets.
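The idea of bidirectional interaction between the two domains can be sketched with plain scaled dot-product cross-attention. This is only a simplified illustration, not the ACDA design itself: it omits learned query/key/value projections and any adaptive gating, and the function names, token counts, and feature dimension are assumptions.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attend(queries, context):
    """Scaled dot-product attention: each query row becomes a weighted
    mixture of the context rows (no learned projections in this sketch)."""
    d = queries.shape[-1]
    weights = softmax(queries @ context.T / np.sqrt(d))   # (n_q, n_ctx)
    return weights @ context, weights


def fuse_time_frequency(time_tokens, freq_tokens):
    """Bidirectional fusion: time attends to frequency and vice versa."""
    t_from_f, _ = cross_attend(time_tokens, freq_tokens)
    f_from_t, _ = cross_attend(freq_tokens, time_tokens)
    time_enriched = time_tokens + t_from_f     # residual connection
    freq_enriched = freq_tokens + f_from_t
    # Average-pool each stream and concatenate into one feature vector.
    return np.concatenate([time_enriched.mean(axis=0),
                           freq_enriched.mean(axis=0)])


# Random tokens standing in for branch outputs (hypothetical sizes).
rng = np.random.default_rng(0)
time_tokens = rng.standard_normal((10, 16))   # 10 time-domain tokens
freq_tokens = rng.standard_normal((6, 16))    # 6 frequency-domain tokens

fused = fuse_time_frequency(time_tokens, freq_tokens)
print(fused.shape)
```

Each stream keeps its own representation through the residual term while borrowing information from the other domain, so the fused vector reflects both views of the signal.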

TFSNet, in combination with SHAP analysis, revealed key EEG patterns associated with ASD that were highly consistent with known ASD neurophysiological features such as abnormal activity of theta and alpha waves [46,47]. This finding provides clinicians with interpretable diagnostic evidence that may help distinguish ASD subtypes and guide treatment decisions. For example, the specific frequency band activity differences identified by TFSNet could be used to design targeted neurofeedback training to improve social or cognitive function in patients. In addition, SHAP analysis identifies the most important electrode locations for diagnosis, which makes it possible to optimize EEG acquisition, potentially reducing the time and cost of the examination and making it more viable for application in resource-limited clinical settings. In terms of personalized diagnosis, TFSNet can generate diagnostic markers based on patient-specific EEG patterns, which can support the formulation of individualized treatment plans. For example, in clinical practice, physicians could use the output of TFSNet in combination with other clinical assessments to develop targeted intervention strategies. However, a limitation of the current model is that its validation data come mainly from specific populations, and its generalization ability needs to be further tested in a wider patient population. In addition, the integration of TFSNet into existing clinical workflows may face technical compatibility and ethical challenges, which will be further explored in subsequent studies.
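One simple way to turn per-sample attributions into an electrode ranking is to average the absolute SHAP values over samples and time points. The sketch below assumes attributions are already available as an array of shape (samples, channels, time); the function name `rank_electrodes`, the electrode subset, and the synthetic values are hypothetical and do not reproduce the analysis in Figure 7.

```python
import numpy as np


def rank_electrodes(shap_values, names):
    """Rank electrodes by mean absolute attribution.

    shap_values: (n_samples, n_channels, n_times) array of attributions
    names:       list of n_channels electrode labels
    """
    importance = np.abs(shap_values).mean(axis=(0, 2))   # one score per channel
    order = np.argsort(importance)[::-1]                 # largest first
    return [(names[i], float(importance[i])) for i in order]


# Synthetic attributions for illustration only (NOT the paper's SHAP values):
# each channel is given a different scale so that a ranking emerges.
names = ["Fp1", "Fp2", "F7", "F8", "Cz", "O1"]
scales = np.array([1.0, 1.5, 2.0, 3.0, 0.5, 0.2])
rng = np.random.default_rng(0)
shap_values = rng.standard_normal((50, 6, 100)) * scales[None, :, None]

ranking = rank_electrodes(shap_values, names)
for electrode, score in ranking:
    print(f"{electrode}: {score:.3f}")
```

A reduced montage could then be chosen by keeping only the top-ranked electrodes, although any such reduction would need to be re-validated on held-out data.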

Despite the excellent performance of TFSNet, its limitations cannot be ignored. Firstly, the computational complexity of the model is a key issue. Its multi-component architecture, including dynamic convolutions, CBAM modules, Transformer encoders, and ACDA mechanisms, significantly improves performance but also increases computational cost. In resource-constrained clinical settings, such as using portable EEG devices or within real-time diagnostic systems, this complexity may limit the deployment of models. Future research should explore model compression techniques such as pruning or quantization to reduce computational requirements while maintaining high accuracy. In addition, the real-time performance of the model needs to be further optimized to meet the requirements of rapid diagnosis in clinical environments.
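The two compression techniques mentioned above can be illustrated on a single weight matrix. The following is a minimal NumPy sketch of magnitude pruning and symmetric per-tensor int8 quantization; it does not use any deep learning framework, the weights are random stand-ins rather than TFSNet parameters, and all function names are assumptions.

```python
import numpy as np


def magnitude_prune(w, sparsity):
    """Zero out the fraction `sparsity` of weights with smallest magnitude."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    # Magnitude of the k-th smallest weight serves as the pruning threshold.
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w)


def quantize_int8(w):
    """Symmetric per-tensor int8 quantization; returns (codes, scale)."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


# Random weights standing in for one layer (hypothetical 64 x 64 matrix).
rng = np.random.default_rng(0)
w = rng.standard_normal((64, 64)).astype(np.float32)

pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
max_err = float(np.abs(dequantize(q, scale) - pruned).max())

print(f"zero fraction: {(pruned == 0).mean():.2f}")
print(f"bytes: float32={w.nbytes}, int8={q.nbytes}")
print(f"max reconstruction error: {max_err:.4f} (scale={scale:.4f})")
```

Storing int8 codes uses a quarter of the memory of float32 weights, and the rounding error per weight is bounded by about half the quantization step; whether accuracy is preserved after such compression would have to be checked empirically.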

Interpretability is another area that needs to be improved. Although SHAP analysis reveals the importance of features, its output remains largely technical and does not yet give clinicians the intuitive, direct association with functional brain regions that they need. For example, more clarity is still needed on how the model relates specific EEG patterns to behavioral phenotypes or treatment effects in ASD. Future work can incorporate neuroscience knowledge to develop visualization tools that are closer to the clinical context, enhancing the transparency and credibility of the model in medical decision making.

5. Conclusions

In this paper, we propose the time–frequency synergy network (TFSNet), which uses electroencephalogram (EEG) signals for robust Autism Spectrum Disorder (ASD) classification and has been validated on two datasets. By collaboratively fusing time- and frequency-domain features and enhancing interpretability through SHAP analysis, TFSNet provides promising insights for personalized ASD diagnosis and targeted intervention strategies. However, further work is still needed to translate TFSNet from research to practical clinical applications. Its current computational complexity needs to be reduced by model compression techniques (e.g., pruning and quantization) to ensure real-time deployment and hardware feasibility, especially for resource-constrained portable EEG systems. Crucially, comprehensive clinical testing across a broader and more diverse patient population via multicenter trials is needed to validate its generalization ability and clinical utility while addressing technical compatibility and ethical challenges for seamless integration into existing healthcare workflows. Overcoming these practical deployment challenges will be key for TFSNet to become a valuable diagnostic tool in clinical settings.

Author Contributions

L.S. (Lijuan Shi): Conceptualization, Formal analysis, Investigation, Validation. L.M.: Conceptualization, Software, Validation, Data curation, Methodology, Writing—original draft, Writing—review and editing. J.Z.: Project administration, Supervision, Resources. Z.K.: Methodology, Resources. S.W.: Visualization. H.Y.: Visualization. H.W.: Conceptualization, Formal analysis. Q.H.: Investigation. L.S. (Lei Sun): Formal analysis. All authors reviewed the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Science and Technology Development Plan Project of Jilin Province (Grant/Award Numbers: YDZ1202201ZYTS684, YDZJ202301ZYTS496, YDZJ202201ZYTS549, YDZJ202501ZYTS589, YDZJ202501ZYTS619).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used for this study are publicly available and accessible online from https://malhaddad.kau.edu.sa/Pages-BCI-Datasets.aspx (accessed on 17 October 2024) and DOI: https://doi.org/10.1016/j.cortex.2021.09.022.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lord, C.; Brugha, T.S.; Charman, T.; Cusack, J.; Dumas, G.; Frazier, T.; Jones, E.J.; Jones, R.M.; Pickles, A.; State, M.W.; et al. Autism spectrum disorder. Nat. Rev. Dis. Prim. 2020, 6, 5.

- Waizbard-Bartov, E.; Ferrer, E.; Heath, B.; Rogers, S.J.; Nordahl, C.W.; Solomon, M.; Amaral, D.G. Identifying autism symptom severity trajectories across childhood. Autism Res. 2022, 15, 687–701.

- Zeidan, J.; Fombonne, E.; Scorah, J.; Ibrahim, A.; Durkin, M.S.; Saxena, S.; Yusuf, A.; Shih, A.; Elsabbagh, M. Global prevalence of autism: A systematic review update. Autism Res. 2022, 15, 778–790.

- Amaral, D.G. Examining the causes of autism. Cerebrum Dana Forum Brain Sci. 2017, 2017, cer-01.

- Hodges, H.; Fealko, C.; Soares, N. Autism spectrum disorder: Definition, epidemiology, causes, and clinical evaluation. Transl. Pediatr. 2020, 9, S55.

- Schopler, E.; Van Bourgondien, M.E.; Wellman, G.J.; Love, S.R. The Childhood Autism Rating Scale, (CARS2): Manual; Western Psychological Services: Los Angeles, CA, USA, 2010.

- Lord, C.; Rutter, M.; DiLavore, P.; Risi, S.; Gotham, K.; Bishop, S.; Luyster, R.; Guthrie, W. (ADOS-2) Autism Diagnostic Observation Schedule, 2nd ed.; Western Psychological Corporation: Los Angeles, CA, USA, 2012; Volume 284, pp. 474–478.

- Khowaja, M.; Robins, D.L. Checklist for Autism in Toddlers (CHAT). In Encyclopedia of Autism Spectrum Disorders; Springer: Cham, Switzerland, 2021; pp. 873–879.

- Rutter, M.; Le Couteur, A.; Lord, C. Autism Diagnostic Interview-Revised; Western Psychological Corporation: Los Angeles, CA, USA, 2003; Volume 29, p. 30.

- Li, J.; Kong, X.; Sun, L.; Chen, X.; Ouyang, G.; Li, X.; Chen, S. Identification of autism spectrum disorder based on electroencephalography: A systematic review. Comput. Biol. Med. 2024, 170, 108075.

- Ali, N.A.; Radzi, S.A.; Jaafar, S.; Shamsuddin, S.; Nor, N.K. LSTM-based electroencephalogram classification on autism spectrum disorder. Int. J. Integr. Eng. 2021, 13, 321–329.

- Schielen, S.J.; Pilmeyer, J.; Aldenkamp, A.P.; Zinger, S. The diagnosis of ASD with MRI: A systematic review and meta-analysis. Transl. Psychiatry 2024, 14, 318.

- Martini, A.L.; Quartuccio, N.; Schiera, I.G.; Berti, V.; Burroni, L.; Cistaro, A. Applications of PET and SPECT in patients with autism spectrum disorder. Curr. Med. Imaging 2024, 20, e15734056232408.

- Silk, T.J.; Rinehart, N.; Bradshaw D Sc, J.L.; Tonge, B.; Egan, G.; O’Boyle, M.W.; Cunnington, R. Visuospatial processing and the function of prefrontal-parietal networks in autism spectrum disorders: A functional MRI study. Am. J. Psychiatry 2006, 163, 1440–1443.

- Peng, W. EEG preprocessing and denoising. In EEG Signal Processing and Feature Extraction; Springer: Berlin/Heidelberg, Germany, 2019; pp. 71–87.

- Ranaut, A.; Khandnor, P.; Chand, T. Identification of autism spectrum disorder using electroencephalography and machine learning: A review. J. Neural Eng. 2024. epub ahead of print.

- Alturki, F.A.; AlSharabi, K.; Abdurraqeeb, A.M.; Aljalal, M. EEG signal analysis for diagnosing neurological disorders using discrete wavelet transform and intelligent techniques. Sensors 2020, 20, 2505.

- Frye, R.E.; Vassall, S.; Kaur, G.; Lewis, C.; Karim, M.; Rossignol, D. Emerging biomarkers in autism spectrum disorder: A systematic review. Ann. Transl. Med. 2019, 7, 792.

- Grossi, E.; Olivieri, C.; Buscema, M. Diagnosis of autism through EEG processed by advanced computational algorithms: A pilot study. Comput. Methods Programs Biomed. 2017, 142, 73–79.

- Ibrahim, S.; Djemal, R.; Alsuwailem, A. Electroencephalography (EEG) signal processing for epilepsy and autism spectrum disorder diagnosis. Biocybern. Biomed. Eng. 2018, 38, 16–26.

- Abdolzadegan, D.; Moattar, M.H.; Ghoshuni, M. A robust method for early diagnosis of autism spectrum disorder from EEG signals based on feature selection and DBSCAN method. Biocybern. Biomed. Eng. 2020, 40, 482–493.

- Dong, H.; Chen, D.; Zhang, L.; Ke, H.; Li, X. Subject sensitive EEG discrimination with fast reconstructable CNN driven by reinforcement learning: A case study of ASD evaluation. Neurocomputing 2021, 449, 136–145.

- Ali, N.A.; Syafeeza, A.; Jaafar, A.; Alif, M.; Ali, N. Autism spectrum disorder classification on electroencephalogram signal using deep learning algorithm. IAES Int. J. Artif. Intell. 2020, 9, 91–99.

- Rasool, A.; Aslam, S.; Xu, Y.; Wang, Y.; Pan, Y.; Chen, W. Deep neurocomputational fusion for ASD diagnosis using multi-domain EEG analysis. Neurocomputing 2025, 641, 130353.

- Gao, T.; Chen, D.; Zhou, M.; Wang, Y.; Zuo, Y.; Tu, W.; Li, X.; Chen, J. Self-training EEG discrimination model with weakly supervised sample construction: An age-based perspective on ASD evaluation. Neural Netw. 2025, 187, 107337.

- Tawhid, M.N.A.; Siuly, S.; Wang, H.; Whittaker, F.; Wang, K.; Zhang, Y. A spectrogram image based intelligent technique for automatic detection of autism spectrum disorder from EEG. PLoS ONE 2021, 16, e0253094.

- Ari, B.; Sobahi, N.; Alçin, Ö.F.; Sengur, A.; Acharya, U.R. Accurate detection of autism using Douglas-Peucker algorithm, sparse coding based feature mapping and convolutional neural network techniques with EEG signals. Comput. Biol. Med. 2022, 143, 105311.

- Baygin, M.; Dogan, S.; Tuncer, T.; Barua, P.D.; Faust, O.; Arunkumar, N.; Abdulhay, E.W.; Palmer, E.E.; Acharya, U.R. Automated ASD detection using hybrid deep lightweight features extracted from EEG signals. Comput. Biol. Med. 2021, 134, 104548.

- Ardakani, H.A.; Taghizadeh, M.; Shayegh, F. Diagnosis of autism disorder based on deep network trained by augmented EEG signals. Int. J. Neural Syst. 2022, 32, 2250046.

- Wadhera, T.; Kakkar, D.; Rani, R. Behavioral modeling using deep neural network framework for ASD diagnosis and prognosis. In Emerging Technologies for Healthcare: Internet of Things and Deep Learning Models; Wiley: Hoboken, NJ, USA, 2021; pp. 279–298.

- Ullah, M.Z.; Yu, D. Grid-tuned ensemble models for 2D spectrogram-based autism classification. Biomed. Signal Process. Control 2024, 93, 106151.

- Alhaddad, M.J.; Kamel, M.I.; Malibary, H.M.; Alsaggaf, E.A.; Thabit, K.; Dahlwi, F.; Hadi, A.A. Diagnosis autism by fisher linear discriminant analysis FLDA via EEG. Int. J. Bio-Sci. Bio-Technol. 2012, 4, 45–54.

- Dickinson, A.; Jeste, S.; Milne, E. Electrophysiological signatures of brain aging in autism spectrum disorder. Cortex 2022, 148, 139–151.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11030–11039.

- Padhmashree, V.; Bhattacharyya, A. Human emotion recognition based on time–frequency analysis of multivariate EEG signal. Knowl.-Based Syst. 2022, 238, 107867.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning. PMLR, Lille, France, 7–9 July 2015; pp. 448–456.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Singh, A.K.; Krishnan, S. Trends in EEG signal feature extraction applications. Front. Artif. Intell. 2023, 5, 1072801.

- Luo, N.; Luo, X.; Zheng, S.; Yao, D.; Zhao, M.; Cui, Y.; Zhu, Y.; Calhoun, V.D.; Sun, L.; Sui, J. Aberrant brain dynamics and spectral power in children with ADHD and its subtypes. Eur. Child Adolesc. Psychiatry 2023, 32, 2223–2234.

- Abdulwahhab, A.H.; Abdulaal, A.H.; Al-Ghrairi, A.H.T.; Mohammed, A.A.; Valizadeh, M. Detection of epileptic seizure using EEG signals analysis based on deep learning techniques. Chaos Solitons Fractals 2024, 181, 114700.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Li, X.; Wei, W.; Qiu, S.; He, H. A temporal–spectral fusion transformer with subject-specific adapter for enhancing RSVP-BCI decoding. Neural Netw. 2025, 181, 106844.

- Xu, Z.; Chen, C.P.; Zhang, T. TFAGL: A Novel Agent Graph Learning Method Using Time-Frequency EEG For Major Depressive Disorder Detection. IEEE Trans. Affect. Comput. 2025. epub ahead of print.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Keehn, B.; Westerfield, M.; Müller, R.A.; Townsend, J. Autism, attention, and alpha oscillations: An electrophysiological study of attentional capture. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2017, 2, 528–536.

- Robertson, C.E.; Baron-Cohen, S. Sensory perception in autism. Nat. Rev. Neurosci. 2017, 18, 671–684.

- Demetriou, E.A.; Lampit, A.; Quintana, D.S.; Naismith, S.L.; Song, Y.J.; Pye, J.E.; Hickie, I.; Guastella, A.J. Autism spectrum disorders: A meta-analysis of executive function. Mol. Psychiatry 2018, 23, 1198–1204.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).