1. Introduction

Autism Spectrum Disorder (ASD) is a complex neurodevelopmental condition characterized by challenges in social interaction and communication, together with repetitive behaviors [1,2]. The World Health Organization estimates that approximately 1 in 100 children worldwide are diagnosed with ASD, with prevalence varying significantly by region and socioeconomic status, reflecting global differences in diagnostic capabilities and service provision [3]. The etiology of ASD remains unclear, though research suggests it results from complex interactions between genetic and environmental factors [4,5].

Currently, ASD diagnosis relies on clinical observations and behavioral assessments by medical professionals [6,7,8,9], a process that is time-consuming and lacks objective biological indicators, potentially leading to diagnostic variability [10]. In recent years, an increasing number of studies have focused on identifying ASD through biomarkers, especially the electroencephalogram (EEG) [11]. While magnetic resonance imaging (MRI) [12] and computed tomography (CT) [13] are also used in ASD research, EEG offers advantages, including cost-effectiveness, high temporal resolution, and noninvasiveness [14]. Although EEG signal analysis requires preprocessing for noise and artifact removal [15], constructing ASD diagnostic models using EEG signals remains crucial for clinical screening and diagnosis support.

Many researchers are applying machine learning approaches to ASD EEG signal analysis for feature extraction and classification [16]. However, traditional machine learning methods typically rely on manual feature extraction, requiring tedious feature engineering that depends heavily on researcher experience and domain knowledge [17,18]. To address these limitations, researchers have explored increasingly complex feature extraction methods to improve classification performance [19,20,21].

The development of deep learning has made it a prominent area of research in ASD EEG signal analysis [22,23,24,25]. Compared to traditional machine learning, deep learning automatically extracts complex nonlinear features from data, greatly reducing the need for manual feature engineering. Researchers are continuing to explore methods for automating feature extraction and minimizing manual intervention. Tawhid et al. [26] proposed a diagnostic framework based on EEG spectrogram images. The authors applied a Short-Time Fourier Transform (STFT) to convert the processed signals into 2D spectrogram images, which were then analyzed using machine learning. Ari et al. [27] proposed a method combining the Douglas–Peucker algorithm, sparse coding-based feature mapping, and a convolutional neural network (CNN). The authors sparsely encoded the EEG rhythms during feature extraction and fed the result into a CNN for training. Baygin et al. [28] proposed a method for automatically detecting ASD. They extracted one-dimensional Local Binary Pattern (1D-LBP) features from EEG signals and generated spectrogram images using STFT. Pretrained MobileNetV2, ShuffleNet, and SqueezeNet models were used to extract deep features, and feature selection was performed using a two-layer ReliefF algorithm, resulting in excellent performance. Ardakani et al. [29] divided the EEG signals from autistic and healthy individuals into nonoverlapping windows, treated them as images, and classified them using a 2D deep convolutional neural network (2D-DCNN). Wadhera et al. [30] employed transfer learning to classify autism using pretrained EEGNet and DeepConvNet models, achieving accuracies of 89.6% and 92.3%, respectively. Ullah et al. [31] proposed a deep learning-based weighted ensemble model for classifying ASD using EEG data. They converted the original EEG signals into multi-channel two-dimensional spectrograms using STFT. A grid search strategy was then employed to determine the optimal model weight combination, resulting in excellent performance.
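
Several of the studies above share the same first step: converting a one-dimensional EEG trace into a two-dimensional time–frequency image with an STFT. The following is a minimal single-channel sketch of that step, not the implementation used in any of the cited works. The sampling rate, window length, hop size, and the synthetic 10 Hz test signal are all illustrative assumptions.

```python
import numpy as np

def stft_spectrogram(signal, fs, win_len=64, hop=32):
    """Magnitude spectrogram of a 1D signal using a Hann-windowed STFT.

    Returns (freqs, times, spec) where spec has shape (freq_bins, frames).
    win_len and hop are illustrative defaults, not values from the cited papers.
    """
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    # Slice the signal into overlapping frames and taper each one.
    frames = np.stack([
        signal[i * hop : i * hop + win_len] * window
        for i in range(n_frames)
    ])
    # One real FFT per frame; transpose so rows are frequency bins.
    spec = np.abs(np.fft.rfft(frames, axis=1)).T
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, spec

# Synthetic stand-in for one EEG channel: a 10 Hz (alpha-band) sine wave.
fs = 128                                  # assumed sampling rate in Hz
t = np.arange(0, 4, 1 / fs)               # 4 seconds of samples
eeg = np.sin(2 * np.pi * 10 * t)

freqs, times, spec = stft_spectrogram(eeg, fs)
peak_freq = freqs[np.argmax(spec.mean(axis=1))]
print(spec.shape, peak_freq)
```

For multi-channel recordings, the same function could be applied per channel and the resulting images stacked along a new axis, which is one plausible reading of the "multi-channel two-dimensional spectrograms" described above.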

Despite recent advances in feature extraction for autism EEG classification, conventional convolutional neural networks still struggle to capture temporal variations in EEG signals. Because their convolutional kernels are fixed after training, these models adapt poorly to the dynamic changes of EEG signals. In addition, most current studies lack a strategy for jointly representing time- and frequency-domain EEG information at the feature level, which limits how much of the integrated signal information they capture. As a result, existing methods generalize poorly across datasets in subject-related experiments and struggle to maintain stable performance under different acquisition environments and participant characteristics. To address these issues, we propose a time–frequency synergy network (TFSNet) that fuses time- and frequency-domain features for the classification of EEG signals in autism. The network introduces dynamic convolution into time-domain feature extraction, adaptively adjusting the convolution kernel parameters to better capture the temporal variations of EEG signals. We also propose an attention mechanism that fuses time-domain and frequency-domain features, jointly representing both domains and revealing the dynamic changes and complex patterns of EEG signals in greater depth. The contributions of this paper are as follows:

A time–frequency synergy network model is proposed in this paper for the feature extraction and classification of autism EEG signals. This model effectively extracts key information from EEG signals and significantly improves classification performance.

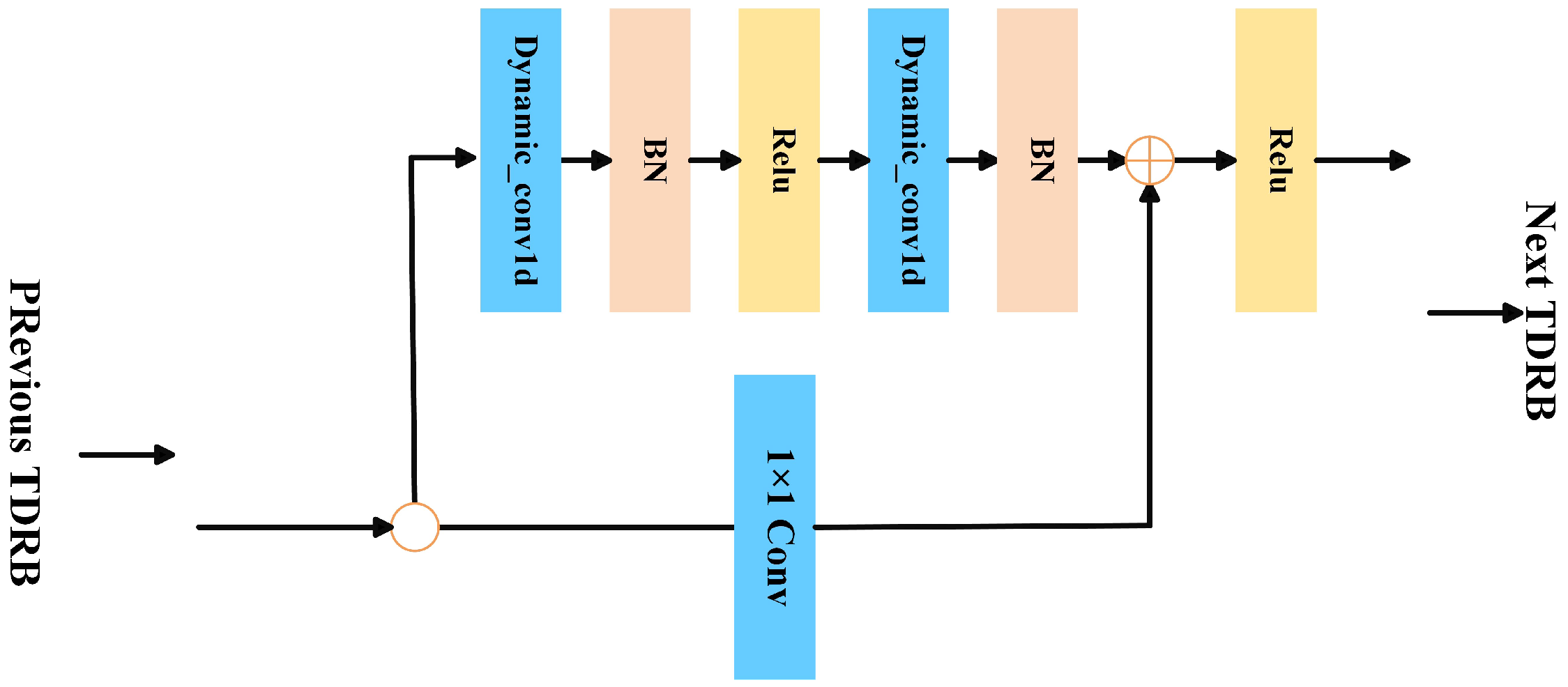

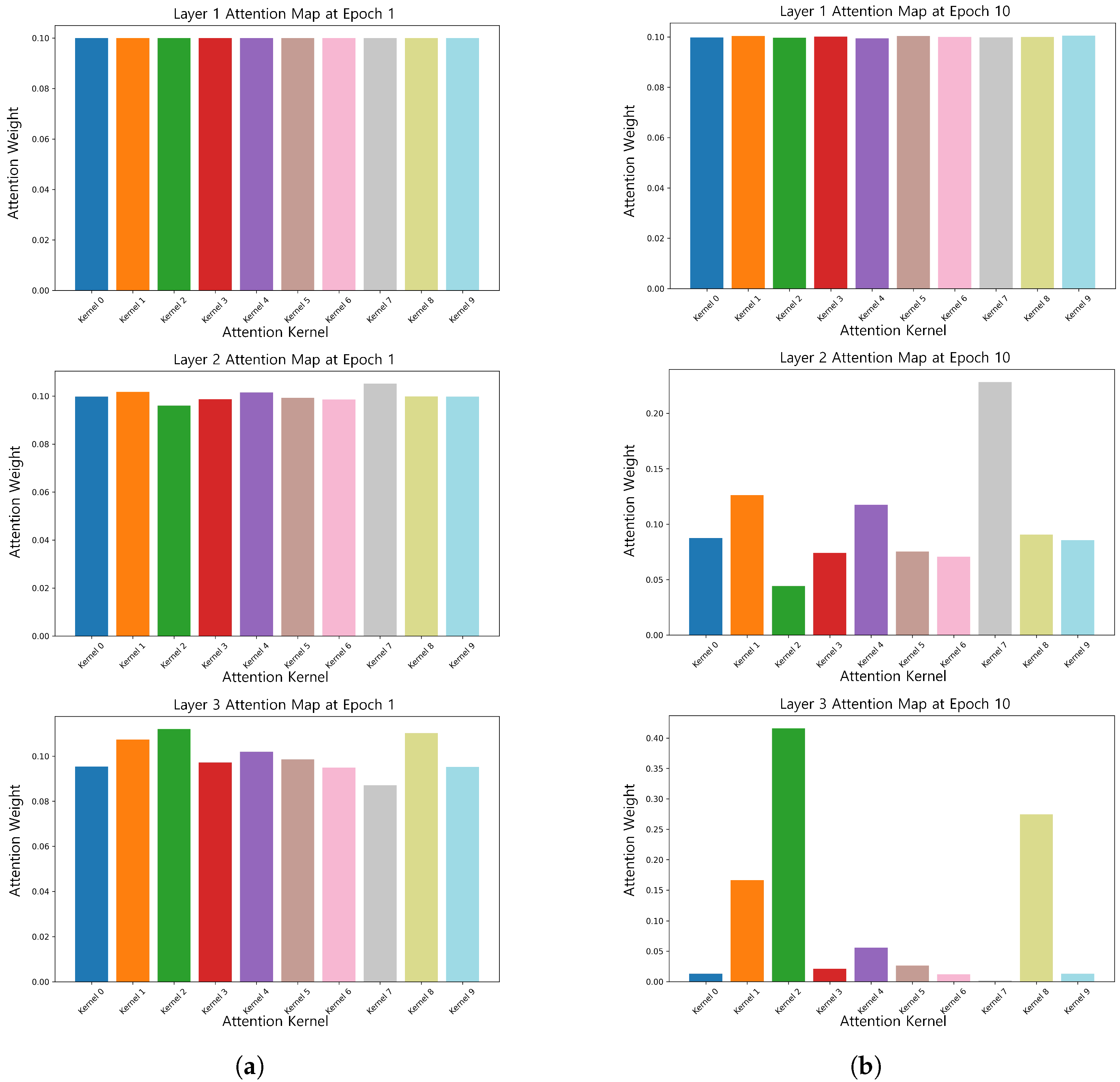

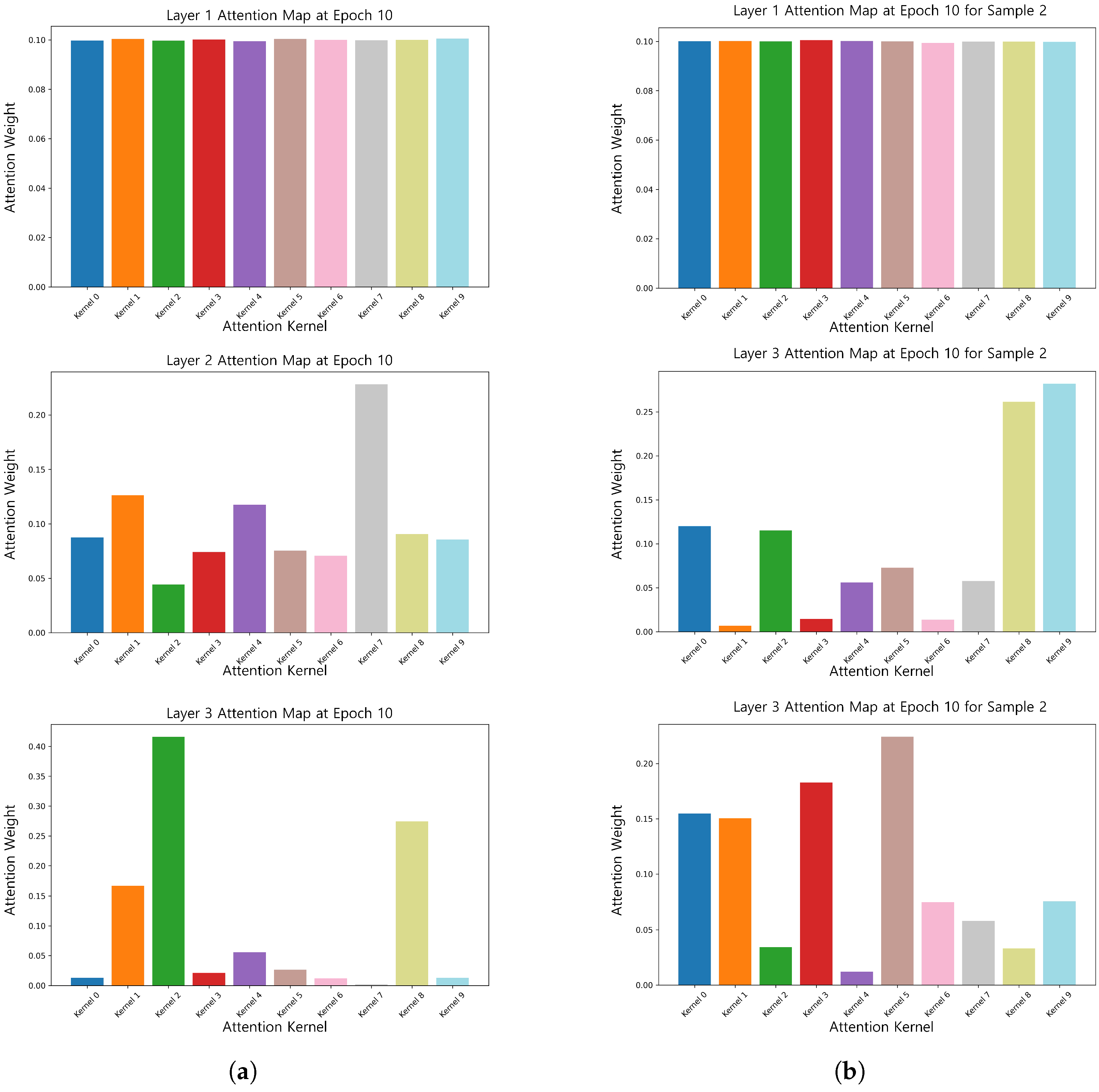

This paper introduces dynamic convolution into EEG time-domain feature extraction for ASD. We propose the TDRB module, which adaptively adjusts convolution kernel parameters and enhances the model’s ability to capture time-varying features.

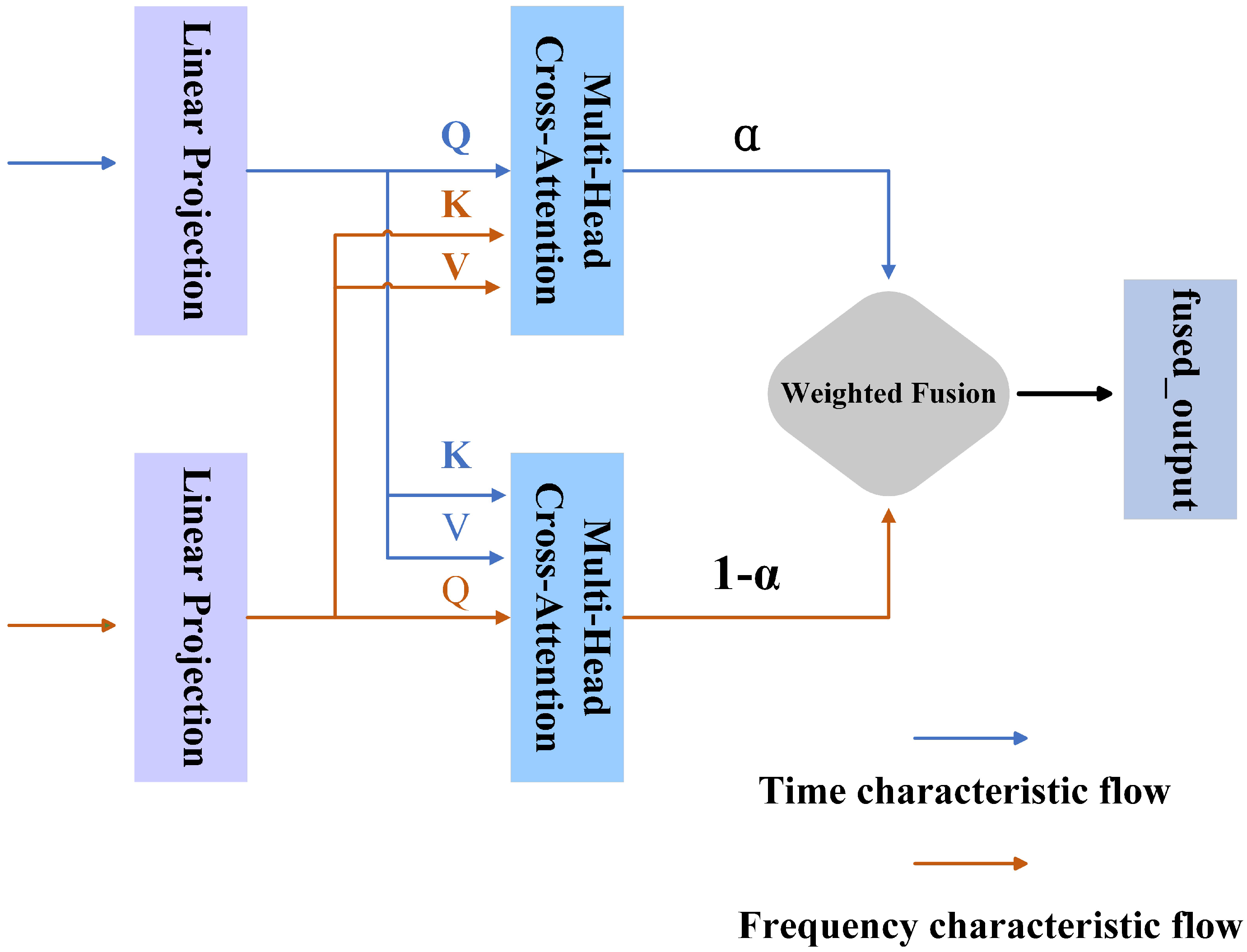

This paper proposes an adaptive cross-domain attention mechanism to fuse time-domain and frequency-domain features. This mechanism extracts and integrates key information from the time- and frequency-domain features and realizes feature-level interaction and fusion.

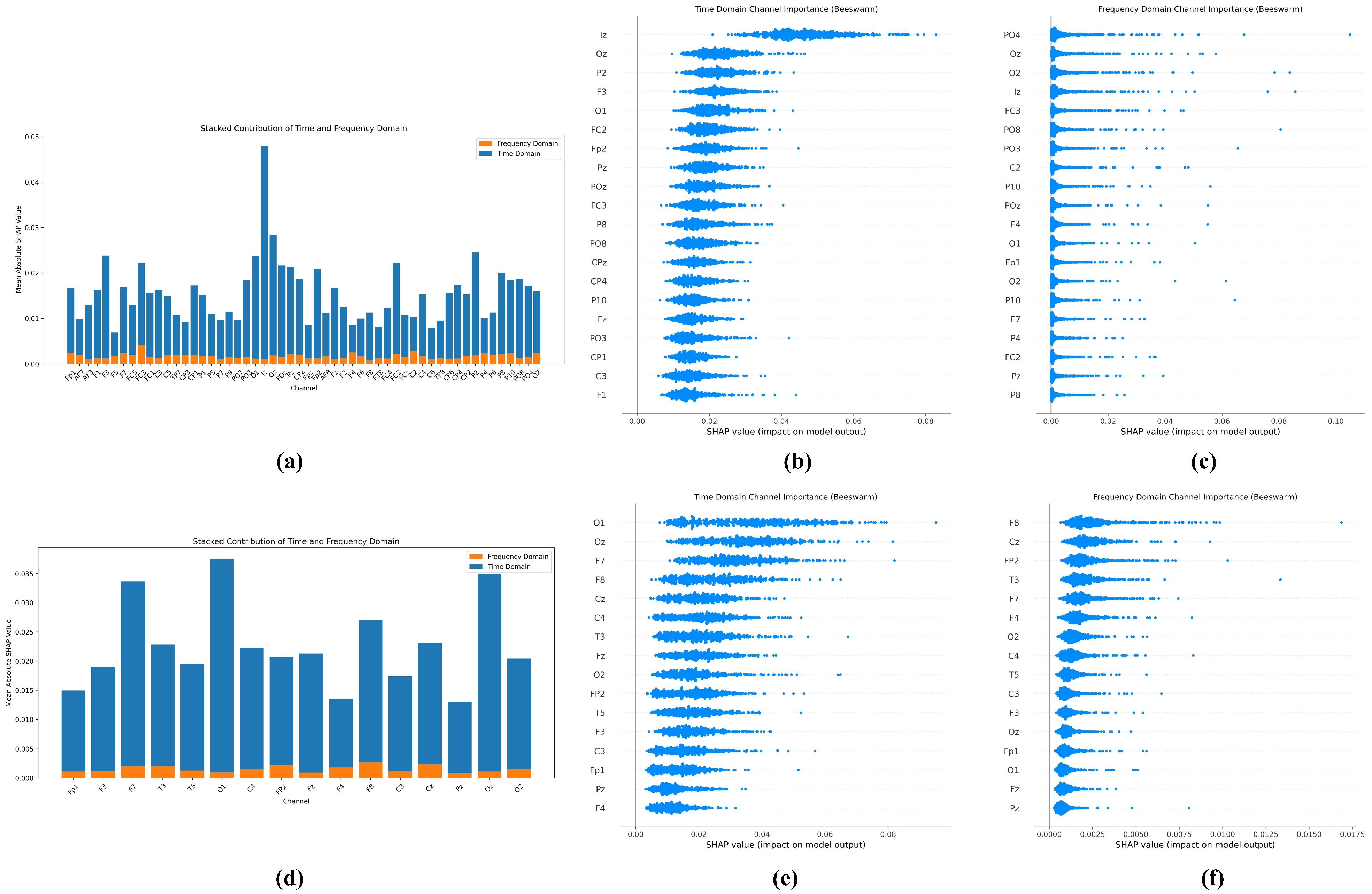

This paper compares the proposed model with other deep learning models and mainstream methods on the same dataset and uses the SHAP method to improve the model's transparency and interpretability, verifying its superior performance and application potential for EEG-based autism diagnosis.
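
To make the idea of dynamic convolution concrete, the sketch below shows one common formulation: several candidate kernels are mixed with input-dependent weights, so the effective kernel changes from one input to the next. This is an illustration under stated assumptions and not the TDRB module itself, whose internal design is not specified in this section. The candidate kernels, the gating weights `gate_w` and `gate_b`, and the pooled statistics are all hypothetical choices.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def dynamic_conv1d(x, kernels, gate_w, gate_b):
    """Input-dependent 1D convolution (illustrative sketch).

    x       : (T,) signal
    kernels : (K, L) candidate kernels
    gate_w  : (K, 2) and gate_b : (K,) map pooled statistics to K logits
    Returns the filtered signal and the mixing weights used for this input.
    """
    stats = np.array([x.mean(), x.std()])      # global descriptor of the input
    pi = softmax(gate_w @ stats + gate_b)      # (K,) mixing weights, sum to 1
    kernel = pi @ kernels                      # (L,) aggregated kernel
    # np.convolve flips its second argument, so reversing the kernel gives
    # cross-correlation, which is what deep-learning conv layers compute.
    y = np.convolve(x, kernel[::-1], mode="same")
    return y, pi

# Hypothetical candidate kernels: smoothing, identity, and a difference filter.
kernels = np.array([
    [0.2, 0.2, 0.2, 0.2, 0.2],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0, 1.0, 0.0],
])
# Hypothetical gate: high-variance inputs favor the smoothing kernel.
gate_w = np.array([[0.0, 1.0], [0.0, 0.0], [0.0, -1.0]])
gate_b = np.zeros(3)

t = np.arange(256) / 128.0
x_lo = 0.1 * np.sin(2 * np.pi * 10 * t)        # low-amplitude input
x_hi = 5.0 * np.sin(2 * np.pi * 10 * t)        # high-amplitude input

y_lo, pi_lo = dynamic_conv1d(x_lo, kernels, gate_w, gate_b)
y_hi, pi_hi = dynamic_conv1d(x_hi, kernels, gate_w, gate_b)
print(pi_lo.round(3), pi_hi.round(3))
```

In a trained network the kernels and gate parameters would be learned jointly. The point of the sketch is only that the two inputs are filtered with different effective kernels, which a static convolution cannot do.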

4. Discussion

This paper proposes a time–frequency synergy network (TFSNet) for Autism Spectrum Disorder (ASD) classification using EEG signals, and it was validated on the University of Sheffield and KAU datasets.

TFSNet addresses the limitations of fixed-kernel convolution and single-domain feature extraction by integrating time- and frequency-domain features. Using dynamic convolution, TFSNet adaptively adjusts convolution kernels to capture the time-varying characteristics of EEG signals, overcoming the limitations of static convolution in models like EEGNet. In the frequency domain, it employs Short-Time Fourier Transform (STFT) and a Convolutional Block Attention Module (CBAM) for robust feature extraction. The Adaptive Cross-Domain Attention mechanism (ACDA) enables bidirectional interaction and fusion of time- and frequency-domain features, enhancing classification performance and generalization. This time–frequency synergy makes TFSNet more robust and effective for ASD classification across varied datasets.
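
The bidirectional interaction described above can be illustrated with plain scaled dot-product cross-attention, in which time-domain tokens attend over frequency-domain tokens and vice versa. The sketch below is a generic formulation offered as an assumption about what such a mechanism might look like. It is not the ACDA module: learned query, key, and value projections and any adaptive gating are omitted, and the token counts and feature dimension are arbitrary.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(queries, context):
    """Each query token attends over all context tokens.

    queries : (n_q, d), context : (n_ctx, d)
    Returns the attended features (n_q, d) and attention weights (n_q, n_ctx).
    """
    d = queries.shape[-1]
    weights = softmax(queries @ context.T / np.sqrt(d), axis=-1)
    return weights @ context, weights

def bidirectional_fusion(time_feats, freq_feats):
    """Fuse two feature sets with cross-attention in both directions."""
    t_from_f, _ = cross_attend(time_feats, freq_feats)   # time queries, freq context
    f_from_t, _ = cross_attend(freq_feats, time_feats)   # freq queries, time context
    time_out = time_feats + t_from_f                     # residual connections
    freq_out = freq_feats + f_from_t
    # Pool each branch over its tokens and concatenate into one vector.
    return np.concatenate([time_out.mean(axis=0), freq_out.mean(axis=0)])

rng = np.random.default_rng(0)
time_feats = rng.normal(size=(6, 8))   # 6 hypothetical time-domain tokens, d = 8
freq_feats = rng.normal(size=(4, 8))   # 4 hypothetical frequency-domain tokens

fused = bidirectional_fusion(time_feats, freq_feats)
_, attn = cross_attend(time_feats, freq_feats)
print(fused.shape, attn.shape)
```

The fused vector would typically be passed to a classification head. The residual connections keep each branch's original features available even when the cross-domain attention contributes little.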

TFSNet, in combination with SHAP analysis, revealed key EEG patterns associated with ASD that were highly correlated with known ASD neurophysiological features such as abnormal activity of theta and alpha waves [46,47]. This finding provides clinicians with interpretable diagnostic evidence that may help distinguish ASD subtypes and guide treatment decisions. For example, the specific frequency band activity differences identified by TFSNet could be used to design targeted neurofeedback training to improve social or cognitive function in patients. In addition, SHAP analysis identifies the most important electrode locations for diagnosis, which opens the possibility of optimizing EEG acquisition, potentially reducing the time and cost of the examination and making it more viable in resource-limited clinical settings. In terms of personalized diagnosis, TFSNet can generate diagnostic markers based on patient-specific EEG patterns, which can support the formulation of individualized treatment plans. For example, in clinical practice, physicians can use the output of TFSNet in combination with other clinical assessments to develop targeted intervention strategies. However, a limitation of the current model is that its validation data come mainly from specific populations, and its generalization ability needs to be tested in a wider patient population in the future. In addition, the integration of TFSNet into existing clinical workflows may face technical compatibility and ethical challenges, which will be explored in subsequent studies.
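
The electrode-importance ranking mentioned above rests on Shapley values, which SHAP approximates for real models. As a small worked illustration of the underlying quantity, the sketch below computes exact Shapley values for a toy three-channel scoring function. The channel names and the scoring function are hypothetical and unrelated to the reported results. Exact enumeration is only feasible for a handful of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function.

    value_fn takes a frozenset of feature indices and returns a score.
    Cost grows exponentially with n_features, so this is for toy cases only.
    """
    n = n_features
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Probability weight of a coalition of this size preceding feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

# Hypothetical channels and a toy score: two channels contribute on their own
# and also interact; the third carries no information.
channels = ["Fz", "Cz", "Pz"]

def toy_score(present):
    base = {0: 0.2, 1: 0.1, 2: 0.0}
    score = sum(base[i] for i in present)
    if 0 in present and 1 in present:
        score += 0.1          # interaction bonus, shared between the two channels
    return score

phi = shapley_values(toy_score, len(channels))
for name, value in zip(channels, phi):
    print(f"{name}: {value:.3f}")
```

The attributions sum to the difference between the score with all channels and the score with none, and the uninformative channel receives zero. This is the property that makes such rankings a reasonable basis for considering reduced electrode montages.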

Despite the excellent performance of TFSNet, its limitations cannot be ignored. First, the computational complexity of the model is a key issue. Its multi-component architecture, including dynamic convolutions, CBAM modules, Transformer encoders, and the ACDA mechanism, significantly improves performance but also increases computational cost. In resource-constrained clinical settings, such as portable EEG devices or real-time diagnostic systems, this complexity may limit the model's deployment. Future research should explore model compression techniques such as pruning or quantization to reduce computational requirements while maintaining high accuracy. In addition, the real-time performance of the model needs to be further optimized to meet the requirements of rapid diagnosis in clinical environments.
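
As a rough illustration of the two compression techniques named above, the sketch below applies magnitude pruning and symmetric int8 quantization to a small random weight matrix. It shows the basic arithmetic only and has not been evaluated on TFSNet. The 50% sparsity level, the per-tensor scaling scheme, and the matrix size are illustrative assumptions.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # Magnitude of the k-th smallest weight; everything at or below it is dropped.
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization; returns (int8 values, scale)."""
    max_abs = np.abs(weights).max()
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 4))            # stand-in for one layer's weights

pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
dequantized = q.astype(np.float64) * scale   # values the compressed layer would use

n_zero = int(np.count_nonzero(pruned == 0))
max_err = float(np.max(np.abs(dequantized - pruned)))
print(n_zero, q.dtype, max_err <= scale / 2 + 1e-12)
```

Storing int8 values instead of 32-bit floats reduces weight storage by roughly a factor of four, and the rounding error per weight is bounded by half the scale. Whether accuracy survives such compression would have to be checked empirically, usually with fine-tuning after pruning.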

Interpretability is another area that needs improvement. Although SHAP analysis reveals the importance of features, its output remains largely technical and does not fully meet clinicians' need for intuitive interpretations that link directly to functional brain regions. For example, more clarity is still needed on how the model relates specific EEG patterns to behavioral phenotypes or treatment effects in ASD. Future work can incorporate neuroscience knowledge to develop visualization tools closer to the clinical context, enhancing the transparency and credibility of the model in medical decision making.

5. Conclusions

In this paper, we propose the time–frequency synergy network (TFSNet), which uses electroencephalogram (EEG) signals for robust Autism Spectrum Disorder (ASD) classification and has been validated on multiple datasets. By fusing time- and frequency-domain features and enhancing interpretability through SHAP analysis, TFSNet's architecture provides promising insights for personalized ASD diagnosis and targeted intervention strategies. However, further work is still needed to translate TFSNet from research to practical clinical applications. Its computational complexity needs to be reduced through model compression techniques (e.g., pruning and quantization) to ensure real-time deployment and hardware feasibility, especially for resource-constrained portable EEG systems. Crucially, comprehensive clinical testing across a broader and more diverse patient population via multicenter trials is needed to validate its generalization ability and clinical utility, while addressing technical compatibility and ethical challenges for integration into existing healthcare workflows. Overcoming these practical deployment challenges will be key for TFSNet to become a valuable diagnostic tool in clinical settings.