Cross-Cultural Biases of Emotion Perception in Music

Abstract

1. Introduction

1.1. Emotion Recognition Across Musical Cultures

1.2. Dimensional Approaches to Emotion

1.3. Musical Features in Emotion Recognition

1.4. Perceptual Bias in Emotion Perception Across Musical Cultures

1.5. The Present Study

2. Methods

2.1. Participants

2.2. Music Stimuli

2.3. Procedure

2.4. Design and Analysis

2.5. Test of Assumptions and Possible Covariates

3. Results

3.1. Emotion Recognition Accuracy Across Cultures

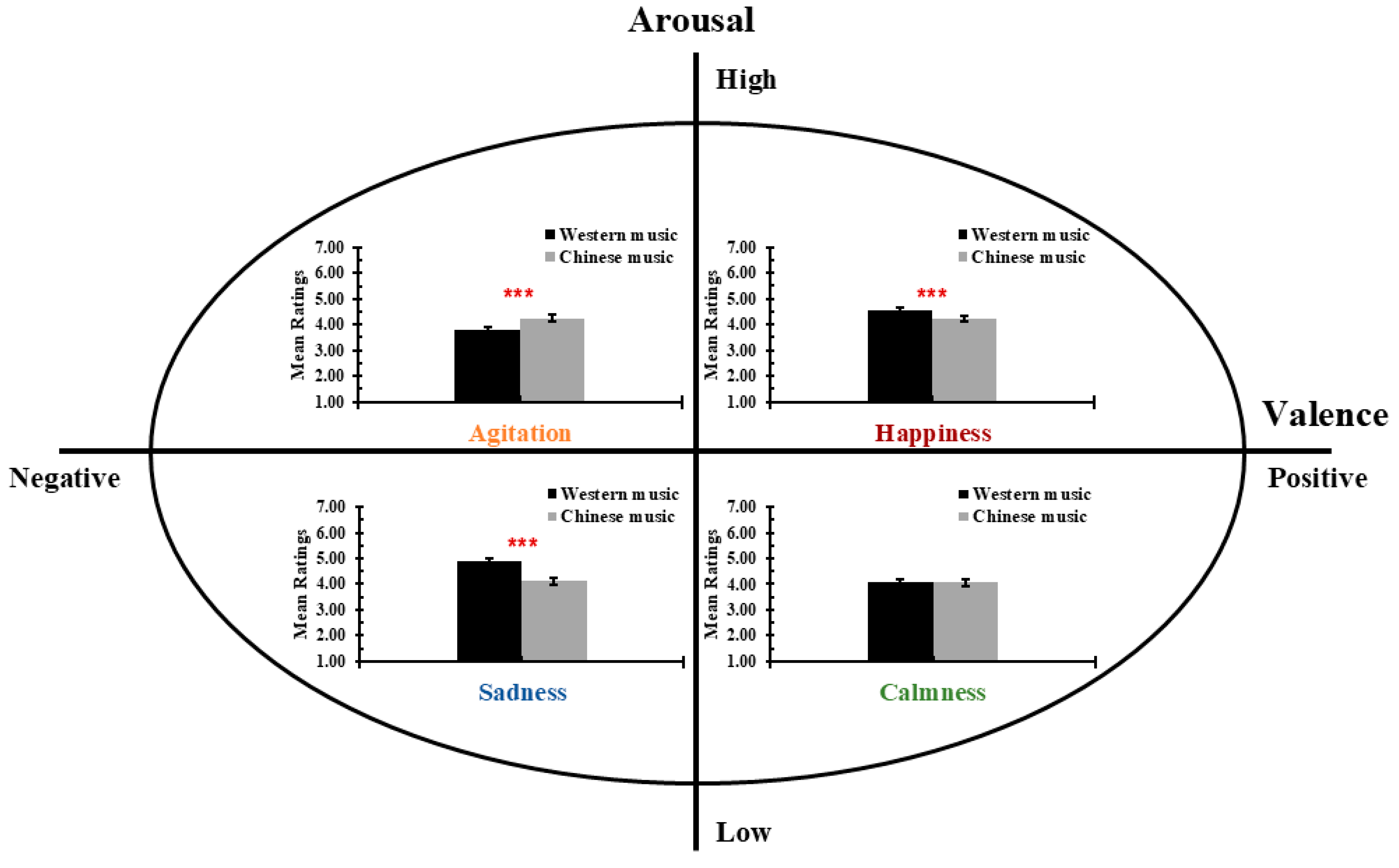

3.2. Perceived Emotional Intensity Across Cultures

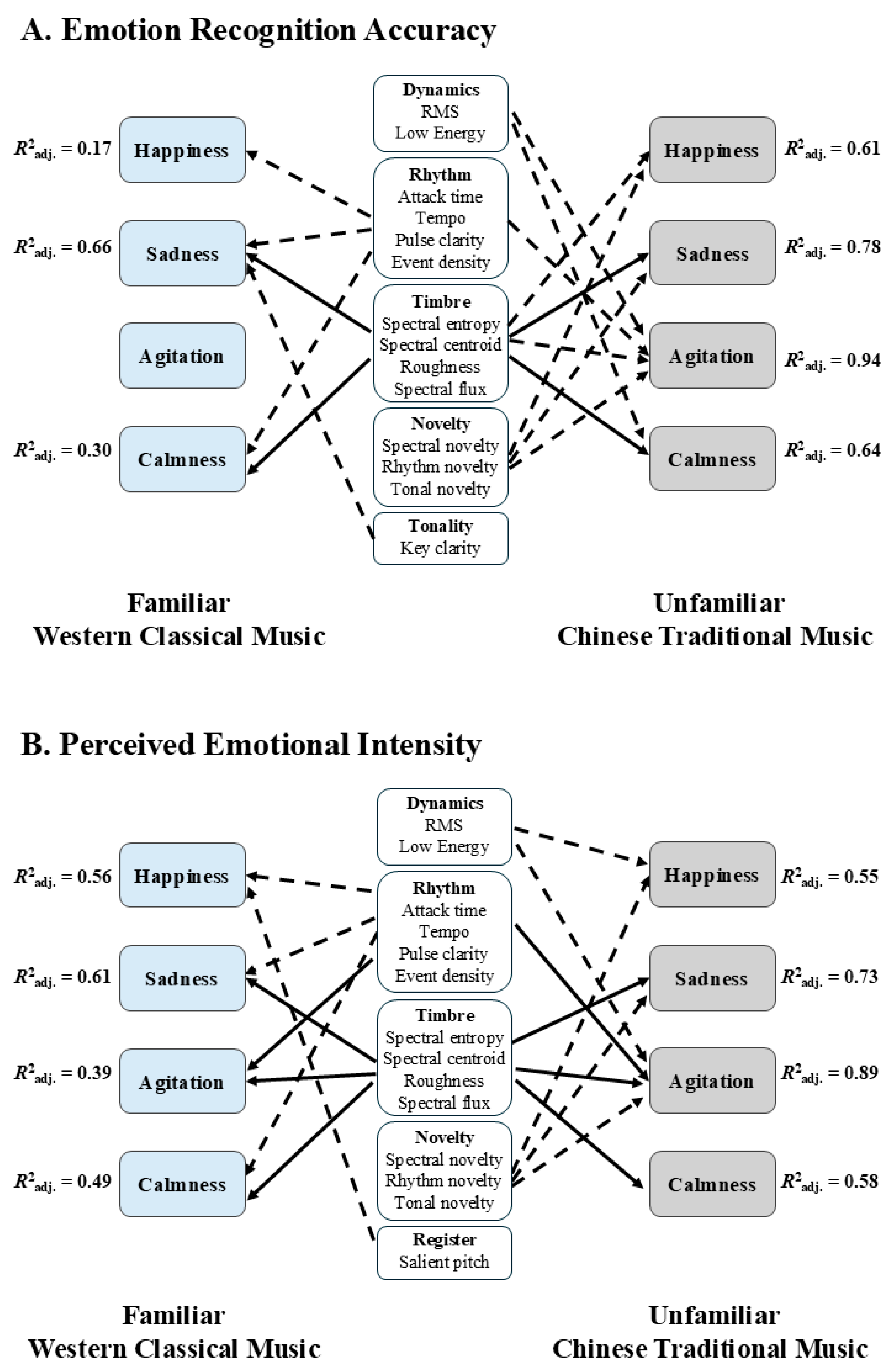

3.3. Musical Features Predicting Emotion Perception

3.3.1. Predictors of Perceived Emotion Recognition Accuracy

3.3.2. Predictors of Perceived Emotional Intensity

4. Discussion

4.1. Cultural Familiarity and Emotion Perception

4.2. Musical Features and Emotion Perception

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Culture / intended emotion | Happiness | Sadness | Agitation | Calmness | Cultural recognition |
|---|---|---|---|---|---|
| Western music | | | | | |
| Happiness | 6.25 (0.25) | 1.58 (0.25) | 2.63 (0.56) | 3.90 (0.58) | 6.88 (0.00) |
| Sadness | 1.81 (0.32) | 5.71 (0.53) | 2.58 (1.07) | 3.60 (0.77) | 6.88 (0.00) |
| Agitation | 2.63 (0.99) | 3.48 (1.12) | 5.65 (0.74) | 1.69 (0.49) | 6.79 (0.13) |
| Calmness | 4.04 (0.53) | 2.85 (0.8) | 1.77 (0.41) | 5.58 (0.52) | 6.88 (0.00) |
| Chinese music | | | | | |
| Happiness | 6.08 (0.32) | 1.31 (0.21) | 3.25 (0.97) | 3.35 (1.18) | 6.77 (0.29) |
| Sadness | 2.06 (0.30) | 5.46 (0.69) | 2.81 (0.74) | 3.50 (0.75) | 6.44 (0.47) |
| Agitation | 3.52 (0.24) | 2.21 (0.44) | 5.96 (0.52) | 1.56 (0.39) | 6.08 (0.38) |
| Calmness | 4.10 (1.09) | 2.75 (1.2) | 1.96 (0.51) | 5.44 (0.29) | 6.69 (0.19) |

| Emotion | Western music, % correct | Western music, Hu | Chinese music, % correct | Chinese music, Hu |
|---|---|---|---|---|
| Happiness | 63.33 (2.83) | 42.96 (2.26) | 53.33 (2.68) | 25.31 (1.52) |
| Sadness | 67.00 (2.93) | 35.41 (1.96) | 50.17 (3.27) | 33.35 (2.32) |
| Agitation | 45.33 (2.76) | 36.37 (2.42) | 56.67 (3.07) | 42.63 (2.61) |
| Calmness | 38.67 (2.78) | 18.59 (1.46) | 44.67 (3.01) | 21.76 (1.74) |
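The Hu columns report the unbiased hit rate. This measure corrects raw accuracy for how often a listener uses each response label. For category i, Hu is the squared number of hits divided by the product of two counts: the number of stimuli in that category and the number of times that response label was chosen.

The Python sketch below illustrates this calculation on a single confusion matrix. The function name `unbiased_hit_rates` is hypothetical, and the counts in the example are invented for illustration; they are not the study's data. The sketch does not claim to reproduce how the reported values were aggregated across participants. The table appears to express Hu on a 0 to 100 scale, so the proportions returned here would need to be multiplied by 100 for comparison.

```python
from typing import List, Sequence


def unbiased_hit_rates(confusion: Sequence[Sequence[int]]) -> List[float]:
    """Return the unbiased hit rate Hu for each category (hypothetical helper).

    confusion[i][j] counts trials on which a stimulus intended to convey
    category i received response category j (rows: intended emotion,
    columns: chosen emotion). For each category i:
        Hu_i = hits_i ** 2 / (stimuli_i * responses_i)
    where stimuli_i is the row total and responses_i is the column total.
    """
    n = len(confusion)
    if any(len(row) != n for row in confusion):
        raise ValueError("confusion matrix must be square")
    rates: List[float] = []
    for i in range(n):
        hits = confusion[i][i]
        stimuli = sum(confusion[i])                          # row total
        responses = sum(confusion[r][i] for r in range(n))   # column total
        # A category that was never presented or never chosen gets Hu = 0.
        if stimuli == 0 or responses == 0:
            rates.append(0.0)
        else:
            rates.append(hits ** 2 / (stimuli * responses))
    return rates


if __name__ == "__main__":
    # Hypothetical counts for one listener, 10 excerpts per intended emotion.
    labels = ["Happiness", "Sadness", "Agitation", "Calmness"]
    confusion = [
        [6, 1, 2, 1],  # intended happiness
        [1, 7, 0, 2],  # intended sadness
        [2, 1, 5, 2],  # intended agitation
        [2, 2, 1, 5],  # intended calmness
    ]
    rates = unbiased_hit_rates(confusion)
    for i, (label, hu) in enumerate(zip(labels, rates)):
        raw = confusion[i][i] / sum(confusion[i])  # uncorrected hit rate
        print(f"{label}: raw hit rate = {raw:.2f}, Hu = {hu:.3f}")
```

In this made-up example, agitation and calmness have the same raw hit rate (5 of 10). Agitation's Hu is nevertheless higher, because the agitation label was chosen less often overall (8 times versus 10). Its correct responses are therefore less likely to reflect a general preference for that label.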

| Intended emotion | Happiness intensity | Sadness intensity | Agitation intensity | Calmness intensity |
|---|---|---|---|---|
| Western music | | | | |
| Happiness | 4.54 (0.13) | 1.96 (0.08) | 2.19 (0.11) | 3.37 (0.12) |
| Sadness | 2.01 (0.08) | 4.89 (0.14) | 2.13 (0.10) | 3.56 (0.11) |
| Agitation | 2.78 (0.09) | 3.03 (0.11) | 3.78 (0.15) | 2.29 (0.10) |
| Calmness | 2.88 (0.10) | 3.92 (0.12) | 1.80 (0.08) | 4.08 (0.12) |
| Chinese music | | | | |
| Happiness | 4.22 (0.12) | 2.22 (0.10) | 2.48 (0.10) | 3.07 (0.11) |
| Sadness | 2.36 (0.09) | 4.10 (0.14) | 2.03 (0.10) | 3.75 (0.13) |
| Agitation | 3.61 (0.12) | 1.96 (0.08) | 4.25 (0.15) | 1.81 (0.08) |
| Calmness | 3.45 (0.11) | 3.00 (0.11) | 1.81 (0.08) | 4.06 (0.12) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.G.; Olsen, K.N.; Thompson, W.F. Cross-Cultural Biases of Emotion Perception in Music. Brain Sci. 2025, 15, 477. https://doi.org/10.3390/brainsci15050477

Li MG, Olsen KN, Thompson WF. Cross-Cultural Biases of Emotion Perception in Music. Brain Sciences. 2025; 15(5):477. https://doi.org/10.3390/brainsci15050477

Chicago/Turabian Style: Li, Marjorie G., Kirk N. Olsen, and William Forde Thompson. 2025. "Cross-Cultural Biases of Emotion Perception in Music." Brain Sciences 15, no. 5: 477. https://doi.org/10.3390/brainsci15050477

APA Style: Li, M. G., Olsen, K. N., & Thompson, W. F. (2025). Cross-Cultural Biases of Emotion Perception in Music. Brain Sciences, 15(5), 477. https://doi.org/10.3390/brainsci15050477