Neurocomputational Mechanisms of Sense of Agency: Literature Review for Integrating Predictive Coding and Adaptive Control in Human–Machine Interfaces

Abstract

1. Introduction

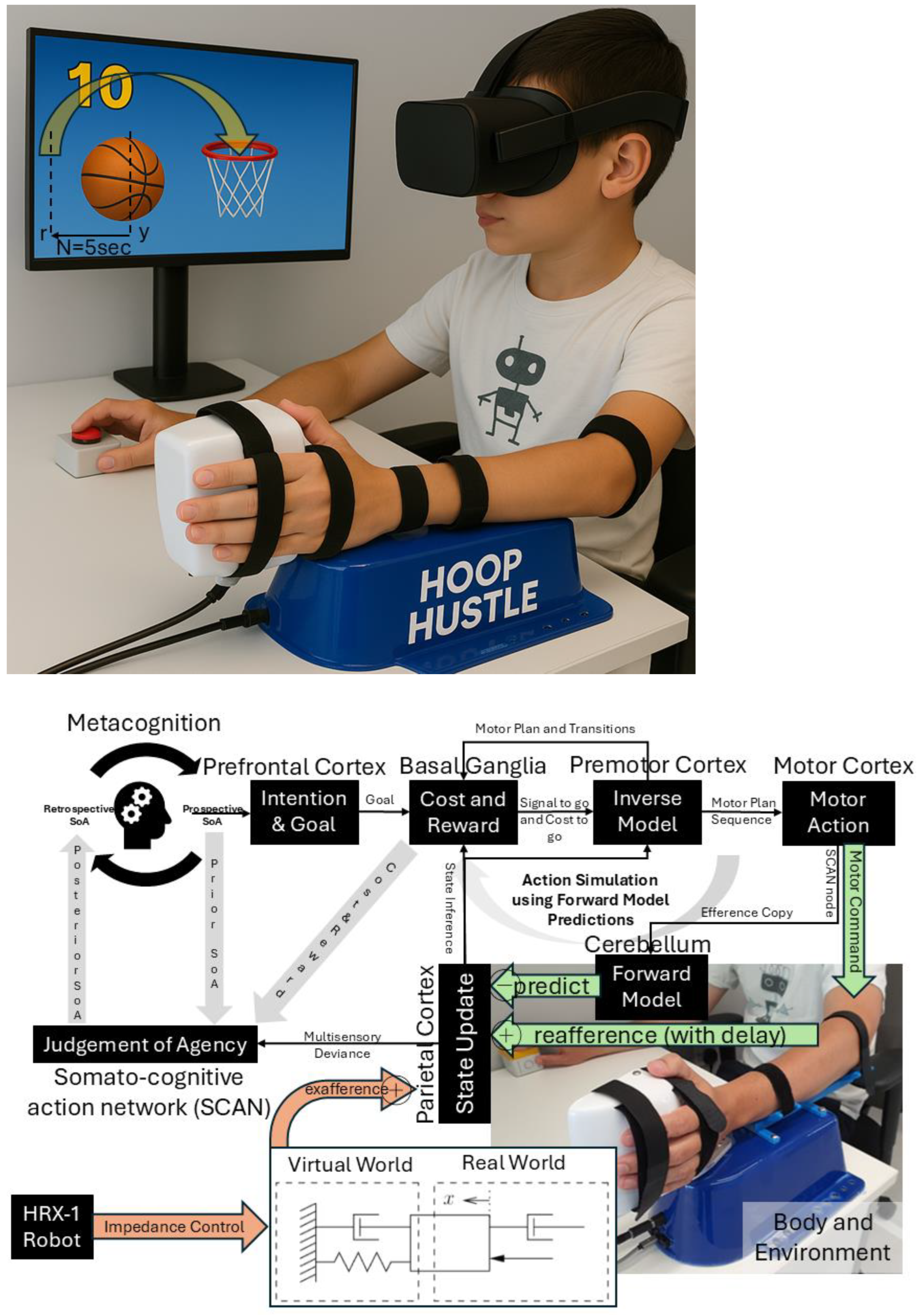

2. Computational Models and Neurophysiological Mechanisms

3. SCAN Mechanisms of Sense of Agency

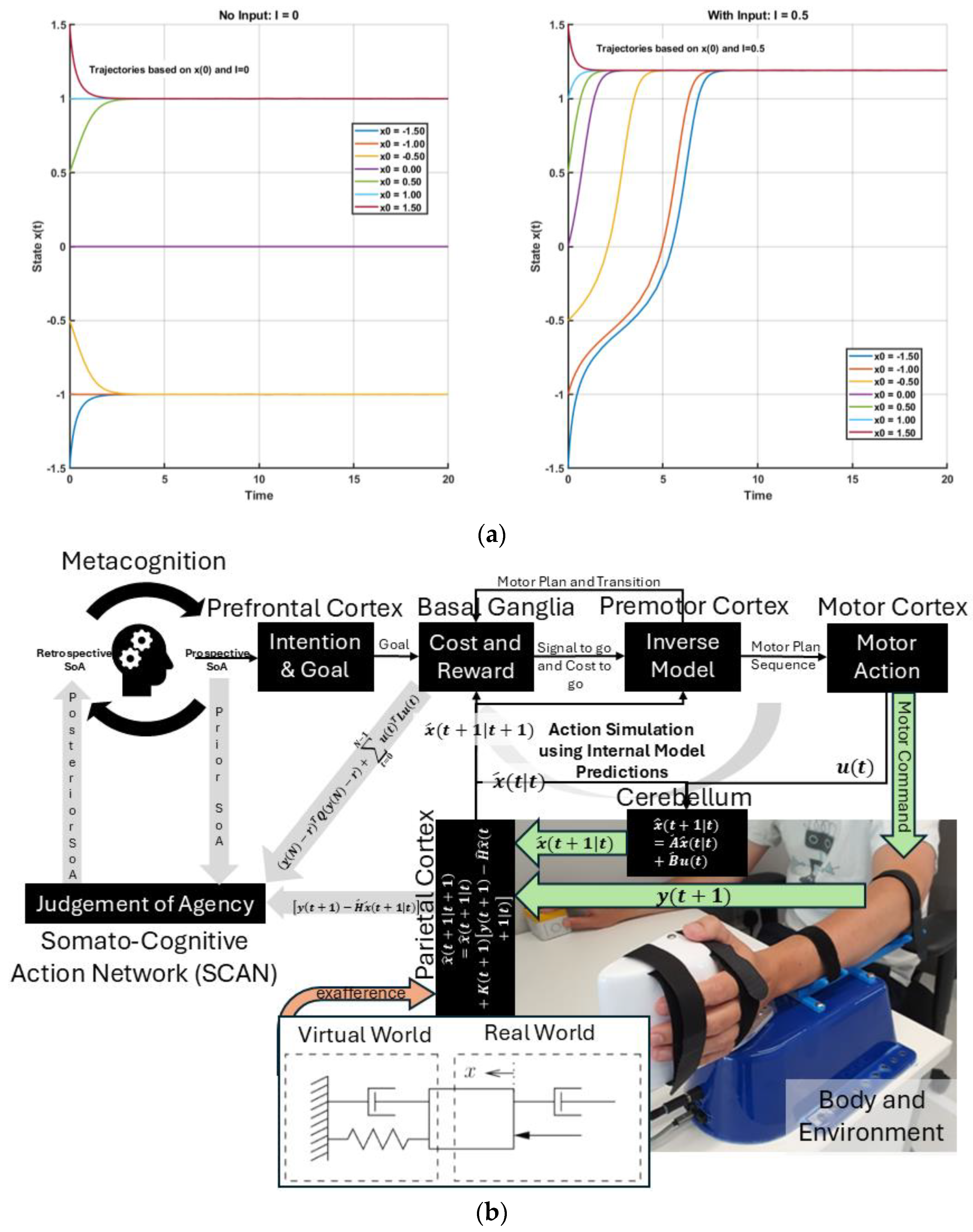

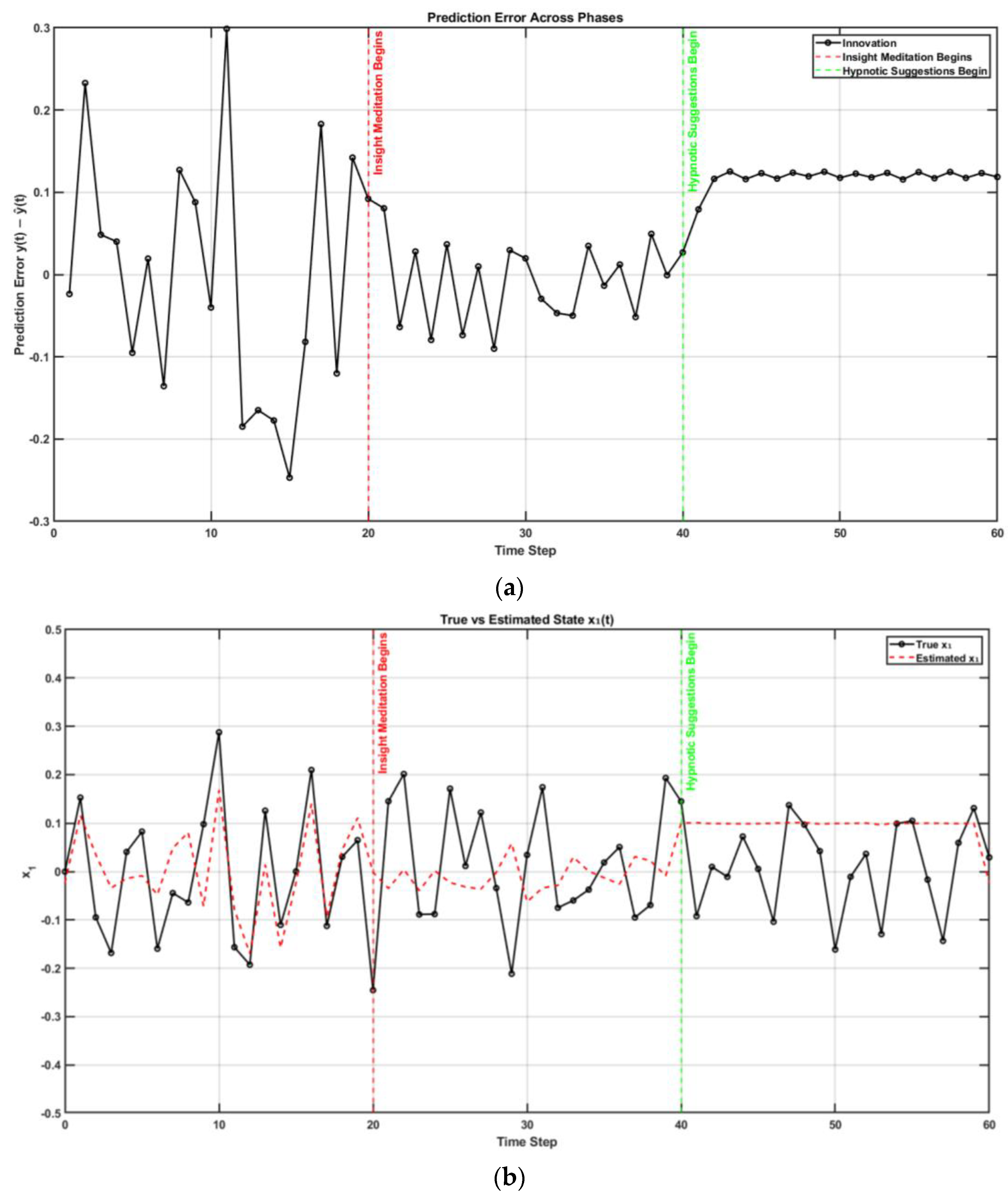

4. Predictive Coding Framework: Preparatory Activity as a Bayesian Prior

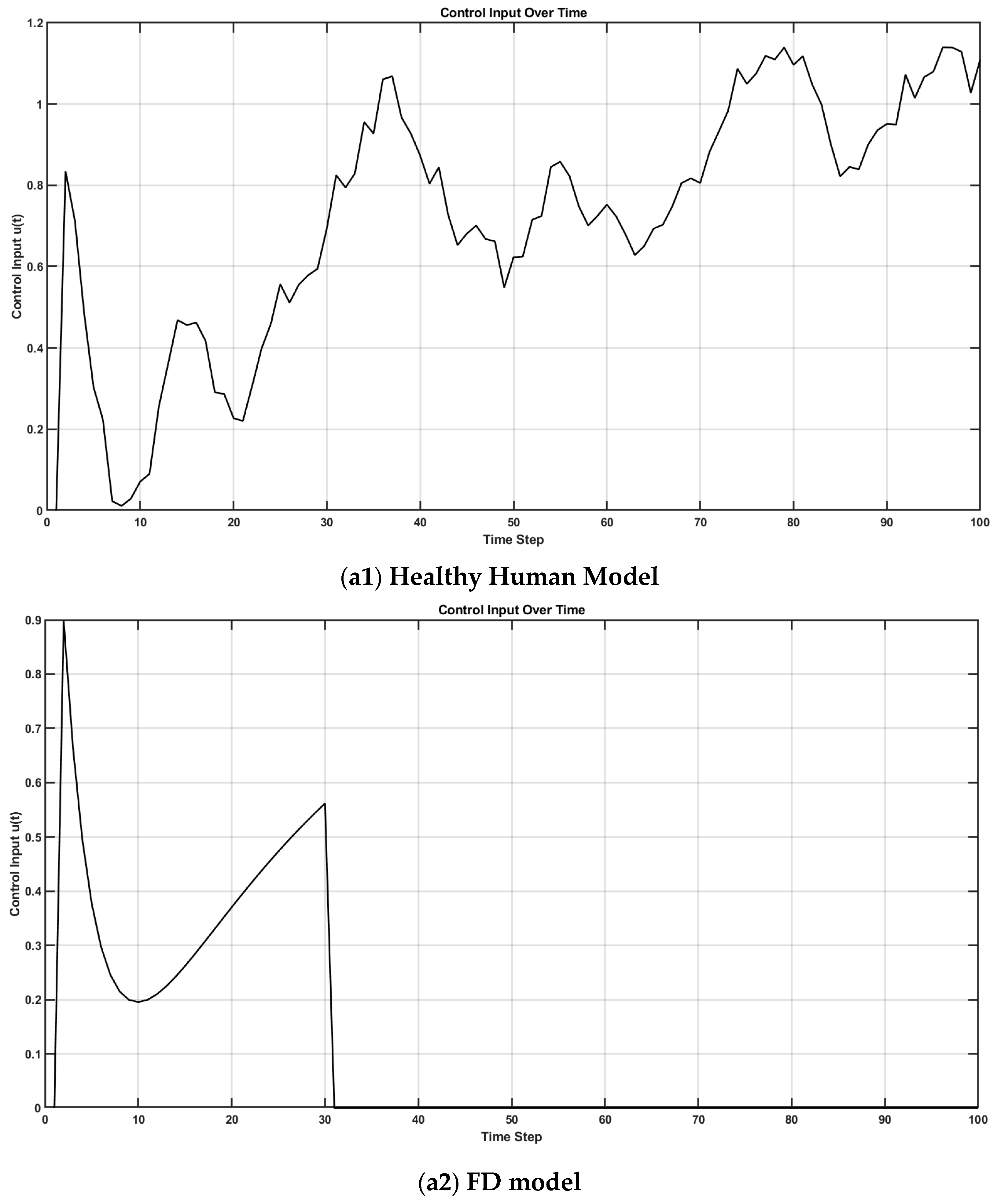

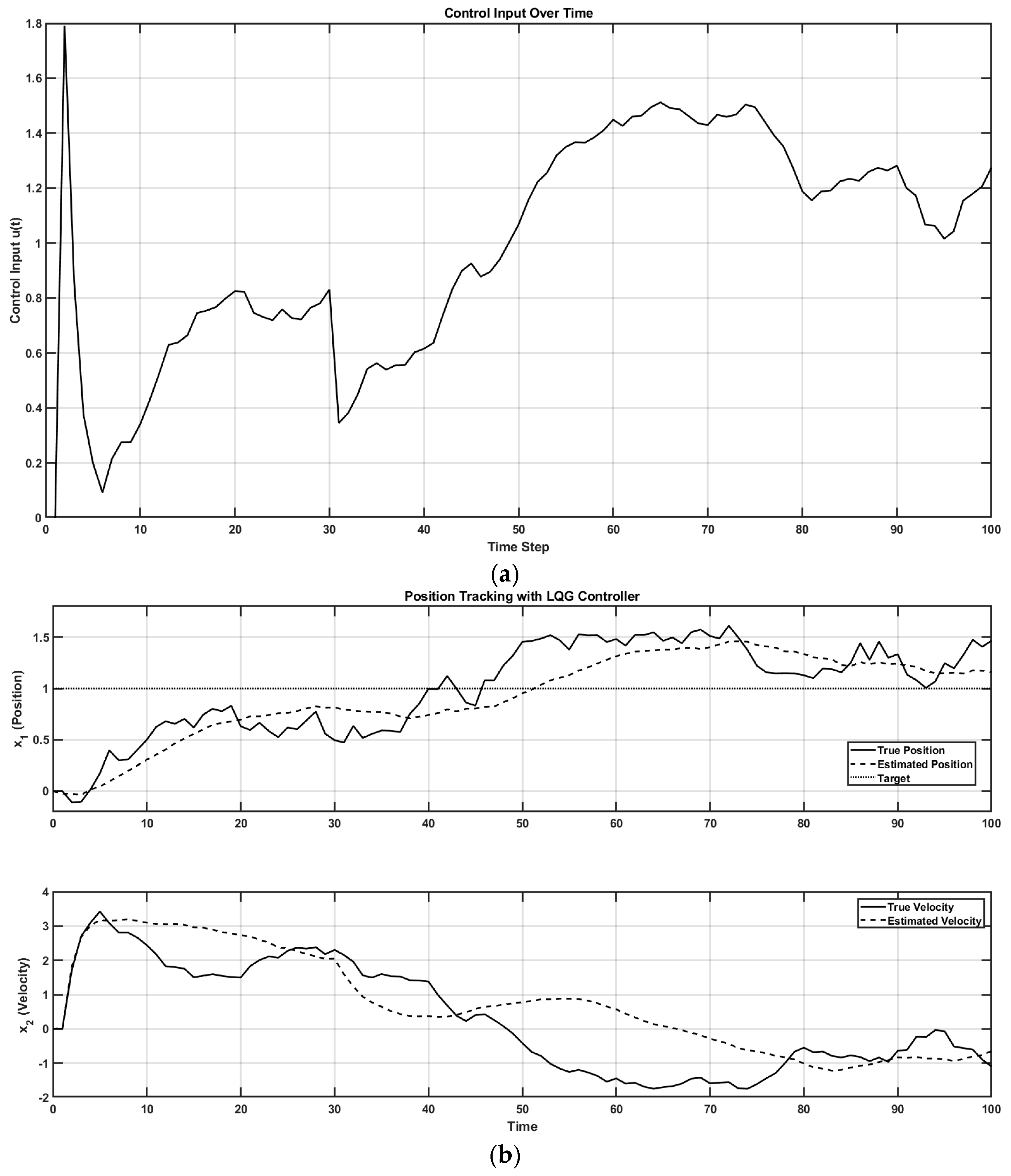

5. Kalman–Bucy Filter and Linear–Quadratic–Gaussian Control

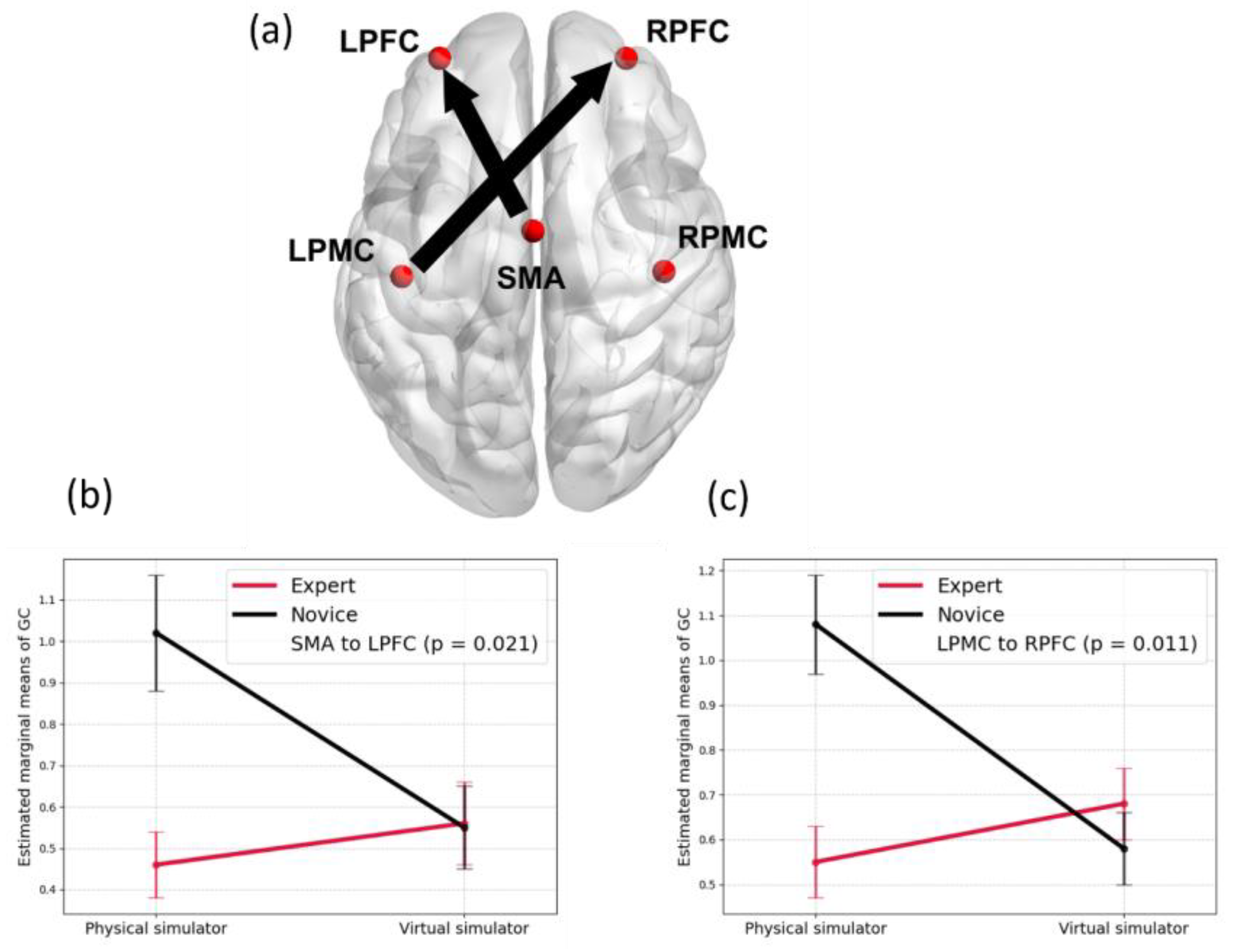

6. “Brain as Controller” vis-à-vis “Brain as Observer” in Skill Learning

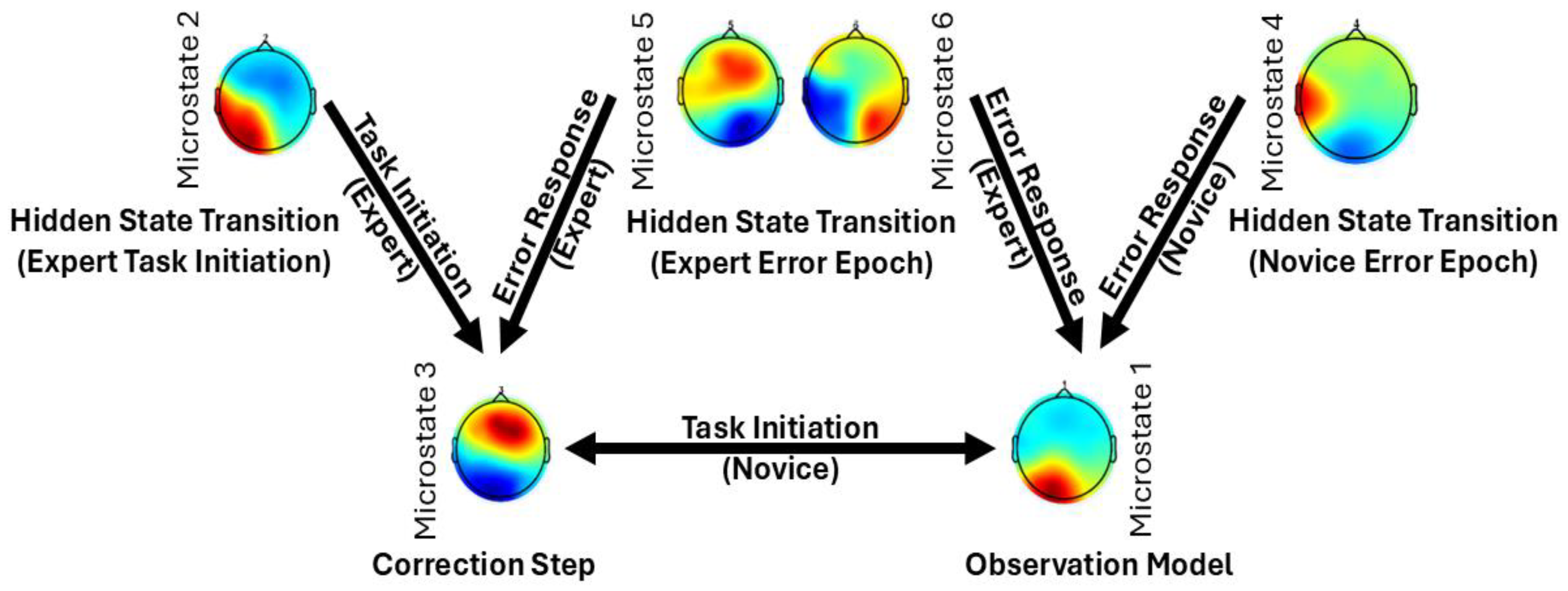

6.1. Microstate Transitions During the Error Epoch

6.2. Microstate Dynamics During Task Initiation

6.3. Internal Simulation Model and Task Observation

7. Discussion

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SoA | Sense of agency |
| HMI | Human–machine interface |
| XR | Extended reality |
| VR | Virtual reality |
| FND | Functional neurological disorder |
| EPH | Equilibrium-point hypothesis |
| fMRI | Functional magnetic resonance imaging |
| ERP | Event-related potential |
| DTI | Diffusion tensor imaging |
| SMA | Supplementary motor area |
| M1 | Primary motor cortex |
| PFC | Prefrontal cortex |
| IPL | Inferior parietal lobule |
| TPJ | Temporoparietal junction |
| DMN | Default mode network |
| FPN | Frontoparietal network |
| CON | Cingulo-opercular network |
| SN | Salience network |
| aDMN | Anterior default mode network |
| pDMN | Posterior default mode network |
| LQG | Linear–quadratic–Gaussian |
| DT | Dynamical systems theory |
| tES | Transcranial electrical stimulation |
| AG | Angular gyrus |
| MFG | Middle frontal gyrus |
| SMG | Supramarginal gyrus |
| IFG | Inferior frontal gyrus |
| LPFC | Left prefrontal cortex |
| RPFC | Right prefrontal cortex |
| LPMC | Left premotor cortex |
| exFAT | Extended frontal aslant tract |
| fNIRS | Functional near-infrared spectroscopy |
| EEG | Electroencephalography |
| MS | Microstate |
| PPIE | Patient and Public Involvement and Engagement |
| CFR | Counterfactual regret minimization |
| GLM | General linear model |
| GEV | Global explained variance |
| HRX-1 | Haptic Rehabilitation eXtended robot 1 (HumanRobotix London) |
| BOLD | Blood-oxygen-level-dependent |
| A, B, C, D, E, F, C′ | EEG microstate classes |

References

1. Haggard, P. Sense of Agency in the Human Brain. Nat. Rev. Neurosci. 2017, 18, 196–207.

2. Moore, J.W.; Obhi, S.S. Intentional Binding and the Sense of Agency: A Review. Conscious. Cogn. 2012, 21, 546–561.

3. Frith, C.D.; Blakemore, S.J.; Wolpert, D.M. Abnormalities in the Awareness and Control of Action. Philos. Trans. R. Soc. B Biol. Sci. 2000, 355, 1771–1788.

4. Synofzik, M.; Vosgerau, G.; Newen, A. Beyond the Comparator Model: A Multifactorial Two-Step Account of Agency. Conscious. Cogn. 2008, 17, 219–239.

5. Malik, R.A.; Galang, C.M.; Finger, E. The Sense of Agency for Brain Disorders: A Comprehensive Review and Proposed Framework. Neurosci. Biobehav. Rev. 2022, 139, 104759.

6. Caspar, E.A.; De Beir, A.; Lauwers, G.; Cleeremans, A.; Vanderborght, B. How Using Brain-Machine Interfaces Influences the Human Sense of Agency. PLoS ONE 2021, 16, e0245191.

7. Cornelio, P.; Haggard, P.; Hornbaek, K.; Georgiou, O.; Bergström, J.; Subramanian, S.; Obrist, M. The Sense of Agency in Emerging Technologies for Human–Computer Integration: A Review. Front. Neurosci. 2022, 16, 949138.

8. Friston, K. The History of the Future of the Bayesian Brain. NeuroImage 2012, 62, 1230–1233.

9. Spratling, M.W. A Review of Predictive Coding Algorithms. Brain Cogn. 2017, 112, 92–97.

10. Friston, K. Prediction, Perception and Agency. Int. J. Psychophysiol. 2012, 83, 248–252.

11. Srinivasan, M.V.; Laughlin, S.B.; Dubs, A. Predictive Coding: A Fresh View of Inhibition in the Retina. Proc. R. Soc. Lond. Ser. B. Biol. Sci. 1982, 216, 427–459.

12. Huang, Y.; Rao, R.P.N. Predictive Coding. WIREs Cogn. Sci. 2011, 2, 580–593.

13. Aitchison, L.; Lengyel, M. With or without You: Predictive Coding and Bayesian Inference in the Brain. Curr. Opin. Neurobiol. 2017, 46, 219–227.

14. Mumford, D. On the Computational Architecture of the Neocortex. I. The Role of the Thalamo-Cortical Loop. Biol. Cybern. 1991, 65, 135–145.

15. Mumford, D. On the Computational Architecture of the Neocortex. II. The Role of Cortico-Cortical Loops. Biol. Cybern. 1992, 66, 241–251.

16. Rao, R.P.N.; Ballard, D.H. Predictive Coding in the Visual Cortex: A Functional Interpretation of Some Extra-Classical Receptive-Field Effects. Nat. Neurosci. 1999, 2, 79–87.

17. Friston, K. A Theory of Cortical Responses. Philos. Trans. R. Soc. B Biol. Sci. 2005, 360, 815–836.

18. Friston, K. The Free-Energy Principle: A Unified Brain Theory? Nat. Rev. Neurosci. 2010, 11, 127–138.

19. Bastos, A.M.; Usrey, W.M.; Adams, R.A.; Mangun, G.R.; Fries, P.; Friston, K.J. Canonical Microcircuits for Predictive Coding. Neuron 2012, 76, 695–711.

20. Lotter, W.; Kreiman, G.; Cox, D. Deep Predictive Coding Networks for Video Prediction and Unsupervised Learning. arXiv 2017, arXiv:1605.08104.

21. Parr, T.; Friston, K.J. Generalised Free Energy and Active Inference. Biol. Cybern. 2019, 113, 495–513.

22. Rao, R.P.N.; Gklezakos, D.C.; Sathish, V. Active Predictive Coding: A Unifying Neural Model for Active Perception, Compositional Learning, and Hierarchical Planning. Neural Comput. 2023, 36, 1–32.

23. Oxenham, A.J. Helmholtz. J. Clin. Investig. 2011, 121, 2064.

24. Friston, K.; Schwartenbeck, P.; FitzGerald, T.; Moutoussis, M.; Behrens, T.; Dolan, R.J. The Anatomy of Choice: Active Inference and Agency. Front. Hum. Neurosci. 2013, 7, 598.

25. Garrido, M.I.; Kilner, J.M.; Stephan, K.E.; Friston, K.J. The Mismatch Negativity: A Review of Underlying Mechanisms. Clin. Neurophysiol. 2009, 120, 453–463.

26. Parr, T.; Pezzulo, G.; Friston, K.J. Active Inference: The Free Energy Principle in Mind, Brain, and Behavior; The MIT Press: Cambridge, MA, USA, 2022; ISBN 978-0-262-36997-8.

27. Wolpert, D.M.; Kawato, M. Multiple Paired Forward and Inverse Models for Motor Control. Neural Netw. 1998, 11, 1317–1329.

28. Wilson, M. Six Views of Embodied Cognition. Psychon. Bull. Rev. 2002, 9, 625–636.

29. Varela, F.J.; Rosch, E.; Thompson, E. The Embodied Mind: Cognitive Science and Human Experience; The MIT Press: Cambridge, MA, USA, 1991; ISBN 978-0-262-28547-6.

30. O’Regan, J.K.; Noë, A. A Sensorimotor Account of Vision and Visual Consciousness. Behav. Brain Sci. 2001, 24, 939–973, discussion 973–1031.

31. A Dynamic Systems Approach to the Development of Cognition and Action. Available online: https://mitpress.mit.edu/9780262200950/a-dynamic-systems-approach-to-the-development-of-cognition-and-action/ (accessed on 26 March 2025).

32. Kelso, J.A.S.; Schöner, G. Self-Organization of Coordinative Movement Patterns. Hum. Mov. Sci. 1988, 7, 27–46.

33. Lobo, L.; Heras-Escribano, M.; Travieso, D. The History and Philosophy of Ecological Psychology. Front. Psychol. 2018, 9, 2228.

34. Moore, J.W.; Fletcher, P.C. Sense of Agency in Health and Disease: A Review of Cue Integration Approaches. Conscious. Cogn. 2012, 21, 59–68.

35. Wolpe, N.; Haggard, P.; Siebner, H.R.; Rowe, J.B. Cue Integration and the Perception of Action in Intentional Binding. Exp. Brain Res. 2013, 229, 467–474.

36. Skandalakis, G.P.; Viganò, L.; Neudorfer, C.; Rossi, M.; Fornia, L.; Cerri, G.; Kinsman, K.P.; Bajouri, Z.; Tavakkoli, A.D.; Koutsarnakis, C.; et al. White Matter Connections within the Central Sulcus Subserving the Somato-Cognitive Action Network. Brain 2025, awaf022.

37. Schieber, M.H. Constraints on Somatotopic Organization in the Primary Motor Cortex. J. Neurophysiol. 2001, 86, 2125–2143.

38. Gordon, E.M.; Chauvin, R.J.; Van, A.N.; Rajesh, A.; Nielsen, A.; Newbold, D.J.; Lynch, C.J.; Seider, N.A.; Krimmel, S.R.; Scheidter, K.M.; et al. A Somato-Cognitive Action Network Alternates with Effector Regions in Motor Cortex. Nature 2023, 617, 351–359.

39. Mangin, J.-F.; Le Guen, Y.; Labra, N.; Grigis, A.; Frouin, V.; Guevara, M.; Fischer, C.; Rivière, D.; Hopkins, W.D.; Régis, J.; et al. “Plis de Passage” Deserve a Role in Models of the Cortical Folding Process. Brain Topogr. 2019, 32, 1035–1048.

40. Adams, R.A.; Shipp, S.; Friston, K.J. Predictions Not Commands: Active Inference in the Motor System. Brain Struct. Funct. 2013, 218, 611–643.

41. Dutta, A. Bayesian Predictive Coding Hypothesis: Brain as Observer’s Key Role in Insight. Med. Hypotheses 2025, 195, 111546.

42. Edwards, M.J.; Adams, R.A.; Brown, H.; Pareés, I.; Friston, K.J. A Bayesian Account of “Hysteria”. Brain 2012, 135, 3495–3512.

43. Kirihara, K.; Tada, M.; Koshiyama, D.; Fujioka, M.; Usui, K.; Araki, T.; Kasai, K. A Predictive Coding Perspective on Mismatch Negativity Impairment in Schizophrenia. Front. Psychiatry 2020, 11, 660.

44. Powers, A.R.; Mathys, C.; Corlett, P.R. Pavlovian Conditioning–Induced Hallucinations Result from Overweighting of Perceptual Priors. Science 2017, 357, 596–600.

45. Stuke, H.; Weilnhammer, V.A.; Sterzer, P.; Schmack, K. Delusion Proneness Is Linked to a Reduced Usage of Prior Beliefs in Perceptual Decisions. Schizophr. Bull. 2019, 45, 80–86.

46. Dutta, A. ‘Hyperbinding’ in Functional Movement Disorders: Role of Supplementary Motor Area Efferent Signalling. Brain Commun. 2025, 7, fcae464.

47. Human Motor Cortex Re-Mapped by Precision Imaging | Neuroscience. Available online: https://www.labroots.com/trending/neuroscience/25118/human-motor-cortex-re-mapped-precision-imaging?srsltid=AfmBOor61nMONkApHTJKhvA0XMM_uclQq-i1PA8Q6Zd1L4Xi0mtP_zvs (accessed on 6 April 2025).

48. Dutta, A.; Das, A. A Platform Technology for VR Biofeedback Training under Operant Conditioning for Functional Lower Limb Weakness. Preprints 2024, 2024072520.

49. Voss, M.; Chambon, V.; Wenke, D.; Kühn, S.; Haggard, P. In and out of Control: Brain Mechanisms Linking Fluency of Action Selection to Self-Agency in Patients with Schizophrenia. Brain 2017, 140, 2226–2239.

50. Sidarus, N.; Chambon, V.; Haggard, P. Priming of Actions Increases Sense of Control over Unexpected Outcomes. Conscious. Cogn. 2013, 22, 1403–1411.

51. Dutta, A.; Hatjipanagioti, K.; Newsham, M.; Leyland, L.; Rickson, L.; Buchanan, A.; Farkhatdinov, I.; Twamley, J.; Das, A. Co-Production of a Platform Neurotechnology for XR Biofeedback Training for Functional Upper Limb Weakness. JMIR XR Spat. Comput. 2025.

52. Boucher, P.O.; Wang, T.; Carceroni, L.; Kane, G.; Shenoy, K.V.; Chandrasekaran, C. Initial Conditions Combine with Sensory Evidence to Induce Decision-Related Dynamics in Premotor Cortex. Nat. Commun. 2023, 14, 6510.

53. Körding, K.P.; Wolpert, D.M. Bayesian Decision Theory in Sensorimotor Control. Trends Cogn. Sci. 2006, 10, 319–326.

54. Wolpert, D.M.; Miall, R.C.; Kawato, M. Internal Models in the Cerebellum. Trends Cogn. Sci. 1998, 2, 338–347.

55. Engel, A.K.; Fries, P. Beta-Band Oscillations--Signalling the Status Quo? Curr. Opin. Neurobiol. 2010, 20, 156–165.

56. Feldman, A.G. Origin and Advances of the Equilibrium-Point Hypothesis. Adv. Exp. Med. Biol. 2009, 629, 637–643.

57. Latash, M.L. Evolution of Motor Control: From Reflexes and Motor Programs to the Equilibrium-Point Hypothesis. J. Hum. Kinet. 2008, 19, 3–24.

58. Lan, N.; Crago, P.E. Equilibrium-Point Hypothesis, Minimum Effort Control Strategy and the Triphasic Muscle Activation Pattern. Behav. Brain Sci. 1992, 15, 769–771.

59. Latash, M.L.; Gutman, S.R. Abnormal Motor Patterns in the Framework of the Equilibrium-Point Hypothesis: A Cause for Dystonic Movements? Biol. Cybern. 1994, 71, 87–94.

60. Codol, O.; Kashefi, M.; Forgaard, C.J.; Galea, J.M.; Pruszynski, J.A.; Gribble, P.L. Sensorimotor Feedback Loops Are Selectively Sensitive to Reward. eLife 2023, 12, e81325.

61. Baltieri, M.; Buckley, C. On Kalman-Bucy Filters, Linear Quadratic Control and Active Inference. arXiv 2020, arXiv:2005.06269.

62. Kumar, D.; Sinha, N.; Dutta, A.; Lahiri, U. Virtual Reality-Based Balance Training System Augmented with Operant Conditioning Paradigm. Biomed. Eng. Online 2019, 18, 90.

63. Körding, K. Decision Theory: What “Should” the Nervous System Do? Science 2007, 318, 606–610.

64. Tanaka, T. Evaluating the Bayesian Causal Inference Model of Intentional Binding through Computational Modeling. Sci. Rep. 2024, 14, 2979.

65. Körding, K.P.; Wolpert, D.M. Bayesian Integration in Sensorimotor Learning. Nature 2004, 427, 244–247.

66. Genewein, T.; Braun, D.A. Occam’s Razor in Sensorimotor Learning. Proc. R. Soc. B Biol. Sci. 2014, 281, 20132952.

67. Blanchard, T.; Lombrozo, T.; Nichols, S. Bayesian Occam’s Razor Is a Razor of the People. Cogn. Sci. 2018, 42, 1345–1359.

68. Kalman, R.E. On the General Theory of Control Systems. IFAC Proc. Vol. 1960, 1, 491–502.

69. Millidge, B.; Seth, A.; Buckley, C.L. Predictive Coding: A Theoretical and Experimental Review. arXiv 2022, arXiv:2107.12979.

70. Gilmour, G.S.; Nielsen, G.; Teodoro, T.; Yogarajah, M.; Coebergh, J.A.; Dilley, M.D.; Martino, D.; Edwards, M.J. Management of Functional Neurological Disorder. J. Neurol. 2020, 267, 2164–2172.

71. Dutta, A.; Lahiri, U.; Das, A.; Nitsche, M.A.; Guiraud, D. Post-Stroke Balance Rehabilitation under Multi-Level Electrotherapy: A Conceptual Review. Front. Neurosci. 2014, 8, 403.

72. Churchland, M.M.; Shenoy, K.V. Preparatory Activity and the Expansive Null-Space. Nat. Rev. Neurosci. 2024, 25, 213–236.

73. Athans, M. The Role and Use of the Stochastic Linear-Quadratic-Gaussian Problem in Control System Design. IEEE Trans. Autom. Control. 1971, 16, 529–552.

74. Todorov, E.; Li, W. A Generalized Iterative LQG Method for Locally-Optimal Feedback Control of Constrained Nonlinear Stochastic Systems. In Proceedings of the 2005 American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 1, pp. 300–306.

75. Maceira-Elvira, P.; Timmermann, J.E.; Popa, T.; Schmid, A.-C.; Krakauer, J.W.; Morishita, T.; Wessel, M.J.; Hummel, F.C. Dissecting Motor Skill Acquisition: Spatial Coordinates Take Precedence. Sci. Adv. 2022, 8, eabo3505.

76. Decety, J.; Grèzes, J. The Power of Simulation: Imagining One’s Own and Other’s Behavior. Brain Res. 2006, 1079, 4–14.

77. Oakley, D.A.; Halligan, P.W. Hypnotic Suggestion: Opportunities for Cognitive Neuroscience. Nat. Rev. Neurosci. 2013, 14, 565–576.

78. Apelian, C.; De Vignemont, F.; Terhune, D.B. Comparative Effects of Hypnotic Suggestion and Imagery Instruction on Bodily Awareness. Conscious. Cogn. 2023, 108, 103473.

79. Elkins, G.R.; Barabasz, A.F.; Council, J.R.; Spiegel, D. Advancing Research and Practice: The Revised APA Division 30 Definition of Hypnosis. Int. J. Clin. Exp. Hypn. 2015, 63, 1–9.

80. Stankozi, C. Imagination, Ecologized and Enacted: Driven by the Historicity of Affordance Competition. Front. Psychol. 2024, 15, 1369820.

81. Platform Technology for VR Biofeedback Training under Operant Conditioning for Functional Limb Weakness: A Proposal for Co-Production of At-Home Solution (REACT2HOME). Available online: https://preprints.jmir.org/preprint/70620 (accessed on 2 March 2025).

82. Rimbert, S.; Zaepffel, M.; Riff, P.; Adam, P.; Bougrain, L. Hypnotic State Modulates Sensorimotor Beta Rhythms During Real Movement and Motor Imagery. Front. Psychol. 2019, 10, 2341.

83. Takarada, Y.; Nozaki, D. Hypnotic Suggestion Alters the State of the Motor Cortex. Neurosci. Res. 2014, 85, 28–32.

84. Cesari, P.; Modenese, M.; Benedetti, S.; Emadi Andani, M.; Fiorio, M. Hypnosis-Induced Modulation of Corticospinal Excitability during Motor Imagery. Sci. Rep. 2020, 10, 16882.

85. Sanyal, R.; Raseta, M.; Natarajan, I.; Roffe, C. The Use of Hypnotherapy as Treatment for Functional Stroke: A Case Series from a Single Center in the UK. Int. J. Stroke 2022, 17, 59–66.

86. Pareés, I.; Brown, H.; Nuruki, A.; Adams, R.A.; Davare, M.; Bhatia, K.P.; Friston, K.; Edwards, M.J. Loss of Sensory Attenuation in Patients with Functional (Psychogenic) Movement Disorders. Brain 2014, 137, 2916–2921.

87. Espay, A.J.; Aybek, S.; Carson, A.; Edwards, M.J.; Goldstein, L.H.; Hallett, M.; LaFaver, K.; LaFrance, W.C.; Lang, A.E.; Nicholson, T. Current Concepts in Diagnosis and Treatment of Functional Neurological Disorders. JAMA Neurol. 2018, 75, 1132–1141.

88. Pearl, J. Causal Inference. In Proceedings of the Workshop on Causality: Objectives and Assessment at NIPS 2008, PMLR, Whistler, BC, Canada, 12 December 2008; pp. 39–58.

89. Frith, C. Explaining Delusions of Control: The Comparator Model 20 Years On. Conscious. Cogn. 2012, 21, 52–54.

90. Zinkevich, M.; Johanson, M.; Bowling, M.; Piccione, C. Regret Minimization in Games with Incomplete Information. In Proceedings of the 21st International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; Curran Associates Inc.: Red Hook, NY, USA, 2007; pp. 1729–1736.

91. Corlett, P.R.; Aitken, M.R.F.; Dickinson, A.; Shanks, D.R.; Honey, G.D.; Honey, R.A.E.; Robbins, T.W.; Bullmore, E.T.; Fletcher, P.C. Prediction Error during Retrospective Revaluation of Causal Associations in Humans: fMRI Evidence in Favor of an Associative Model of Learning. Neuron 2004, 44, 877–888.

92. Holt, C.A.; Roth, A.E. The Nash Equilibrium: A Perspective. Proc. Natl. Acad. Sci. USA 2004, 101, 3999–4002.

93. Kamat, A.; Makled, B.; Norfleet, J.; Schwaitzberg, S.D.; Intes, X.; De, S.; Dutta, A. Directed Information Flow during Laparoscopic Surgical Skill Acquisition Dissociated Skill Level and Medical Simulation Technology. npj Sci. Learn. 2022, 7, 1–13.

94. Bréchet, L.; Brunet, D.; Birot, G.; Gruetter, R.; Michel, C.M.; Jorge, J. Capturing the Spatiotemporal Dynamics of Self-Generated, Task-Initiated Thoughts with EEG and fMRI. NeuroImage 2019, 194, 82–92.

95. Walia, P.; Fu, Y.; Norfleet, J.; Schwaitzberg, S.D.; Intes, X.; De, S.; Cavuoto, L.; Dutta, A. Error-Related Brain State Analysis Using Electroencephalography in Conjunction with Functional near-Infrared Spectroscopy during a Complex Surgical Motor Task. Brain Inform. 2022, 9, 29.

96. Michel, C.M.; Koenig, T. EEG Microstates as a Tool for Studying the Temporal Dynamics of Whole-Brain Neuronal Networks: A Review. NeuroImage 2018, 180, 577–593.

97. Kashihara, S.; Asai, T.; Imamizu, H. Topographical Polarity Reveals Continuous EEG Microstate Transitions and Electric Field Direction in Healthy Aging. bioRxiv 2024.

98. Bek, J.; O’Farrell, R.; Cooney, S.M. Experience in Sports and Music Influences Motor Imagery: Insights from Implicit and Explicit Measures. Acta Psychol. 2025, 252, 104650.

99. L’Huillier, J.C.; Jones, C.B.; Fu, Y.; Myneni, A.A.; De, S.; Cavuoto, L.; Dutta, A.; Stefanski, M.; Cooper, C.A.; Schwaitzberg, S.D. On the Journey to Measure Cognitive Expertise: What Can Functional Imaging Tell Us? Surgery 2025, 181, 109145.

100. Pirondini, E.; Coscia, M.; Minguillon, J.; Millán, J.d.R.; Van De Ville, D.; Micera, S. EEG Topographies Provide Subject-Specific Correlates of Motor Control. Sci. Rep. 2017, 7, 13229.

101. Chenot, Q.; Hamery, C.; Truninger, M.; Langer, N.; De Boissezon, X.; Scannella, S. Investigating the Relationship between Resting-State EEG Microstates and Executive Functions: A Null Finding. Cortex 2024, 178, 1–17.

102. Tarailis, P.; Koenig, T.; Michel, C.M.; Griškova-Bulanova, I. The Functional Aspects of Resting EEG Microstates: A Systematic Review. Brain Topogr. 2024, 37, 181–217.

103. Goulden, N.; Khusnulina, A.; Davis, N.J.; Bracewell, R.M.; Bokde, A.L.; McNulty, J.P.; Mullins, P.G. The Salience Network Is Responsible for Switching between the Default Mode Network and the Central Executive Network: Replication from DCM. NeuroImage 2014, 99, 180–190.

104. Carter, C.S.; Braver, T.S.; Barch, D.M.; Botvinick, M.M.; Noll, D.; Cohen, J.D. Anterior Cingulate Cortex, Error Detection, and the Online Monitoring of Performance. Science 1998, 280, 747–749.

105. Sadaghiani, S.; D’Esposito, M. Functional Characterization of the Cingulo-Opercular Network in the Maintenance of Tonic Alertness. Cereb. Cortex 2015, 25, 2763–2773.

106. Clairis, N.; Lopez-Persem, A. Debates on the Dorsomedial Prefrontal/Dorsal Anterior Cingulate Cortex: Insights for Future Research. Brain 2023, 146, 4826–4844.

107. Visalli, A.; Capizzi, M.; Ambrosini, E.; Kopp, B.; Vallesi, A. Electroencephalographic Correlates of Temporal Bayesian Belief Updating and Surprise. NeuroImage 2021, 231, 117867.

108. Wood, J.L.; Nee, D.E. Cingulo-Opercular Subnetworks Motivate Frontoparietal Subnetworks during Distinct Cognitive Control Demands. J. Neurosci. 2023, 43, 1225–1237.

109. Kamat, A.; Rahul, R.; Dutta, A.; Cavuoto, L.; Kruger, U.; Burke, H.; Hackett, M.; Norfleet, J.; Schwaitzberg, S.; De, S. Dynamic Directed Functional Connectivity as a Neural Biomarker for Objective Motor Skill Assessment. arXiv 2025, arXiv:2502.13362.

110. Pearson, J.; Naselaris, T.; Holmes, E.A.; Kosslyn, S.M. Mental Imagery: Functional Mechanisms and Clinical Applications. Trends Cogn. Sci. 2015, 19, 590–602.

111. Kim, E.; Lee, W.H.; Seo, H.G.; Nam, H.S.; Kim, Y.J.; Kang, M.-G.; Bang, M.S.; Kim, S.; Oh, B.-M. Deciphering Functional Connectivity Differences Between Motor Imagery and Execution of Target-Oriented Grasping. Brain Topogr. 2023, 36, 433–446.

112. Möhring, L.; Gläscher, J. Prediction Errors Drive Dynamic Changes in Neural Patterns That Guide Behavior. Cell Rep. 2023, 42, 112931.

113. Seghier, M.L. The Angular Gyrus. Neuroscientist 2013, 19, 43–61.

114. Khalighinejad, N.; Haggard, P. Modulating Human Sense of Agency with Non-Invasive Brain Stimulation. Cortex 2015, 69, 93–103.

115. Clairis, N.; Barakat, A.; Brochard, J.; Xin, L.; Sandi, C. A Neurometabolic Mechanism Involving dmPFC/dACC Lactate in Physical Effort-Based Decision-Making. Mol. Psychiatry 2024, 30, 899–913.

116. Kühn, S.; Brass, M.; Haggard, P. Feeling in Control: Neural Correlates of Experience of Agency. Cortex 2013, 49, 1935–1942.

117. Cho, H.J.; Waugh, R.; Wu, T.; Panyakaew, P.; Mente, K.; Urbano, D.; Hallett, M.; Horovitz, S.G. Role of Supplementary Motor Area in Cervical Dystonia and Sensory Tricks. Sci. Rep. 2022, 12, 21206.

118. Pastötter, B.; Weissbach, A.; Takacs, A.; Moyé, J.; Verrel, J.; Chwolka, F.; Friedrich, J.; Paulus, T.; Zittel, S.; Bäumer, T.; et al. Increased Beta Synchronization Underlies Perception-Action Hyperbinding in Functional Movement Disorders. Brain Commun. 2024, 6, fcae301.

119. Hallett, M. Functional Neurologic Disorder, La Lésion Dynamique. Neurology 2024, 103, e210051.

120. Teodoro, T.; Meppelink, A.M.; Little, S.; Grant, R.; Nielsen, G.; Macerollo, A.; Pareés, I.; Edwards, M.J. Abnormal Beta Power Is a Hallmark of Explicit Movement Control in Functional Movement Disorders. Neurology 2018, 90, e247–e253.

121. Srzich, A.J.; Byblow, W.D.; Stinear, J.W.; Cirillo, J.; Anson, J.G. Can Motor Imagery and Hypnotic Susceptibility Explain Conversion Disorder with Motor Symptoms? Neuropsychologia 2016, 89, 287–298.

122. Ren, J.; Zhang, W.; Dahmani, L.; Hu, Q.; Jiang, C.; Bai, Y.; Ji, G.-J.; Zhou, Y.; Zhang, P.; Wang, W.; et al. The Somato-Cognitive Action Network Links Diverse Neuromodulatory Targets for Parkinson’s Disease. bioRxiv 2023.

123. Dupont, W.; Papaxanthis, C.; Madden-Lombardi, C.; Lebon, F. Explicit and Implicit Motor Simulations Are Impaired in Individuals with Aphantasia. Brain Commun. 2024, 6, fcae072.

124. Locke, S.M.; Mamassian, P.; Landy, M.S. Performance Monitoring for Sensorimotor Confidence: A Visuomotor Tracking Study. Cognition 2020, 205, 104396.

125. Maurus, P.; Jackson, K.; Cashaback, J.G.A.; Cluff, T. The Nervous System Tunes Sensorimotor Gains When Reaching in Variable Mechanical Environments. iScience 2023, 26, 106756.

126. Kilteni, K.; Ehrsson, H.H. Dynamic Changes in Somatosensory and Cerebellar Activity Mediate Temporal Recalibration of Self-Touch. Commun. Biol. 2024, 7, 1–15.

127. Kim, H.; Tobisawa, S.; Park, H.; Kim, J.; Lee, J.; Shin, D. Aging-Induced Degradation in Tracking Performance in Three-Dimensional Movement. SICE J. Control. Meas. Syst. Integr. 2024, 17, 239–246.

128. Tzagarakis, C.; Ince, N.F.; Leuthold, A.C.; Pellizzer, G. Beta-Band Activity during Motor Planning Reflects Response Uncertainty. J. Neurosci. 2010, 30, 11270–11277.

129. McFarland, D.J.; Miner, L.A.; Vaughan, T.M.; Wolpaw, J.R. Mu and Beta Rhythm Topographies during Motor Imagery and Actual Movements. Brain Topogr. 2000, 12, 177–186.

130. Wischnewski, M.; Haigh, Z.J.; Shirinpour, S.; Alekseichuk, I.; Opitz, A. The Phase of Sensorimotor Mu and Beta Oscillations Has the Opposite Effect on Corticospinal Excitability. Brain Stimul. 2022, 15, 1093–1100.

131. Zito, G.A.; de Sousa Ribeiro, R.; Kamal, E.; Ledergerber, D.; Imbach, L.; Polania, R. Self-Modulation of the Sense of Agency via Neurofeedback Enhances Sensory-Guided Behavioral Control. Cereb. Cortex 2023, 33, 11447–11455.

132. Ide, J.S.; Li, C.R. A Cerebellar Thalamic Cortical Circuit for Error-Related Cognitive Control. NeuroImage 2011, 54, 455–464.

133. Blakemore, S.-J.; Oakley, D.A.; Frith, C.D. Delusions of Alien Control in the Normal Brain. Neuropsychologia 2003, 41, 1058–1067.

134. Schrag, A.E.; Mehta, A.R.; Bhatia, K.P.; Brown, R.J.; Frackowiak, R.S.J.; Trimble, M.R.; Ward, N.S.; Rowe, J.B. The Functional Neuroimaging Correlates of Psychogenic versus Organic Dystonia. Brain 2013, 136, 770–781.

135. Blakemore, S.J.; Frith, C.D.; Wolpert, D.M. The Cerebellum Is Involved in Predicting the Sensory Consequences of Action. Neuroreport 2001, 12, 1879–1884.

136. Batsikadze, G.; Rezaee, Z.; Chang, D.-I.; Gerwig, M.; Herlitze, S.; Dutta, A.; Nitsche, M.A.; Timmann, D. Effects of Cerebellar Transcranial Direct Current Stimulation on Cerebellar-Brain Inhibition in Humans: A Systematic Evaluation. Brain Stimul. 2019, 12, 1177–1186.

137. Rezaee, Z.; Dutta, A. A Computational Pipeline to Optimize Lobule-Specific Electric Field Distribution during Cerebellar Transcranial Direct Current Stimulation. Front. Neurosci. 2019, 13, 266.

138. Polito, V.; Barnier, A.J.; Woody, E.Z. Developing the Sense of Agency Rating Scale (SOARS): An Empirical Measure of Agency Disruption in Hypnosis. Conscious. Cogn. 2013, 22, 684–696.

139. Bréchet, L.; Ziegler, D.A.; Simon, A.J.; Brunet, D.; Gazzaley, A.; Michel, C.M. Reconfiguration of Electroencephalography Microstate Networks after Breath-Focused, Digital Meditation Training. Brain Connect. 2021, 11, 146–155.

140. Panda, R.; Bharath, R.D.; Upadhyay, N.; Mangalore, S.; Chennu, S.; Rao, S.L. Temporal Dynamics of the Default Mode Network Characterize Meditation-Induced Alterations in Consciousness. Front. Hum. Neurosci. 2016, 10, 372.

141. Katayama, H.; Gianotti, L.R.R.; Isotani, T.; Faber, P.L.; Sasada, K.; Kinoshita, T.; Lehmann, D. Classes of Multichannel EEG Microstates in Light and Deep Hypnotic Conditions. Brain Topogr. 2007, 20, 7–14.

142. Xu, X.; Yuan, H.; Lei, X. Activation and Connectivity within the Default Mode Network Contribute Independently to Future-Oriented Thought. Sci. Rep. 2016, 6, 21001.

143. Nagai, Y.; Critchley, H.D.; Featherstone, E.; Fenwick, P.B.C.; Trimble, M.R.; Dolan, R.J. Brain Activity Relating to the Contingent Negative Variation: An fMRI Investigation. NeuroImage 2004, 21, 1232–1241.

144. Birbaumer, N.; Elbert, T.; Canavan, A.G.; Rockstroh, B. Slow Potentials of the Cerebral Cortex and Behavior. Physiol. Rev. 1990, 70, 1–41.

145. Kononowicz, T.W.; Penney, T.B. The Contingent Negative Variation (CNV): Timing Isn’t Everything. Curr. Opin. Behav. Sci. 2016, 8, 231–237.

146. Dutta, A.; Boulenouar, R.S.; Guiraud, D.; Nitsche, M.A. Delineating the Effects of Anodal Transcranial Direct Current Stimulation on Myoelectric Control Based on Slow Cortical Potentials. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 3094–3097.

147. Saarinen, T.; Kujala, J.; Laaksonen, H.; Jalava, A.; Salmelin, R. Task-Modulated Corticocortical Synchrony in the Cognitive-Motor Network Supporting Handwriting. Cereb. Cortex 2020, 30, 1871–1886.

148. Seghezzi, S.; Zirone, E.; Paulesu, E.; Zapparoli, L. The Brain in (Willed) Action: A Meta-Analytical Comparison of Imaging Studies on Motor Intentionality and Sense of Agency. Front. Psychol. 2019, 10, 804.

149. Zito, G.A.; Wiest, R.; Aybek, S. Neural Correlates of Sense of Agency in Motor Control: A Neuroimaging Meta-Analysis. PLoS ONE 2020, 15, e0234321.

150. Yomogida, Y.; Sugiura, M.; Sassa, Y.; Wakusawa, K.; Sekiguchi, A.; Fukushima, A.; Takeuchi, H.; Horie, K.; Sato, S.; Kawashima, R. The Neural Basis of Agency: An fMRI Study. NeuroImage 2010, 50, 198–207.

151. Walia, P.; Fu, Y.; Norfleet, J.; Schwaitzberg, S.D.; Intes, X.; De, S.; Cavuoto, L.; Dutta, A. Brain-Behavior Analysis of Transcranial Direct Current Stimulation Effects on a Complex Surgical Motor Task. Front. Neuroergon. 2024, 4, 1135729.

152. Walia, P.; Kamat, A.; De, S.; Dutta, A. Dynamic Causal Modeling for EEG during Complex Laparoscopic Skill Acquisition. In Proceedings of the Neuroergonomics Conference, Online, 11–16 September 2021.

| Authors (Year) | Model Name/Type | Core Assumptions | Computational Framework | Application Domain |
|---|---|---|---|---|
| Srinivasan, Laughlin, and Dubs (1982) [11] | Predictive coding (PC) in retina | The retina uses lateral inhibition to predict and cancel expected input (center–surround), reducing redundancy and noise. | Linear predictive coding (prediction subtracted from signal) | Early vision (retinal encoding) |
| Mumford (1992) [14,15] | Cortical predictive coding (theory) | Hierarchical cortico-cortical loops: higher areas send top–down predictions; lower areas send unexplained residuals upward. | Theoretical Bayesian hierarchy (conceptual model of cortex) | Visual cortex (general cortical function) |
| Rao and Ballard (1999) [16] | Hierarchical PC in visual cortex | Higher-level visual units predict lower-level responses; only prediction errors propagate feedforward. Explains context effects in V1. | Hierarchical generative model with error-feedback learning (gradient descent on prediction error) | Visual perception (extra-classical receptive-field effects, V1–V2 interactions) |
| Friston (2005) [17] | Variational free-energy PC (Bayesian brain) | The brain minimizes “free energy” (prediction error + complexity). Cortical areas encode approximate posteriors; prediction errors drive inference. | Variational Bayesian inference (free-energy minimization); hierarchical dynamic model | Multisensory cortical processing (unified perception theory) |
| Friston et al. (2010) [18] | Active inference (continuous) | Perception and action both minimize prediction errors. Motor commands realize predicted sensory states by reducing proprioceptive error. | Dynamic Bayesian network (state-space model) with action feedback; expected free-energy minimization for policy selection | Sensorimotor control (e.g., oculomotor reflexes, limb control) |
| Spratling (2008) [9] | Predictive coding with biased competition (PC/BC-DIM) | Cortical predictions modulate lower-level activity divisively; predictive coding is integrated with an attentional (biased competition) mechanism. | Nonlinear neural network (divisive inhibition for error calculation); still based on error minimization | Visual attention and feature binding (cortical V1/V2 function) |
| Bastos et al. (2012) [19] | Canonical microcircuit for PC | Specific laminar mapping: superficial pyramidal neurons carry prediction errors upward; deep pyramidal neurons send predictions downward. | Conceptual neural architecture (informed by anatomy and Dynamic Causal Modeling) | General cortical implementation (e.g., vision, audition microcircuits) |
| Lotter, Kreiman, and Cox (2017) [20] | PredNet (deep predictive coding network) | Each layer of a deep network predicts its next input and forwards only errors. Unsupervised learning via prediction of future sensory input (frames). | Deep convolutional recurrent network following a predictive coding architecture (multiple layers of prediction and error units) | Visual sequence learning (video frame prediction, unsupervised vision, driving scenes) |
| Parr and Friston (2019) [21] | Active inference (discrete, Partially Observable Markov Decision Process) | The agent evaluates possible action sequences via expected free energy (combining predicted rewards and information gain); perception and decision-making are unified. | Partially Observable Markov Decision Process with variational message passing (belief propagation for inference and planning) | Goal-directed behavior and planning (sequential decision-making, foraging tasks) |
| Rao, Gklezakos, and Sathish (2024) [22] | Active Predictive Coding (APC) | Unified hierarchical model that learns both state dynamics and policies. Predictive coding is used for compositional representation learning and multi-step planning. | Multi-level generative model with integrated policy networks; uses hypernetworks and deep learning techniques within a predictive coding loop | High-level perception and action (e.g., learning object–part hierarchies, long-horizon planning in AI agents) |
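
The models in the table share a common computational core: a top-down prediction is compared with the input, and the residual drives the update. As a minimal sketch, assuming a single-layer linear Gaussian generative model x ≈ W r in the spirit of Rao and Ballard (1999) [16], the code below infers hidden causes r by gradient descent on precision-weighted prediction error plus a Gaussian prior. The function names `predict` and `infer_causes`, the noise variances, the learning rate, and the toy weights are illustrative assumptions, not any cited author's implementation.

```python
def predict(W, r):
    """Top-down prediction of the input: x_hat = W r (W given as a list of rows)."""
    return [sum(w * rj for w, rj in zip(row, r)) for row in W]


def infer_causes(x, W, r_prior, sigma_x=1.0, sigma_r=1.0, lr=0.1, n_steps=200):
    """Gradient descent on the (hypothetical) energy
    E(r) = ||x - W r||^2 / (2 sigma_x^2) + ||r - r_prior||^2 / (2 sigma_r^2).
    Returns the inferred causes and the final sum of squared prediction errors."""
    r = list(r_prior)  # inference starts at the prior expectation
    for _ in range(n_steps):
        # Bottom-up signal: sensory prediction error, the only quantity sent "upward"
        e = [xi - xh for xi, xh in zip(x, predict(W, r))]
        for j in range(len(r)):
            # Precision-weighted error projected back through the generative weights
            drive = sum(W[i][j] * e[i] for i in range(len(x))) / sigma_x**2
            # Prior error: deviation of the cause from its top-down expectation
            prior_pull = (r[j] - r_prior[j]) / sigma_r**2
            r[j] += lr * (drive - prior_pull)
    sse = sum((xi - xh) ** 2 for xi, xh in zip(x, predict(W, r)))
    return r, sse


W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy generative weights (assumed)
x = predict(W, [2.0, -1.0])               # input generated by known causes
r_flat, sse_flat = infer_causes(x, W, [0.0, 0.0], sigma_r=100.0)  # nearly flat prior
r_tight, _ = infer_causes(x, W, [0.0, 0.0], sigma_r=1.0)          # unit-variance prior
```

With a nearly flat prior (large `sigma_r`), the estimate recovers the causes that generated `x`; with `sigma_r = 1.0`, it is pulled toward the prior mean, illustrating how the relative precision of prior and sensory evidence sets the balance between them.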

| Network Engagement | Motor Execution | Mental Simulation |
|---|---|---|
| Primary M1 effector zones | Strongly activated for the specific moving body part; large corticospinal output drives the muscles. | Moderately activated (sub-threshold activity); excitability increases, but output is gated (no overt movement). |
| SCAN inter-effector zones | Active in integrating multi-limb coordination and posture and in linking movement with goals. Assist in planning and adjusting movement (feedback integration). | Active in simulating the action plan and the sense of movement. Likely engaged to a relatively greater degree, since they generate the internal action representation without triggering muscles. Contribute to the feeling of “agency” and the urge to move during imagination. |
| Sensory feedback (S1) | Substantial actual feedback from proprioception, touch, etc., reaches primary somatosensory cortex (S1); SCAN bridges to S1 (via “plis de passage”) to incorporate this feedback. | Little to no actual feedback (no movement), but predicted feedback may be generated internally. SCAN’s connection to BA3a (a proprioceptive area) might simulate expected sensations. |
| Subcortical involvement | Strong involvement of the basal ganglia and cerebellum in executing movement (initiating and refining motions); SCAN connects to these structures (putamen, cerebellum) to help orchestrate execution. | Basal ganglia engaged in suppressing actual movement (inhibition via striatal circuits) while still allowing the plan to run through the thalamocortical loop. Cerebellum active in simulating the dynamics of the imagined movement. SCAN connectivity to these regions supports running the “motor program” offline. |
| Cognitive network coupling | Moderate: cognitive control signals (dACC/insula) ensure that the movement meets the goal, especially when adjustments or attention are needed. Some cognitive resources are devoted to monitoring external execution. | Strong: sustained engagement of prefrontal, attention, and imagery networks (visual or kinesthetic imagery recruits frontoparietal and occipital areas). SCAN nodes are heavily coupled with the cingulo-opercular network to keep the intention in mind without motor output. |

| Kalman Filter Component | Brain Regions | Function Alignment |
|---|---|---|
| State Representation | Posterior parietal cortex (PPC), motor cortex, and cerebellum | Encodes the current estimated body state; integrates proprioceptive and visual signals. |
| Internal Models | Cerebellum (forward model) and premotor cortex (inverse model) | The forward model predicts sensory outcomes from the efference copy of the motor command; the inverse model maps desired states to motor commands. |
| Error Computation | Cerebellum and anterior cingulate cortex (ACC) | Computes prediction errors between expected and actual sensory input. |
| Gain Adjustment | Basal ganglia and thalamus | Adjusts how much weight is given to the prediction vs. sensory feedback (Kalman gain). |
| Control Update | Motor cortex and supplementary motor area (SMA) | Updates motor plans based on corrected state estimates. |
| Cognitive Modulation | Prefrontal cortex (PFC) and ACC | Modulates attention, strategy, and confidence in the internal model. |
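
The first four rows of the table can be made concrete with a scalar Kalman filter. The sketch below assumes a one-dimensional limb position with linear dynamics and Gaussian noise; the class `ScalarKalman`, the helper `track_moving_limb`, and all parameter values are hypothetical choices for illustration, and the comments mark which table row each step corresponds to. Control Update and Cognitive Modulation are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Hypothetical 1-D Kalman filter for limb position (illustrative values)."""
    a: float = 1.0   # state transition: position persists between steps
    b: float = 1.0   # displacement produced per unit motor command
    q: float = 0.01  # process (motor) noise variance
    r: float = 0.1   # sensory noise variance
    x: float = 0.0   # State Representation: current position estimate
    p: float = 1.0   # variance (uncertainty) of that estimate

    def step(self, u: float, z: float) -> tuple[float, float, float]:
        # Internal Models: forward-model prediction from the efference copy u
        x_pred = self.a * self.x + self.b * u
        p_pred = self.a * self.p * self.a + self.q
        # Error Computation: sensory prediction error (innovation)
        innovation = z - x_pred
        # Gain Adjustment: Kalman gain weighs sensory evidence against the prediction
        k = p_pred / (p_pred + self.r)
        # Corrected estimate and its reduced uncertainty
        self.x = x_pred + k * innovation
        self.p = (1.0 - k) * p_pred
        return self.x, k, innovation


def track_moving_limb(n_steps: int = 50, u: float = 0.5, true_start: float = 1.0):
    """The estimate starts at 0 while the true limb starts at true_start.
    Measurements are noise-free here so the convergence is easy to inspect."""
    kf = ScalarKalman()
    true_x = true_start
    x_est = kf.x
    for _ in range(n_steps):
        true_x += u                         # actual displacement caused by the command
        x_est, _, _ = kf.step(u, z=true_x)  # sensory sample of the new position
    return true_x, x_est


true_x, x_est = track_moving_limb()
print(f"true={true_x:.3f}, estimate={x_est:.3f}")
```

Lowering `r` (more reliable senses) raises the gain `k`, so the estimate follows sensory input more closely; raising it shifts weight toward the forward-model prediction.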

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dutta, A. Neurocomputational Mechanisms of Sense of Agency: Literature Review for Integrating Predictive Coding and Adaptive Control in Human–Machine Interfaces. Brain Sci. 2025, 15, 396. https://doi.org/10.3390/brainsci15040396
