Real-Time Mobile Robot Obstacles Detection and Avoidance Through EEG Signals

Abstract

1. Introduction

1.1. Overview

1.2. Research Objectives

2. Materials and Methods

2.1. Experimental Setup and Simulation Framework

2.1.1. Robot Description and Human-in-the-Loop Control

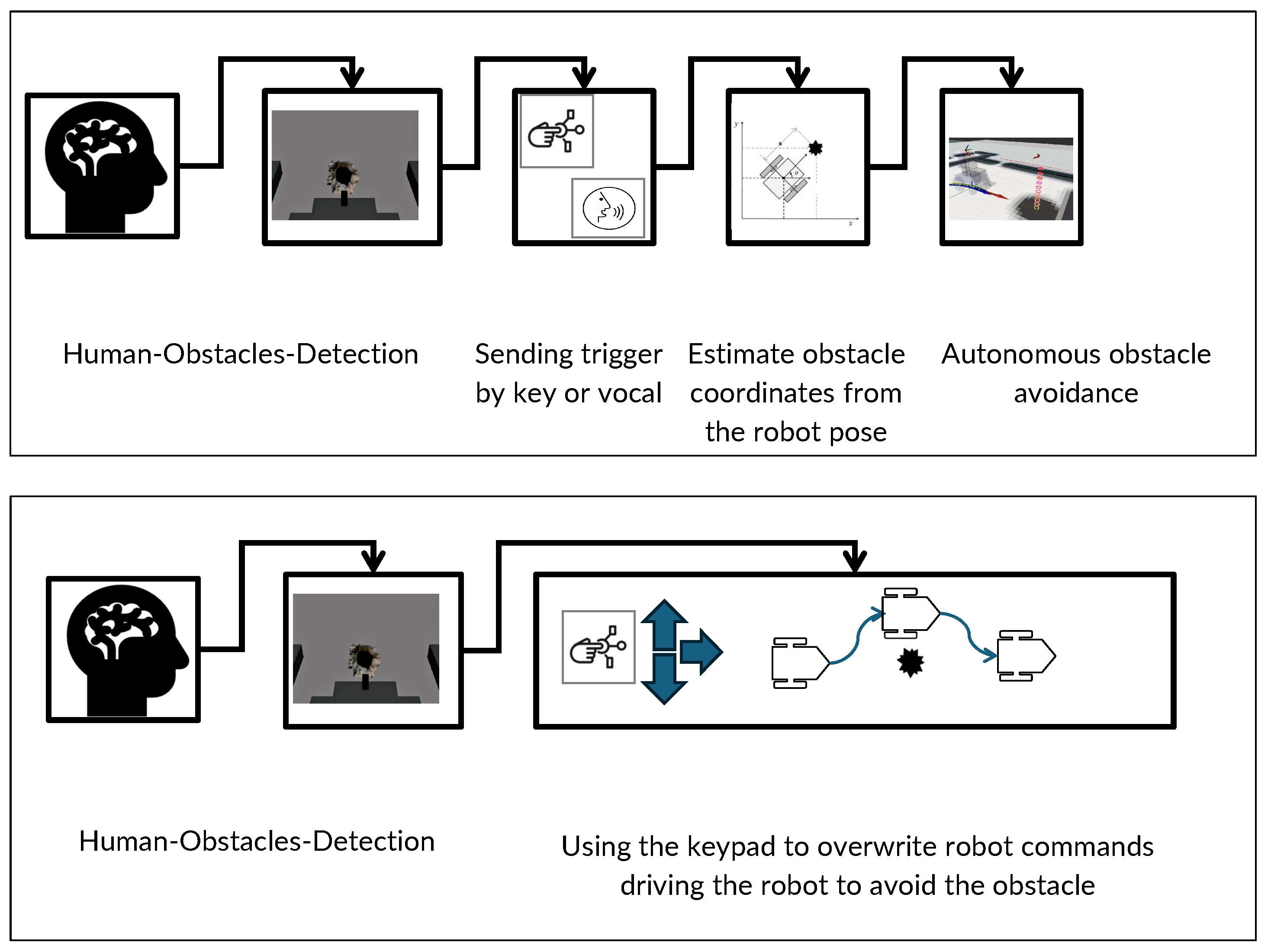

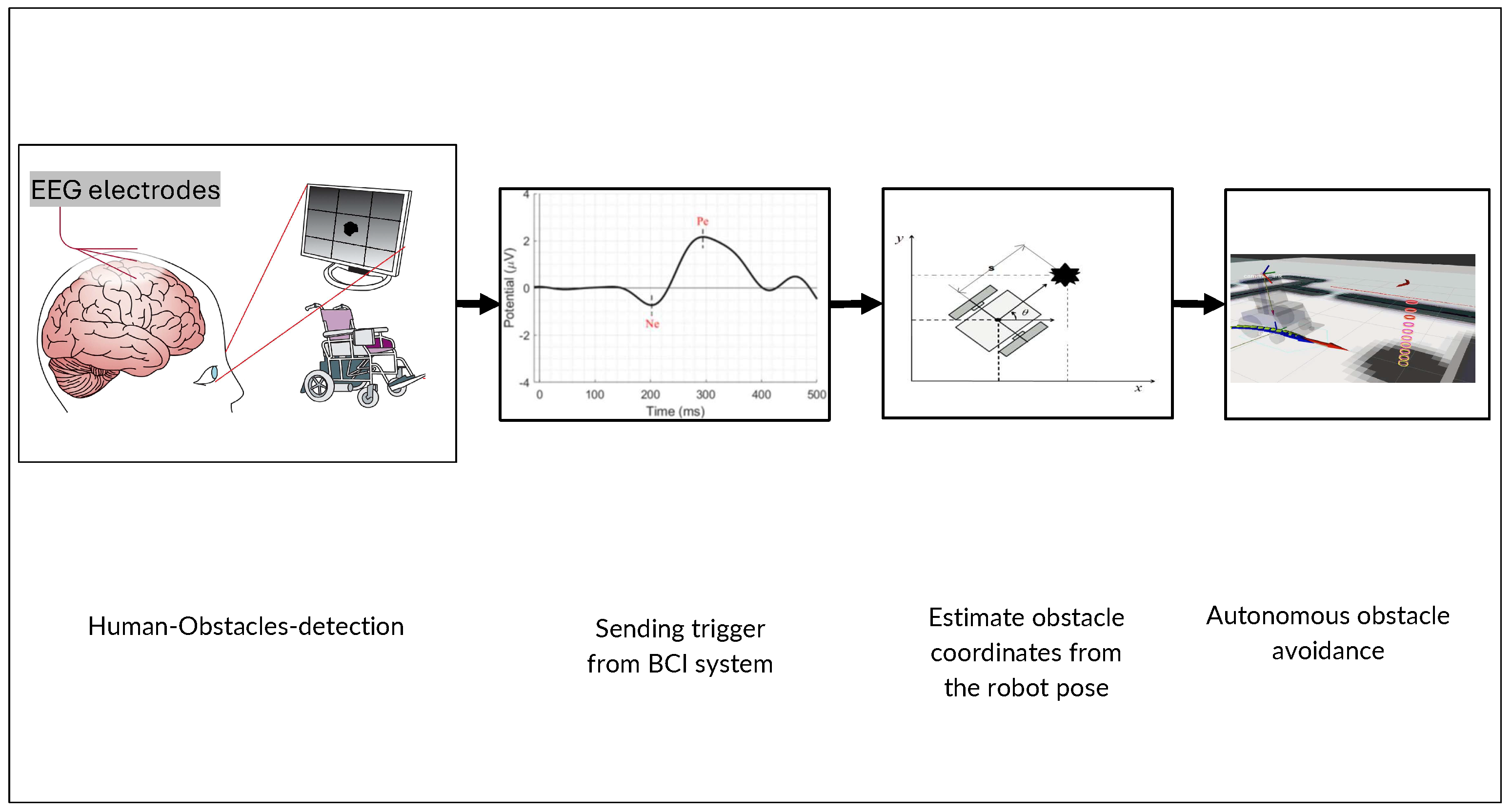

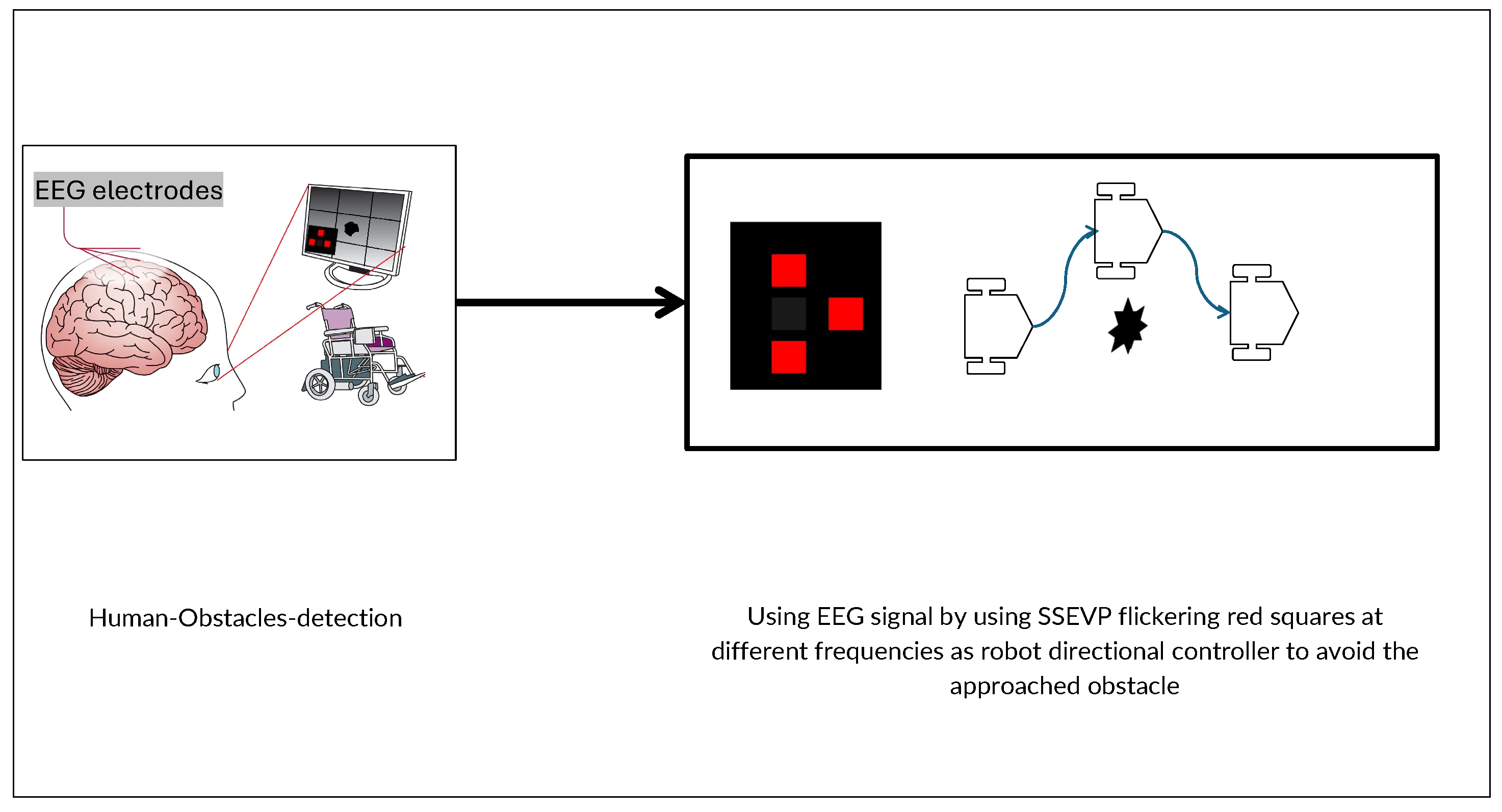

2.1.2. Human-in-the-Loop Approaches

2.1.3. Simulation and Data Integration

2.2. BCI System and Protocol

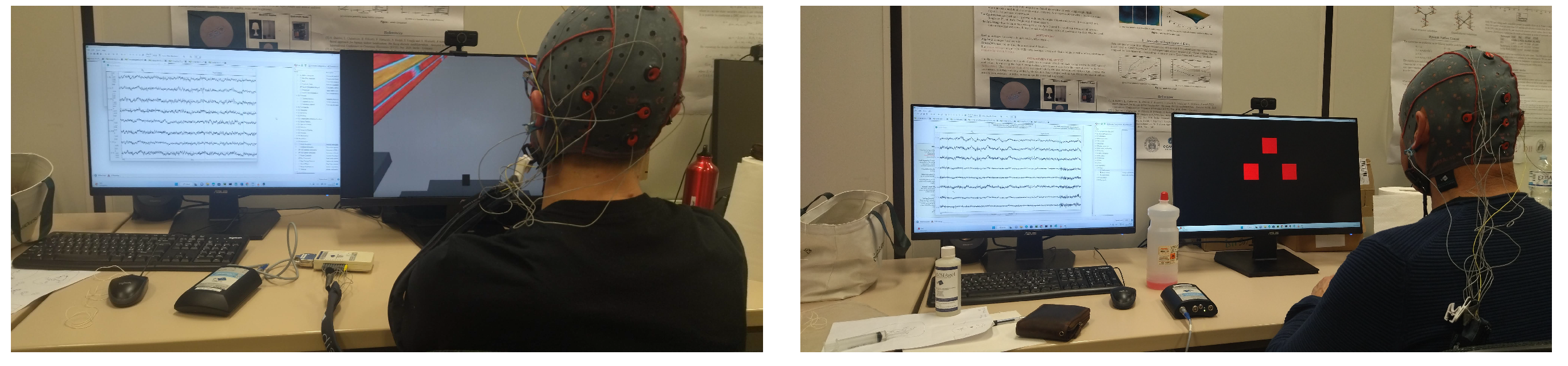

2.2.1. Protocol Description and Experimental Setup

2.2.2. Passive BCI (ErrP)

2.2.3. Active BCI (SSVEP)

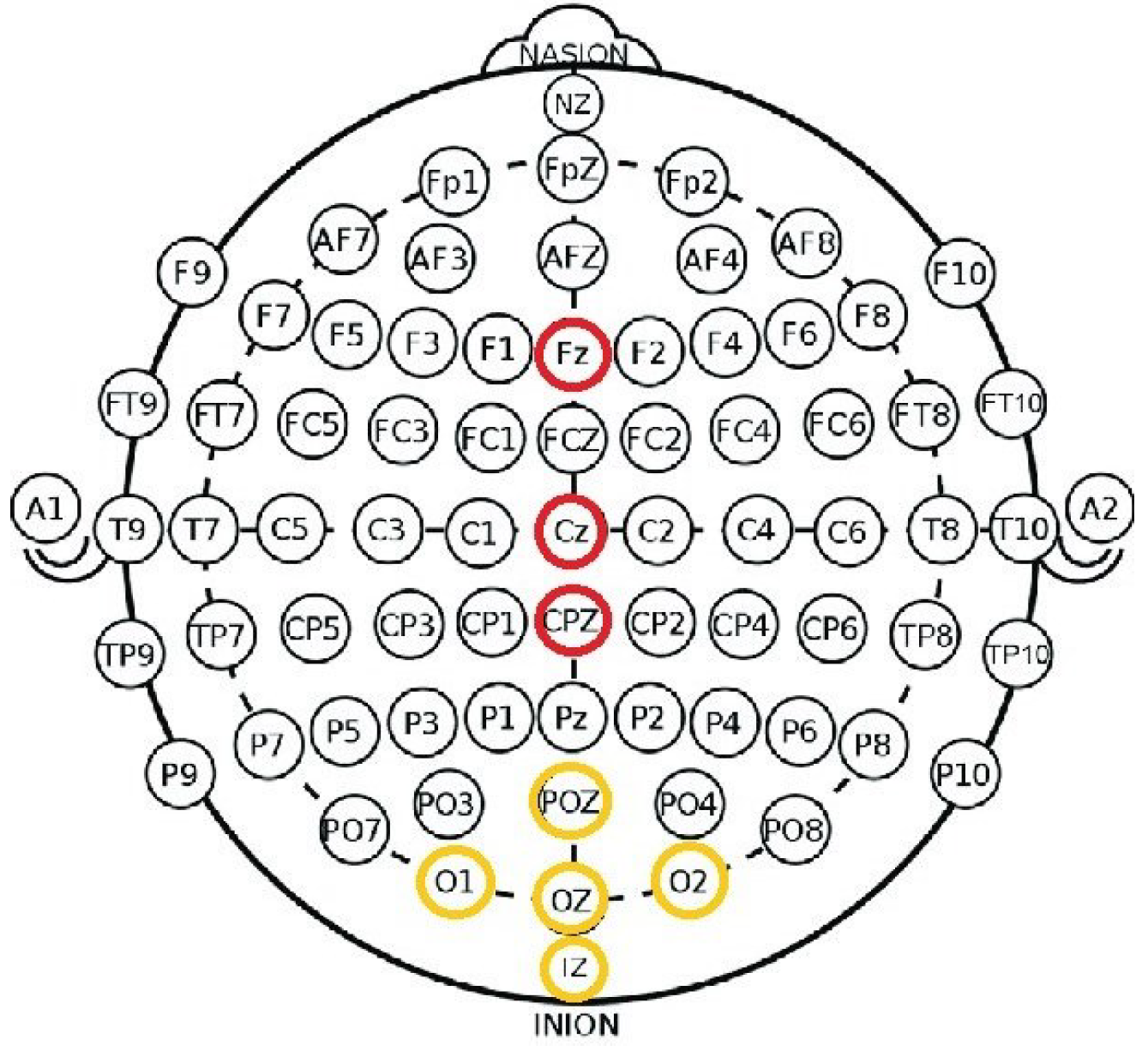

2.2.4. Data Acquisition and Pre-Processing

2.2.5. Data Processing

2.2.6. Mental Load Index

3. Results

3.1. Classification Results

3.2. Task Achievement

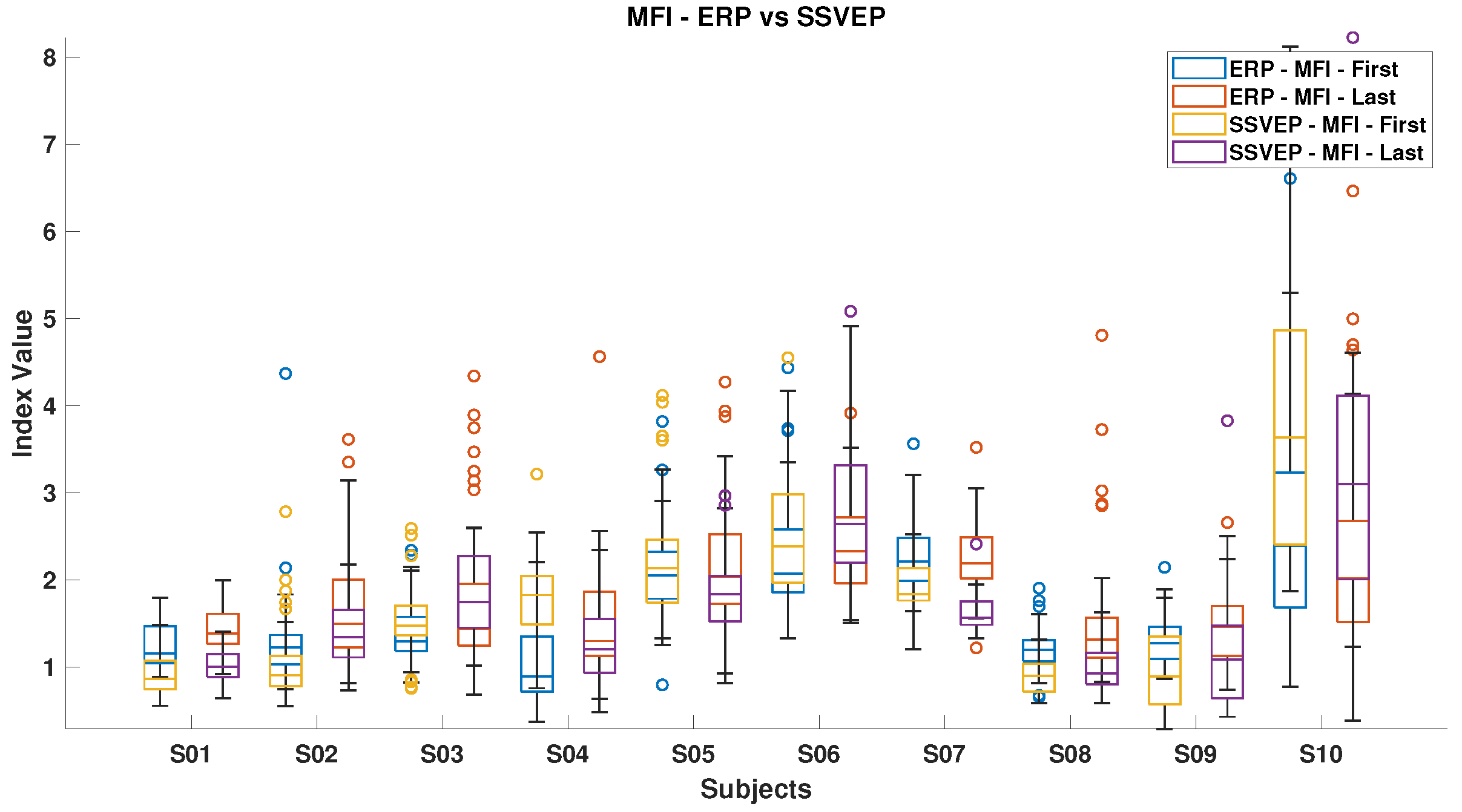

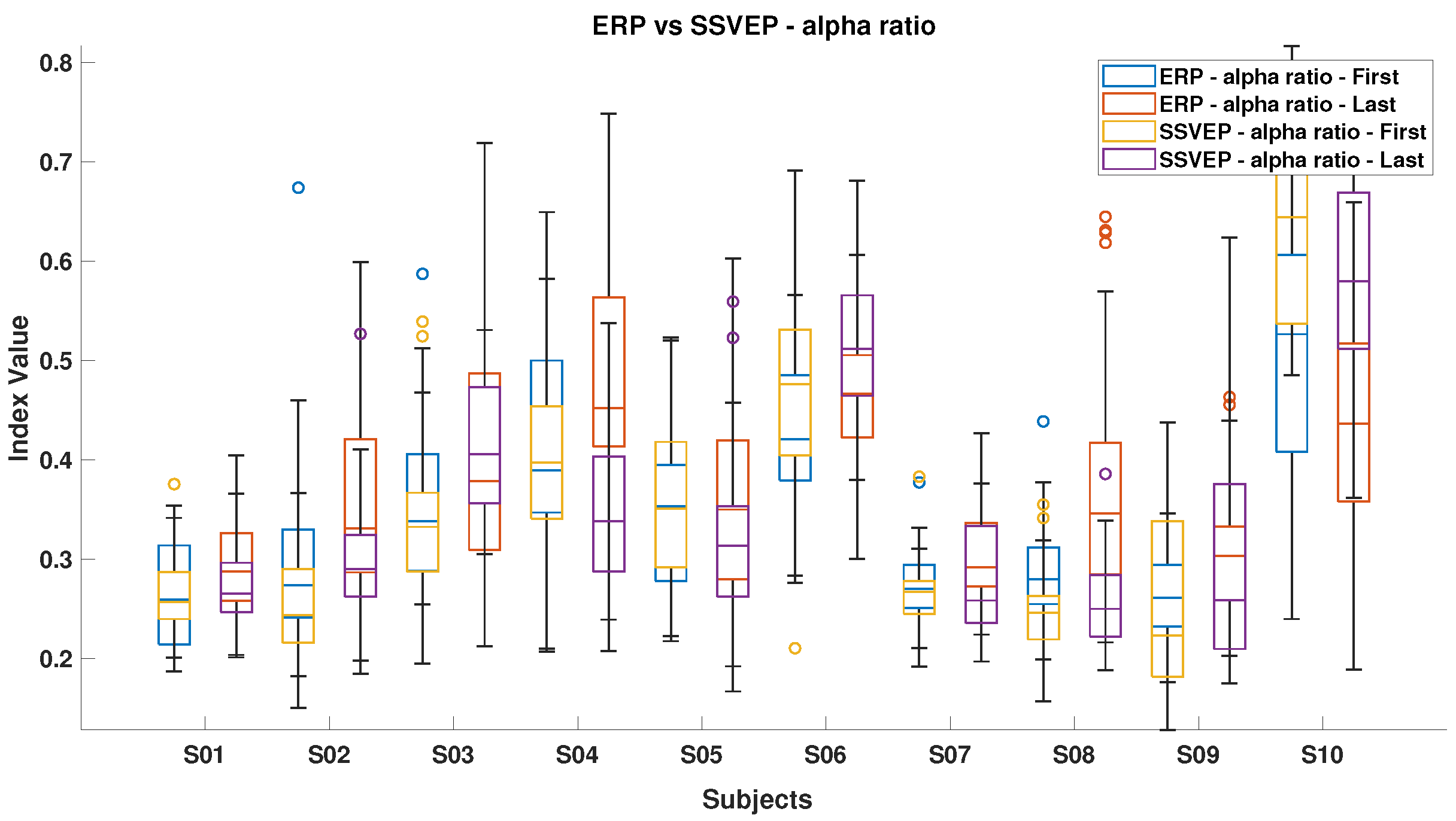

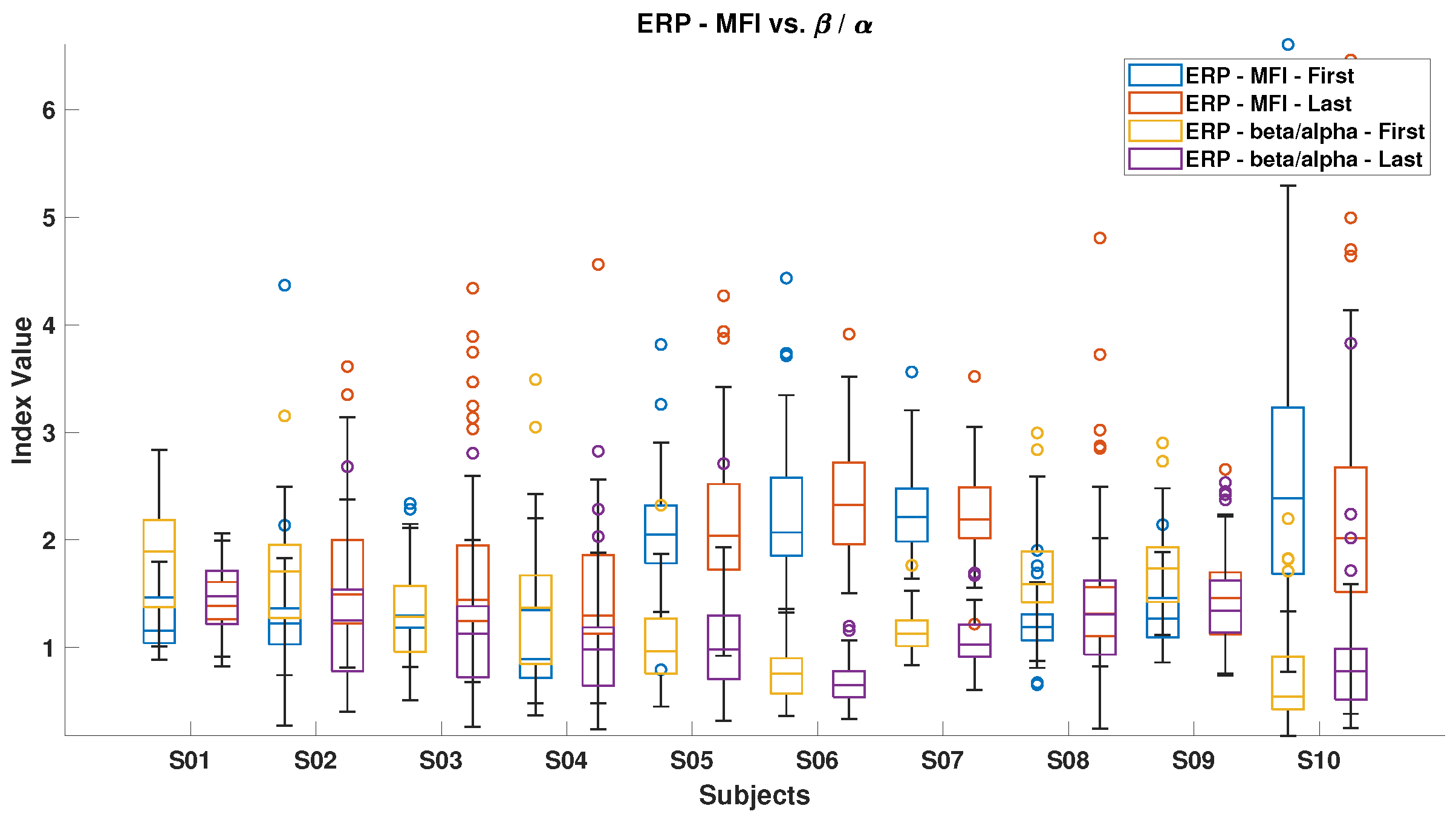

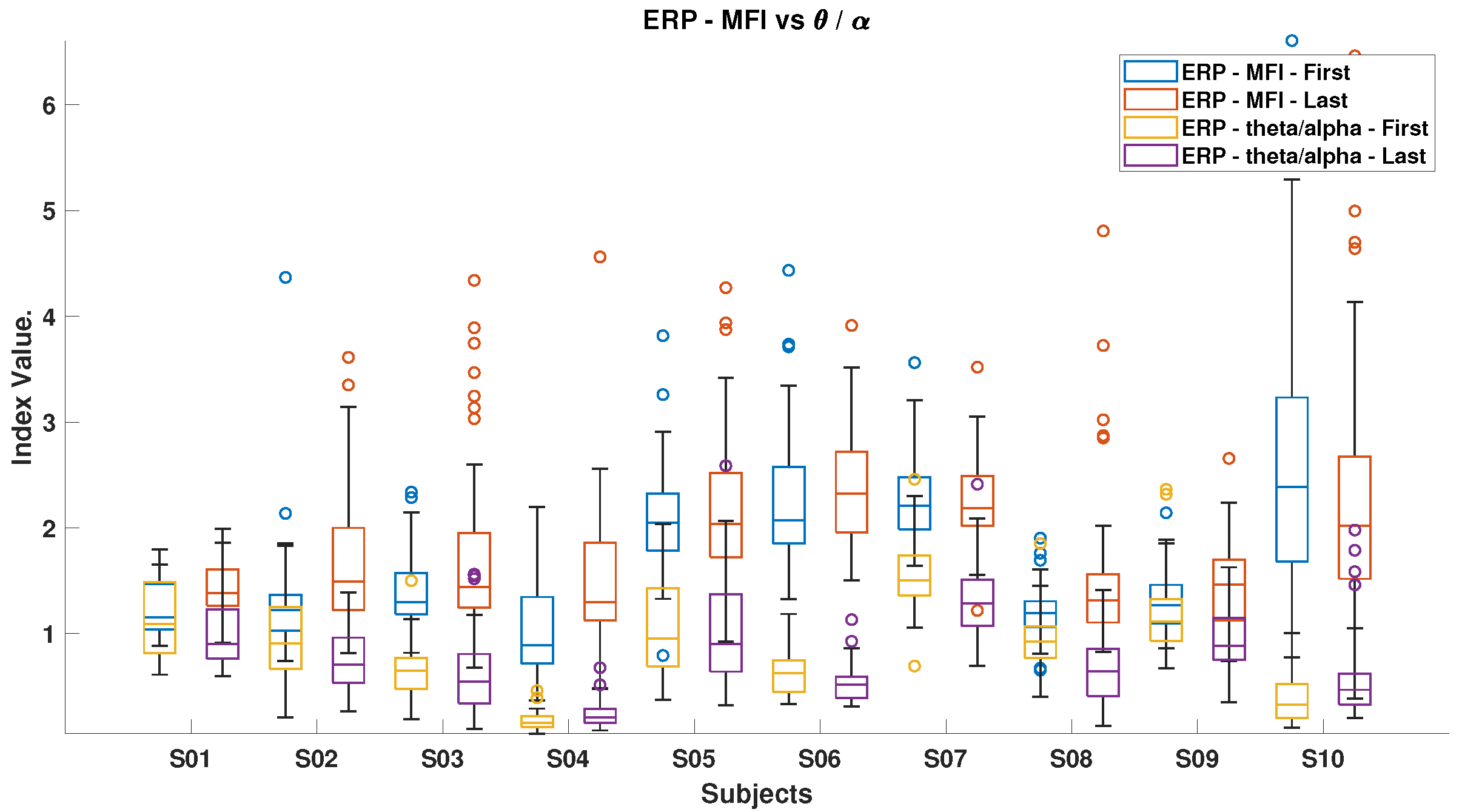

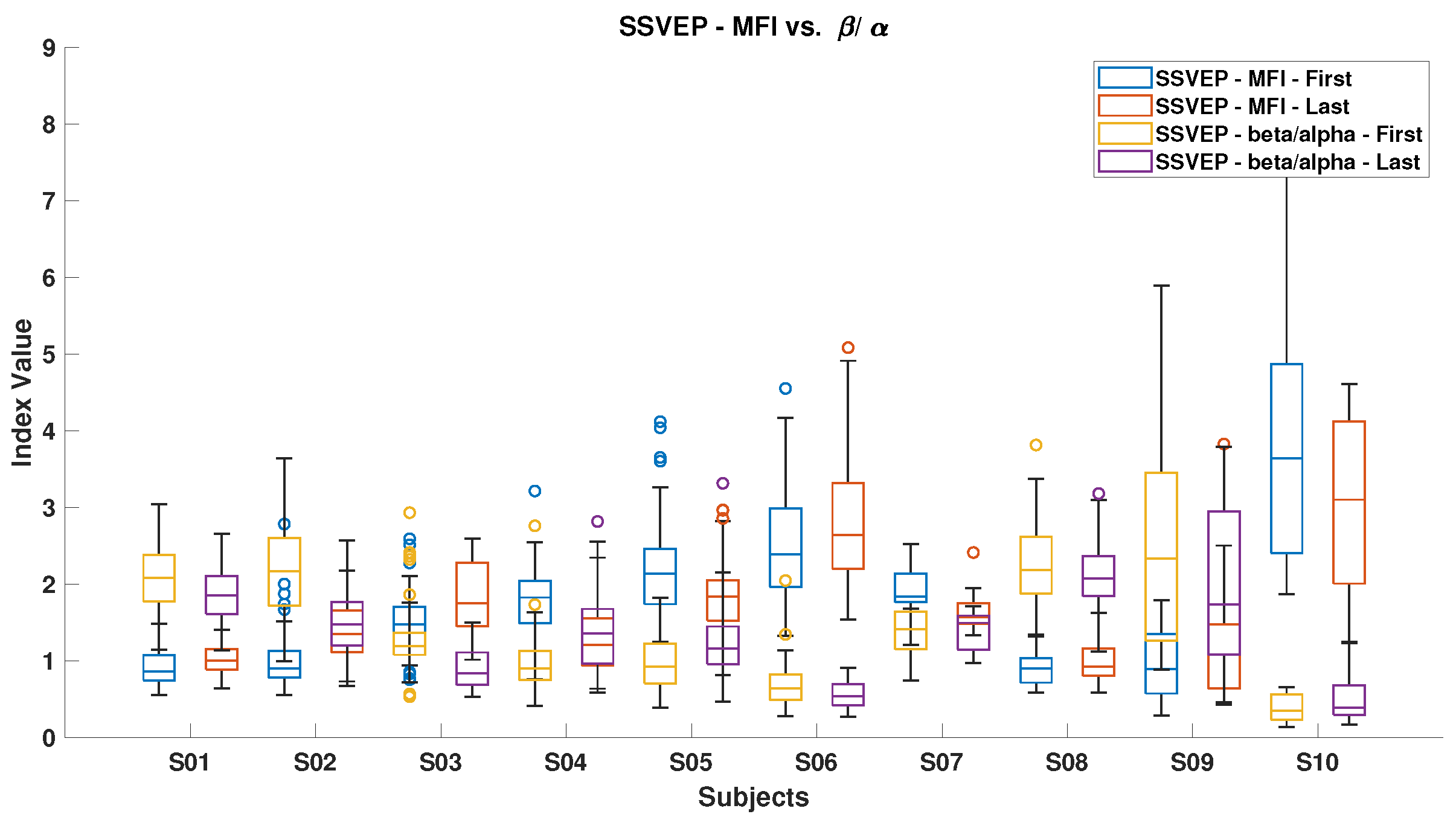

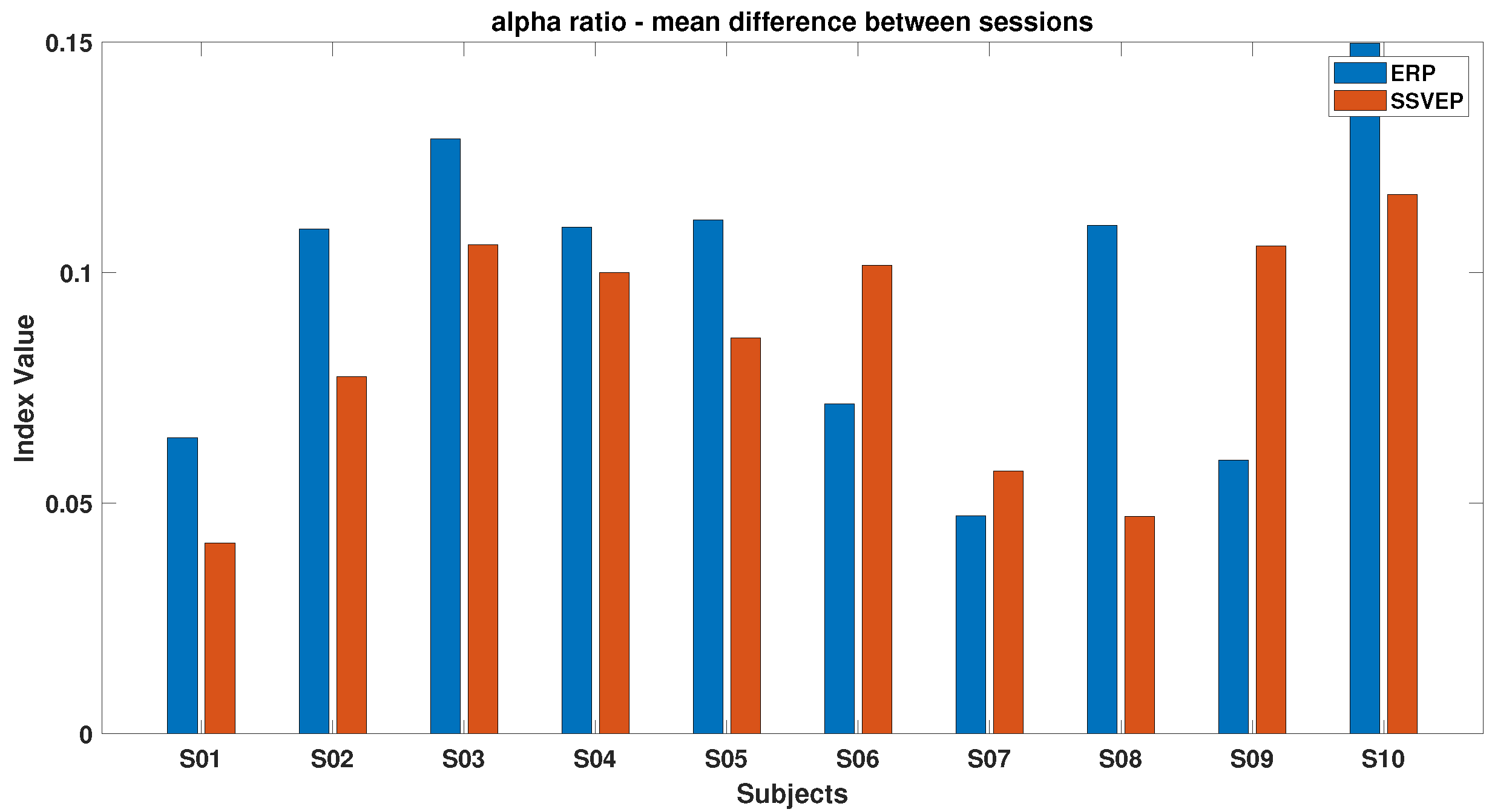

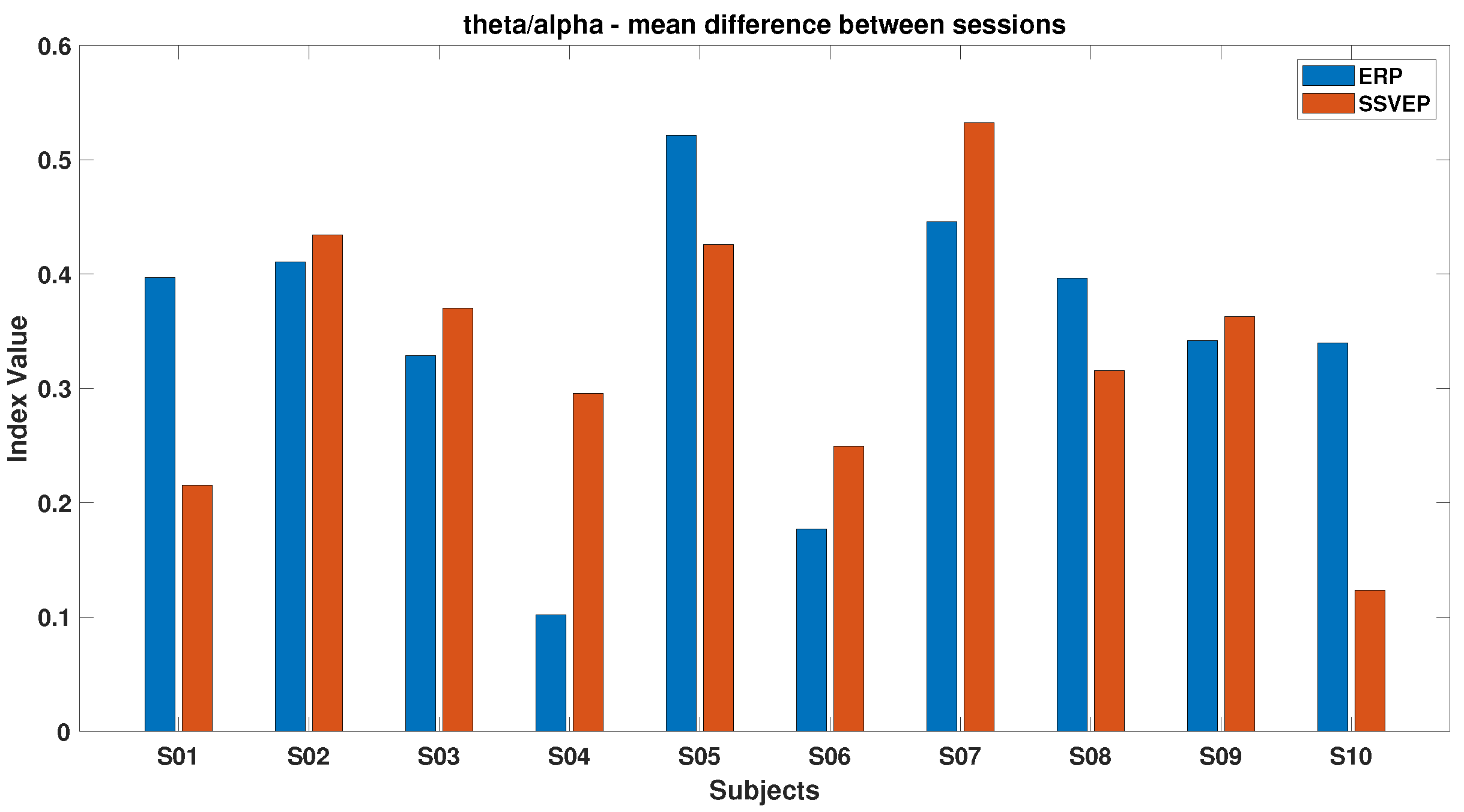

3.3. Mental Load Assessment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Band | Frequency (Hz) | Amplitude (µV) | Location | Activity |
|---|---|---|---|---|
| Delta | 0.5–4 | 100–200 | Frontal | Deep sleep |
| Theta | 4–8 | 5–10 | Various | Drowsiness, light sleep |
| Alpha | 8–13 | 20–80 | Posterior region of head | Relaxed |
| Beta | 13–30 | 1–5 | Symmetrical distribution, most evident frontally | Active thinking, alert |
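
The band limits in the table above can be expressed as a small lookup. The following is a minimal sketch, not part of the original study: the names `EEG_BANDS` and `band_of` are hypothetical, and the half-open interval convention (a shared edge such as 8 Hz goes to the higher band) is an assumption, since the table does not say how boundary frequencies are assigned.

```python
from typing import Optional

# Band edges in Hz, copied from the table above: (name, lower, upper).
EEG_BANDS = [
    ("Delta", 0.5, 4.0),
    ("Theta", 4.0, 8.0),
    ("Alpha", 8.0, 13.0),
    ("Beta", 13.0, 30.0),
]


def band_of(frequency_hz: float) -> Optional[str]:
    """Return the band name for a frequency, or None if no band matches.

    Assumption: each band is treated as half-open [lower, upper), so a
    shared edge such as 8 Hz is assigned to the higher band (Alpha) and
    exactly 30 Hz falls outside the table.
    """
    for name, lower, upper in EEG_BANDS:
        if lower <= frequency_hz < upper:
            return name
    return None


if __name__ == "__main__":
    for f in (2.0, 6.0, 10.0, 20.0, 45.0):
        print(f, band_of(f))
```

Under a different boundary convention (for example, closed upper edges) only the comparison inside `band_of` would change.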

| Subject | Average Classification Accuracy (%) |
|---|---|
| S1 | 57.6 |
| S2 | 72.9 |
| S3 | 51.6 |
| S4 | 52.0 |
| S5 | 53.8 |
| S6 | 59.6 |
| S7 | 58.5 |
| S8 | 51.0 |
| S9 | 46.0 |
| S10 | 53.8 |

| Subject | Average Classification Accuracy (%) |
|---|---|
| S1 | 84.9 |
| S2 | 75.7 |
| S3 | 77.6 |
| S4 | 76.2 |
| S5 | 77.5 |
| S6 | 75.1 |
| S7 | 78.9 |
| S8 | 78.2 |
| S9 | 68.7 |
| S10 | 75.3 |
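
A group-level summary of the two classification accuracy tables above can be computed directly from the per-subject values. This is an illustrative sketch only: the tables carry no captions in this extract, so the variable names `first_table` and `second_table` are neutral placeholders rather than paradigm labels, and the helper `summarize` is hypothetical.

```python
from statistics import mean

# Per-subject average classification accuracy (%), S1..S10,
# copied in order from the two tables above.
first_table = [57.6, 72.9, 51.6, 52.0, 53.8, 59.6, 58.5, 51.0, 46.0, 53.8]
second_table = [84.9, 75.7, 77.6, 76.2, 77.5, 75.1, 78.9, 78.2, 68.7, 75.3]


def summarize(values):
    """Return the mean (rounded to 2 decimals), minimum, and maximum."""
    return {
        "mean": round(mean(values), 2),
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    print("first table: ", summarize(first_table))
    print("second table:", summarize(second_table))
```

The rounded means come out at 55.68% for the first table and 76.81% for the second.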

| Subject | Active Task Achievement Accuracy | Passive Task Achievement Accuracy |
|---|---|---|
| S1 | 61 | 57 |
| S2 | 43 | 71 |
| S3 | 47 | 51 |
| S4 | 44 | 51 |
| S5 | 47 | 53 |
| S6 | 42 | 59 |
| S7 | 49 | 58 |
| S8 | 48 | 50 |
| S9 | 32 | 45 |
| S10 | 43 | 53 |
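
The active and passive task achievement columns above can be compared subject by subject. The sketch below is a descriptive comparison only, not a statistical test and not the authors' analysis; the names `TASK_ACHIEVEMENT` and `compare` are hypothetical.

```python
from statistics import mean

# subject -> (active, passive) task achievement accuracy,
# copied from the table above.
TASK_ACHIEVEMENT = {
    "S1": (61, 57), "S2": (43, 71), "S3": (47, 51), "S4": (44, 51),
    "S5": (47, 53), "S6": (42, 59), "S7": (49, 58), "S8": (48, 50),
    "S9": (32, 45), "S10": (43, 53),
}


def compare(table):
    """Return column means, the mean paired difference (passive minus
    active), and the subjects whose passive score exceeds their active one."""
    active = [a for a, _ in table.values()]
    passive = [p for _, p in table.values()]
    diffs = [p - a for a, p in table.values()]
    higher_passive = [s for s, (a, p) in table.items() if p > a]
    return {
        "mean_active": mean(active),
        "mean_passive": mean(passive),
        "mean_diff": mean(diffs),
        "higher_passive": higher_passive,
    }


if __name__ == "__main__":
    result = compare(TASK_ACHIEVEMENT)
    print(result)
```

On these values the passive column averages 54.8 against 45.6 for the active column, and S1 is the only subject whose active score is the higher of the two.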


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Omer, K.; Ferracuti, F.; Freddi, A.; Iarlori, S.; Vella, F.; Monteriù, A. Real-Time Mobile Robot Obstacles Detection and Avoidance Through EEG Signals. Brain Sci. 2025, 15, 359. https://doi.org/10.3390/brainsci15040359
