Cross-Modal Interactions and Movement-Related Tactile Gating: The Role of Vision

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Design and Procedure

2.3. Data Analysis

Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Parise, C.V.; Ernst, M.O. Noise, Multisensory Integration, and Previous Response in Perceptual Disambiguation. PLoS Comput. Biol. 2017, 13, e1005546. [Google Scholar] [CrossRef] [PubMed]

- Ernst, M.O.; Banks, M.S.; Bülthoff, H.H. Touch Can Change Visual Slant Perception. Nat. Neurosci. 2000, 3, 69–73. [Google Scholar] [CrossRef]

- Pomper, U.; Brincker, J.; Harwood, J.; Prikhodko, I.; Senkowski, D. Taking a Call Is Facilitated by the Multisensory Processing of Smartphone Vibrations, Sounds, and Flashes. PLoS ONE 2014, 9, e103238. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Keil, J. Double Flash Illusions: Current Findings and Future Directions. Front. Neurosci. 2020, 14, 298. [Google Scholar] [CrossRef] [PubMed]

- Mozolic, J.L.; Hugenschmidt, C.E.; Peiffer, A.M.; Laurienti, P.J. Modality-Specific Selective Attention Attenuates Multisensory Integration. Exp. Brain Res. 2008, 184, 39–52. [Google Scholar] [CrossRef]

- Gori, M.; Del Viva, M.; Sandini, G.; Burr, D.C. Young Children Do Not Integrate Visual and Haptic Form Information. Curr. Biol. 2008, 18, 694–698. [Google Scholar] [CrossRef]

- Nardini, M.; Jones, P.; Bedford, R.; Braddick, O. Development of Cue Integration in Human Navigation. Curr. Biol. 2008, 18, 689–693. [Google Scholar] [CrossRef] [PubMed]

- Brandwein, A.B.; Foxe, J.J.; Russo, N.N.; Altschuler, T.S.; Gomes, H.; Molholm, S. The Development of Audiovisual Multisensory Integration across Childhood and Early Adolescence: A High-Density Electrical Mapping Study. Cereb. Cortex 2011, 21, 1042–1055. [Google Scholar] [CrossRef]

- Nardini, M.; Begus, K.; Mareschal, D. Multisensory Uncertainty Reduction for Hand Localization in Children and Adults. J. Exp. Psychol. Hum. Percept. Perform. 2013, 39, 773–787. [Google Scholar] [CrossRef]

- Petrini, K.; Remark, A.; Smith, L.; Nardini, M. When Vision Is Not an Option: Children’s Integration of Auditory and Haptic Information Is Suboptimal. Dev. Sci. 2014, 17, 376–387. [Google Scholar] [CrossRef]

- Alais, D.; Burr, D. The Ventriloquist Effect Results from Near-Optimal Bimodal Integration. Curr. Biol. 2004, 14, 257–262. [Google Scholar] [CrossRef] [PubMed]

- Hötting, K.; Röder, B. Hearing Cheats Touch, but Less in Congenitally Blind Than in Sighted Individuals. Psychol. Sci. 2004, 15, 60–64. [Google Scholar] [CrossRef] [PubMed]

- Occelli, V.; Bruns, P.; Zampini, M.; Röder, B. Audiotactile Integration Is Reduced in Congenital Blindness in a Spatial Ventriloquism Task. Neuropsychologia 2012, 50, 36–43. [Google Scholar] [CrossRef]

- Crollen, V.; Lazzouni, L.; Rezk, M.; Bellemare, A.; Lepore, F.; Collignon, O. Visual Experience Shapes the Neural Networks Remapping Touch into External Space. J. Neurosci. 2017, 37, 10097–10103. [Google Scholar] [CrossRef]

- Crollen, V.; Spruyt, T.; Mahau, P.; Bottini, R.; Collignon, O. How Visual Experience and Task Context Modulate the Use of Internal and External Spatial Coordinate for Perception and Action. J. Exp. Psychol. Hum. Percept. Perform. 2019, 45, 354–362. [Google Scholar] [CrossRef]

- Collignon, O.; Charbonneau, G.; Lassonde, M.; Lepore, F. Early Visual Deprivation Alters Multisensory Processing in Peripersonal Space. Neuropsychologia 2009, 47, 3236–3243. [Google Scholar] [CrossRef] [PubMed]

- Chapman, C.E.; Tremblay, F.; Ageranioti-Bélanger, S.A. Role of Primary Somatosensory Cortex in Active and Passive Touch. In Hand and Brain; Wing, A.M., Haggard, P., Flanagan, J., Eds.; Academic Press, Inc.: San Diego, CA, USA, 1996; pp. 329–347. [Google Scholar]

- Chapman, C.E. Active versus Passive Touch: Factors Influencing the Transmission of Somatosensory Signals to Primary Sornatosensory Cortex. Can. J. Physiol. Pharmacol. 1994, 72, 558–570. [Google Scholar] [CrossRef] [PubMed]

- Kurz, M.J.; Wiesman, A.I.; Coolidge, N.M.; Wilson, T.W. Haptic Exploration Attenuates and Alters Somatosensory Cortical Oscillations. J. Physiol. 2018, 596, 5051–5061. [Google Scholar] [CrossRef]

- Williams, S.R.; Shenasa, J.; Chapman, C.E. Time Course and Magnitude of Movement-Related Gating of Tactile Detection in Humans. I. Importance of Stimulus Location. J. Neurophysiol. 1998, 79, 947–963. [Google Scholar] [CrossRef]

- Gibson, J.J. Psychological Review Observations on Active Touch. Psychol. Rev. 1962, 69, 477–491. [Google Scholar] [CrossRef]

- Cybulska-Klosowicz, A.; Meftah, E.M.; Raby, M.; Lemieux, M.L.; Chapman, C.E. A Critical Speed for Gating of Tactile Detection during Voluntary Movement. Exp. Brain Res. 2011, 210, 291–301. [Google Scholar] [CrossRef] [PubMed]

- Casado-Palacios, M.; Tonelli, A.; Campus, C.; Gori, M. Movement—Related Tactile Gating in Blindness. Sci. Rep. 2023, 13, 16553. [Google Scholar] [CrossRef] [PubMed]

- Gori, M.; Mazzilli, G.; Sandini, G.; Burr, D. Cross-Sensory Facilitation Reveals Neural Interactions between Visual and Tactile Motion in Humans. Front. Psychol. 2011, 2, 55. [Google Scholar] [CrossRef] [PubMed]

- Cuppone, A.V.; Cappagli, G.; Gori, M. Audio-Motor Training Enhances Auditory and Proprioceptive Functions in the Blind Adult. Front. Neurosci. 2019, 13, 1272. [Google Scholar] [CrossRef]

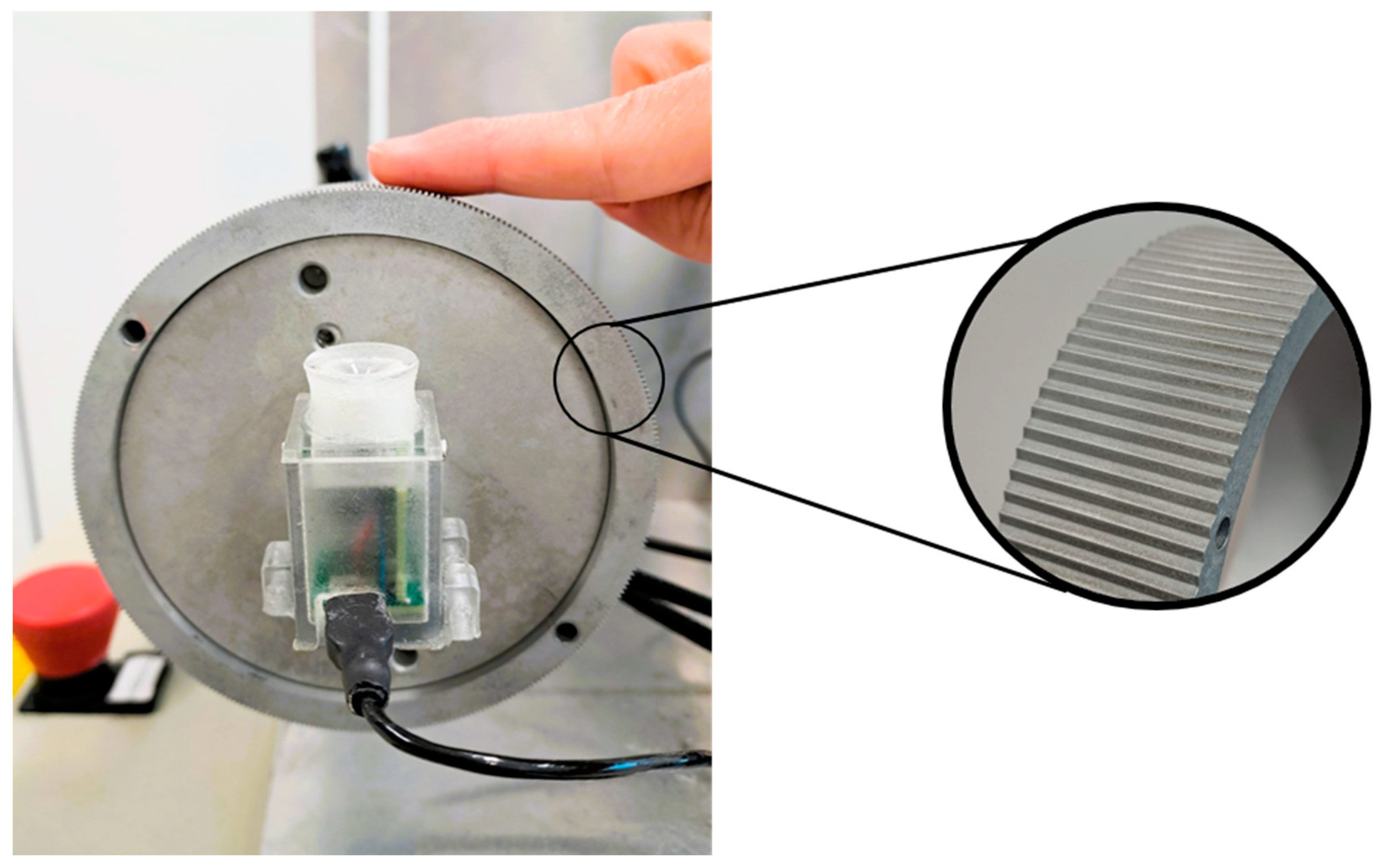

- Gori, M.; Bollini, A.; Maviglia, A.; Amadeo, M.B.; Tonelli, A.; Crepaldi, M.; Campus, C. MSI Caterpillar: An Effective Multisensory System to Evaluate Spatial Body Representation. In Proceedings of the 2019 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Istanbul, Turkey, 26–28 June 2019. [Google Scholar] [CrossRef]

- Watson, A.B.; Pelli, D. QUEST: A Bayesian Adaptive Psychophysical Method. Percept. Psychophys. 1983, 33, 113–120. [Google Scholar] [CrossRef]

- Togoli, I.; Crollen, V.; Arrighi, R.; Collignon, O. The Shared Numerical Representation for Action and Perception Develops Independently from Vision. Cortex 2020, 129, 436–445. [Google Scholar] [CrossRef]

- Efron, B.; Tibshirani, R.J. An Introduction to Bootstrap; Chapman and Hall: London, UK, 1993. [Google Scholar]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2013; Available online: http://www.r-project.org/ (accessed on 7 March 2025).

- Voudouris, D.; Fiehler, K. The Role of Grasping Demands on Tactile Suppression. Hum. Mov. Sci. 2022, 83, 102957. [Google Scholar] [CrossRef]

- Fuehrer, E.; Voudouris, D.; Lezkan, A.; Drewing, K.; Fiehler, K. Tactile Suppression Stems from Specific Sensorimotor Predictions. Proc. Natl. Acad. Sci. USA 2022, 119, e2118445119. [Google Scholar] [CrossRef]

- Voudouris, D.; Broda, M.D.; Fiehler, K. Anticipatory Grasping Control Modulates Somatosensory Perception. J. Vis. 2019, 19, 4. [Google Scholar] [CrossRef]

- Angel, R.W.; Malenka, R.C. Velocity-Dependent Suppression of Cutaneous Sensitivity during Movement. Exp. Neurol. 1982, 77, 266–274. [Google Scholar] [CrossRef]

- Kilteni, K.; Ehrsson, H.H. Predictive Attenuation of Touch and Tactile Gating Are Distinct Perceptual Phenomena. iScience 2022, 25, 104077. [Google Scholar] [CrossRef] [PubMed]

- Chapman, C.E.; Beauchamp, E. Differential Controls over Tactile Detection in Humans by Motor Commands and Peripheral Reafference. J. Neurophysiol. 2006, 96, 1664–1675. [Google Scholar] [CrossRef] [PubMed]

- Gehringer, J.E.; Arpin, D.J.; VerMaas, J.R.; Trevarrow, M.P.; Wilson, T.W.; Kurz, M.J. The Strength of the Movement-Related Somatosensory Cortical Oscillations Differ between Adolescents and Adults. Sci. Rep. 2019, 9, 18520. [Google Scholar] [CrossRef]

- Faisal, A.A.; Selen, L.P.J.; Wolpert, D.M. Noise in the Nervous System. Nat. Rev. Neurosci. 2008, 9, 292–303. [Google Scholar] [CrossRef]

- Sekuler, R.; Sekular, A.B.; Lau, R. Sound Alters Visual Motor Perception. Nature 1997, 385, 308. [Google Scholar] [CrossRef]

- Schirmer, A.; Escoffier, N.; Cheng, X.; Feng, Y.; Penney, T.B. Detecting Temporal Change in Dynamic Sounds: On the Role of Stimulus Duration, Speed, and Emotion. Front. Psychol. 2016, 6, 2055. [Google Scholar] [CrossRef] [PubMed]

- Champoux, F.; Collignon, O.; Bacon, B.A.; Lepore, F.; Zatorre, R.J.; Théoret, H. Early- and Late-Onset Blindness Both Curb Audiotactile Integration on the Parchment-Skin Illusion. Psychol. Sci. 2011, 22, 19–25. [Google Scholar] [CrossRef]

- Amadeo, M.B.; Campus, C.; Gori, M. Impact of Years of Blindness on Neural Circuits Underlying Auditory Spatial Representation. Neuroimage 2019, 191, 140–149. [Google Scholar] [CrossRef]

- Mouchnino, L.; Fontan, A.; Tandonnet, C.; Perrier, J.; Saradjian, A.; Blouin, J.; Simoneau, M. Facilitation of Cutaneous Inputs during the Planning Phase of Gait Initiation. J. Neurophysiol. 2015, 114, 301–308. [Google Scholar] [CrossRef]

| Participant | Gender | Age (years) | Blindness Onset (age in years) | Pathology | Visual Perception |
|---|---|---|---|---|---|
| S1 | F | 22 | 0 | Retinopathy of prematurity | Lights and shadows |
| S2 | F | 34 | 0 | Retinopathy of prematurity | No |
| S3 | F | 50 | 0 | Retinopathy of prematurity | Lights and shadows |
| S4 | F | 32 | 0 | Hereditary retinal dystrophies; Retinitis pigmentosa | Lights and shadows |
| S5 | F | 32 | 0 | Retinopathy of prematurity | No |
| S6 | M | 49 | 0 | Congenital atrophy of the optic nerve | No |
| S7 | M | 52 | 40 | Optic nerve subatrophy for a benign tumor | Lights and shadows |
| S8 | M | 29 | 0 | Leber Amaurosis | No |
| S9 | F | 56 | 35 | Retinitis pigmentosa | Lights and shadows |
| S10 | M | 49 | 6 | Congenital glaucoma | No |
| S11 | M | 33 | 17 | Corneal opacity | No |
| S12 | M | 43 | 26 | Leber Amaurosis; Retinitis pigmentosa | Lights and shadows |
| S13 | F | 43 | 0 | Atrophy of the optic nerve | No |
| S14 | F | 54 | 0 | Retinitis pigmentosa | No |
| S15 | M | 59 | 0 | Glaucoma | No |
| S16 | F | 56 | 46 | Dystrophies primarily involving the retinal pigment epithelium; Leber’s congenital amaurosis | No |
| S17 | M | 33 | 20 | Degenerative oculopathy of both eyes | Light and shadows with strong contrast, even colors, a field of view < 1% |
| S18 | F | 24 | 10 | Alstrom syndrome; Retinitis pigmentosa | Lights and shadows |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Casado-Palacios, M.; Tonelli, A.; Campus, C.; Gori, M. Cross-Modal Interactions and Movement-Related Tactile Gating: The Role of Vision. Brain Sci. 2025, 15, 288. https://doi.org/10.3390/brainsci15030288
