1. Introduction

Brain aneurysms are balloon-like structures that develop due to weaknesses in the walls of cerebral arteries and may lead to serious consequences such as subarachnoid hemorrhage (SAH) in case of rupture [1]. Mortality rates can reach up to 50%, and a significant proportion of survivors suffer from permanent neurological damage [2]. Therefore, early and accurate diagnosis is of critical importance for appropriate treatment planning [3]. Time-of-Flight Magnetic Resonance Angiography (TOF-MRA) is widely used in the screening of intracranial aneurysms, as it does not require contrast agents and provides high contrast resolution [4].

However, manual evaluation of MRA images involves reviewing a large number of slices one by one, which increases the likelihood of missing small aneurysms or those located near bifurcation points [5]. For this reason, automatic and semi-automatic analysis methods can accelerate the diagnostic process, reduce the workload of radiologists, and assist in detecting lesions that might otherwise be overlooked [6].

Traditional image processing methods rely on techniques such as thresholding, edge detection, region growing, and morphological filtering [7]. While these approaches were foundational in early computer-aided detection (CAD) systems, they fall short in complex anatomical regions such as the cerebral vasculature. Specifically, arterial bifurcations, overlapping vessels, and intensity inhomogeneity in TOF-MRA images pose significant challenges. Consequently, such methods often yield a high rate of false positives (FPs) and struggle to reliably detect small aneurysms (typically less than 5 mm in diameter), which are of critical clinical relevance [8].

To overcome these limitations, deep learning-based approaches, particularly convolutional neural networks (CNNs), have been increasingly employed in medical image analysis. Numerous studies have demonstrated that deep learning models not only outperform traditional image processing methods in segmentation tasks but also offer better generalization and classification capabilities across diverse datasets and imaging modalities [9,10]. In the context of aneurysm detection and segmentation, CNN-based architectures have shown promising results, particularly when trained with large, well-annotated datasets. Segmentation-focused architectures such as U-Net [11] and V-Net [12] have been widely adopted in medical imaging applications due to their ability to learn rich contextual features at multiple scales. However, these models perform only segmentation and lack the inherent capability to localize and classify multiple distinct objects, an essential requirement when dealing with multiple aneurysms in a single MRA or CTA volume. In contrast, hybrid architectures that combine object detection and segmentation functionalities, such as Mask R-CNN, are well-suited for this task.

Mask R-CNN, proposed by He et al. [13], extends the object detection framework of Faster R-CNN by adding a parallel branch for predicting segmentation masks [14]. This enables simultaneous bounding box regression, classification, and pixel-level mask generation for each detected region of interest (ROI). In neurovascular imaging tasks, this architecture offers a critical advantage: it can precisely delineate the boundary of each aneurysm while also discriminating between true aneurysms and anatomical variants such as vascular loops or bifurcations. Dai et al. [15] used a Faster R-CNN (region proposal CNN) on CTA maximum-intensity-projection images to detect cerebral aneurysms (311 patients, 352 aneurysms). This 2D slice-based approach achieved 91.8% sensitivity overall, and 96.7% sensitivity for aneurysms >3 mm. The model performed consistently across aneurysm locations and processed each 3D case in 25 s, suggesting practicality for screening CTA [15]. Hwang et al. [16] employed Mask R-CNN (with custom loss functions) to detect and segment intraluminal thrombi in abdominal aortic aneurysms (AAA) on CTA. Using 60 CTA volumes, their method achieved an F1-score of 0.92 for thrombus detection and a high Dice coefficient (0.83) for segmentation. This demonstrates Mask R-CNN’s ability to delineate vascular abnormalities in 3D scans with high accuracy, prioritizing minimal false negatives [16].

In recent years, applications of deep learning in TOF-MRA have demonstrated the potential of automated aneurysm detection systems, particularly in achieving high sensitivity. Ueda et al. [17] employed a patch-based 2D ResNet-18 CNN and reported a sensitivity of approximately 93%. However, neither Dice nor F1 scores were reported, limiting the interpretability of segmentation performance. Stember et al. [18], using a 2D U-Net on MIP images, achieved 98.8% sensitivity, but did not report metrics such as Dice or F1 scores, as all test cases were aneurysm-positive. Sichtermann et al. [19] applied a 3D convolutional neural network based on the DeepMedic architecture to TOF-MRA data comprising 85 cases with 115 annotated aneurysms. Their model achieved an overall sensitivity of 90%, increasing to 96% for aneurysms between 3–7 mm and 100% for aneurysms larger than 7 mm. The study emphasized the importance of pre- and post-processing to enhance model performance. Joo et al. [20] introduced a 3D ResNet model trained on 468 MRA exams, achieving 87.1% sensitivity and 92.0% specificity on the internal test set, along with 85.7% sensitivity and 98.0% specificity on an external dataset. Joo et al. [21] further validated their deep learning model in a retrospective, single-center, confirmatory clinical trial of 332 MRA exams, reporting 91.1% sensitivity and 93.9% specificity at the patient level, and 92.3% sensitivity at the lesion level. Sohn et al. [22] evaluated the clinical benefit of AI-assisted reading in a physician study, observing improved sensitivity (from 73.5% to 86.5%) across all readers, particularly among non-experts, supporting the model’s utility in assisting diagnostic accuracy in clinical settings.

To further improve detection performance, Terasaki et al. [23] proposed a multidimensional CNN (MD-CNN) that integrates both 2D and 3D information. Evaluated on a three-center dataset comprising 559 TOF-MRA scans, the MD-CNN achieved 89% sensitivity on external validation sets, showing better performance compared to conventional 2D and 3D CNNs. Di Noto et al. [24] introduced a weakly supervised 3D CNN that utilized coarse annotations and anatomical priors to reduce labeling effort. Their model reached 83% sensitivity on internal data and 68% on the external MICCAI ADAM dataset, highlighting challenges in generalization across different institutions and scanners.

In the field of computed tomography angiography (CTA), Park et al. [25] developed HeadXNet, a 3D U-Net model that increased radiologists’ sensitivity from 83.1% to 89.0% in a reader study, without affecting specificity or reading time. Similarly, Shi et al. [26] trained a 3D CNN on over 1000 CTA scans and achieved 97.5% sensitivity on internal data, maintaining strong performance during external validation. Their model modestly improved radiologist accuracy, demonstrating the benefit of AI assistance in detecting subtle lesions.

More recently, Bizjak et al. [27] proposed a cross-modal pipeline involving a two-step approach: vessel segmentation using nnU-Net, followed by aneurysm classification on vessel surfaces via PointNet++. Evaluated on over 3200 scans (1054 MRA, 2174 CTA), the model achieved 90% sensitivity on CTA and 85% on MRA. Sensitivity remained high even for aneurysms smaller than 3 mm, emphasizing its robustness and clinical applicability across imaging modalities.

A comparative overview of the most recent deep learning-based studies on intracranial aneurysm detection using TOF-MRA is presented in Table 1. This summary highlights variations in dataset composition, modeling approaches, and performance metrics across the literature, providing context for the effectiveness of the proposed model in this study.

In many previous studies, segmentation performance has primarily been reported in terms of sensitivity, which reflects the model’s ability to correctly identify true aneurysmal cases. This metric is conceptually equivalent to recall in classification terminology, as both measure the proportion of true positives correctly detected. However, sensitivity alone does not account for false positives and may therefore be insufficient, particularly in datasets with class imbalance or in clinical decision support settings where high precision is crucial. For this reason, our study reports not only recall but also precision—which penalizes false positives—along with the F1 score, which balances precision and recall, and the Dice Similarity Coefficient (DSC), which evaluates the spatial accuracy of segmentation. This comprehensive evaluation aims to capture not only the sensitivity of the model but also its accuracy and consistency in a more balanced and clinically meaningful manner.
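The relationship between these metrics can be made concrete with a small sketch. The code below is illustrative only and is not the evaluation code used in this study: it assumes binary masks stored as nested lists of 0/1, and the function names are hypothetical. Note that when precision, recall, F1, and DSC are all computed from the same pixel-level counts, DSC and F1 coincide algebraically; they differ in practice only when F1 is computed at the lesion (detection) level and DSC at the pixel level.

```python
def confusion_counts(pred, truth):
    """Count true positives, false positives, and false negatives
    over two equally sized binary masks (lists of rows of 0/1)."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t and not p:
                fn += 1
    return tp, fp, fn


def precision_recall_f1(tp, fp, fn):
    """Precision penalizes false positives, recall (sensitivity) penalizes
    false negatives, and F1 is their harmonic mean."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def dice(tp, fp, fn):
    """Dice Similarity Coefficient: 2*TP / (2*TP + FP + FN).
    Two empty masks are treated as a perfect match (an assumption)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


# Toy example: one false-positive pixel and one missed pixel.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
tp, fp, fn = confusion_counts(pred, truth)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
dsc = dice(tp, fp, fn)
```

In this toy case a model judged on recall alone would look the same whether or not the extra false-positive pixel were present, which is the limitation that reporting precision and F1 is meant to address.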

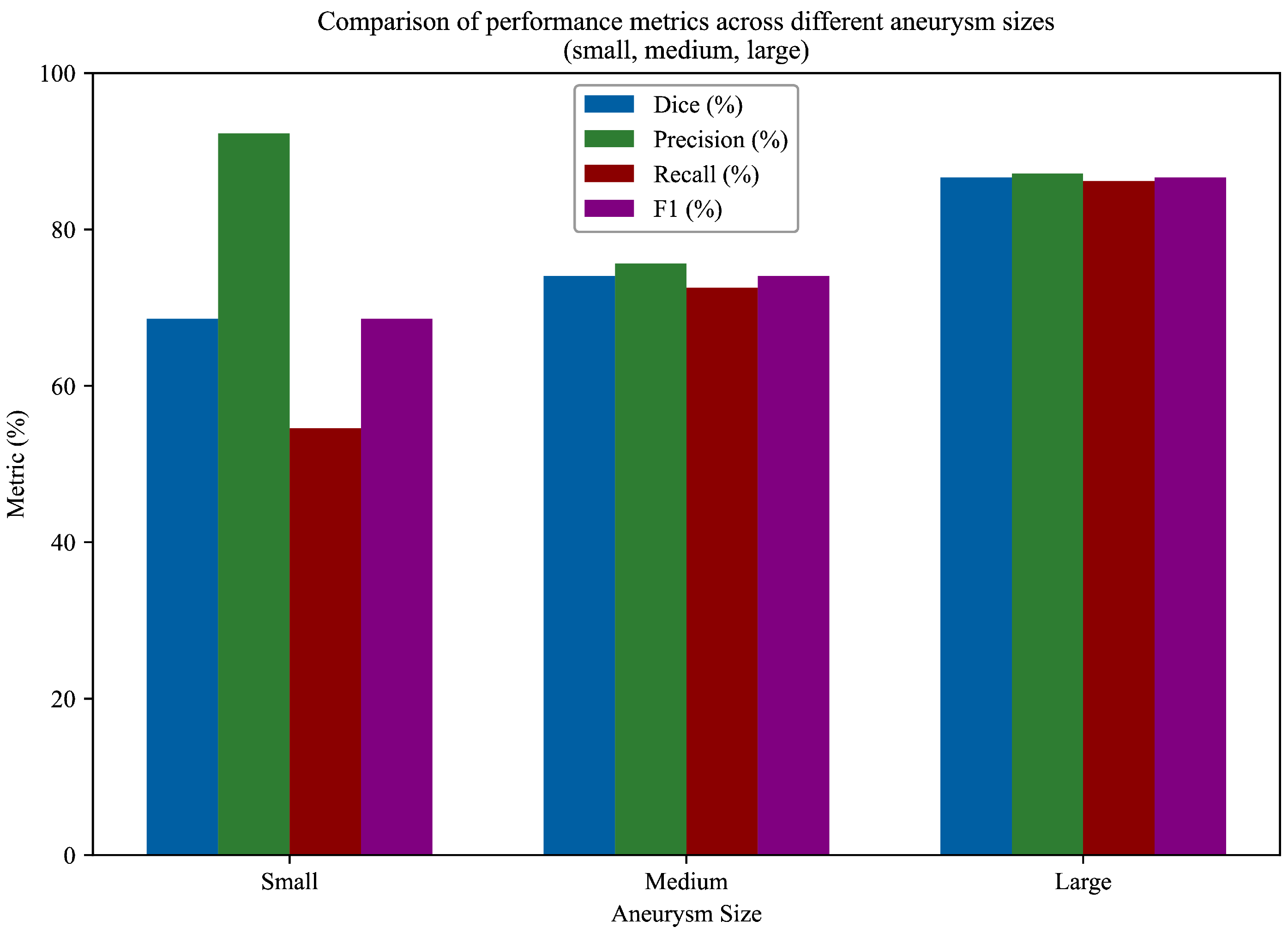

Given these promising developments, the present study aims to evaluate the performance of a Mask R-CNN model trained on 2D slices of 3D TOF-MRA volumes, with hyperparameters optimized using Optuna [28]. Particular attention is given to the ability of the model to segment aneurysms of varying sizes, with quantitative evaluation metrics such as Dice Similarity Coefficient (DSC), Precision, Recall, and F1-score being computed separately for small, medium, and large aneurysms.

To the best of our knowledge, no prior study has explored the application of a Mask R-CNN architecture with a ResNet-50 backbone for intracranial aneurysm detection using TOF-MRA data. This model was selected for its proven capability to perform simultaneous object detection and instance-level segmentation, which is particularly advantageous in cases with multiple or anatomically ambiguous aneurysms. Furthermore, the ResNet-50 backbone offers a favorable trade-off between model depth and computational cost, facilitating robust feature extraction even in complex vascular structures. Although most prior work has focused on segmentation-only architectures or CTA-based datasets, this study demonstrates that Mask R-CNN, when appropriately adapted to 2D slices of TOF-MRA volumes, can effectively localize and delineate aneurysms with competitive performance. Based on these considerations, our primary objective was not to develop a full 3D classification framework, but rather to design a sequence-based, pixel-level approach capable of distinguishing aneurysmal vessels from normal vasculature and accurately segmenting these regions. In particular, we aimed to build a decision-support system that can reliably detect small aneurysms—those most likely to be overlooked in routine clinical interpretation—by leveraging fine-grained 2D representations of TOF-MRA slices.

Mask R-CNN, with its ResNet-50 backbone, brings a two-stage detection-and-segmentation framework to aneurysm tasks. This is unusual in this domain: reviews consistently report that most aneurysm-detection pipelines use encoder–decoder CNNs (especially U-Net variants) for segmentation [29]. By contrast, Mask R-CNN first proposes candidate regions (like a generic object detector) and then refines each into an instance mask. Alternative architectures have been explored: vision–transformer hybrids (e.g., TransUNet) capture global context, and graph convolutional nets have been applied to vascular graphs for artery labeling, but these address different problems (classification or structural labeling rather than pixelwise aneurysm masks). Classical approaches typically involve explicit vessel segmentation via thresholding or model fitting, followed by rule-based lesion detection.

In parallel, recent work has emphasized that deep learning models for neurovascular imaging should provide spatially interpretable outputs and follow rigorous, attention-driven neuroimaging methodology [30,31]. Although our framework does not introduce an explicit attention module, the two-stage, region-proposal-based design of Mask R-CNN naturally focuses computation on a limited set of salient vascular regions and produces pixel-level aneurysm masks. These masks offer anatomically grounded visual evidence that radiologists can inspect alongside the original TOF-MRA images, which is consistent with this attention-focused, interpretable neuroimaging perspective.

Architecturally, Mask R-CNN’s combination of a Region Proposal Network (RPN) and ROIAlign is the key advantage: it treats each aneurysm as a distinct instance, which can help in cases with multiple nearby aneurysms. In practice, Mask R-CNN has shown competitive accuracy. For example, one comparative study reported that, on an aneurysm-like task, Mask R-CNN achieved a detection mAP slightly better than a U-Net baseline and nearly matching a TransUNet. However, this came with a cost: Mask R-CNN ran substantially slower (about 30 frames/s) than a pure U-Net (about 42 frames/s) on the same hardware. In other words, Mask R-CNN can yield high accuracy but has higher computational complexity. Thus, the novelty of our approach lies in adapting this powerful instance-segmentation model to TOF-MRA aneurysm data. Ultimately, its use is justified only if it provides tangible gains over well-tuned CNN or transformer baselines; current evidence suggests that it can be competitive in accuracy, but any improvements must outweigh its extra computational overhead [32].

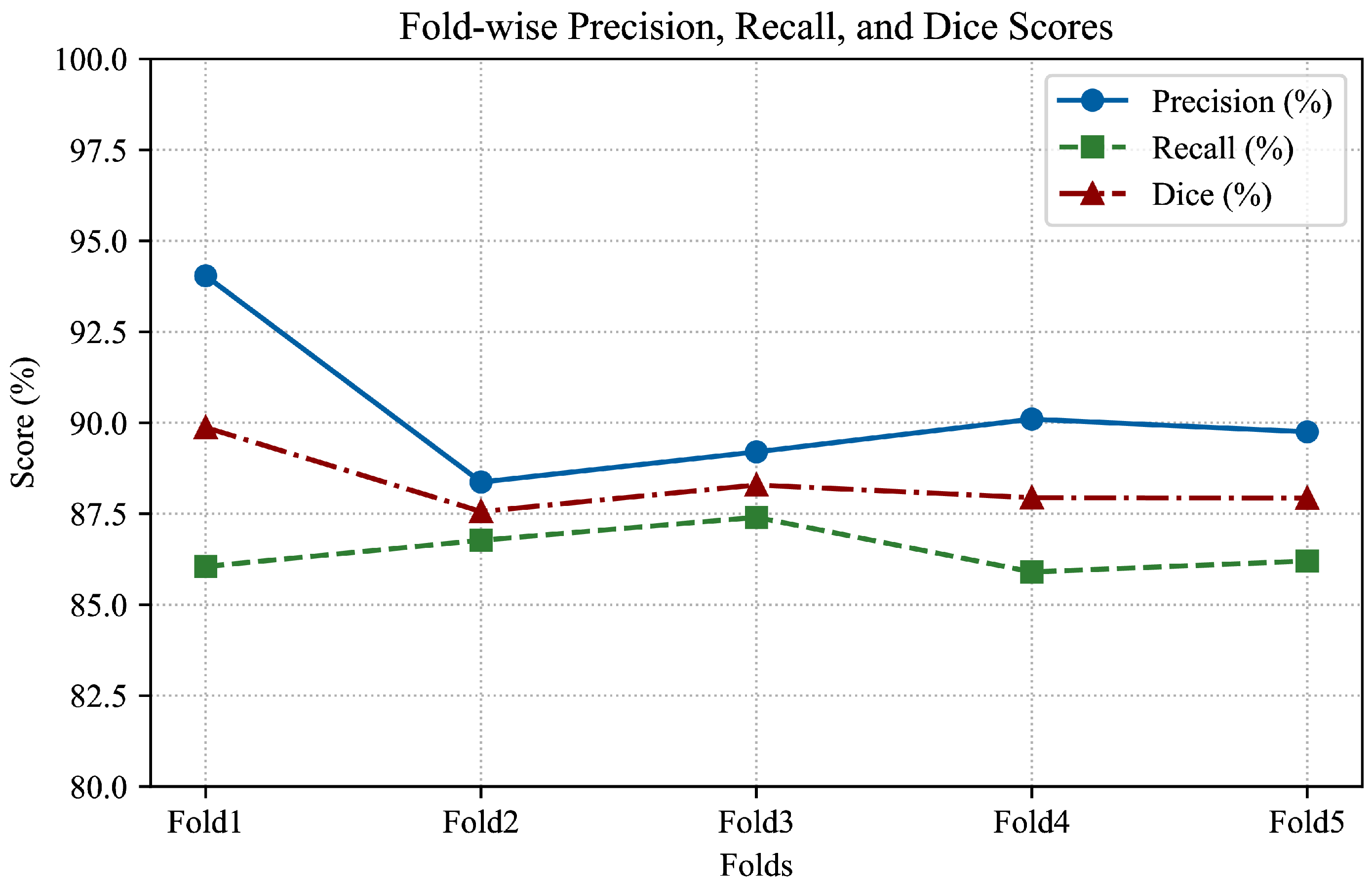

To improve the model’s ability to generalize across different patient data, 5-fold cross-validation was implemented. This approach partitions the dataset into five equally sized subsets (folds), ensuring that each subset is used as a testing set exactly once while the remaining four serve as the training set. Five folds were chosen as a practical compromise between computational efficiency and statistical robustness. Using fewer folds (e.g., 2 or 3) may result in high variance in validation performance, whereas a higher number (e.g., 10) increases training time substantially without a proportionate gain in reliability, particularly in medical datasets where data preprocessing and model training are time-consuming.

For hyperparameter tuning, a Bayesian optimization strategy was adopted using the Optuna framework. This allowed for an efficient exploration of the hyperparameter space, including learning rate, batch size, number of iterations, and decision thresholds, all of which were selected based on validation set performance.

To enhance robustness against image variability, a range of data augmentation techniques were applied to the training data. These included geometric transformations such as rotation, as well as photometric augmentations like brightness and contrast adjustments and the addition of slight Gaussian noise. It is necessary to emphasize that horizontal and vertical flipping were not employed, as preserving anatomical orientation is critical in cerebral imaging tasks.

In addition to the base architecture, two targeted architectural modifications are introduced to improve the detection of small intracranial aneurysms (<3 mm). First, a custom Small Object Aware ROI Head is integrated into the Mask R-CNN framework. This specialized module processes low-area proposals separately using adjusted classification thresholds and, when needed, low-level FPN features to better preserve fine structural details. Second, the Region Proposal Network (RPN) is modified with a refined anchor box configuration, including smaller anchor sizes and broader aspect ratios, alongside optimized IoU thresholds. These enhancements significantly improve the model’s sensitivity and segmentation accuracy for small and hard-to-detect aneurysms.

2. Material and Methods

2.1. Dataset

In this study, two different data sources consisting of Time-of-Flight Magnetic Resonance Angiography (TOF-MRA) images were used for the detection and segmentation of intracranial aneurysms. A total of 161 aneurysmal cases were formed by combining 157 TOF-MRA sequences with aneurysms obtained from the OpenNeuro platform and 4 aneurysmal sequences obtained from Yozgat City Hospital. In addition, 286 healthy TOF-MRA images from Yozgat City Hospital were included in the model as background data for training the Mask R-CNN model.

All images were anonymized, and patient identifiers were removed. The TOF-MRA sequences obtained from Yozgat City Hospital were acquired on a Siemens MAGNETOM Amira 1.5T MRI scanner and were approved by the Ethics Committee of the Yozgat Provincial Directorate of Health and the Ethics Committee of Yozgat Bozok University.

All images obtained from the OpenNeuro platform (dataset: ds003949) were collected between 2010 and 2015 and were approved by the relevant Regional Ethics Committee. The OpenNeuro dataset consists of TOF-MRA scans acquired on a Siemens MAGNETOM Verio 3T MRI scanner, and all data were anonymized according to the Brain Imaging Data Structure (BIDS) standard and publicly shared under the CC0 license [33].

2.2. Manual Segmentation with ITK-SNAP

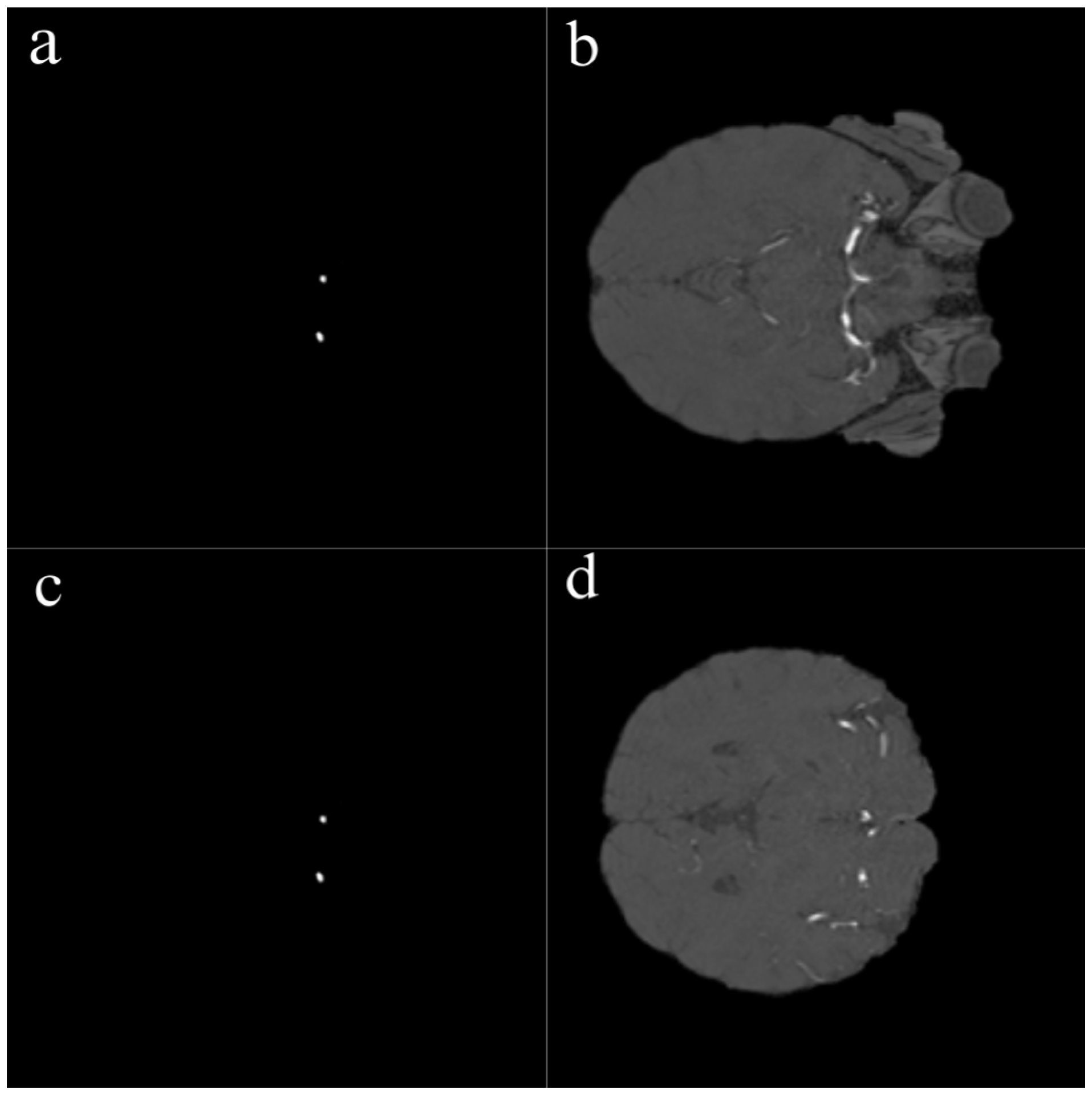

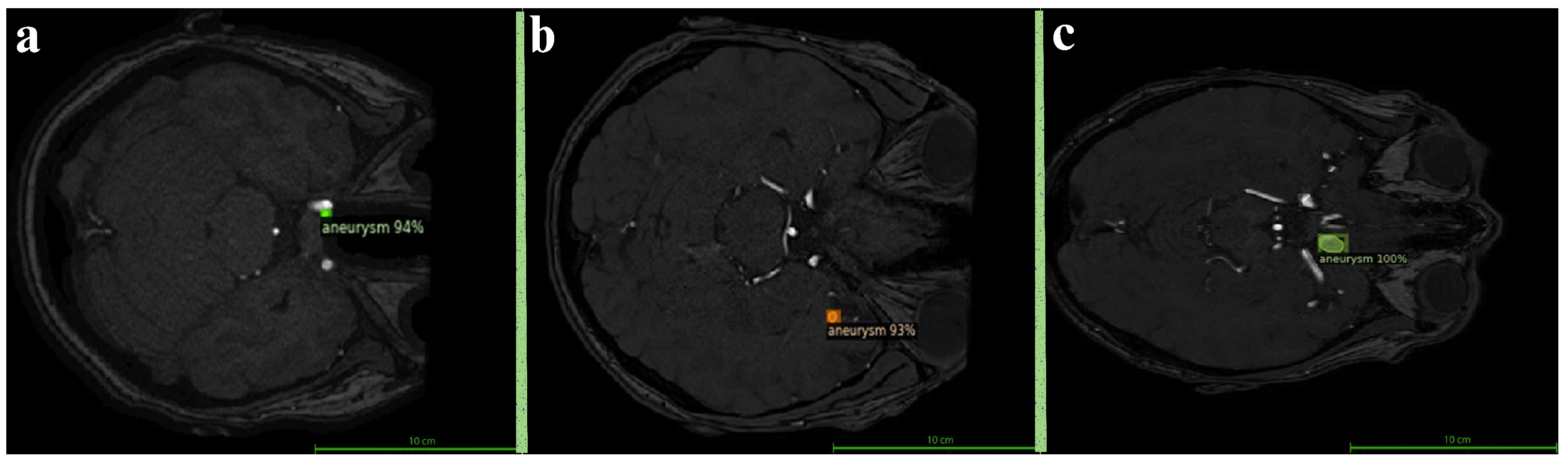

The segmentation of all aneurysmal cases used in this study was performed manually by an expert radiologist using the ITK-SNAP software version 4.2.0. During the segmentation process, each axial slice of the TOF-MRA images was carefully examined, and the boundaries of the aneurysms were manually delineated using the “Polygon Tool.” As a result of this process, accurately defined mask files were generated for each aneurysmal image as illustrated in Figure 1. Among the 290 TOF-MRA scans obtained from Yozgat City Hospital, 286 cases were labeled as “no aneurysm” after a brief screening. The remaining 4 aneurysmal cases were subjected to detailed segmentation and were manually masked. In addition, all 157 aneurysmal TOF-MRA cases obtained from the OpenNeuro platform were re-evaluated by the expert radiologist and manually re-segmented using ITK-SNAP. As a result of this process, a total of 161 aneurysmal and 286 healthy TOF-MRA images were prepared for use in the study.

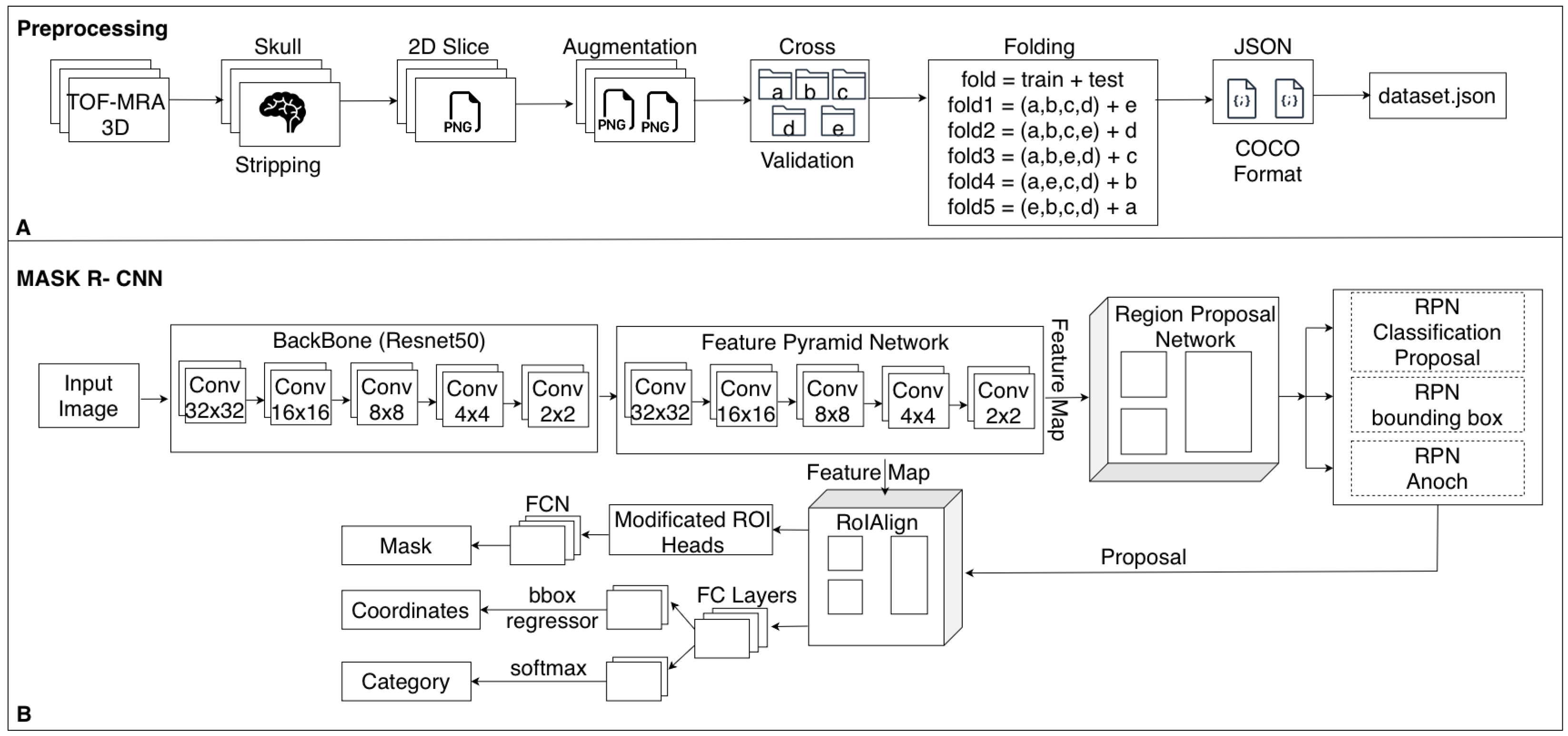

2.3. Preprocessing

In this study, several preprocessing steps were applied to prepare the TOF-MRA images for deep learning. To focus the model on intracranial structures, skull stripping was applied to all TOF-MRA images using FMRIB’s Brain Extraction Tool (BET). By adjusting parameters such as fractional intensity and surface inflation, brain tissue was effectively isolated. Processed images were manually reviewed, and necessary adjustments were made to ensure accurate brain extraction, resulting in a clean dataset suitable for segmentation. The raw data consisted of 3D volumetric brain images stored in NIfTI format (nii/nii.gz). To improve the training performance of the model and standardize the dataset, these volumes were converted into two-dimensional (2D) slices and saved in PNG format. Each image slice was then paired with its corresponding segmentation mask (Figure 2).

In the initial phase, the dataset was organized by creating training, validation, and test subsets. TOF-MRA images with and without aneurysms were separated into corresponding folders. To ensure the accuracy of segmentation procedures, the presence and correctness of mask files corresponding to each image were verified. Any missing or mismatched segmentation files were identified and either corrected or excluded from the dataset if necessary.

One of the most important steps in the preprocessing workflow was the conversion of 3D volumetric TOF-MRA images into two-dimensional slices. Each TOF-MRA sequence was sliced in the axial plane and converted into 2D images, with each slice saved as an individual image file. The same process was applied to the segmentation masks, ensuring that each aneurysmal region was paired with the corresponding 2D image.

To enable more efficient processing by the model, pixel intensity normalization was performed. This step aimed to reduce brightness variations across images, allowing for more consistent model learning. For each image, minimum and maximum pixel values were identified, and a rescaling to the range of 0–255 was applied. The normalization was carried out using the following formula:

$$I_{\text{norm}} = \frac{I - I_{\min}}{I_{\max} - I_{\min}} \times 255$$

where $I$ is the original pixel intensity, $I_{\min}$ and $I_{\max}$ are the minimum and maximum pixel intensities in the image, respectively, and $I_{\text{norm}}$ is the normalized pixel intensity.
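As a minimal sketch of this per-image min-max rescaling (not the study's actual implementation), the function below operates on a slice stored as nested lists. The function name and the handling of a constant-intensity slice, which would otherwise divide by zero, are assumptions made for illustration.

```python
def minmax_to_uint8(image):
    """Rescale a 2D image (list of rows) to integer values in 0-255
    using the image's own minimum and maximum intensity."""
    flat = [value for row in image for value in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Assumption: a constant slice carries no contrast, so map it to 0.
        return [[0 for _ in row] for row in image]
    scale = 255.0 / (hi - lo)
    return [[int(round((value - lo) * scale)) for value in row] for row in image]


# Hypothetical raw intensities from one slice.
normalized = minmax_to_uint8([[10, 20],
                              [30, 50]])
```

Because the minimum and maximum are taken per image, the darkest pixel of every slice maps to 0 and the brightest to 255, regardless of the scanner's original intensity range.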

In the final step of the preprocessing pipeline, all generated images and their corresponding segmentation masks were saved into designated directories and organized for use during model training. As a result, a processed and normalized dataset was created from the raw TOF-MRA data, making it suitable for training the deep learning model.

2.4. Data Augmentation

In this study, various data augmentation techniques were applied to enable better generalization of the deep learning model on TOF-MRA images. Expanding the dataset helps the model learn different variations, reduces the risk of overfitting, and improves its ability to detect small aneurysms.

The OpenCV library was used to perform image transformations and manipulations during the augmentation process. These transformations were applied not only to the images but also to their corresponding masks to maintain data consistency.

One of the primary techniques used was rotation. To increase the ability of the model to detect aneurysms from different angles, each image was rotated by a certain degree. Rotation was performed around the image center to avoid distortion of the original content and corresponding masks, and interpolation was used to minimize information loss. The rotation was applied using the following transformation matrix:

$$M = \begin{bmatrix} \cos\theta & -\sin\theta & t_x \\ \sin\theta & \cos\theta & t_y \end{bmatrix}$$

where $\theta$ is the rotation angle, and $t_x$, $t_y$ are the translation terms used to preserve the center of the image during rotation. Each image was rotated by two fixed angles to improve the model’s ability to detect aneurysms from different orientations.
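To illustrate how the translation terms keep the image center fixed, the sketch below builds a 2×3 affine rotation matrix in plain Python. It is an illustration under stated assumptions rather than the study's code: the function names are hypothetical, the center is taken as ((width − 1)/2, (height − 1)/2), and the sign convention is the standard mathematical one. OpenCV's `cv2.getRotationMatrix2D` produces the same kind of matrix, but with the sign of the sine terms flipped by its own angle convention.

```python
import math


def rotation_matrix_about_center(angle_deg, width, height):
    """Return a 2x3 affine matrix [[cos, -sin, tx], [sin, cos, ty]] that
    rotates by angle_deg about the image center."""
    theta = math.radians(angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    # Translation terms chosen so that the center maps onto itself.
    tx = (1 - c) * cx + s * cy
    ty = (1 - c) * cy - s * cx
    return [[c, -s, tx], [s, c, ty]]


def apply_affine(matrix, x, y):
    """Apply a 2x3 affine matrix to a single point (x, y)."""
    (a, b, tx), (c, d, ty) = matrix
    return a * x + b * y + tx, c * x + d * y + ty


# The 15-degree angle is a placeholder, not a value taken from the study.
m = rotation_matrix_about_center(15, 512, 512)
center_after = apply_affine(m, 255.5, 255.5)
```

The same matrix has to be applied to an image and to its mask so that the aneurysm label stays aligned with the rotated anatomy.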

In addition to rotation, contrast adjustment was applied to enable the model to learn from variations in image brightness and contrast levels. This process was performed by multiplying the pixel values of the image by a contrast factor and adding a brightness offset. The contrast adjustment was applied using the following formula:

$$I' = \alpha \cdot I + \beta$$

where $I'$ represents the adjusted image, $\alpha$ is the contrast factor, and $\beta$ is the brightness value. In this study, $\alpha$ and $\beta$ were chosen to reduce the dominance of high-intensity vessels and improve the visibility of low-contrast aneurysmal regions.
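A linear contrast and brightness adjustment of this kind can be sketched as follows. The function name and the parameter values in the example are hypothetical and are not the settings used in this study; clipping to the 0–255 range is an assumption that matches 8-bit PNG slices.

```python
def adjust_contrast(image, alpha, beta):
    """Apply I' = alpha * I + beta to every pixel and clip to 0-255.
    alpha scales contrast; beta shifts brightness."""
    def clip(value):
        return max(0, min(255, int(round(value))))
    return [[clip(alpha * value + beta) for value in row] for row in image]


# Placeholder parameters for illustration only.
adjusted = adjust_contrast([[0, 100, 200]], alpha=0.5, beta=10)
```

With a contrast factor below 1, bright vessel pixels are compressed toward the mid-range, which is one way a transform of this form could reduce the dominance of high-intensity vessels.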

To further enhance visual features, gamma correction was utilized. This technique mimics the logarithmic response of the human eye and is commonly used to enhance details in low-contrast areas. The gamma correction was computed using the following formula:

$$I_{\text{out}} = 255 \cdot \left(\frac{I}{255}\right)^{\gamma}$$

where $\gamma$ represents the gamma coefficient. In this study, the selected $\gamma$ enhanced the visibility of the bright regions in the images.
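The power-law mapping behind gamma correction can be sketched as below. This assumes 8-bit intensities and the convention in which the normalized intensity is raised directly to the power γ (some implementations use 1/γ instead); the function name and the example value of γ are hypothetical.

```python
def gamma_correct(image, gamma):
    """Apply I_out = 255 * (I / 255) ** gamma to an 8-bit image.
    Under this convention gamma > 1 darkens mid-tones and gamma < 1
    brightens them, while 0 and 255 stay fixed."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    return [[int(round(255.0 * (value / 255.0) ** gamma)) for value in row]
            for row in image]


# Placeholder gamma, not the value used in the study.
corrected = gamma_correct([[0, 128, 255]], gamma=2.0)
```

Because the endpoints are unchanged, the transform redistributes intermediate intensities without altering the overall 0–255 range produced by normalization.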

2.5. Dataset Splitting and 5-Fold Cross-Validation

The dataset, consisting of NIfTI-format TOF-MRA images, was randomly shuffled and partitioned into five mutually exclusive folds for cross-validation. In each iteration, 80% of the data (four folds) was used for training, while the remaining 20% (one fold) served as an independent test set.

In total, 286 aneurysm-negative scans were used as background-only training data and were not included in the aneurysm-focused cross-validation analysis. The remaining 161 aneurysm-positive subjects, each comprising approximately 150–200 axial slices, were used to construct the 5-fold cross-validation splits. All images were annotated using subject-slice identifiers of the form sub_XX_slice_YY (e.g., sub_05_slice_115). By grouping on the subject prefix (sub_XX), we ensured that all slices from a given patient were always assigned to the same fold, so that no subject appeared in more than one split. This strict patient-level separation between training and test folds avoids data leakage and supports unbiased performance estimation.

Although training is performed on 2D slices with pixel-wise labels, all data splits were defined strictly at the patient level. Slices from the same 3D TOF-MRA volume share highly correlated anatomy, noise characteristics, and acquisition parameters; mixing such slices across training and test sets would lead to information leakage and artificially optimistic performance estimates. Therefore, each patient’s entire volume (all slices) was assigned exclusively to a single split (train or test), reflecting the realistic clinical scenario in which the model encounters completely unseen patients.
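The patient-level grouping described above can be sketched in a few lines of standard-library Python. This is an illustrative reconstruction, not the study's code: the function names, the round-robin fold assignment, and the fixed random seed are assumptions; only the sub_XX_slice_YY identifier pattern comes from the text.

```python
import random
from collections import defaultdict


def subject_of(slice_id):
    """Extract the subject prefix, e.g. 'sub_05_slice_115' -> 'sub_05'."""
    return slice_id.split("_slice_")[0]


def patient_level_folds(slice_ids, n_folds=5, seed=0):
    """Shuffle subjects (not slices) and deal them into n_folds folds,
    so every slice of a subject lands in exactly one fold."""
    by_subject = defaultdict(list)
    for slice_id in slice_ids:
        by_subject[subject_of(slice_id)].append(slice_id)
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)
    folds = [[] for _ in range(n_folds)]
    for index, subject in enumerate(subjects):
        folds[index % n_folds].extend(by_subject[subject])
    return folds


def train_test_for_fold(folds, test_index):
    """Use one fold as the test set and the remaining folds for training."""
    test = list(folds[test_index])
    train = [s for i, fold in enumerate(folds) if i != test_index for s in fold]
    return train, test


# Toy data: 10 hypothetical subjects with 3 slices each.
ids = [f"sub_{p:02d}_slice_{s:03d}" for p in range(10) for s in range(3)]
folds = patient_level_folds(ids)
train, test = train_test_for_fold(folds, test_index=0)
```

Splitting on subjects rather than on individual slices is what prevents neighboring, highly correlated slices of one volume from appearing on both sides of the train/test boundary.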

2.6. Mapping to 2D PNG Format

After converting the NIfTI-format images into 2D PNG format, the training and test datasets were updated accordingly. The original dataset.json files were used to map the NIfTI filenames to their corresponding PNG images, and new JSON files (dataset_fold.json) were generated for each fold. This resulted in five separate training and test sets in PNG format, prepared for cross-validation and model training.

2.7. Architectural Modifications for Small Aneurysm Detection

To enhance the sensitivity of Mask R-CNN for small aneurysms, several architectural modifications were introduced to improve the detection of lesions occupying very few pixels in TOF-MRA slices. Although clinical rupture-risk classifications typically define aneurysms smaller than 7 mm as “small” [34], this threshold does not reflect the actual detection difficulty in computer-vision-based segmentation. In practice, the greatest challenge arises for aneurysms smaller than 3 mm, which may appear in only one or two slices and cover a very limited pixel area. Therefore, consistent with prior work [24], we adopted a segmentation-oriented grouping: aneurysms <3 mm were considered small, those between 3–5 mm as medium, and those >5 mm as large. This grouping guided the design of architectural modifications aimed at improving sensitivity for the most pixel-sparse lesions.
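For per-size reporting, the grouping above amounts to a simple lookup on aneurysm diameter. The sketch below is illustrative: the function name is hypothetical, and treating diameters of exactly 3 mm and exactly 5 mm as medium is an assumption about the boundary cases.

```python
def size_group(diameter_mm):
    """Map an aneurysm diameter in millimetres to the size group used
    for stratified evaluation: <3 small, 3-5 medium, >5 large."""
    if diameter_mm < 3.0:
        return "small"
    if diameter_mm <= 5.0:
        return "medium"
    return "large"


# Hypothetical diameters, grouped for separate metric computation.
groups = [size_group(d) for d in (2.1, 3.0, 4.7, 5.0, 8.3)]
```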

During each forward pass, the physical area of every predicted region was computed in mm² using voxel spacing values obtained directly from the NIfTI metadata, rather than assuming a fixed spacing. Empirically, we observed that very small bounding boxes were frequently suppressed by standard ROI operations (non-maximum suppression and score filtering). For typical voxel spacings in our dataset, this corresponded to regions with approximate areas below 25–35 mm². Based on a series of pilot experiments, we selected 28 mm² as the decision threshold for defining “small objects”: proposals below this area were treated as candidate small aneurysms.

For these small-object proposals, we applied a dynamic confidence-thresholding strategy to mitigate the bias of the ROI classifier toward larger structures. Concretely, the classification threshold was reduced for small regions (from 0.90 to 0.60), allowing plausible small aneurysm candidates to pass the classification stage instead of being prematurely discarded. In parallel, the ROI head was modified to become size-aware: small instances were processed with enhanced feature resolution by prioritizing lower FPN levels (P2–P3) and increasing the ROI pooling resolution above the default, thereby preserving fine vascular details.

Overall, the ROI module became explicitly size-aware through the combination of (i) physical size computation from image metadata, (ii) adaptive score filtering for small proposals, and (iii) high-resolution feature pooling for tiny regions. In our experiments, the 28 mm² threshold provided the best trade-off: lower thresholds led to a noticeable increase in false positives, whereas higher thresholds caused systematic suppression of small aneurysm candidates during NMS and ROI scoring. With the chosen configuration, the model’s recall for small aneurysms improved, while performance for medium and large lesions remained stable.
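The first two components of this size-aware logic, physical area computation and adaptive score filtering, can be sketched independently of any detection framework. The code below is a simplified illustration and not the actual ROI head: the function names and the (x0, y0, x1, y1) box layout are assumptions, and the 0.4 mm pixel spacing in the example is a placeholder. The 28 mm² area threshold and the 0.90 and 0.60 score thresholds are the values stated in the text.

```python
SMALL_AREA_MM2 = 28.0        # area below which a proposal counts as "small"
DEFAULT_SCORE_THRESH = 0.90  # threshold for regular proposals
SMALL_SCORE_THRESH = 0.60    # relaxed threshold for small proposals


def box_area_mm2(box, spacing_xy):
    """Physical area of a pixel-space box (x0, y0, x1, y1), given the
    in-plane pixel spacing (sx, sy) in millimetres."""
    x0, y0, x1, y1 = box
    sx, sy = spacing_xy
    width_mm = max(0.0, x1 - x0) * sx
    height_mm = max(0.0, y1 - y0) * sy
    return width_mm * height_mm


def keep_proposal(box, score, spacing_xy):
    """Keep a proposal if its score passes the size-dependent threshold."""
    is_small = box_area_mm2(box, spacing_xy) < SMALL_AREA_MM2
    threshold = SMALL_SCORE_THRESH if is_small else DEFAULT_SCORE_THRESH
    return score >= threshold


# Placeholder spacing; in practice it would be read from the NIfTI header.
spacing = (0.4, 0.4)
small_kept = keep_proposal((0, 0, 10, 10), 0.70, spacing)   # 16 mm^2 box
large_kept = keep_proposal((0, 0, 20, 20), 0.70, spacing)   # 64 mm^2 box
```

In this example the same score of 0.70 is accepted for the 16 mm² box and rejected for the 64 mm² box, which is the intended asymmetry: small candidates get a more lenient filter, while larger ones remain subject to the stricter default.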

2.8. Training Process and Hyperparameter Optimization

In this study, a deep learning model based on the Mask R-CNN architecture was trained to perform automatic detection and segmentation of intracranial aneurysms using TOF-MRA images. The Detectron2 framework was employed for implementation, offering a modular and flexible environment for object detection and instance segmentation tasks [35]. The training pipeline involved several sequential stages, including dataset preparation, annotation formatting, model configuration, hyperparameter tuning, and evaluation.

To ensure compatibility with Detectron2, all preprocessed images and their corresponding segmentation masks were converted into the COCO (Common Objects in Context) annotation format. Each aneurysmal image slice was associated with a manually created binary mask, which was represented in the COCO file using polygon-based contour information. In contrast, healthy TOF-MRA images were incorporated into the dataset without any annotation entries. These background-only samples, lacking annotated regions, were explicitly included to help the model distinguish normal vascular anatomy from pathological aneurysmal structures. By exposing the model to such negative examples, false positive detections were significantly reduced, particularly in areas where normal vessels might resemble aneurysms in structure or intensity.
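A minimal sketch of such a COCO-style annotation file is shown below. It is illustrative only: the file names, image size, and polygon coordinates are hypothetical, and the helper names are not taken from the study's code. The field layout (`images`, `annotations`, `categories`, with `bbox` given as [x, y, width, height] and `segmentation` as flat polygon coordinate lists) follows the standard COCO instance format. A healthy slice appears in `images` but contributes no entry to `annotations`.

```python
import json


def polygon_area(flat_xy):
    """Area of a polygon given as [x1, y1, x2, y2, ...] (shoelace formula)."""
    xs, ys = flat_xy[0::2], flat_xy[1::2]
    total = 0.0
    for i in range(len(xs)):
        j = (i + 1) % len(xs)
        total += xs[i] * ys[j] - xs[j] * ys[i]
    return abs(total) / 2.0


def polygon_bbox(flat_xy):
    """Bounding box [x, y, width, height] enclosing the polygon."""
    xs, ys = flat_xy[0::2], flat_xy[1::2]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]


def build_coco(images, polygons_by_file):
    """images: list of (file_name, width, height).
    polygons_by_file: file_name -> list of flat polygons; files that are
    absent from this mapping become background-only images."""
    coco = {"images": [], "annotations": [],
            "categories": [{"id": 1, "name": "aneurysm"}]}
    annotation_id = 1
    for image_id, (file_name, width, height) in enumerate(images, start=1):
        coco["images"].append({"id": image_id, "file_name": file_name,
                               "width": width, "height": height})
        for polygon in polygons_by_file.get(file_name, []):
            coco["annotations"].append({
                "id": annotation_id, "image_id": image_id, "category_id": 1,
                "segmentation": [polygon], "bbox": polygon_bbox(polygon),
                "area": polygon_area(polygon), "iscrowd": 0})
            annotation_id += 1
    return coco


# One aneurysmal slice with a square contour, one healthy slice without labels.
images = [("sub_05_slice_115.png", 512, 512), ("healthy_001_slice_080.png", 512, 512)]
polygons = {"sub_05_slice_115.png": [[100, 100, 110, 100, 110, 110, 100, 110]]}
coco = build_coco(images, polygons)
coco_json = json.dumps(coco, indent=2)
```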

Following the dataset formatting process, two separate COCO annotation files were generated for each fold: one for training and one for validation. Each file included both aneurysmal and non-aneurysmal cases, allowing the model to learn from a balanced distribution of pathological and normal anatomical patterns. The segmentation task was defined as single-class, focusing solely on the “aneurysm” category.

The base model configuration was adopted from Detectron2’s standard mask_rcnn_R_50_FPN_3x.yaml, which employs a ResNet-50 backbone in combination with a Feature Pyramid Network (FPN). This configuration was customized to accommodate the specific characteristics of medical imaging data. Pretrained ImageNet weights were used to initialize the model, facilitating transfer learning and ensuring more stable convergence during training. The model was trained using Detectron2’s DefaultTrainer with GPU acceleration enabled on a workstation equipped with an NVIDIA RTX 3080 GPU running Ubuntu Linux. An overview of the complete preprocessing pipeline and the adopted Mask R-CNN architecture is summarized in Figure 3.

To enhance detection accuracy, especially for small aneurysms that are often challenging to localize, an extensive hyperparameter optimization process was conducted using the Optuna framework. Bayesian optimization was applied to explore the parameter space efficiently. The objective was to maximize validation performance in terms of Dice score and F1-score across all folds. Key parameters such as learning rate, batch size, number of iterations, region proposal thresholds, and anchor sizes were dynamically tuned. The optimization process was repeated for each fold in a 5-fold cross-validation setting to ensure robust and generalizable results.

Incorporating healthy TOF-MRA scans as negative training examples, along with fine-tuned hyperparameters and model adaptations, significantly improved the sensitivity of the model. This strategy contributed to reducing false alarms while maintaining high detection performance, particularly in identifying small and morphologically variable aneurysms.