P300 Spatiotemporal Prior-Based Transformer-CNN for Auxiliary Diagnosis of PTSD

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

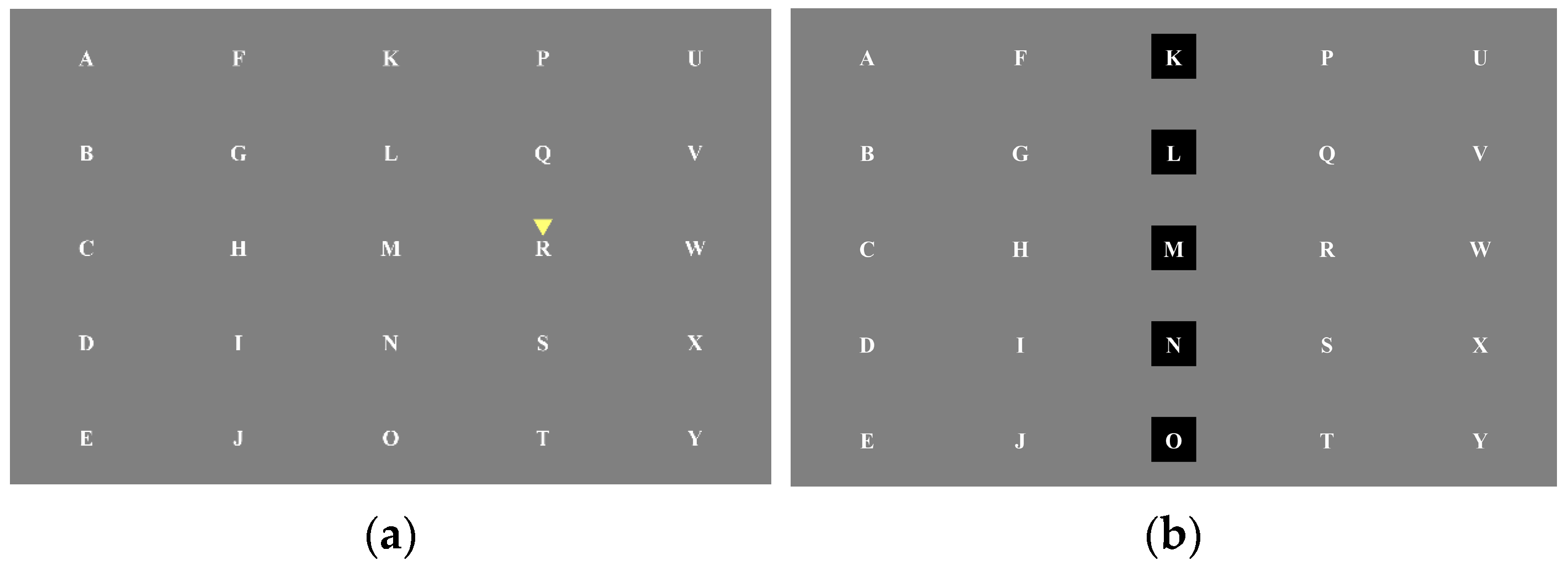

2.2. Experimental Paradigm

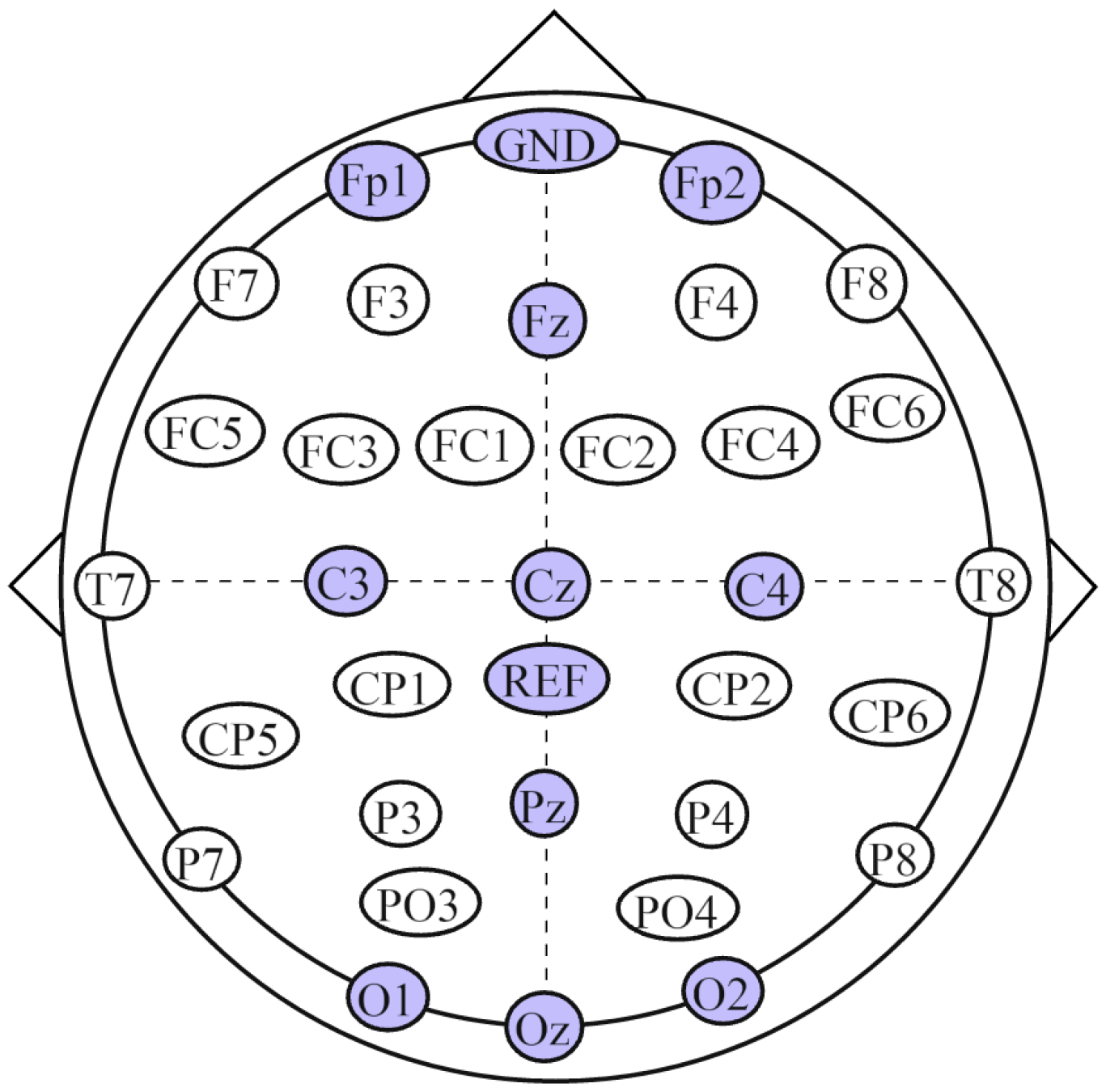

2.3. Data Acquisition

2.4. Preprocessing

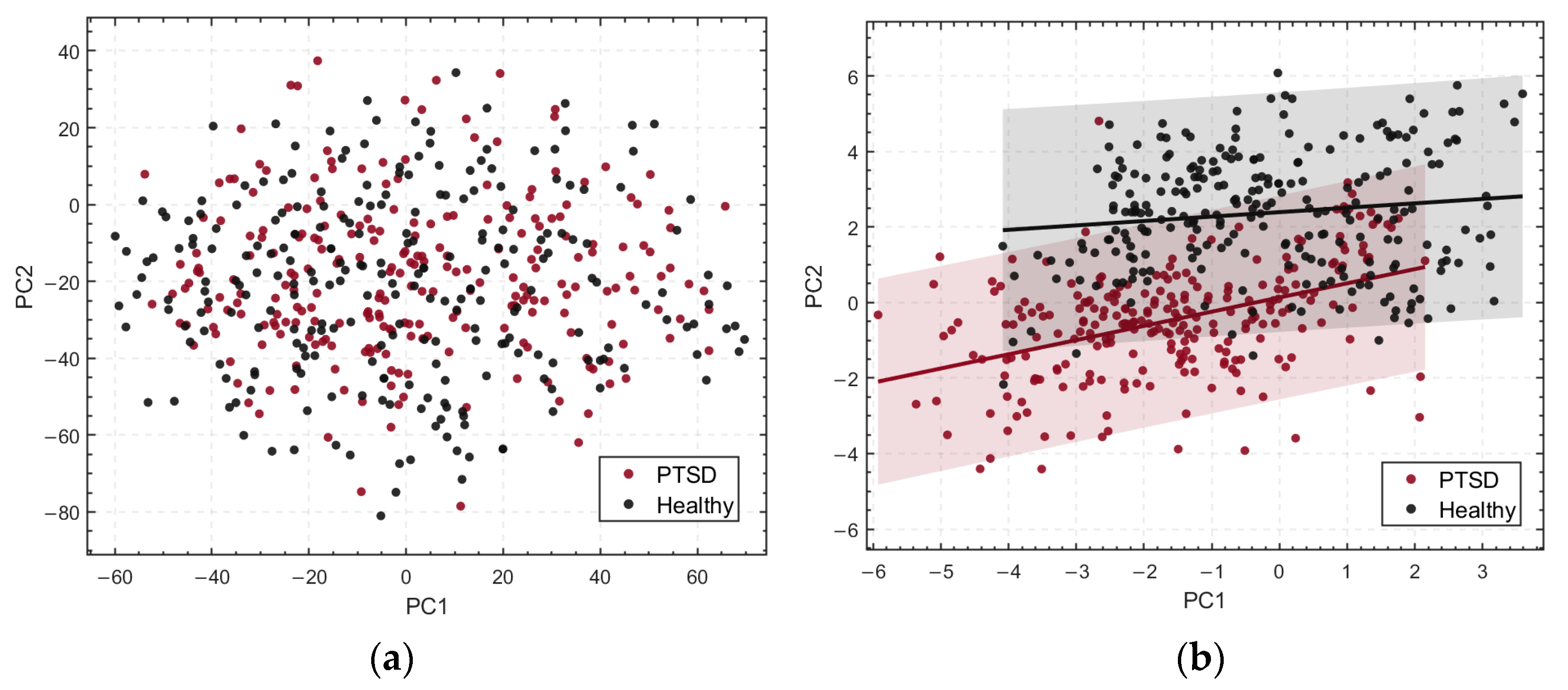

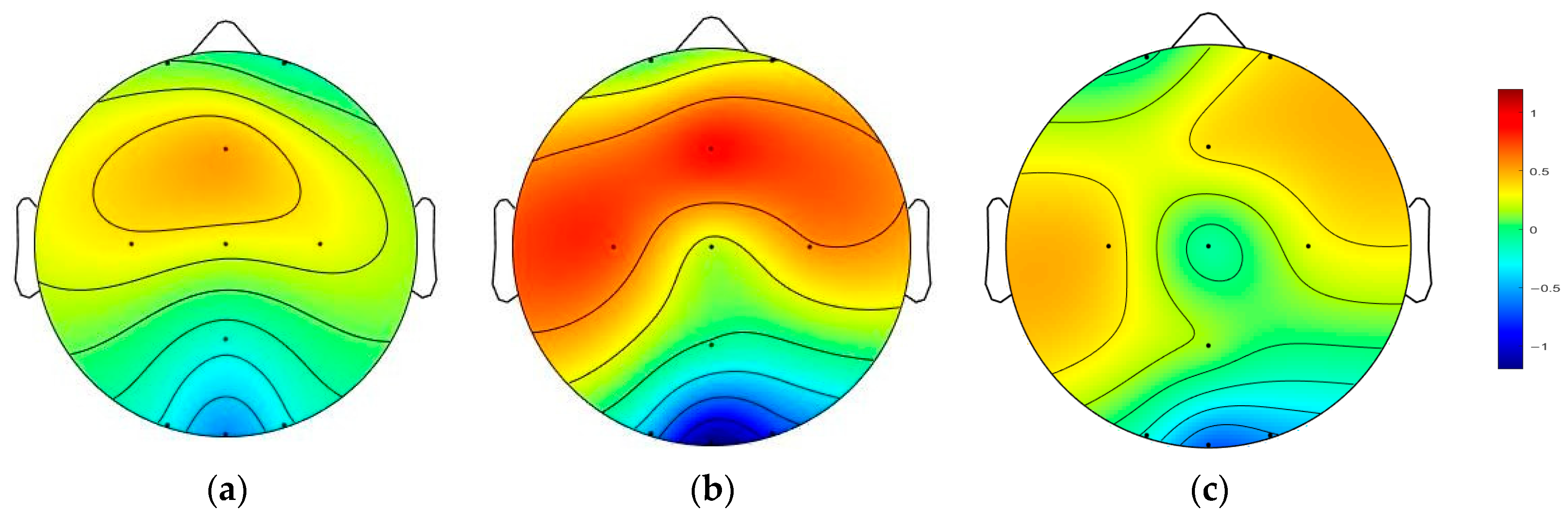

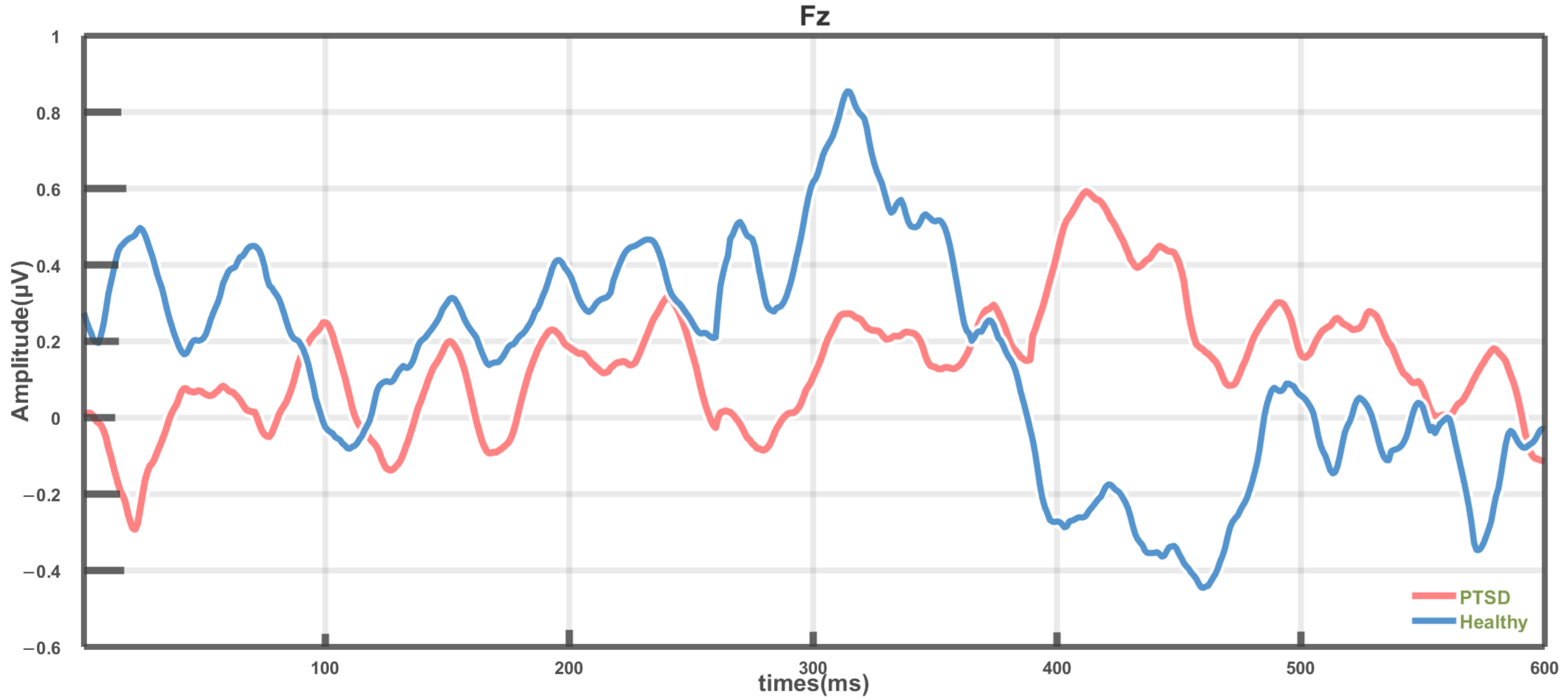

2.5. Spatiotemporal Difference Analysis

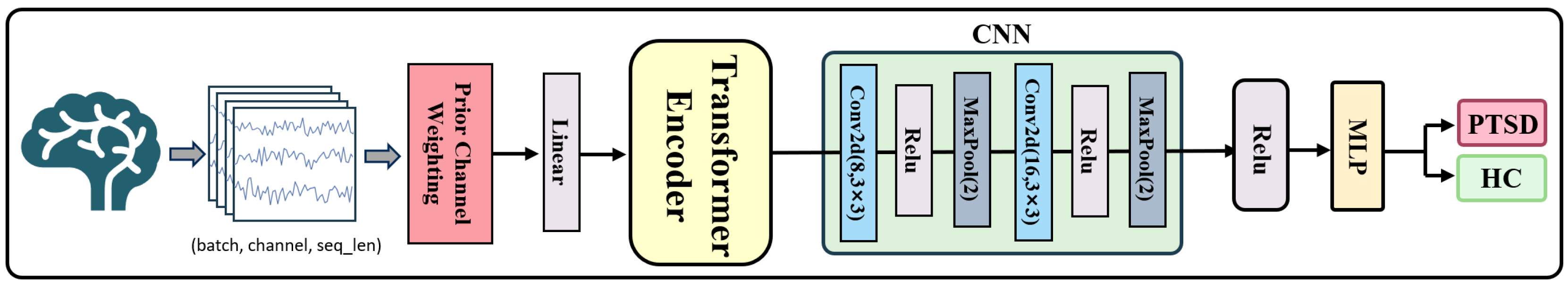

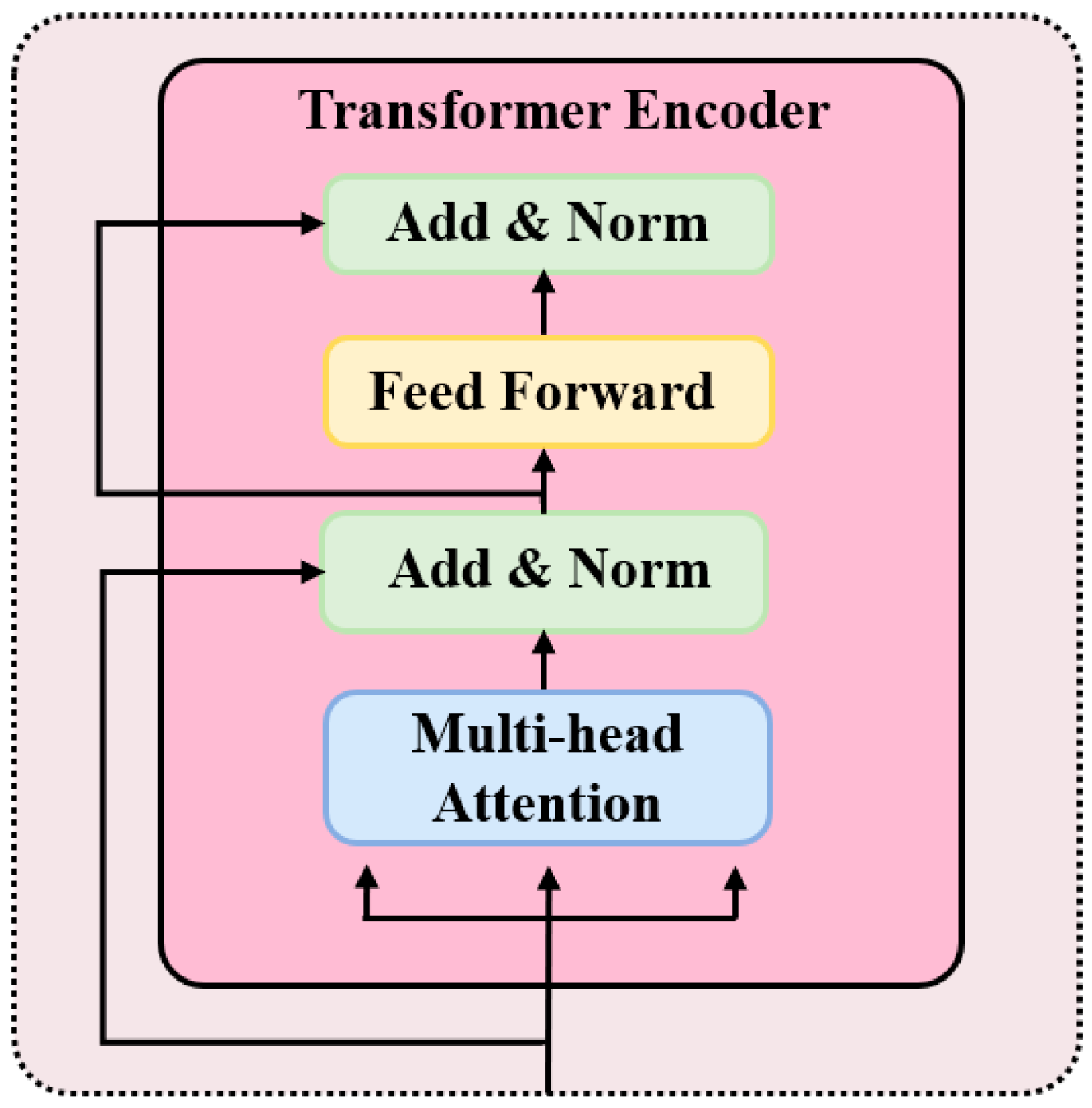

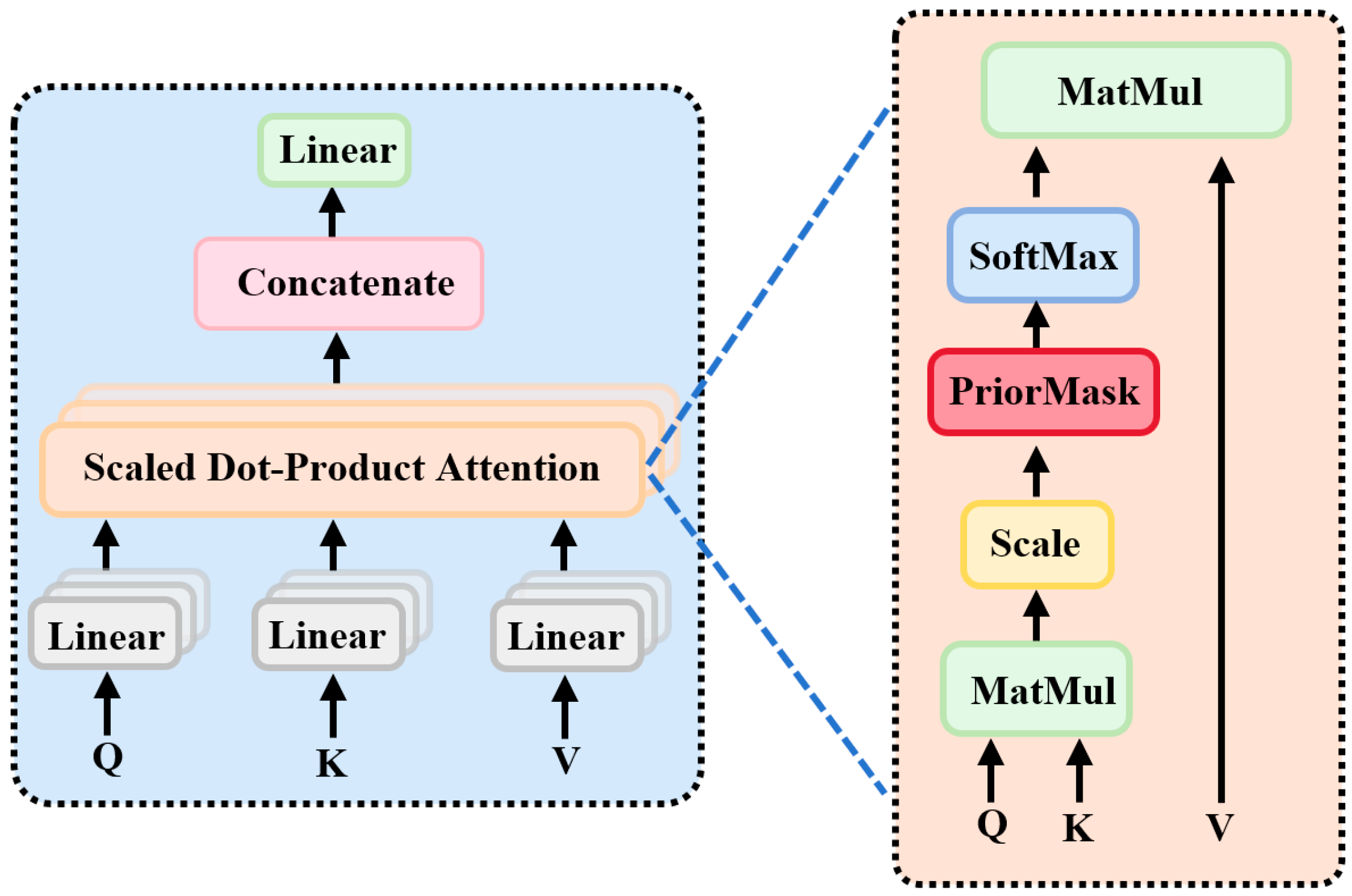

2.6. P300-STTCNet Model

3. Results

3.1. Difference Analysis

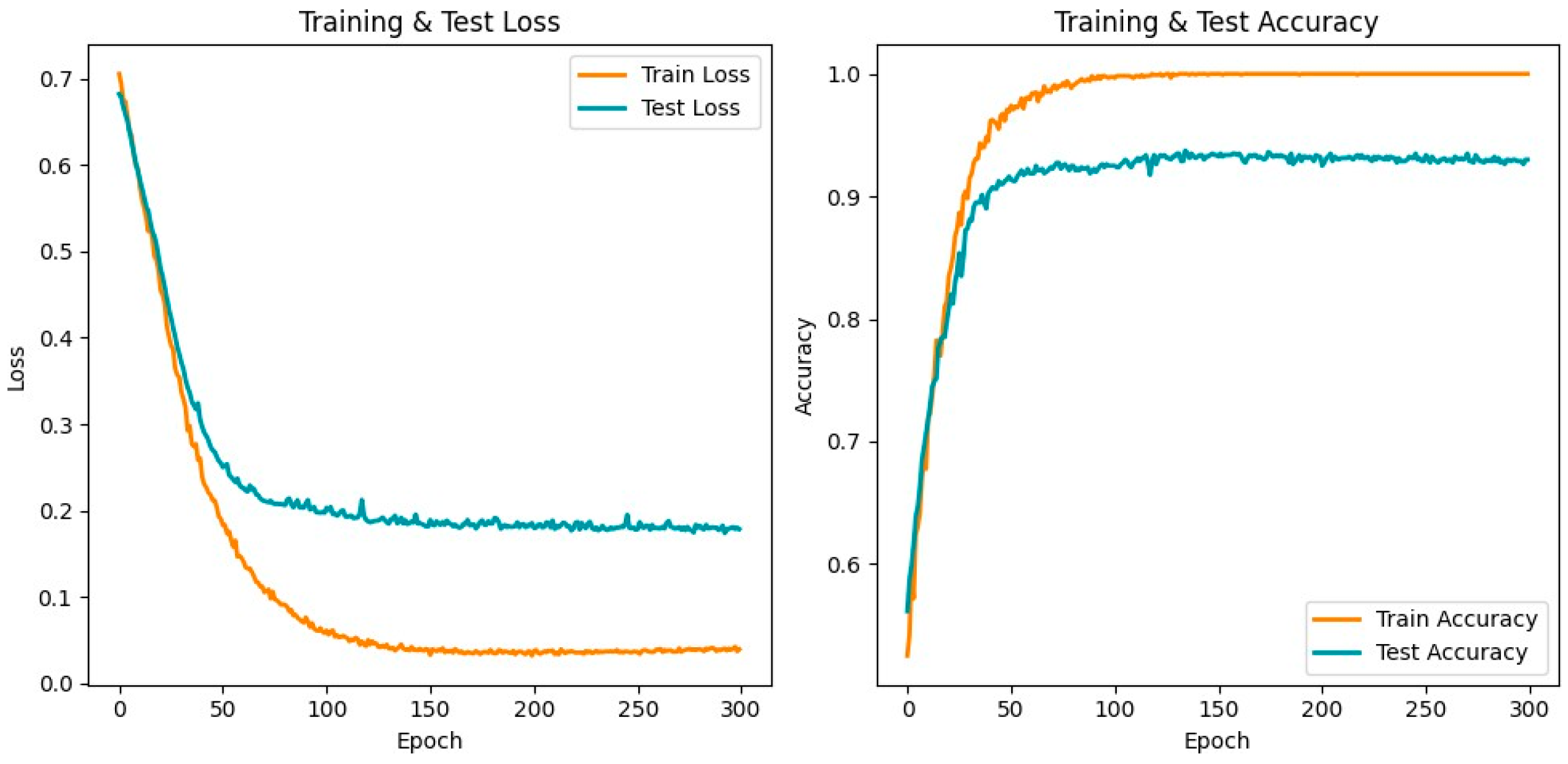

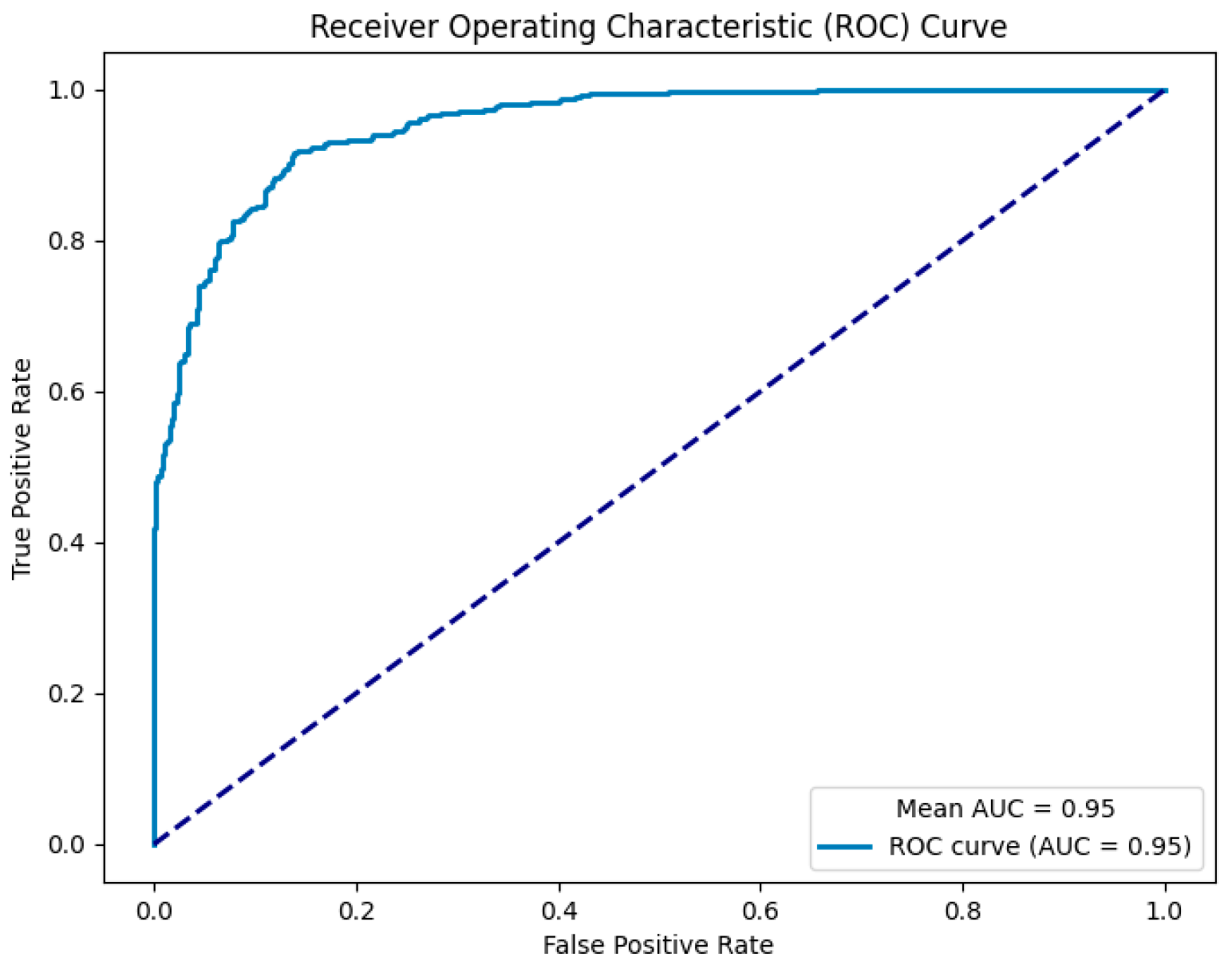

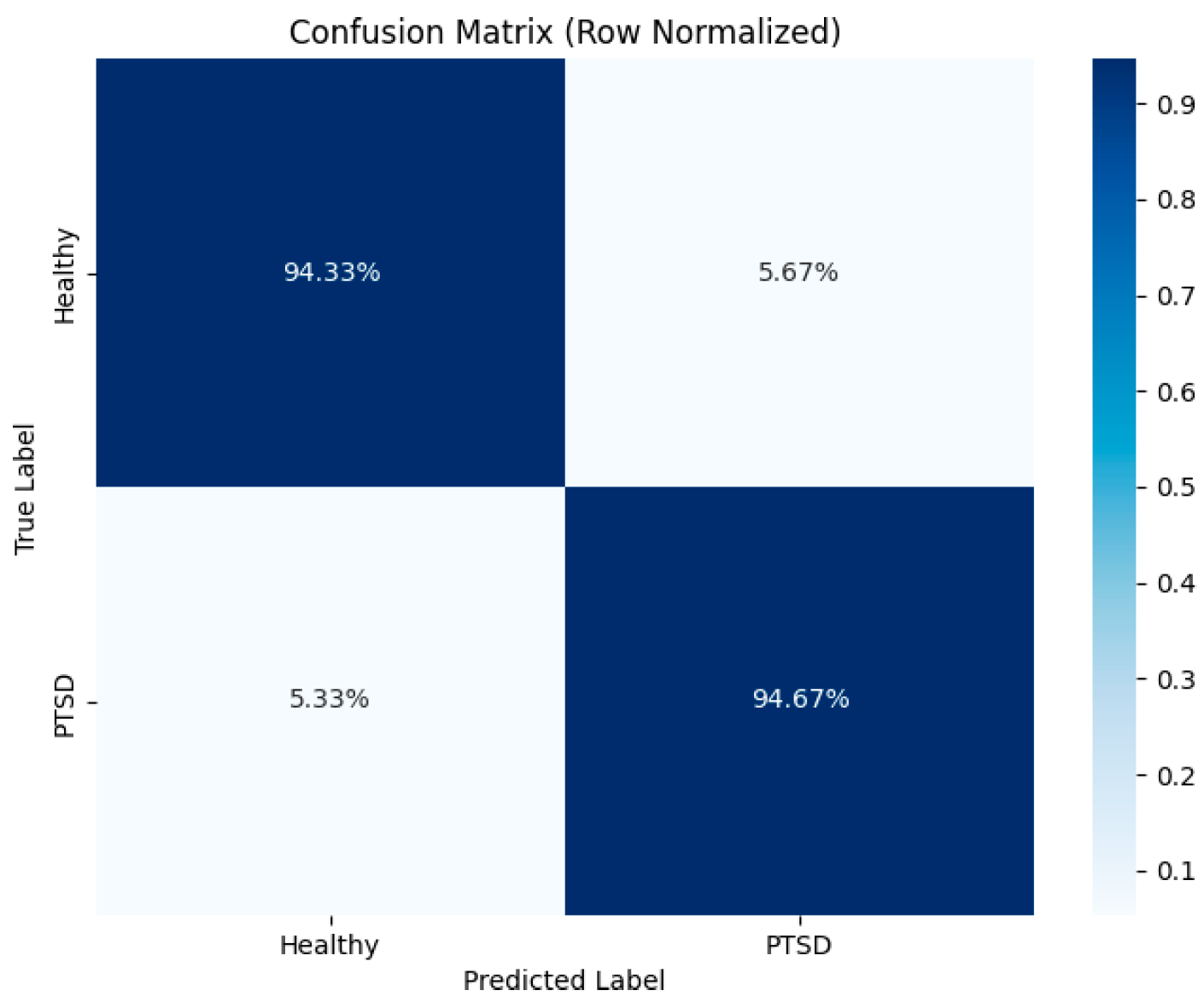

3.2. Model Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Barel, E.; Tannous-Haddad, L.; Tzischinsky, O. Self-compassion, self-coldness, and social support and their relationship with depression, anxiety, and PTSD symptoms following a massive terror attack: A prospective study. Eur. J. Psychotraumatol. 2025, 16, 2461948.

2. Schlechter, P.; Hoppen, T.H.; Morina, N. Associations among posttraumatic stress disorder symptoms, life satisfaction, and well-being comparisons: A longitudinal investigation. J. Trauma. Stress 2024, 37, 448–459.

3. O’Donnell, M.L.; Elliott, P.; Lau, W.; Creamer, M. PTSD symptom trajectories: From early to chronic response. Behav. Res. Ther. 2007, 45, 601–606.

4. Wu, Y.Q.; Mao, K.N.; Dennett, L.; Zhang, Y.B.; Chen, J. Systematic review of machine learning in PTSD studies for automated diagnosis evaluation. npj Ment. Health Res. 2023, 2, 16.

5. Qi, W.; Gevonden, M.; Shalev, A. Prevention of post-traumatic stress disorder after trauma: Current evidence and future directions. Curr. Psychiatry Rep. 2016, 18, 20.

6. Greene, T.; Neria, Y.; Gross, R. Prevalence, detection and correlates of PTSD in the primary care setting: A systematic review. J. Clin. Psychol. Med. Settings 2016, 23, 160–180.

7. Radow, B.; Anderson, N.; Richmond, B.K. Post-traumatic stress disorder (PTSD) in trauma patients. Am. Surg. 2025, 91, 292–299.

8. Basak, M.; Maiti, D.; Das, D. EEG innovations in neurological disorder diagnostics: A five-year review. Asian J. Res. Comput. Sci. 2024, 17, 226–249.

9. Jadhav, C.; Kamble, P.; Mundewadi, S.; Jaiswal, N.; Mali, S.; Ranga, S.; Suvvari, T.K.; Rukadikar, A. Clinical applications of EEG as an excellent tool for event related potentials in psychiatric and neurotic disorders. Int. J. Physiol. Pathophysiol. Pharmacol. 2022, 14, 73–80.

10. Shim, M.; Jin, M.J.; Im, C.H.; Lee, S.H. Machine-learning-based classification between post-traumatic stress disorder and major depressive disorder using P300 features. NeuroImage Clin. 2019, 24, 102001.

11. Zhang, Q.; Luo, C.; Ngetich, R.; Zhang, J.J.; Jin, Z.L.; Li, L. Visual selective attention P300 source in frontal-parietal lobe: ERP and fMRI study. Brain Topogr. 2022, 35, 636–650.

12. Jahromi, G.P.; Sotoudeh, H.G.; Mostafaie, R.; Khaleghi, A. Auditory Steady-State Evoked Potentials in Post Traumatic Stress Disorder: Introduction of a Potential Biomarker. Iran. J. Psychiatry 2024, 19, 85–92.

13. Trongnetrpunya, A.; Rapp, P.; Wang, C.; Darmon, D.; Costanzo, M.E.; Nathan, D.E.; Roy, M.J.; Cellucci, C.J.; Keyser, D. Single-trial mechanisms underlying changes in averaged P300 ERP amplitude and latency in military service members after combat deployment. Front. Hum. Neurosci. 2019, 13, 377.

14. Telesheva, K.; Savenkova, V.; Morozova, I.; Ochneva, A.; Zeltser, A.; Andreyuk, D.; Reznik, A.; Mukhin, V.; Melkonyan, G.; Lytkina, K.; et al. Potential neurophysiological markers of combat-related post-traumatic stress disorder: A cross-sectional diagnostic study. Consort. Psychiatr. 2024, 5, 31–40.

15. Wang, C.; Rapp, P.; Darmon, D.; Trongnetrpunya, A.; Costanzo, M.E.; Nathan, D.E.; Cellucci, C.J.; Roy, M.; Keyser, D. Utility of P300 ERP in monitoring post-trauma mental health: A longitudinal study in military personnel returning from combat deployment. J. Psychiatr. Res. 2018, 101, 5–13.

16. Min, D.; Kwon, A.; Kim, Y.; Jin, M.J.; Kim, Y.W.; Jeon, H.; Kim, S.; Jeon, H.J.; Lee, S.H. Clinical implication of altered inhibitory response in patients with post-traumatic stress disorder: Electrophysiological evidence from a Go/Nogo task. Brain Topogr. 2020, 33, 208–220.

17. Shim, M.; Lee, S.H.; Hwang, H.J. A novel neurophysiological feature based on quantifying EEG data for separating patients in psychiatric disorders with comorbidities. In Proceedings of the 10th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 21–23 February 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–3.

18. Terpou, B.A.; Shaw, S.B.; Théberge, J.; Férat, V.; Michel, C.M.; McKinnon, M.C.; Lanius, R.A.; Ros, T. Spectral decomposition of EEG microstates in post-traumatic stress disorder. NeuroImage Clin. 2022, 35, 103135.

19. Kim, Y.W.; Kim, S.; Shim, M.; Jin, M.J.; Jeon, H.; Lee, S.H.; Im, C.H. Riemannian classifier enhances the accuracy of machine-learning-based diagnosis of PTSD using resting EEG. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2020, 102, 109960.

20. Qu, Z.H.; Wu, W.P.; Cao, Y. PTSD Prediction Based on EEG Feature Extraction and CNN-LSTM Model. IAENG Int. J. Comput. Sci. 2025, 52, 3167–3177.

21. Beykmohammadi, A.; Ghanbari, Z.; Moradi, M.H. PTSD diagnosis using deep transfer learning: An EEG study. In Proceedings of the 2022 29th National and 7th International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 21–22 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 9–13.

22. Li, F.; Xia, Y.; Wang, F.; Zhang, D.Y.; Li, X.Y.; He, F. Transfer learning algorithm of P300-EEG signal based on XDAWN spatial filter and Riemannian geometry classifier. Appl. Sci. 2020, 10, 1804.

23. Tibermacine, I.E.; Russo, S.; Tibermacine, A.; Rabehi, A.; Nail, B.; Kadri, K.; Napoli, C. Riemannian geometry-based EEG approaches: A literature review. arXiv 2024, arXiv:2407.20250.

24. Kovacevic, N.; Meghdadi, A.; Berka, C. Characterizing PTSD using electrophysiology: Towards a precision medicine approach. Clin. EEG Neurosci. 2025, 56, 305–315.

25. Salazar-Castro, J.A.; Peluffo-Ordóñez, D.H.; López, D.M. Advances in Electroencephalography for Post-traumatic Stress Disorder Identification: A Scoping Review. IEEE Open J. Eng. Med. Biol. 2025, 6, 332–344.

26. Shim, M.; Hwang, H.J.; Lee, S.H. Impaired functional cortical networks in the theta frequency band of patients with post-traumatic stress disorder during auditory-cognitive processing. Front. Psychiatry 2022, 13, 811766.

| Channel | p_m | t_m | Cohen’s d_m | p_a | p_l |
|---|---|---|---|---|---|
| Fp1 | 0.09 | 0.686 | 0.292 | 0.084 | 0.135 |
| Fp2 | 0.055 | −1.173 | −0.500 | 0.062 | 0.001 |
| Fz | 0.005 | −2.886 | −1.228 | 0.002 | 0.006 |
| C3 | 0.019 | −1.988 | −0.846 | 0.011 | 0.043 |
| Cz | 0.048 | 1.278 | 0.544 | 0.065 | 0.071 |
| C4 | 0.02 | −1.778 | −0.758 | 0.053 | 0.036 |
| Pz | 0.059 | 0.944 | 0.402 | 0.072 | 0.026 |
| O1 | 0.17 | 0.341 | 0.145 | 0.089 | 0.015 |
| Oz | 0.54 | 0.104 | 0.044 | 0.291 | 0.078 |
| O2 | 0.35 | 0.262 | 0.112 | 0.157 | 0.015 |
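
The channel-wise table reports a t statistic alongside Cohen’s d for each electrode. As a reading aid, the sketch below shows how the two quantities relate for two independent groups under a pooled-variance (equal-variance) t-test, where d = t · sqrt(1/n_a + 1/n_b). This section does not state which test the authors used, so the pooled-variance choice is an assumption. The function name, group labels, and amplitude values are hypothetical and invented for illustration only; they are not data from the study.

```python
import math
from statistics import mean, variance


def pooled_t_and_cohens_d(group_a, group_b):
    """Return (t, d) for two independent samples using the pooled SD.

    Assumption: equal-variance (Student) t-test; not confirmed by the source.
    """
    n_a, n_b = len(group_a), len(group_b)
    # Pooled sample variance across both groups.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    pooled_sd = math.sqrt(pooled_var)
    diff = mean(group_a) - mean(group_b)
    # Cohen's d: mean difference in units of the pooled SD.
    d = diff / pooled_sd
    # t statistic: mean difference divided by its standard error.
    t = diff / (pooled_sd * math.sqrt(1 / n_a + 1 / n_b))
    return t, d


# Hypothetical P300 amplitudes (microvolts) at one channel; made-up values.
ptsd = [4.1, 3.8, 5.0, 4.4, 3.5, 4.9]
controls = [5.6, 6.1, 5.2, 6.4, 5.9, 5.5]

t_stat, cohen_d = pooled_t_and_cohens_d(ptsd, controls)
print(f"t = {t_stat:.3f}, d = {cohen_d:.3f}")
```

With this sign convention, a negative t and d mean the first group has the lower mean. Which group is subtracted from which in the table above is not stated in this section.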

| Study | Features | Classifier | Accuracy |
|---|---|---|---|
| Shim et al. [10] | P300 amplitude, latency, and source | SVM | 80% |
| Shim et al. [17] | P300 mean amplitude | SVM | 73.33% |
| Terpou et al. [18] | P300 spatiotemporal features | SVM | 76% |
| Kim et al. [19] | Source covariance | Riemannian geometry | 75.24% |
| Ours | CSTP-extracted P300 spatiotemporal features | SVM | 84.6% |
| Ours | CSTP-extracted P300 spatiotemporal features | P300-STTCNet | 93.37% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tan, L.; Fang, H.; Ding, P.; Wang, F.; Wei, Y.; Fu, Y. P300 Spatiotemporal Prior-Based Transformer-CNN for Auxiliary Diagnosis of PTSD. Brain Sci. 2025, 15, 1124. https://doi.org/10.3390/brainsci15101124
