FB-CCNN: A Filter Bank Complex Spectrum Convolutional Neural Network with Artificial Gradient Descent Optimization

Abstract

1. Introduction

- A novel deep learning model named FB-CCNN is proposed and shown to achieve leading classification performance on two open SSVEP datasets.

- A hyperparameter optimization algorithm named artificial gradient descent (AGD) is proposed and shown to be effective in choosing the hyperparameters of deep learning models.

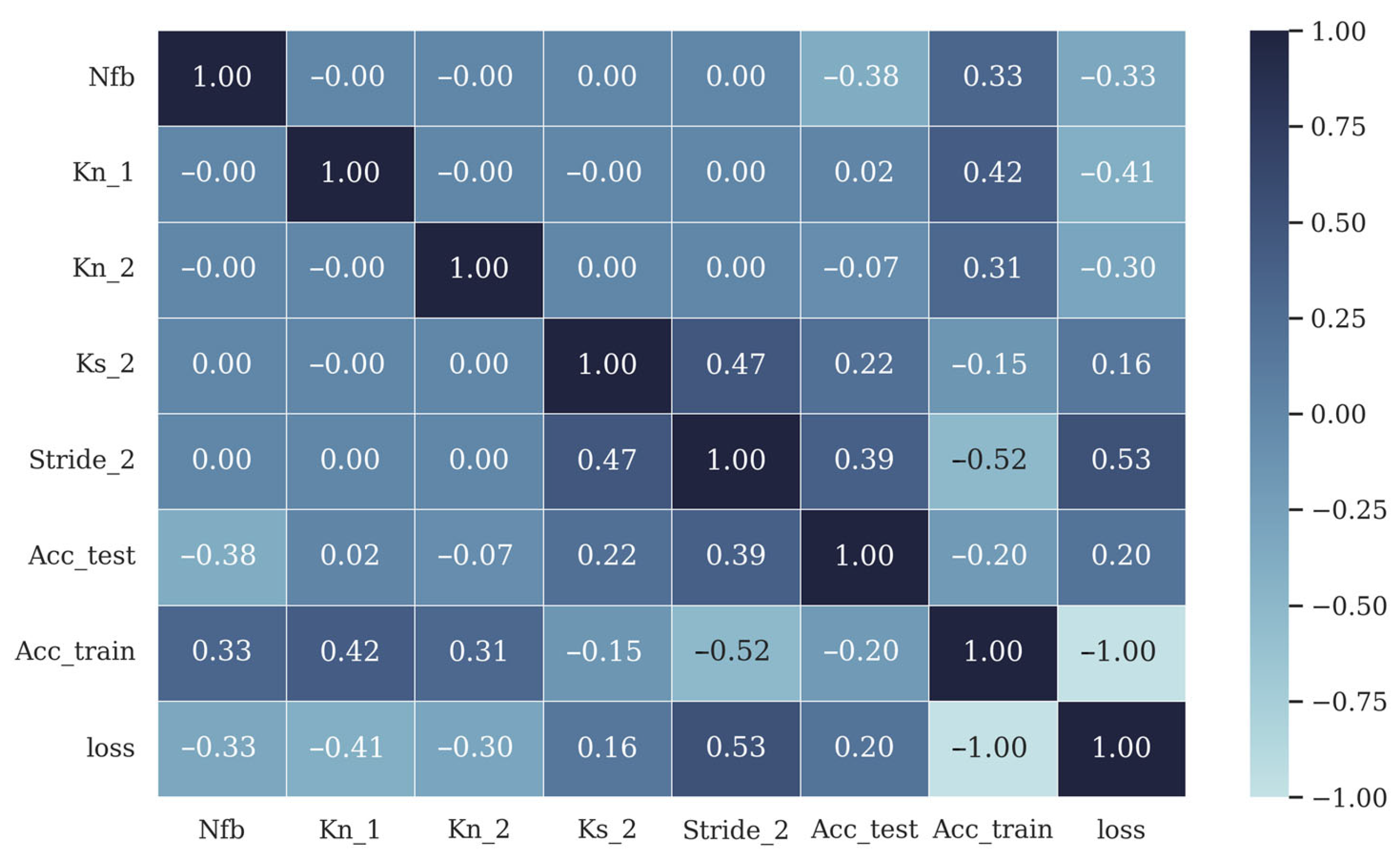

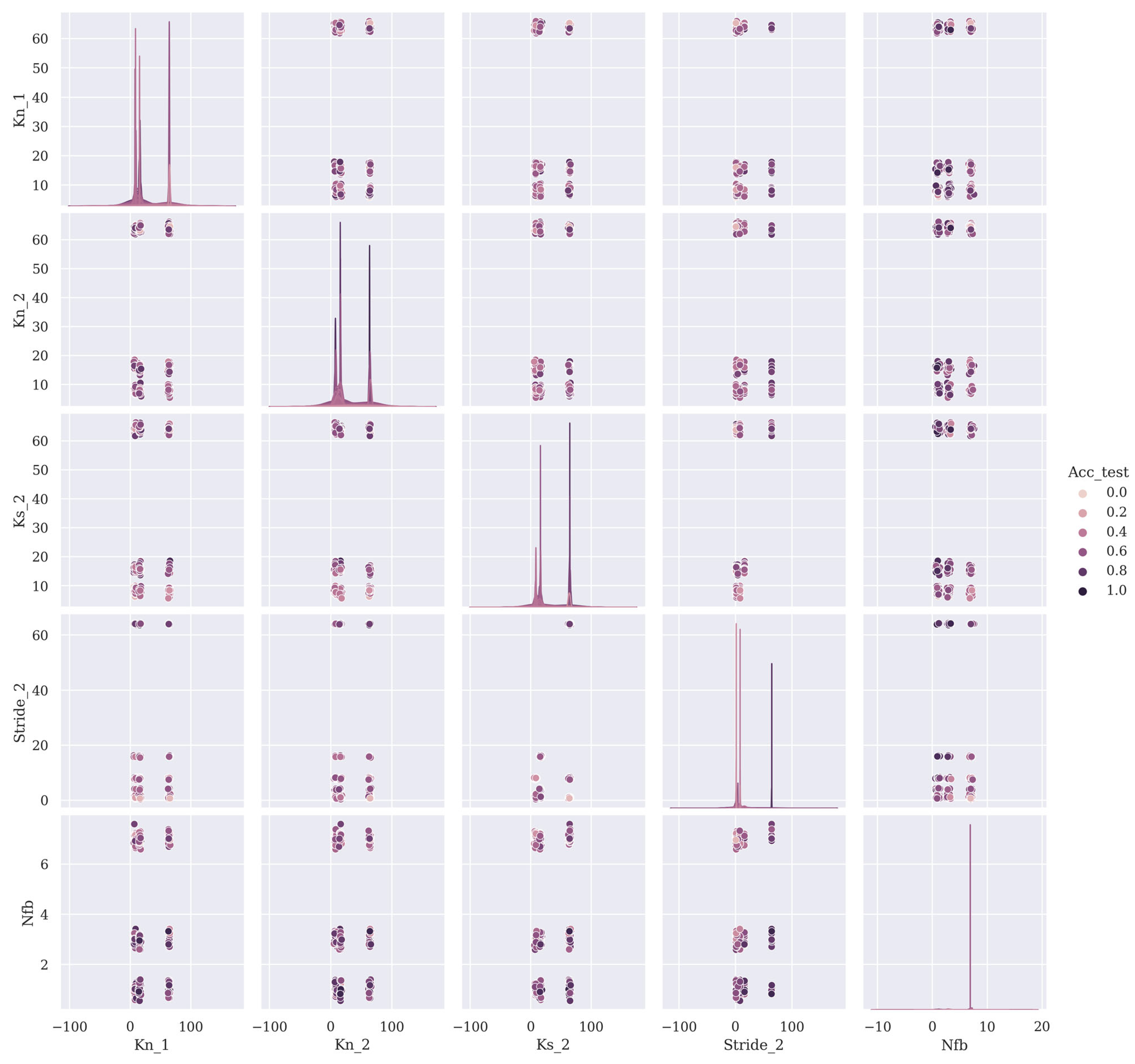

- A hyperparameter relationship analysis is carried out by training 243 models with AGD; this is the first hyperparameter analysis of SSVEP deep learning models, and it reveals the correlations between the hyperparameters and the model’s performance.

- Experiments demonstrate that the hyperparameters of the FB-CCNN should be fixed values rather than scaled with the channel number (as is done in most CNN models), and that using filter banks gives the model better generalization ability.

The rest of this paper is organized as follows. In Section 2, two open SSVEP datasets and the benchmark methods used for comparison are introduced; FB-CCNN and AGD are then presented, and AGD is used to generate four sets of hyperparameters. In Section 3, FB-CCNN with these four hyperparameter sets is tested on the two open datasets, and the results are reported. Section 4 discusses the experimental results, limitations and future directions, and Section 5 concludes the paper.

2. Materials and Methods

2.1. Datasets

2.1.1. Nakanishi Dataset

2.1.2. Benchmark Dataset

2.2. Benchmark Algorithms

2.2.1. Canonical Correlation Analysis
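CCA-based frequency recognition [42] scores each candidate stimulus frequency by the largest canonical correlation between the multichannel EEG and a set of sine/cosine reference signals at that frequency and its harmonics, and then selects the best-scoring frequency. The minimal NumPy sketch below illustrates this standard procedure; the function names, the three-harmonic default, the 256 Hz sampling rate and the synthetic 10 Hz test signal are assumptions for illustration, not the settings used in this paper.

```python
import numpy as np


def reference_signals(freq, fs, n_samples, n_harmonics=3):
    """Sine/cosine references at freq and its harmonics; shape (n_samples, 2 * n_harmonics)."""
    t = np.arange(n_samples) / fs
    cols = []
    for h in range(1, n_harmonics + 1):
        cols.append(np.sin(2 * np.pi * h * freq * t))
        cols.append(np.cos(2 * np.pi * h * freq * t))
    return np.stack(cols, axis=1)


def max_canonical_corr(x, y):
    """Largest canonical correlation between the column spaces of x (n, p) and y (n, q)."""
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    qx, _ = np.linalg.qr(x)
    qy, _ = np.linalg.qr(y)
    # The singular values of Qx^T Qy are the canonical correlations.
    return float(np.linalg.svd(qx.T @ qy, compute_uv=False)[0])


def cca_classify(eeg, stim_freqs, fs, n_harmonics=3):
    """eeg: (n_samples, n_channels). Returns (index of best frequency, per-frequency scores)."""
    scores = [max_canonical_corr(eeg, reference_signals(f, fs, eeg.shape[0], n_harmonics))
              for f in stim_freqs]
    return int(np.argmax(scores)), scores


# Usage on synthetic data: 8 channels carrying a 10 Hz response plus noise.
rng = np.random.default_rng(0)
fs, n = 256, 256                                  # 1 s of data at an assumed 256 Hz
t = np.arange(n) / fs
phases = rng.uniform(0, 2 * np.pi, size=8)
eeg = np.sin(2 * np.pi * 10 * t[:, None] + phases) + 0.5 * rng.standard_normal((n, 8))
idx, scores = cca_classify(eeg, [8.0, 10.0, 12.0, 15.0], fs)
print(idx)  # expected: 1 (the 10 Hz candidate)
```

Because the method needs no training data, it serves as the training-free baseline against which the CNN-based methods are compared.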

2.2.2. Complex Convolutional Neural Network
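The C-CNN of Ravi et al. [32] feeds the network the complex FFT spectrum of each channel, with the real and imaginary parts concatenated, so that phase information is kept alongside magnitude. The sketch below shows one way to build such an input; the 3–35 Hz band, the optional zero-padding length `nfft` and the function name `complex_spectrum_features` are assumptions chosen for illustration, not the exact preprocessing of C-CNN or FB-CCNN.

```python
import numpy as np


def complex_spectrum_features(eeg, fs, f_lo=3.0, f_hi=35.0, nfft=None):
    """Concatenate real and imaginary FFT parts per channel within [f_lo, f_hi] Hz.

    eeg: (n_channels, n_samples). Returns an array of shape (n_channels, 2 * n_bins).
    """
    n = eeg.shape[-1] if nfft is None else nfft
    spec = np.fft.rfft(eeg, n=n, axis=-1)          # zero-pads when n > n_samples
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return np.concatenate([spec[..., band].real, spec[..., band].imag], axis=-1)


fs = 256
t = np.arange(fs) / fs                             # 1 s at an assumed 256 Hz
eeg = np.stack([np.cos(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t)])
feats = complex_spectrum_features(eeg, fs)
print(feats.shape)  # (2, 66): 33 one-hertz bins from 3 to 35 Hz, real then imaginary
```

With 1 s of data the bin spacing is 1 Hz; passing a larger `nfft` interpolates the spectrum onto a finer grid.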

2.2.3. Filter Bank Convolutional Neural Network by Zhao

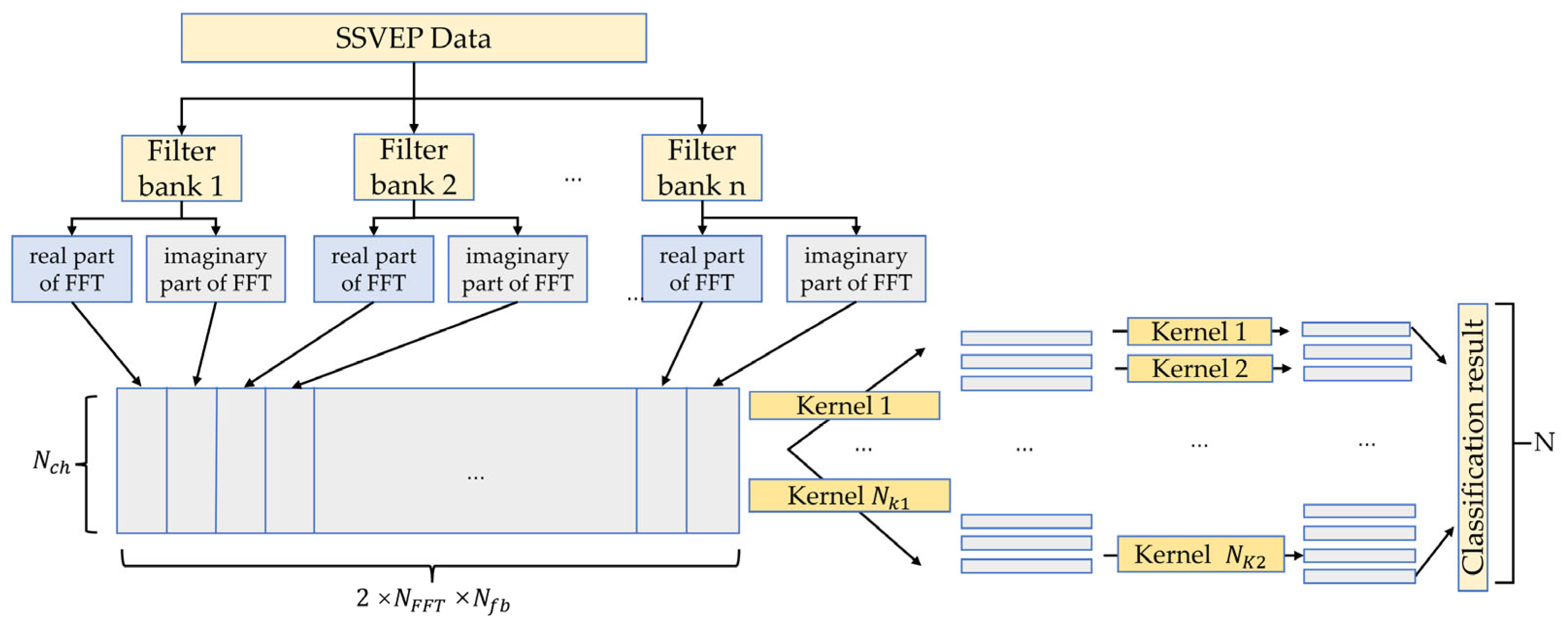

2.3. The FB-CCNN Model

2.3.1. The Filter Bank Component
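A filter bank splits each EEG trial into several sub-bands so that harmonic components can be weighted separately. The sub-band layout used by FB-CCNN is not given in this excerpt; as an assumption, the sketch below uses the FBCCA-style design of Chen et al. [33], in which sub-band m keeps roughly [8m, 88] Hz. To stay NumPy-only, it also swaps the zero-phase IIR filters usually used for such filter banks for an ideal FFT mask. `fft_bandpass` and `filter_bank` are hypothetical names.

```python
import numpy as np


def fft_bandpass(x, fs, lo, hi):
    """Ideal band-pass: zero every FFT bin outside [lo, hi] Hz (last axis is time)."""
    n = x.shape[-1]
    spec = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spec[..., (freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spec, n=n, axis=-1)


def filter_bank(x, fs, n_bands, step=8.0, upper=88.0):
    """Sub-band m (m = 1..n_bands) keeps [m * step, upper] Hz; output shape (n_bands, *x.shape)."""
    return np.stack([fft_bandpass(x, fs, m * step, upper) for m in range(1, n_bands + 1)])


fs = 256
t = np.arange(fs) / fs
x = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 40 * t)
bands = filter_bank(x, fs, n_bands=3)
print(bands.shape)  # (3, 256); bands 2 and 3 no longer contain the 10 Hz component
```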

2.3.2. The Complex Spectrum Convolutional Neural Network Component
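The layer-by-layer layout of FB-CCNN is not reproduced in this excerpt. Assuming a C-CNN-style design (a first convolution whose kernel spans all EEG channels, a second convolution with a kernel size and stride along the spectral axis, then a dense classifier) and assuming the filter-bank sub-bands are stacked as input planes, the sketch below shows how the hyperparameters tuned later (filter bank number, kernel numbers, second-layer kernel size and stride) set the feature-map length and the parameter count. `CnnConfig`, `summarize` and all example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CnnConfig:
    n_filter_banks: int   # sub-bands, stacked as input planes (assumption)
    n_channels: int       # EEG electrodes
    n_features: int       # complex-spectrum length per channel (real + imaginary)
    n_kernels_1: int      # kernels in conv layer 1, each spanning all channels
    n_kernels_2: int      # kernels in conv layer 2
    kernel_size_2: int    # conv layer 2 kernel width along the feature axis
    stride_2: int         # conv layer 2 stride along the feature axis
    n_classes: int        # number of SSVEP targets


def conv_out_len(length: int, kernel: int, stride: int) -> int:
    """Output length of an unpadded ('valid') convolution."""
    return (length - kernel) // stride + 1


def summarize(cfg: CnnConfig) -> dict:
    # Conv 1: kernel (n_channels x 1) over n_filter_banks planes -> (n_kernels_1, 1, n_features)
    params_1 = cfg.n_kernels_1 * (cfg.n_filter_banks * cfg.n_channels + 1)
    # Conv 2: kernel (1 x kernel_size_2) with stride stride_2 -> (n_kernels_2, 1, out_len)
    out_len = conv_out_len(cfg.n_features, cfg.kernel_size_2, cfg.stride_2)
    params_2 = cfg.n_kernels_2 * (cfg.n_kernels_1 * cfg.kernel_size_2 + 1)
    # Dense layer from the flattened feature map to the class scores
    flat = cfg.n_kernels_2 * out_len
    params_3 = cfg.n_classes * (flat + 1)
    return {"conv2_out_len": out_len, "flatten": flat,
            "params": params_1 + params_2 + params_3}


cfg = CnnConfig(n_filter_banks=3, n_channels=8, n_features=220,
                n_kernels_1=16, n_kernels_2=16, kernel_size_2=10,
                stride_2=1, n_classes=12)
print(summarize(cfg))  # {'conv2_out_len': 211, 'flatten': 3376, 'params': 43500}
```

Under this assumed layout, the second-layer kernel size and stride change the size of the flattened feature map and therefore the dense layer, whereas a channel-number-based kernel count would make the model size grow with the electrode count.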

2.4. Artificial Gradient Descent (AGD)

- The filter bank number had a strong negative impact on the test accuracy and should therefore be kept low (below 7).

- The kernel size in the second convolutional layer and the stride affected the test accuracy much more than the kernel numbers in the first and second convolutional layers did.

- The model performed better when the values of and were higher.

- Generally, the model did not perform well when was 7 and, therefore, should be smaller.

- The model had a similar performance when was 1 or 3.
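The 243 models of the relationship analysis are consistent with a full grid of three candidate values for each of five hyperparameters (3^5 = 243), namely the five discussed in the findings above; this reading is our inference. The sketch below enumerates such a grid and summarizes trained-model scores by per-value means, one simple way to expose the trends listed above. Only the values 1, 3 and 7 appear in the source's value table; every other candidate value, the dictionary keys and both helper functions are hypothetical.

```python
from itertools import product

# Three candidate values (lower, mid, higher) per hyperparameter.
# Only 1, 3, 7 appear in the source's value table; the rest are placeholders.
CANDIDATES = {
    "filter_banks": (1, 3, 7),
    "kernels_conv1": (8, 16, 64),
    "kernels_conv2": (8, 16, 64),
    "kernel_size_conv2": (5, 10, 15),
    "stride_conv2": (1, 2, 3),
}


def full_grid(candidates):
    """Every combination of candidate values, as a list of dicts (3**5 = 243 here)."""
    names = list(candidates)
    return [dict(zip(names, combo)) for combo in product(*candidates.values())]


def marginal_means(results):
    """Mean score for each value of each hyperparameter, averaged over all other settings.

    results: list of (config_dict, score) pairs, e.g. test accuracies of trained models.
    """
    table = {}
    for config, score in results:
        for name, value in config.items():
            table.setdefault(name, {}).setdefault(value, []).append(score)
    return {name: {v: sum(s) / len(s) for v, s in by_value.items()}
            for name, by_value in table.items()}


grid = full_grid(CANDIDATES)
print(len(grid))  # 243
```

In practice each `config` would be trained and tested, and `marginal_means` would be fed the resulting `(config, accuracy)` pairs.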

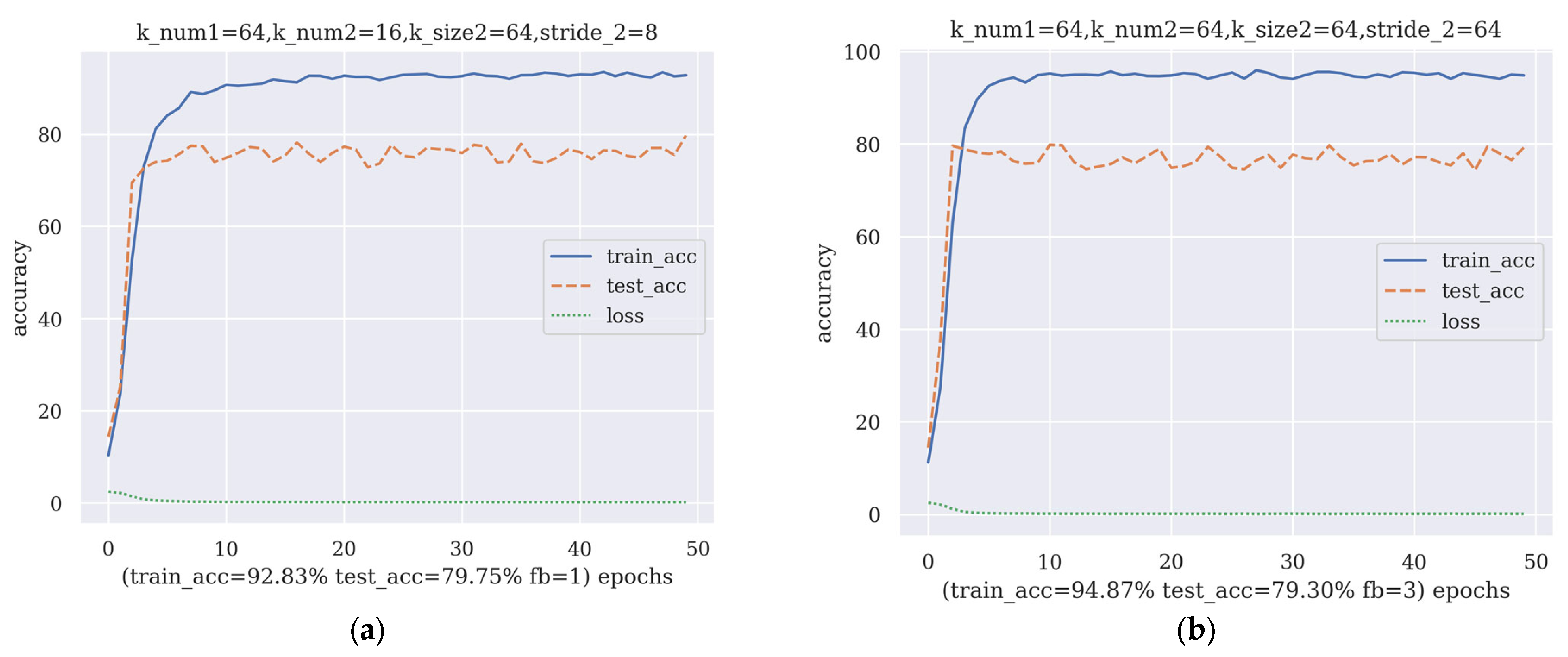

3. Results

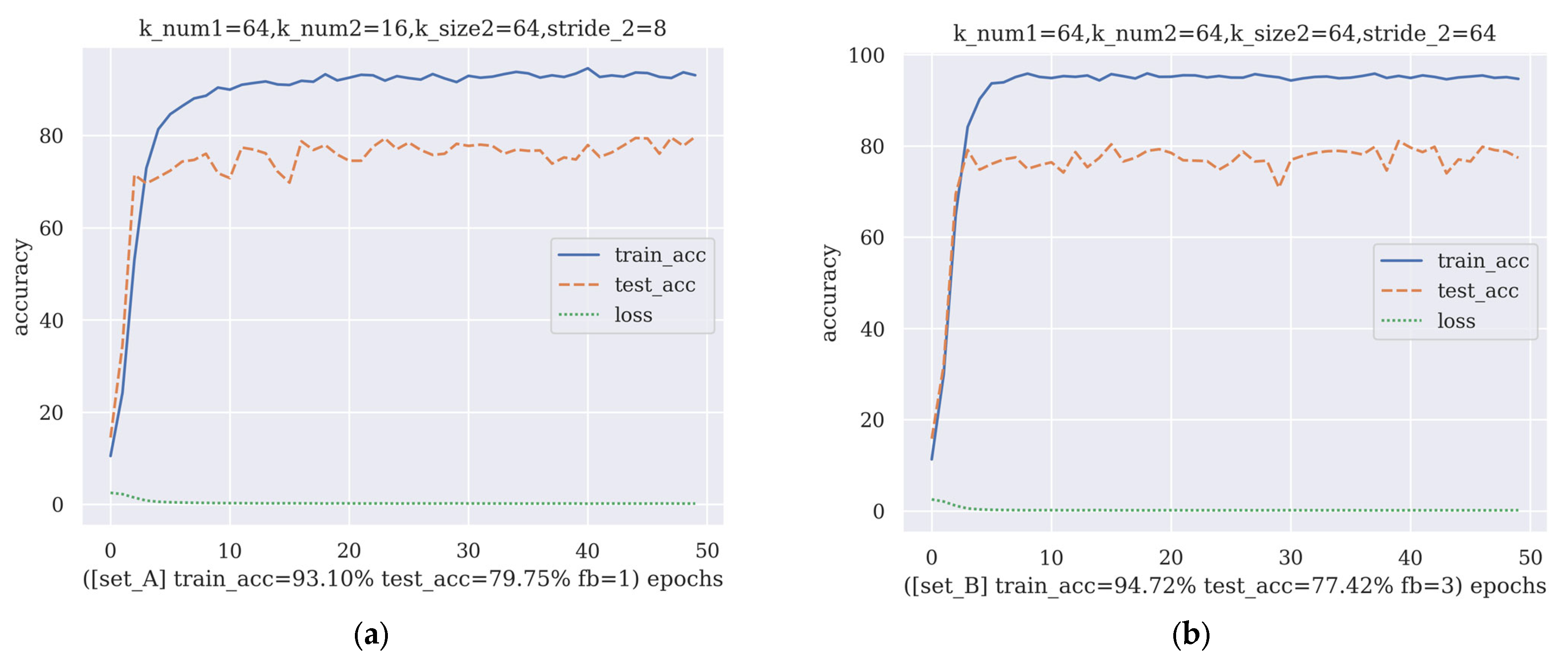

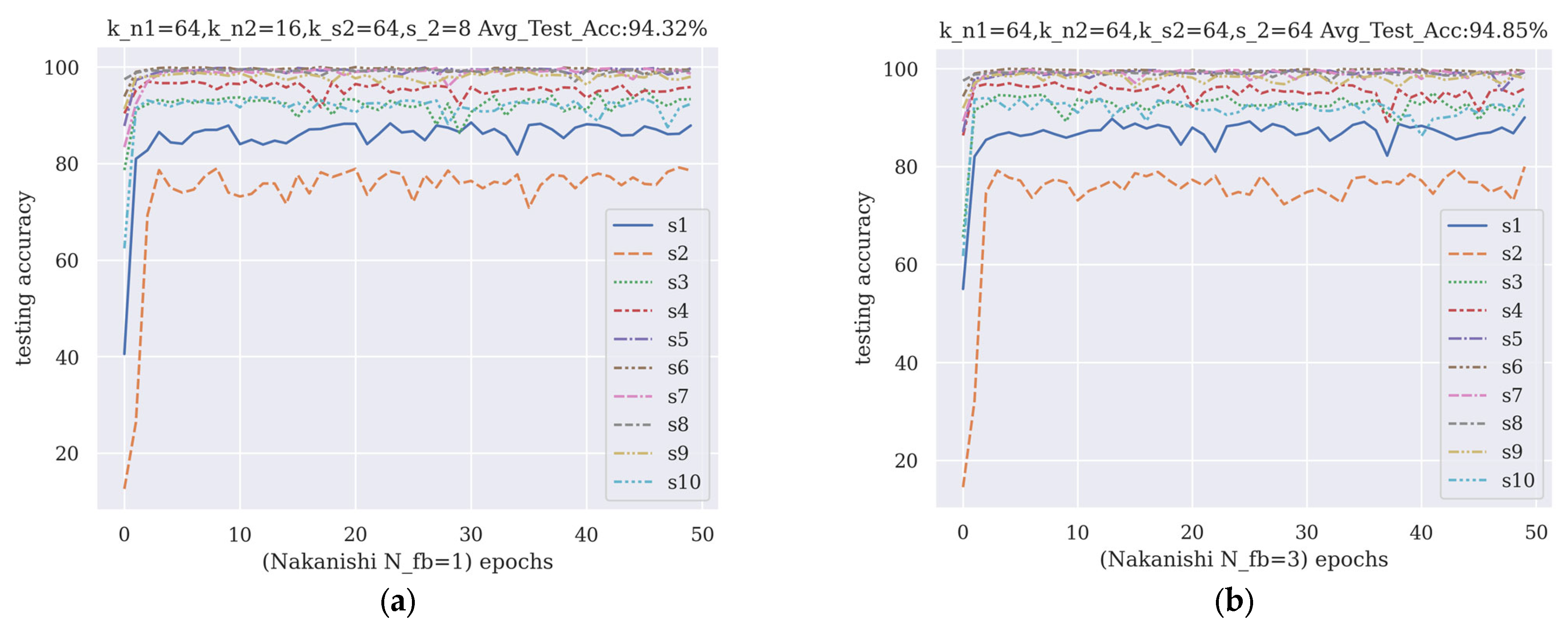

3.1. Validation Using Nakanishi Dataset

3.2. Validation Using Benchmark Dataset

4. Discussion

4.1. Structure Design in FB-CCNN

4.2. Fixed Value Hyperparameters Performed Better Than Channel Number-Based Hyperparameters

4.3. The Value of AGD

4.4. Multiple Individual CNN Models or One CNN Model

4.5. Limitation and Future Works

- Testing and optimizing the performance of the FB-CCNN in inter-subject scenarios and comparing it with other benchmark methods.

- Applying the FB-CCNN to an online BCI system and testing its performance with recruited subjects.

- Adding a proportional similarity-based Openmax classifier [46] to the FB-CCNN to detect whether the subject is watching the stimuli on the screen, thereby turning the synchronous SSVEP BCI system into an asynchronous one and expanding its practicality.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.J.; McFarland, D.J.; Peckham, P.H.; Schalk, G.; Donchin, E.; Quatrano, L.A.; Robinson, C.J.; Vaughan, T.M. Brain-computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173.

- Donchin, E.; Spencer, K.M.; Wijesinghe, R. The mental prosthesis: Assessing the speed of a P300-based brain-computer interface. IEEE Trans. Rehabil. Eng. 2000, 8, 174–179.

- Hwang, H.-J.; Lim, J.-H.; Jung, Y.-J.; Choi, H.; Lee, S.W.; Im, C.-H. Development of an SSVEP-based BCI spelling system adopting a QWERTY-style LED keyboard. J. Neurosci. Methods 2012, 208, 59–65.

- Lopes, A.C.; Pires, G.; Nunes, U. Assisted navigation for a brain-actuated intelligent wheelchair. Robot. Auton. Syst. 2013, 61, 245–258.

- Carlson, T.; Millan, J.d.R. Brain-controlled wheelchairs: A robotic architecture. IEEE Robot. Autom. Mag. 2013, 20, 65–73.

- Xu, D.; Tong, Y.; Dong, X.; Wang, C.; Huo, L.; Li, Y.; Zhang, Q.; Feng, X. A brain-computer interface based semi-autonomous robotic system. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1083–1086.

- Chen, X.; Zhao, B.; Wang, Y.; Gao, X. Combination of high-frequency SSVEP-based BCI and computer vision for controlling a robotic arm. J. Neural Eng. 2019, 16, 026012.

- Peining, P.; Tan, G.; Wai, A.A.P. Evaluation of consumer-grade EEG headsets for BCI drone control. In Proceedings of the IRC Conference on Science, Engineering, and Technology, Singapore, 10–11 August 2017.

- Holm, N.S.; Puthusserypady, S. An improved five class MI based BCI scheme for drone control using filter bank CSP. In Proceedings of the 2019 7th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 18–20 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Frisoli, A.; Loconsole, C.; Leonardis, D.; Banno, F.; Barsotti, M.; Chisari, C.; Bergamasco, M. A new gaze-BCI-driven control of an upper limb exoskeleton for rehabilitation in real-world tasks. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 1169–1179.

- Frolov, A.; Mokienko, O.; Kh, L.R.; Chernikova, L.; Kotov, S.; Turbina, L.; Biryukova, E.; Kondur, A.; Ivanova, G.; Staritsyn, A. Preliminary results of a controlled study of BCI–exoskeleton technology efficacy in patients with poststroke arm paresis. Bull. Russ. State Med. Univ. 2016, 2, 16–23.

- Leeb, R.; Tonin, L.; Rohm, M.; Desideri, L.; Carlson, T.; Millan, J.d.R. Towards independence: A BCI telepresence robot for people with severe motor disabilities. Proc. IEEE 2015, 103, 969–982.

- Spataro, R.; Chella, A.; Allison, B.; Giardina, M.; Sorbello, R.; Tramonte, S.; Guger, C.; La Bella, V. Reaching and grasping a glass of water by locked-in ALS patients through a BCI-controlled humanoid robot. Front. Hum. Neurosci. 2017, 11, 68.

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001.

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523.

- Wolpaw, J.R.; McFarland, D.J.; Neat, G.W.; Forneris, C.A. An EEG-based brain-computer interface for cursor control. Electroencephalogr. Clin. Neurophysiol. 1991, 78, 252–259.

- Cheng, M.; Gao, X.; Gao, S.; Xu, D. Design and implementation of a brain-computer interface with high transfer rates. IEEE Trans. Biomed. Eng. 2002, 49, 1181–1186.

- Başar, E. EEG—Dynamics and evoked potentials in sensory and cognitive processing by the brain. In Dynamics of Sensory and Cognitive Processing by the Brain; Springer: Berlin/Heidelberg, Germany, 1988; pp. 30–55.

- Wang, Y.; Wang, R.; Gao, X.; Hong, B.; Gao, S. A practical VEP-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 234–240.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.

- Apicella, A.; Arpaia, P.; De Benedetto, E.; Donato, N.; Duraccio, L.; Giugliano, S.; Prevete, R. Enhancement of SSVEPs classification in BCI-based wearable instrumentation through machine learning techniques. IEEE Sens. J. 2022, 22, 9087–9094.

- Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. An Analysis of Deep Learning Models in SSVEP-Based BCI: A Survey. Brain Sci. 2023, 13, 483.

- Alzahab, N.A.; Apollonio, L.; Di Iorio, A.; Alshalak, M.; Iarlori, S.; Ferracuti, F.; Monteriù, A.; Porcaro, C. Hybrid deep learning (hDL)-based brain-computer interface (BCI) systems: A systematic review. Brain Sci. 2021, 11, 75.

- Zhang, X.; Yao, L.; Wang, X.; Monaghan, J.; Mcalpine, D.; Zhang, Y. A survey on deep learning based brain computer interface: Recent advances and new frontiers. arXiv 2019, arXiv:1905.04149.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Kwak, N.-S.; Müller, K.-R.; Lee, S.-W. A convolutional neural network for steady state visual evoked potential classification under ambulatory environment. PLoS ONE 2017, 12, e0172578.

- Aznan, N.K.N.; Bonner, S.; Connolly, J.; Al Moubayed, N.; Breckon, T. On the classification of SSVEP-based dry-EEG signals via convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3726–3731.

- Zhao, X.; Du, Y.; Zhang, R. A CNN-based multi-target fast classification method for AR-SSVEP. Comput. Biol. Med. 2022, 141, 105042.

- Podmore, J.J.; Breckon, T.P.; Aznan, N.K.; Connolly, J.D. On the relative contribution of deep convolutional neural networks for SSVEP-based bio-signal decoding in BCI speller applications. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 611–618.

- Nguyen, T.-H.; Chung, W.-Y. A single-channel SSVEP-based BCI speller using deep learning. IEEE Access 2018, 7, 1752–1763.

- Dang, W.; Li, M.; Lv, D.; Sun, X.; Gao, Z. MHLCNN: Multi-harmonic linkage CNN model for SSVEP and SSMVEP signal classification. IEEE Trans. Circuits Syst. II: Express Briefs 2021, 69, 244–248.

- Ravi, A.; Beni, N.H.; Manuel, J.; Jiang, N. Comparing user-dependent and user-independent training of CNN for SSVEP BCI. J. Neural Eng. 2020, 17, 026028.

- Chen, X.; Wang, Y.; Gao, S.; Jung, T.-P.; Gao, X. Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. J. Neural Eng. 2015, 12, 046008.

- Ding, W.; Shan, J.; Fang, B.; Wang, C.; Sun, F.; Li, X. Filter bank convolutional neural network for short time-window steady-state visual evoked potential classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2615–2624.

- Zhao, D.; Wang, T.; Tian, Y.; Jiang, X. Filter bank convolutional neural network for SSVEP classification. IEEE Access 2021, 9, 147129–147141.

- Pan, Y.; Chen, J.; Zhang, Y.; Zhang, Y. An efficient CNN-LSTM network with spectral normalization and label smoothing technologies for SSVEP frequency recognition. J. Neural Eng. 2022, 19, 056014.

- Chen, J.; Zhang, Y.; Pan, Y.; Xu, P.; Guan, C. A Transformer-based deep neural network model for SSVEP classification. arXiv 2022, arXiv:2210.04172.

- Yao, H.; Liu, K.; Deng, X.; Tang, X.; Yu, H. FB-EEGNet: A fusion neural network across multi-stimulus for SSVEP target detection. J. Neurosci. Methods 2022, 379, 109674.

- Bassi, P.R.; Attux, R. FBDNN: Filter banks and deep neural networks for portable and fast brain-computer interfaces. Biomed. Phys. Eng. Express 2022, 8, 035018.

- Nakanishi, M.; Wang, Y.; Wang, Y.-T.; Jung, T.-P. A comparison study of canonical correlation analysis based methods for detecting steady-state visual evoked potentials. PLoS ONE 2015, 10, e0140703.

- Wang, Y.; Chen, X.; Gao, X.; Gao, S. A benchmark dataset for SSVEP-based brain–computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 1746–1752.

- Lin, Z.; Zhang, C.; Wu, W.; Gao, X. Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans. Biomed. Eng. 2006, 53, 2610–2614.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation functions: Comparison of trends in practice and research for deep learning. arXiv 2018, arXiv:1811.03378.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Giusti, E.; Ghio, S.; Oveis, A.H.; Martorella, M. Proportional Similarity-Based Openmax Classifier for Open Set Recognition in SAR Images. Remote Sens. 2022, 14, 4665.

- Hekmatmanesh, A.; Azni, H.M.; Wu, H.; Afsharchi, M.; Li, M.; Handroos, H. Imaginary control of a mobile vehicle using deep learning algorithm: A brain computer interface study. IEEE Access 2021, 10, 20043–20052.

- Li, Y.; Pan, J.; Wang, F.; Yu, Z. A hybrid BCI system combining P300 and SSVEP and its application to wheelchair control. IEEE Trans. Biomed. Eng. 2013, 60, 3156–3166.

- Hayta, Ü.; Irimia, D.C.; Guger, C.; Erkutlu, İ.; Güzelbey, İ.H. Optimizing Motor Imagery Parameters for Robotic Arm Control by Brain-Computer Interface. Brain Sci. 2022, 12, 833.

| Algorithm: Artificial Gradient Descent (AGD) | |||

|---|---|---|---|

| Input: n hyperparameter set including: , , …, | |||

| Output: Optimized n hyperparameters | |||

| Initialization of variables: Assign three values for each of the hyperparameter set that ∈ [], where i [1, 2, …, n], where the range [] covers searching domain for optimal hyperparameter based on experience or estimation | |||

| L1 | For each combination of value in train the model accordingly and calculate the model’s performance , where m [1, 2, …, ], and r [0, 1, …, ], here at round the model’s performance is good enough | ||

| End for | |||

| For each manually selected optimal performance , where m [1, 2, …, ] | |||

| L2 | For each hyperparameter set ∈ [1, 2, …, n] | ||

| If (performance of model by using hyperparameters (, , …, ) < (performance of model by using hyperparameters ), , …, ) < (performance of model by using hyperparameters = 8 , , …, ) do Generate a new set for that | |||

| Else if < do Generate a new set for that | |||

| Else do Generate a new set for that End if | |||

| i = i + 1, Repeat L2 | |||

| r = r + 1, Repeat L1 | |||

| If < < and > or ( > < < ) do Generate a new set for that End if | |||

| End L1 For | |||
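The AGD algorithm table above is only partly legible after extraction, so its exact update formulas cannot be recovered. The sketch below is one possible reading of the loop it describes, under stated assumptions: each round trains every combination of three candidate values per hyperparameter; for each hyperparameter, the scores at its three values (others held at the round's best combination) decide whether its window moves up (scores rising), moves down (scores falling) or shrinks around the mid value (otherwise). The manual optimum selection is replaced by an argmax, and `toy_score` stands in for training and testing an FB-CCNN. All names are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Triple = Tuple[float, float, float]  # (lower, mid, higher) candidate values
Combo = Tuple[float, ...]


def update_triple(t: Triple, p_low: float, p_mid: float, p_high: float) -> Triple:
    """Hypothetical AGD rule for one hyperparameter, given scores at its three values."""
    low, mid, high = t
    if p_low < p_mid < p_high:   # score still rising: move the window up
        return (mid, high, high + (high - mid))
    if p_low > p_mid > p_high:   # score rising downward: move the window down
        return (low - (mid - low), low, mid)
    # Otherwise assume the optimum lies inside the window: shrink it around mid.
    # (Rounding to integers and clamping to valid ranges are omitted here.)
    return ((low + mid) / 2, mid, (mid + high) / 2)


def agd(evaluate: Callable[[Combo], float], triples: List[Triple],
        rounds: int) -> Tuple[Combo, float]:
    best: Combo = ()
    best_score = float("-inf")
    for _ in range(rounds):
        # Train and score every combination of the current values (3**n models per round).
        scores: Dict[Combo, float] = {c: evaluate(c) for c in product(*triples)}
        # The source selects the optimum manually; argmax stands in for that choice.
        round_best = max(scores, key=scores.get)
        if scores[round_best] > best_score:
            best, best_score = round_best, scores[round_best]
        # Compare each hyperparameter's three values with the others held at round_best.
        new_triples = []
        for i, t in enumerate(triples):
            s = [scores[round_best[:i] + (v,) + round_best[i + 1:]] for v in t]
            new_triples.append(update_triple(t, *s))
        triples = new_triples
    return best, best_score


def toy_score(c: Combo) -> float:
    """Stand-in for test accuracy that peaks at (10, 4); not a real training run."""
    return 100 - (c[0] - 10) ** 2 - (c[1] - 4) ** 2


print(agd(toy_score, [(1, 3, 7), (1, 3, 7)], rounds=5))  # ((10.0, 4.0), 100.0)
```

Starting from the values 1, 3 and 7 for both hyperparameters, the windows move toward the optimum and then shrink around it, which is the "descent" behaviour the algorithm's name suggests.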

| Hyperparameter Set () | Lower Value () | Mid Value () | Higher Value () |

|---|---|---|---|

| 1 | 3 | 7 |

| Hyperparameter Set | Hyperparameters | Lower Bound | Mid Value | Higher Bound |

|---|---|---|---|---|

| Set_A (AGD round 2) | ) | Argmax | ||

| Set_B (AGD round 2) | ) | Argmax | ||

| Hyperparameter | One Filter Bank Hyperparameter Set | Three Filter Banks Hyperparameter Set | ||

|---|---|---|---|---|

| Channel Number-Based Representation | Fixed Value Representation | Channel Number-Based Representation | Fixed Value Representation | |

| 1 | 1 | 3 | 3 | |

| 64 | 64 | |||

| 16 | 64 | |||

| 64 | 64 | |||

| 8 | 64 | |||

| Hyper Parameters | Fixed Value Hyperparameter Set | Channel Number-Based Hyperparameter Set | ||||

|---|---|---|---|---|---|---|

| Nakanishi Dataset | Benchmark Dataset | |||||

| 8, 64 | 8, 64 | 8 | 64 | |||

| 1 | 3 | 1 | 3 | 1 | 3 | |

| (64) | (64) | |||||

| 16 | (16) | (64) | ||||

| 64 | (64) | (64) | ||||

| 8 | (8) | (64) | (64) | |||

| Subject | CCA | C-CNN | FB-CNN | FB-CCNN (FB = 1) | FB-CCNN (FB = 3) |
|---|---|---|---|---|---|
| S1 | 29.17 | 75.69 | 91.67 | 87.90 | 90.05 |
| S2 | 26.25 | 51.81 | 57.08 | 78.58 | 80.02 |
| S3 | 59.44 | 93.89 | 97.36 | 93.37 | 92.38 |
| S4 | 80.28 | 98.61 | 98.11 | 95.88 | 95.97 |
| S5 | 52.36 | 99.72 | 99.58 | 99.73 | 99.37 |
| S6 | 87.22 | 99.72 | 99.95 | 99.46 | 99.55 |
| S7 | 69.17 | 92.64 | 98.75 | 98.66 | 99.55 |
| S8 | 96.67 | 99.03 | 99.58 | 99.19 | 99.19 |
| S9 | 66.39 | 97.36 | 97.92 | 98.03 | 98.03 |
| S10 | 65.28 | 90.28 | 91.94 | 92.38 | 94.35 |
| Average | 63.22 ± 22.84 | 89.88 ± 15.22 | 93.19 ± 13.04 | 94.32 ± 6.73 | 94.85 ± 6.18 |
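The "Average" row of the Nakanishi-dataset table above can be checked directly from the per-subject values. As a small worked example, the snippet below recomputes two columns; the sample standard deviation (n − 1 denominator, `statistics.stdev`) reproduces the reported spreads, whereas the population version (`statistics.pstdev`) would give about 21.67 for CCA, which suggests the table reports the sample SD.

```python
from statistics import mean, stdev

# Per-subject accuracies (%) for S1-S10, copied from the Nakanishi-dataset table.
RESULTS = {
    "CCA": [29.17, 26.25, 59.44, 80.28, 52.36, 87.22, 69.17, 96.67, 66.39, 65.28],
    "FB-CCNN (FB = 1)": [87.90, 78.58, 93.37, 95.88, 99.73, 99.46, 98.66, 99.19, 98.03, 92.38],
}

summary = {name: (mean(acc), stdev(acc)) for name, acc in RESULTS.items()}
for name, (m, s) in summary.items():
    print(f"{name}: {m:.2f} ± {s:.2f}")
# CCA: 63.22 ± 22.84
# FB-CCNN (FB = 1): 94.32 ± 6.73
```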

| Subject | M-CNN | C-CNN | FB-CNN | FB-CCNN (FB = 1) | FB-CCNN (FB = 3) | FB-CCNN (FB = 1, channel-based) | FB-CCNN (FB = 3, channel-based) |
|---|---|---|---|---|---|---|---|
| S1 | 71.33 | 73.58 | 85.42 | 80.25 | 81.12 | 74.31 | 82.50 |
| S2 | 78.00 | 88.58 | 92.92 | 86.19 | 85.06 | 78.94 | 85.13 |
| S3 | 84.67 | 89.25 | 93.08 | 84.88 | 86.81 | 74.31 | 74.31 |
| S4 | 83.67 | 90.50 | 94.17 | 96.25 | 97.69 | 83.50 | 83.50 |
| S5 | 79.92 | 87.00 | 93.17 | 86.31 | 90.00 | 66.69 | 81.63 |
| S6 | 70.16 | 80.16 | 83.75 | 67.13 | 76.63 | 63.50 | 76.75 |
| S7 | 50.75 | 73.88 | 75.25 | 73.56 | 76.50 | 51.69 | 64.31 |
| S8 | 52.67 | 61.66 | 67.00 | 56.69 | 72.94 | 48.56 | 54.38 |
| S9 | 61.25 | 68.25 | 71.25 | 74.44 | 76.31 | 69.56 | 72.56 |
| S10 | 69.47 | 78.58 | 91.25 | 90.00 | 94.31 | 70.63 | 84.63 |
| S11 | 34.83 | 38.75 | 43.67 | 61.87 | 53.88 | 49.88 | 48.38 |
| S12 | 83.16 | 86.92 | 86.75 | 72.63 | 88.69 | 73.94 | 84.44 |
| S13 | 64.67 | 72.42 | 82.83 | 80.81 | 74.13 | 66.88 | 51.00 |
| S14 | 78.67 | 81.08 | 84.92 | 96.06 | 96.56 | 63.13 | 91.88 |
| S15 | 51.92 | 64.42 | 61.32 | 58.25 | 74.00 | 54.50 | 63.00 |
| S16 | 56.00 | 72.00 | 77.25 | 79.69 | 84.56 | 70.50 | 84.19 |
| S17 | 54.19 | 70.91 | 72.75 | 70.31 | 75.31 | 67.50 | 43.50 |
| S18 | 51.75 | 62.08 | 65.50 | 35.63 | 57.38 | 43.31 | 58.63 |
| S19 | 33.67 | 36.41 | 43.67 | 80.13 | 81.31 | 72.75 | 79.75 |
| S20 | 69.33 | 78.00 | 87.25 | 85.88 | 85.69 | 72.38 | 75.88 |
| S21 | 78.38 | 85.42 | 86.50 | 74.13 | 77.50 | 71.00 | 67.19 |
| S22 | 88.33 | 91.42 | 94.67 | 93.56 | 96.69 | 88.13 | 92.81 |
| S23 | 74.67 | 77.83 | 83.92 | 87.00 | 87.13 | 69.63 | 66.50 |
| S24 | 78.29 | 81.92 | 85.25 | 81.38 | 82.31 | 67.81 | 79.44 |
| S25 | 76.75 | 80.00 | 81.33 | 57.19 | 69.69 | 47.88 | 56.88 |
| S26 | 82.25 | 84.92 | 85.17 | 73.00 | 83.56 | 54.88 | 65.63 |
| S27 | 88.16 | 94.16 | 94.25 | 86.13 | 90.50 | 78.75 | 86.06 |
| S28 | 73.16 | 84.08 | 90.50 | 85.06 | 92.19 | 68.44 | 88.69 |
| S29 | 32.41 | 46.75 | 49.50 | 43.88 | 50.25 | 20.88 | 26.06 |
| S30 | 67.67 | 83.00 | 81.50 | 78.69 | 83.81 | 72.19 | 75.19 |
| S31 | 87.13 | 96.50 | 96.58 | 72.75 | 97.44 | 67.50 | 88.56 |
| S32 | 91.92 | 94.00 | 95.92 | 99.25 | 98.56 | 95.13 | 99.25 |
| S33 | 22.58 | 28.72 | 36.25 | 27.50 | 32.13 | 10.81 | 13.56 |
| S34 | 64.48 | 76.75 | 76.00 | 80.50 | 82.06 | 69.31 | 75.06 |
| S35 | 74.58 | 74.08 | 76.17 | 84.44 | 87.50 | 73.31 | 83.25 |
| Average | 67.45 ± 17.62 | 75.26 ± 16.43 | 79.05 ± 15.86 | 75.47 ± 16.40 | 80.58 ± 14.43 | 64.92 ± 16.62 | 71.56 ± 18.69 |
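To make the fixed-value versus channel-number-based comparison in the Benchmark-dataset table above concrete, the snippet below recomputes the two FB = 3 column averages from the per-subject values and counts the subjects for whom the fixed-value hyperparameter set scores higher. It only re-derives numbers already in the table; no statistical test is implied.

```python
from statistics import mean

# FB = 3 accuracies (%) for S1-S35, copied from the Benchmark-dataset table.
FIXED = [81.12, 85.06, 86.81, 97.69, 90.00, 76.63, 76.50, 72.94, 76.31, 94.31,
         53.88, 88.69, 74.13, 96.56, 74.00, 84.56, 75.31, 57.38, 81.31, 85.69,
         77.50, 96.69, 87.13, 82.31, 69.69, 83.56, 90.50, 92.19, 50.25, 83.81,
         97.44, 98.56, 32.13, 82.06, 87.50]
CHANNEL_BASED = [82.50, 85.13, 74.31, 83.50, 81.63, 76.75, 64.31, 54.38, 72.56, 84.63,
                 48.38, 84.44, 51.00, 91.88, 63.00, 84.19, 43.50, 58.63, 79.75, 75.88,
                 67.19, 92.81, 66.50, 79.44, 56.88, 65.63, 86.06, 88.69, 26.06, 75.19,
                 88.56, 99.25, 13.56, 75.06, 83.25]

mean_fixed, mean_ch = mean(FIXED), mean(CHANNEL_BASED)
wins = sum(f > c for f, c in zip(FIXED, CHANNEL_BASED))
print(f"mean fixed: {mean_fixed:.2f}, mean channel-based: {mean_ch:.2f}")
print(f"fixed-value set higher for {wins} of {len(FIXED)} subjects")
# mean fixed: 80.58, mean channel-based: 71.56
# fixed-value set higher for 30 of 35 subjects
```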

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. FB-CCNN: A Filter Bank Complex Spectrum Convolutional Neural Network with Artificial Gradient Descent Optimization. Brain Sci. 2023, 13, 780. https://doi.org/10.3390/brainsci13050780
