Audiovisual Emotional Congruency Modulates the Stimulus-Driven Cross-Modal Spread of Attention

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

2.2. Assessment Experiment

2.3. Experimental Materials and Procedures

2.4. Electrophysiological Recording and Preprocessing

2.5. Data Analysis

3. Results

3.1. Behavioral Results

3.2. EEG Results

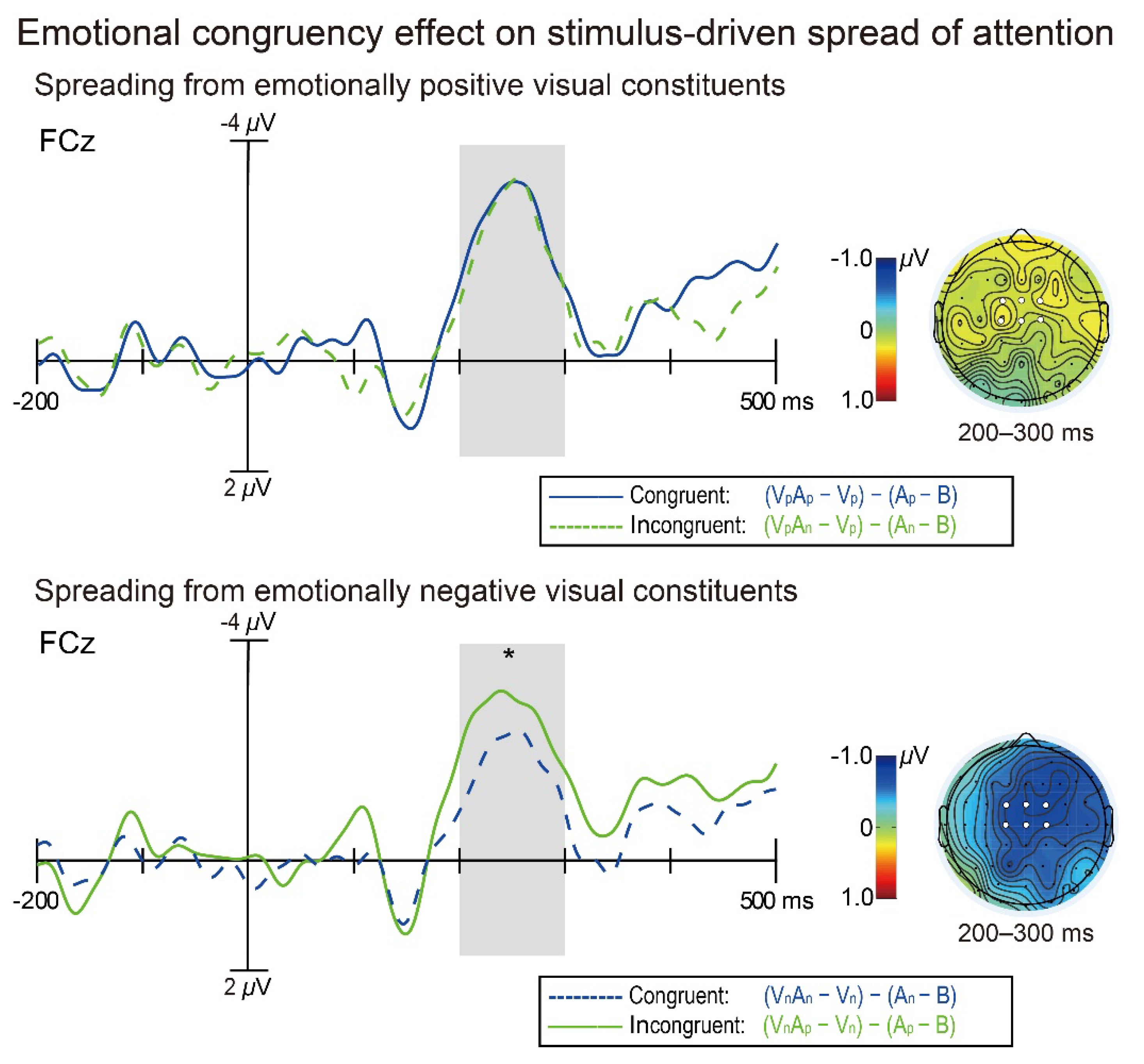

3.2.1. The Stimulus-Driven Spread of Attention Is Modulated by Audiovisual Emotional Congruency

3.2.2. Post Hoc Exploratory Analyses

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Talsma, D.; Senkowski, D.; Soto-Faraco, S.; Woldorff, M.G. The Multifaceted Interplay between Attention and Multisensory Integration. Trends Cogn. Sci. 2010, 14, 400–410.

- De Meo, R.; Murray, M.M.; Clarke, S.; Matusz, P.J. Top-down Control and Early Multisensory Processes: Chicken vs. Egg. Front. Integr. Neurosci. 2015, 9, 17.

- Tang, X.; Wu, J.; Shen, Y. The Interactions of Multisensory Integration with Endogenous and Exogenous Attention. Neurosci. Biobehav. Rev. 2016, 61, 208–224.

- Macaluso, E.; Noppeney, U.; Talsma, D.; Vercillo, T.; Hartcher-O’Brien, J.; Adam, R. The Curious Incident of Attention in Multisensory Integration: Bottom-up vs. Top-Down. Multisens. Res. 2016, 29, 557–583.

- Senkowski, D.; Talsma, D.; Herrmann, C.S.; Woldorff, M.G. Multisensory Processing and Oscillatory Gamma Responses: Effects of Spatial Selective Attention. Exp. Brain Res. 2005, 166, 411–426.

- Li, Q.; Wu, J.; Touge, T. Audiovisual Interaction Enhances Auditory Detection in Late Stage: An Event-Related Potential Study. NeuroReport 2010, 21, 173–178.

- Talsma, D.; Woldorff, M.G. Selective Attention and Multisensory Integration: Multiple Phases of Effects on the Evoked Brain Activity. J. Cogn. Neurosci. 2005, 17, 1098–1114.

- Paszkiel, S. Analysis and Classification of EEG Signals for Brain–Computer Interfaces. In Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2020; Volume 852, ISBN 978-3-030-30580-2.

- Luck, S.J. An Introduction to the Event-Related Potential Technique, 2nd ed.; The MIT Press: Cambridge, MA, USA, 2014; ISBN 978-0-262-52585-5.

- Busse, L.; Roberts, K.C.; Crist, R.E.; Weissman, D.H.; Woldorff, M.G. The Spread of Attention across Modalities and Space in a Multisensory Object. Proc. Natl. Acad. Sci. USA 2005, 102, 18751–18756.

- Talsma, D.; Doty, T.J.; Woldorff, M.G. Selective Attention and Audiovisual Integration: Is Attending to Both Modalities a Prerequisite for Early Integration? Cereb. Cortex 2007, 17, 679–690.

- Molholm, S.; Martinez, A.; Shpaner, M.; Foxe, J.J. Object-Based Attention Is Multisensory: Co-Activation of an Object’s Representations in Ignored Sensory Modalities. Eur. J. Neurosci. 2007, 26, 499–509.

- Fiebelkorn, I.C.; Foxe, J.J.; Molholm, S. Dual Mechanisms for the Cross-Sensory Spread of Attention: How Much Do Learned Associations Matter? Cereb. Cortex 2010, 20, 109–120.

- Fiebelkorn, I.C.; Foxe, J.J.; Schwartz, T.H.; Molholm, S. Staying within the Lines: The Formation of Visuospatial Boundaries Influences Multisensory Feature Integration. Eur. J. Neurosci. 2010, 31, 1737–1743.

- Hansen, J.C.; Hillyard, S.A. Endogenous Brain Potentials Associated with Selective Auditory Attention. Electroencephalogr. Clin. Neurophysiol. 1980, 49, 277–290.

- Näätänen, R. Processing Negativity: An Evoked-Potential Reflection of Selective Attention. Psychol. Bull. 1982, 92, 605–640.

- Singhal, A.; Fowler, B. The Differential Effects of Sternberg Short- and Long-Term Memory Scanning on the Late Nd and P300 in a Dual-Task Paradigm. Cogn. Brain Res. 2004, 21, 124–132.

- Zimmer, U.; Itthipanyanan, S.; Grent-’t-Jong, T.; Woldorff, M.G. The Electrophysiological Time Course of the Interaction of Stimulus Conflict and the Multisensory Spread of Attention. Eur. J. Neurosci. 2010, 31, 1744–1754.

- Zimmer, U.; Roberts, K.C.; Harshbarger, T.B.; Woldorff, M.G. Multisensory Conflict Modulates the Spread of Visual Attention across a Multisensory Object. NeuroImage 2010, 52, 606–616.

- Donohue, S.E.; Roberts, K.C.; Grent-’t-Jong, T.; Woldorff, M.G. The Cross-Modal Spread of Attention Reveals Differential Constraints for the Temporal and Spatial Linking of Visual and Auditory Stimulus Events. J. Neurosci. 2011, 31, 7982–7990.

- Zhao, S.; Feng, C.; Liao, Y.; Huang, X.; Feng, W. Attentional Blink Suppresses Both Stimulus-driven and Representation-driven Cross-modal Spread of Attention. Psychophysiology 2021, 58, e13761.

- Zhao, S.; Li, Y.; Wang, C.; Feng, C.; Feng, W. Updating the Dual-mechanism Model for Cross-sensory Attentional Spreading: The Influence of Space-based Visual Selective Attention. Hum. Brain Mapp. 2021, 42, 6038–6052.

- Klasen, M.; Chen, Y.-H.; Mathiak, K. Multisensory Emotions: Perception, Combination and Underlying Neural Processes. Rev. Neurosci. 2012, 23, 381–392.

- Kokinous, J.; Kotz, S.A.; Tavano, A.; Schröger, E. The Role of Emotion in Dynamic Audiovisual Integration of Faces and Voices. Soc. Cogn. Affect. Neurosci. 2015, 10, 713–720.

- Zinchenko, A.; Kanske, P.; Obermeier, C.; Schröger, E.; Kotz, S.A. Emotion and Goal-Directed Behavior: ERP Evidence on Cognitive and Emotional Conflict. Soc. Cogn. Affect. Neurosci. 2015, 10, 1577–1587.

- Zinchenko, A.; Obermeier, C.; Kanske, P.; Schröger, E.; Kotz, S.A. Positive Emotion Impedes Emotional but Not Cognitive Conflict Processing. Cogn. Affect. Behav. Neurosci. 2017, 17, 665–677.

- Föcker, J.; Röder, B. Event-Related Potentials Reveal Evidence for Late Integration of Emotional Prosody and Facial Expression in Dynamic Stimuli: An ERP Study. Multisens. Res. 2019, 32, 473–497.

- Wang, Z.; Chen, M.; Goerlich, K.S.; Aleman, A.; Xu, P.; Luo, Y. Deficient Auditory Emotion Processing but Intact Emotional Multisensory Integration in Alexithymia. Psychophysiology 2021, 58, e13806.

- Campbell, J.I.D.; Thompson, V.A. MorePower 6.0 for ANOVA with Relational Confidence Intervals and Bayesian Analysis. Behav. Res. Methods 2012, 44, 1255–1265.

- Bai, L.; Ma, H.; Huang, Y.-X. The Development of Native Chinese Affective Picture System–A Pretest in 46 College Students. Chin. Ment. Health J. 2005, 19, 712–719.

- Lang, P.J.; Greenwald, M.K.; Bradley, M.M.; Hamm, A.O. Looking at Pictures: Affective, Facial, Visceral, and Behavioral Reactions. Psychophysiology 1993, 30, 261–273.

- Baucom, L.B.; Wedell, D.H.; Wang, J.; Blitzer, D.N.; Shinkareva, S.V. Decoding the Neural Representation of Affective States. NeuroImage 2012, 59, 718–727.

- Shinkareva, S.V.; Wang, J.; Kim, J.; Facciani, M.J.; Baucom, L.B.; Wedell, D.H. Representations of Modality-Specific Affective Processing for Visual and Auditory Stimuli Derived from Functional Magnetic Resonance Imaging Data. Hum. Brain Mapp. 2014, 35, 3558–3568.

- Bradley, M.M.; Lang, P.J. International Affective Digitized Sounds (IADS): Stimuli, Instruction Manual and Affective Ratings (Tech. Rep. No. B-2); University of Florida: Gainesville, FL, USA, 1999.

- Peirce, J.; Gray, J.R.; Simpson, S.; MacAskill, M.; Höchenberger, R.; Sogo, H.; Kastman, E.; Lindeløv, J.K. PsychoPy2: Experiments in Behavior Made Easy. Behav. Res. Methods 2019, 51, 195–203.

- Bradley, M.M.; Lang, P.J. Measuring Emotion: The Self-Assessment Manikin and the Semantic Differential. J. Behav. Ther. Exp. Psychiatry 1994, 25, 49–59.

- Marchewka, A.; Żurawski, Ł.; Jednoróg, K.; Grabowska, A. The Nencki Affective Picture System (NAPS): Introduction to a Novel, Standardized, Wide-Range, High-Quality, Realistic Picture Database. Behav. Res. Methods 2014, 46, 596–610.

- Bertelson, P.; Aschersleben, G. Automatic Visual Bias of Perceived Auditory Location. Psychon. Bull. Rev. 1998, 5, 482–489.

- Zhao, S.; Wang, C.; Feng, C.; Wang, Y.; Feng, W. The Interplay between Audiovisual Temporal Synchrony and Semantic Congruency in the Cross-modal Boost of the Visual Target Discrimination during the Attentional Blink. Hum. Brain Mapp. 2022, 43, 2478–2494.

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Lopez-Calderon, J.; Luck, S.J. ERPLAB: An Open-Source Toolbox for the Analysis of Event-Related Potentials. Front. Hum. Neurosci. 2014, 8, 213.

- Walter, W.G.; Cooper, R.; Aldridge, V.J.; McCallum, W.C.; Winter, A.L. Contingent Negative Variation: An Electric Sign of Sensori-Motor Association and Expectancy in the Human Brain. Nature 1964, 203, 380–384.

- Luck, S.J.; Gaspelin, N. How to Get Statistically Significant Effects in Any ERP Experiment (and Why You Shouldn’t). Psychophysiology 2017, 54, 146–157.

- Zeelenberg, R.; Bocanegra, B.R. Auditory Emotional Cues Enhance Visual Perception. Cognition 2010, 115, 202–206.

- Smith, N.K.; Cacioppo, J.T.; Larsen, J.T.; Chartrand, T.L. May I Have Your Attention, Please: Electrocortical Responses to Positive and Negative Stimuli. Neuropsychologia 2003, 41, 171–183.

- Delplanque, S.; Lavoie, M.E.; Hot, P.; Silvert, L.; Sequeira, H. Modulation of Cognitive Processing by Emotional Valence Studied through Event-Related Potentials in Humans. Neurosci. Lett. 2004, 356, 1–4.

- Carretié, L.; Hinojosa, J.A.; López-Martín, S.; Tapia, M. An Electrophysiological Study on the Interaction between Emotional Content and Spatial Frequency of Visual Stimuli. Neuropsychologia 2007, 45, 1187–1195.

- Luo, W.; Feng, W.; He, W.; Wang, N.-Y.; Luo, Y.-J. Three Stages of Facial Expression Processing: ERP Study with Rapid Serial Visual Presentation. NeuroImage 2010, 49, 1857–1867.

- Zhang, D.; He, W.; Wang, T.; Luo, W.; Zhu, X.; Gu, R.; Li, H.; Luo, Y. Three Stages of Emotional Word Processing: An ERP Study with Rapid Serial Visual Presentation. Soc. Cogn. Affect. Neurosci. 2014, 9, 1897–1903.

- Keil, A.; Bradley, M.M.; Hauk, O.; Rockstroh, B.; Elbert, T.; Lang, P.J. Large-Scale Neural Correlates of Affective Picture Processing. Psychophysiology 2002, 39, 641–649.

- De Cesarei, A.; Codispoti, M. When Does Size Not Matter? Effects of Stimulus Size on Affective Modulation. Psychophysiology 2006, 43, 207–215.

- Donohue, S.E.; Todisco, A.E.; Woldorff, M.G. The Rapid Distraction of Attentional Resources toward the Source of Incongruent Stimulus Input during Multisensory Conflict. J. Cogn. Neurosci. 2013, 25, 623–635.

- Lin, Y.; Ding, H.; Zhang, Y. Unisensory and Multisensory Stroop Effects Modulate Gender Differences in Verbal and Nonverbal Emotion Perception. J. Speech Lang. Hear. Res. 2021, 64, 4439–4457.

- Lin, Y.; Ding, H.; Zhang, Y. Gender Differences in Identifying Facial, Prosodic, and Semantic Emotions Show Category- and Channel-Specific Effects Mediated by Encoder’s Gender. J. Speech Lang. Hear. Res. 2021, 64, 2941–2955.

- Kuznetsova, A.; Brockhoff, P.B.; Christensen, R.H.B. lmerTest Package: Tests in Linear Mixed Effects Models. J. Stat. Softw. 2017, 82, 1–26.

- Lenth, R. emmeans: Estimated Marginal Means, aka Least-Squares Means. R Package. 2017. Available online: https://rdrr.io/cran/emmeans/man/emmeans-package.html (accessed on 1 September 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, M.; Zhao, S.; Yu, J.; Leng, X.; Zhai, M.; Feng, C.; Feng, W. Audiovisual Emotional Congruency Modulates the Stimulus-Driven Cross-Modal Spread of Attention. Brain Sci. 2022, 12, 1229. https://doi.org/10.3390/brainsci12091229