The Effects of Spatial Attention Focus and Visual Awareness on the Processing of Fearful Faces: An ERP Study

Abstract

1. Introduction

1.1. The Role of Spatial Attention Focus in Emotion Processing

1.2. The Role of Awareness in Emotion Processing

2. Materials and Method

2.1. Participants

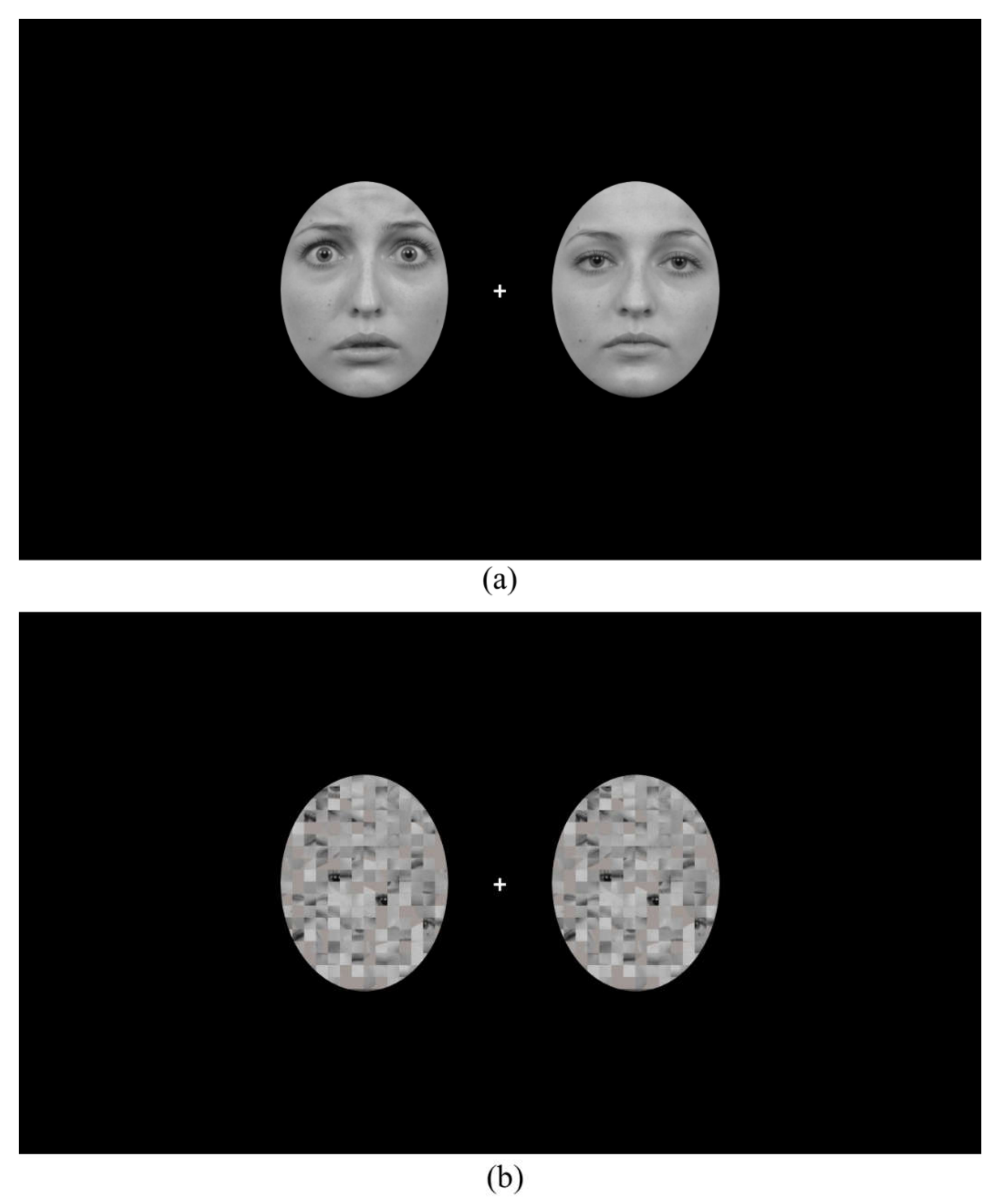

2.2. Apparatus and Stimuli

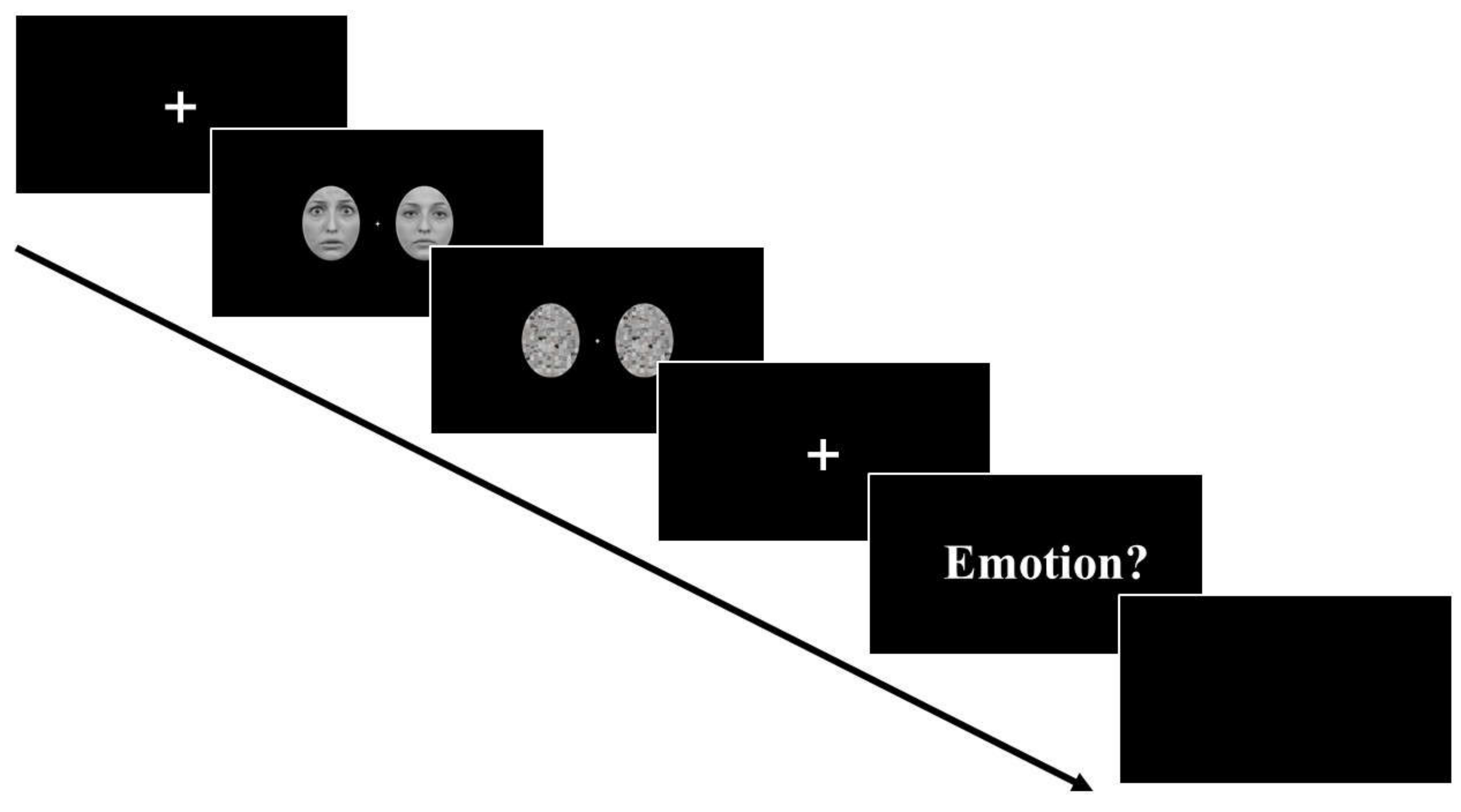

2.3. Procedure

2.4. EEG Recording and Pre-Processing

2.5. ERP Data Analysis

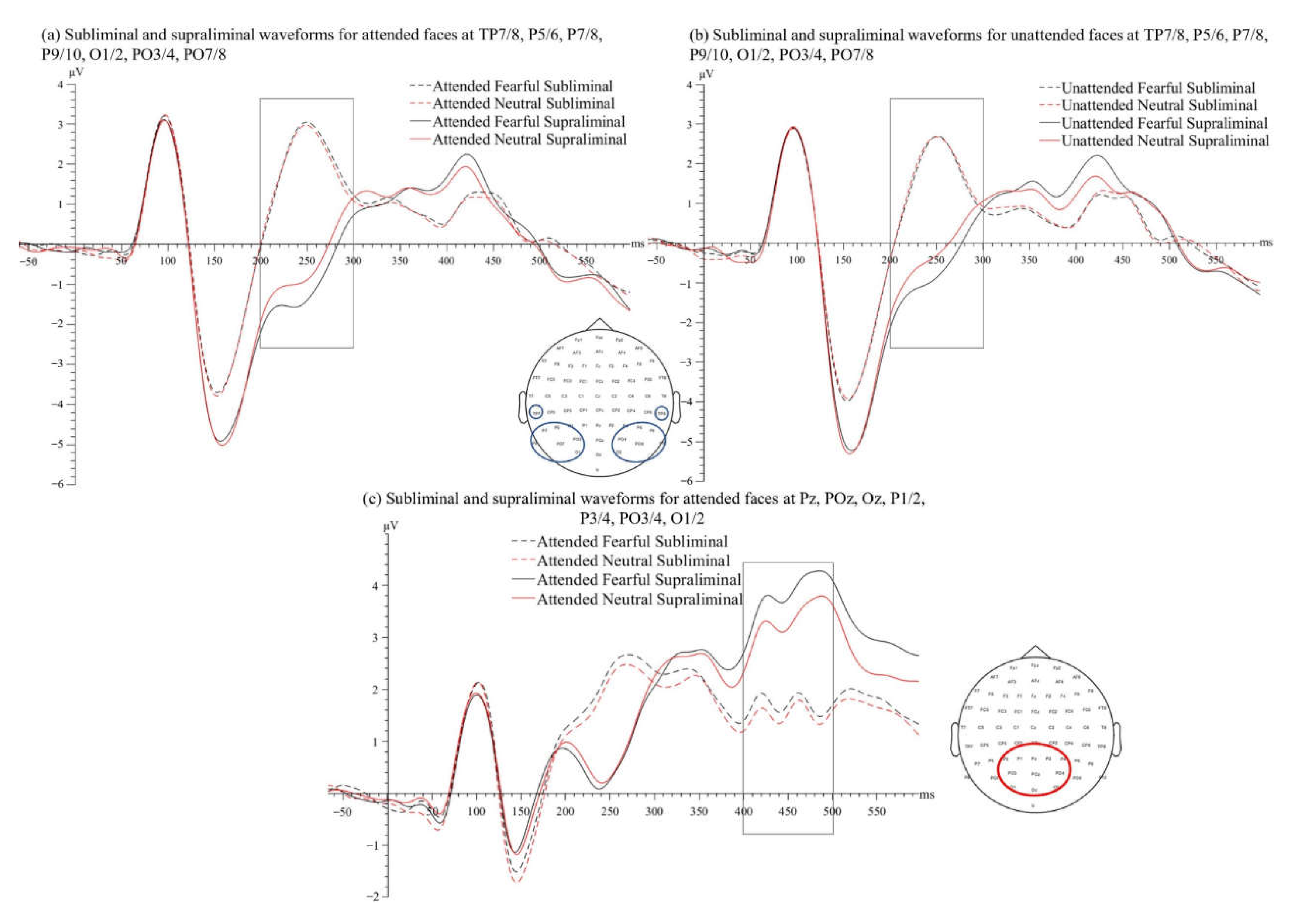

2.5.1. VAN and P3

2.5.2. N170

3. Results

3.1. Behavioural Results

3.2. ERP Amplitudes

3.2.1. VAN Time Window (200–300 ms)

3.2.2. P3 Time Window (400–500 ms)

3.2.3. N170 Time Window (130–190 ms)

4. Discussion

4.1. VAN Does Not Require Spatial Attention Focus but Can Be Enhanced by It

4.2. Early Emotion Processing Needs Awareness but Not Spatial Attention

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Compton, R.J. The interface between emotion and attention: A review of evidence from psychology and neuroscience. Behav. Cogn. Neurosci. Rev. 2003, 2, 115–129.

- Schindler, S.; Bublatzky, F. Attention and emotion: An integrative review of emotional face processing as a function of attention. Cortex 2020.

- Yiend, J. The effects of emotion on attention: A review of attentional processing of emotional information. Cogn. Emot. 2010, 24, 3–47.

- Pizzagalli, D.; Regard, M.; Lehmann, D. Rapid emotional face processing in the human right and left brain hemispheres: An ERP study. Neuroreport 1999, 10, 2691–2698.

- Eimer, M.; Holmes, A.; McGlone, F.P. The role of spatial attention in the processing of facial expression: An ERP study of rapid brain responses to six basic emotions. Cogn. Affect. Behav. Neurosci. 2003, 3, 97–110.

- Holmes, A.; Vuilleumier, P.; Eimer, M. The processing of emotional facial expression is gated by spatial attention: Evidence from event-related brain potentials. Cogn. Brain Res. 2003, 16, 174–184.

- Huang, J.; Zhou, R.; Hu, S. Effects on automatic attention due to exposure to pictures of emotional faces while performing Chinese word judgment tasks. PLoS ONE 2013, 8, e75386.

- Doallo, S.; Cadaveira, F.; Holguín, S.R. Time course of attentional modulations on automatic emotional processing. Neurosci. Lett. 2007, 418, 111–116.

- Doallo, S.; Holguín, S.R.; Cadaveira, F. Attentional load affects automatic emotional processing: Evidence from event-related potentials. Neuroreport 2006, 17, 1797–1801.

- Del Zotto, M.D.; Pegna, A.J. Processing of masked and unmasked emotional faces under different attentional conditions: An electrophysiological investigation. Front. Psychol. 2015, 6, 1691.

- Pegna, A.J.; Darque, A.; Berrut, C.; Khateb, A. Early ERP modulation for task-irrelevant subliminal faces. Front. Psychol. 2011, 2, 88.

- Pegna, A.J.; Landis, T.; Khateb, A. Electrophysiological evidence for early non-conscious processing of fearful facial expressions. Int. J. Psychophysiol. 2008, 70, 127–136.

- Förster, J.; Koivisto, M.; Revonsuo, A. ERP and MEG correlates of visual consciousness: The second decade. Conscious. Cogn. 2020, 80, 102917.

- Dehaene, S. Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts; Penguin: London, UK, 2014.

- Cohen, M.A.; Ortego, K.; Kyroudis, A.; Pitts, M. Distinguishing the neural correlates of perceptual awareness and postperceptual processing. J. Neurosci. 2020, 40, 4925–4935.

- Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 2007, 118, 2128–2148.

- Railo, H.; Koivisto, M.; Revonsuo, A. Tracking the processes behind conscious perception: A review of event-related potential correlates of visual consciousness. Conscious. Cogn. 2011, 20, 972–983.

- Wilenius, M.E.; Revonsuo, A.T. Timing of the earliest ERP correlate of visual awareness. Psychophysiology 2007, 44, 703–710.

- Bola, M.; Paź, M.; Doradzińska, Ł.; Nowicka, A. The self-face captures attention without consciousness: Evidence from the N2pc ERP component analysis. Psychophysiology 2021, 58, e13759.

- Qiu, Z.; Becker, S.I.; Pegna, A.J. Spatial attention shifting to fearful faces depends on visual awareness in attentional blink: An ERP study. Neuropsychologia 2022, 172, 108283.

- Busch, N.A.; Fründ, I.; Herrmann, C.S. Electrophysiological evidence for different types of change detection and change blindness. J. Cogn. Neurosci. 2010, 22, 1852–1869.

- Qiu, Z.; Becker, S.I.; Pegna, A.J. Spatial attention shifting to emotional faces is contingent on awareness and task relevancy. Cortex 2022, 151, 30–48.

- Koivisto, M.; Kainulainen, P.; Revonsuo, A. The relationship between awareness and attention: Evidence from ERP responses. Neuropsychologia 2009, 47, 2891–2899.

- Lamme, V.A.; Roelfsema, P.R. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 2000, 23, 571–579.

- Lamme, V.A.; Zipser, K.; Spekreijse, H. Masking interrupts figure-ground signals in V1. J. Cogn. Neurosci. 2002, 14, 1044–1053.

- Campbell, J.I.; Thompson, V.A. MorePower 6.0 for ANOVA with relational confidence intervals and Bayesian analysis. Behav. Res. Methods 2012, 44, 1255–1265.

- Peirce, J.W.; Gray, J.R.; Simpson, S.; MacAskill, M.R.; Höchenberger, R.; Sogo, H.; Kastman, E.; Lindeløv, J. PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 2019, 51, 195–203.

- Goeleven, E.; De Raedt, R.; Leyman, L.; Verschuere, B. The Karolinska directed emotional faces: A validation study. Cogn. Emot. 2008, 22, 1094–1118.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Lopez-Calderon, J.; Luck, S.J. ERPLAB: An open-source toolbox for the analysis of event-related potentials. Front. Hum. Neurosci. 2014, 8, 213.

- Groppe, D.M.; Urbach, T.P.; Kutas, M. Mass univariate analysis of event-related brain potentials/fields I: A critical tutorial review. Psychophysiology 2011, 48, 1711–1725.

- Donaldson, W. Measuring recognition memory. J. Exp. Psychol. Gen. 1992, 121, 275.

- Hautus, M.J.; Macmillan, N.A.; Creelman, C.D. Detection Theory: A User’s Guide; Routledge: London, UK, 2021.

- Eimer, M. Mechanisms of visuospatial attention: Evidence from event-related brain potentials. Vis. Cogn. 1998, 5, 257–286.

- Luck, S.J.; Hillyard, S.A. Electrophysiological correlates of feature analysis during visual search. Psychophysiology 1994, 31, 291–308.

- Koch, C.; Massimini, M.; Boly, M.; Tononi, G. Neural correlates of consciousness: Progress and problems. Nat. Rev. Neurosci. 2016, 17, 307–321.

- Koivisto, M.; Grassini, S. Neural processing around 200 ms after stimulus-onset correlates with subjective visual awareness. Neuropsychologia 2016, 84, 235–243.

- Pitts, M.A.; Metzler, S.; Hillyard, S.A. Isolating neural correlates of conscious perception from neural correlates of reporting one’s perception. Front. Psychol. 2014, 5, 1078.

- Koivisto, M.; Ruohola, M.; Vahtera, A.; Lehmusvuo, T.; Intaite, M. The effects of working memory load on visual awareness and its electrophysiological correlates. Neuropsychologia 2018, 120, 86–96.

- Dembski, C.; Koch, C.; Pitts, M. Perceptual awareness negativity: A physiological correlate of sensory consciousness. Trends Cogn. Sci. 2021, 25, 660–670.

- Lamme, V.A. Why visual attention and awareness are different. Trends Cogn. Sci. 2003, 7, 12–18.

- Lamme, V.A. How neuroscience will change our view on consciousness. Cogn. Neurosci. 2010, 1, 204–220.

- Revonsuo, A.; Koivisto, M. Electrophysiological evidence for phenomenal consciousness. Cogn. Neurosci. 2010, 1, 225–227.

- Dellert, T.; Müller-Bardorff, M.; Schlossmacher, I.; Pitts, M.; Hofmann, D.; Bruchmann, M.; Straube, T. Dissociating the neural correlates of consciousness and task relevance in face perception using simultaneous EEG-fMRI. J. Neurosci. 2021, 41, 7864–7875.

- Shafto, J.P.; Pitts, M.A. Neural signatures of conscious face perception in an inattentional blindness paradigm. J. Neurosci. 2015, 35, 10940–10948.

- Koivisto, M.; Revonsuo, A. Electrophysiological correlates of visual consciousness and selective attention. Neuroreport 2007, 18, 753–756.

- Pazo-Álvarez, P.; Roca-Fernández, A.; Gutiérrez-Domínguez, F.J.; Amenedo, E. Attentional modulation of change detection ERP components by peripheral retro-cueing. Front. Hum. Neurosci. 2017, 11, 76.

- Shin, K.; Stolte, M.; Chong, S.C. The effect of spatial attention on invisible stimuli. Atten. Percept. Psychophys. 2009, 71, 1507–1513.

- Webb, T.W.; Igelström, K.M.; Schurger, A.; Graziano, M.S. Cortical networks involved in visual awareness independent of visual attention. Proc. Natl. Acad. Sci. USA 2016, 113, 13923–13928.

- Wyart, V.; Tallon-Baudry, C. Neural dissociation between visual awareness and spatial attention. J. Neurosci. 2008, 28, 2667–2679.

- Kiss, M.; Van Velzen, J.; Eimer, M. The N2pc component and its links to attention shifts and spatially selective visual processing. Psychophysiology 2008, 45, 240–249.

- Seiss, E.; Kiss, M.; Eimer, M. Does focused endogenous attention prevent attentional capture in pop-out visual search? Psychophysiology 2009, 46, 703–717.

- Woodman, G.F.; Arita, J.T.; Luck, S.J. A cuing study of the N2pc component: An index of attentional deployment to objects rather than spatial locations. Brain Res. 2009, 1297, 101–111.

- Theeuwes, J. Top–down and bottom–up control of visual selection. Acta Psychol. 2010, 135, 77–99.

- Cohen, M.A.; Cavanagh, P.; Chun, M.M.; Nakayama, K. The attentional requirements of consciousness. Trends Cogn. Sci. 2012, 16, 411–417.

- Eimer, M.; Holmes, A. Event-related brain potential correlates of emotional face processing. Neuropsychologia 2007, 45, 15–31.

- Schupp, H.T.; Flaisch, T.; Stockburger, J.; Junghöfer, M. Emotion and attention: Event-related brain potential studies. Prog. Brain Res. 2006, 156, 31–51.

- Sergent, C.; Baillet, S.; Dehaene, S. Timing of the brain events underlying access to consciousness during the attentional blink. Nat. Neurosci. 2005, 8, 1391–1400.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Becker, S.I.; Pegna, A.J. The Effects of Spatial Attention Focus and Visual Awareness on the Processing of Fearful Faces: An ERP Study. Brain Sci. 2022, 12, 823. https://doi.org/10.3390/brainsci12070823

Qiu Z, Becker SI, Pegna AJ. The Effects of Spatial Attention Focus and Visual Awareness on the Processing of Fearful Faces: An ERP Study. Brain Sciences. 2022; 12(7):823. https://doi.org/10.3390/brainsci12070823

Chicago/Turabian Style: Qiu, Zeguo, Stefanie I. Becker, and Alan J. Pegna. 2022. "The Effects of Spatial Attention Focus and Visual Awareness on the Processing of Fearful Faces: An ERP Study." Brain Sciences 12, no. 7: 823. https://doi.org/10.3390/brainsci12070823

APA Style: Qiu, Z., Becker, S. I., & Pegna, A. J. (2022). The Effects of Spatial Attention Focus and Visual Awareness on the Processing of Fearful Faces: An ERP Study. Brain Sciences, 12(7), 823. https://doi.org/10.3390/brainsci12070823