A Circuit Model for Working Memory Based on Hybrid Positive and Negative-Derivative Feedback Mechanism

Abstract

1. Introduction

2. Materials and Methods

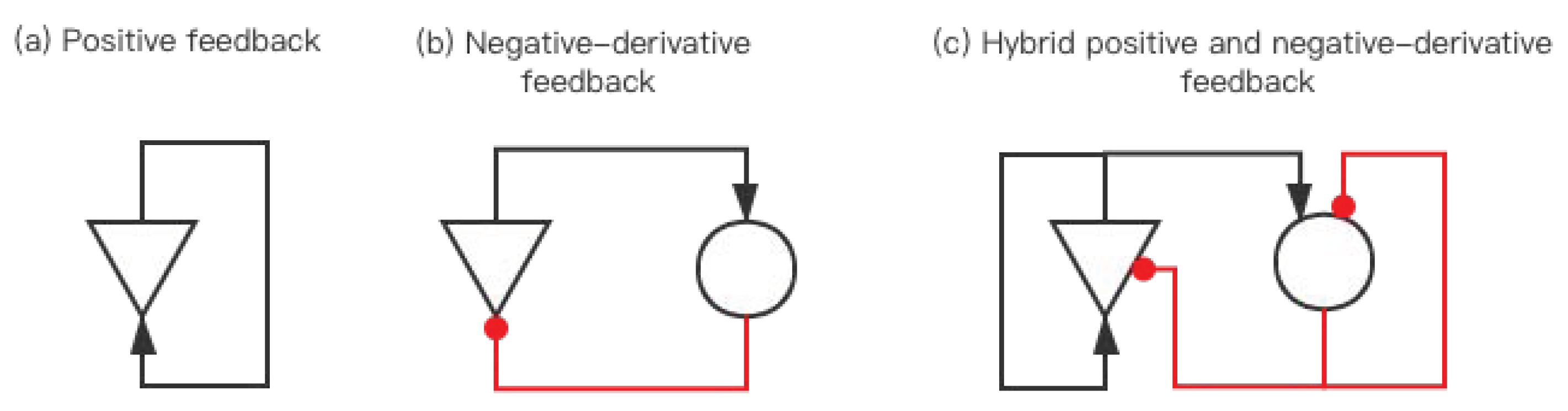

2.1. Hybrid Positive and Negative-Derivative Feedback

2.1.1. Positive Feedback

2.1.2. Negative-Derivative Feedback

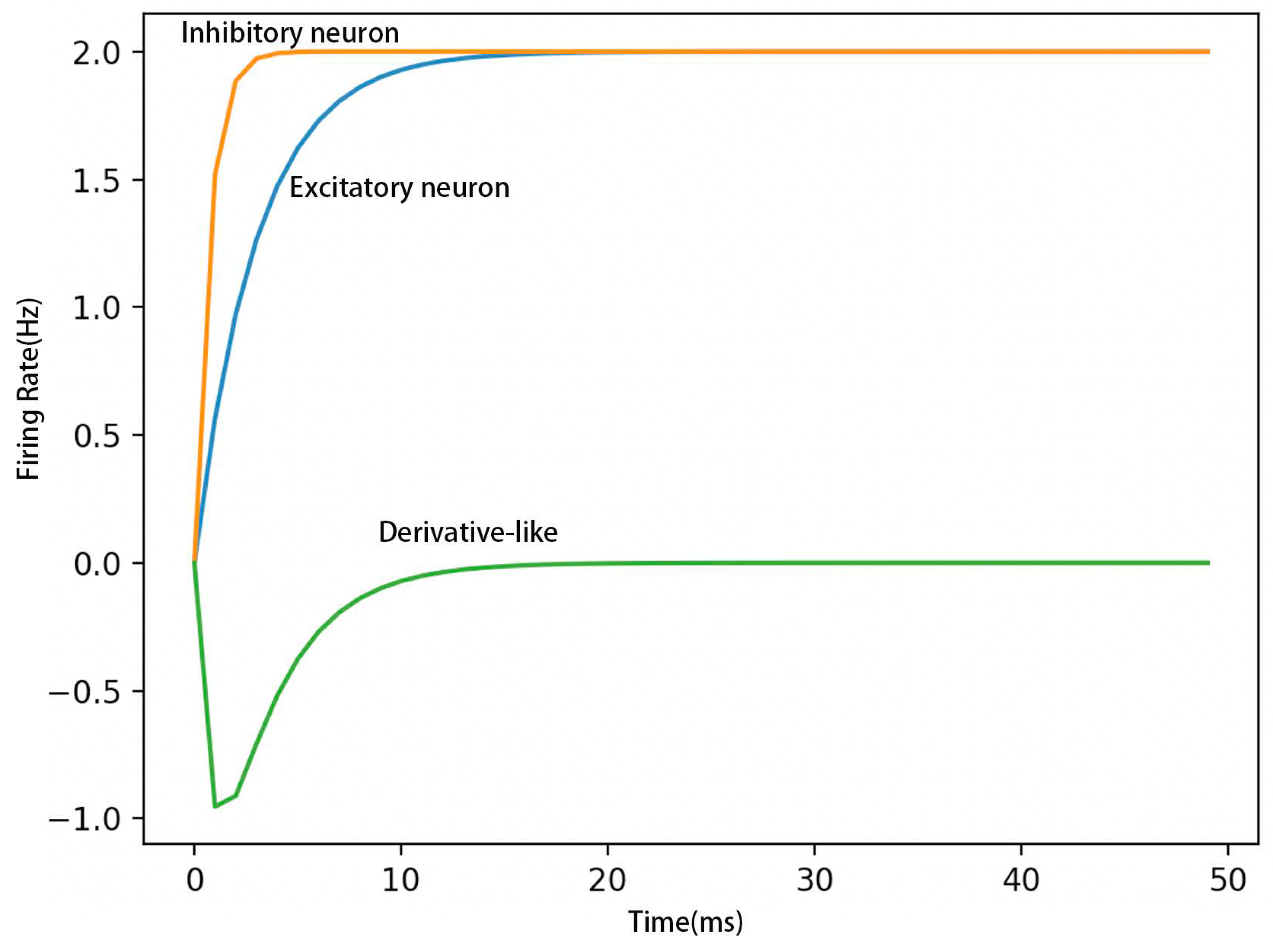

2.1.3. Hybrid Positive and Negative-Derivative Feedback

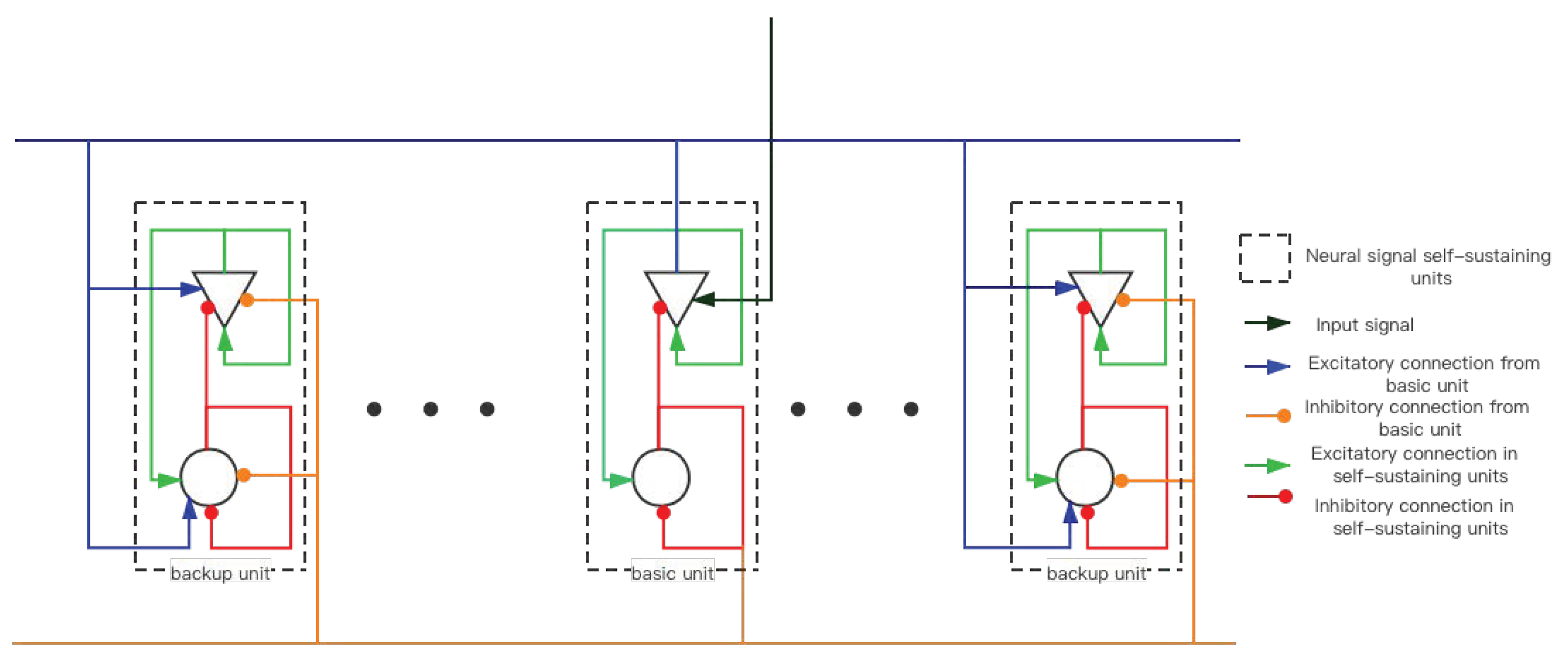
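The following is a minimal sketch of the two mechanisms that Section 2.1 combines. It assumes the single-population linear rate model used in negative-derivative feedback studies such as [18], not this paper's own circuit equations. Positive feedback adds a term w_pos·r, and negative-derivative feedback adds a term −w_der·τ_d·dr/dt, so the model reads τ·dr/dt = −r + w_pos·r − w_der·τ_d·dr/dt + I(t). Collecting the dr/dt terms gives an effective decay time constant τ_eff = (τ + w_der·τ_d)/(1 − w_pos).

The two mechanisms lengthen persistence in different ways:

- **Positive feedback** shrinks the denominator. This requires w_pos to be tuned close to 1.
- **Negative-derivative feedback** enlarges the numerator.
- **The hybrid** multiplies the two effects.

The function names and all parameter values below are hypothetical.

```python
import math


def effective_time_constant(tau, tau_d, w_pos, w_der):
    """Decay time constant of the (assumed) linear rate model

        tau * dr/dt = -r + w_pos * r - w_der * tau_d * dr/dt + I(t)

    Moving the derivative-feedback term to the left-hand side gives
        (tau + w_der * tau_d) * dr/dt = -(1 - w_pos) * r + I(t),
    so activity decays with tau_eff = (tau + w_der * tau_d) / (1 - w_pos).
    """
    if w_pos >= 1.0:
        raise ValueError("w_pos >= 1: activity no longer decays (integrator or runaway)")
    return (tau + w_der * tau_d) / (1.0 - w_pos)


def simulate_decay(tau, tau_d, w_pos, w_der, r0=1.0, t_end=1.0, dt=1e-4):
    """Forward-Euler integration of the delay period (I = 0) from r(0) = r0."""
    r = r0
    for _ in range(int(round(t_end / dt))):
        # Solve the model equation for dr/dt at the current firing rate.
        drdt = -(1.0 - w_pos) * r / (tau + w_der * tau_d)
        r += dt * drdt
    return r


if __name__ == "__main__":
    tau, tau_d = 0.010, 0.100  # hypothetical 10 ms intrinsic and 100 ms feedback time constants (s)
    cases = [("none", 0.0, 0.0), ("positive", 0.9, 0.0),
             ("neg.-derivative", 0.0, 5.0), ("hybrid", 0.9, 5.0)]
    for name, w_pos, w_der in cases:
        te = effective_time_constant(tau, tau_d, w_pos, w_der)
        r1 = simulate_decay(tau, tau_d, w_pos, w_der)
        print(f"{name:16s} tau_eff = {te:.3f} s, r(1 s) = {r1:.3f} "
              f"(analytic {math.exp(-1.0 / te):.3f})")
```

With these hypothetical values, τ_eff grows as follows:

- 10 ms with no feedback.
- 0.1 s with positive feedback only.
- 0.51 s with negative-derivative feedback only.
- 5.1 s with the hybrid.

This illustrates why combining the two mechanisms can prolong persistent activity without pushing w_pos all the way to 1.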

2.2. Memory-Storage Sub-Network Based on HPNF Model—SET Network

2.2.1. Mean Field Model

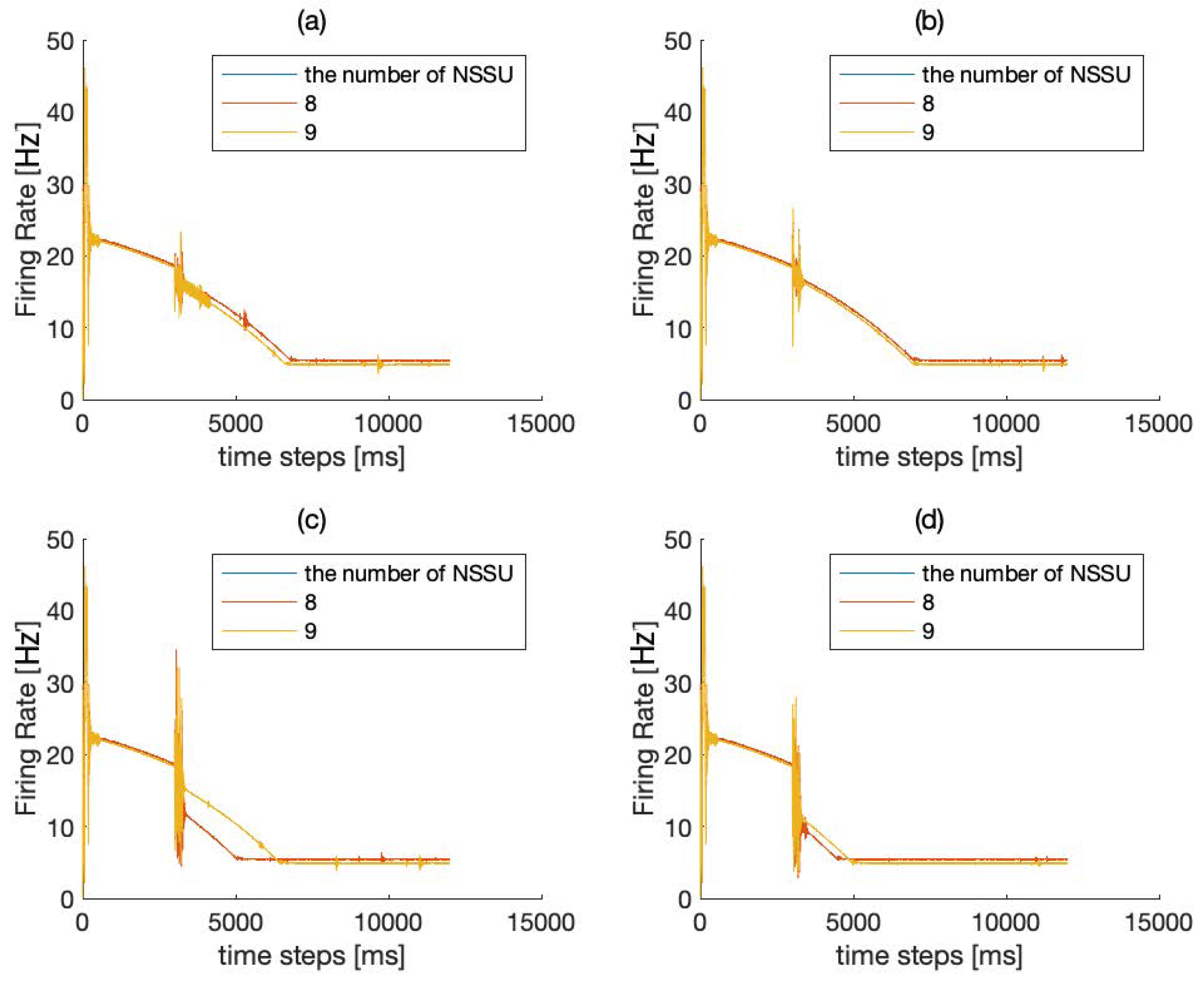

2.2.2. Reasons for Choosing the Number of NSSUs (Neural Signal Self-Sustaining Units)

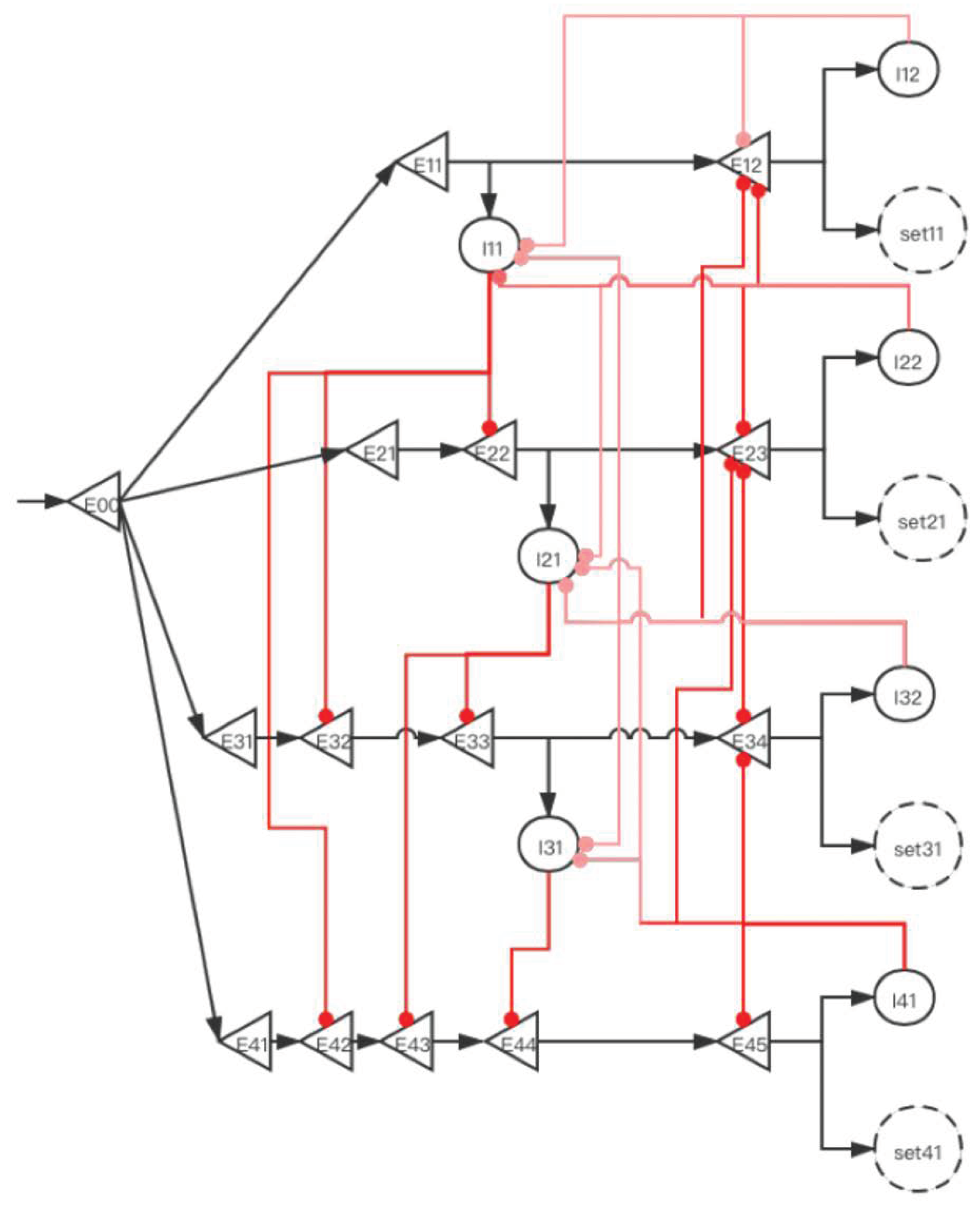

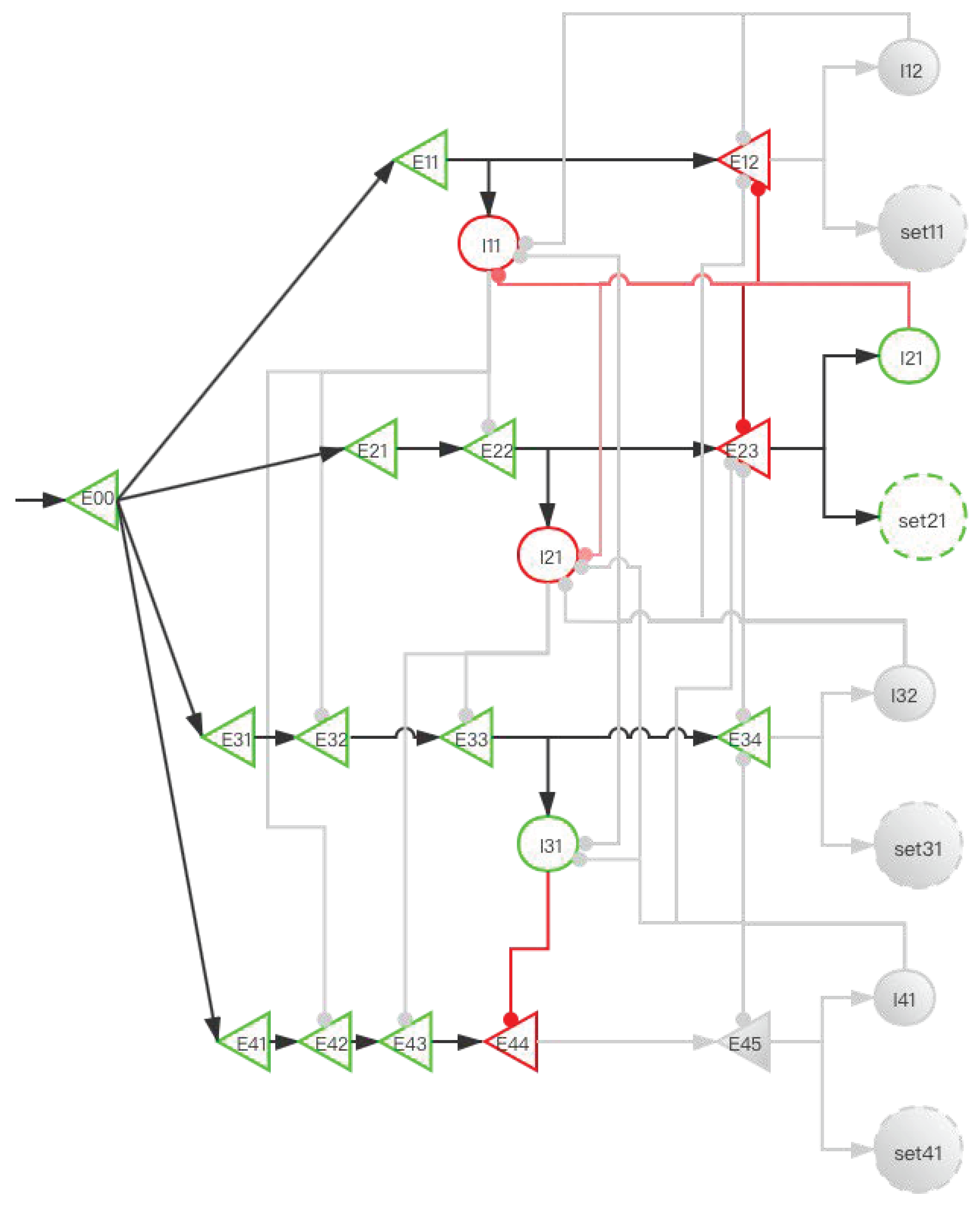

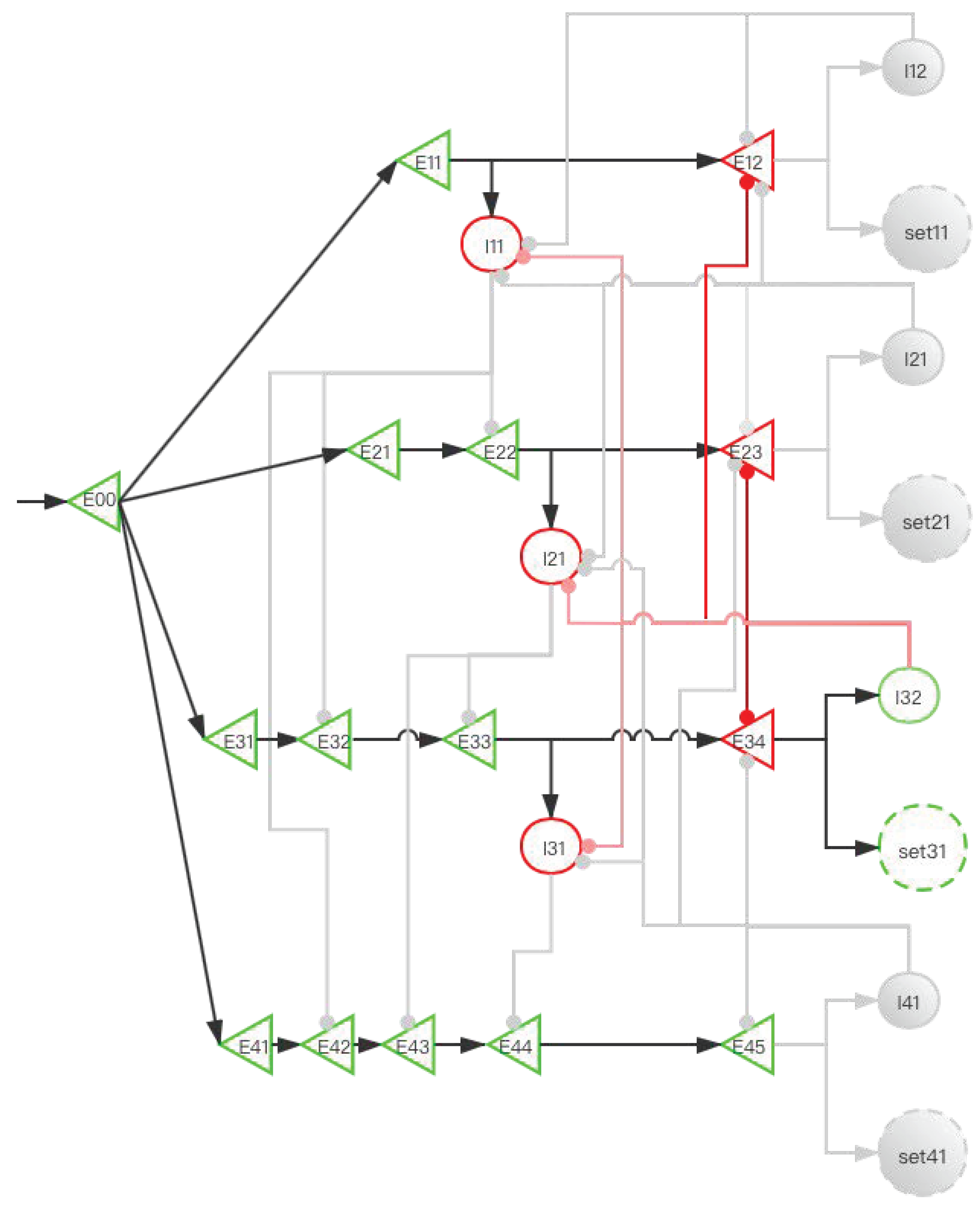

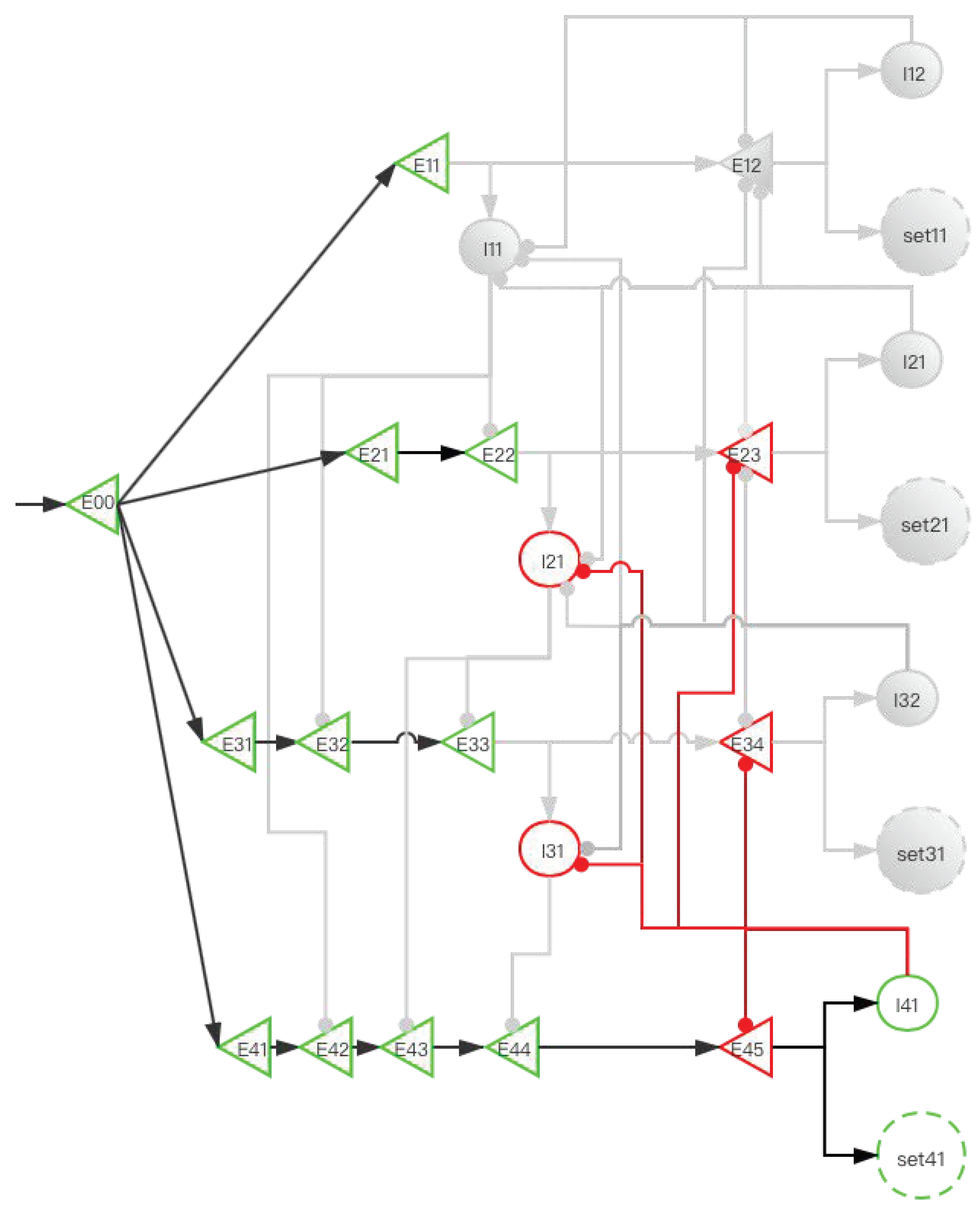

2.3. Storage Distribution Network (SDN)

2.3.1. FIFO Mechanism

2.3.2. Implementation of Storage Distribution Network at the Neural Circuit Level

3. Results

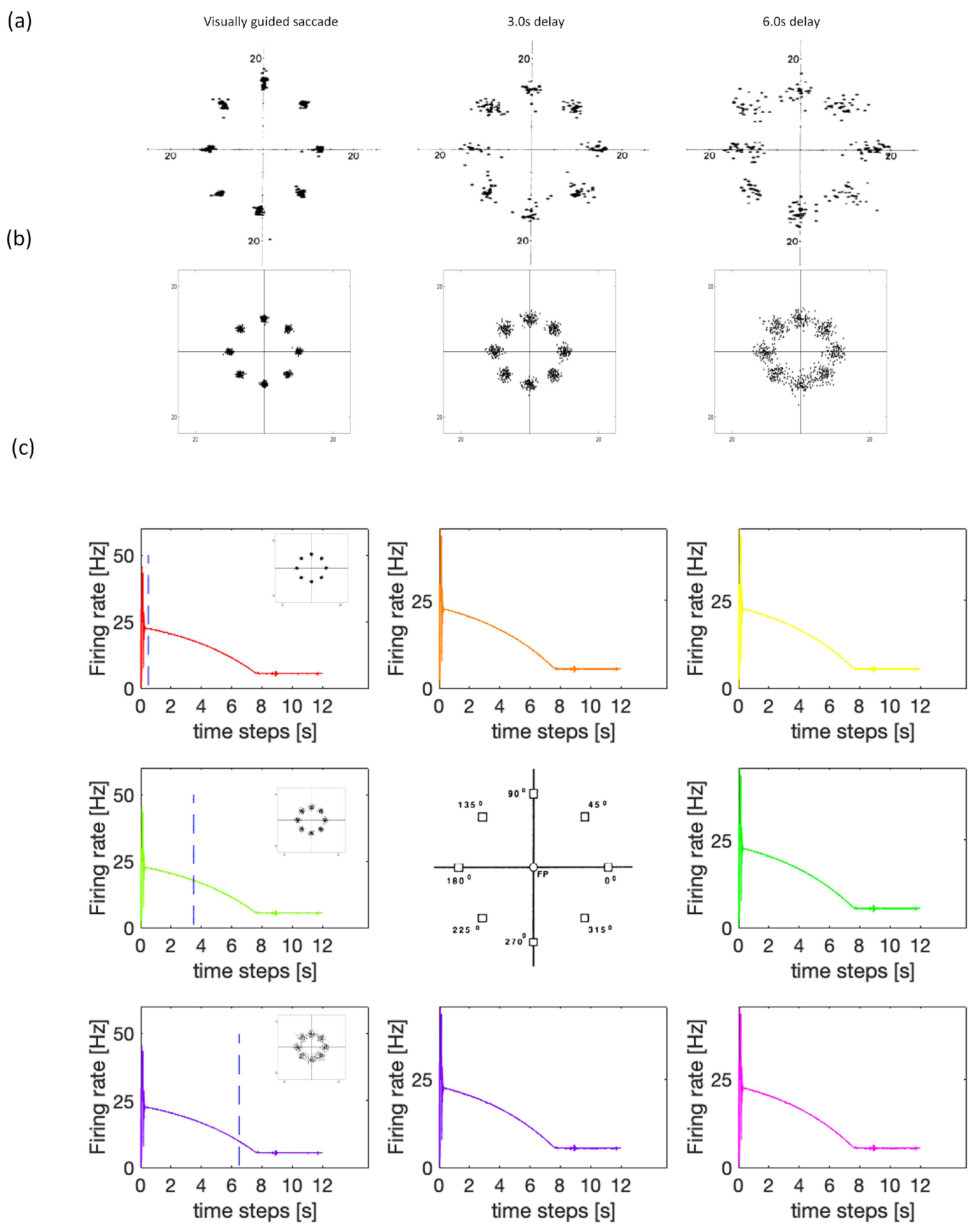

3.1. Experiment Study A

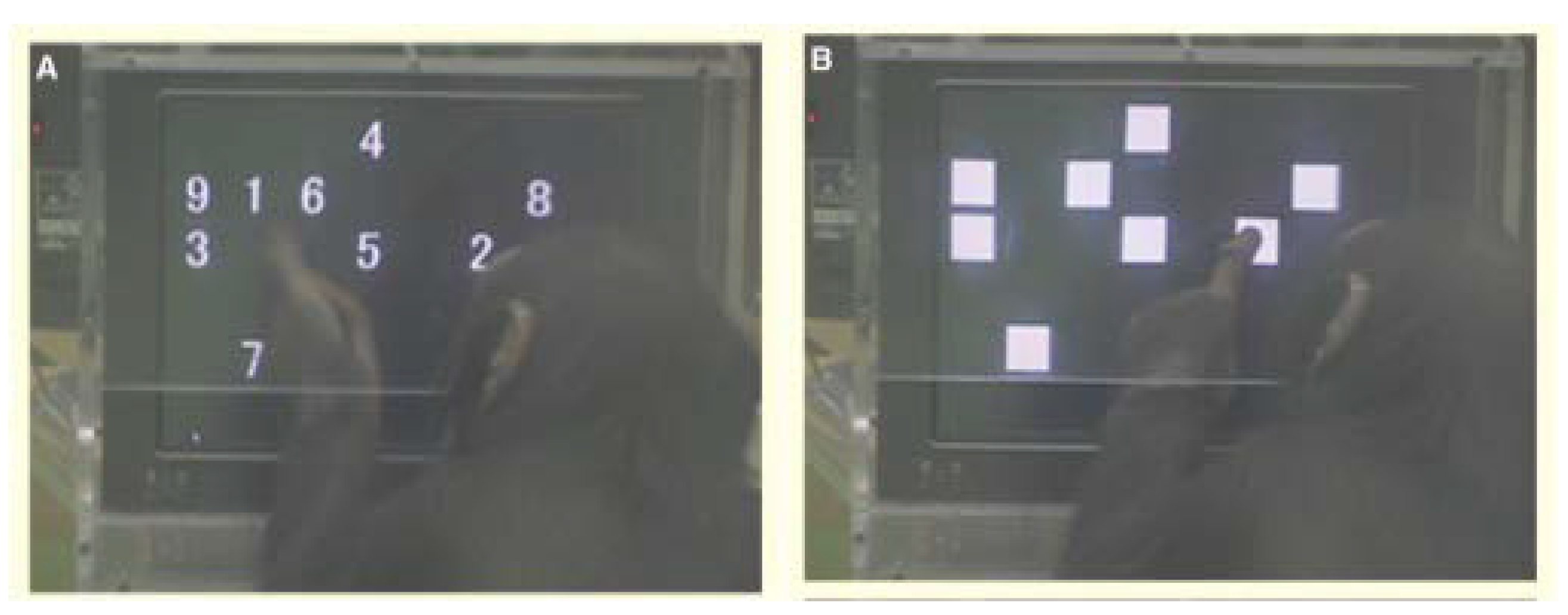

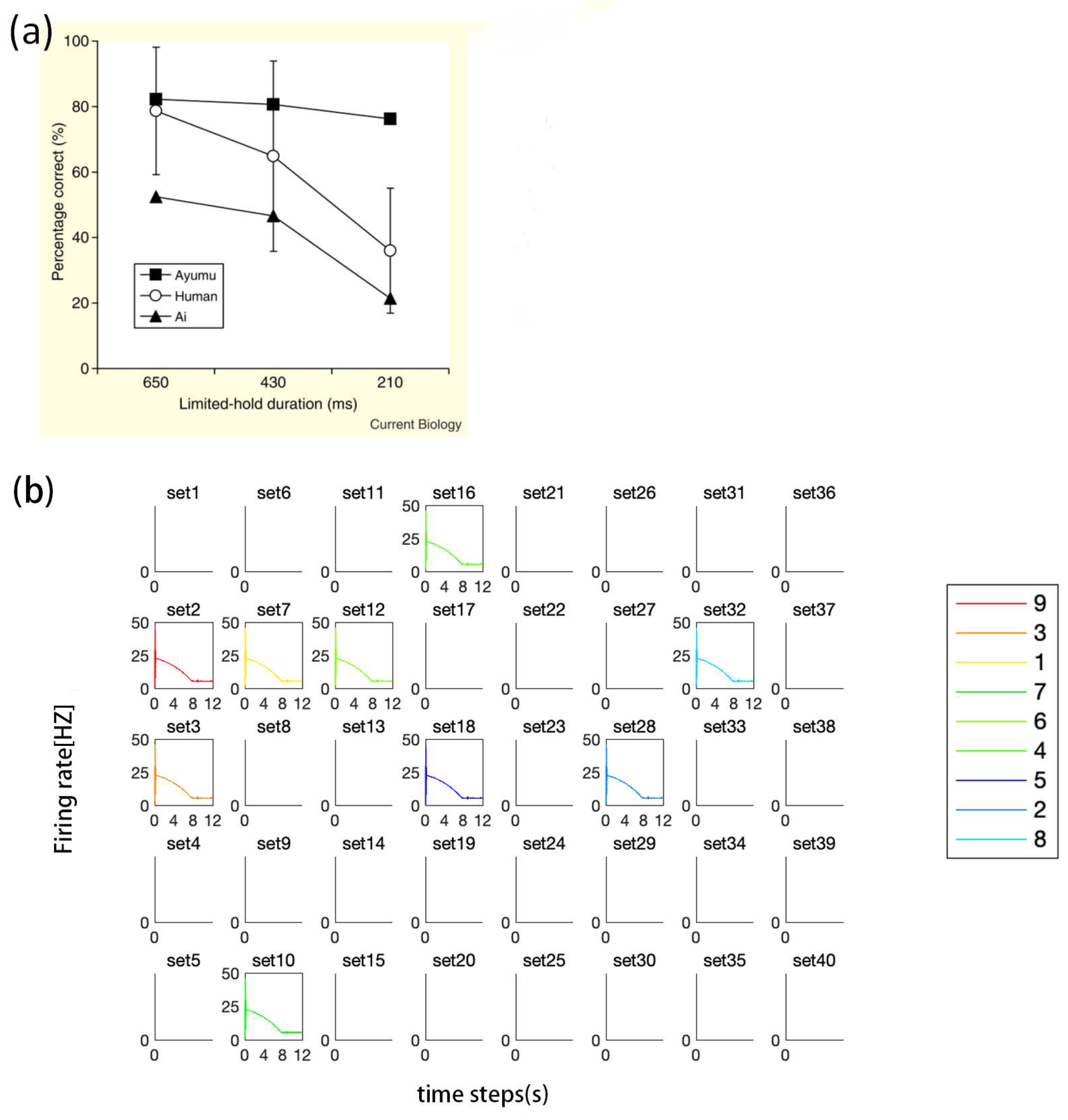

3.2. Experiment Study B

3.3. Experiment Study C

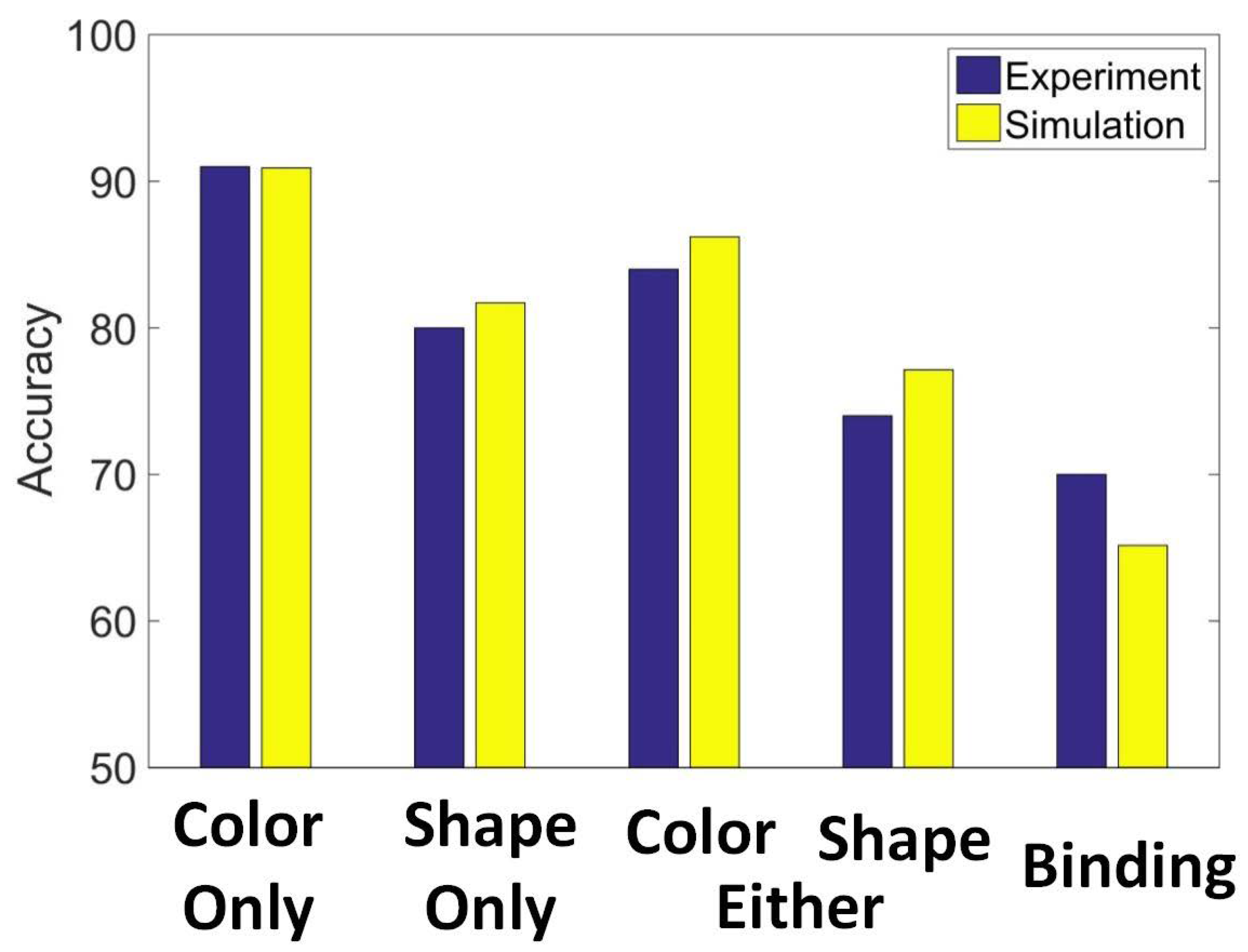

3.4. Experiment Study D

- (1) Color only: Participants were told that only the color of items could change. On change trials, the test display contained the same shapes, but two items had changed to two new colors that had not been presented in the initial display.

- (2) Shape only: Participants were told that only the shape of items could change. On change trials, the test display contained the same colors, but two items had changed to two new shapes that had not been presented in the initial display.

- (3) Either shape or color: Participants were told that either the color or the shape of items could change. Half of the trials were shape trials, in which two items changed to two new shapes, and half were color trials, in which two items changed to two new colors. Shape and color trials were randomly intermixed, with no indication of which type of information would be probed until the test.

- (4) Binding shape and color: The test display contained all the same colors and shapes. However, on change trials, the binding (the pairing of color and shape) changed for two items. In effect, two shapes exchanged their colors with each other.
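The four conditions above can be sketched as a trial generator for a change-detection task. This section does not specify the stimulus set, so the following are all hypothetical:

- the feature pools,
- the display size of six items,
- the function name `make_trial`.

The sketch encodes the key constraints of each condition:

- **Color-only and shape-only trials** introduce two features absent from the study display.
- **Binding trials** keep every color and shape but swap colors between two items.

```python
import random

# Hypothetical feature pools: the actual stimuli are not specified in this section.
COLORS = ["red", "green", "blue", "yellow", "cyan", "magenta", "orange", "white", "black"]
SHAPES = ["square", "circle", "triangle", "cross", "star", "diamond", "heart", "arrow", "ring"]
CONDITIONS = ("color", "shape", "either", "binding")


def make_trial(condition, n_items=6, change=True, rng=random):
    """Return (study, test) displays, each a list of (color, shape) items.

    condition: one of CONDITIONS (conditions 1-4 above).
    change=False gives a "same" trial whose test display repeats the study display.
    """
    if condition not in CONDITIONS:
        raise ValueError(f"unknown condition: {condition!r}")
    if n_items + 2 > min(len(COLORS), len(SHAPES)):
        raise ValueError("feature pools too small to leave two unused features")
    colors = rng.sample(COLORS, n_items)
    shapes = rng.sample(SHAPES, n_items)
    study = list(zip(colors, shapes))
    test = list(study)
    if not change:
        return study, test
    if condition == "either":
        # Probe type drawn at random per trial: shape and color trials are
        # intermixed, split half-and-half only on average (not counterbalanced).
        condition = rng.choice(["color", "shape"])
    i, j = rng.sample(range(n_items), 2)  # the two items that change
    if condition == "color":
        # Two new colors that did not appear in the study display.
        new = rng.sample([c for c in COLORS if c not in colors], 2)
        test[i] = (new[0], study[i][1])
        test[j] = (new[1], study[j][1])
    elif condition == "shape":
        # Two new shapes that did not appear in the study display.
        new = rng.sample([s for s in SHAPES if s not in shapes], 2)
        test[i] = (study[i][0], new[0])
        test[j] = (study[j][0], new[1])
    else:  # "binding": same colors and shapes, two items exchange colors
        test[i] = (study[j][0], study[i][1])
        test[j] = (study[i][0], study[j][1])
    return study, test


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for a reproducible demo
    for cond in CONDITIONS:
        study, test = make_trial(cond, rng=rng)
        print(cond, "\n  study:", study, "\n  test: ", test)
```

A real experiment would also balance "same" and "change" trials. For the "either" condition, it would fix exactly half of the change trials as shape probes rather than drawing them at random.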

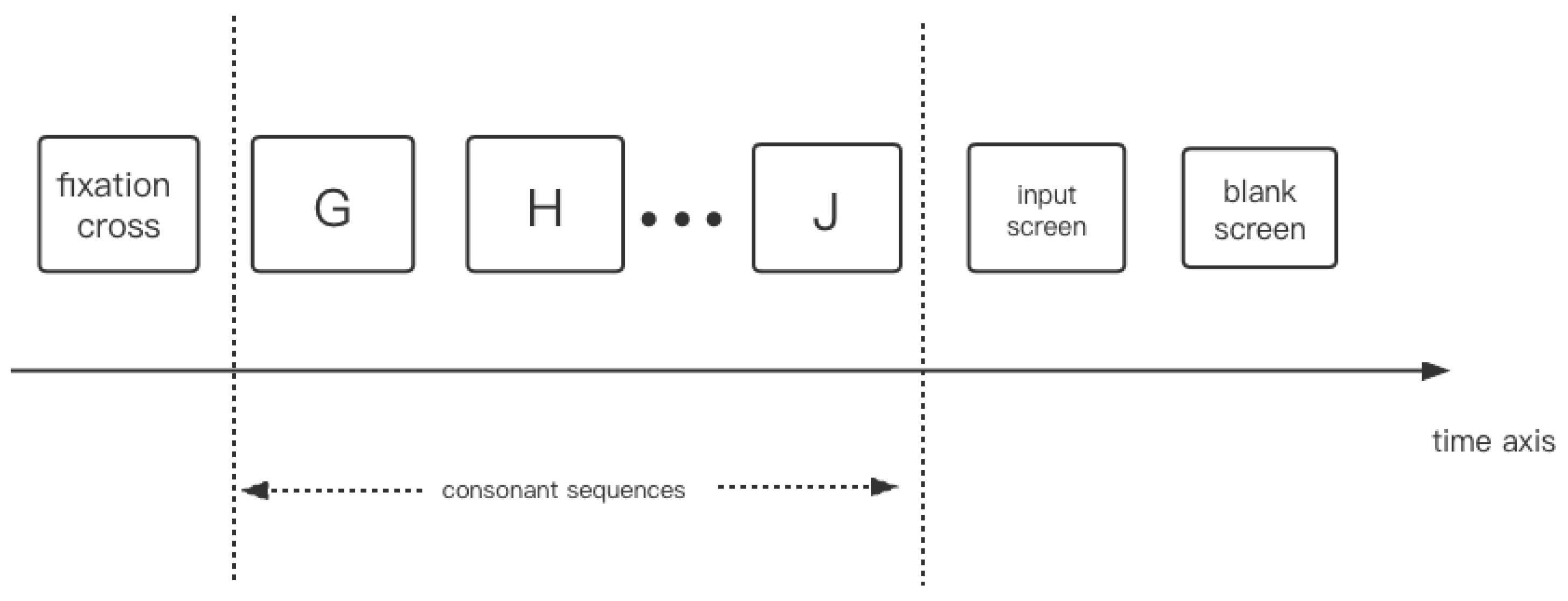

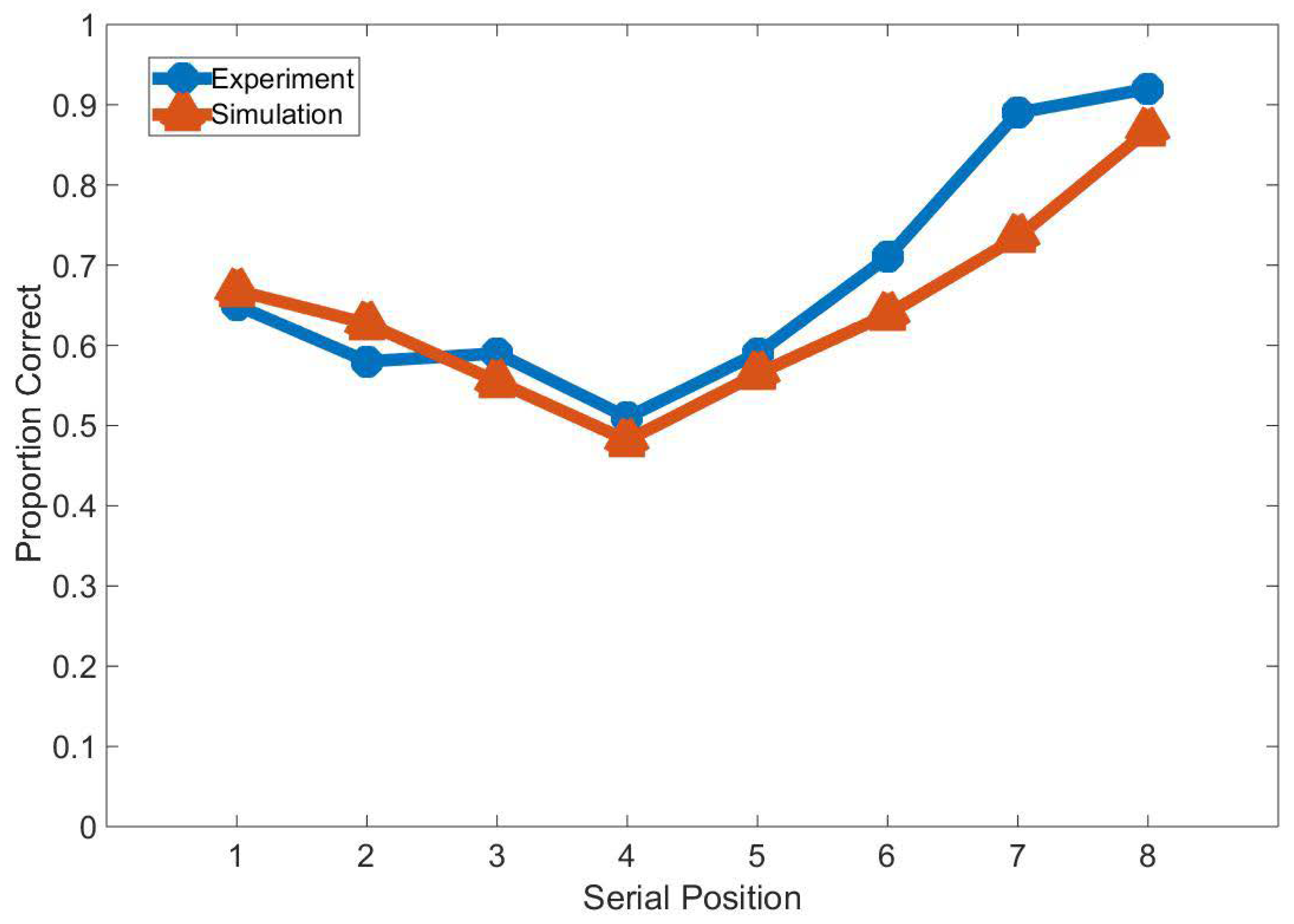

3.5. Experiment Study E

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Alloway, T.; Alloway, R. The Working Memory Advantage: Train Your Brain to Function Stronger, Smarter, Faster; Simon and Schuster: New York, NY, USA, 2014.

- Cowan, N. Metatheory of storage capacity limits. Behav. Brain Sci. 2001, 24, 154–176.

- Cowan, N. The magical mystery four: How is working memory capacity limited, and why? Curr. Dir. Psychol. Sci. 2010, 19, 51–57.

- Daneman, M.; Carpenter, P.A. Individual differences in working memory and reading. J. Verbal Learn. Verbal Behav. 1980, 19, 450–466.

- Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

- Chaudhuri, R.; Fiete, I. Computational principles of memory. Nat. Neurosci. 2016, 19, 394–403.

- Compte, A.; Brunel, N.; Goldman-Rakic, P.S.; Wang, X.-J. Synaptic Mechanisms and Network Dynamics Underlying Spatial Working Memory in a Cortical Network Model. Cereb. Cortex 2000, 10, 910–923.

- Rolls, E.T.; Dempere-Marco, L.; Deco, G. Holding multiple items in short term memory: A neural mechanism. PLoS ONE 2013, 8, e61078.

- Kriete, T.; Noelle, D.C.; Cohen, J.D.; O’Reilly, R.C. Indirection and symbol-like processing in the prefrontal cortex and basal ganglia. Proc. Natl. Acad. Sci. USA 2013, 110, 16390–16395.

- Haider, B.; Duque, A.; Hasenstaub, A.R.; McCormick, D.A. Neocortical network activity in vivo is generated through a dynamic balance of excitation and inhibition. J. Neurosci. 2006, 26, 4535–4545.

- Haider, B.; McCormick, D.A. Rapid neocortical dynamics: Cellular and network mechanisms. Neuron 2009, 62, 171–189.

- Shu, Y.; Hasenstaub, A.; McCormick, D.A. Turning on and off recurrent balanced cortical activity. Nature 2003, 423, 288–293.

- Schultz, W.; Dayan, P.; Montague, P.R. A neural substrate of prediction and reward. Science 1997, 275, 1593–1599.

- Rudolph, M.; Pospischil, M.; Timofeev, I.; Destexhe, A. Inhibition determines membrane potential dynamics and controls action potential generation in awake and sleeping cat cortex. J. Neurosci. 2007, 27, 5280–5290.

- Rotaru, D.C.; Yoshino, H.; Lewis, D.A.; Ermentrout, G.B.; Gonzalez-Burgos, G. Glutamate receptor subtypes mediating synaptic activation of prefrontal cortex neurons: Relevance for schizophrenia. J. Neurosci. 2011, 31, 142–156.

- Wang, H.; Stradtman, G.G.; Wang, X.-J.; Gao, W.-J. A specialized NMDA receptor function in layer 5 recurrent microcircuitry of the adult rat prefrontal cortex. Proc. Natl. Acad. Sci. USA 2008, 105, 16791–16796.

- Wang, H.-X.; Gao, W.-J. Cell type-specific development of NMDA receptors in the interneurons of rat prefrontal cortex. Neuropsychopharmacology 2009, 34, 2028–2040.

- Lim, S.; Goldman, M.S. Balanced cortical microcircuitry for spatial working memory based on corrective feedback control. J. Neurosci. 2014, 34, 6790–6806.

- Gazzaniga, M.S. The Cognitive Neurosciences; MIT Press: Cambridge, MA, USA, 2004.

- Kriegeskorte, N.; Douglas, P.K. Cognitive computational neuroscience. Nat. Neurosci. 2018, 21, 1148–1160.

- Kriegeskorte, N.; Mok, R.M. Building machines that adapt and compute like brains. arXiv 2017, arXiv:1711.04203.

- Simon, H.A.; Newell, A. Human problem solving: The state of the theory in 1970. Am. Psychol. 1971, 26, 145–159.

- Rolls, E.T. The storage and recall of memories in the hippocampo-cortical system. Cell Tissue Res. 2018, 373, 577–604.

- Goldman-Rakic, P.S. Regional and cellular fractionation of working memory. Proc. Natl. Acad. Sci. USA 1996, 93, 13473–13480.

- Ma, W.J.; Husain, M.; Bays, P.M. Changing concepts of working memory. Nat. Neurosci. 2014, 17, 347–356.

- Lara, A.H.; Wallis, J.D. The Role of Prefrontal Cortex in Working Memory: A Mini Review. Front. Syst. Neurosci. 2015, 9, 173.

- Kanwisher, N.; McDermott, J.; Chun, M.M. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J. Neurosci. 1997, 17, 4302–4311.

- Tsao, D.Y.; Freiwald, W.A.; Tootell, R.B.H.; Livingstone, M.S. A cortical region consisting entirely of face-selective cells. Science 2006, 311, 670–674.

- Seung, H.S.; Lee, D.D.; Reis, B.Y.; Tank, D.W. Stability of the memory of eye position in a recurrent network of conductance-based model neurons. Neuron 2000, 26, 259–271.

- Wang, X. Neural coding strategies in auditory cortex. Hear. Res. 2007, 229, 81–93.

- Rombouts, J.O.; Bohte, S.M.; Roelfsema, P.R. How attention can create synaptic tags for the learning of working memories in sequential tasks. PLoS Comput. Biol. 2015, 11, e1004060.

- Baddeley, A.D.; Hitch, G. Working memory. In Psychology of Learning and Motivation; Academic Press: Cambridge, MA, USA, 1974; Volume 8, pp. 47–89.

- Wilson, H.R. Spikes, Decisions and Actions; Oxford University Press: Oxford, UK, 1999.

- Shuai, Y. Forgetting is regulated through Rac activity in Drosophila. Cell 2010, 140, 579–589.

- Funahashi, S.; Bruce, C.J.; Goldman-Rakic, P.S. Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J. Neurophysiol. 1989, 61, 331–349.

- Kusak, G.; Grune, K.; Hagendorf, H.; Metz, A.-M. Updating of working memory in a running memory task: An event-related potential study. Int. J. Psychophysiol. 2000, 39, 51–65.

- Wheeler, M.E.; Treisman, A.M. Binding in short-term visual memory. J. Exp. Psychol. Gen. 2002, 131, 48–64.

- Bhatarah, P.; Ward, G.; Tan, L. Examining the relationship between free recall and immediate serial recall: The serial nature of recall and the effect of test expectancy. Mem. Cogn. 2008, 36, 20–34.

- Koch, C. Biophysics of Computation: Information Processing in Single Neurons; Oxford University Press: Oxford, UK, 2004.

- Sepede, G.; Chiacchiaretta, P.; Gambi, F.; Di Iorio, G.; De Berardis, D.; Ferretti, A.; Perrucci, M.G.; Di Giannantonio, M. Bipolar disorder with and without a history of psychotic features: FMRI correlates of sustained attention. Prog. Neuro-Psychopharmacol. Biol. Psychiatry 2019, 98, 109817.

- Sepede, G.; De Berardis, D.; Campanella, D.; Perrucci, M.G.; Ferretti, A.; Serroni, N.; Moschetta, F.S.; Del Gratta, C.; Salerno, R.M.; Ferro, F.M.; et al. Impaired sustained attention in euthymic bipolar disorder patients and non-affected relatives: An fMRI study. Bipolar Disord. 2012, 14, 764–779.

- Aurtenetxe, S.; García-Pacios, J.; Del Rio, D.; López, M.E.; Pineda-Pardo, J.A.; Marcos, A.; Delgado Losada, M.L.; López-Frutos, J.M.; Maestú, F. Interference Impacts Working Memory in Mild Cognitive Impairment. Front. Neurosci. 2016, 10, 443.

| Operating System | Human Brain |
|---|---|
| (1) When a program runs, the operating system must allocate space in memory. Memory is divided into blocks, the basic storage unit, and an executing process requests blocks one at a time. (2) If the target data are not in memory, the operating system loads the required data block from the hard disk into a memory block. (3) If no free memory space remains, the operating system uses a replacement algorithm to evict some data previously stored by other processes. | (1) The human brain needs to temporarily store each memory item during working memory. The memory items are stored in the hippocampus of the brain [23]. Understanding the interactions between the major cellular constituents of cortical circuits (pyramidal cells and inhibitory neurons) is considered a necessary step in unraveling the cellular mechanisms subserving working memory and, ultimately, cognitive processes [24]. (2) During working memory in the human brain, little is known about the structure, at the neural circuit level, of a single memory item stored in the hippocampus. (3) It has recently been proposed that working memory might be better conceptualized as a limited resource that is distributed flexibly among all items to be stored in memory [25]. At the neural circuit level, little is known about how the hippocampus distributes multiple memory items to multiple neurons (or groups of neurons). |
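The operating-system column describes three steps:

1. Allocating fixed storage blocks.
2. Loading data when the data are not already in memory.
3. Replacing stored data once memory is full.

Below is a minimal sketch of those steps using first-in, first-out replacement, the policy named in Section 2.3.1. The class name `FIFOMemory` and the four-slot capacity are hypothetical illustrations. This is not the paper's Storage Distribution Network.

```python
from collections import OrderedDict


class FIFOMemory:
    """A fixed number of storage slots with first-in, first-out replacement.

    Hypothetical sketch: a 'slot' stands for a memory block on the operating-system
    side of the analogy; the capacity is an assumption, not a value from the paper.
    """

    def __init__(self, n_slots):
        if n_slots < 1:
            raise ValueError("need at least one slot")
        self.n_slots = n_slots
        self.slots = OrderedDict()  # item -> slot index, kept in arrival order

    def access(self, item):
        """Store or look up an item; return (slot index, evicted item or None)."""
        if item in self.slots:
            # Hit: the item is already stored and its arrival order is unchanged.
            return self.slots[item], None
        evicted = None
        if len(self.slots) < self.n_slots:
            slot = len(self.slots)  # a free slot is still available
        else:
            # Memory is full: replace the item that arrived first.
            evicted, slot = self.slots.popitem(last=False)
        self.slots[item] = slot
        return slot, evicted

    def contents(self):
        """Stored items, oldest first."""
        return list(self.slots)


if __name__ == "__main__":
    memory = FIFOMemory(4)  # hypothetical capacity of four slots
    for item in ["A", "B", "C", "D", "B", "E", "F"]:
        slot, evicted = memory.access(item)
        print(item, "-> slot", slot, "| evicted:", evicted)
    print("stored:", memory.contents())
```

Re-accessing a stored item does not refresh its position. This is what distinguishes FIFO from least-recently-used (LRU) replacement, in which a hit would move the item to the back of the queue.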

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, H.; Jin, X.; Su, Z. A Circuit Model for Working Memory Based on Hybrid Positive and Negative-Derivative Feedback Mechanism. Brain Sci. 2022, 12, 547. https://doi.org/10.3390/brainsci12050547
