Artificial Visual System for Orientation Detection Based on Hubel–Wiesel Model

Abstract

1. Introduction

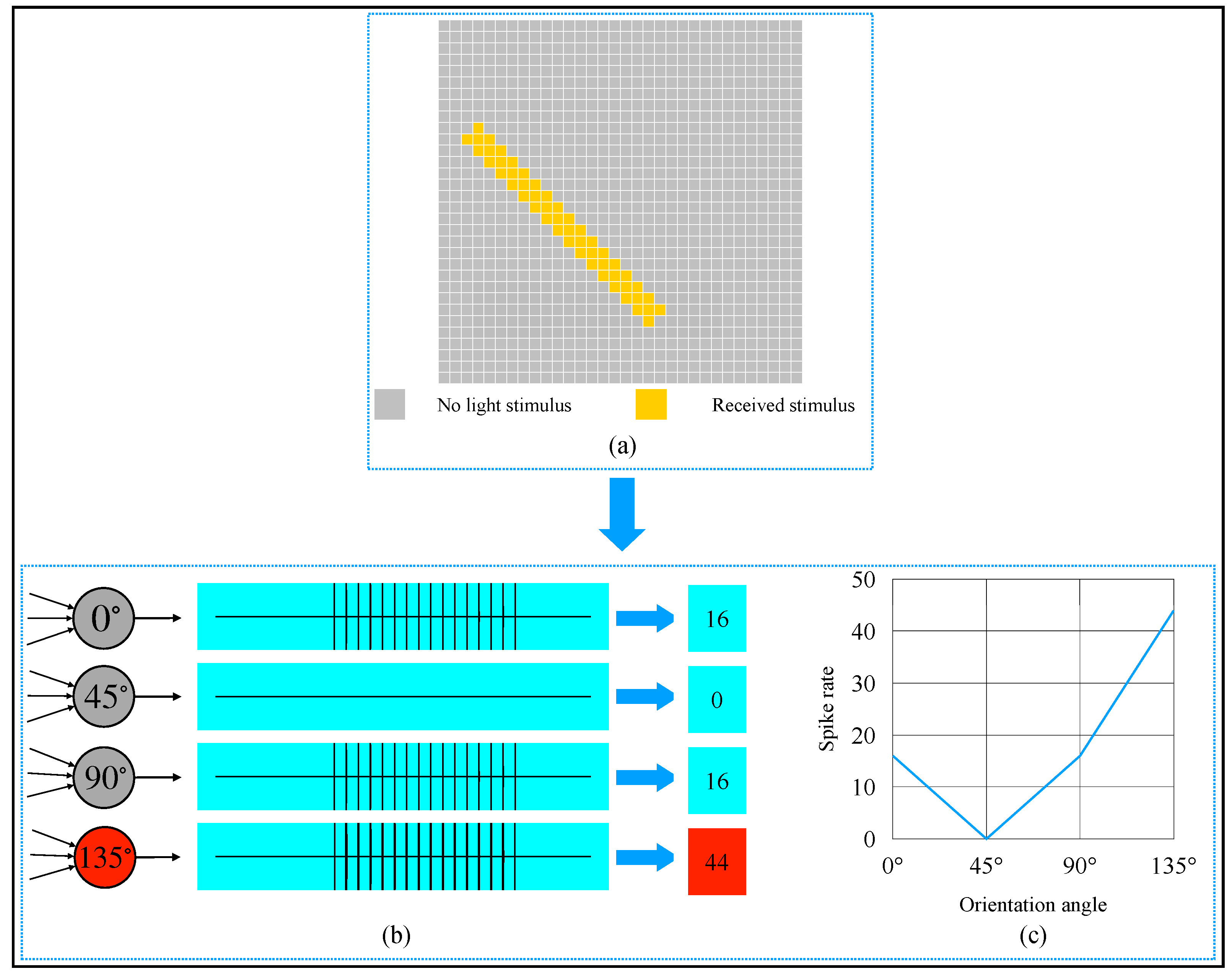

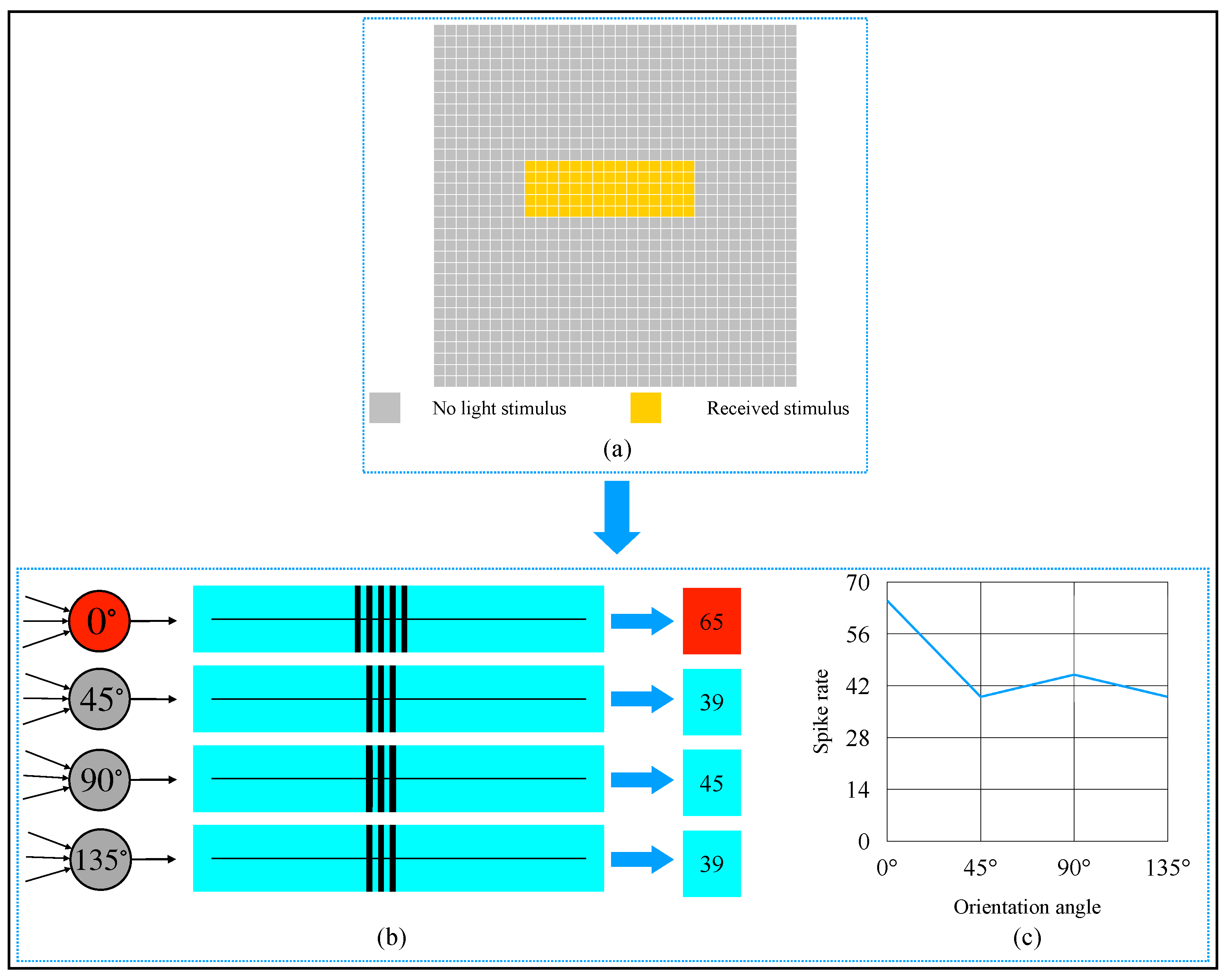

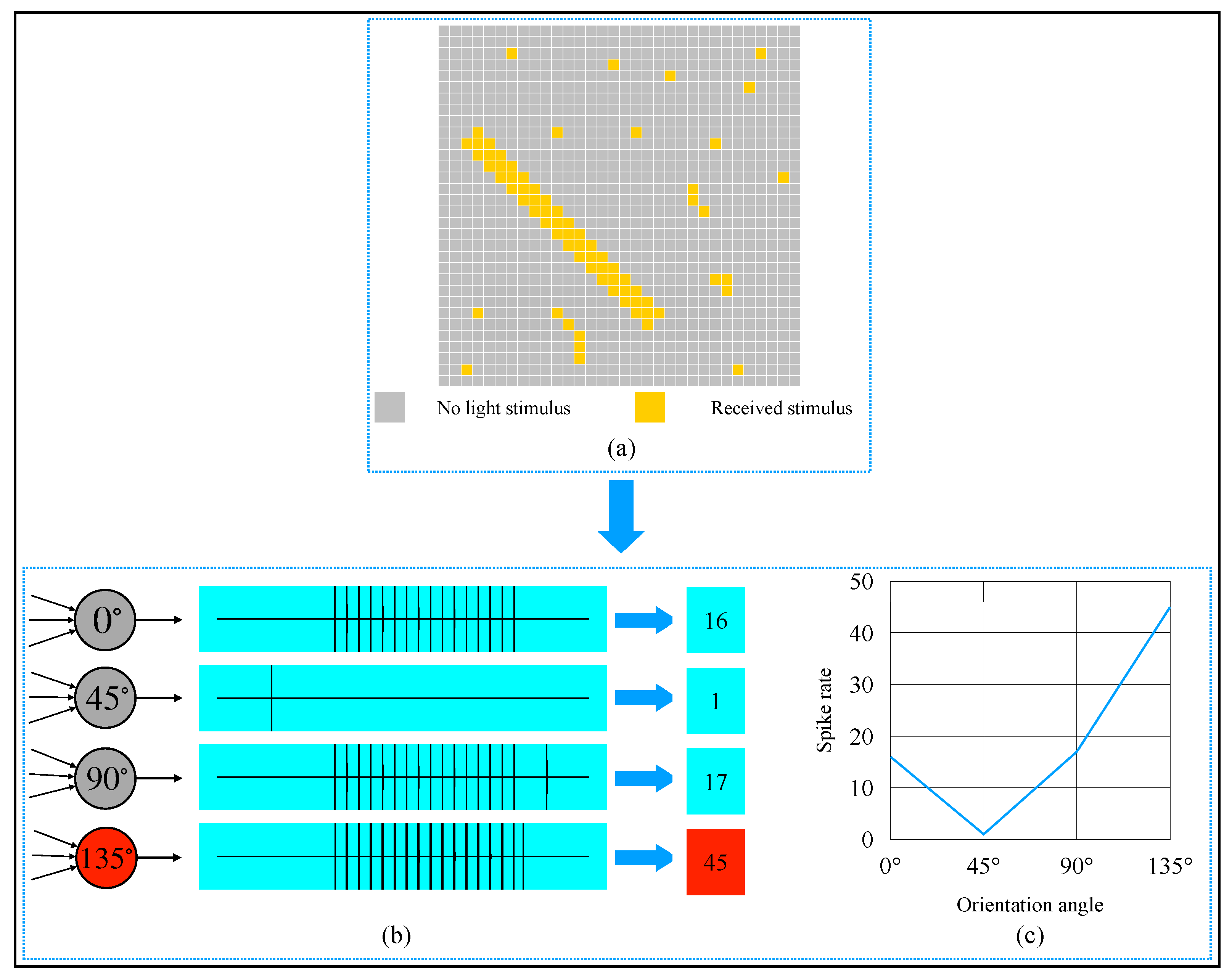

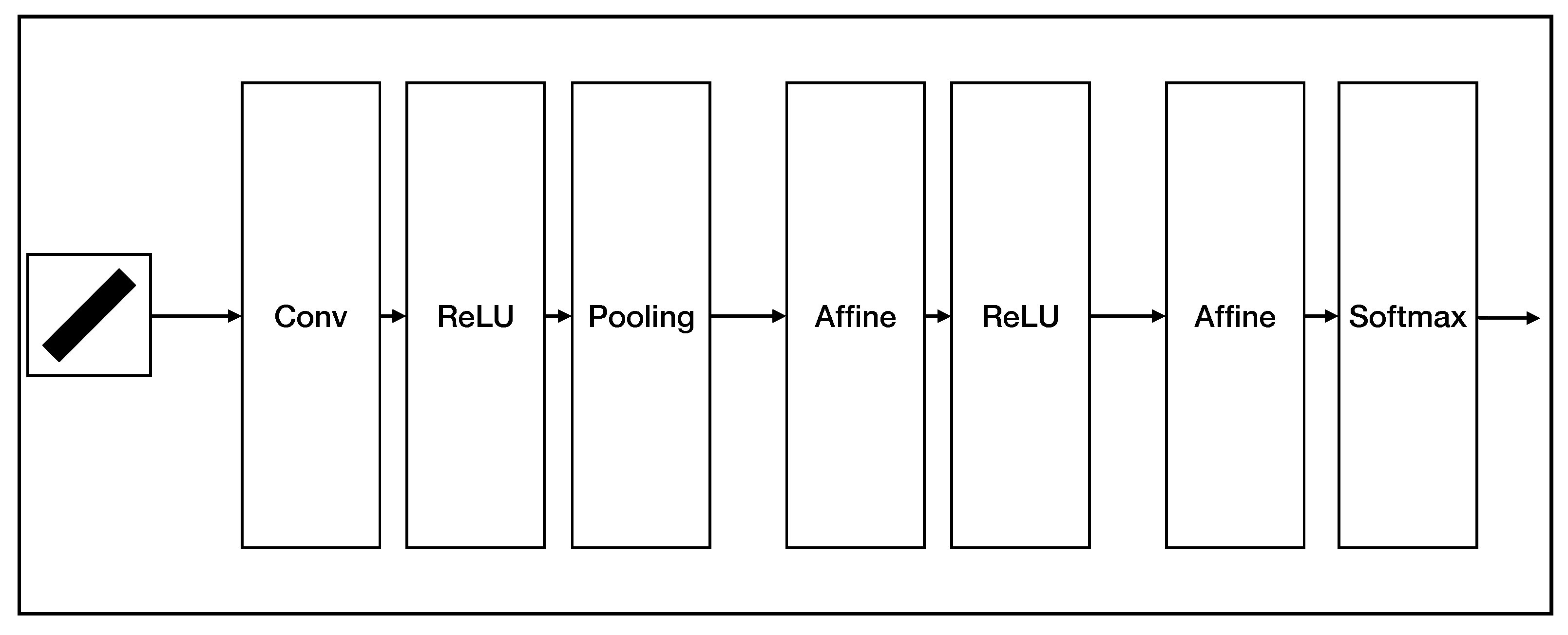

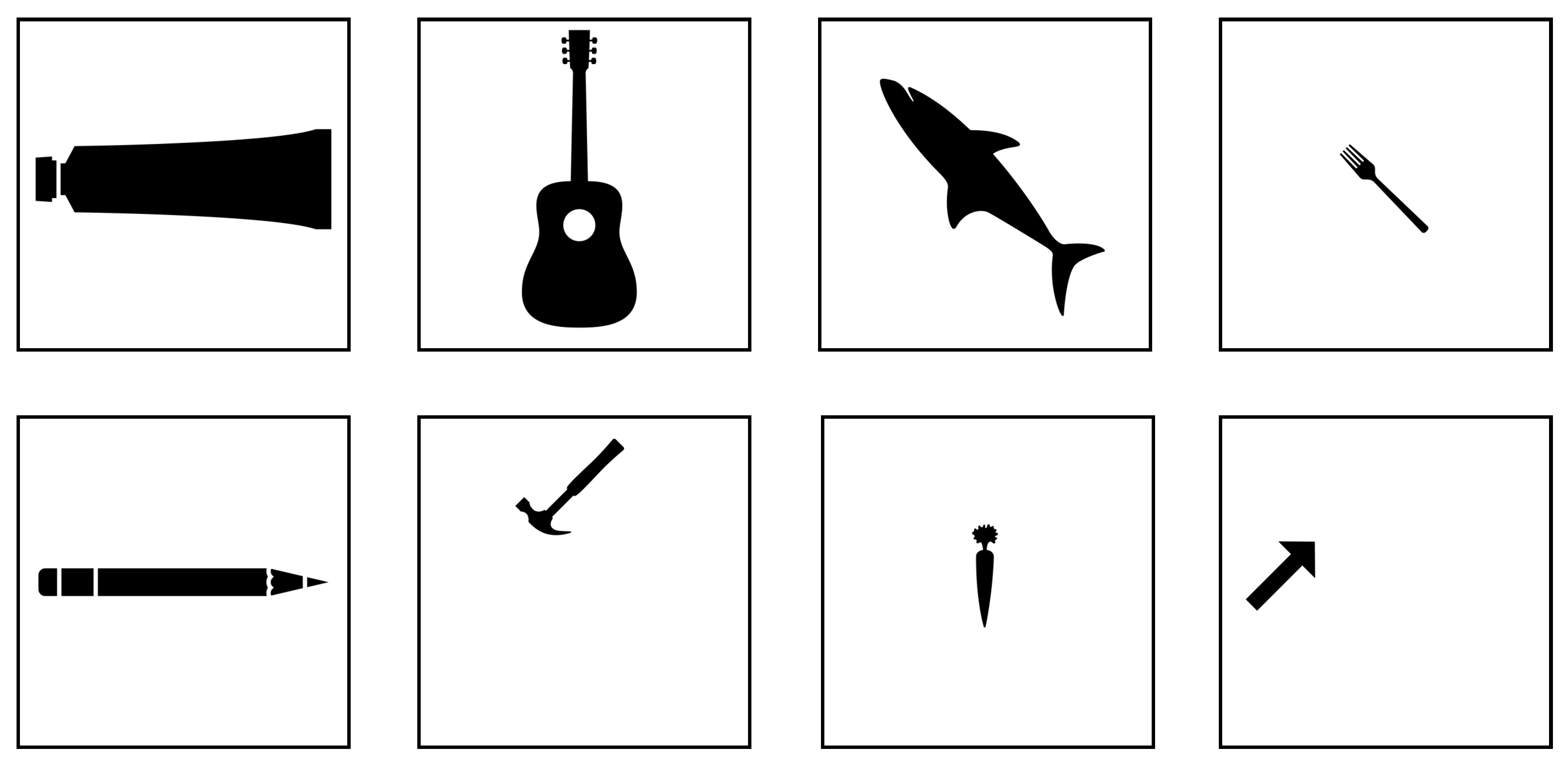

2. Mechanism and System

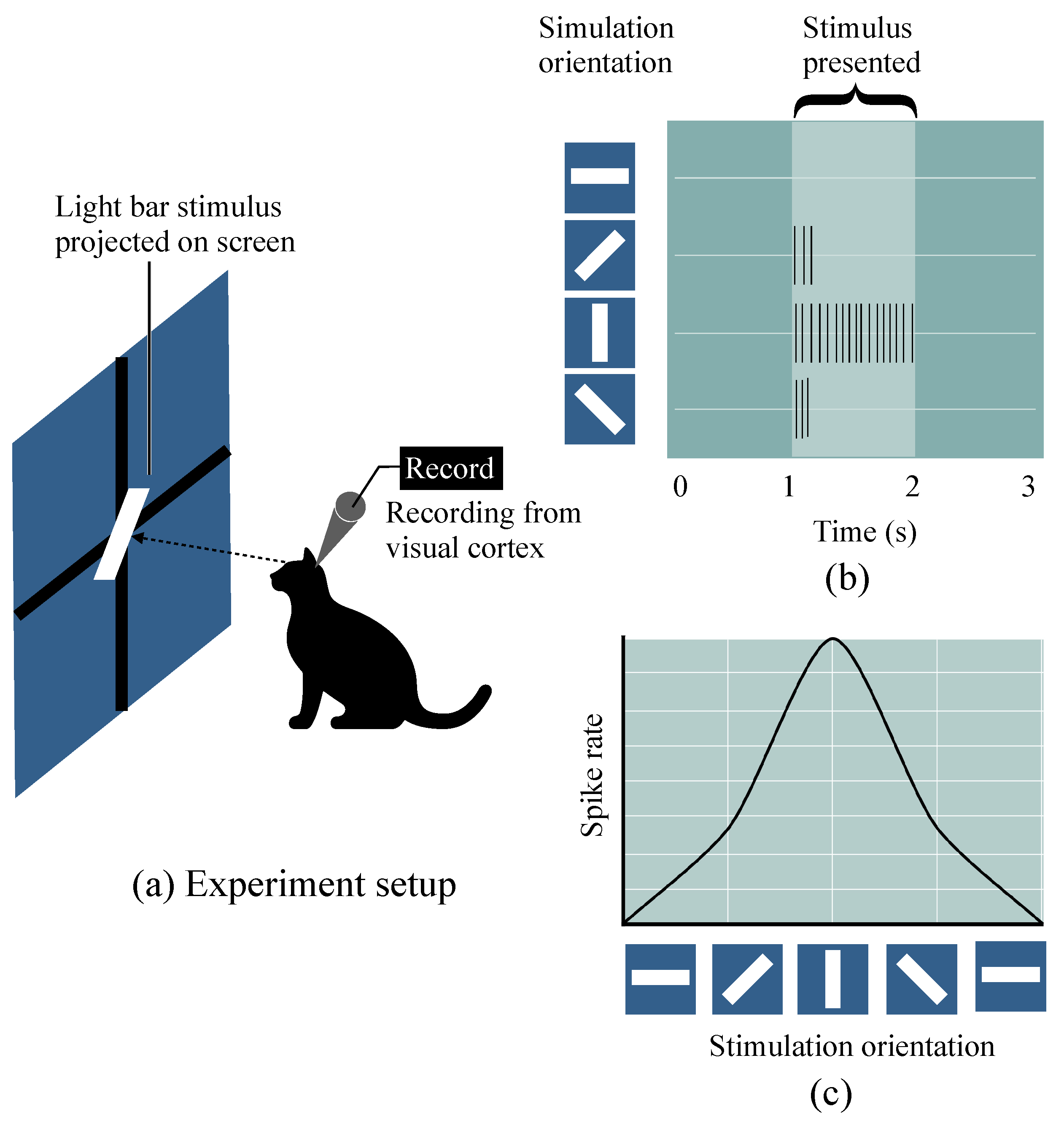

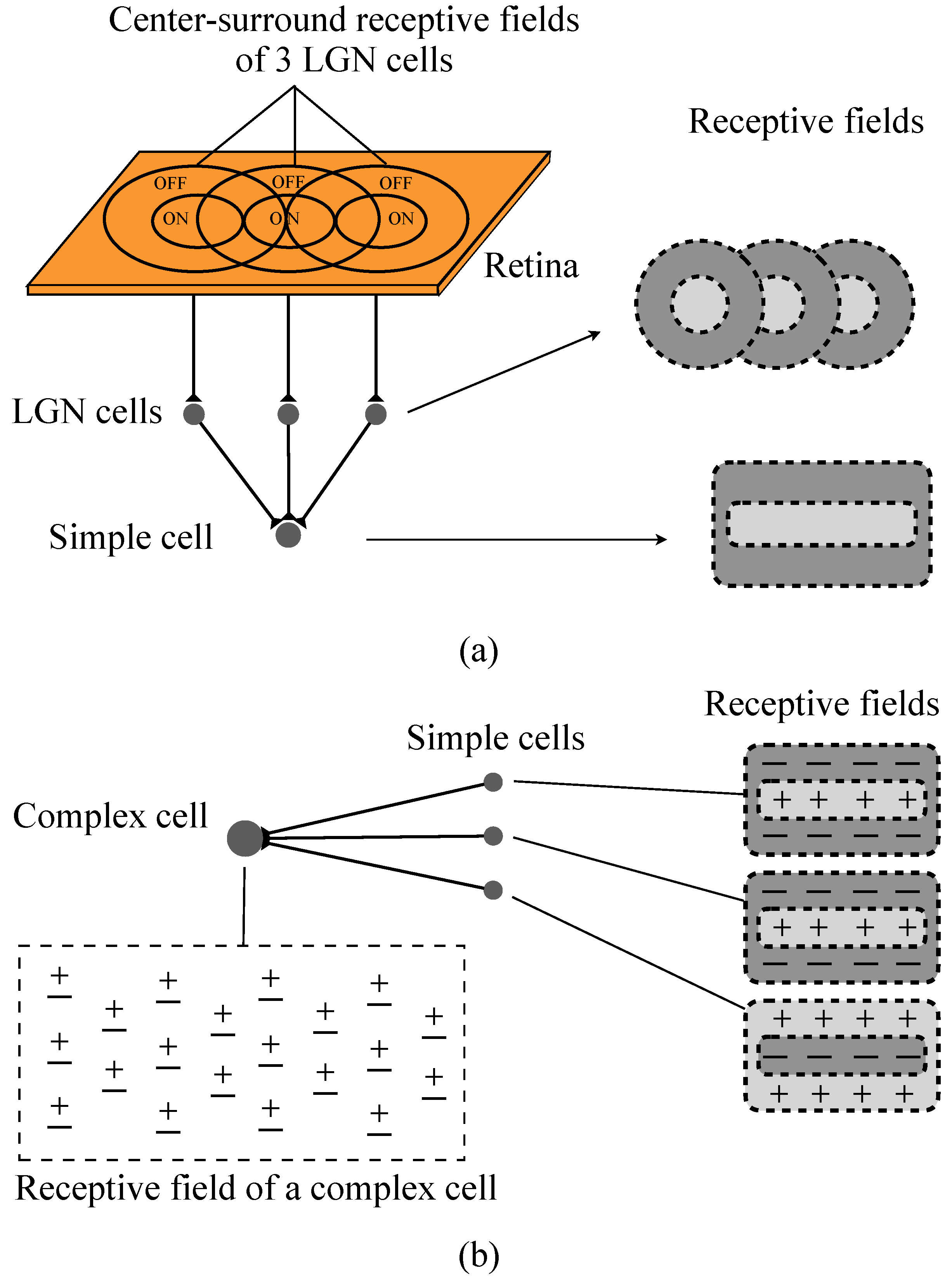

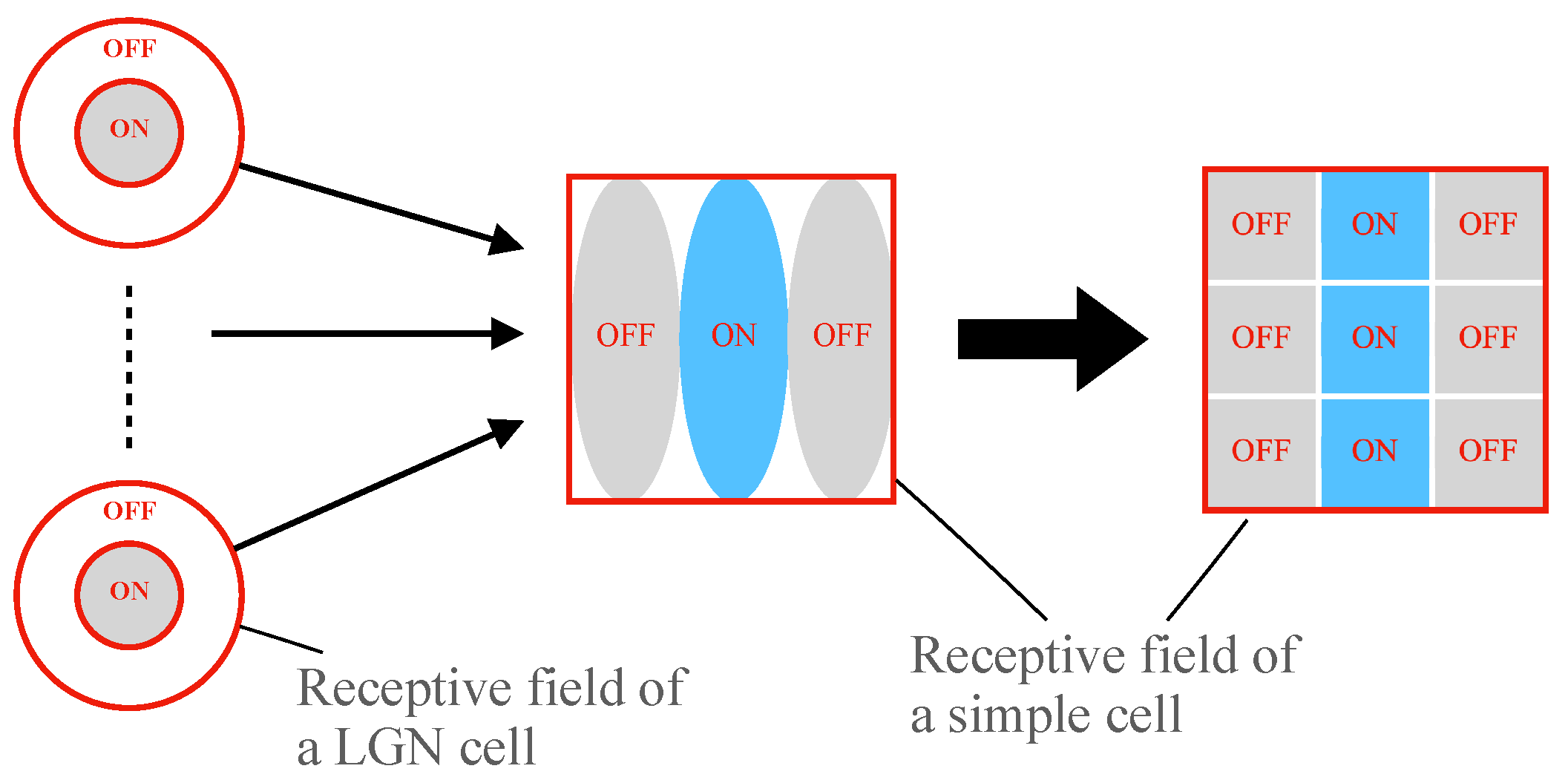

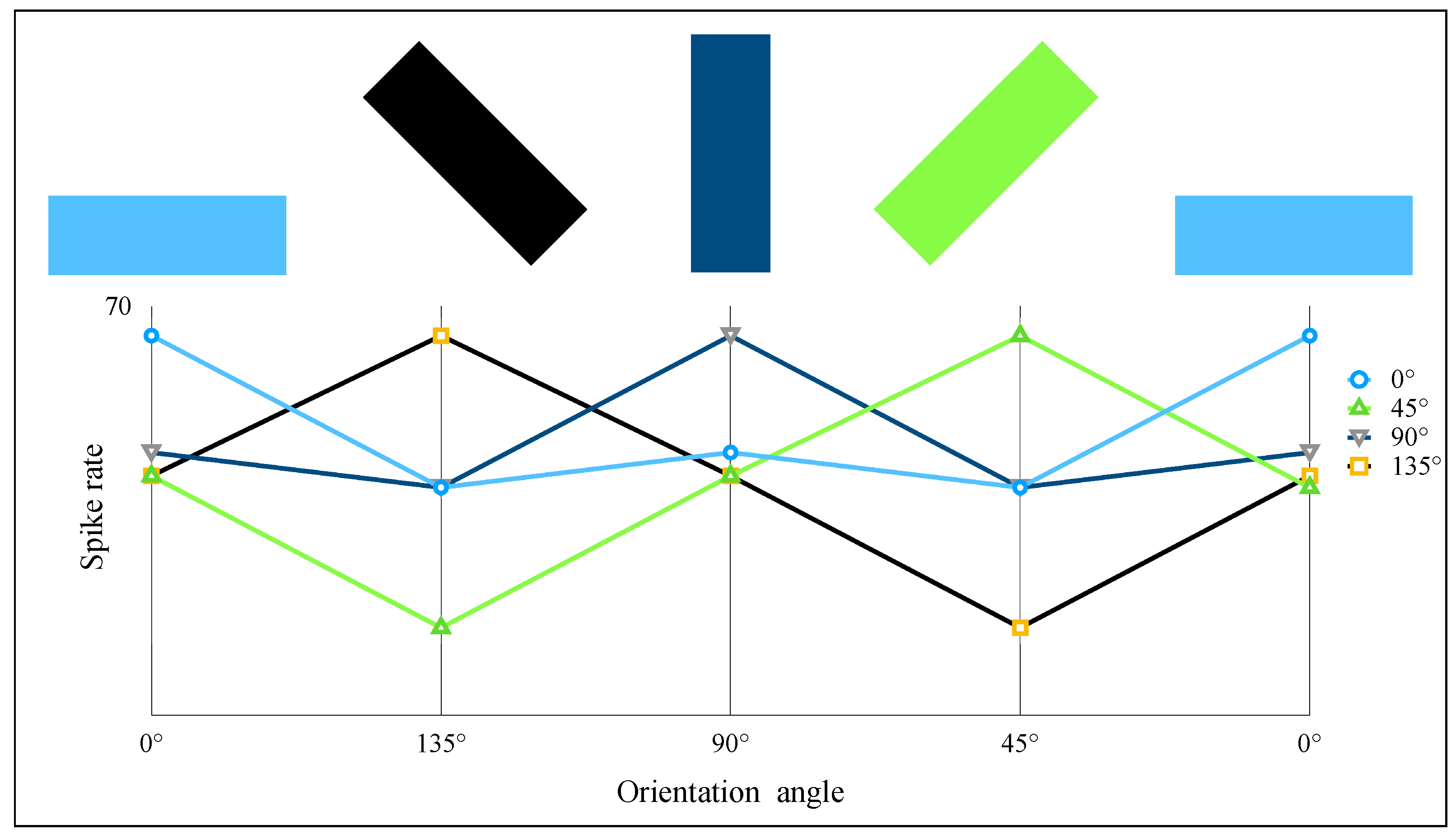

2.1. Hubel–Wiesel Model

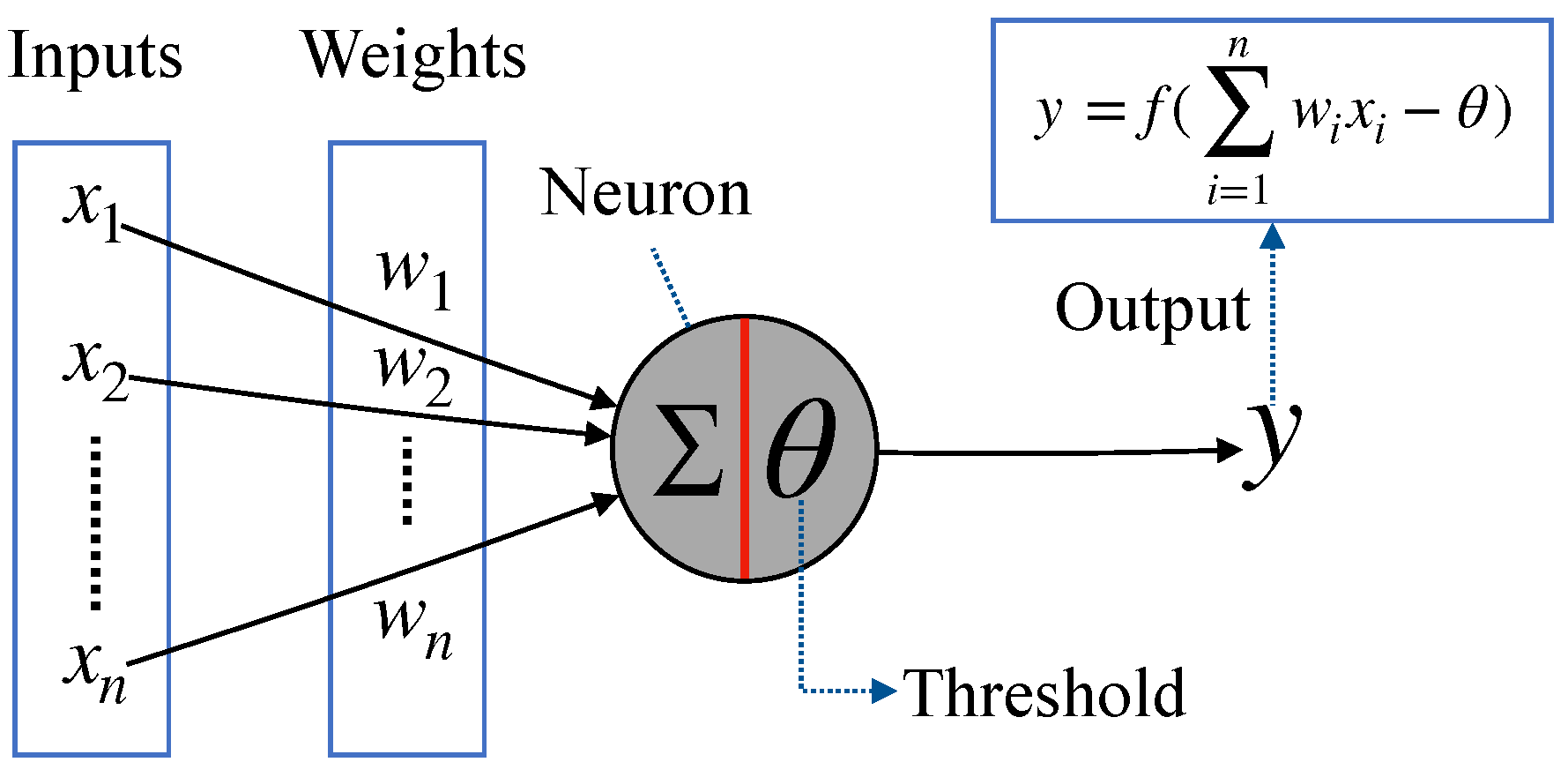

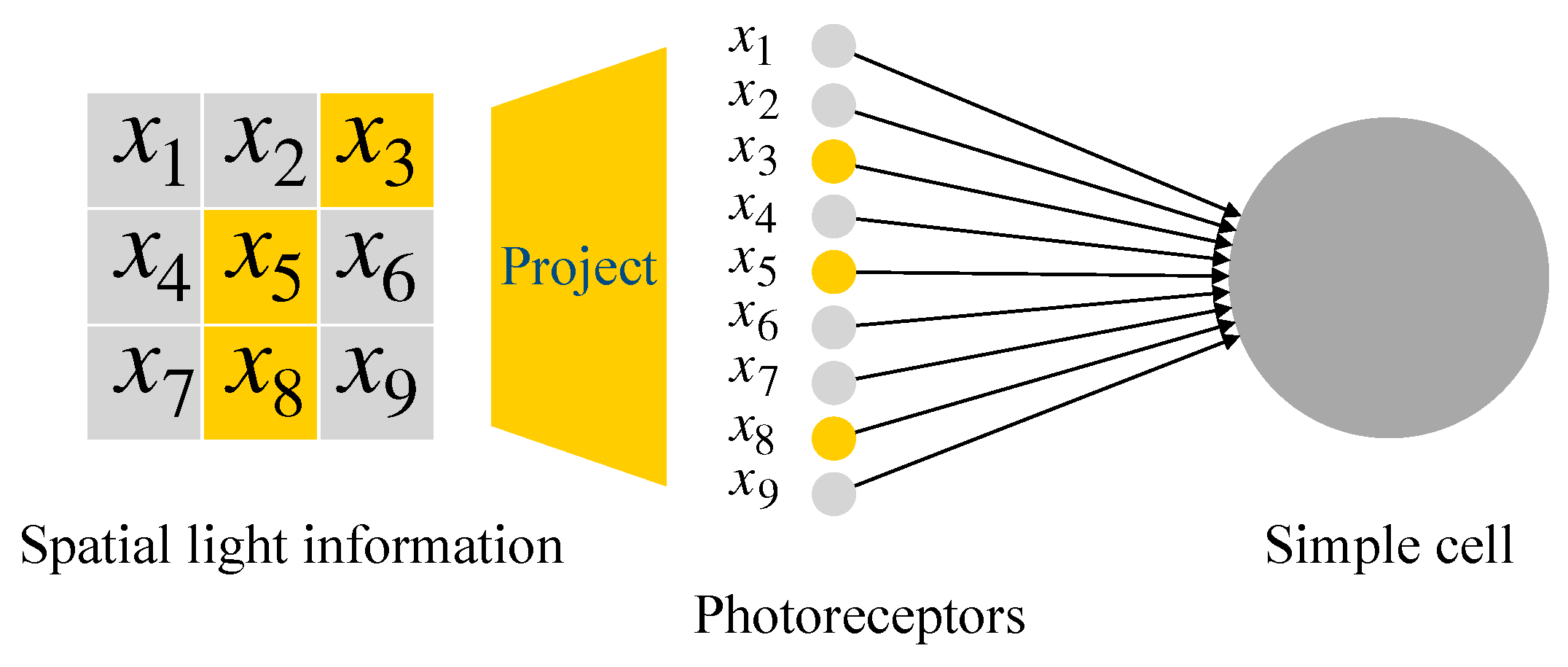

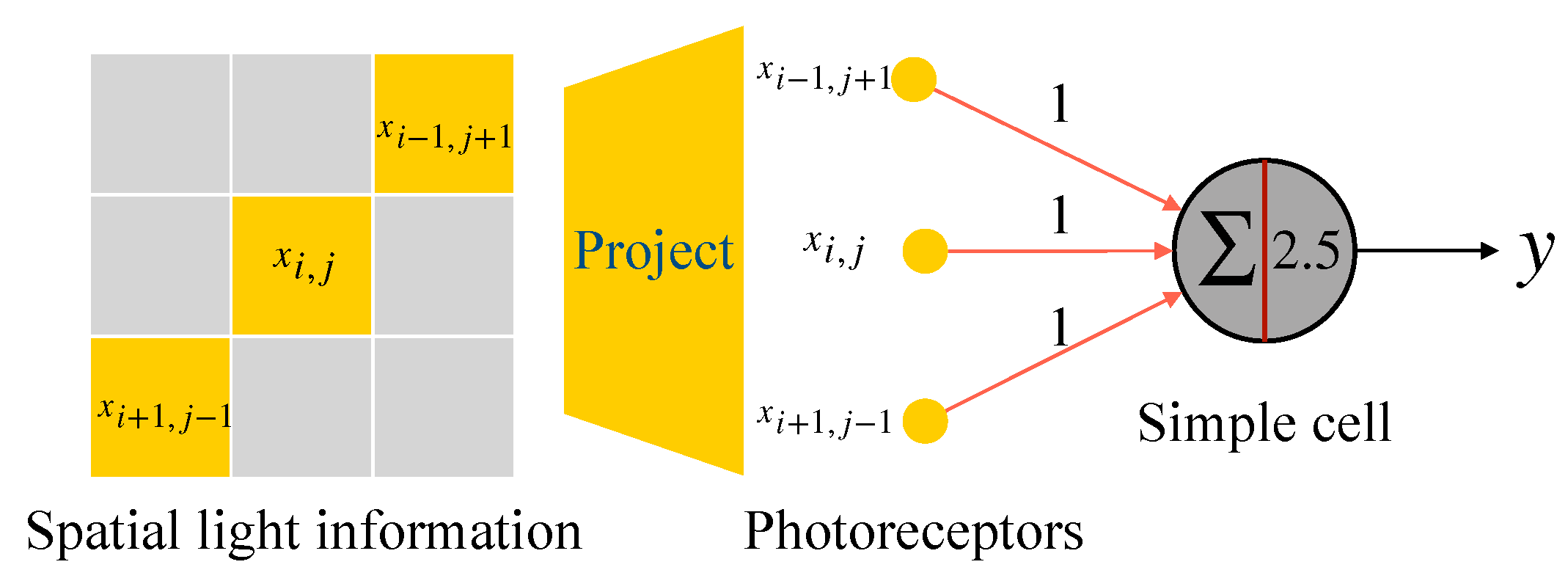

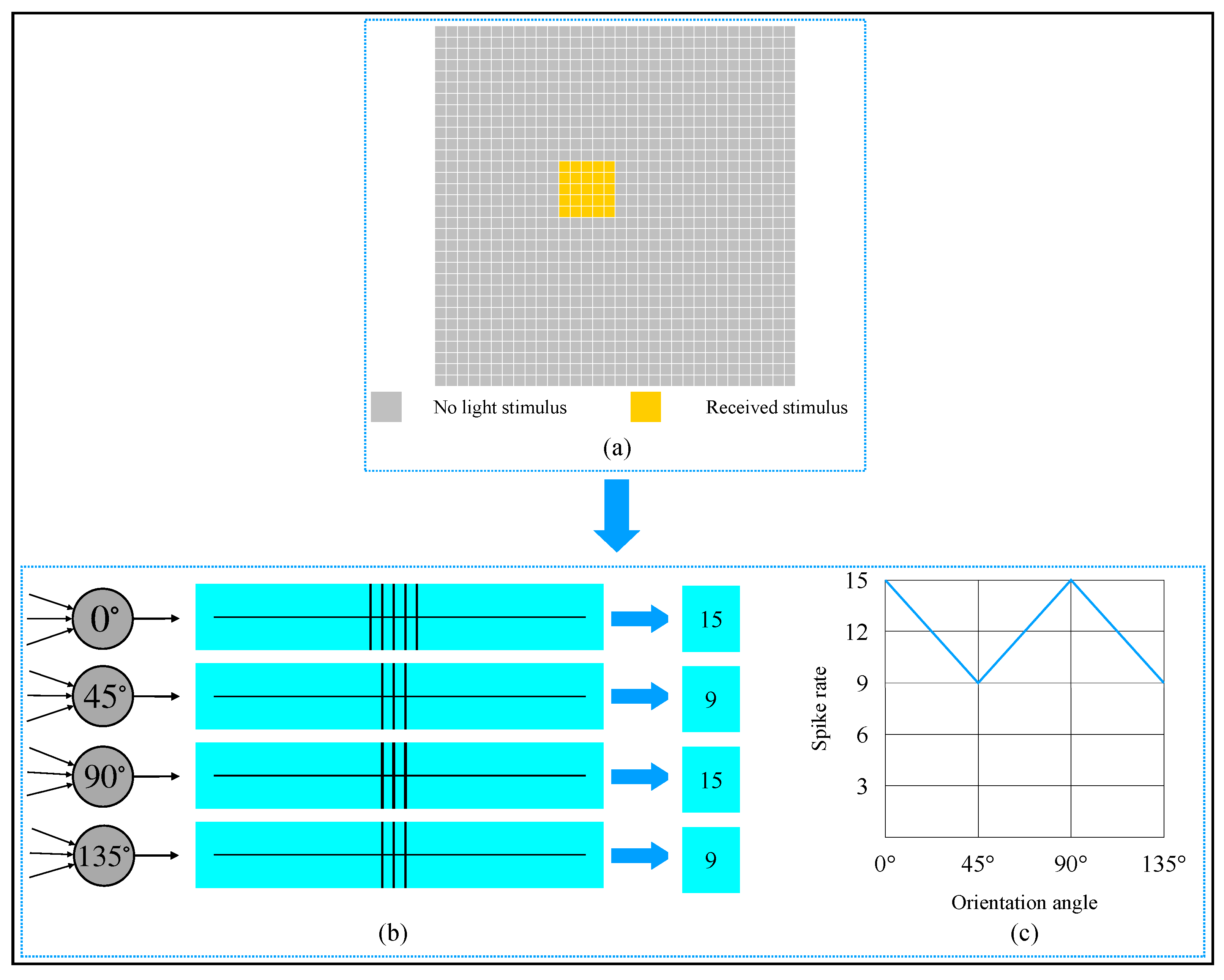

2.2. McCulloch-Pitts Neuron Model

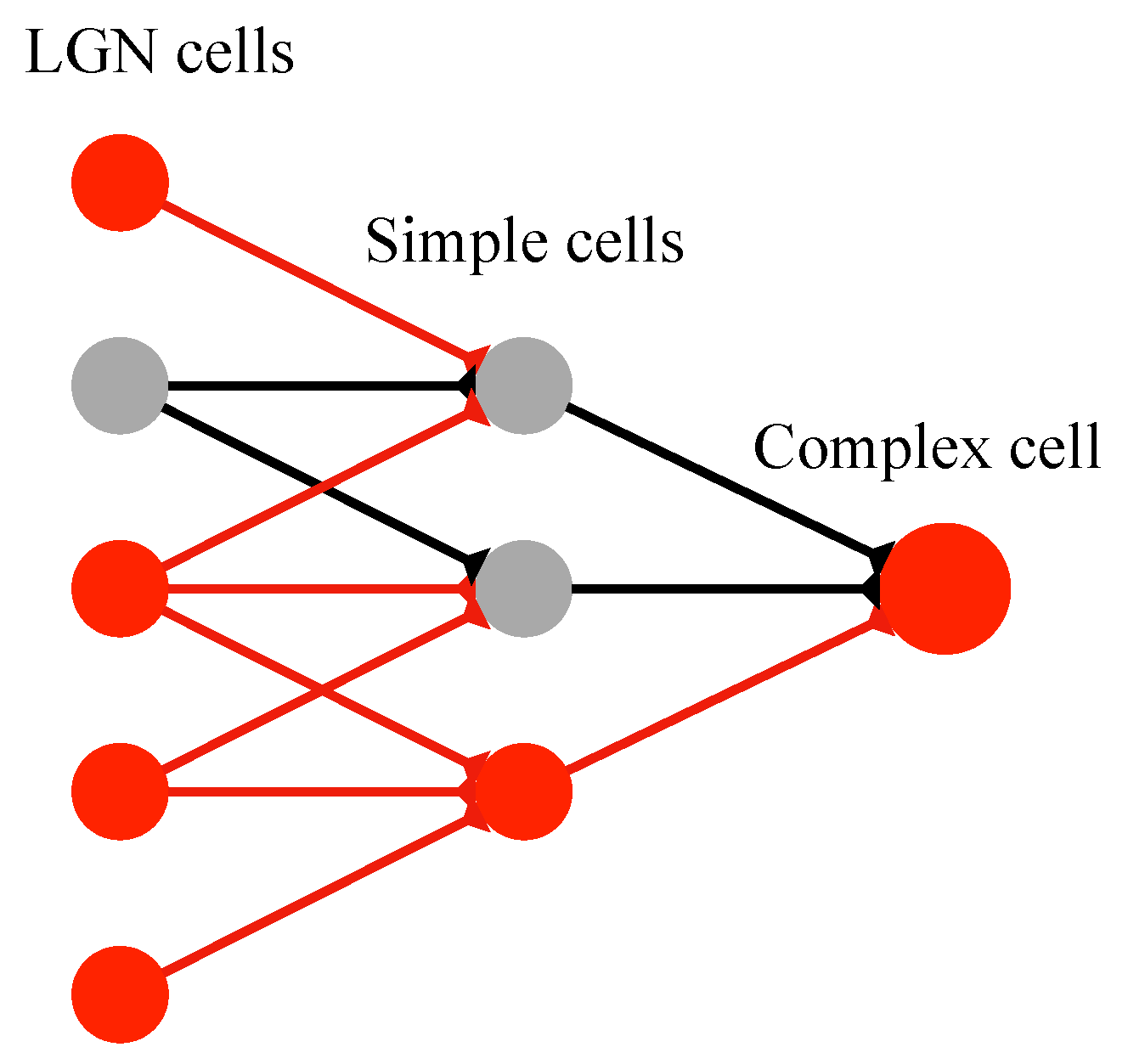

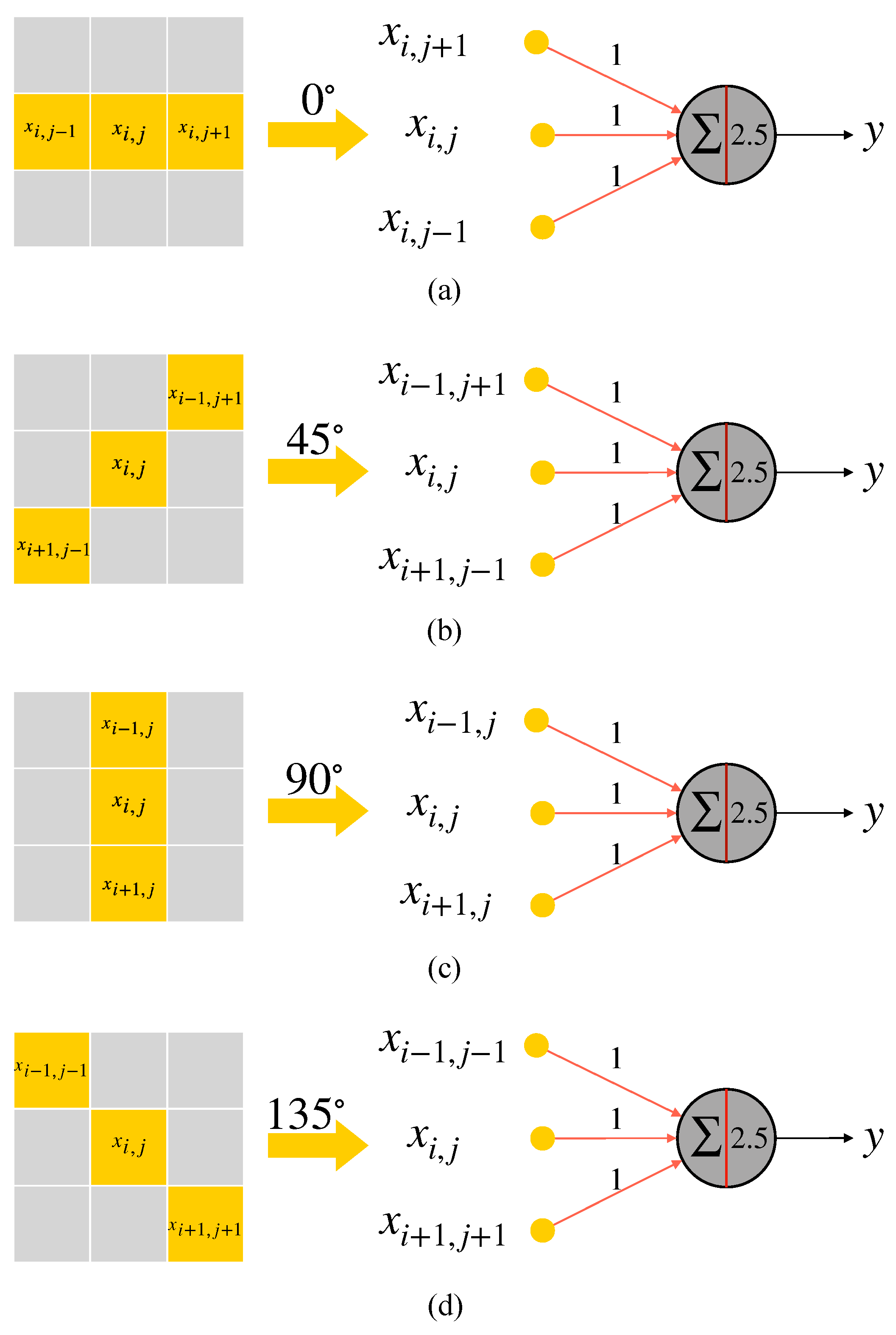

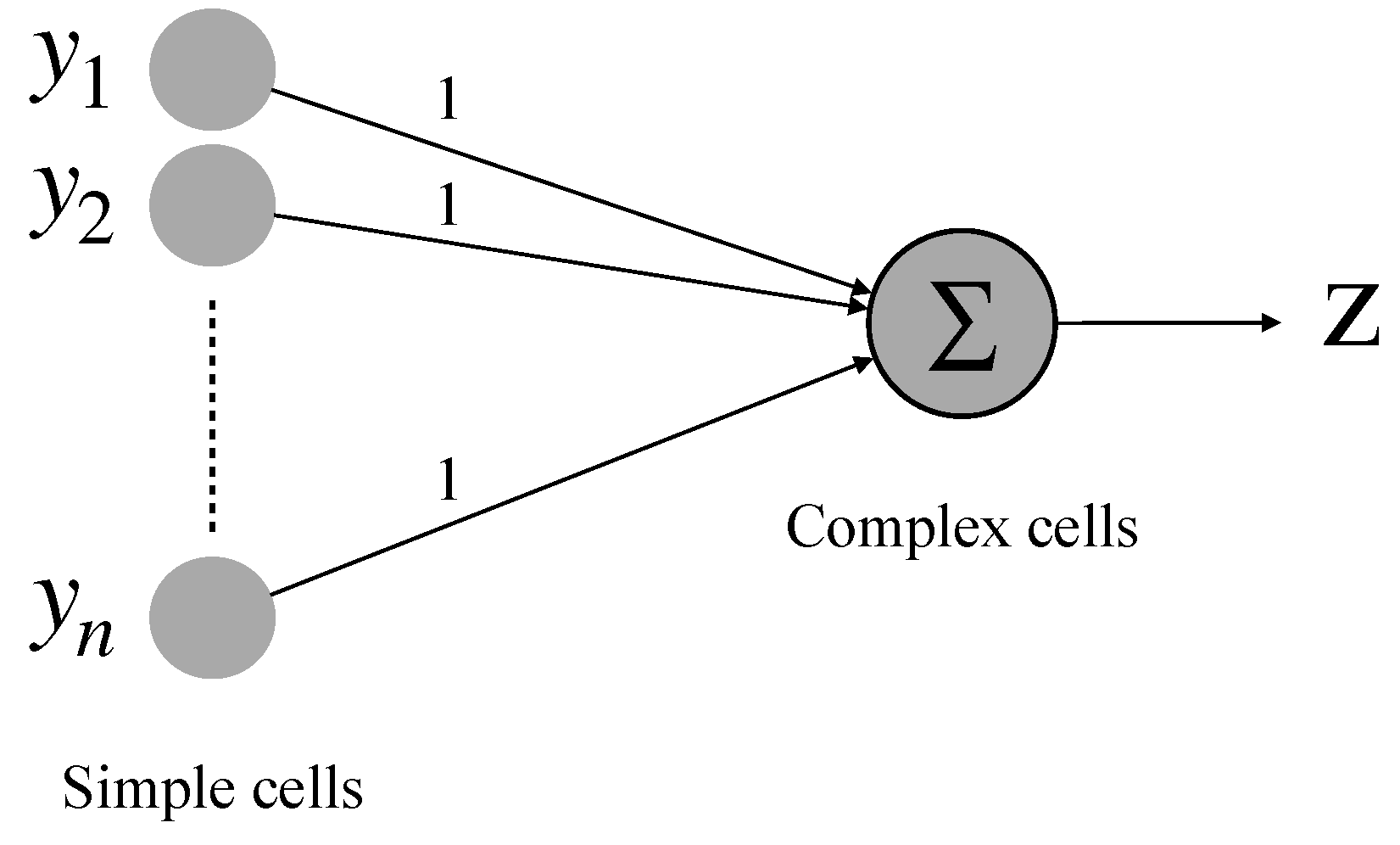

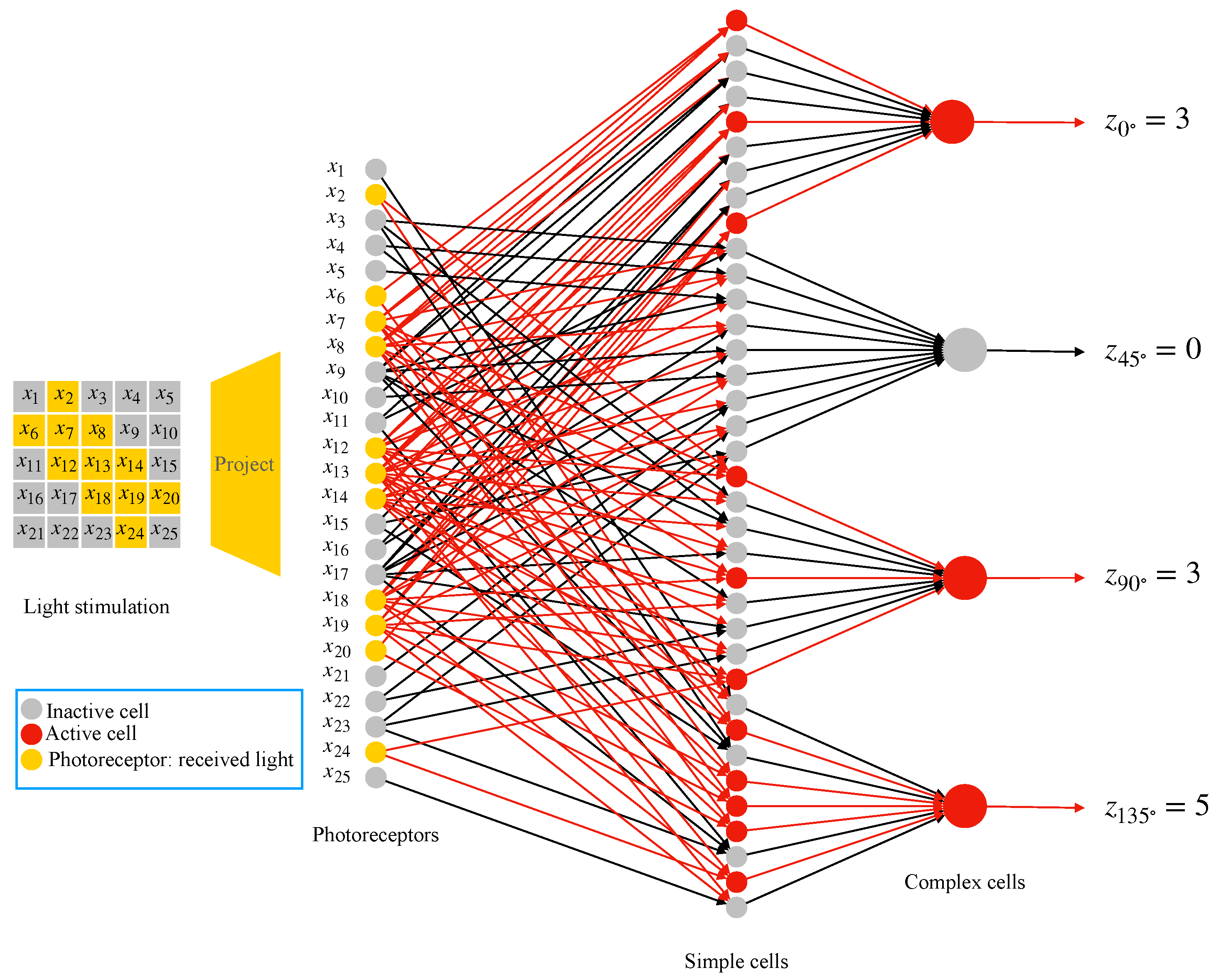

2.3. Realization of Simple Cell and Complex Cell

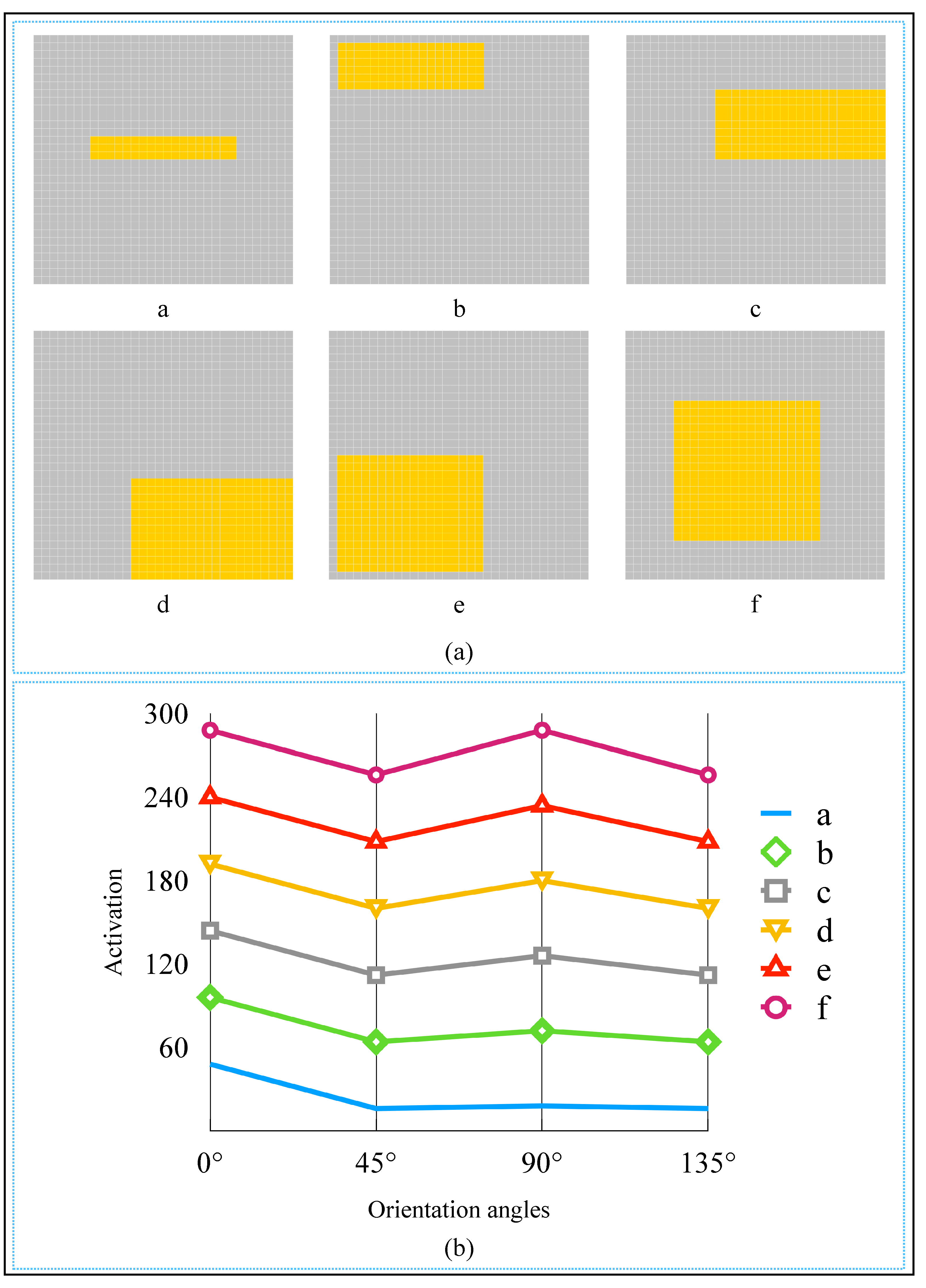

2.4. AVS for Global Orientation Detection

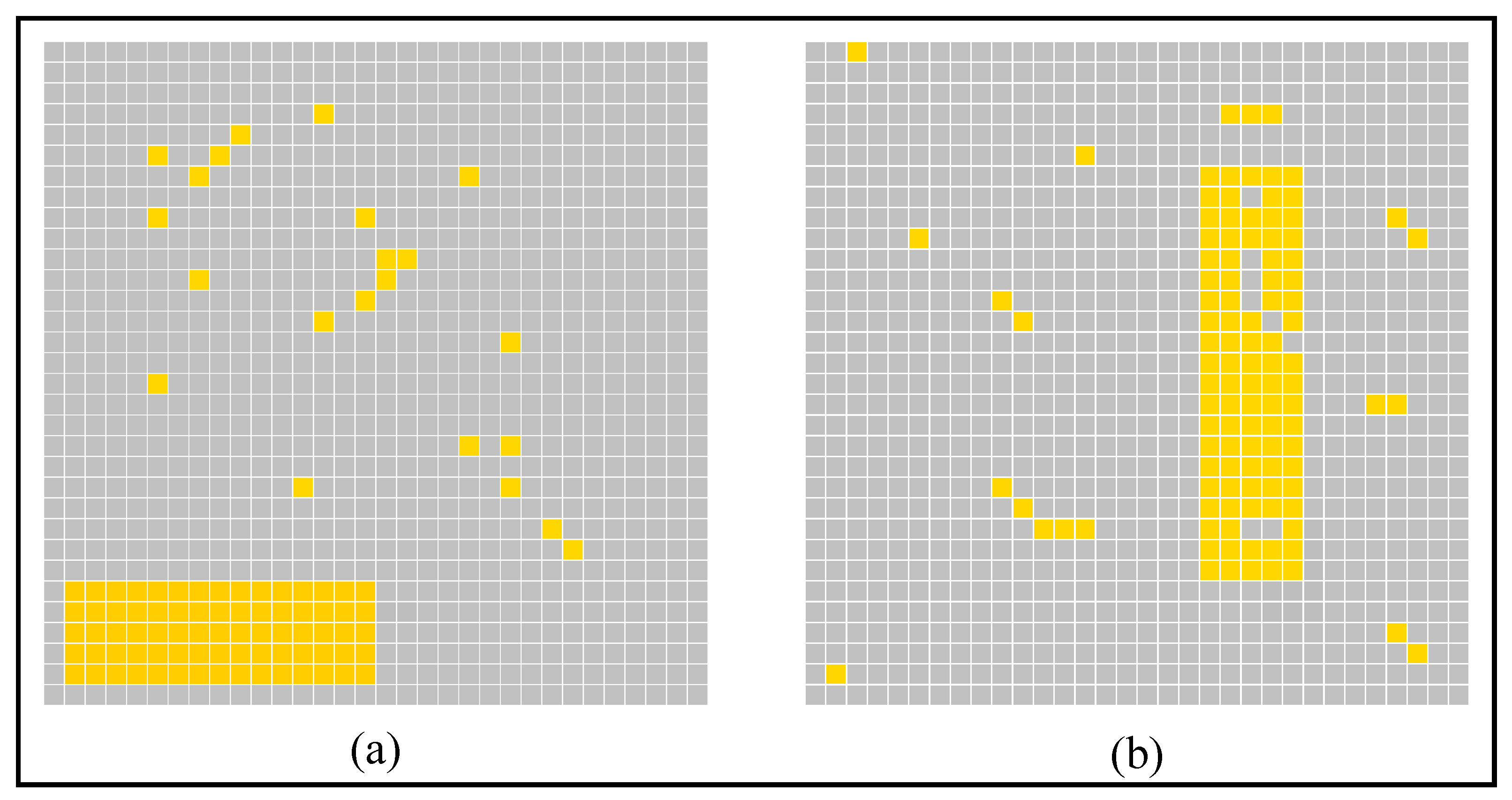

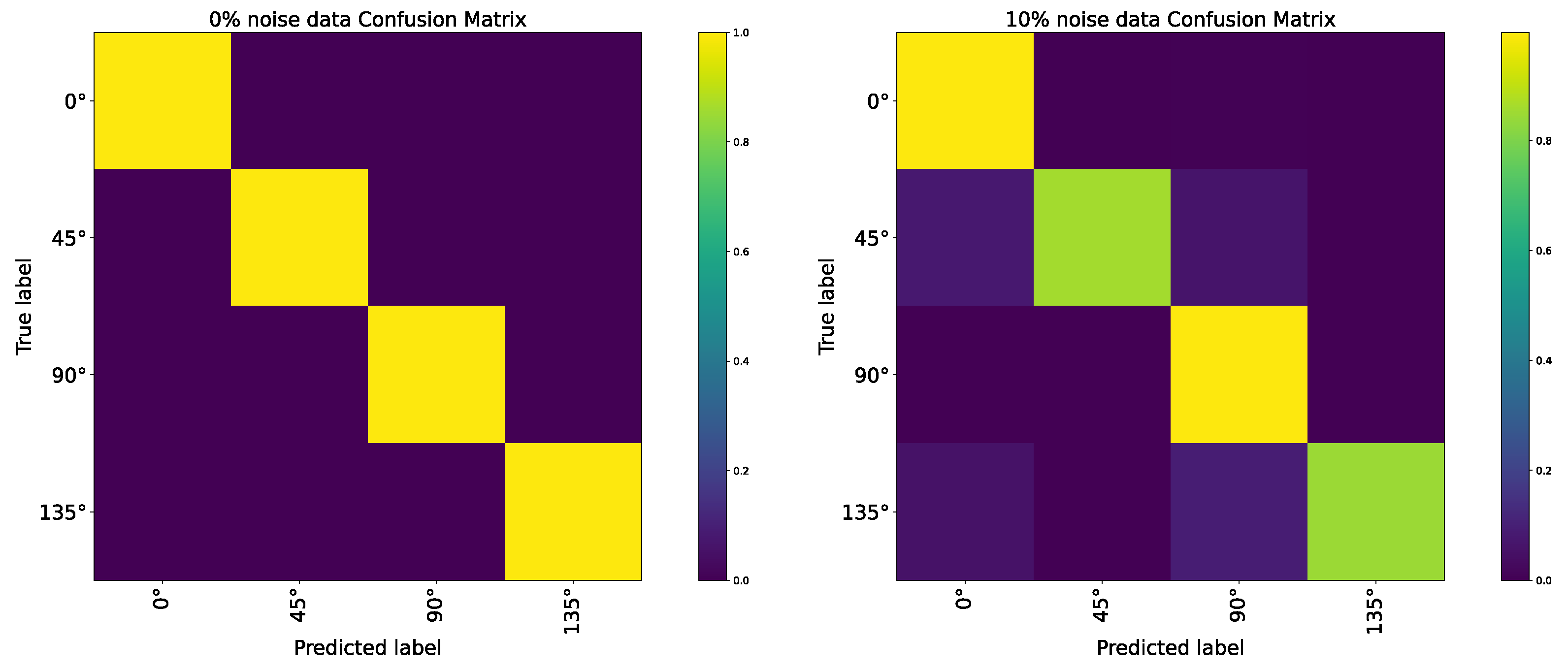

3. Simulation and Result

4. Discussion

- Effectiveness: AVS achieved 100% accuracy on the ideal-shape object datasets and on natural objects with a particular orientation, which shows that the detection mechanism and the mechanism-based AVS can detect the orientation of objects at different locations and of different sizes. The simulations of the biology-inspired experiments also showed that AVS is highly consistent with real physiological experiments [12].

- Robustness: compared with CNN on orientation detection tasks, AVS requires fewer computational resources yet achieves better performance and stronger noise resistance.

- Interpretability: the mechanism, structure, and parameters of the AVS for global orientation detection are all derived from the HW physiological model, so AVS requires no learning and saves considerable training resources. The CNN is a black box: it usually needs more training data or deeper networks to improve noise immunity, and it places requirements on the size of the input data. AVS needs no additional layers and is easier to accept and trust. Its calculation is straightforward and the image size does not need to fit the AVS, so its hardware implementation is also simpler than that of a CNN. If training AVS is desired, the perceptron algorithm can be used in place of the MP neuron model; training can then start from a better, more reasonable initial condition, which accelerates learning and helps avoid local minima.
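The points above can be illustrated with a minimal sketch. It is not the authors' implementation: the 3×3 receptive field, the four weight masks in `MASKS`, the value of `THRESHOLD`, and the helper names `mp_neuron`, `detect_orientation`, and `perceptron_step` are all assumptions chosen for illustration. The sketch shows fixed-weight MP neurons scanning a binary image of any size, a global decision taken from the activation counts, and a perceptron update that can start from the designed weights.

```python
import numpy as np

# Hypothetical 3x3 weight masks (an assumption, not taken from the paper):
# each selects the centre pixel and its two neighbours along one orientation.
MASKS = {
    0:   np.array([[0, 0, 0], [1, 1, 1], [0, 0, 0]]),
    45:  np.array([[0, 0, 1], [0, 1, 0], [1, 0, 0]]),
    90:  np.array([[0, 1, 0], [0, 1, 0], [0, 1, 0]]),
    135: np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]]),
}
THRESHOLD = 3  # assumed: fire only when all three selected pixels are on


def mp_neuron(patch, weights, threshold):
    """McCulloch-Pitts unit: binary inputs, fixed weights, hard threshold."""
    return int(np.sum(patch * weights) >= threshold)


def detect_orientation(image):
    """Scan every 3x3 window with the four neurons and count activations.

    Works for any image of at least 3x3 pixels; no resizing is needed.
    Returns the orientation with the most activations and all counts.
    """
    height, width = image.shape
    counts = {angle: 0 for angle in MASKS}
    for r in range(height - 2):
        for c in range(width - 2):
            patch = image[r:r + 3, c:c + 3]
            for angle, mask in MASKS.items():
                counts[angle] += mp_neuron(patch, mask, THRESHOLD)
    best = max(counts, key=counts.get)
    return best, counts


def perceptron_step(weights, bias, patch, target, lr=1.0):
    """One perceptron update; weights may be initialised from a designed mask."""
    output = int(np.sum(patch * weights) + bias >= 0)
    error = target - output
    return weights + lr * error * patch, bias + lr * error


# Usage: a horizontal bar in an 8x8 image and a diagonal in a 6x6 image.
bar = np.zeros((8, 8), dtype=int)
bar[3, 1:6] = 1
bar_angle, bar_counts = detect_orientation(bar)

diag = np.eye(6, dtype=int)
diag_angle, diag_counts = detect_orientation(diag)
```

Initialising `perceptron_step` with `MASKS[0].astype(float)` and a bias of `-THRESHOLD` reproduces the designed MP neuron, so a correctly classified patch leaves the weights unchanged; this is one way to read the remark that training can start from a reasonable initial condition.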

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AVS | Artificial Visual System |
| CNN | Convolutional Neural Network |
| HW | Hubel–Wiesel |
| MP | McCulloch-Pitts |

References

- Todo, Y.; Tang, Z.; Todo, H.; Ji, J.; Yamashita, K. Neurons with Multiplicative Interactions of Nonlinear Synapses. Int. J. Neural Syst. 2019, 29, 1950012.

- Fiske, S.T.; Taylor, S.E. Social Cognition, 2nd ed.; McGraw-Hill Education: New York, NY, USA, 1991.

- Medina, J. Brain Rules: 12 Principles for Surviving and Thriving at Work, Home, and School; Pear Press: Seattle, WA, USA, 2009.

- Viviani, P.; Aymoz, C. Colour, form, and movement are not perceived simultaneously. Vis. Res. 2001, 41, 2909–2918.

- Mauss, A.S.; Vlasits, A.; Borst, A.; Feller, M. Visual Circuits for Direction Selectivity. Annu. Rev. Neurosci. 2017, 40, 211–230.

- Nguyen, G.; Freeman, A.W. A model for the origin and development of visual orientation selectivity. PLoS Comput. Biol. 2019, 15, e1007254.

- Chariker, L.; Shapley, R.; Young, L. Orientation Selectivity from Very Sparse LGN Inputs in a Comprehensive Model of Macaque V1 Cortex. J. Neurosci. 2016, 36, 12368–12384.

- Roth, Z.N.; Heeger, D.J.; Merriam, E.P. Stimulus vignetting and orientation selectivity in human visual cortex. eLife 2018, 7, e37241.

- Garg, A.K.; Li, P.; Rashid, M.S.; Callaway, E.M. Color and orientation are jointly coded and spatially organized in primate primary visual cortex. Science 2019, 364, 1275–1279.

- Hubel, D.H. Exploration of the primary visual cortex, 1955–1978. Nature 1982, 299, 515–524.

- Hubel, D.H.; Wiesel, T.N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106–154.

- Hubel, D.H.; Wiesel, T.N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 1959, 148, 574–591.

- Hubel, D.H.; Wiesel, T.N. Receptive fields and functional architecture of monkey striate cortex. J. Physiol. 1968, 195, 215–243.

- Alonso, J.M.; Martinez, L.M. Functional connectivity between simple cells and complex cells in cat striate cortex. Nat. Neurosci. 1998, 1, 395–403.

- Antolik, J.; Bednar, J. Development of Maps of Simple and Complex Cells in the Primary Visual Cortex. Front. Comput. Neurosci. 2011, 5, 17.

- Blasdel, G. Orientation selectivity, preference, and continuity in monkey striate cortex. J. Neurosci. 1992, 12, 3139–3161.

- Kandel, E.R. Principles of Neural Science; McGraw-Hill Medical: New York, NY, USA, 2012.

- Reid, R.C.; Alonso, J.M. Specificity of monosynaptic connections from thalamus to visual cortex. Nature 1995, 378, 281.

- Ferster, D.; Chung, S.; Wheat, H. Orientation selectivity of thalamic input to simple cells of cat visual cortex. Nature 1996, 380, 249–252.

- Hubel, D. A big step along the visual pathway. Nature 1996, 380, 197–198.

- Reid, R.C.; Alonso, J.M. Introduction to Principal Components Analysis. Quasars Cosmol. 1999, 162, 363–372.

- Knutsson, H. Filtering and Reconstruction in Image Processing. Ph.D. Thesis, Linköping University, Linköping, Sweden, 1982.

- Veeser, S.; Cumming, D. Object Position and Orientation Detection System. U.S. Patent 9,536,163, 3 January 2017.

- Chen, Y.; Gong, W.; Chen, C.; Li, W. Learning Orientation-Estimation Convolutional Neural Network for Building Detection in Optical Remote Sensing Image. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–8.

- Fischer, P.; Dosovitskiy, A.; Brox, T. Image Orientation Estimation with Convolutional Networks. In Pattern Recognition; Gall, J., Gehler, P., Leibe, B., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 368–378.

- De Aliva, A. Object Orientation Detection and Correction Using Computer Vision. Master’s Thesis, St. Cloud State University, St. Cloud, MN, USA, 2020.

- Xu, Y.; Lv, C.; Li, S.; Xin, P.; Ma, S.; Zou, H.; Zhang, W. Review of development of visual neural computing. Comput. Eng. Appl. 2017, 24, 30–34.

- Kumar, M.; Som, T.; Kim, J. A transparent photonic artificial visual cortex. Adv. Mater. 2019, 31, 1903095.

- Kwon, S.M.; Cho, S.W.; Kim, M.; Heo, J.S.; Kim, Y.H.; Park, S.K. Environment-adaptable artificial visual perception behaviors using a light-adjustable optoelectronic neuromorphic device array. Adv. Mater. 2019, 31, 1906433.

- Hao, D.; Zhang, J.; Dai, S.; Zhang, J.; Huang, J. Perovskite/organic semiconductor-based photonic synaptic transistor for artificial visual system. ACS Appl. Mater. Interfaces 2020, 12, 39487–39495.

- Lian, Y.; Almasi, A.; Grayden, D.B.; Kameneva, T.; Burkitt, A.N.; Meffin, H. Learning receptive field properties of complex cells in V1. PLoS Comput. Biol. 2021, 17, e1007957.

- Karklin, Y.; Lewicki, M.S. Emergence of complex cell properties by learning to generalize in natural scenes. Nature 2009, 457, 83–86.

- Miller, K.D. A model for the development of simple cell receptive fields and the ordered arrangement of orientation columns through activity-dependent competition between ON- and OFF-center inputs. J. Neurosci. 1994, 14, 409–441.

- Olague, G.; Clemente, E.; Hernandez, D.E.; Barrera, A.; Chan-Ley, M.; Bakshi, S. Artificial visual cortex and random search for object categorization. IEEE Access 2019, 7, 54054–54072.

- Ullman, S. Artificial intelligence and the brain: Computational studies of the visual system. Annu. Rev. Neurosci. 1986, 9, 1–26.

- Barranco, F.; Díaz, J.; Ros, E.; Del Pino, B. Visual system based on artificial retina for motion detection. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2009, 39, 752–762.

- Purves, D.; Augustine, G.J.; Fitzpatrick, D.; Hall, W.C.; LaMantia, A.-S.; McNamara, J.O.; Williams, S.M. Neuroscience, 3rd ed.; Sinauer Associates: Sunderland, MA, USA, 2004.

- Bear, M.; Connors, B.; Paradiso, M.A. Neuroscience: Exploring the Brain; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2002.

- Zhang, L.; Zhang, B. A geometrical representation of McCulloch-Pitts neural model and its applications. IEEE Trans. Neural Netw. 1999, 10, 925–929.

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 5, 115–133.

| Image Data | Metric | 0° | 45° | 90° | 135° |
|---|---|---|---|---|---|
| 3 pixel | No. of samples | 960 | 900 | 960 | 900 |
| | Correct numbers | 960 | 900 | 960 | 900 |
| | Accuracy | 100% | 100% | 100% | 100% |
| 4 pixel | No. of samples | 928 | 841 | 928 | 841 |
| | Correct numbers | 928 | 841 | 928 | 841 |
| | Accuracy | 100% | 100% | 100% | 100% |
| 8 pixel | No. of samples | 1699 | 2249 | 1699 | 2249 |
| | Correct numbers | 1699 | 2249 | 1699 | 2249 |
| | Accuracy | 100% | 100% | 100% | 100% |
| 12 pixel | No. of samples | 2379 | 3411 | 2379 | 3411 |
| | Correct numbers | 2379 | 3411 | 2379 | 3411 |
| | Accuracy | 100% | 100% | 100% | 100% |
| 16 pixel | No. of samples | 1319 | 1489 | 1319 | 1489 |
| | Correct numbers | 1319 | 1489 | 1319 | 1489 |
| | Accuracy | 100% | 100% | 100% | 100% |
| 32 pixel | No. of samples | 1284 | 1645 | 1284 | 1645 |
| | Correct numbers | 1284 | 1645 | 1284 | 1645 |
| | Accuracy | 100% | 100% | 100% | 100% |
| ≥48 pixel | No. of samples | 2515 | 1275 | 2515 | 1275 |
| | Correct numbers | 2515 | 1275 | 2515 | 1275 |
| | Accuracy | 100% | 100% | 100% | 100% |

| Type of Noise (by proportion of noise) | 0% | 5% | 10% | 15% | 20% | 25% | 30% |
|---|---|---|---|---|---|---|---|
| Background noise | 100% | 100% | 100% | 99.911% | 98.571% | 95.289% | 91.851% |
| Whole-image noise | 100% | 99.970% | 98.772% | 95.036% | 87.602% | 78.382% | 67.771% |

| Method (by proportion of noise) | 0% | 1% | 2% | 3% | 4% | 5% | 6% | 7% | 8% | 9% | 10% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| AVS | 100% | 100% | 100% | 100% | 99.963% | 99.963% | 99.926% | 99.739% | 99.330% | 99.032% | 98.772% |
| CNN | 100% | 85.225% | 55.862% | 43.431% | 39.747% | 37.514% | 36.323% | 34.090% | 32.006% | 29.996% | 29.028% |

| Method (by proportion of noise) | 0% | 1% | 2% | 3% | 4% | 5% | 6% | 7% | 8% | 9% | 10% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| AVS | 100% | 99.609% | 99.219% | 98.906% | 97.578% | 97.109% | 96.094% | 95.547% | 94.766% | 92.813% | 92.422% |
| CNN | 92.813% | 54.766% | 48.705% | 42.500% | 38.281% | 36.484% | 34.922% | 33.828% | 32.734% | 31.719% | 31.406% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, B.; Todo, Y.; Tang, Z. Artificial Visual System for Orientation Detection Based on Hubel–Wiesel Model. Brain Sci. 2022, 12, 470. https://doi.org/10.3390/brainsci12040470