A Framework for Instantaneous Driver Drowsiness Detection Based on Improved HOG Features and Naïve Bayesian Classification

Abstract

1. Introduction

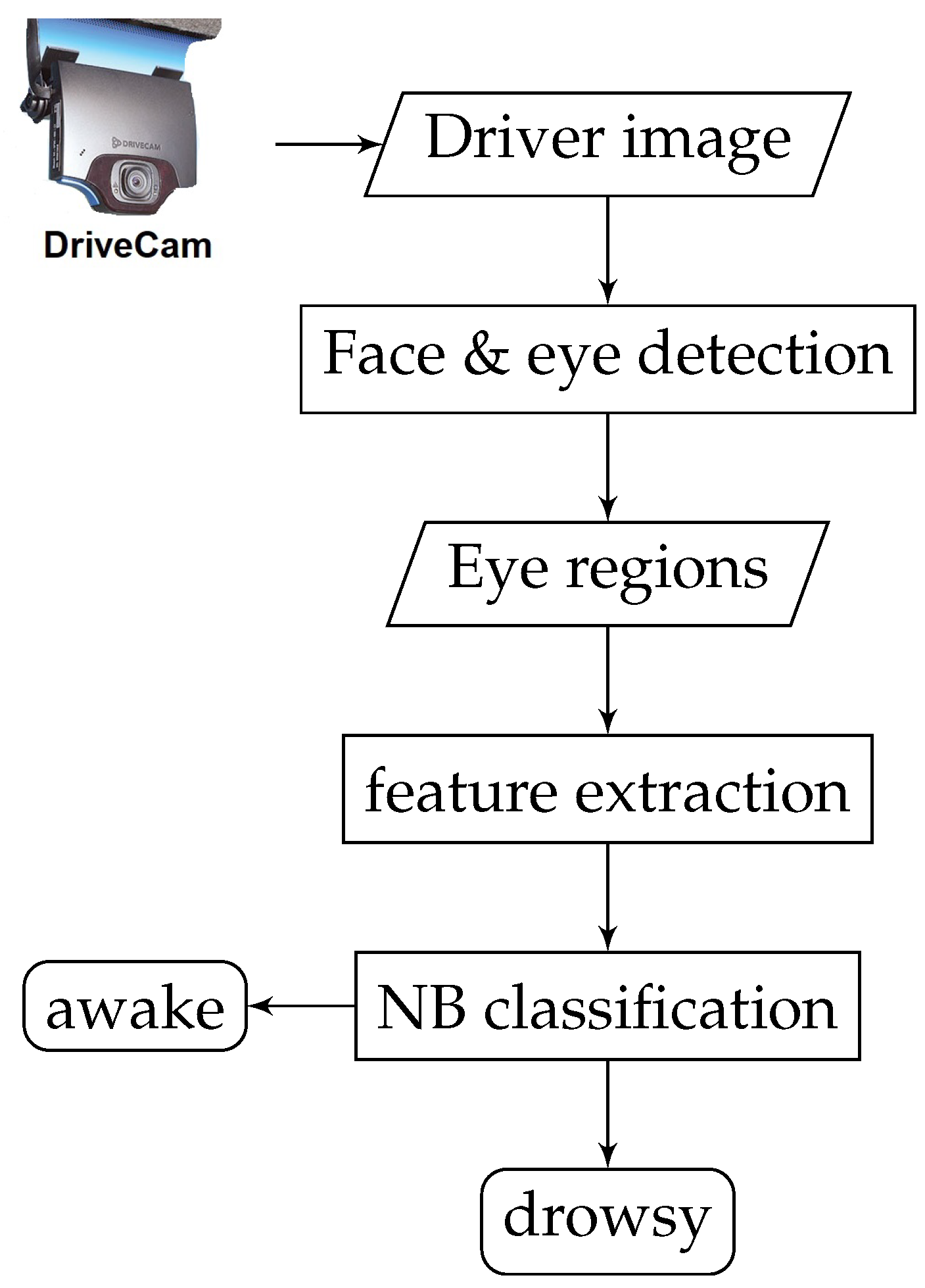

2. Proposed Architecture

2.1. Image Preprocessing

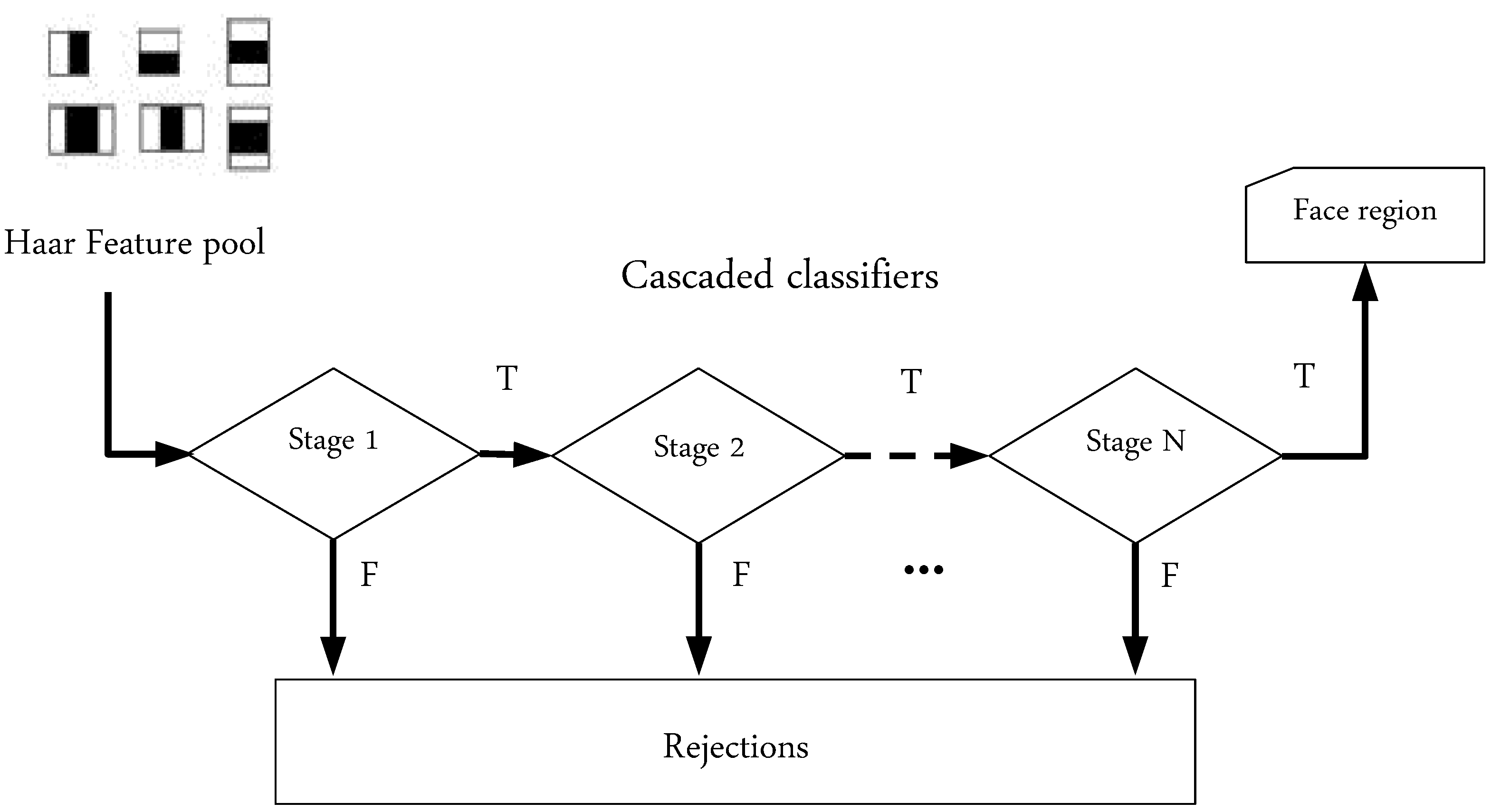

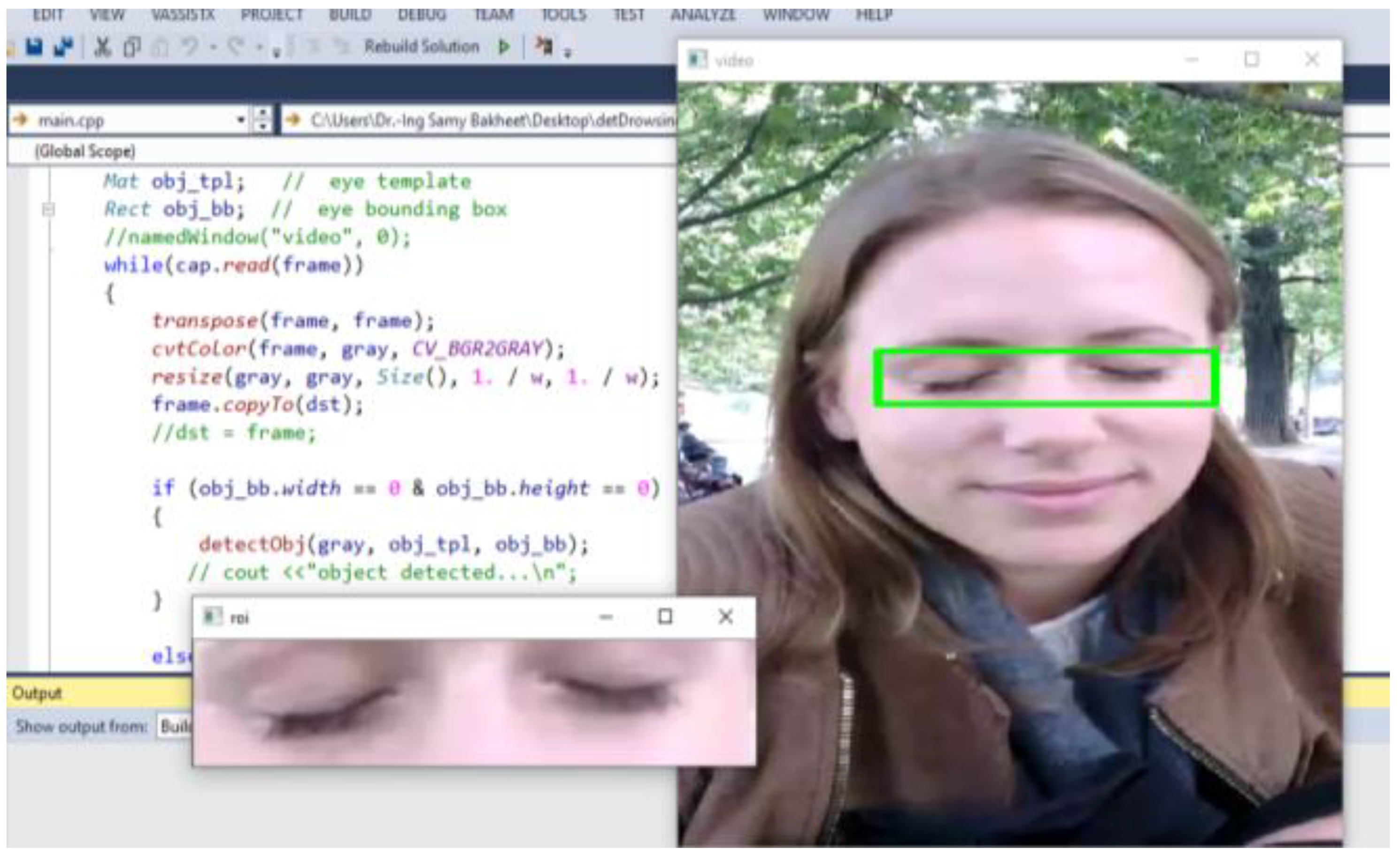

2.2. Eye Localization

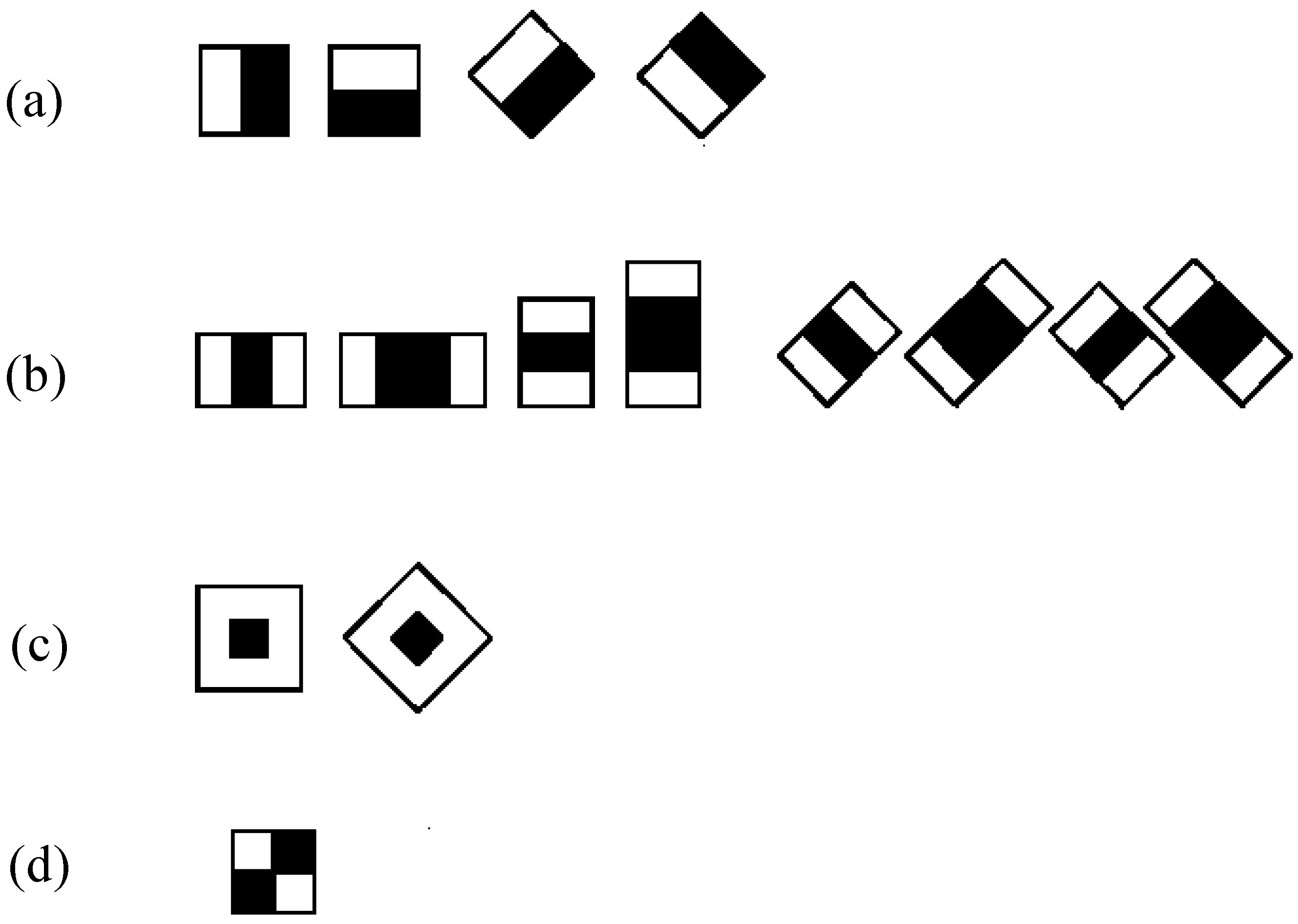

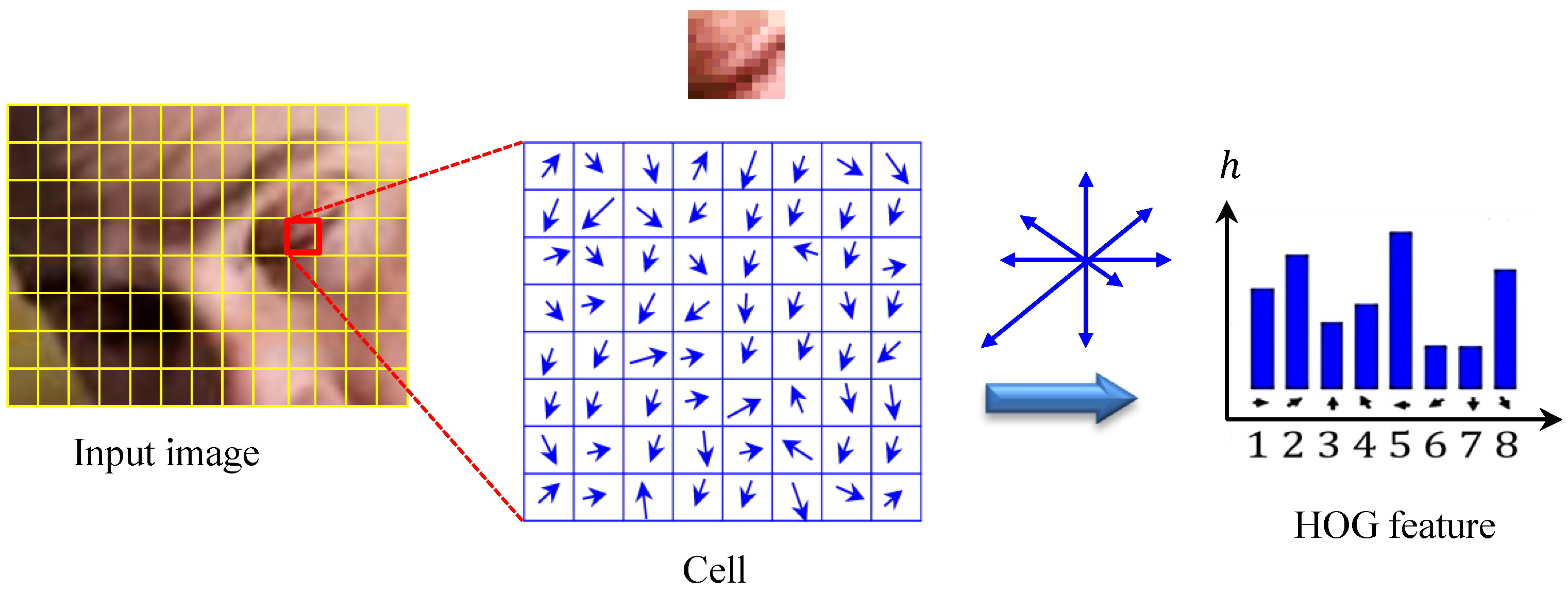

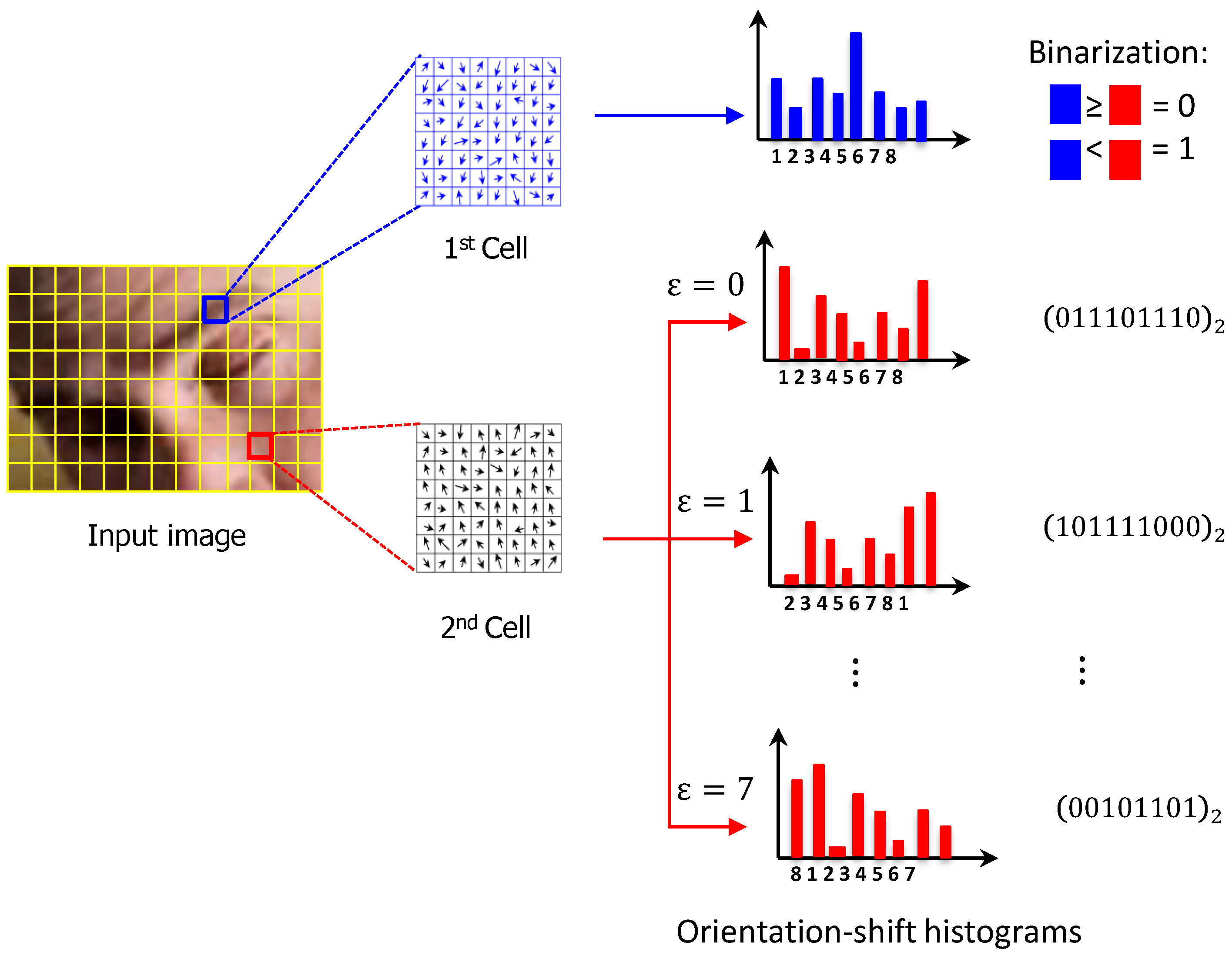

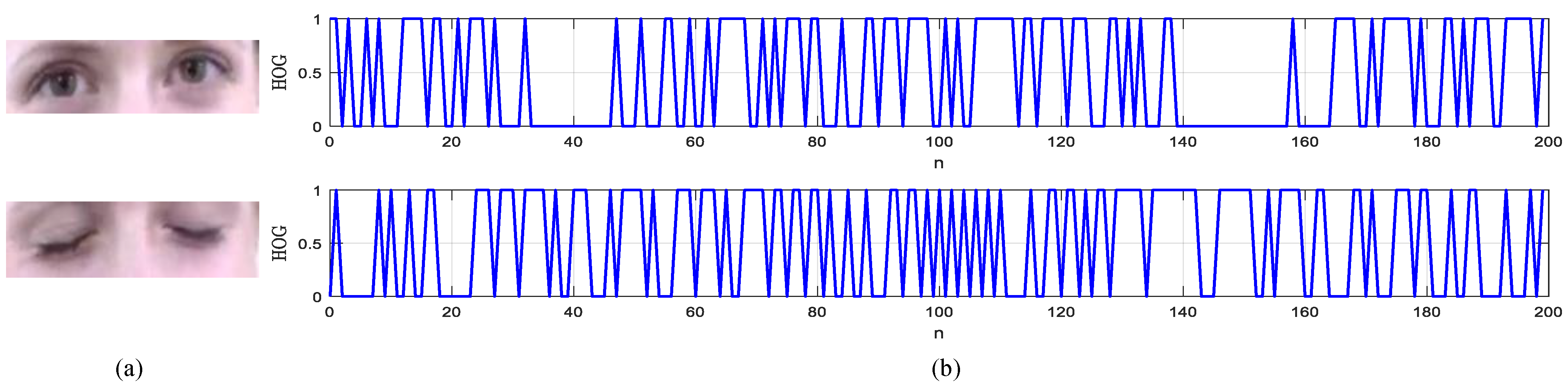

2.3. Feature Extraction

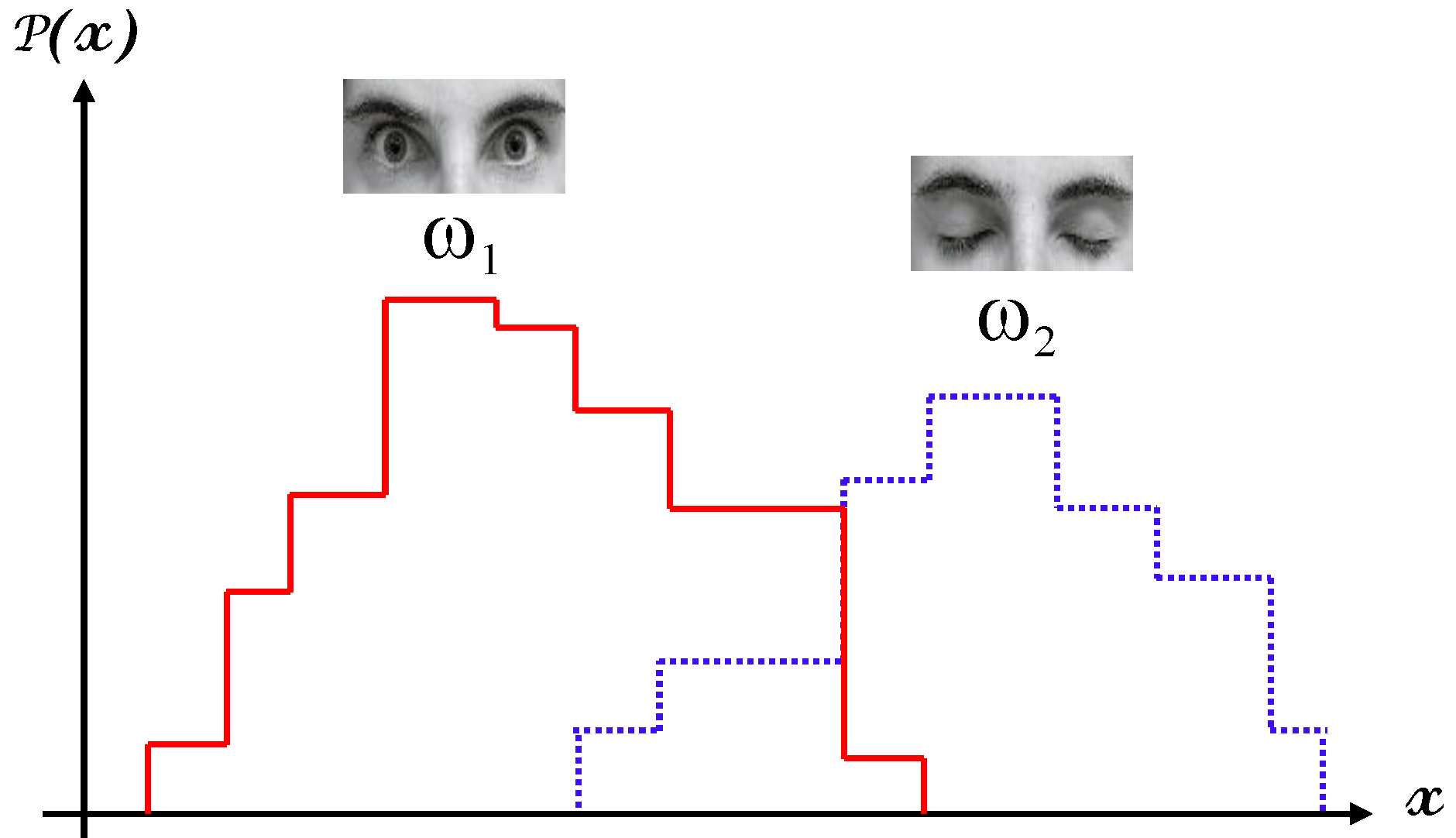

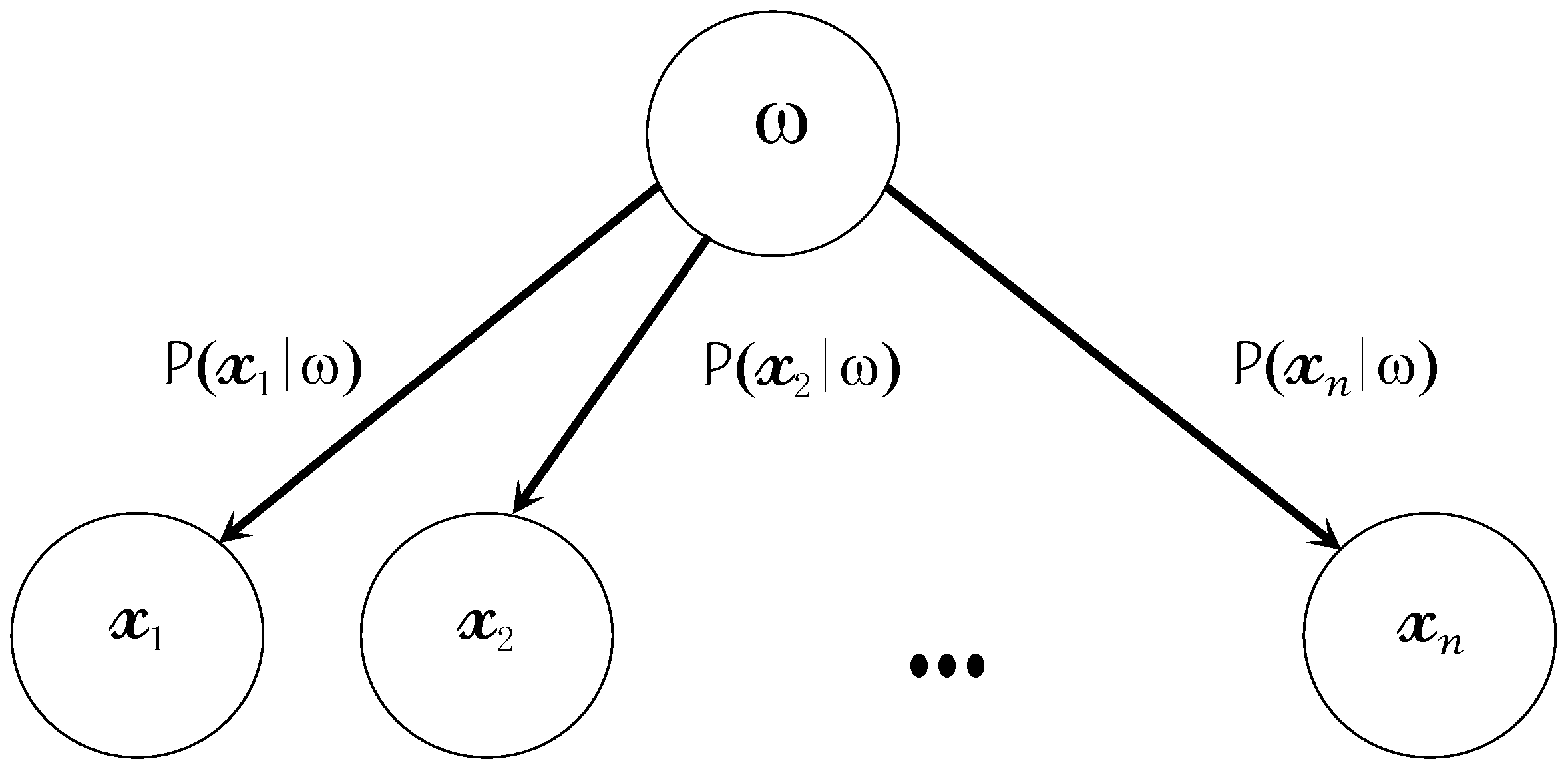

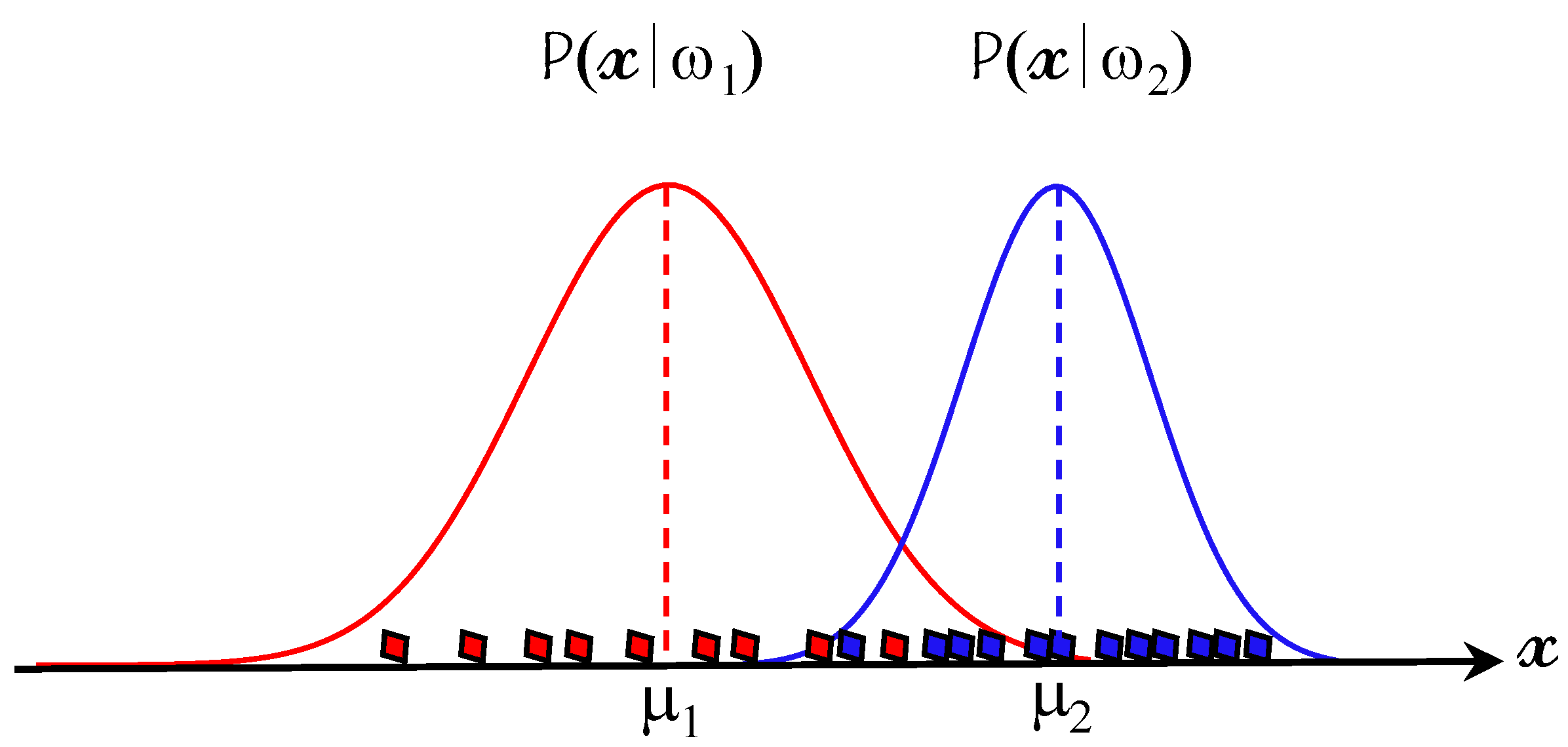
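The improved HOG features named in the title are not described in this text. The following is therefore only a minimal sketch of the standard HOG descriptor of Dalal and Triggs, in pure Python. It computes [-1, 0, 1] gradients, bins unsigned orientations (0 to 180 degrees) into 9 bins per 8 x 8 cell with linear interpolation, and L2-normalizes overlapping 2 x 2-cell blocks. The input is assumed to be a grayscale patch, for example an eye region. The cell size, bin count, block size and all function names are assumptions chosen as common defaults, not the authors' settings.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def gradients(img: Grid) -> Tuple[Grid, Grid]:
    """Gradient magnitude and unsigned orientation (degrees, folded to 0..180).

    Uses the centred [-1, 0, 1] kernel; border pixels are left at zero.
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, ang


def cell_histograms(mag: Grid, ang: Grid, cell: int = 8, bins: int = 9):
    """Magnitude-weighted orientation histogram per cell, splitting each vote
    linearly between the two nearest bin centres (wrapping at 180 degrees)."""
    h, w = len(mag), len(mag[0])
    width = 180.0 / bins
    cy, cx = h // cell, w // cell
    hist = [[[0.0] * bins for _ in range(cx)] for _ in range(cy)]
    for y in range(cy * cell):
        for x in range(cx * cell):
            pos = ang[y][x] / width - 0.5  # position relative to bin centres
            lo = math.floor(pos)
            frac = pos - lo
            h_cell = hist[y // cell][x // cell]
            h_cell[lo % bins] += mag[y][x] * (1.0 - frac)
            h_cell[(lo + 1) % bins] += mag[y][x] * frac
    return hist


def block_normalize(hist, block: int = 2, eps: float = 1e-6) -> List[float]:
    """Concatenate overlapping block x block groups of cells (stride one cell),
    L2-normalize each group, and flatten everything into one descriptor."""
    cy, cx = len(hist), len(hist[0])
    desc: List[float] = []
    for by in range(cy - block + 1):
        for bx in range(cx - block + 1):
            v = [val for dy in range(block) for dx in range(block)
                 for val in hist[by + dy][bx + dx]]
            norm = math.sqrt(sum(t * t for t in v) + eps * eps)
            desc.extend(t / norm for t in v)
    return desc


def hog(img: Grid, cell: int = 8, bins: int = 9, block: int = 2) -> List[float]:
    mag, ang = gradients(img)
    return block_normalize(cell_histograms(mag, ang, cell, bins), block)


# A 32 x 32 patch with a vertical step edge.
edge = [[0.0] * 16 + [255.0] * 16 for _ in range(32)]
print(len(hog(edge)))  # 324 = 3 x 3 blocks x 4 cells x 9 bins
```

Block normalization is what gives HOG some robustness to illumination and contrast changes, which matters for in-vehicle footage taken by day and at night.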

2.4. Bayesian Feature Classification

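In Bayes' theorem, $P(A \mid B) = \dfrac{P(B \mid A)\,P(A)}{P(B)}$, the four terms are:

- $P(A)$: marginal probability of $A$ (i.e., prior probability);

- $P(B)$: marginal probability of $B$ (i.e., evidence);

- $P(B \mid A)$: conditional probability of $B$ given $A$ (i.e., likelihood);

- $P(A \mid B)$: conditional probability of $A$ given $B$ (i.e., posterior probability).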
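Bayes' theorem, $P(A \mid B) = P(B \mid A)\,P(A) / P(B)$, can be checked numerically for a binary hypothesis. In that case the evidence expands by the law of total probability as $P(B) = P(B \mid A)P(A) + P(B \mid \neg A)P(\neg A)$. The function name and the probabilities in this sketch are hypothetical and serve only to illustrate the arithmetic.

```python
def posterior(prior: float, likelihood: float, likelihood_other: float) -> float:
    """Posterior P(A | B) for a binary hypothesis A.

    prior            -- P(A)
    likelihood       -- P(B | A)
    likelihood_other -- P(B | not A)
    The evidence P(B) is obtained by the law of total probability.
    """
    evidence = likelihood * prior + likelihood_other * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: A = "driver is drowsy", B = "eyes observed closed".
p = posterior(prior=0.1, likelihood=0.8, likelihood_other=0.05)
print(f"P(drowsy | eyes closed) = {p:.2f}")  # P(drowsy | eyes closed) = 0.64
```

Here $P(B) = 0.8 \cdot 0.1 + 0.05 \cdot 0.9 = 0.125$, so the posterior is $0.08 / 0.125 = 0.64$. Even a strong likelihood is tempered by a low prior.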

Algorithm 1: Naïve Bayes (NB) classification algorithm.
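The steps of Algorithm 1 are not recoverable from this text. As an illustration only, a naïve Bayes classifier assumes the features are conditionally independent given the class. It then predicts $\hat{c} = \arg\max_c \big[\log P(c) + \sum_j \log p(x_j \mid c)\big]$, dropping the evidence term because it is identical for every class. This sketch assumes Gaussian class-conditional densities for continuous features such as HOG bins. That choice, the class `GaussianNaiveBayes`, its `var_smoothing` parameter and the toy "drowsy"/"alert" data are all hypothetical, not the authors' implementation.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


class GaussianNaiveBayes:
    """Naive Bayes with one Gaussian per (class, feature) pair (hypothetical sketch)."""

    def __init__(self, var_smoothing: float = 1e-9) -> None:
        # Small constant added to every variance so constant features do not divide by zero.
        self.var_smoothing = var_smoothing
        self.params: Dict[str, Tuple[float, List[float], List[float]]] = {}

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "GaussianNaiveBayes":
        self.params = {}
        groups: Dict[str, List[Sequence[float]]] = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        for label, rows in groups.items():
            n_c, d = len(rows), len(rows[0])
            means = [sum(r[j] for r in rows) / n_c for j in range(d)]
            variances = [
                sum((r[j] - means[j]) ** 2 for r in rows) / n_c + self.var_smoothing
                for j in range(d)
            ]
            # Prior P(c) estimated as the class frequency in the training set.
            self.params[label] = (math.log(n_c / len(X)), means, variances)
        return self

    def log_scores(self, x: Sequence[float]) -> Dict[str, float]:
        """log P(c) + sum_j log p(x_j | c); the evidence term is omitted
        because it is the same for every class."""
        scores = {}
        for label, (log_prior, means, variances) in self.params.items():
            log_lik = sum(
                -0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
                for xj, m, v in zip(x, means, variances)
            )
            scores[label] = log_prior + log_lik
        return scores

    def predict(self, x: Sequence[float]) -> str:
        scores = self.log_scores(x)
        return max(scores, key=scores.get)


# Toy two-feature data with hypothetical labels.
X = [[0.9, 0.8], [0.8, 0.9], [1.0, 0.7], [0.1, 0.2], [0.2, 0.1], [0.0, 0.3]]
y = ["drowsy", "drowsy", "drowsy", "alert", "alert", "alert"]
clf = GaussianNaiveBayes().fit(X, y)
print(clf.predict([0.85, 0.85]))  # drowsy
print(clf.predict([0.15, 0.15]))  # alert
```

Working in log space avoids numerical underflow when many per-feature likelihoods are multiplied, as happens with descriptors of several hundred HOG bins.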

3. Experimental Results

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Scenario | Drowsiness F1-Score (%) | Non-Drowsiness F1-Score (%) | HOG Accuracy (%) | advHOG Accuracy (%) |
|---|---|---|---|---|
| BareFace | 90.99 | 87.19 | 86.21 | 89.35 |
| Glasses | 85.07 | 72.10 | 78.36 | 80.31 |
| Sunglasses | 81.74 | 67.14 | 75.69 | 76.30 |
| Night-BareFace | 93.49 | 85.37 | 87.64 | 90.64 |
| Night-Glasses | 87.93 | 93.68 | 88.24 | 91.48 |
| Average | 87.84 | 81.09 | 83.19 | 85.62 |
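For reference, the two metrics reported in the table can be written directly in terms of confusion-matrix counts. F1 is the harmonic mean of precision and recall, which equals $2TP / (2TP + FP + FN)$. Accuracy is the fraction of all samples classified correctly. This is a minimal sketch; the function names are hypothetical. The usage line reproduces the Average entry of the drowsiness F1 column as an unweighted mean of the five scenarios. This is only a consistency check for that one column and is not a statement of how the authors averaged every column.

```python
from statistics import mean


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), rewritten in terms of confusion counts."""
    return 2 * tp / (2 * tp + fp + fn)


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all samples classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


# Drowsiness F1-scores (%) for the five scenarios, in table order.
drowsiness_f1 = [90.99, 85.07, 81.74, 93.49, 87.93]
print(round(mean(drowsiness_f1), 2))  # 87.84
```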

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bakheet, S.; Al-Hamadi, A. A Framework for Instantaneous Driver Drowsiness Detection Based on Improved HOG Features and Naïve Bayesian Classification. Brain Sci. 2021, 11, 240. https://doi.org/10.3390/brainsci11020240
