A Novel Indirect Calibration Approach for Robot Positioning Error Compensation Based on Neural Network and Hand-Eye Vision

Abstract

Featured Application

1. Introduction

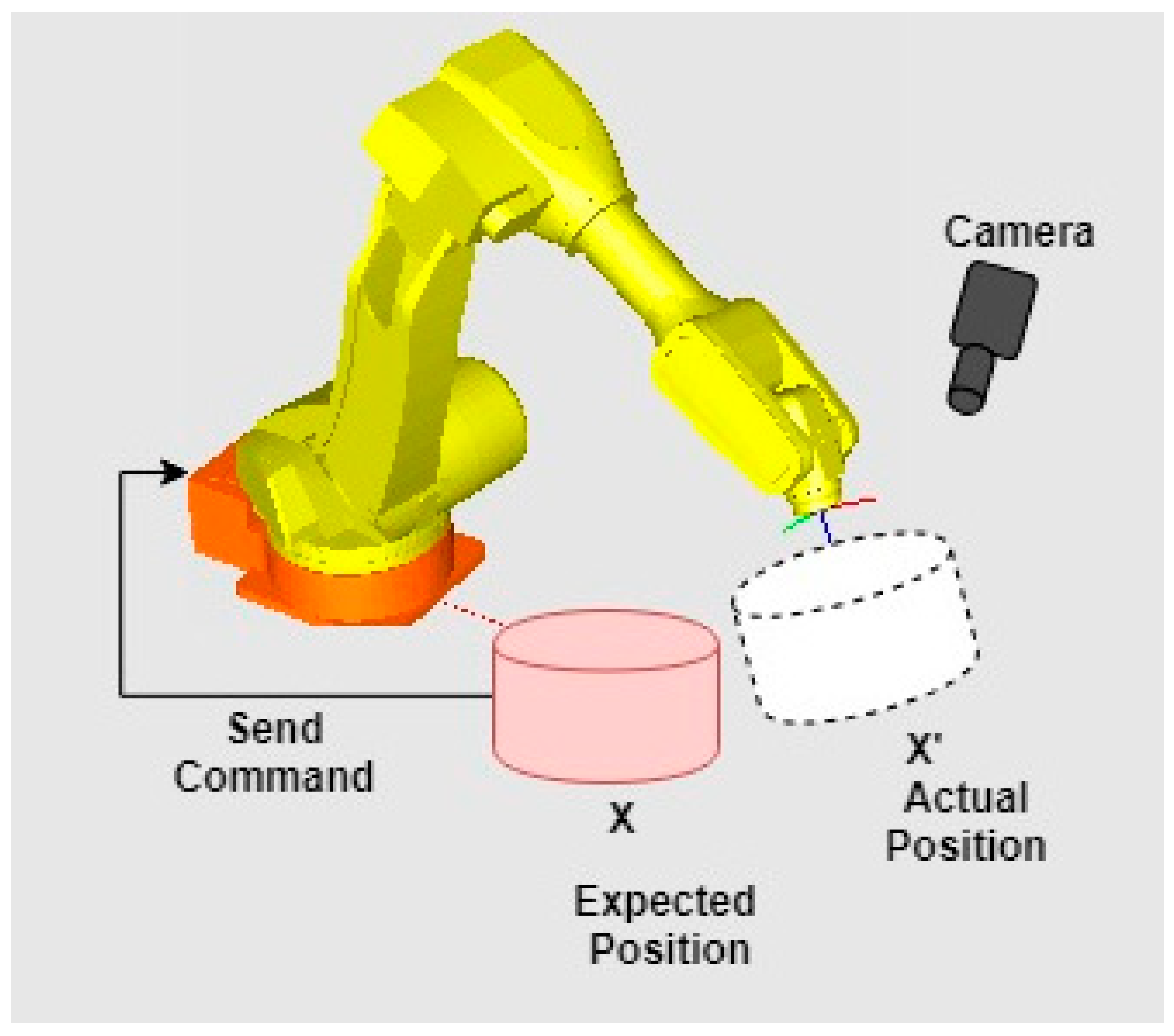

2. Overview of the Problem

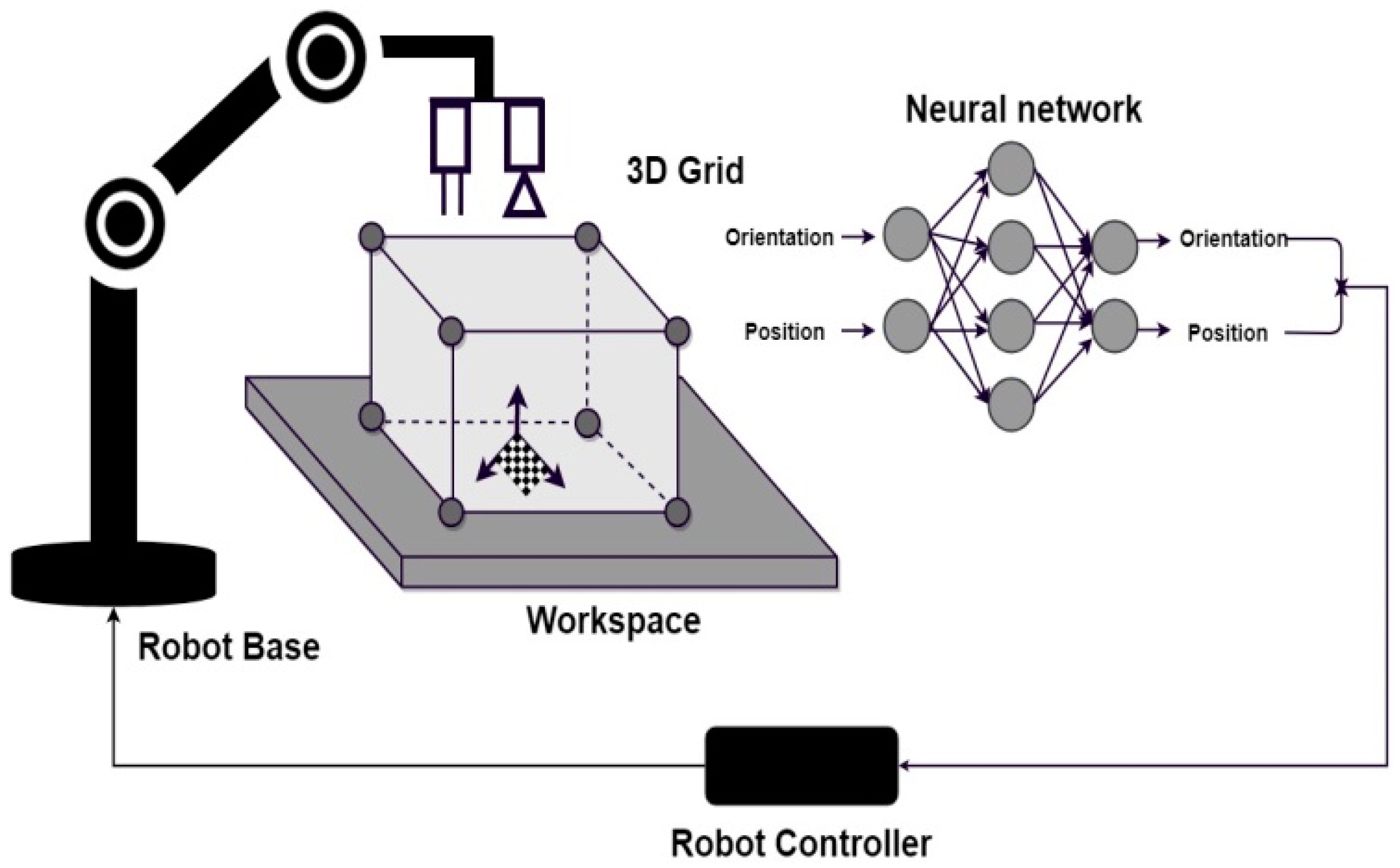

3. Proposed Error Compensation Method

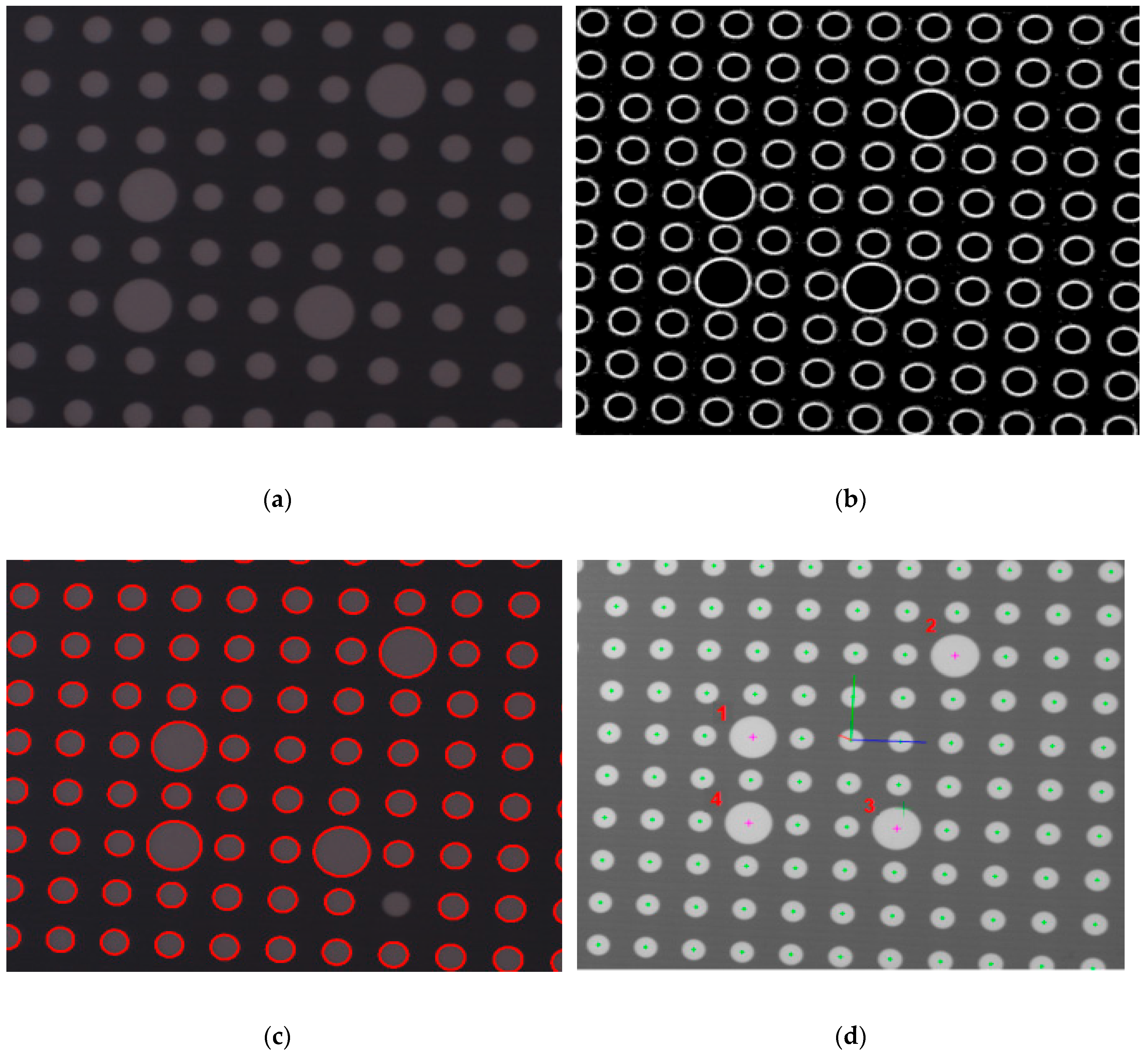

3.1. Pattern Detection and Pose Estimation

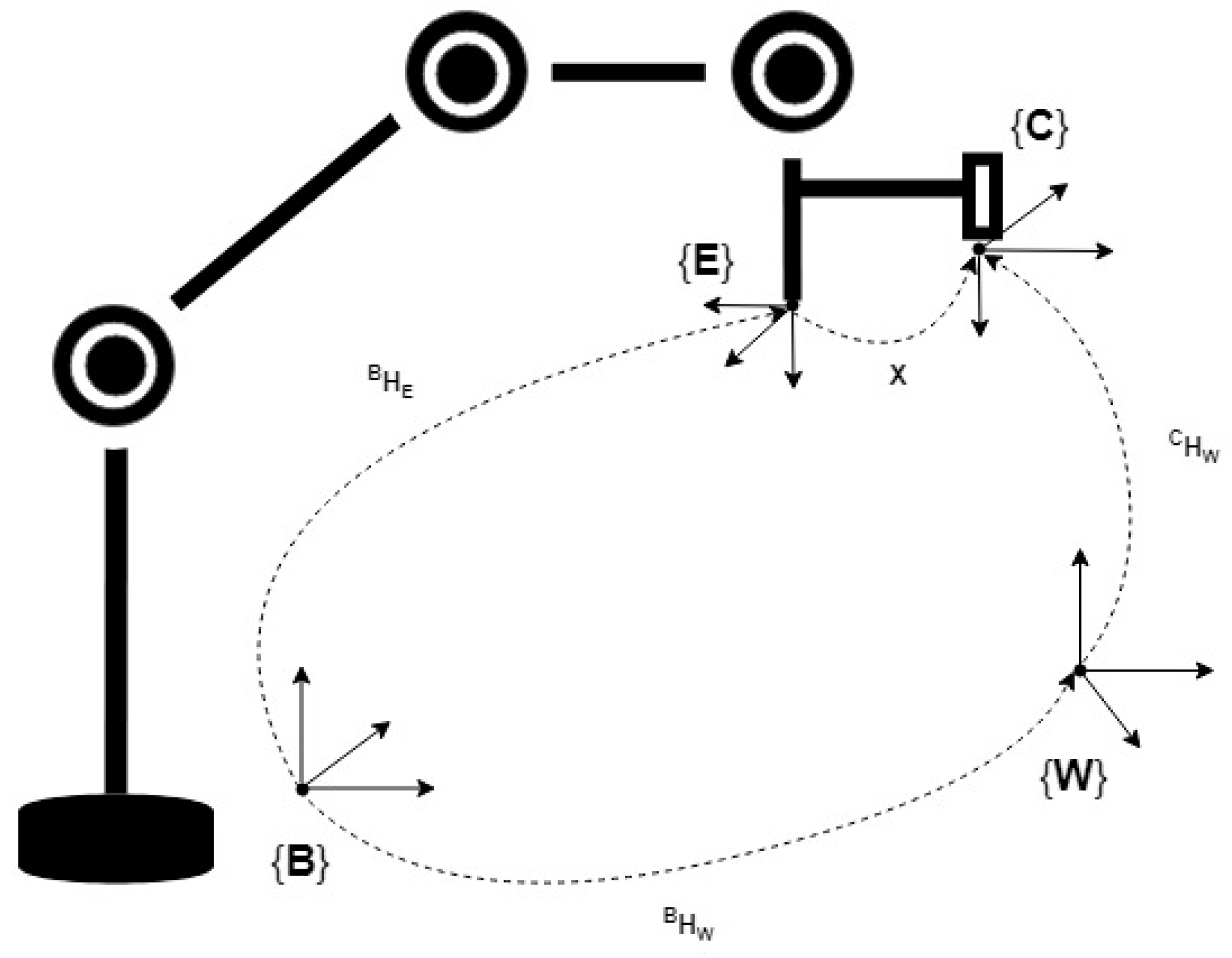

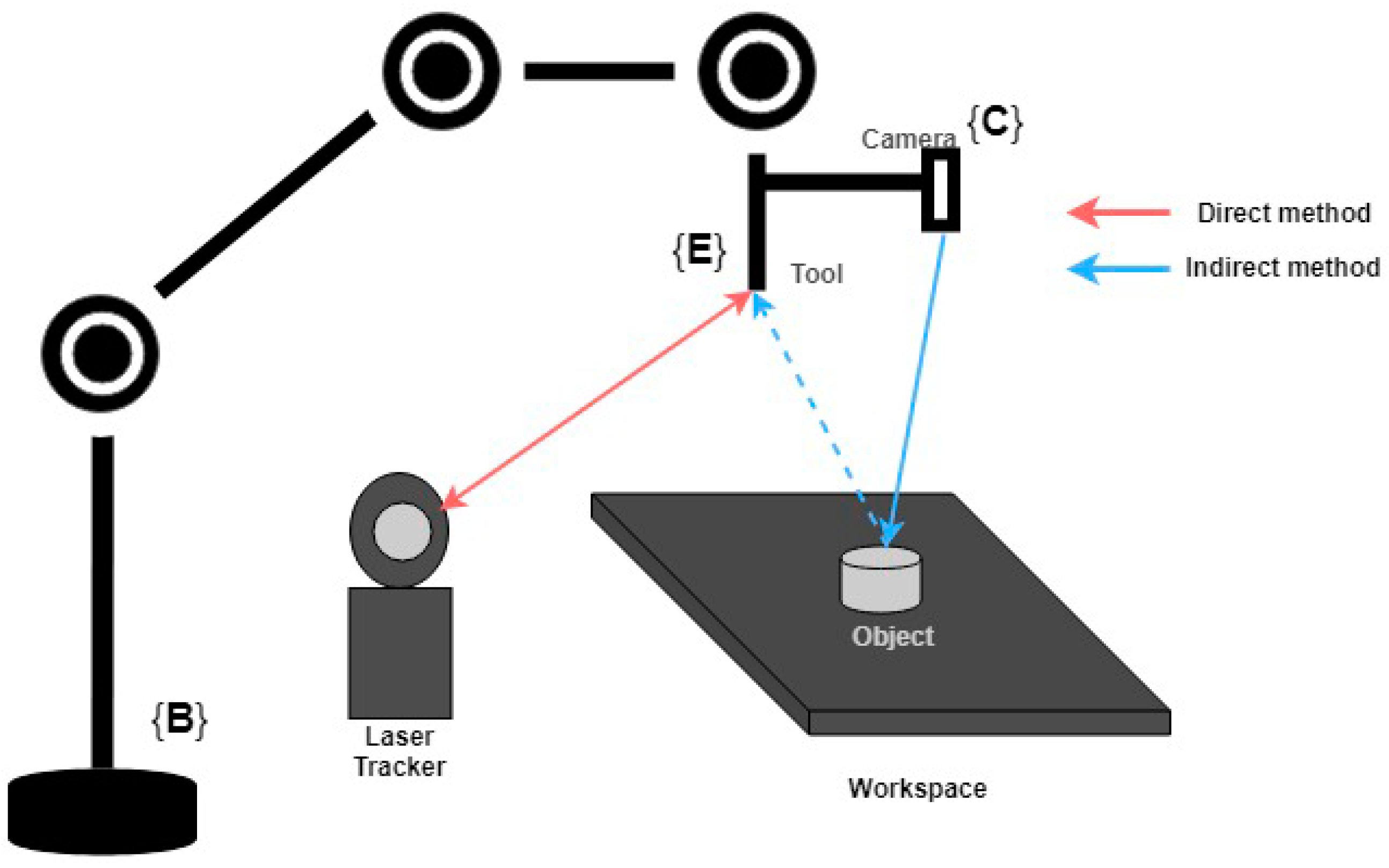

3.2. The Hand-Eye Calibration

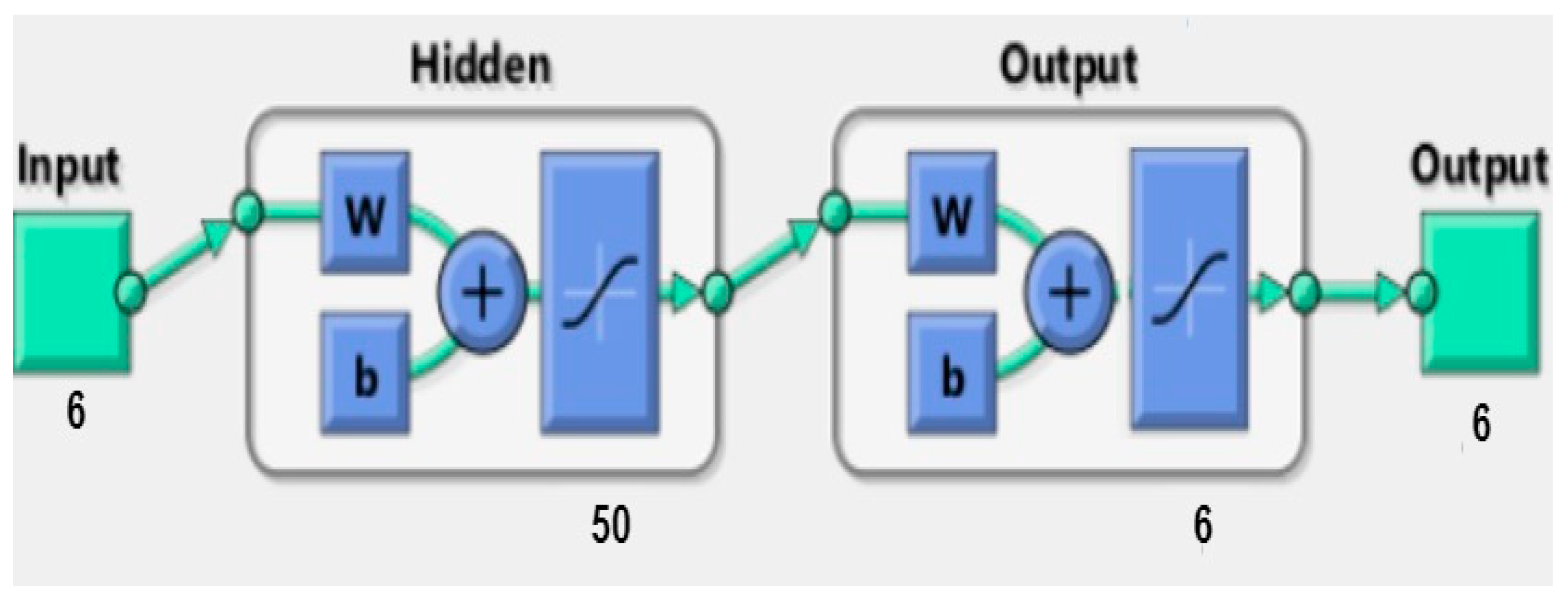

3.3. Feature Training Using Neural Network

4. Simulation for Robot Model

4.1. Simulation Procedure

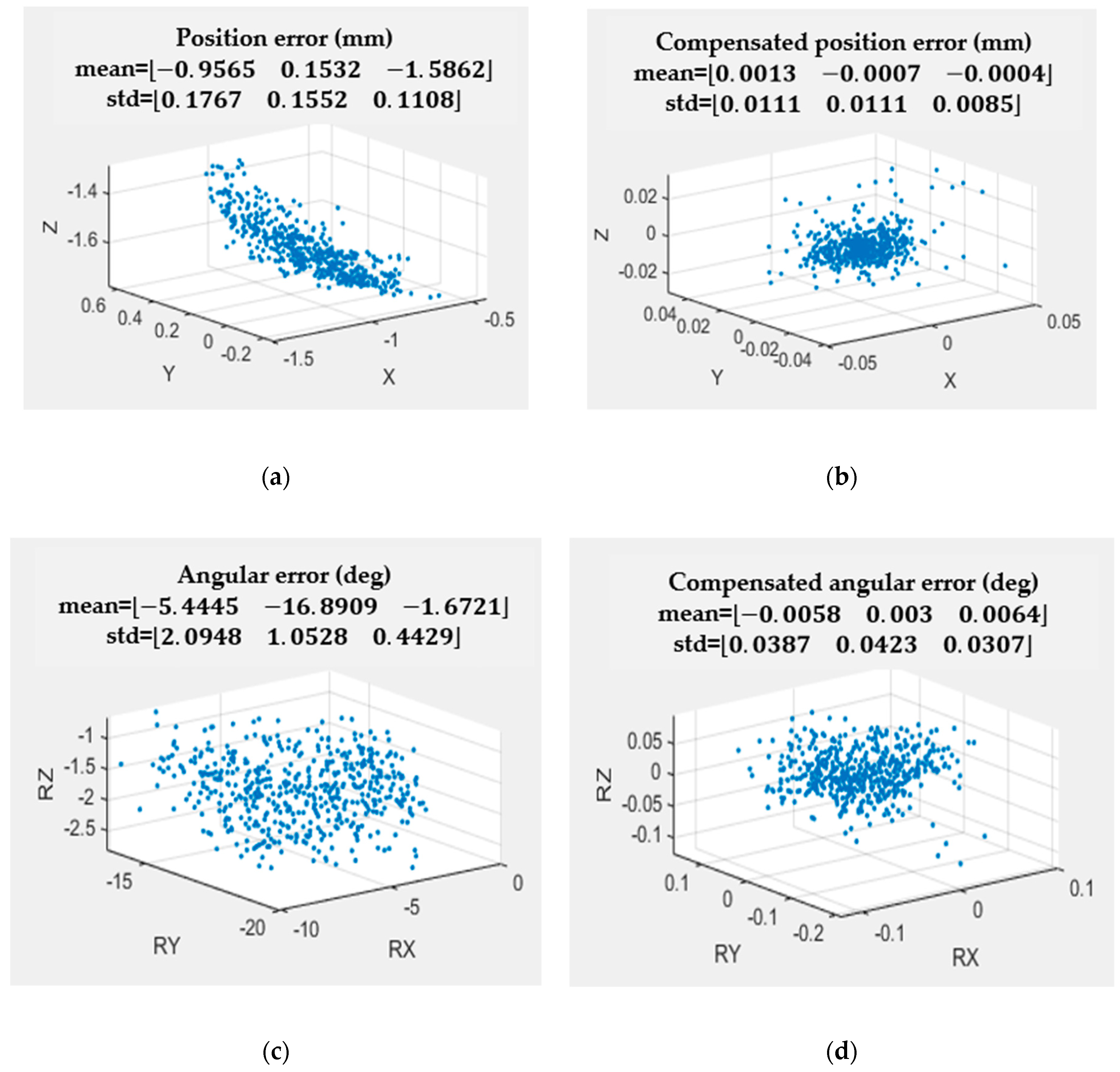

4.2. Simulation Results

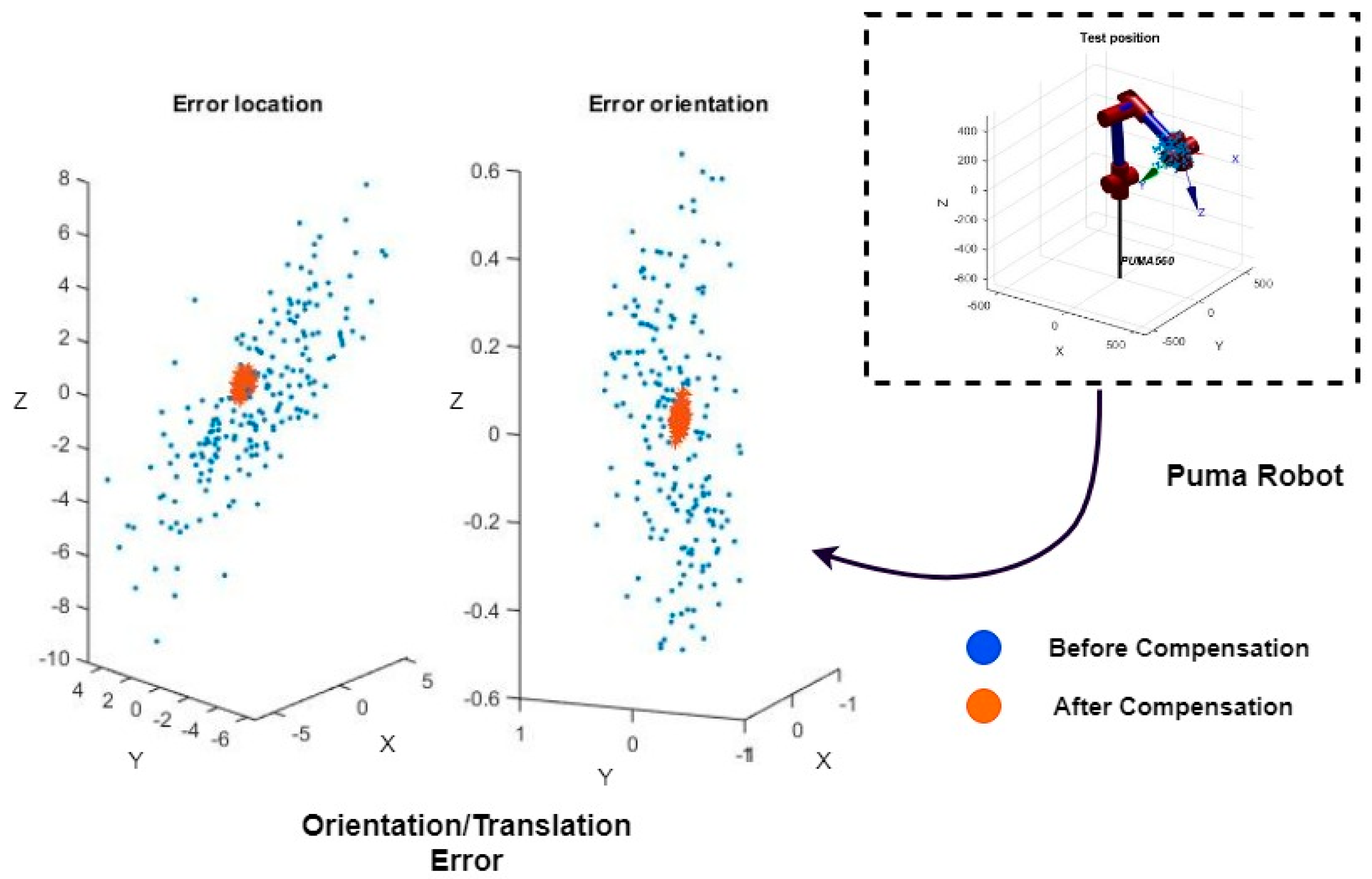

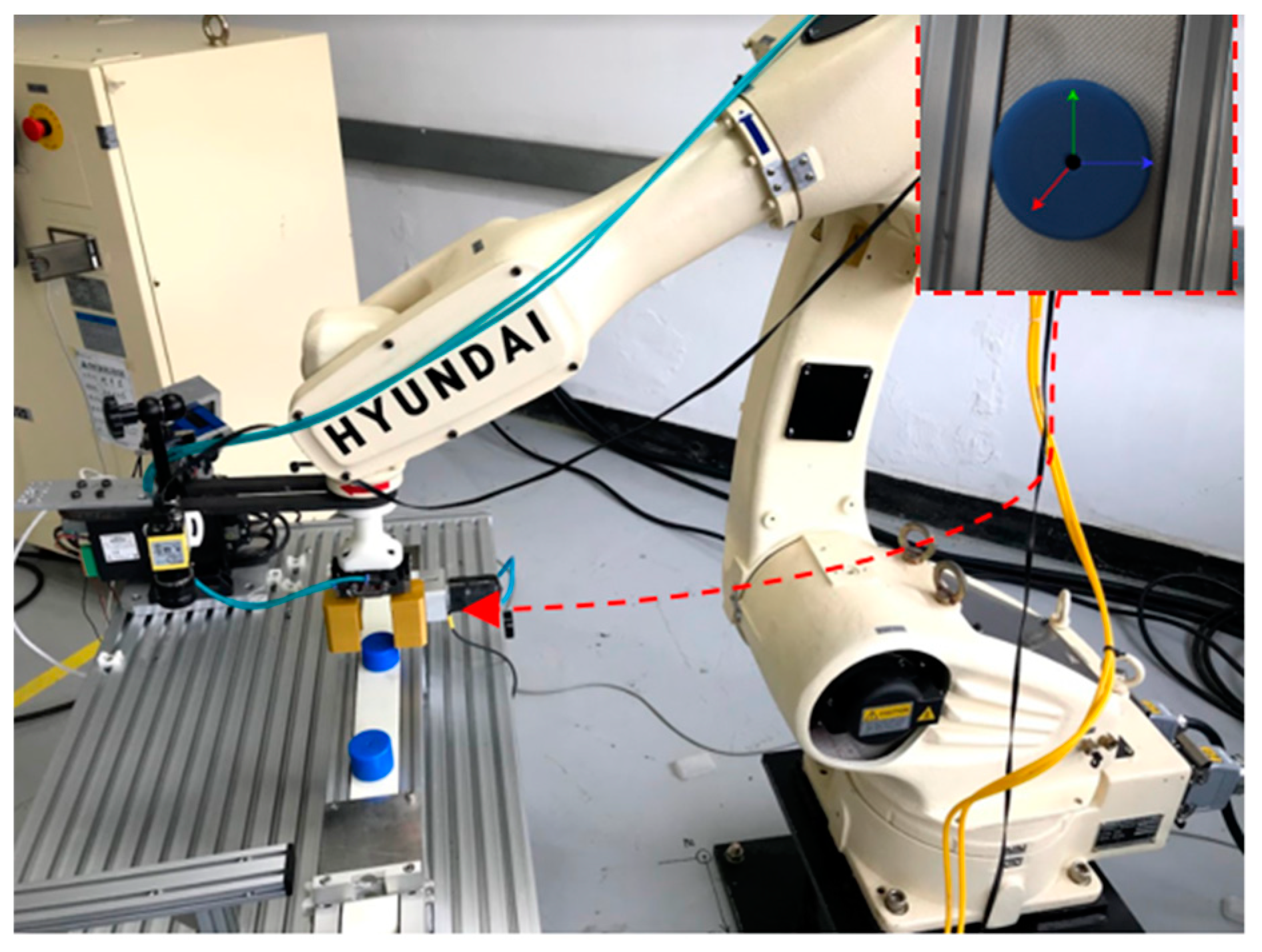

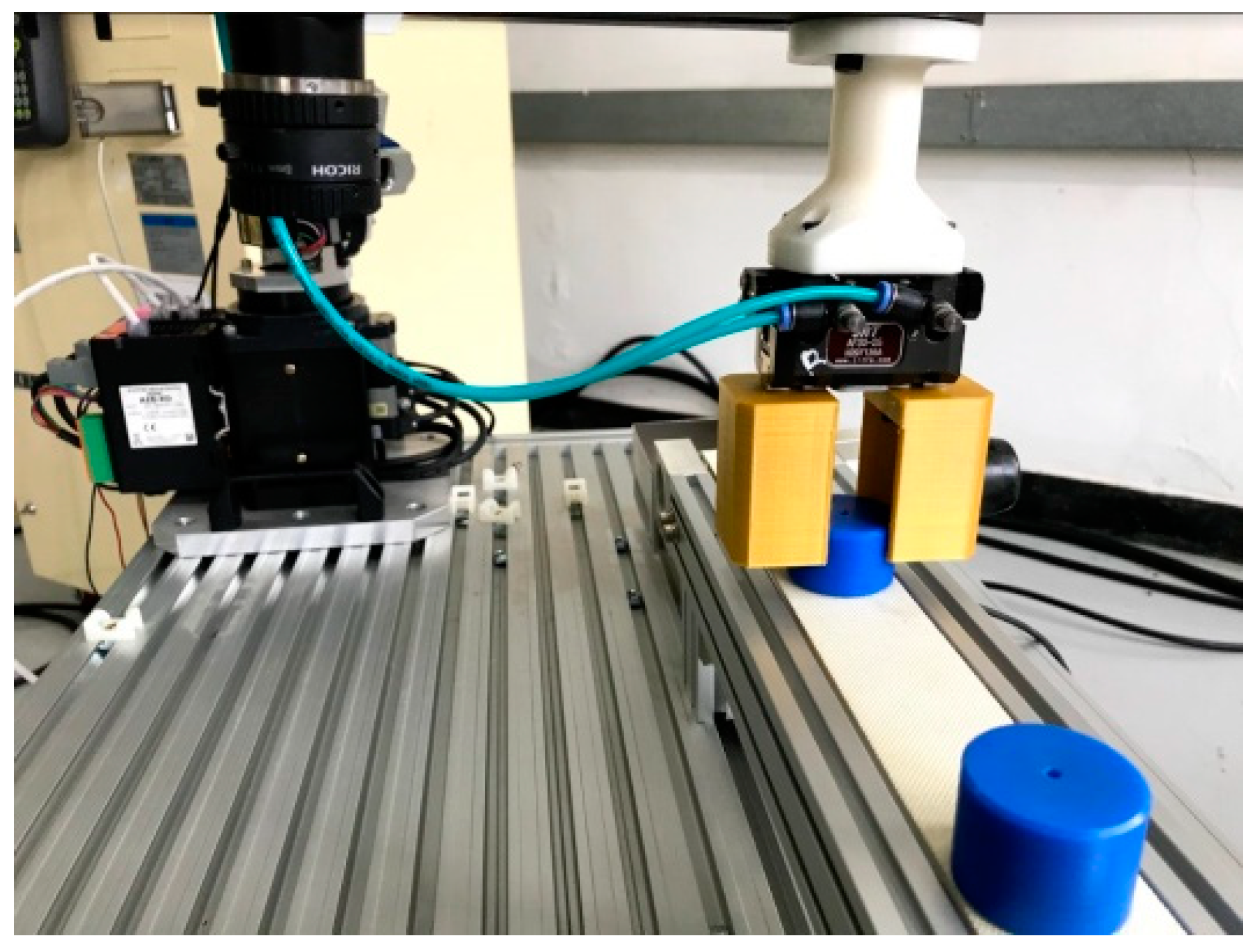

5. Experimental Results

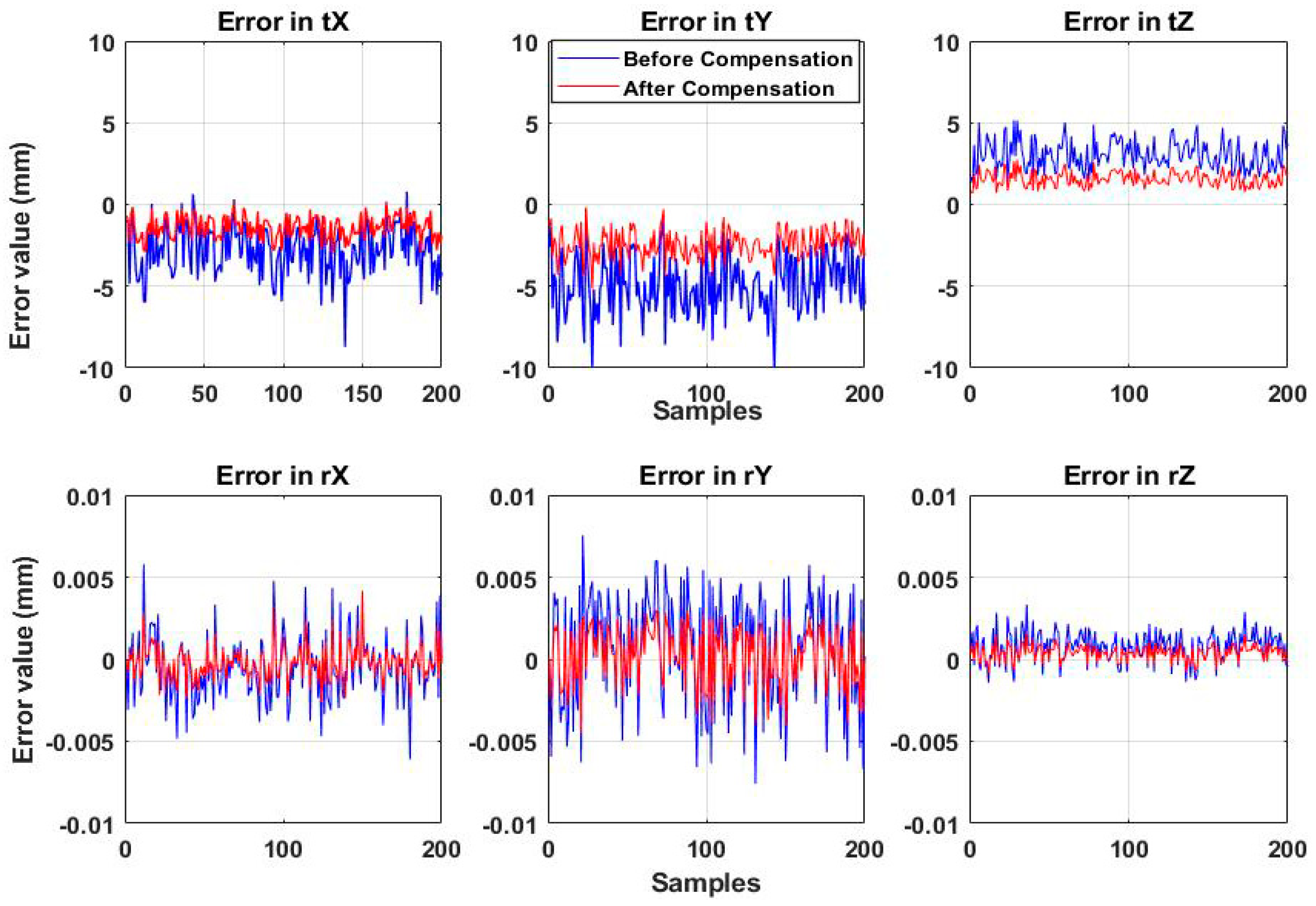

5.1. Experiments on Position/Orientation Error

5.2. The Qualitative Experiments Results

6. Conclusion

Author Contributions

Acknowledgments

Conflicts of Interest

| Link No. | | | | |
|---|---|---|---|---|
| 1 | 0 | 0 | 1.5708 | |
| 2 | 0 | 432 | 0 | |
| 3 | 150 | 20 | −1.5708 | |
| 4 | 432 | 0 | 1.5808 | |
| 5 | 0 | 0 | −1.5708 | |
| 6 | 0 | 0 | 0 | |

| Link No. | | | | |
|---|---|---|---|---|
| 1 | 2.42463 | 3.433 | 1.65256 | |
| 2 | 5.92042 | 433.966 | 0.031139 | |
| 3 | 150.023 | 20.0033 | −1.441 | |
| 4 | 436.297 | 0.317539 | 1.5975 | |
| 5 | 5.57796 | 1.30921 | −1.4774 | |
| 6 | 4.17643 | 6.27515 | 0.1111 | |

| Measurement | Mean Error | | | | | |
|---|---|---|---|---|---|---|
| | Translation/mm | | | Rotation/rad | | |
| Before | 0.9883 | −1.3173 | 2.3743 | 0.0008 | −0.0062 | −0.0032 |
| After | −0.0295 | −0.0079 | −0.0496 | −0.0000 | 0.0002 | 0.0000 |
| Reduced % | 97.01% | 99.4% | 97.9% | 100% | 96.77% | 100% |

| Measurement | Standard Deviation Error | | | | | |
|---|---|---|---|---|---|---|
| | Translation/mm | | | Rotation/rad | | |
| Before | 12.4182 | 10.4763 | 8.7984 | 0.0202 | 0.0215 | 0.0171 |
| After | 0.2632 | 0.4256 | 0.3881 | 0.0006 | 0.0006 | 0.0006 |
| Reduced % | 97.88% | 95.93% | 95.58% | 97.02% | 97.21% | 96.49% |

| Measurement | Mean Error | | | | | |
|---|---|---|---|---|---|---|
| | Translation/mm | | | Rotation/rad | | |
| Before | −2.9269 | −4.9840 | 2.9249 | −0.0004 | 0.0007 | 0.0008 |
| After | −1.3897 | −2.4289 | 1.5540 | −0.0002 | 0.0004 | 0.00045 |
| Reduced % | 52.52% | 51.27% | 46.87% | 50% | 42.86% | 43.75% |

| Measurement | Standard Deviation Error | | | | | |
|---|---|---|---|---|---|---|
| | Translation/mm | | | Rotation/rad | | |
| Before | 2.2461 | 2.3726 | 1.7413 | 0.0019 | 0.0036 | 0.0010 |
| After | 0.6998 | 0.8826 | 0.4484 | 0.0010 | 0.0018 | 0.00055 |
| Reduced % | 68.84% | 62.80% | 74.25% | 47.37% | 50% | 45% |
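
The "Reduced %" rows in the tables above appear consistent with a relative reduction in error magnitude, (1 − |after| / |before|) × 100. The formula is not stated in the text here, so the following is only a sketch under that assumption. The function name `reduction_percent` is illustrative and not taken from the paper. Small last-digit differences from the published figures are likely due to rounding or truncation.

```python
def reduction_percent(before: float, after: float) -> float:
    """Relative reduction in error magnitude, as a percentage.

    Assumed formula (inferred from the table values, not stated in the source):
    (1 - |after| / |before|) * 100
    """
    if before == 0:
        raise ValueError("'before' must be non-zero")
    return (1.0 - abs(after) / abs(before)) * 100.0


# (before, after) translation entries from the first mean-error table
first_mean = [(0.9883, -0.0295), (-1.3173, -0.0079), (2.3743, -0.0496)]
# (before, after) translation entries from the second mean-error table
second_mean = [(-2.9269, -1.3897), (-4.9840, -2.4289), (2.9249, 1.5540)]

for before, after in first_mean + second_mean:
    pct = reduction_percent(before, after)
    print(f"{before:>8.4f} -> {after:>8.4f}: {pct:.2f}% reduction")
```

Using magnitudes rather than signed values keeps the percentage meaningful when the mean error changes sign after compensation, as it does for the first entry.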

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cao, C.-T.; Do, V.-P.; Lee, B.-R. A Novel Indirect Calibration Approach for Robot Positioning Error Compensation Based on Neural Network and Hand-Eye Vision. Appl. Sci. 2019, 9, 1940. https://doi.org/10.3390/app9091940
