Grapheme-to-Phoneme Conversion with Convolutional Neural Networks

Abstract

1. Introduction

2. Previous Works

3. Convolutional Neural Networks for Grapheme to Phoneme Conversion

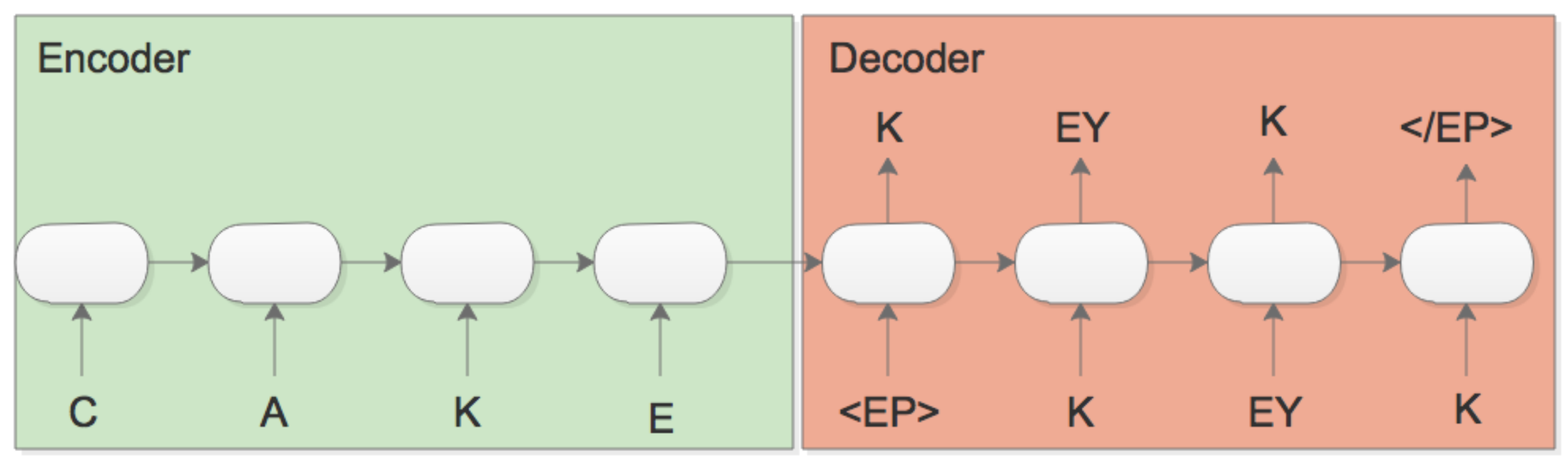

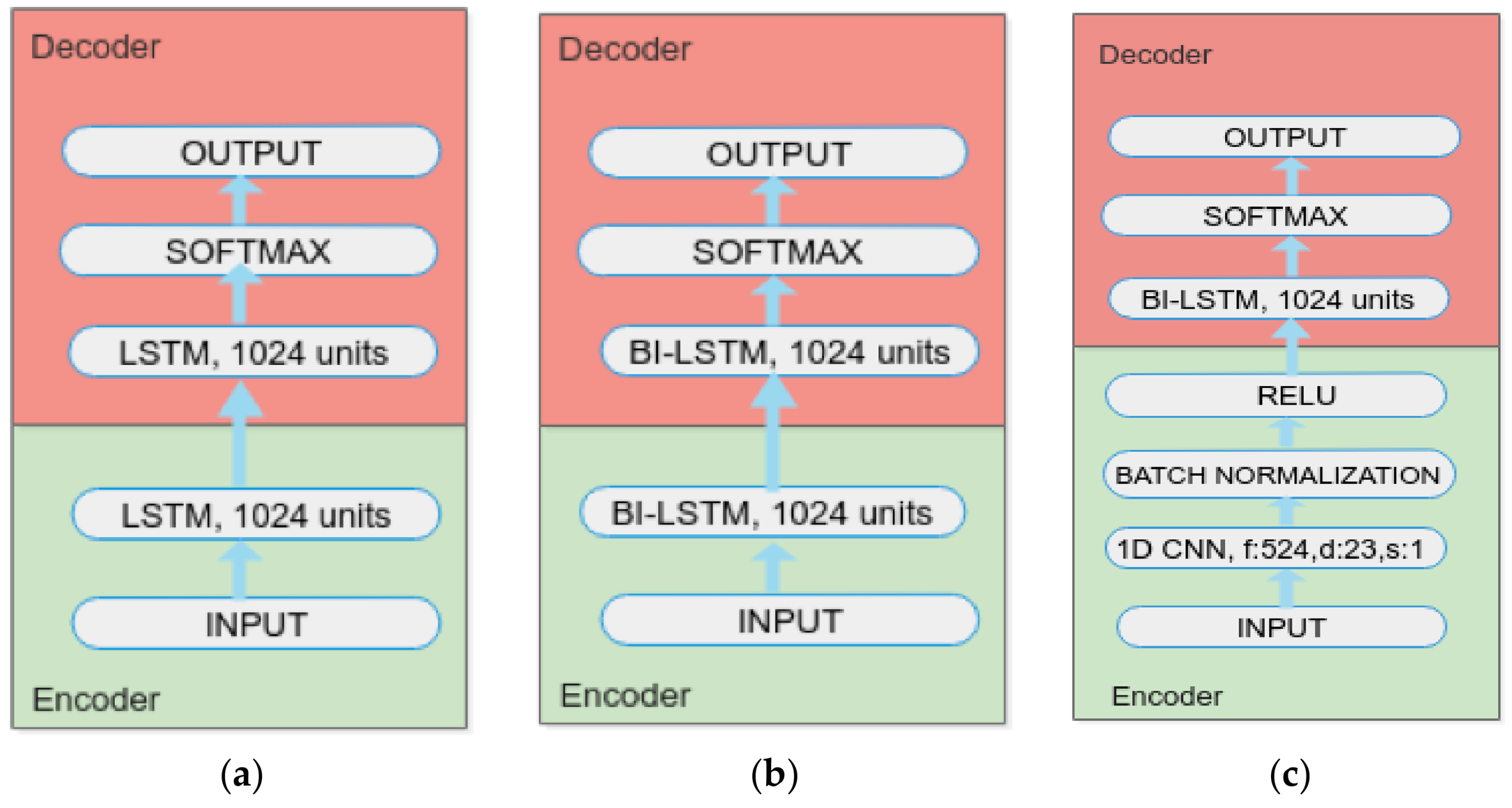

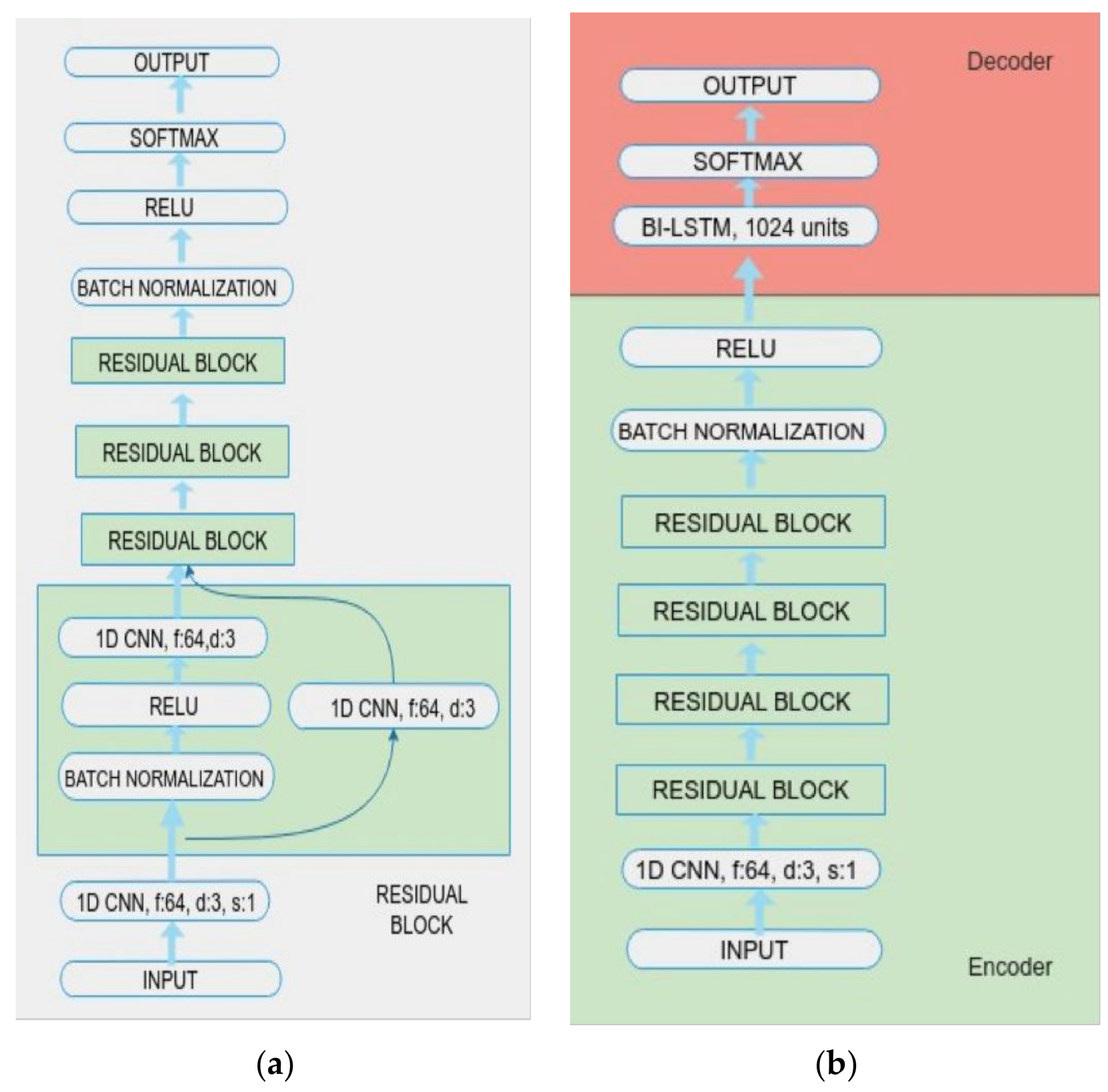

3.1. Models

- (1) If feature maps have the same size, the blocks share the same hyperparameters.

- (2) Each time the feature map is halved, the number of filters is doubled.
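The two rules above can be illustrated with a small Python helper. This is only a sketch under stated assumptions: the function name `filter_schedule`, the input length of 32, the 64 base filters and the three stages are hypothetical values chosen for illustration, not the hyperparameters of the models in this paper. The same two rules appear, in nearly identical form, in the ResNet design of He et al. [42].

```python
def filter_schedule(seq_len, base_filters, num_stages):
    """Hypothetical helper: (feature_map_length, filters) for each stage.

    Rule (1): every block inside a stage sees the same feature-map size,
    so all blocks of that stage share one filter count.
    Rule (2): whenever the feature map is halved, the filter count doubles.
    """
    stages = []
    length, filters = seq_len, base_filters
    for _ in range(num_stages):
        stages.append((length, filters))
        length //= 2   # assumed: a stride-2 conv or pooling halves the length
        filters *= 2   # rule (2): double the number of filters
    return stages


print(filter_schedule(32, 64, 3))  # [(32, 64), (16, 128), (8, 256)]
```

In this one-dimensional setting the product of length and filter count stays constant across stages (32 × 64 = 16 × 128 = 8 × 256), so the activation size per stage does not grow as the network gets deeper.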

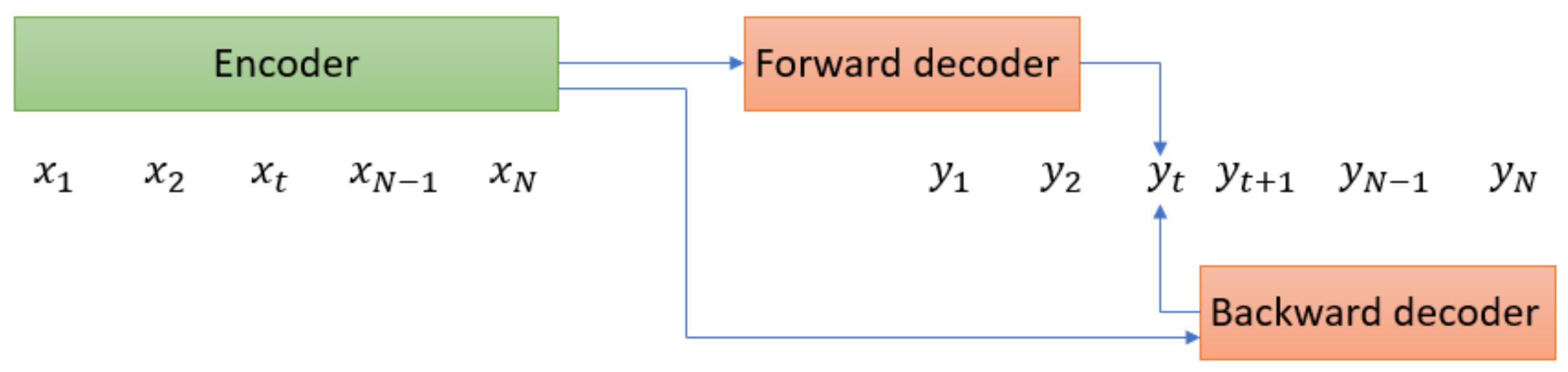

3.2. Details of the Bidirectional Decoder

4. Experiments

4.1. Datasets

4.2. Training

4.3. Evaluation and Results

5. Discussion

- Word: Acknowledgement

- Enumeration: 0 1 2 3 4 5 6 7 8 9 10 11 12

- Reference: [EP AE K N AA L IH JH M AH N T /EP]

- Prediction: [EP IH K N AA L IH JH IH JH AH N T /EP]

- Types of errors: S, S, I (two substitutions and one insertion)
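The S and I labels above come from aligning the prediction to the reference with minimum edit (Levenshtein [51]) distance. The following Python sketch, assuming unit costs for substitution, deletion and insertion (the function name `error_counts` is ours, not from the paper), backtraces one minimum-cost alignment and counts each error type for the *Acknowledgement* example.

```python
def error_counts(ref, hyp):
    """Count (substitutions, deletions, insertions) on one minimum-cost
    Levenshtein alignment of a predicted phoneme sequence to its reference."""
    n, m = len(ref), len(hyp)
    # d[i][j] = edit distance between ref[:i] and hyp[:j]
    d = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        d[i][0] = i
    for j in range(m + 1):
        d[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diag = d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            d[i][j] = min(diag, d[i - 1][j] + 1, d[i][j - 1] + 1)

    # Walk back from the bottom-right cell and classify every step.
    subs = dels = ins = 0
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and d[i][j] == d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            subs += ref[i - 1] != hyp[j - 1]  # diagonal: match or substitution
            i, j = i - 1, j - 1
        elif i > 0 and d[i][j] == d[i - 1][j] + 1:
            dels += 1                         # reference phoneme was dropped
            i -= 1
        else:
            ins += 1                          # extra phoneme in the prediction
            j -= 1
    return subs, dels, ins


ref = "EP AE K N AA L IH JH M AH N T /EP".split()
hyp = "EP IH K N AA L IH JH IH JH AH N T /EP".split()
print(error_counts(ref, hyp))  # (2, 0, 1)
```

In general several alignments can share the minimum cost, and the tie-breaking order in the backtrace decides which labelling is reported. For this word every cost-3 alignment consists of two substitutions and one insertion, so the result matches the labels above.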

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| G2P | Grapheme-to-phoneme |
| ASR | Automatic speech recognition |
| CNN | Convolutional neural network |
| PER | Phoneme error rate |
| WER | Word error rate |
| Bi-LSTM | Bidirectional long short-term memory |

References

- [1] Elovitz, H.; Johnson, R.; McHugh, A.; Shore, J. Letter-to-Sound Rules for Automatic Translation of English Text to Phonetics. IEEE Trans. Acoust. Speech Signal Process. 1976, 24, 446–459.

- [2] Black, A.W.; Lenzo, K.; Pagel, V. Issues in Building General Letter to Sound Rules. In Proceedings of the 3rd ESCA Workshop on Speech Synthesis, Blue Mountains, Australia, 26–29 November 1998; pp. 77–80.

- [3] Bisani, M.; Ney, H. Joint-Sequence Models for Grapheme-to-Phoneme Conversion. Speech Commun. 2008, 50, 434–451.

- [4] Galescu, L.; Allen, J.F. Pronunciation of Proper Names with a Joint N-Gram Model for Bi-Directional Grapheme-to-Phoneme Conversion. In Proceedings of the 7th International Conference on Spoken Language Processing, Denver, CO, USA, 16–20 September 2002; pp. 109–112.

- [5] Chen, S.F. Conditional and Joint Models for Grapheme-to-Phoneme Conversion. In Proceedings of the 8th European Conference on Speech Communication and Technology, Geneva, Switzerland, 1–4 September 2003; pp. 2033–2036.

- [6] Lehnen, P.; Allauzen, A.; Lavergne, T.; Yvon, F.; Hahn, S.; Ney, H. Structure Learning in Hidden Conditional Random Fields for Grapheme-to-Phoneme Conversion. In Proceedings of the Annual Conference of the International Speech Communication Association, Lyon, France, 25–29 August 2013; pp. 2326–2330.

- [7] Lehnen, P.; Hahn, S.; Guta, V.; Ney, H. Hidden Conditional Random Fields with M-to-N Alignments for Grapheme-to-Phoneme Conversion. In Proceedings of the 13th Annual Conference of the International Speech Communication Association, Portland, OR, USA, 9–13 September 2012; pp. 2554–2557.

- [8] Wu, K.; Allauzen, C.; Hall, K.; Riley, M.; Roark, B. Encoding Linear Models as Weighted Finite-State Transducers. In Proceedings of the Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014; pp. 1258–1262.

- [9] Arik, S.Ö.; Chrzanowski, M.; Coates, A.; Diamos, G.; Gibiansky, A.; Kang, Y.; Li, X.; Miller, J.; Raiman, J.; Sengupta, S.; et al. Deep Voice: Real-Time Neural Text-to-Speech. In Proceedings of the 34th International Conference on Machine Learning, PMLR 70, Sydney, Australia, 6–11 August 2017; pp. 195–204.

- [10] Yao, K.; Peng, B.; Zhang, Y.; Yu, D.; Zweig, G.; Shi, Y. Spoken Language Understanding Using Long Short-Term Memory Neural Networks. In Proceedings of the Spoken Language Technology Workshop (SLT'14), Lake Tahoe, CA, USA, 7–10 December 2014; pp. 189–194.

- [11] Schuster, M.; Paliwal, K.K. Bidirectional Recurrent Neural Networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- [12] Rao, K.; Peng, F.; Sak, H.; Beaufays, F. Grapheme-to-Phoneme Conversion Using Long Short-Term Memory Recurrent Neural Networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, South Brisbane, Queensland, Australia, 19–24 April 2015; pp. 4225–4229.

- [13] Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks. In Proceedings of the 23rd International Conference on Machine Learning (ICML 2006), Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

- [14] Hori, T.; Watanabe, S.; Zhang, Y.; Chan, W. Advances in Joint CTC-Attention based End-to-End Speech Recognition with a Deep CNN Encoder and RNN-LM. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 949–953.

- [15] Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montréal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

- [16] Yao, K.; Zweig, G. Sequence-to-Sequence Neural Net Models for Grapheme-to-Phoneme Conversion. In Proceedings of the Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 3330–3334.

- [17] Mousa, A.E.; Schuller, B. Deep Bidirectional Long Short-Term Memory Recurrent Neural Networks for Grapheme-to-Phoneme Conversion Utilizing Complex Many-to-Many Alignments. In Proceedings of the Annual Conference of the International Speech Communication Association, San Francisco, CA, USA, 8–12 September 2016; pp. 2836–2840.

- [18] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS'12), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- [19] Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- [20] Gehring, J.; Auli, M.; Grangier, D.; Dauphin, Y. A Convolutional Encoder Model for Neural Machine Translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 123–135.

- [21] Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y. Convolutional Sequence to Sequence Learning. arXiv, 2017; arXiv:1705.03122.

- [22] Kalchbrenner, N.; Blunsom, P. Recurrent Continuous Translation Models. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1700–1709.

- [23] Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- [24] Lu, L.; Zhang, X.; Renals, S. On Training the Recurrent Neural Network Encoder-Decoder for Large Vocabulary End-to-End Speech Recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 5060–5064.

- [25] Wang, Y.; Skerry-Ryan, R.; Stanton, D.; Wu, Y.; Weiss, R.J.; Jaitly, N.; Yang, Z.; Xiao, Y.; Chen, Z.; Bengio, S.; et al. Tacotron: Towards End-to-End Speech Synthesis. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 4006–4010.

- [26] Milde, B.; Schmidt, C.; Köhler, J. Multitask Sequence-to-Sequence Models for Grapheme-to-Phoneme Conversion. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 2536–2540.

- [27] Wang, W.; Xu, S.; Xu, B. First Step Towards End-to-End Parametric TTS Synthesis: Generating Spectral Parameters with Neural Attention. In Proceedings of the INTERSPEECH, San Francisco, CA, USA, 8–12 September 2016; pp. 2243–2247.

- [28] Nguyen, D.Q.; Nguyen, D.Q.; Chu, C.X.; Thater, S.; Pinkal, M. Sequence to Sequence Learning for Event Prediction. In Proceedings of the 8th International Joint Conference on Natural Language Processing, Taipei, Taiwan, 27 November–1 December 2017; pp. 37–42.

- [29] Zhang, X.; Su, J.; Qin, Y.; Liu, Y.; Ji, R.; Wang, H. Asynchronous Bidirectional Decoding for Neural Machine Translation. In Proceedings of the Association for the Advancement of Artificial Intelligence (AAAI-2018), New Orleans, LA, USA, 2–7 February 2018; pp. 5698–5705.

- [30] Prabhavalkar, R.; Rao, K.; Sainath, T.N.; Li, B.; Johnson, L.; Jaitly, N. A Comparison of Sequence-to-Sequence Models for Speech Recognition. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 939–943.

- [31] Bai, S.; Kolter, J.Z.; Koltun, V. Convolutional Sequence Modeling Revisited. In Proceedings of the 6th International Conference on Learning Representations (ICLR 2018) Workshop, Vancouver Convention Center, Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–20.

- [32] Toshniwal, S.; Livescu, K. Jointly Learning to Align and Convert Graphemes to Phonemes with Neural Attention Models. In Proceedings of the IEEE Spoken Language Technology Workshop (SLT), San Diego, CA, USA, 13–16 December 2016.

- [33] Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- [34] Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- [35] Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- [36] Bluche, T.; Ney, H.; Kermorvant, C. Tandem HMM with Convolutional Neural Network for Handwritten Word Recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2013), Vancouver, BC, Canada, 26–31 May 2013; pp. 2390–2394.

- [37] LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2323.

- [38] Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- [39] Conneau, A.; Schwenk, H.; Barrault, L.; Lecun, Y. Very Deep Convolutional Networks for Text Classification. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics (EACL), Valencia, Spain, 3–7 April 2017.

- [40] Zhou, P.; Qi, Z.; Zheng, S.; Xu, J.; Bao, H.; Xu, B. Text Classification Improved by Integrating Bidirectional LSTM with Two-Dimensional Max Pooling. In Proceedings of the 26th International Conference on Computational Linguistics (COLING): Technical Papers, Osaka, Japan, 11–16 December 2016; pp. 3485–3495.

- [41] Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, PMLR 37, Lille, France, 6–11 July 2015; pp. 448–456.

- [42] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- [43] Greff, K.; Srivastava, R.K.; Schmidhuber, J. Highway and Residual Networks Learn Unrolled Iterative Estimation. arXiv, 2017; arXiv:1612.07771.

- [44] Luong, M.-T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP), Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

- [45] Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. CoRR 2015, abs/1409.0473.

- [46] Sundermeyer, M.; Alkhouli, T.; Wuebker, J.; Ney, H. Translation Modeling with Bidirectional Recurrent Neural Networks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 14–25.

- [47] Fan, Y.; Qian, Y.; Xie, F.; Soong, F.K. TTS Synthesis with Bidirectional LSTM Based Recurrent Neural Networks. In Proceedings of the Annual Conference of the International Speech Communication Association, Singapore, 14–18 September 2014; pp. 1964–1968.

- [48] Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- [49] Graves, A.; Jaitly, N.; Mohamed, A. Hybrid Speech Recognition with Deep Bidirectional LSTM. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–12 December 2013; pp. 273–278.

- [50] Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- [51] Levenshtein, V.I. Binary Codes Capable of Correcting Deletions, Insertions, and Reversals. Sov. Phys. Dokl. 1966, 10, 707–710.

- [52] Zhu, R.; Huang, Y. Efficient Privacy-Preserving General Edit Distance and Beyond. Cryptology ePrint Archive, Report 2017/683, 2017.

| Model | Method | PER (%) | WER (%) | Time (s) | Number of Epochs | Model Size |
|---|---|---|---|---|---|---|
| 1 | Encoder-decoder LSTM | 5.68 | 28.44 | 467.73 | 185 | 12.7 M |
| 1A | Encoder-decoder LSTM with attention layer | 5.23 | 28.36 | 688.9 | 136 | 13.9 M |
| 2 | Encoder-decoder Bi-LSTM | 5.26 | 27.07 | 858.93 | 177 | 33.8 M |
| 2A | Encoder-decoder Bi-LSTM with attention layer | 4.86 | 25.67 | 1045.5 | 114 | 35.3 M |
| 3 | Encoder CNN, decoder Bi-LSTM | 5.17 | 26.82 | 518.3 | 115 | 13.1 M |
| 4 | End-to-end CNN (with residual connections) | 5.84 | 29.74 | 176.1 | 142 | 7.62 M |
| 5 | Encoder CNN with residual connections, decoder Bi-LSTM | 4.81 | 25.13 | 573.5 | 147 | 14.5 M |

| Data | Method | PER (%) | WER (%) |
|---|---|---|---|
| NetTalk | Joint sequence model [3] | 8.26 | 33.67 |
| | Bi-LSTM [16] | 7.38 | 30.77 |
| | Encoder-decoder with global attention [32] | 7.14 | 29.20 |
| | Encoder CNN with residual connections, decoder Bi-LSTM (Model 5) | 5.69 | 30.10 |
| CMUDict | LSTM with full delay [12] | 9.11 | 30.1 |
| | Joint sequence model [3] | 5.88 | 24.53 |
| | Encoder-decoder LSTM [16] | 7.63 | 28.61 |
| | Bi-LSTM + alignment [16] | 5.45 | 23.55 |
| | Combination of Sequitur G2P, seq2seq attention and multitask learning [26] | 5.76 | 24.88 |
| | Ensemble of 5 [encoder-decoder + global attention] models [32] | 4.69 | 20.24 |
| | Encoder-decoder with global attention [32] | 5.04 | 21.69 |
| | Joint multi-gram + CRF [8] | 5.5 | 23.4 |
| | Joint n-gram model [4] | 7.0 | 28.5 |
| | Joint maximum entropy (ME) n-gram model [5] | 5.9 | 24.7 |
| | Encoder-decoder GRU [9] | 5.8 | 28.7 |
| | Encoder CNN with residual connections, decoder Bi-LSTM (Model 5) | 4.81 | 25.13 |

| Word from Test Data | Reference Pronunciation | Prediction of Model 5 |
|---|---|---|
| YELLOWKNIFE | Y EH L OW N AY F | Y EH L OW K N N F |
| ZANGHI | Z AA N G IY | Z AE N G |
| GDANSK | G AH D AE N S K | D AE N AE K EH K |
| SCICCHITANO | S IH K AH T AA N OW | S CH CH Y K IY IY |
| KOVACIK | K AA V AH CH IH K | K AH V AA CH IH K |
| LPN | EH L P IY EH N | L L N N P IY E |
| INES | IH N IH S | AY N Z |
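The PER and WER columns reported in the result tables can be reproduced from (reference, prediction) pairs. The Python sketch below assumes the usual definitions: PER is the total Levenshtein distance divided by the total number of reference phonemes, and WER is the share of words whose prediction differs from the reference at all; the function names `edit_distance` and `per_wer` are hypothetical. It is applied to three rows of the table of test-word predictions above. Because these rows are selected error cases, the resulting figures say nothing about the model's overall 4.81% PER and 25.13% WER.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two phoneme sequences (unit costs)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j - 1] + (r != h),  # match / substitution
                            prev[j] + 1,             # deletion
                            curr[j - 1] + 1))        # insertion
        prev = curr
    return prev[-1]


def per_wer(pairs):
    """Return (PER %, WER %) over a list of (reference, prediction) pairs."""
    edits = ref_phonemes = wrong_words = 0
    for ref, hyp in pairs:
        dist = edit_distance(ref, hyp)
        edits += dist
        ref_phonemes += len(ref)
        wrong_words += dist > 0  # a word counts as wrong if any phoneme differs
    return 100 * edits / ref_phonemes, 100 * wrong_words / len(pairs)


examples = [
    ("K AA V AH CH IH K".split(), "K AH V AA CH IH K".split()),  # KOVACIK: 2 edits
    ("Z AA N G IY".split(), "Z AE N G".split()),                # ZANGHI: 2 edits
    ("IH N IH S".split(), "AY N Z".split()),                    # INES: 3 edits
]
per, wer = per_wer(examples)
print(f"PER = {per:.2f}%, WER = {wer:.2f}%")  # PER = 43.75%, WER = 100.00%
```

Here 7 edits over 16 reference phonemes give a PER of 43.75%, and every word contains at least one error, so WER is 100%.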

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yolchuyeva, S.; Németh, G.; Gyires-Tóth, B. Grapheme-to-Phoneme Conversion with Convolutional Neural Networks. Appl. Sci. 2019, 9, 1143. https://doi.org/10.3390/app9061143
