An ARCore Based User Centric Assistive Navigation System for Visually Impaired People

Abstract

Featured Application

1. Introduction

2. Related Works

2.1. Assistive Navigation System Frameworks

2.2. Positioning and Tracking

2.3. Path Planning

2.4. Human–Machine Interaction

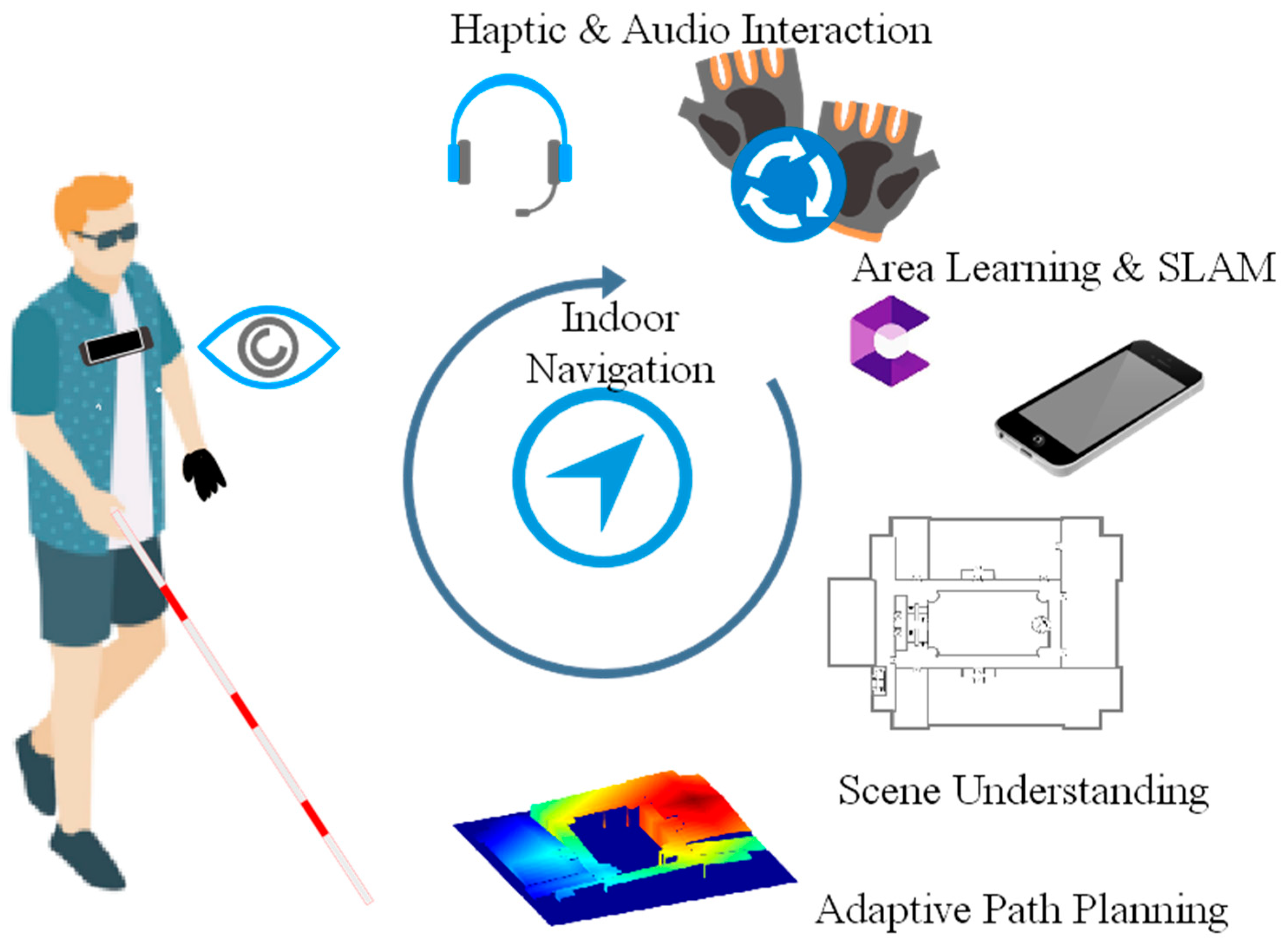

3. Design of ANSVIP

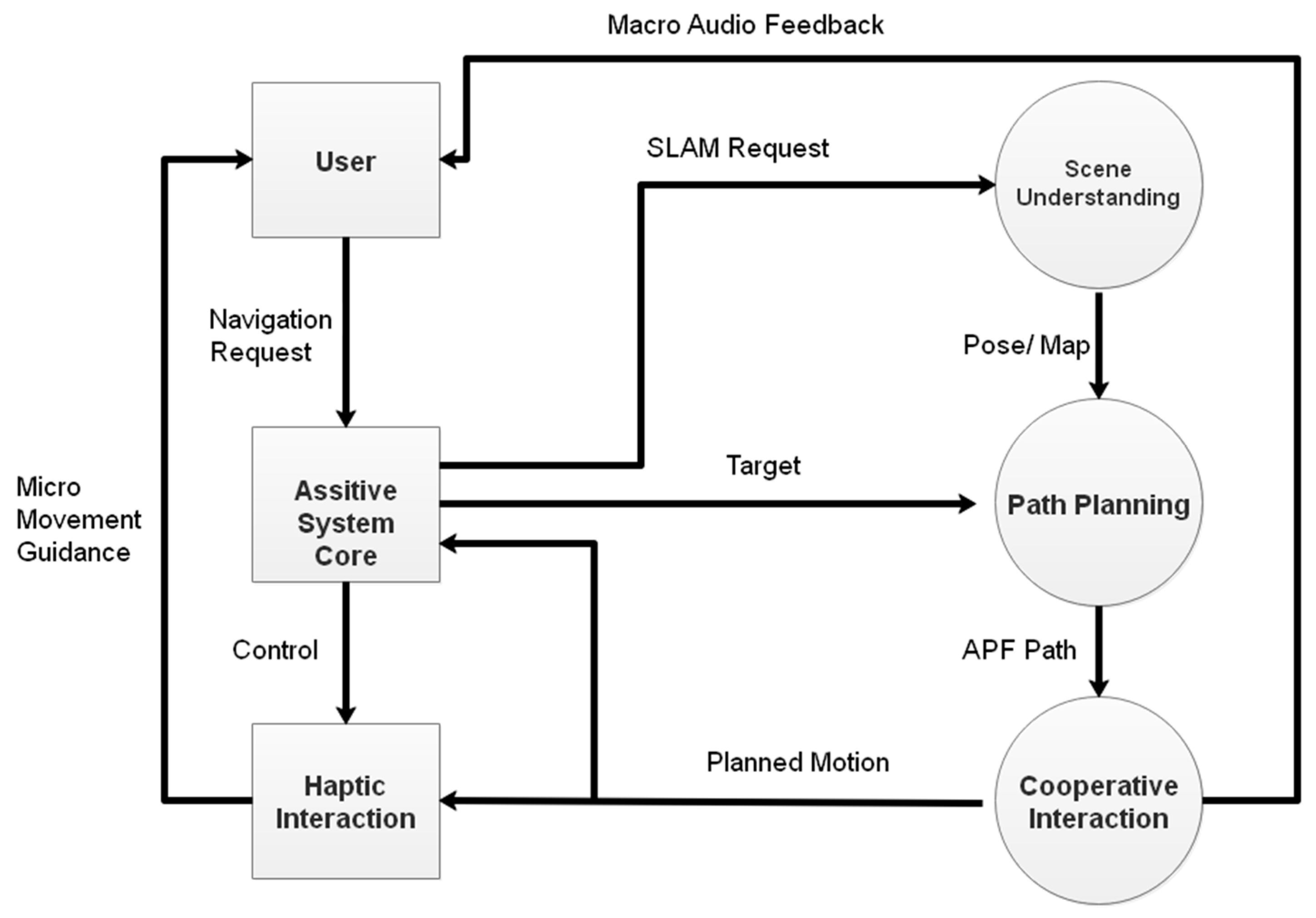

3.1. Information Flow in System Design

3.2. Real-Time Area Learning-Based Localization

3.3. Area Learning in ANSVIP

3.4. Adaptive Artificial Potential Field-Based Path Planning

3.5. Dual-Channel Human–Machine Interaction

4. System Prototyping and Evaluation

4.1. System Prototyping

4.2. Localization

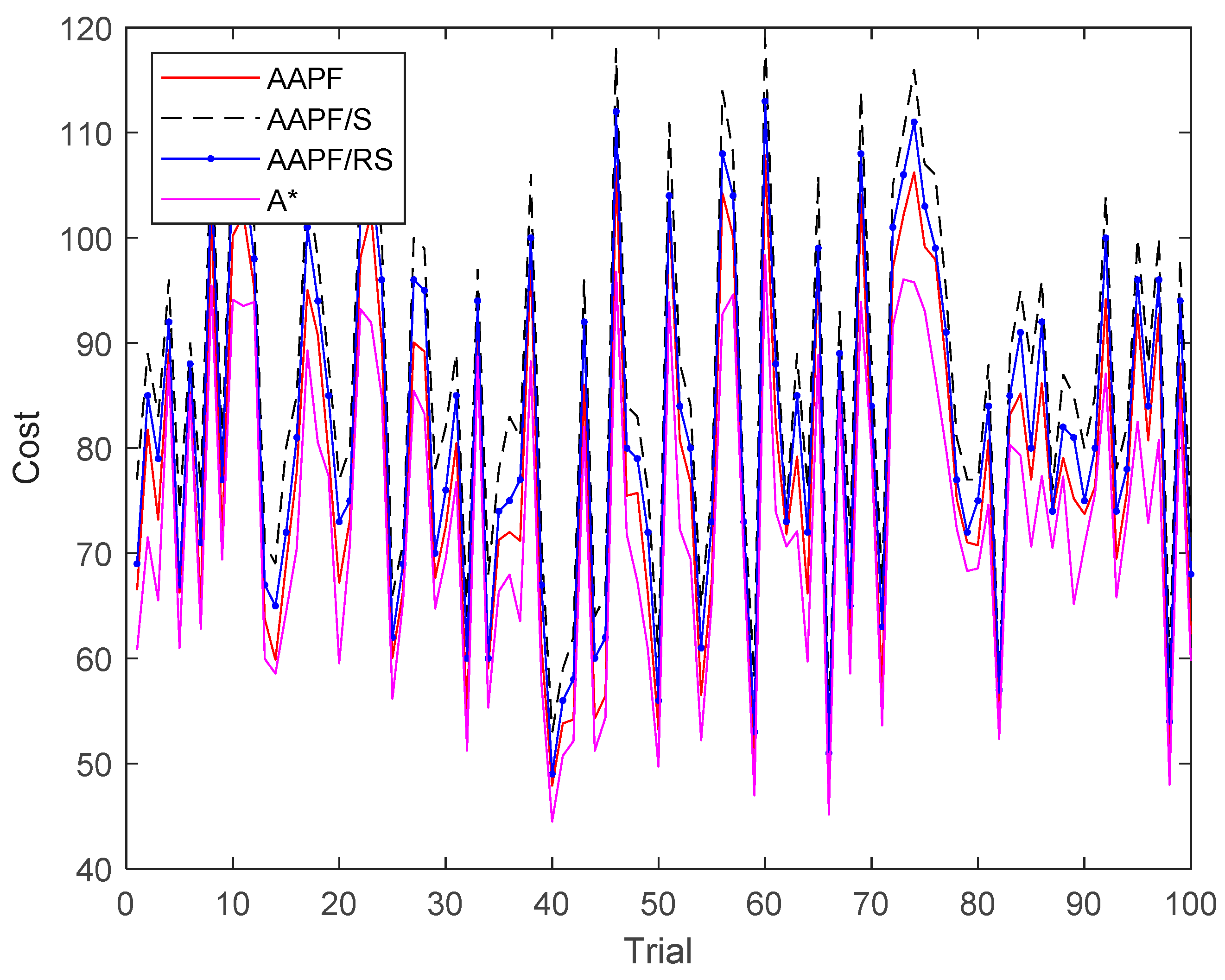

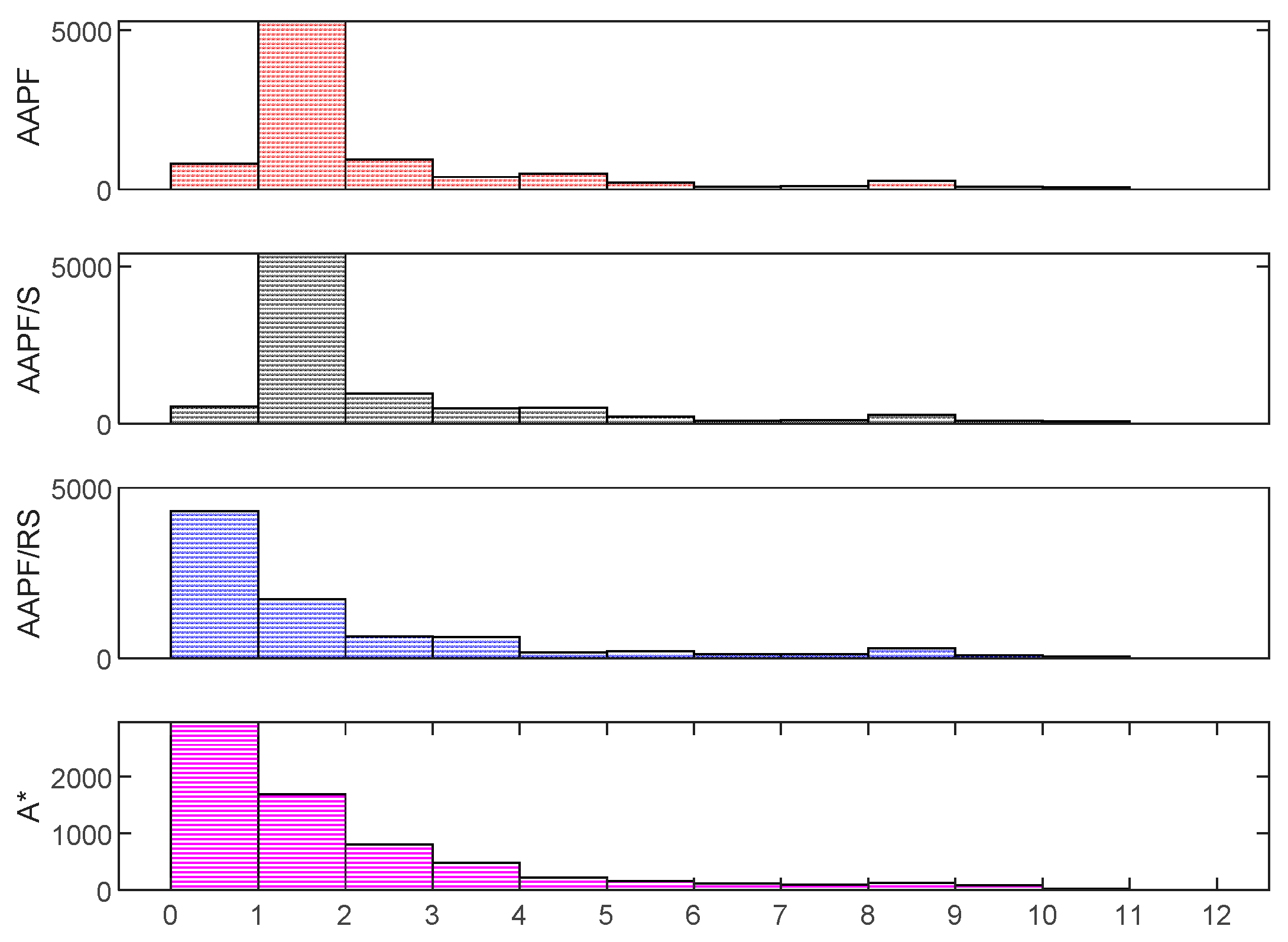

4.3. Path Planning

4.4. Haptic Guidance

4.5. Integration Test

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Yao, X.; Zhu, Y.; Hu, F. An ARCore Based User Centric Assistive Navigation System for Visually Impaired People. Appl. Sci. 2019, 9, 989. https://doi.org/10.3390/app9050989
