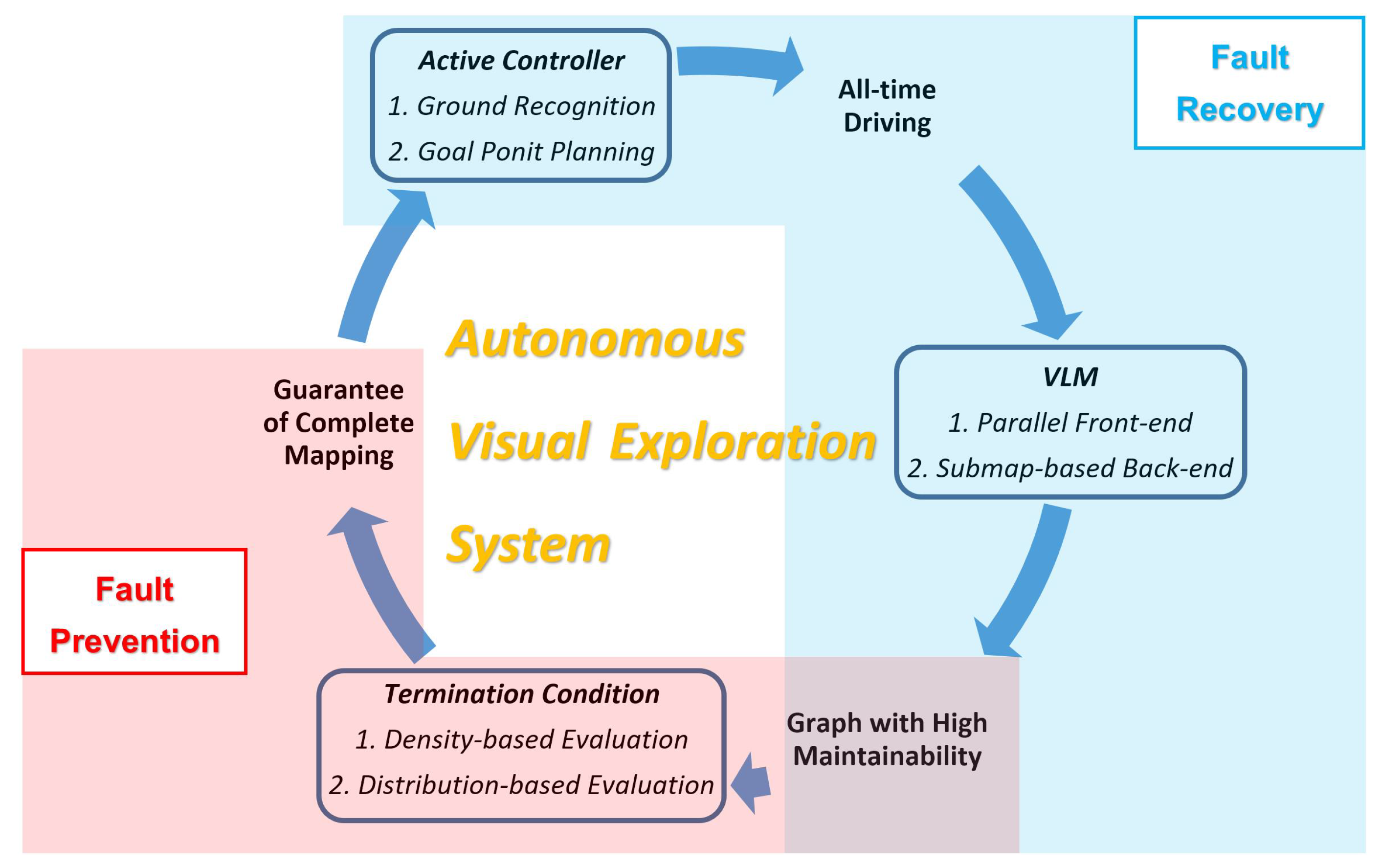

Figure 1.

Structure of our visual exploration system, which contains three modules: visual localization and mapping (VLM), an active controller, and a termination condition.

Figure 2.

Illustration of the camera set-up: the camera is fixed on the mobile robot platform, facing the heading direction.

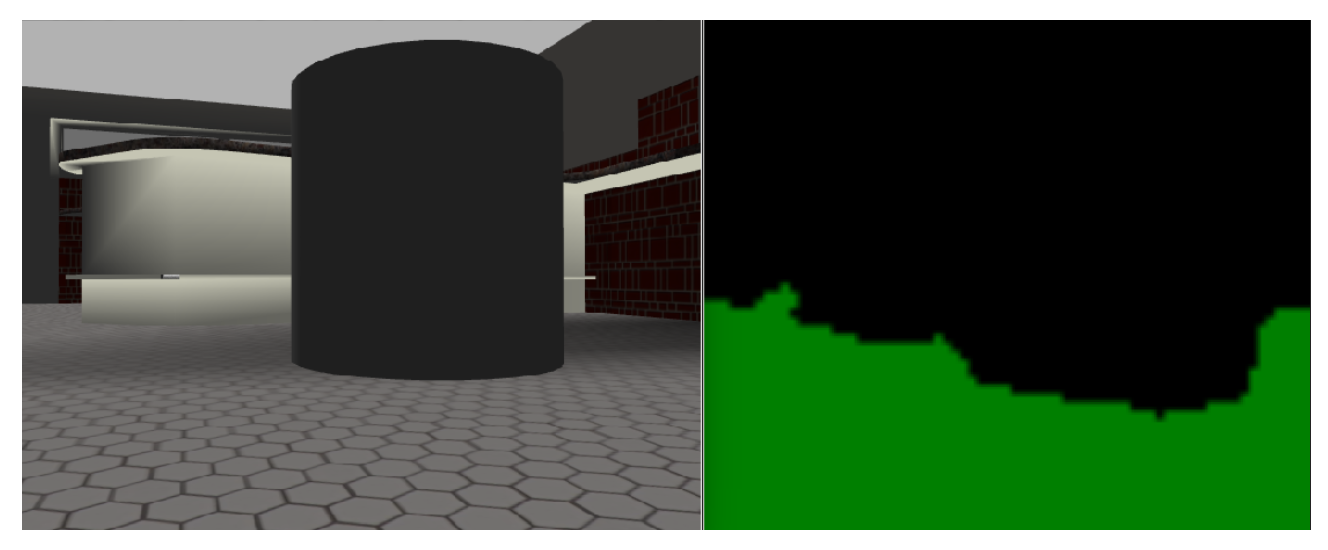

Figure 3.

Example of a segmentation result, where a cylinder-shaped obstacle is detected and segmented from the ground. The segmented ground is shown as the green region at the bottom of the right image.

Figure 4.

Example of goal point planning: the red point is the goal point, projected onto the raw image in the left figure.

Figure 5.

Illustration of the parallel design for a new keyframe (middle image): besides a constraint from the last keyframe (blue arrow), multiple loop-closure constraints to existing reference frames (red arrows) are built in parallel.

Figure 6.

Illustration of the submap-based back-end. Three submaps are shown (red, green and blue), connected by constraints (arrows) between and within submaps; the yellow points are the switchable factors for robust loop-closure detection.

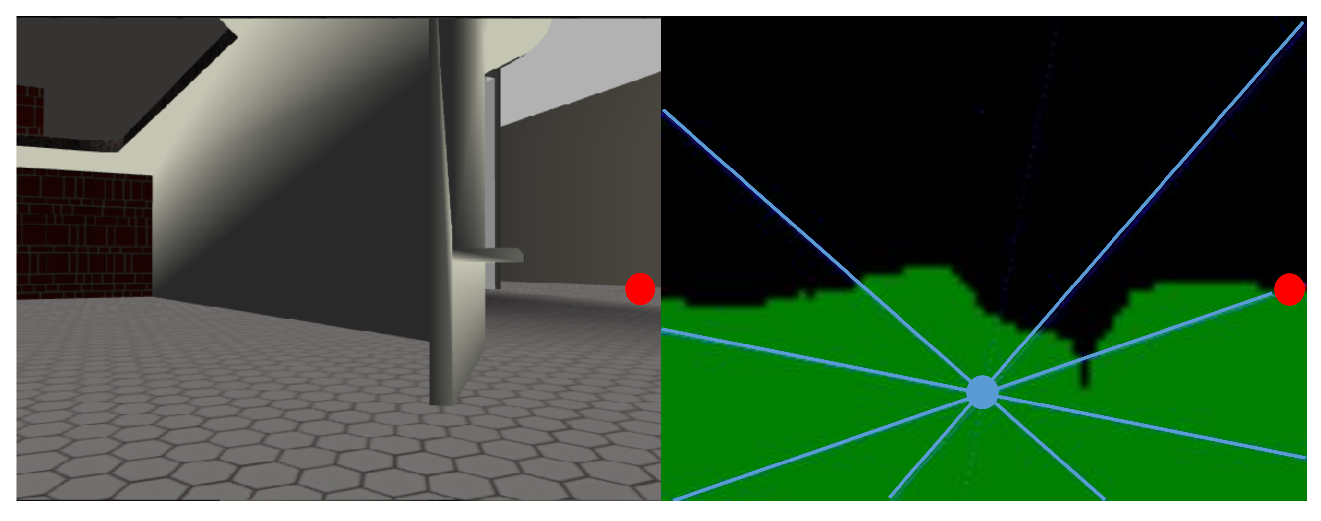

Figure 7.

Organization of the proposed termination condition. The input is a built graph (vertices and edges), and density-based and distribution-based evaluations are combined in a coarse-to-fine mechanism.

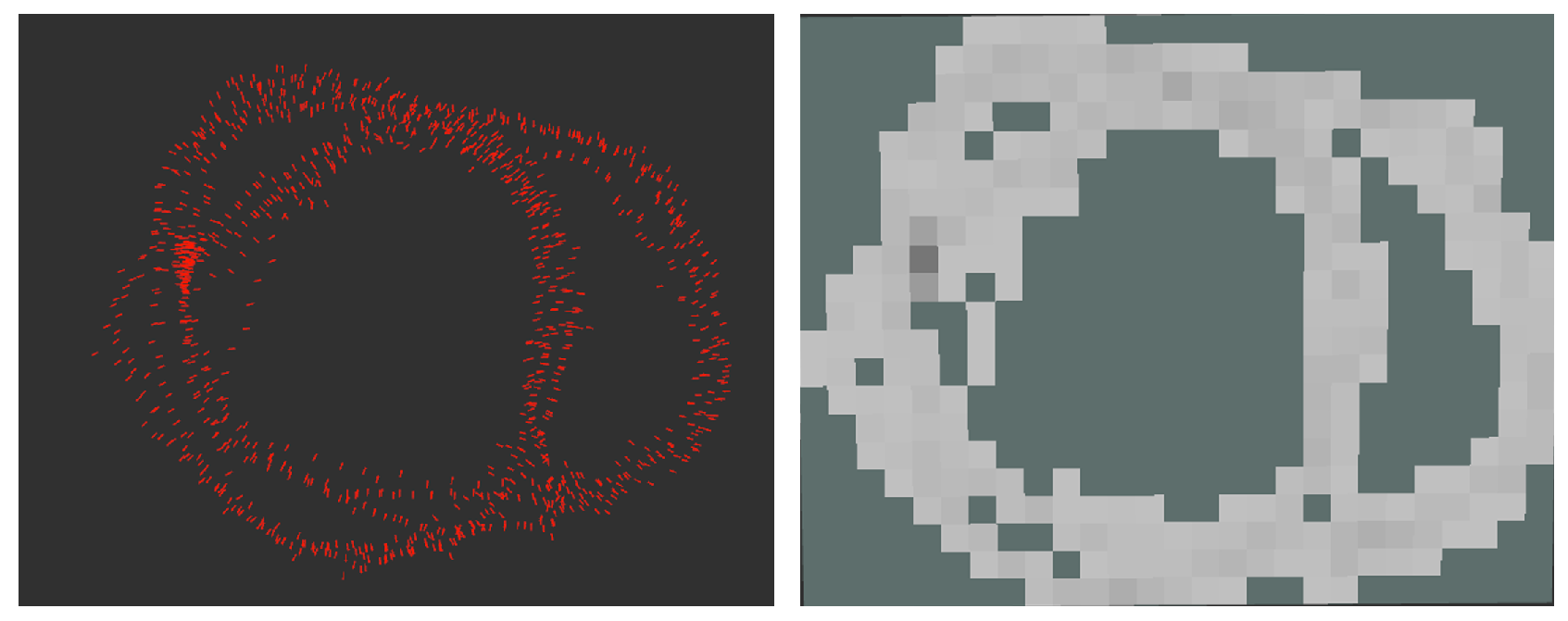

Figure 8.

Example of the grid map in the distribution-based evaluation. Arrows in the left figure are the poses of the collected keyframes, and the value of each grid cell in the right figure is determined by the exploration score.

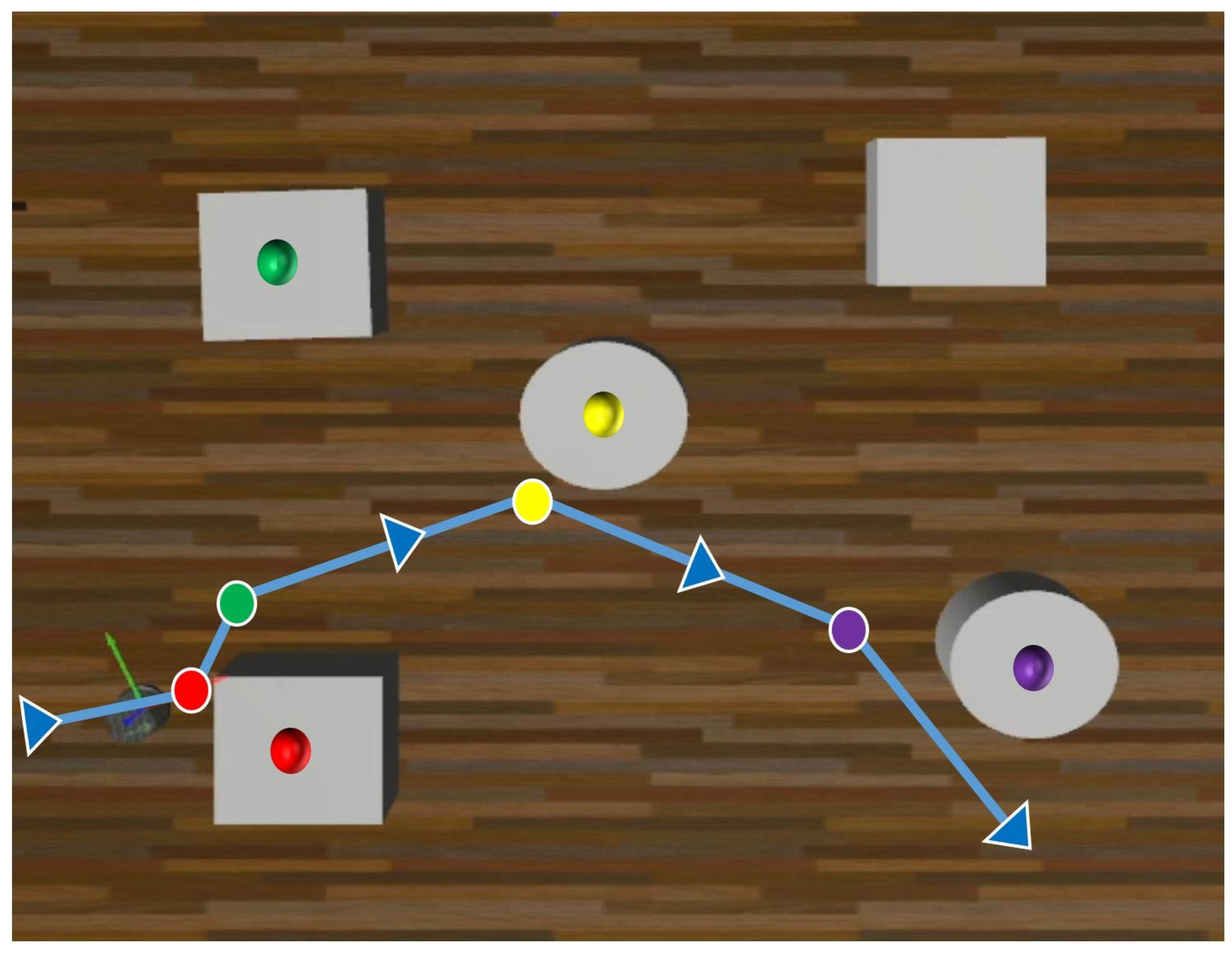

Figure 9.

Trajectory of obstacle avoidance in simulation. The blue line with arrows is the trajectory, and the colored points on it mark where the obstacle of the corresponding color is avoided.

Figure 10.

Coverage results in simulation. The trajectory of the robot is represented by a set of red arrows. The green point is the start point of exploration and the blue one is the end point.

Figure 11.

Real-world experiment on a sunny morning. The arrow is the robot trajectory, and the red dotted line in the upper figure marks where obstacle avoidance happens.

Figure 12.

Environment of the experiment on rainy nights, used to evaluate illumination invariance.

Figure 13.

Left: turning at a corner; middle: obstacle avoidance in the morning; right: obstacle avoidance at night. The blue dotted arrow is the motion before avoidance, and the red arrow is the motion during obstacle avoidance.

Figure 14.

Localization error curve of the submap-based back-end, verifying its ability to recover from faults and maintain tracking after tracking is lost. The dark blue curve is the error, the light blue curve is the tracking-failure response, and the red curve is the loop-closure detection response.

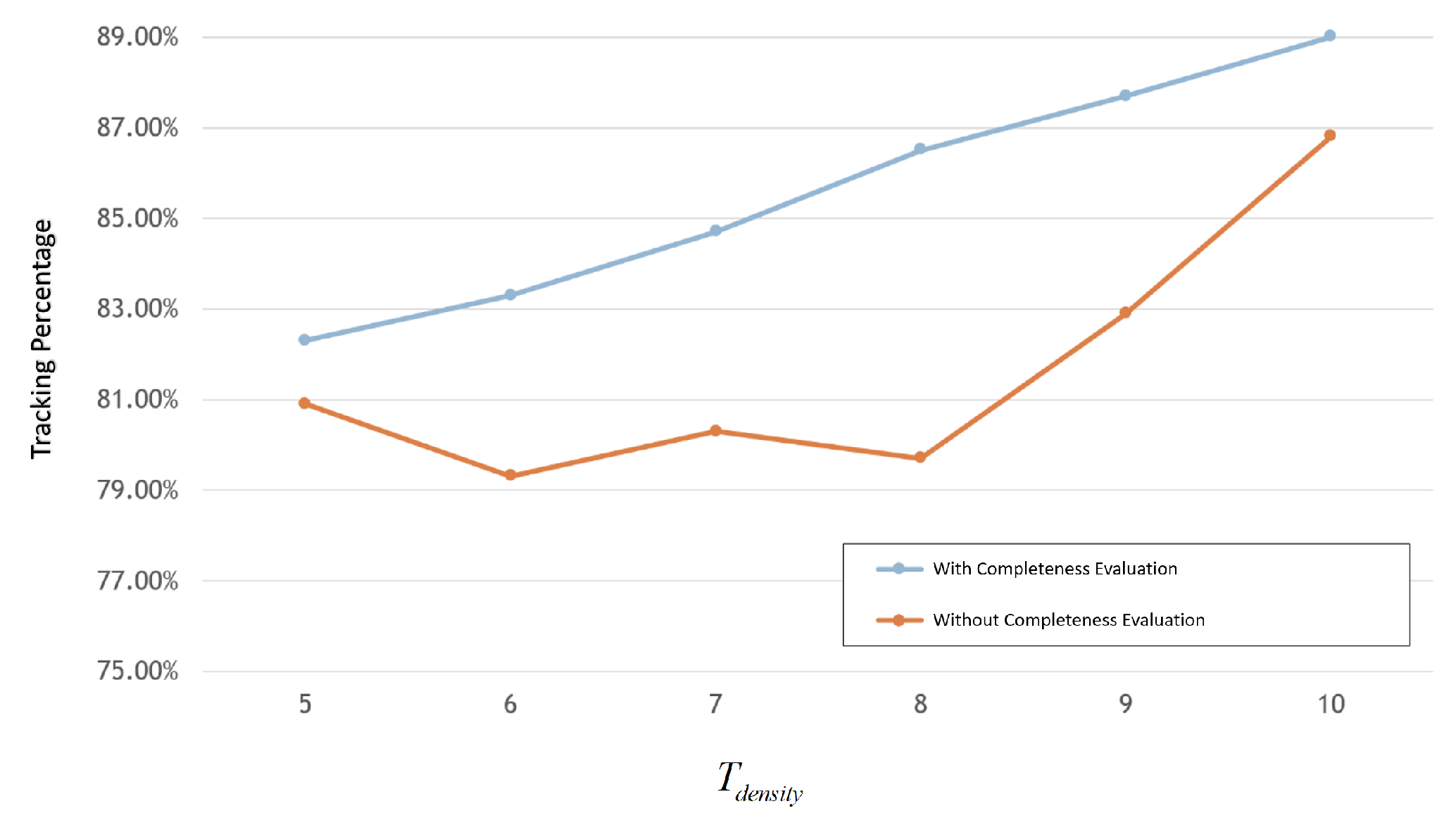

Figure 15.

Localization performance with/without completeness evaluation.

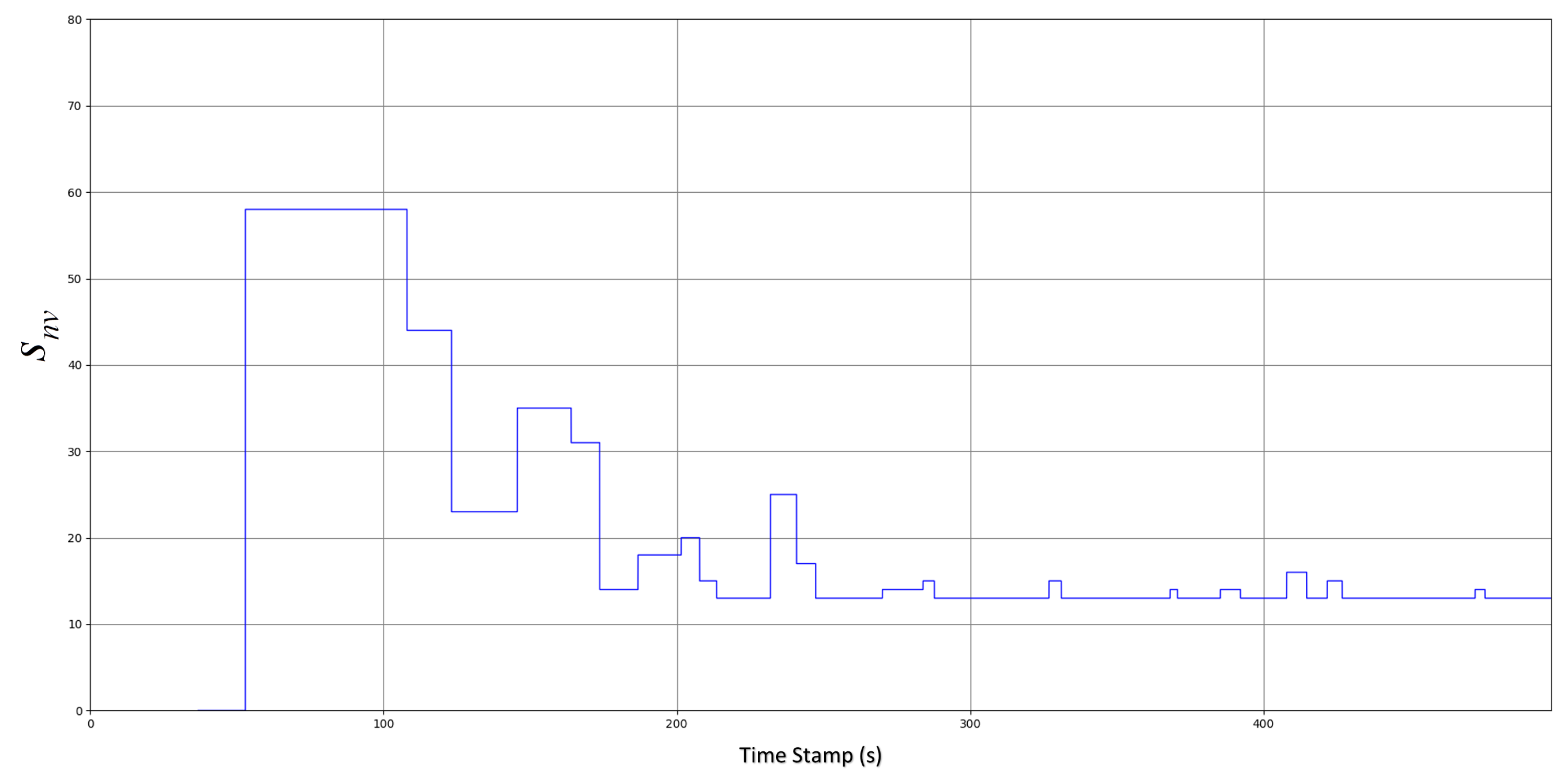

Figure 16.

Convergence of the termination condition in the density-based evaluation: the density-based criterion is satisfied after a certain period of exploration.

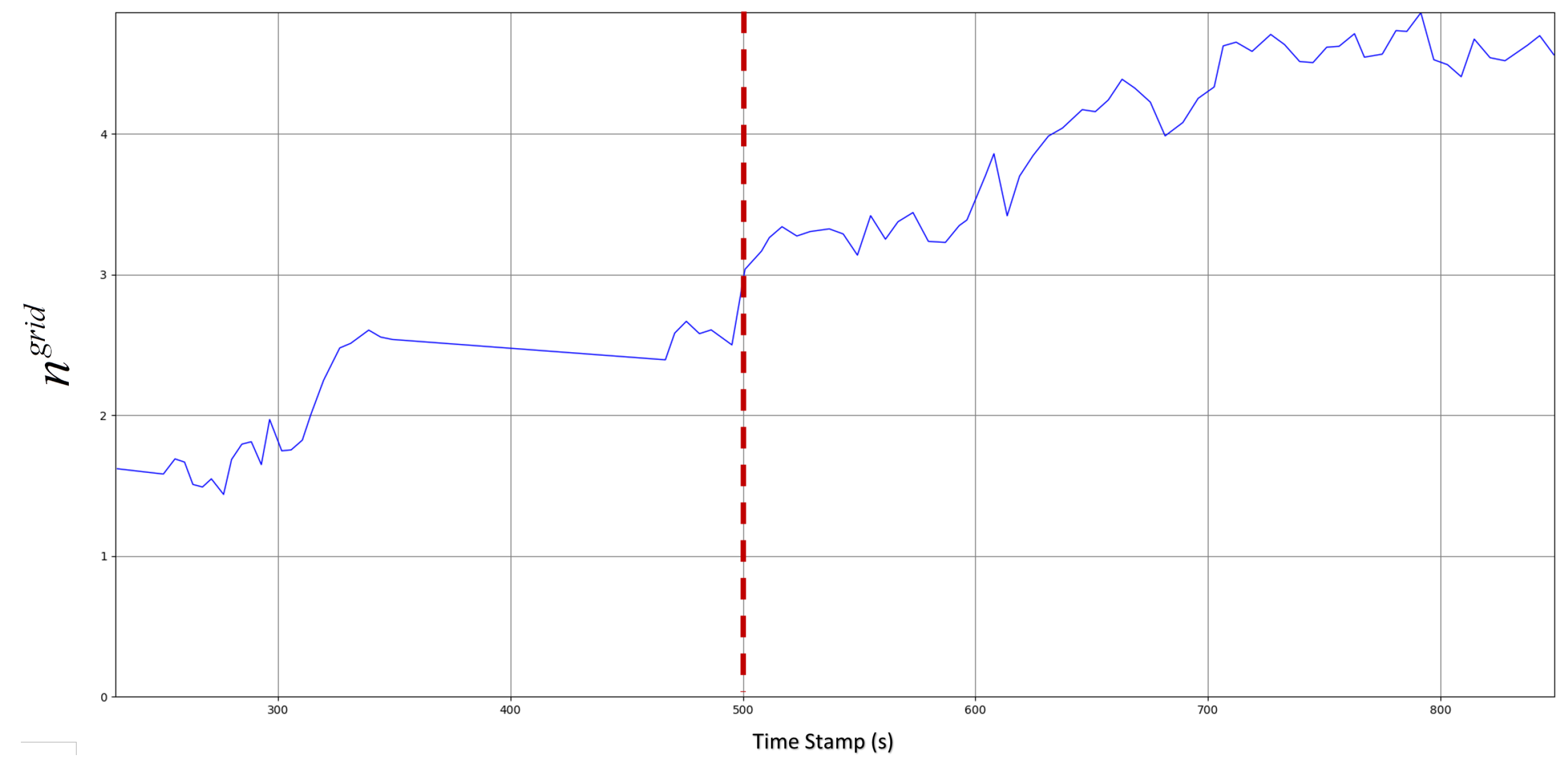

Figure 17.

Completeness evaluation in distribution-based evaluation.

Table 1.

Fault tolerance achieved by each module of our proposed system, where VLM is the visual localization and mapping module, A-C is the Active Controller and T-C is the Termination Condition.

| Fault Tolerance | Module | Solution |
|---|---|---|
| Fault Recovery | VLM | Submap-based back-end, maintaining mapping within one of the submaps |
| Fault Recovery | A-C | All-time driving of mobile robot, keeping image capturing |
| Fault Prevention | VLM | Parallel constraint building, higher frame rate for easier data association |
| Fault Prevention | T-C | Completeness evaluation, rich environment information for localization |

Table 2.

Parametric distribution with interval sampling, where the ideal distribution serves as the evaluation standard for the completeness calculation. Parametric results for different sampling intervals are shown; these are the values applied in our implementation.

| Sampling interval | Distribution mean | Distribution standard deviation |
|---|---|---|
| Ideal | 0 | 1.813 |
| 1 degree | 0 | 1.821 |
| 10 degrees | 0 | 1.889 |
| 20 degrees | 0 | 1.964 |
| 30 degrees | 0 | 2.039 |

Table 3.

Continuous tracking performance. Our submap-based system maps with much higher A-KFs than ORB-SLAM, since a fault recovery design is included in VLM.

| | Method | A-KFs | SD-KFs |
|---|---|---|---|
| UA-CSC1 | ORB | 13.88 | 2.37 |
| UA-CSC1 | VLM | 1069.20 | 23.60 |
| UA-CSC2 | ORB | 33.54 | 3.04 |
| UA-CSC2 | VLM | 836.80 | 20.80 |

Table 4.

Frame rate comparison of the parallel multi-constraint front-end. Our parallel framework obtains a higher frame rate, which benefits data association because of the shorter baseline.

| Metric | Non-Parallel | P-F&B | P-MC+P-F&B |
|---|---|---|---|
| Frame rate | 12.57 | 24.66 | 32.60 |
| RMSE | 0.407 | 0.411 | 0.407 |