3.1. Considerations for the Elaboration of the Parallel Algorithm

Our model starts from a sequential algorithm previously described in Reference [21], to which some improvements and modifications were made to develop the parallel algorithm.

The search space of the 0–1 knapsack problem (KP 0–1) is given by the power set of the elements of the initial problem. If these elements are in the set $I$, which has $m$ elements, then the number of subsets in the power set is $|P(I)| = 2^m$. The power set can be represented using the empty set $\phi$ and the set $I$, so it can be denoted by $P(m) = [\phi, I]$. If the fixed elements are in the set $\omega_1$ and the elements assigned to the node are in a second set, then $P(m)$ can also be denoted by the pair formed by these two sets [22,23].

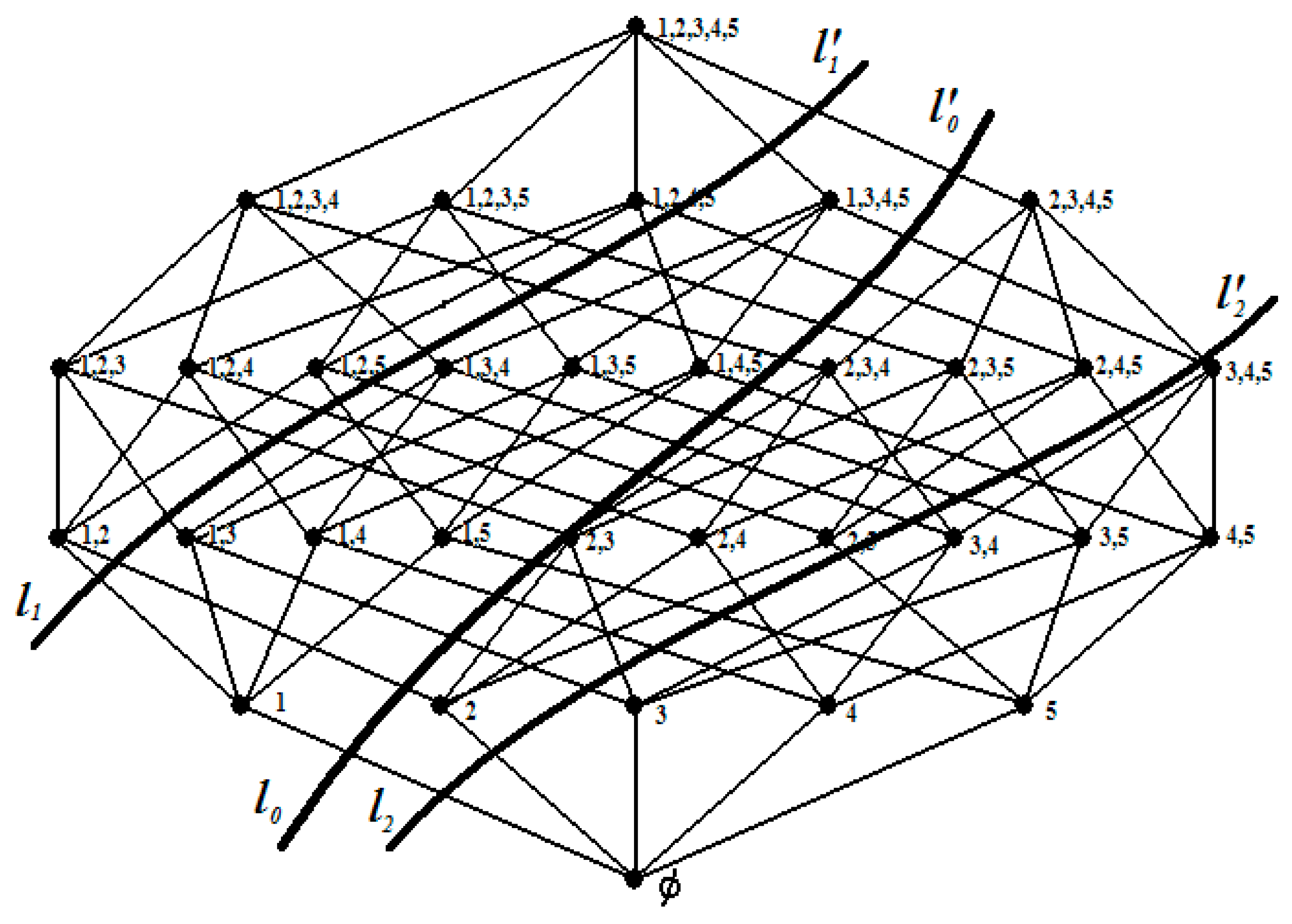

An example of the initial search space of a KP 0–1 with five elements is shown in Figure 1. The search space is for the set $\{1, 2, 3, 4, 5\}$.

This space is divided into two when an item is fixed or removed. In Figure 1, a line divides the search space in two by fixing and removing element 1: the left side is the left child and the right side is the right child. As can be seen in Figure 1, the search space of the left child consists of all the subsets containing element 1, whereas no subset in the search space of the right child contains element 1. These two spaces correspond to level 1 of the binary tree, as shown in Figure 2.

In turn, these search spaces are divided in two by fixing and removing element 2. In Figure 1, one line shows the spaces generated from the left child, and another line shows the spaces generated from the right child. Therefore, at level 2, there will be four nodes. Each of these children is divided into two sets whose search space is smaller than the search space of the node from which they come. There are two sets of level 3 for each of the sets of level 2, so in level 3 there will be eight sets. In general, at level $k$, $2^k$ subsets can be formed, where the search spaces of any two sets are disjoint and the union of all spaces of the same level is the initial power set $P(m)$. Following this procedure, at the last level, a subset of the initial power set is assigned to each leaf [25].
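The partition described above can be checked with a minimal sketch. It assumes that a node is stored as a pair (fixed elements, elements still allowed) and that branching fixes or removes elements 1, 2, and 3 in that order; the names `interval` and `split` are illustrative and do not come from the paper.

```python
from itertools import combinations

def interval(fixed, allowed):
    """Enumerate every subset S with fixed <= S <= allowed."""
    free = sorted(allowed - fixed)
    return {frozenset(fixed | set(combo))
            for size in range(len(free) + 1)
            for combo in combinations(free, size)}

def split(node, element):
    """Left child fixes `element`; right child removes it."""
    fixed, allowed = node
    left = (fixed | {element}, allowed)
    right = (fixed, allowed - {element})
    return left, right

# Root node: nothing fixed, all five elements allowed.
root = (frozenset(), frozenset({1, 2, 3, 4, 5}))

# Branch on elements 1, 2 and 3 to reach level 3 of the binary tree.
level = [root]
for element in (1, 2, 3):
    level = [child for node in level for child in split(node, element)]

# Search space of each level-3 node.
spaces = [interval(fixed, allowed) for fixed, allowed in level]
```

Level 3 holds eight nodes of four subsets each; the 32 subsets are distinct, so the spaces are pairwise disjoint and their union is the full power set.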

In the last levels of the binary tree, most of the elements will be fixed in $\omega_1$, leaving a small number of free elements. Taking into account the above, the KP 0–1 is formulated below:

where $p_i$ is the benefit of element $i$, each element $i$ also has a weight, and the knapsack has a given capacity.
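For orientation, the textbook form of the 0–1 knapsack problem can be written as follows. The symbols $w_i$ (weight), $c$ (capacity), and $x_i$ (decision variable) are assumed here and may differ from the notation of Equations (7) and (8).

```latex
\begin{aligned}
\max\ & z = \sum_{i=1}^{m} p_i x_i \\
\text{subject to}\ & \sum_{i=1}^{m} w_i x_i \le c, \\
& x_i \in \{0, 1\}, \quad i = 1, \dots, m .
\end{aligned}
```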

Equation (7) is divided into two terms: one for the fixed elements and one for the free elements. Therefore, the formulation is adapted to the part of the problem that contains the free elements, because it is not known which of them will be part of the solution. The modification consists of calculating the available capacity of the knapsack once the fixed elements have been stored in it, according to Equation (9):

The algorithm begins by calculating a feasible solution and its upper and lower limits, which are obtained as proposed by Dantzig (1957) [18] with Equation (10). They are calculated only once, at the beginning of the calculations:

Subsequently, for the free items, the critical variable $x_s$ is determined by Equation (11), the upper bound ($UB$) by Equation (12), and the lower bound ($LB$) by Equation (13), where the $LB$ will be the first optimal integer solution ($f_{opt}$):

where the available capacity of the knapsack is the part that will be filled with free items, $s$ is the minimum number of free elements whose weights sum to more than that available capacity, and the benefit and weight of the critical element are those of element $s$.
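The quantities defined above can be illustrated with a minimal sketch of a Dantzig-style bound. It assumes integer benefits and weights, free items sorted by decreasing benefit-to-weight ratio, and a lower bound equal to the greedy value before the critical item; the function name, argument names, and data are hypothetical, and the exact form of Equations (11) to (13) may differ.

```python
def dantzig_bounds(profits, weights, capacity, fixed, free):
    """Return (critical item, upper bound, lower bound) for one node.

    `fixed` items are already in the knapsack; `free` items are undecided.
    """
    base = sum(profits[i] for i in fixed)
    residual = capacity - sum(weights[i] for i in fixed)
    if residual < 0:
        raise ValueError("the fixed items alone exceed the capacity")
    # Greedy order: best benefit-to-weight ratio first.
    order = sorted(free, key=lambda i: profits[i] / weights[i], reverse=True)
    lower = base
    for i in order:
        if weights[i] > residual:
            # i is the critical item: the first one that no longer fits.
            upper = lower + (residual * profits[i]) // weights[i]
            return i, upper, lower
        residual -= weights[i]
        lower += profits[i]
    return None, lower, lower  # every free item fits; the bounds coincide


# Hypothetical five-item instance (not taken from the paper).
profits = {1: 10, 2: 40, 3: 30, 4: 50, 5: 15}
weights = {1: 5, 2: 4, 3: 6, 4: 3, 5: 5}
root = dantzig_bounds(profits, weights, 10, fixed=set(), free={1, 2, 3, 4, 5})
child = dantzig_bounds(profits, weights, 10, fixed={3}, free={1, 2, 4, 5})
```

For this instance the root call gives critical item 3 with bounds 105 and 90. Fixing item 3 first leaves an available capacity of 4 and tightens the upper bound to 90.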

After determining the critical variable and the root limits ($UB$ and $LB$), two children are generated using the procedure described previously, and each child will have its own limit that is used to determine whether that child is pruned or branched. In order for the child to branch out, the following must be fulfilled:

If Equation (14) is met, the child may contain a solution that is better than the current one and, consequently, a better optimal solution. Otherwise, that branch of the tree is pruned, since it cannot yield a value greater than the best one calculated so far. The optimal solution is the control variable.
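The prune-or-branch decision can be sketched as a small breadth-first branch and bound. The sketch assumes that a child is branched only when its upper bound exceeds the best value found so far; that test stands in for Equation (14), whose exact form is not reproduced here. All names and data are hypothetical.

```python
from collections import deque

def upper_bound(p, w, capacity, fixed, free):
    """Dantzig-style bound of a node; -1 if the fixed items do not fit."""
    residual = capacity - sum(w[i] for i in fixed)
    if residual < 0:
        return -1
    bound = sum(p[i] for i in fixed)
    for i in sorted(free, key=lambda i: p[i] / w[i], reverse=True):
        if w[i] > residual:
            return bound + (residual * p[i]) // w[i]
        residual -= w[i]
        bound += p[i]
    return bound

def breadth_first_bb(p, w, capacity, order):
    """Breadth-first search; `order` is the sequence of branching items."""
    f_opt, best = 0, frozenset()
    queue = deque([(frozenset(), 0)])  # (fixed items, depth in the tree)
    visited = 0
    while queue:
        fixed, depth = queue.popleft()
        visited += 1
        weight = sum(w[i] for i in fixed)
        value = sum(p[i] for i in fixed)
        if weight <= capacity and value > f_opt:
            f_opt, best = value, fixed  # new incumbent solution
        if depth == len(order):
            continue  # leaf: nothing left to branch on
        if upper_bound(p, w, capacity, fixed, order[depth:]) <= f_opt:
            continue  # prune: this subtree cannot beat the incumbent
        item = order[depth]
        queue.append((fixed | {item}, depth + 1))  # left child: item fixed
        queue.append((fixed, depth + 1))           # right child: item removed
    return f_opt, best, visited


# Hypothetical five-item instance (not taken from the paper).
p = {1: 10, 2: 40, 3: 30, 4: 50, 5: 15}
w = {1: 5, 2: 4, 3: 6, 4: 3, 5: 5}
result = breadth_first_bb(p, w, 10, (1, 2, 3, 4, 5))
```

The third returned value counts the nodes visited, which makes it easy to compare different branching orders on the same instance.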

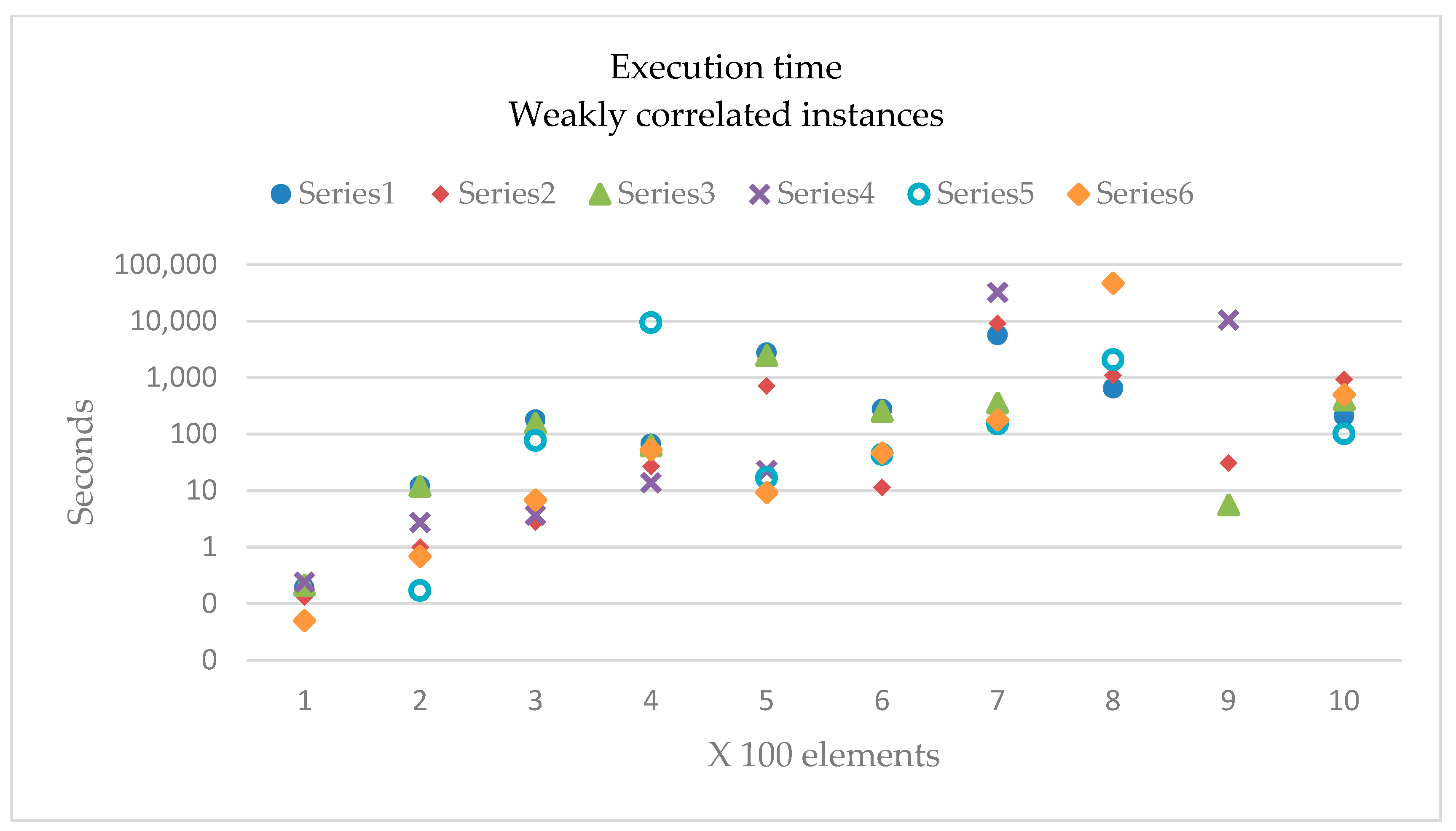

3.2. Multi-BB Parallel Model

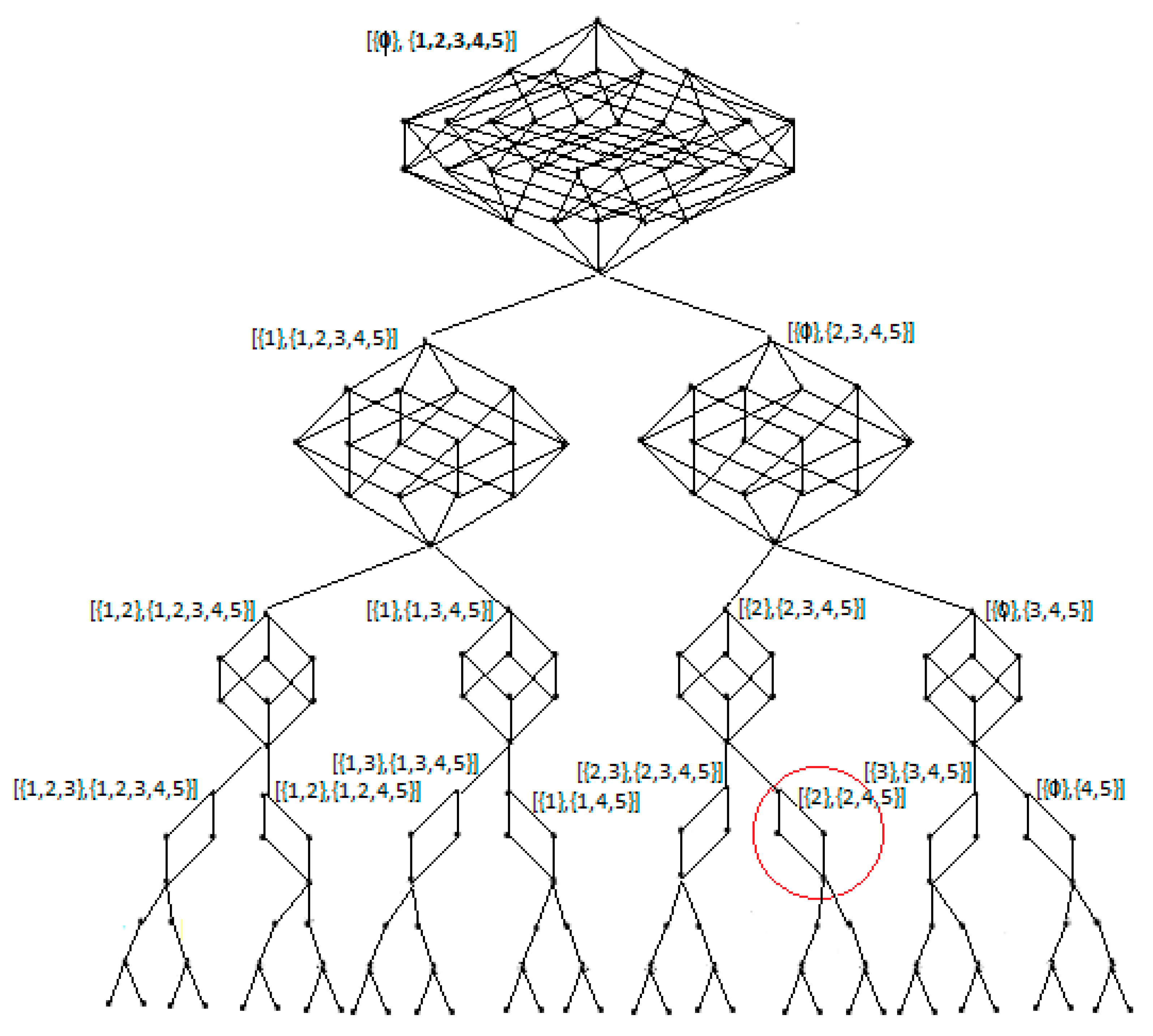

The sequential BB algorithm uses a breadth-first search to traverse the binary tree and calculate the optimal solution. This algorithm progresses level by level, calculating all the subsets generated. To explain the proposed parallel model, the following example is presented. Suppose that the subset {2, 4} is the optimal solution to the problem. The subset {2, 4, 5} is at level 3, as shown in the tree in Figure 2, where elements 2 and 4 are in the first locations. Thus, the algorithm has to reach level 3 to calculate the solution. In the worst case, the algorithm will require $2^0 + 2^1 + 2^2 + 2^3$ subsets, and the subsets of level 4 ($2^4$) must also be evaluated to determine that the solution is optimal. These subsets are stored in a list and are resolved sequentially. In the worst case, 31 subsets will be calculated.

When element 3, instead of element 1, is fixed and removed at the start of the problem, the subsets by level are: level 0, [ϕ, {1,2,3,4,5}]; level 1, [{3}, {1,2,3,4,5}] and [ϕ, {1,2,4,5}]; and level 2, [{1,3}, {1,2,3,4,5}], [{3}, {2,3,4,5}], [{1}, {1,2,4,5}], and [ϕ, {2,4,5}]. The solution is in the last subset. The number of subsets needed to reach the solution is $2^0 + 2^1 + 2^2$, and the subsets of level 3 ($2^3$) will be required to determine that the solution is optimal. In the worst case, 15 subsets will be calculated, which is $2^4$ fewer operations than in the previous case.
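The two worst-case counts follow from the geometric sum over the levels of a complete binary tree:

```latex
\sum_{k=0}^{4} 2^k = 2^5 - 1 = 31, \qquad
\sum_{k=0}^{3} 2^k = 2^4 - 1 = 15, \qquad
31 - 15 = 16 = 2^4 .
```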

Because it is not known in advance which fixed or removed element leads to the optimal solution in the fewest iterations, we propose generating different binary trees. In each tree, a different element is fixed or removed. When an element is fixed, it is forced to be part of the solution; when it is removed in another tree, it cannot be part of the solution, so other elements must form it. In each binary tree, the process described previously is followed. The processing units share their best solution to accelerate the calculation, which increases the number of branches pruned in each tree and can therefore reduce the parallel computation time. Communications among the processing units affect their synchronization, an aspect that is addressed in the following paragraphs. The ideal number of binary trees is twice the number of elements of the problem, because in one half of the trees a different element is fixed, and in the other half a different element is removed.

Therefore, we propose generating different trees to increase the number of feasible-solution spaces that are explored, with the possibility that in some binary trees the optimal solution is found in the first levels.
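As a minimal sketch of this idea, the roots of the different trees can be generated as follows. It assumes that each tree starts from one of the two children obtained by fixing or removing a single element, stored as a pair (fixed elements, elements still allowed); the function name is hypothetical.

```python
def multi_bb_roots(items):
    """Return 2 * m root nodes for m items: one per (item, decision) pair."""
    items = frozenset(items)
    roots = []
    for i in sorted(items):
        roots.append((frozenset({i}), items))     # tree in which i is fixed
    for i in sorted(items):
        roots.append((frozenset(), items - {i}))  # tree in which i is removed
    return roots


roots = multi_bb_roots({1, 2, 3, 4, 5})
```

For five elements this yields ten roots, among them [{3}, {1,2,3,4,5}] and [ϕ, {1,2,4,5}], the two level-1 nodes of the example in which element 3 is fixed and removed.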

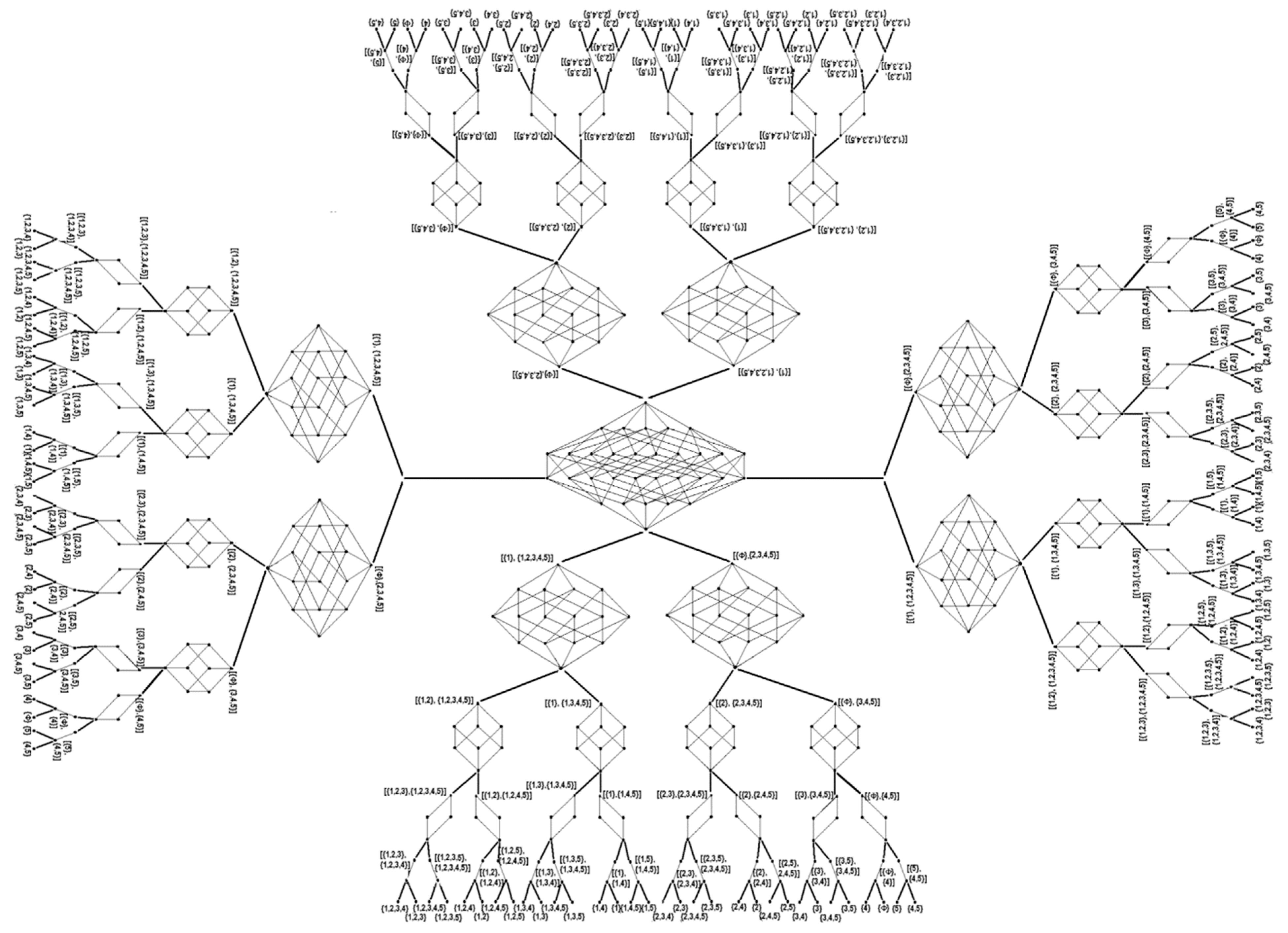

An outline of the algorithm is shown in Figure 3, where the trees that are generated do not constitute a forest [24] because they share the same leaves.

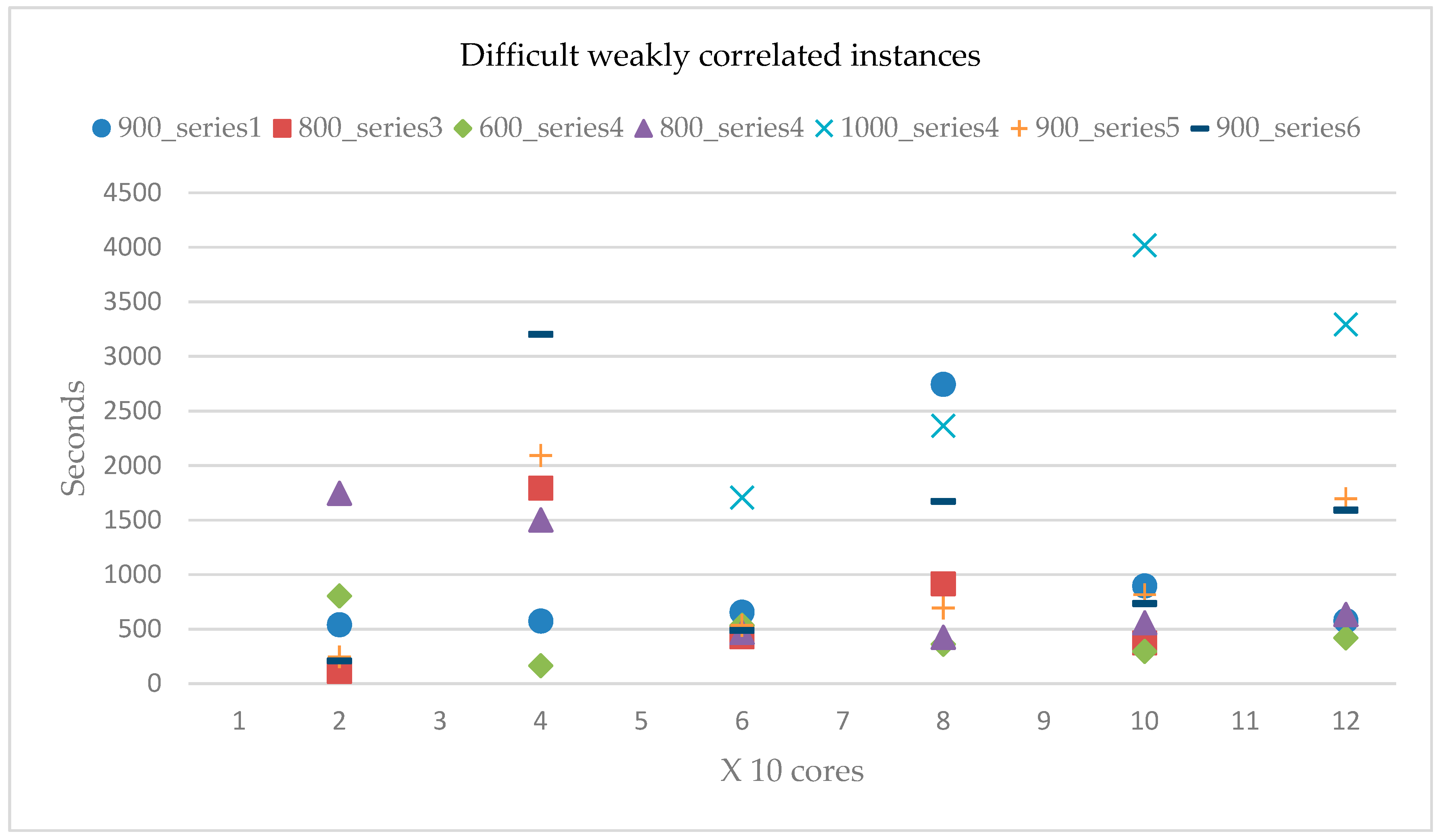

3.3. Multi-BB Algorithm for a Multicore Cluster

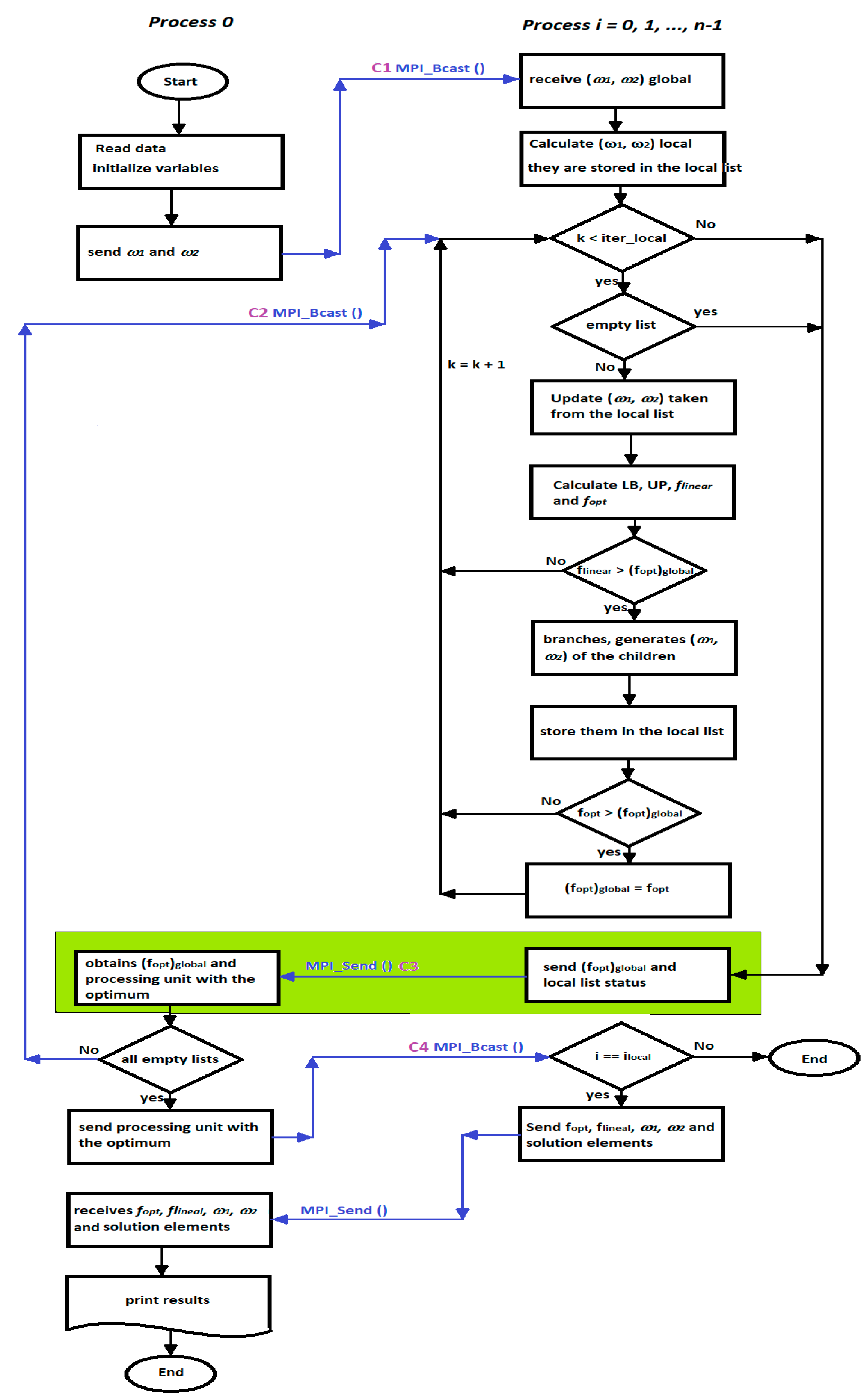

In the implementation of the multi-BB algorithm in the multicore cluster (Figure 4), four factors that influence its efficiency are considered: shared knowledge, knowledge utilization, work division, and synchronization [13]. These are defined so as to obtain a good load balance, a minimum number of synchronization points, and the shortest communication times.

Shared knowledge, that is, the subsets generated by the branching of the trees, is stored in local lists in each processing unit, because each of the multi-BB trees is independent of the others. An Exclusive Read, Exclusive Write Parallel Random Access Machine (EREW PRAM) model is used to access the subsets and to write the partial solution. Figure 4 shows how the local lists are used in each processing unit, from how they are initialized and updated to when the subsets are taken.

The work is divided statically at the beginning of the calculation: a tree is assigned to each processing unit. Each decision tree is expected to grow, so that a good load balance is achieved. This is the first activity that the processes perform, as seen in Figure 4.

With regard to knowledge utilization and synchronization, the optimal solution is the shared knowledge. The processing units are synchronized to exchange partial solutions and determine the optimal solution to the problem. A processing unit receives the partial solutions, obtains the optimal solution, and stores the identifier of the processing unit that holds that solution. This process is shown in a green box in Figure 4. The number of local iterations between synchronizations is based on the proposal by Zavala-Díaz [24].

The algorithm is asynchronous while each processing unit traverses its search space. Synchronization occurs when the processes send and receive information (blue lines in Figure 4). Communications C1, C4, and C5 are executed only once during the entire calculation process. In communication C1, the elements of the two sets of the problem are sent. In communication C4, the master processor sends data to all other processing units, together with the number of the processing unit that calculated the optimal solution. In communication C5, the processing unit with the optimal solution sends the data of that solution, including the set of solution elements, to the master processor. Communications C2 and C3 are made each time the maximum number of local iterations is reached. In both cases, only two data items are sent. In communication C2, the master sends the updated global solution and the signal for the processing units to continue the calculations. In communication C3, each processing unit sends its solution and the status of its local list.

The multi-BB algorithm was programmed in ANSI C, and the Message Passing Interface (MPI) library was used to pass messages. The maximum number of local iterations is one-tenth of the size of the problem [24]. The proposed distribution of the number of parallel processes is described in Section 4.2. The remaining variables have been described previously.
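The synchronization scheme can be illustrated with a sequential simulation. This is not the ANSI C/MPI implementation: the sketch runs the processing units one after another in plain Python, assumes a Dantzig-style bound for pruning, and uses hypothetical names and data. The comments map each step to communications C2 to C5 as described above.

```python
from collections import deque

def bound(p, w, cap, fixed, free):
    """Dantzig-style upper bound; -1 if the fixed items do not fit."""
    residual = cap - sum(w[i] for i in fixed)
    if residual < 0:
        return -1
    total = sum(p[i] for i in fixed)
    for i in sorted(free, key=lambda i: p[i] / w[i], reverse=True):
        if w[i] > residual:
            return total + (residual * p[i]) // w[i]
        residual -= w[i]
        total += p[i]
    return total

class Worker:
    """One simulated processing unit with its own local list of nodes."""

    def __init__(self, fixed, free):
        self.nodes = deque([(frozenset(fixed), tuple(free))])
        self.best_value, self.best_set = 0, frozenset()

    def run(self, p, w, cap, global_best, max_local):
        """Process at most `max_local` nodes, pruning with the shared value."""
        for _ in range(max_local):
            if not self.nodes:
                break
            fixed, free = self.nodes.popleft()
            value = sum(p[i] for i in fixed)
            if sum(w[i] for i in fixed) <= cap and value > self.best_value:
                self.best_value, self.best_set = value, fixed
            incumbent = max(self.best_value, global_best)
            if not free or bound(p, w, cap, fixed, free) <= incumbent:
                continue  # leaf reached or branch pruned
            item, rest = free[0], free[1:]
            self.nodes.append((fixed | {item}, rest))  # item fixed
            self.nodes.append((fixed, rest))           # item removed
        # C3: two data items, the local solution and the list status.
        return self.best_value, bool(self.nodes)

def multi_bb(p, w, cap):
    items = sorted(p)
    workers = []
    for i in items:
        rest = [j for j in items if j != i]
        workers.append(Worker({i}, rest))  # tree with item i fixed
        workers.append(Worker((), rest))   # tree with item i removed
    max_local = max(1, len(items) // 10)   # one-tenth of the problem size
    global_best, owner, active = 0, None, True
    while active:
        active = False
        # C2: every unit receives the current global value and continues.
        reports = [u.run(p, w, cap, global_best, max_local) for u in workers]
        for rank, (value, has_work) in enumerate(reports):
            if value > global_best:
                global_best, owner = value, rank
            active = active or has_work
    # C4 and C5: the owning unit hands over its solution set.
    best_set = workers[owner].best_set if owner is not None else frozenset()
    return global_best, best_set


# Hypothetical five-item instance (not taken from the paper).
p = {1: 10, 2: 40, 3: 30, 4: 50, 5: 15}
w = {1: 5, 2: 4, 3: 6, 4: 3, 5: 5}
solution = multi_bb(p, w, 10)
```

Because every unit prunes against the shared value after each round, a good solution found early in one tree shortens the search in all the others, which is the effect the model relies on.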