The Effects of Augmented Reality Interaction Techniques on Egocentric Distance Estimation Accuracy

Abstract

1. Introduction

1.1. Direct vs. Indirect Interaction Methods

1.2. Related Work

2. Methods

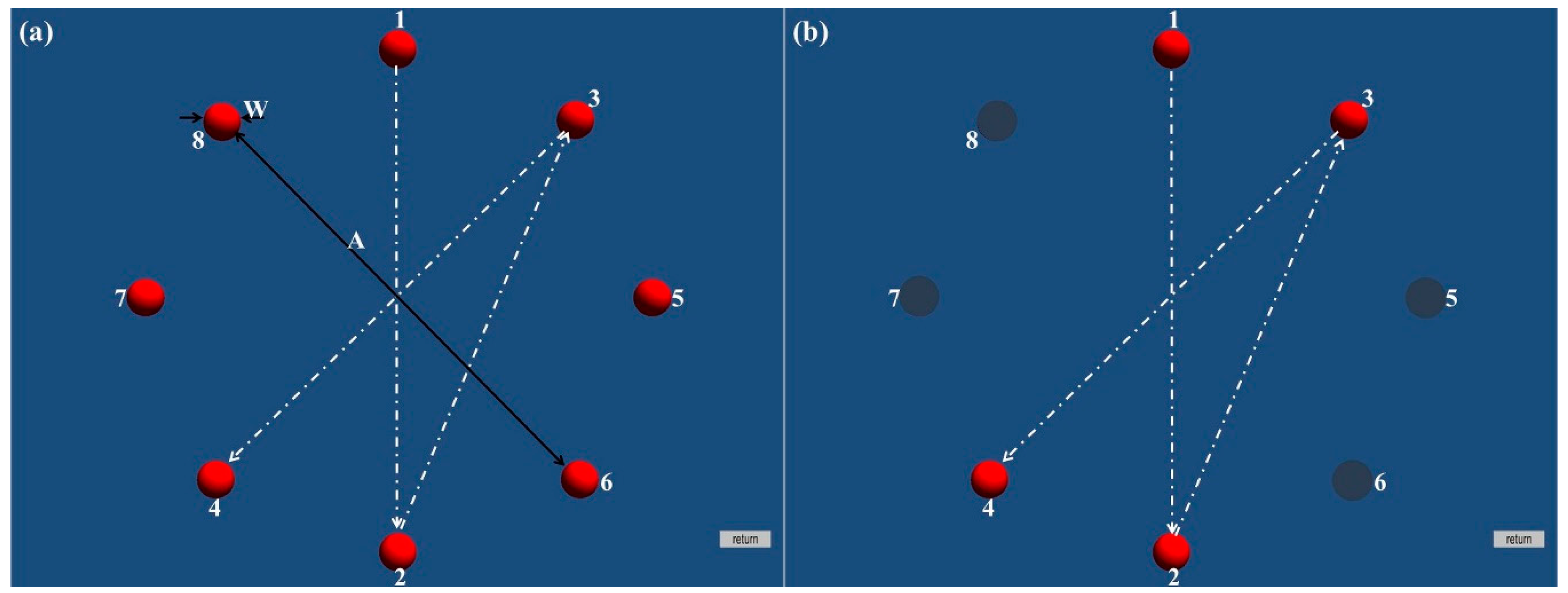

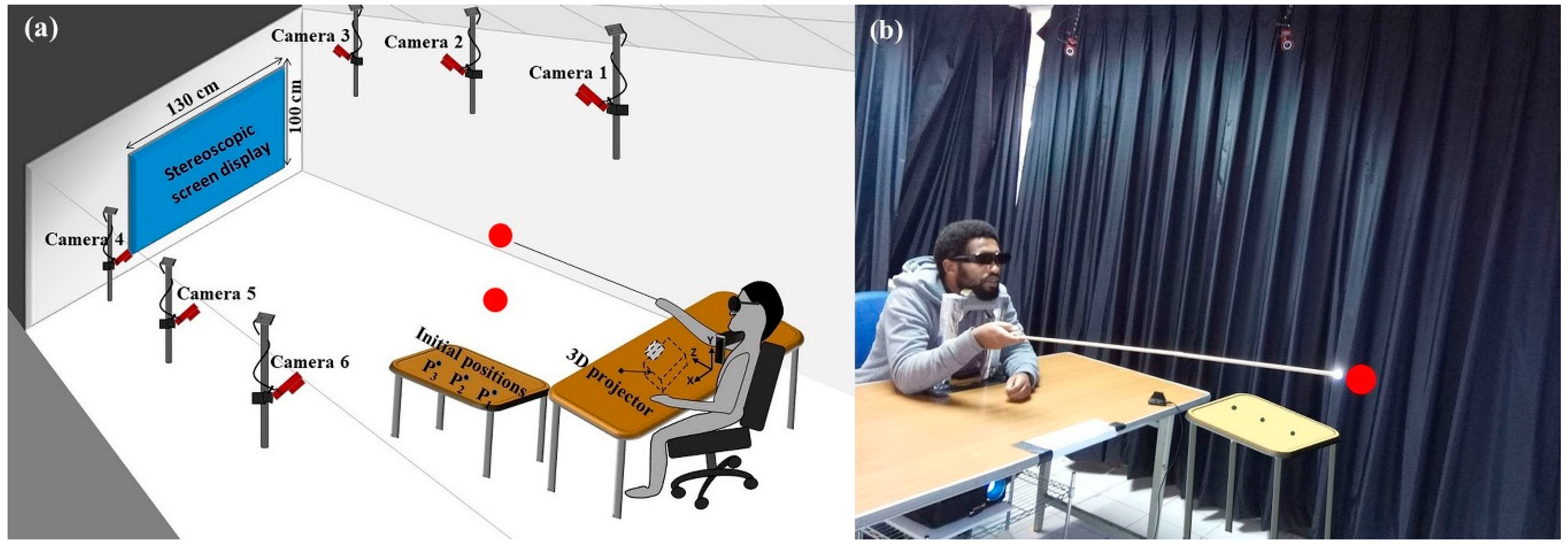

2.1. Direct Interaction with Virtual Objects

2.2. Indirect Interaction with Virtual Objects

2.3. Experimental Design and Variables

2.3.1. Independent Variables

2.3.2. Dependent Variables

2.4. Experimental Settings and Stimuli

2.5. Procedure

2.6. Participants

3. Results

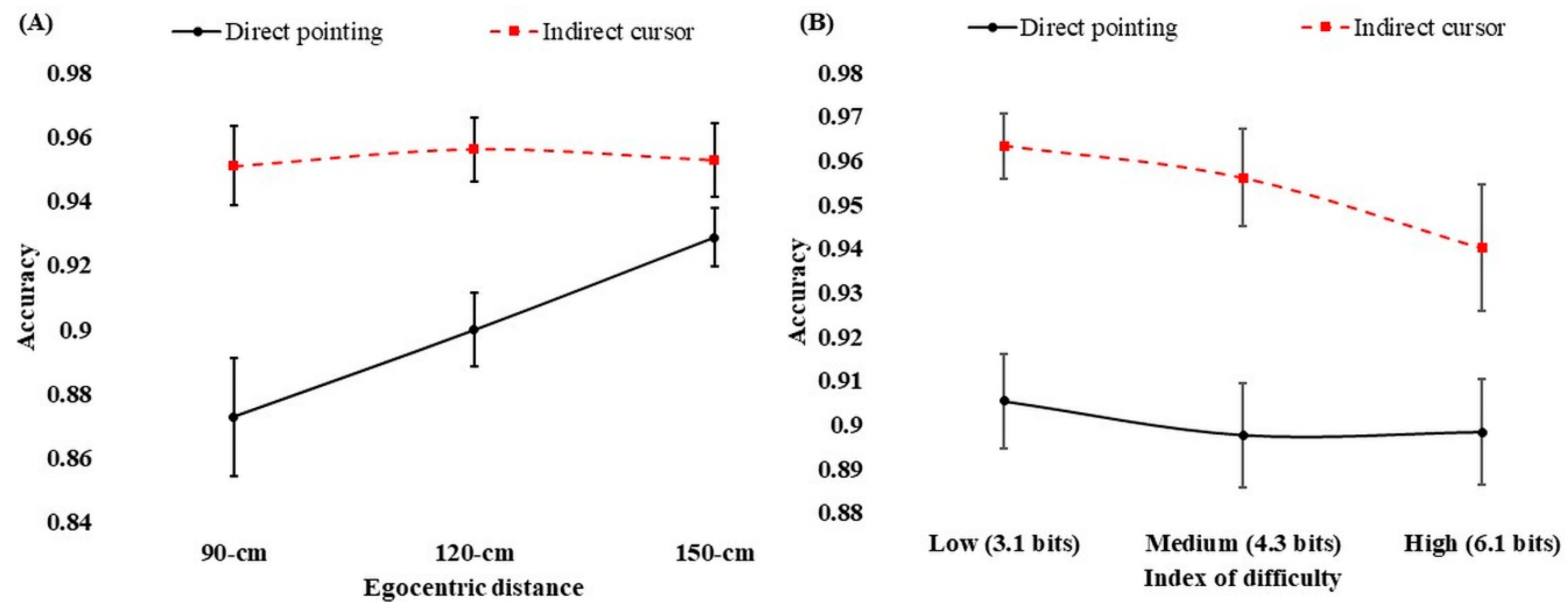

3.1. Accuracy of Estimation

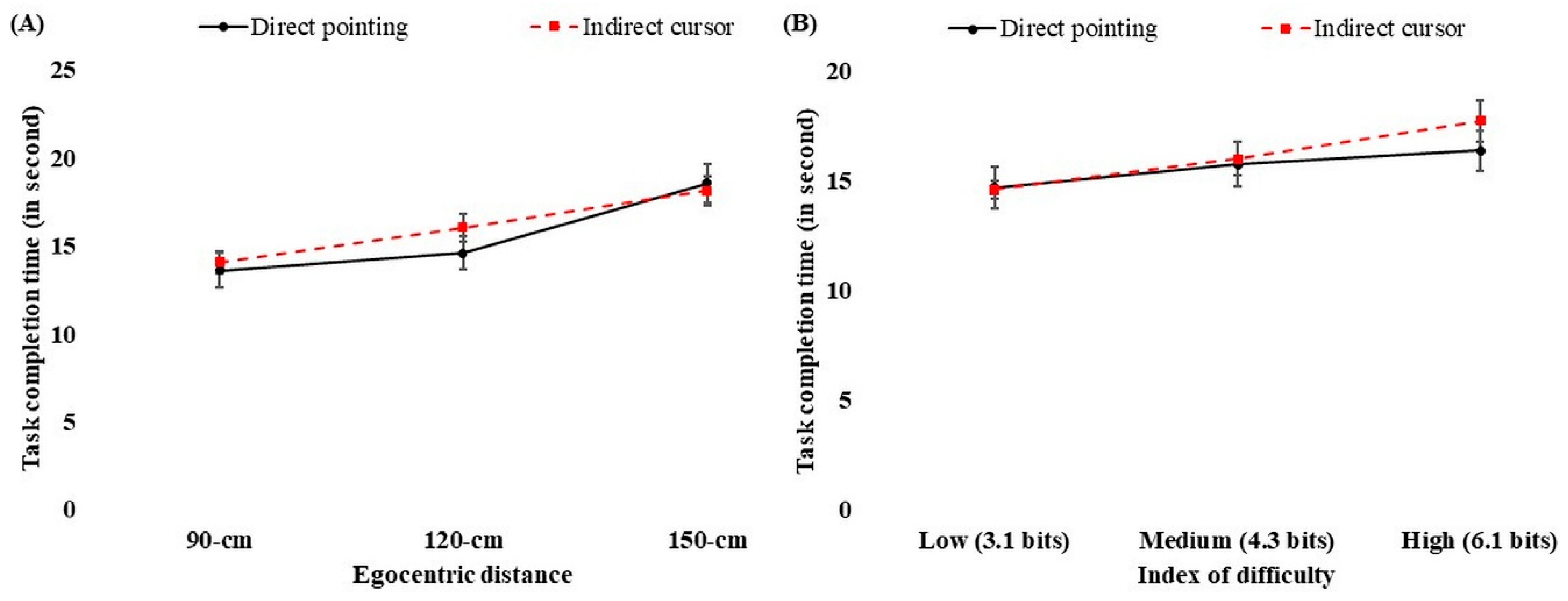

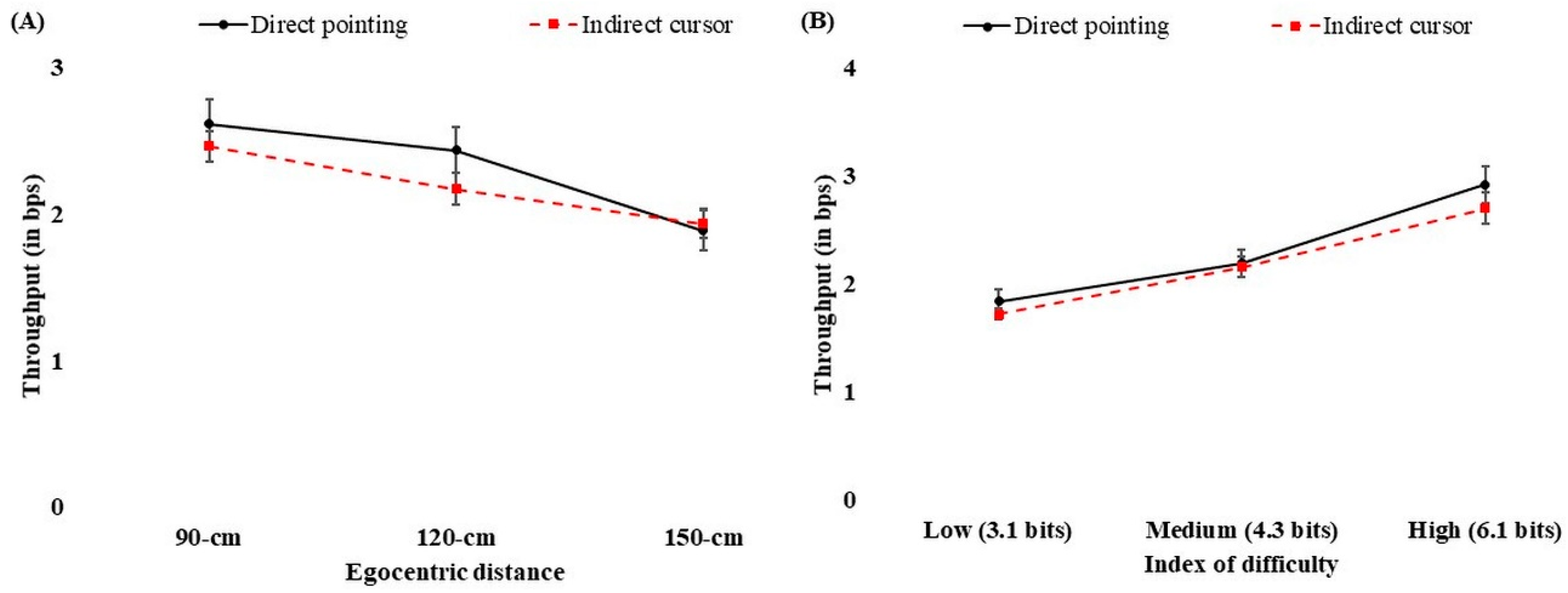

3.2. Task Completion Time and Throughput

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Huber, T.; Paschold, M.; Hansen, C.; Wunderling, T.; Lang, H.; Kneist, W. New dimensions in surgical training: Immersive virtual reality laparoscopic simulation exhilarates surgical staff. Surg. Endosc. 2017, 31, 4472–4477.
2. Buttussi, F.; Chittaro, L. Effects of Different Types of Virtual Reality Display on Presence and Learning in a Safety Training Scenario. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1063–1076.
3. Matsas, E.; Vosniakos, G.-C. Design of a virtual reality training system for human–robot collaboration in manufacturing tasks. Int. J. Interact. Des. Manuf. 2017, 11, 139–153.
4. Sun, H.-M.; Li, S.-P.; Zhu, Y.-Q.; Hsiao, B. The effect of user’s perceived presence and promotion focus on usability for interacting in virtual environments. Appl. Ergon. 2015, 50, 126–132.
5. Sharples, S.; Cobb, S.; Moody, A.; Wilson, J.R. Virtual reality induced symptoms and effects (VRISE): Comparison of head mounted display (HMD), desktop and projection display systems. Displays 2008, 29, 58–69.
6. McIntire, J.P.; Havig, P.R.; Geiselman, E.E. Stereoscopic 3D displays and human performance: A comprehensive review. Displays 2014, 35, 18–26.
7. Zhou, F.; Duh, H.; Billinghurst, M. Trends in Augmented Reality Tracking, Interaction and Display: A Review of Ten Years of ISMAR. In Proceedings of the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, 15–18 September 2008; pp. 193–202.
8. Fuhrmann, A.; Hesina, G.; Faure, F.; Gervautz, M. Occlusion in collaborative augmented environments. Comput. Graph. 1999, 23, 809–819.
9. Erazo, O.; Pino, J.A. Predicting Task Execution Time on Natural User Interfaces based on Touchless Hand Gestures. In Proceedings of the 20th International Conference on Intelligent User Interfaces, Atlanta, GA, USA, 29 March–1 April 2015; pp. 97–109.
10. Lin, C.J.; Woldegiorgis, B.H. Kinematic analysis of direct pointing in projection-based stereoscopic environments. Hum. Mov. Sci. 2018, 57, 21–31.
11. Lin, C.J.; Woldegiorgis, B.H. Egocentric distance perception and performance of direct pointing in stereoscopic displays. Appl. Ergon. 2017, 64, 66–74.
12. Bruder, G.; Steinicke, F.; Stuerzlinger, W. To touch or not to touch?: Comparing 2D touch and 3D mid-air interaction on stereoscopic tabletop surfaces. In Proceedings of the 1st Symposium on Spatial User Interaction, Los Angeles, CA, USA, 20–21 July 2013; pp. 9–16.
13. Swan, J.E.; Singh, G.; Ellis, S.R. Matching and Reaching Depth Judgments with Real and Augmented Reality Targets. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1289–1298.
14. Mine, M.R. Virtual Environment Interaction Techniques; University of North Carolina at Chapel Hill: Chapel Hill, NC, USA, 1995.
15. Poupyrev, I.; Ichikawa, T. Manipulating Objects in Virtual Worlds: Categorization and Empirical Evaluation of Interaction Techniques. J. Vis. Lang. Comput. 1999, 10, 19–35.
16. Werkhoven, P.J.; Groen, J. Manipulation Performance in Interactive Virtual Environments. Hum. Fact. 1998, 40, 432–442.
17. Deng, C.-L.; Geng, P.; Hu, Y.-F.; Kuai, S.-G. Beyond Fitts’s Law: A Three-Phase Model Predicts Movement Time to Position an Object in an Immersive 3D Virtual Environment. Hum. Fact. 2019, 61, 879–894.
18. Nguyen, A.; Banic, A. 3DTouch: A wearable 3D input device for 3D applications. In Proceedings of the IEEE Virtual Reality (VR), Arles, France, 23–27 March 2015; pp. 55–61.
19. Jang, Y.; Noh, S.; Chang, H.J.; Kim, T.; Woo, W. 3D Finger CAPE: Clicking Action and Position Estimation under Self-Occlusions in Egocentric Viewpoint. IEEE Trans. Vis. Comput. Graph. 2015, 21, 501–510.
20. Cha, Y.; Myung, R. Extended Fitts’ law for 3D pointing tasks using 3D target arrangements. Int. J. Ind. Ergon. 2013, 43, 350–355.
21. Chen, J.; Or, C. Assessing the use of immersive virtual reality, mouse and touchscreen in pointing and dragging-and-dropping tasks among young, middle-aged and older adults. Appl. Ergon. 2017, 65, 437–448.
22. Bi, X.; Li, Y.; Zhai, S. FFitts law: Modeling finger touch with Fitts’ law. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 1363–1372.
23. MacKenzie, I.S.; Teather, R.J. FittsTilt: The application of Fitts’ law to tilt-based interaction. In Proceedings of the 7th Nordic Conference on Human-Computer Interaction: Making Sense Through Design, Copenhagen, Denmark, 14–17 October 2012; pp. 568–577.
24. Lin, C.J.; Widyaningrum, R. Eye Pointing in Stereoscopic Displays. J. Eye Mov. Res. 2016, 9, 1–14.
25. Murata, A.; Iwase, H. Extending Fitts’ law to a three-dimensional pointing task. Hum. Mov. Sci. 2001, 20, 791–805.
26. Dey, A.; Jarvis, G.; Sandor, C.; Reitmayr, G. Tablet versus phone: Depth perception in handheld augmented reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, 5–8 November 2012; pp. 187–196.
27. Cutting, J.E. Reconceiving perceptual space. In Looking into Pictures: An Interdisciplinary Approach to Pictorial Space; MIT Press: Cambridge, MA, USA, 2003; pp. 215–238.
28. Naceri, A.; Chellali, R.; Dionnet, F.; Toma, S. Depth Perception within Virtual Environments: A Comparative Study Between Wide Screen Stereoscopic Displays and Head Mounted Devices. In Proceedings of the Computation World: Future Computing, Service Computation, Cognitive, Adaptive, Content, Patterns, Washington, DC, USA, 15–20 November 2009; pp. 460–466.
29. Grechkin, T.Y.; Nguyen, T.D.; Plumert, J.M.; Cremer, J.F.; Kearney, J.K. How does presentation method and measurement protocol affect distance estimation in real and virtual environments? ACM Trans. Appl. Percept. 2010, 7, 1–18.
30. Iosa, M.; Fusco, A.; Morone, G.; Paolucci, S. Walking there: Environmental influence on walking-distance estimation. Behav. Brain Res. 2012, 226, 124–132.
31. Knapp, J.M.; Loomis, J.M. Limited field of view of head-mounted displays is not the cause of distance underestimation in virtual environments. Presence: Teleoper. Virtual Environ. 2004, 13, 572–577.
32. Lin, C.J.; Woldegiorgis, B.H. Interaction and visual performance in stereoscopic displays: A review. J. Soc. Inf. Disp. 2015, 23, 319–332.
33. Waller, D.; Richardson, A.R. Correcting distance estimates by interacting with immersive virtual environments: Effects of task and available sensory information. J. Exp. Psychol. Appl. 2008, 14, 61–72.
34. Willemsen, P.; Gooch, A.A.; Thompson, W.B.; Creem-Regehr, S.H. Effects of Stereo Viewing Conditions on Distance Perception in Virtual Environments. Presence 2008, 17, 91–101.
35. Renner, R.S.; Velichkovsky, B.M.; Helmert, J.R. The perception of egocentric distances in virtual environments: A review. ACM Comput. Surv. 2013, 46, 1–40.
36. Kelly, J.W.; Hammel, W.; Sjolund, L.A.; Siegel, Z.D. Frontal extents in virtual environments are not immune to underperception. Atten. Percept. Psychophys. 2015, 77, 1848–1853.
37. Stefanucci, J.K.; Creem-Regehr, S.H.; Thompson, W.B.; Lessard, D.A.; Geuss, M.N. Evaluating the accuracy of size perception on screen-based displays: Displayed objects appear smaller than real objects. J. Exp. Psychol. Appl. 2015, 21, 215–223.
38. Wartenberg, C.; Wiborg, P. Precision of Exocentric Distance Judgments in Desktop and Cube Presentation. Presence 2003, 12, 196–206.
39. Bruder, G.; Steinicke, F.; Stuerzlinger, W. Touching the Void Revisited: Analyses of Touch Behavior on and above Tabletop Surfaces. In Proceedings of the 14th IFIP Conference on Human-Computer Interaction, Cape Town, South Africa, 2–6 September 2013; pp. 278–296.
40. Bruder, G.; Steinicke, F.; Stuerzlinger, W. Effects of visual conflicts on 3D selection task performance in stereoscopic display environments. In Proceedings of the IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; pp. 115–118.
41. Lin, C.J.; Abreham, B.T.; Woldegiorgis, B.H. Effects of displays on a direct reaching task: A comparative study of head mounted display and stereoscopic widescreen display. Int. J. Ind. Ergon. 2019, 72, 372–379.
42. Lin, C.J.; Ho, S.-H.; Chen, Y.-J. An investigation of pointing postures in a 3D stereoscopic environment. Appl. Ergon. 2015, 48, 154–163.
43. Napieralski, P.E.; Altenhoff, B.M.; Bertrand, J.W.; Long, L.O.; Babu, S.V.; Pagano, C.C.; Kern, J.; Davis, T.A. Near-field distance perception in real and virtual environments using both verbal and action responses. ACM Trans. Appl. Percept. 2011, 8, 1–19.
44. Poupyrev, I.; Weghorst, S.; Fels, S. Non-isomorphic 3D rotational techniques. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 540–547.
45. Singh, G.; Swan, J.E.; Jones, J.A.; Ellis, S.R. Depth judgments by reaching and matching in near-field augmented reality. In Proceedings of the IEEE Virtual Reality Workshops (VRW), Costa Mesa, CA, USA, 4–8 March 2012; pp. 165–166.
46. Lin, C.J.; Caesaron, D.; Woldegiorgis, B.H. The accuracy of the frontal extent in stereoscopic environments: A comparison of direct selection and virtual cursor techniques. PLoS ONE 2019, 14, e0222751.
47. Singh, G.; Swan, J.E., II; Jones, J.A.; Ellis, S.R. Depth judgment measures and occluding surfaces in near-field augmented reality. In Proceedings of the 7th Symposium on Applied Perception in Graphics and Visualization, Los Angeles, CA, USA, 23–24 July 2010; pp. 149–156.
48. Hutchins, E.L.; Hollan, J.D.; Norman, D.A. Direct manipulation interfaces. Hum.-Comput. Interact. 1985, 1, 311–338.
49. Steinicke, F.; Benko, H.; Kruger, A.; Keefe, D.; Riviere, J.-B.d.l.; Anderson, K.; Hakkila, J.; Arhippainen, L.; Pakanen, M. The 3rd dimension of CHI (3DCHI): Touching and designing 3D user interfaces. In Proceedings of the CHI ’12 Extended Abstracts on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 2695–2698.
50. Chan, L.-W.; Kao, H.-S.; Chen, M.Y.; Lee, M.-S.; Hsu, J.; Hung, Y.-P. Touching the void: Direct-touch interaction for intangible displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 2625–2634.
51. Lemmerman, D.K.; LaViola, J.J., Jr. Effects of Interaction-Display Offset on User Performance in Surround Screen Virtual Environments. In Proceedings of the 2007 IEEE Virtual Reality Conference, Charlotte, NC, USA, 10–14 March 2007; pp. 303–304.
52. Wang, Y.; MacKenzie, C. Effects of orientation disparity between haptic and graphic displays of objects in virtual environments. In Proceedings of the IFIP Conference on Human-Computer Interaction, Edinburgh, Scotland, 30 August–3 September 1999; pp. 391–398.
53. Mine, M.R.; Brooks, F.P., Jr.; Séquin, C.H. Moving objects in space: Exploiting proprioception in virtual-environment interaction. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques, San Jose, CA, USA, 20–22 July 1997; pp. 19–26.
54. Lubos, P.; Bruder, G.; Steinicke, F. Analysis of direct selection in head-mounted display environments. In Proceedings of the IEEE Symposium on 3D User Interfaces (3DUI), Minneapolis, MN, USA, 29–30 March 2014; pp. 11–18.
55. Jerald, J. The VR Book: Human-Centered Design for Virtual Reality; Association for Computing Machinery and Morgan & Claypool: New York, NY, USA, 2016; p. 635.
56. Poupyrev, I.; Billinghurst, M.; Weghorst, S.; Ichikawa, T. The go-go interaction technique: Non-linear mapping for direct manipulation in VR. In Proceedings of the 9th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 6–8 November 1996; pp. 79–80.
57. ISO. Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs), Part 9: Requirements for Non-Keyboard Input Devices, 1st ed.; International Organization for Standardization: Geneva, Switzerland, 2000; p. 57.
58. Soukoreff, R.W.; MacKenzie, I.S. Towards a standard for pointing device evaluation, perspectives on 27 years of Fitts’ law research in HCI. Int. J. Hum.-Comput. Stud. 2004, 61, 751–789.
59. Armbrüster, C.; Wolter, M.; Kuhlen, T.; Spijkers, W.; Fimm, B. Depth Perception in Virtual Reality: Distance Estimations in Peri- and Extrapersonal Space. CyberPsychology Behav. 2008, 11, 9–15.
60. Dey, A.; Cunningham, A.; Sandor, C. Evaluating depth perception of photorealistic mixed reality visualizations for occluded objects in outdoor environments. In Proceedings of the IEEE Symposium on 3D User Interfaces (3DUI), Waltham, MA, USA, 20–21 March 2010; pp. 127–128.
61. Fitts, P.M. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. Gen. 1992, 121, 262–269.
62. Burno, R.A.; Wu, B.; Doherty, R.; Colett, H.; Elnaggar, R. Applying Fitts’ Law to Gesture Based Computer Interactions. Procedia Manuf. 2015, 3, 4342–4349.
63. Thompson, W.; Fleming, R.; Creem-Regehr, S.; Stefanucci, J.K. Visual Perception from a Computer Graphics Perspective; A. K. Peters: Natick, MA, USA, 2011; p. 540.
64. Kunz, B.R.; Wouters, L.; Smith, D.; Thompson, W.B.; Creem-Regehr, S.H. Revisiting the effect of quality of graphics on distance judgments in virtual environments: A comparison of verbal reports and blind walking. Atten. Percept. Psychophys. 2009, 71, 1284–1293.
65. Richardson, A.R.; Waller, D. The effect of feedback training on distance estimation in virtual environments. Appl. Cogn. Psychol. 2005, 19, 1089–1108.
66. Jones, J.A.; Swan, J.E.; Bolas, M. Peripheral Stimulation and its Effect on Perceived Spatial Scale in Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2013, 19, 701–710.
67. Bruder, G.; Sanz, F.A.; Olivier, A.H.; Lecuyer, A. Distance estimation in large immersive projection systems, revisited. In Proceedings of the IEEE Virtual Reality (VR), Arles, France, 23–27 March 2015; pp. 27–32.
68. Bridgeman, B.; Gemmer, A.; Forsman, T.; Huemer, V. Processing spatial information in the sensorimotor branch of the visual system. Vis. Res. 2000, 40, 3539–3552.
69. Parks, T.E. Visual-illusion distance paradoxes: A resolution. Atten. Percept. Psychophys. 2012, 74, 1568–1569.
70. Hoffman, D.M.; Girshick, A.R.; Akeley, K.; Banks, M.S. Vergence–accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 2008, 8, 33.

| Author(s), year [reference no.] | Display ¹ | Motion plane (lateral, frontal, transversal) | Interaction technique | Experimental conditions | Results (findings) ² |
|---|---|---|---|---|---|
| Bruder et al., 2013 [39] | Stereoscopic tabletop | Transversal plane | Direct mid-air selection and direct 2D touch screen | Direct mid-air selection vs. direct 2D touch screen | Direct 2D touch-screen input was more accurate than 3D mid-air touching. |
| Bruder et al., 2013 [40] | Stereoscopic tabletop | Transversal plane | Direct mid-air selection with the tip of the user’s index finger | Direct input with the user’s fingertip vs. offset-based input with a virtual offset cursor | Direct input with the user’s fingertip was more accurate than offset-based input with the virtual offset cursor. |
| Lin et al., 2019 [41] | Stereoscopic projection screen and HMD | Frontal plane | Direct mid-air selection using a pointing stick | Stereoscopic vs. immersive environment | The immersive environment was less accurate than the stereoscopic environment. Distance estimates showed overestimation. |
| Lin and Woldegiorgis, 2017 [11] | Stereoscopic projection screen and real world | Frontal plane | Direct mid-air selection using a pointing stick | Stereoscopic vs. real environment; pointing by vision vs. pointing by memory | The stereoscopic environment was less accurate than the real world. Not significant. Distance estimates showed overestimation. |
| Lin et al., 2015 [42] | Stereoscopic projection screen | All three planes | Direct pointing, hand-directed and gaze-directed | Hand-directed vs. gaze-directed direct pointing | Gaze-directed pointing was less accurate than hand-directed pointing. Hand-directed pointing is recommended for tapping and tracking tasks. |
| Napieralski et al., 2011 [43] | HMD and real world | Frontal plane | Direct reaching using a stylus, and verbal response | Direct reaching vs. verbal response; immersive virtual environment (IVE) vs. real world | Direct reaching tended to be more accurate and consistent than verbal responses. Underestimation was greater in the IVE than in the real world. |
| Poupyrev et al., 2000 [44] | Desktop monitor | Frontal plane | Indirect input with a 6-DOF controller | Absolute vs. relative mapping control | Not significant. |
| Werkhoven and Groen, 1998 [16] | HMD | Frontal plane | Direct input with virtual-hand control and indirect input with 3D-cursor control | Virtual-hand vs. 3D-cursor control; monoscopic vs. stereoscopic virtual environment | 3D-cursor control was less accurate than virtual-hand control in the positioning task. The stereoscopic condition was more accurate than the monoscopic condition. |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lin, C.J.; Caesaron, D.; Woldegiorgis, B.H. The Effects of Augmented Reality Interaction Techniques on Egocentric Distance Estimation Accuracy. Appl. Sci. 2019, 9, 4652. https://doi.org/10.3390/app9214652
