Hyperspectral Super-Resolution Technique Using Histogram Matching and Endmember Optimization

Abstract

1. Introduction

2. Problem Formulation

3. Proposed Solution

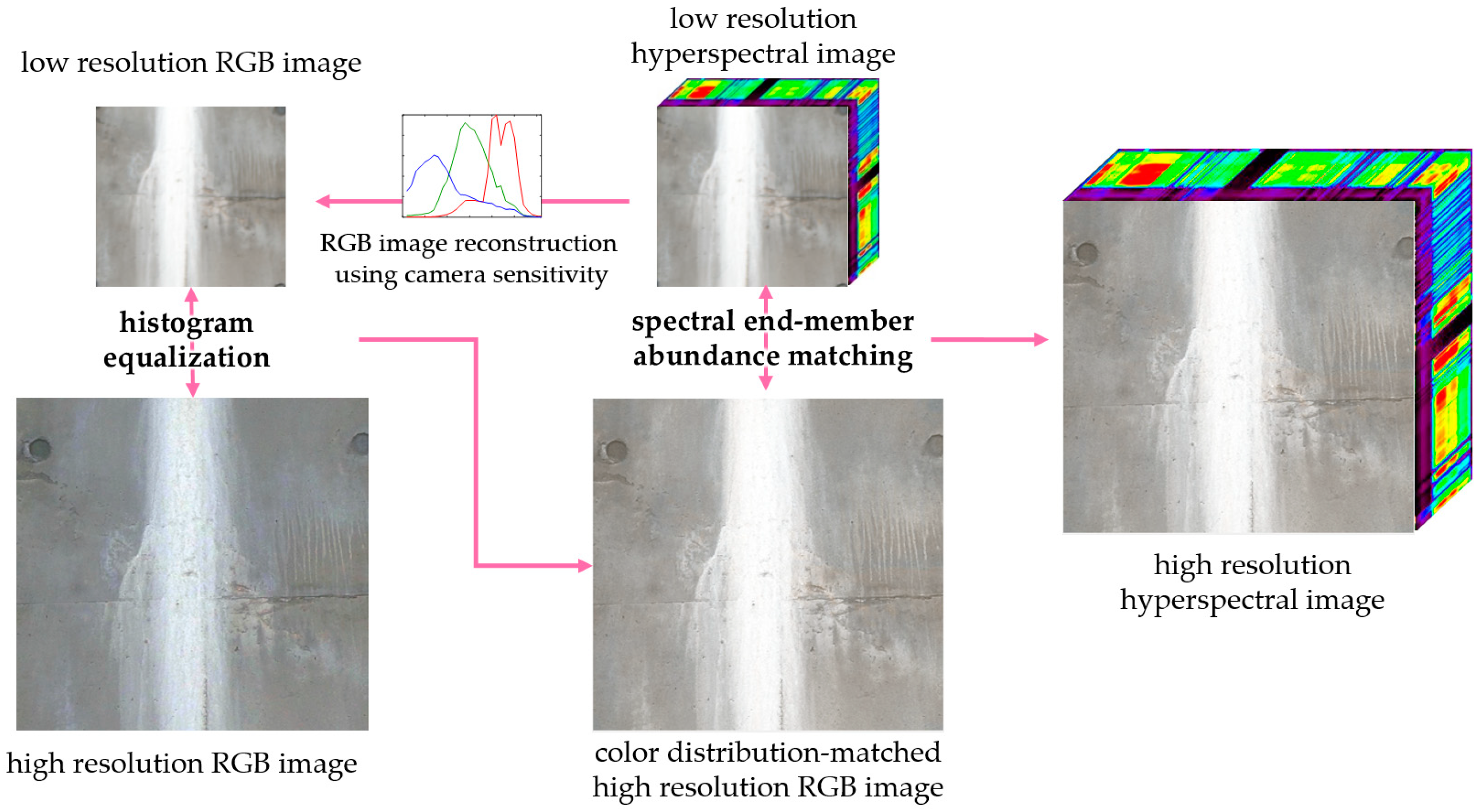

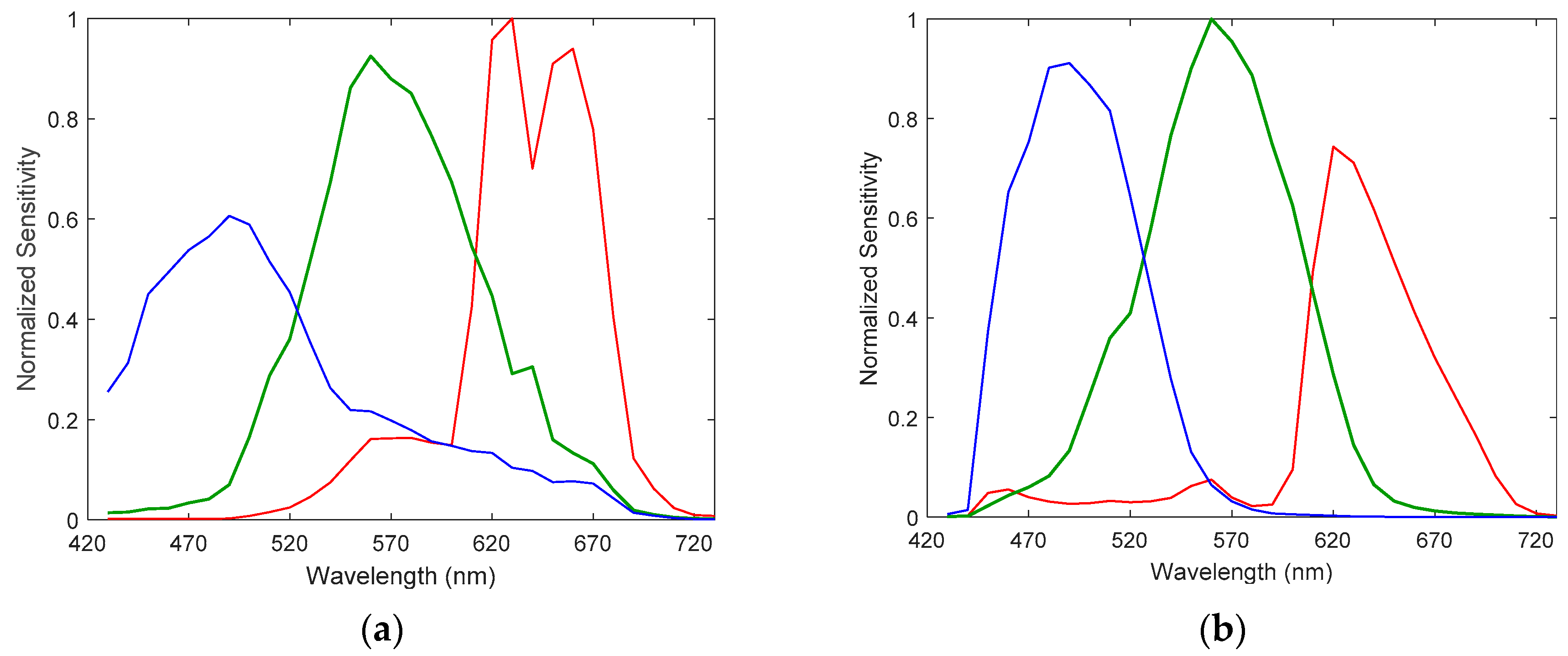

3.1. Overall Scheme

3.2. Overall Algorithm and Implementation

4. Experiment

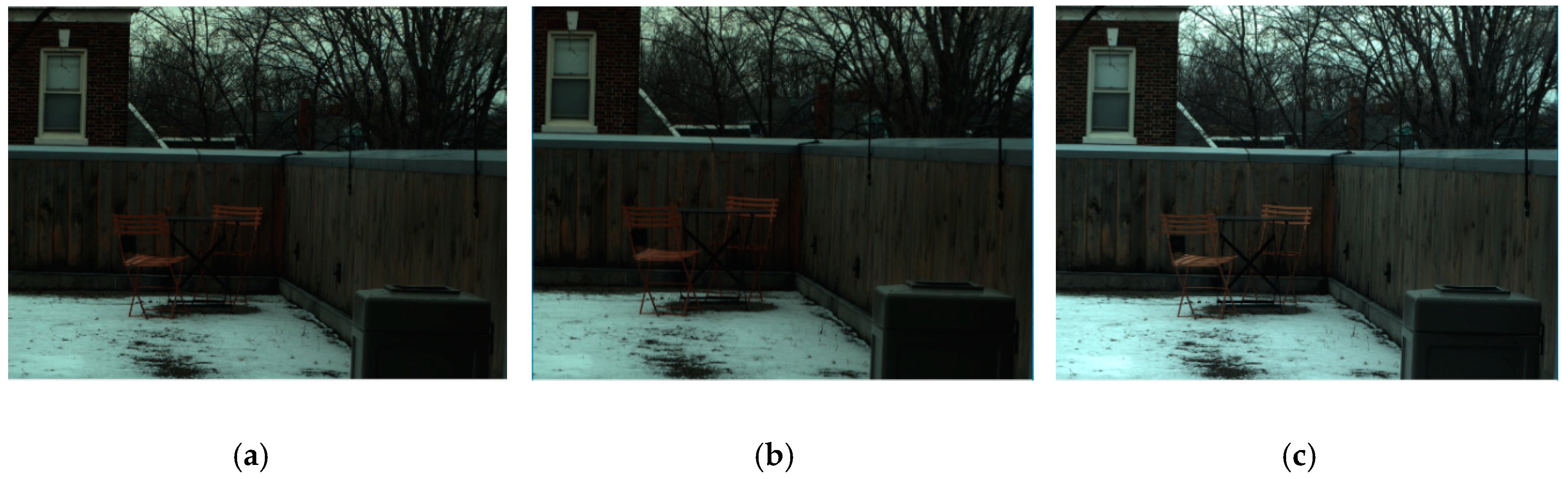

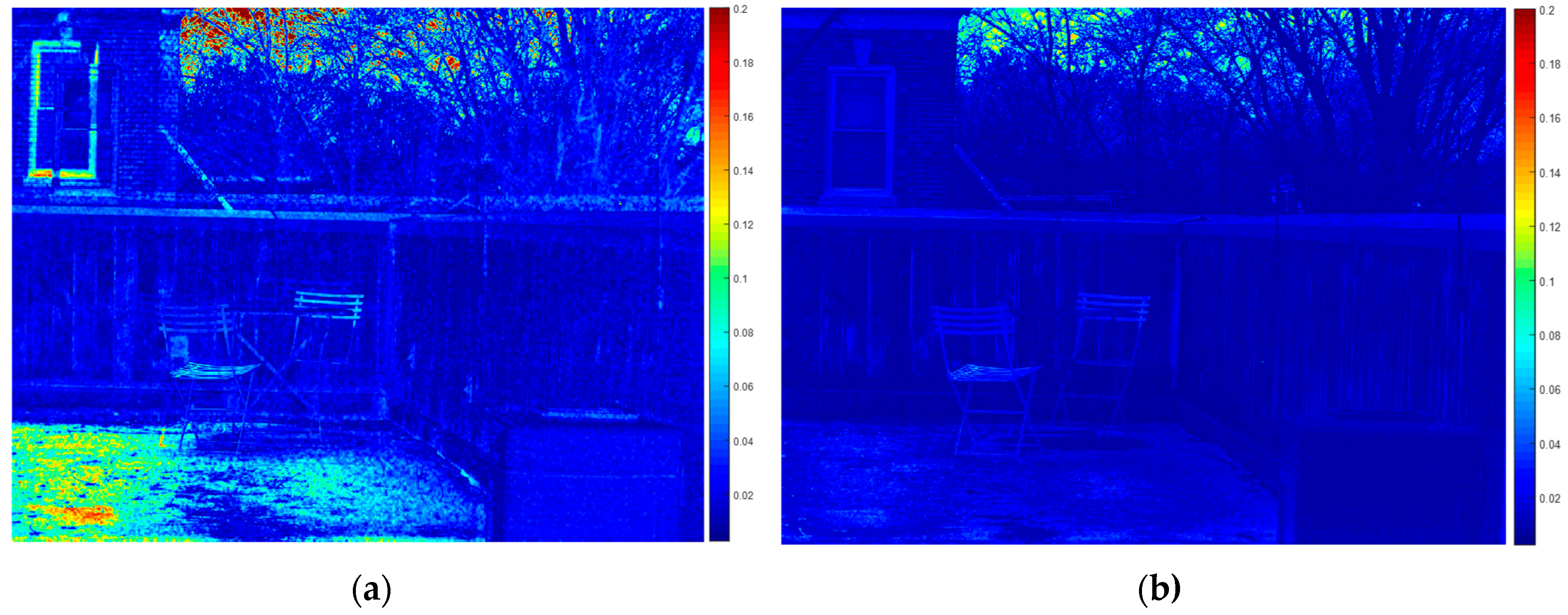

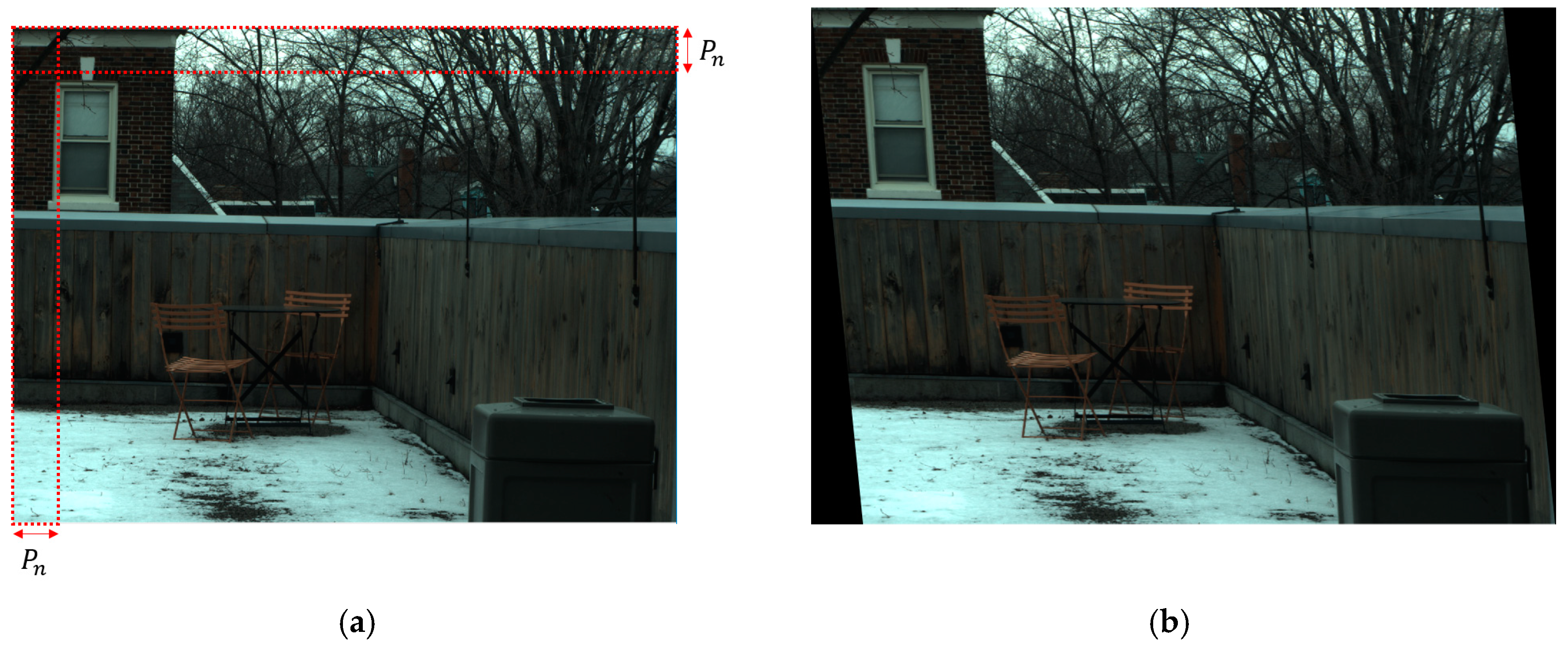

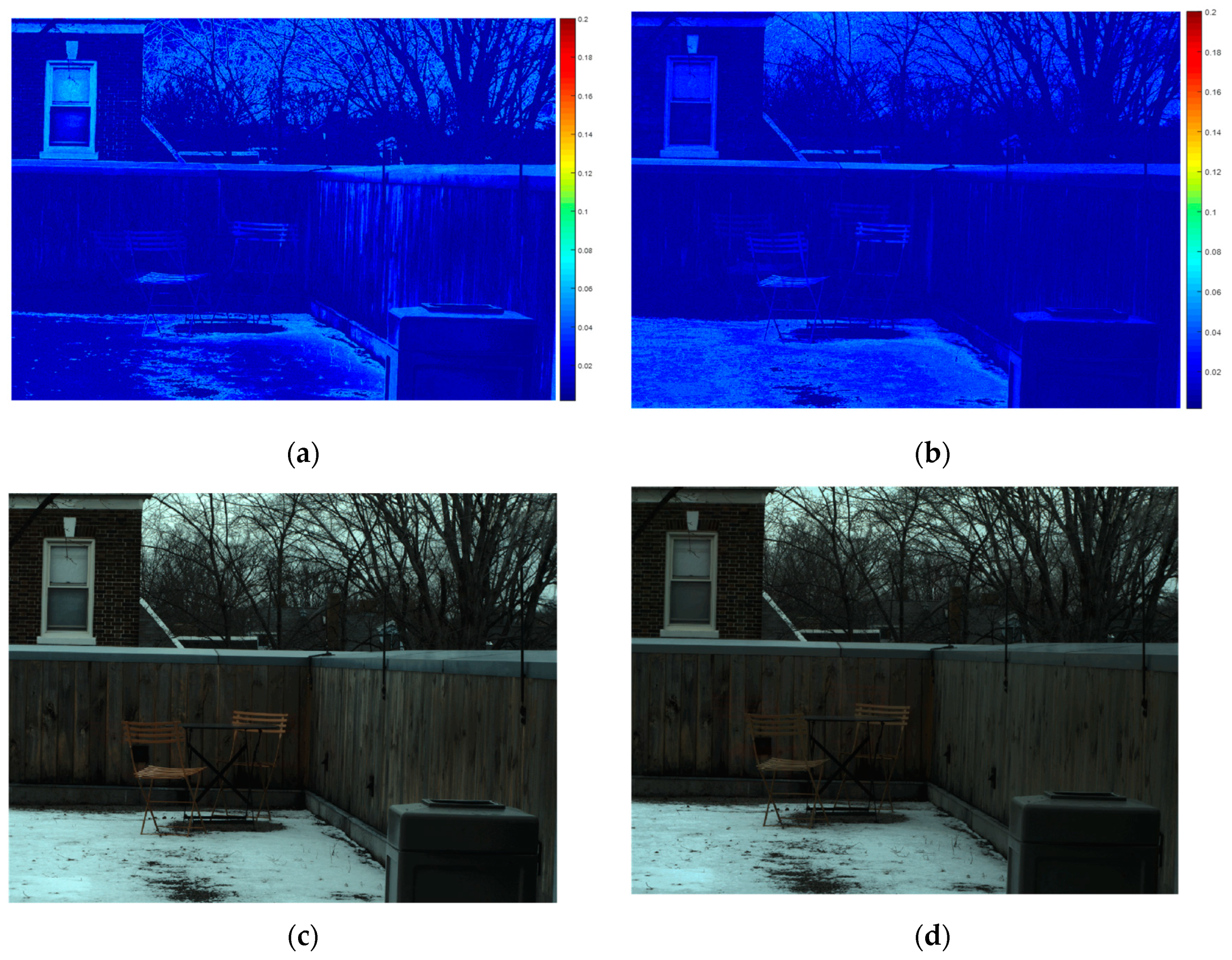

4.1. Baseline Study for Spatial Information Mismatch

4.2. Proposed Method Evaluation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

- Mahesh, S.; Jayas, D.S.; Paliwal, J.; White, N.D.G. Hyperspectral imaging to classify and monitor quality of agricultural materials. J. Stored Prod. Res. 2015, 61, 17–26.

- Brackx, M.; Van Wittenberghe, S.; Verhelst, J.; Scheunders, P.; Samson, R. Hyperspectral leaf reflectance of Carpinus betulus L. saplings for urban air quality estimation. Environ. Pollut. 2017, 220, 159–167.

- Balzarolo, M.; Peñuelas, J.; Filella, I.; Portillo-Estrada, M.; Ceulemans, R. Assessing Ecosystem Isoprene Emissions by Hyperspectral Remote Sensing. Remote Sens. 2018, 10, 1086.

- Fossi, A.P.; Ferrec, Y.; Roux, N.; D’almeida, O.; Guerineau, N.; Sauer, H. Miniature and cooled hyperspectral camera for outdoor surveillance applications in the mid-infrared. Opt. Lett. 2016, 41, 1901.

- Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A Novel Methodology for Improving Plant Pest Surveillance in Vineyards and Crops Using UAV-Based Hyperspectral and Spatial Data. Sensors 2018, 18, 260.

- Ray, A.; Kopelman, R.; Chon, B.; Briggman, K.; Hwang, J. Scattering based hyperspectral imaging of plasmonic nanoplate clusters towards biomedical applications. J. Biophotonics 2016, 9, 721–729.

- Weijtmans, P.J.C.; Shan, C.; Tan, T.; Brouwer de Koning, S.G.; Ruers, T.J.M. A Dual Stream Network for Tumor Detection in Hyperspectral Images. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 1256–1259.

- Mielke, C.; Rogass, C.; Boesche, N.; Segl, K.; Altenberger, U. EnGeoMAP 2.0: Automated Hyperspectral Mineral Identification for the German EnMAP Space Mission. Remote Sens. 2016, 8, 127.

- Scafutto, R.D.M.; de Souza Filho, C.R.; Rivard, B. Characterization of mineral substrates impregnated with crude oils using proximal infrared hyperspectral imaging. Remote Sens. Environ. 2016, 179, 116–130.

- Wang, J.; Hu, P.; Lu, Q.; Shu, R. An airborne pushbroom hyperspectral imager with wide field of view. Chin. Opt. Lett. 2005, 3, 689–691.

- Johnson, W.R.; Wilson, D.W.; Fink, W.; Humayun, M.; Bearman, G. Snapshot hyperspectral imaging in ophthalmology. J. Biomed. Opt. 2007, 12, 014036.

- Wei, Q.; Bioucas-Dias, J.; Dobigeon, N.; Tourneret, J.Y. Hyperspectral and Multispectral Image Fusion Based on a Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3658–3668.

- Wang, Q.; Shen, Y.; Zhang, Y.; Zhang, J.Q. A quantitative method for evaluating the performances of hyperspectral image fusion. IEEE Trans. Instrum. Meas. 2003, 52, 1041–1047.

- Wilson, T.A. Perceptual-based hyperspectral image fusion using multiresolution analysis. Opt. Eng. 1995, 34, 3154.

- Akgun, T.; Altunbasak, Y.; Mersereau, R.M. Super-resolution reconstruction of hyperspectral images. IEEE Trans. Image Process. 2005, 14, 1860–1875.

- Zhao, Y.; Yang, J.; Zhang, Q.; Song, L.; Cheng, Y.; Pan, Q. Hyperspectral imagery super-resolution by sparse representation and spectral regularization. EURASIP J. Adv. Signal Process. 2011, 2011.

- Kwon, H.; Tai, Y.W. RGB-guided hyperspectral image upsampling. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 307–315.

- Mitani, Y.; Hamamoto, Y. A Consideration of Pan-Sharpen Images by HSI Transformation Approach. In Proceedings of the SICE Annual Conference 2010, Taipei, Taiwan, 8–21 August 2010; pp. 1283–1284.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Chen, Y.; Zhang, G. A Pan-Sharpening Method Based on Evolutionary Optimization and IHS Transformation. Math. Probl. Eng. 2017, 2017, 1–8.

- Li, Y.; Xie, W.; Li, H. Hyperspectral image reconstruction by deep convolutional neural network for classification. Pattern Recognit. 2017, 63, 371–383.

- Yuan, Y.; Zheng, X.; Lu, X. Hyperspectral Image Superresolution by Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1963–1974.

- Wycoff, E.; Chan, T.H.; Jia, K.; Ma, W.K.; Ma, Y. A non-negative sparse promoting algorithm for high resolution hyperspectral imaging. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1409–1413.

- Huang, B.; Song, H.; Cui, H.; Peng, J.; Xu, Z. Spatial and Spectral Image Fusion Using Sparse Matrix Factorization. IEEE Trans. Geosci. Remote Sens. 2013, 52, 1693–1703.

- Simoes, M.; Bioucas-Dias, J.; Almeida, L.B.; Chanussot, J. A convex formulation for hyperspectral image superresolution via subspace-based regularization. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3373–3388.

- Fang, L.; Zhuo, H.; Li, S. Super-resolution of hyperspectral image via superpixel-based sparse representation. Neurocomputing 2018, 273, 171–177.

- Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral super-resolution by coupled spectral unmixing. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3586–3594.

- Jiang, J.; Liu, D.; Gu, J.; Susstrunk, S. What is the space of spectral sensitivity functions for digital color cameras? In Proceedings of the IEEE Workshop on Applications of Computer Vision, Tampa, FL, USA, 5–17 January 2013; pp. 168–179.

- Enhance Contrast Using Histogram Equalization - MATLAB Histeq. Available online: https://www.mathworks.com/help/images/ref/histeq.html (accessed on 4 September 2019).

- Bioucas-Dias, J.M. A variable splitting augmented Lagrangian approach to linear spectral unmixing. In Proceedings of the 2009 First Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, Grenoble, France, 26–28 August 2009; pp. 1–4.

- Iordache, M.; Bioucas-Dias, J.M.; Plaza, A. Sparse Unmixing of Hyperspectral Data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- Jose, M. Bioucas Dias Welcome to the Home Page of Jose. Available online: http://www.lx.it.pt/~bioucas/ (accessed on 4 September 2019).

- Chakrabarti, A.; Zickler, T. Statistics of Real-World Hyperspectral Images. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 193–200.

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The spectral image processing system (SIPS)-interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

- Akhtar, N.; Shafait, F.; Mian, A. Bayesian sparse representation for hyperspectral image super resolution. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3631–3640.

- Akhtar, N.; Shafait, F.; Mian, A. Sparse Spatio-spectral Representation for Hyperspectral Image Super-resolution. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 63–78.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

Requires:

- H (low-resolution hyperspectral image)
- I (high-resolution RGB image)
- C (RGB camera sensitivity)
- S (upsampling rate)

Procedure:

1. Reconstruct L by applying C to H.
2. Match the histogram of I to that of L.
3. Initialize the endmembers with SISAL and the abundances with SUnSAL from H.
4. Initialize the high-resolution abundances by upsampling with S.
5. k ← 0
6. while not converged do
   - k ← k + 1
   - … ← …
   - Estimate … with (8a) and (8b)
7. end while
8. return …
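The two preprocessing steps of the procedure (reconstructing L from H with C, then matching the histogram of I to that of L) can be sketched as follows. This is a minimal illustration under assumptions rather than the authors' implementation: the array layouts (`H` as rows × cols × bands, `C` as 3 × bands), the per-channel CDF-based matching, and the function names `apply_camera_response`, `match_histogram`, and `match_rgb_to_reconstruction` are all hypothetical choices made for this example. The iterative updates (8a) and (8b) are not reproduced because they are not given in this section.

```python
import numpy as np


def apply_camera_response(H, C):
    """Project a hyperspectral cube H (rows, cols, bands) to RGB with C (3, bands).

    Assumption: C holds one spectral sensitivity curve per RGB channel.
    """
    # Each output pixel is C @ spectrum; einsum keeps the spatial layout.
    return np.einsum("cb,ijb->ijc", C, H)


def match_histogram(source, reference):
    """Remap one channel of `source` so its value distribution follows `reference`."""
    src_values, src_inverse, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)
    # Empirical cumulative distribution functions of both channels.
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size
    # For every source quantile, look up the reference value at that quantile.
    mapped = np.interp(src_cdf, ref_cdf, ref_values)
    return mapped[src_inverse.ravel()].reshape(source.shape)


def match_rgb_to_reconstruction(I, L):
    """Match each channel of the high-resolution RGB image I to the low-resolution L."""
    channels = [match_histogram(I[..., c], L[..., c]) for c in range(I.shape[-1])]
    return np.stack(channels, axis=-1)
```

Histogram matching only compares value distributions, so `I` and `L` may have different spatial sizes; for example, `match_rgb_to_reconstruction(I, apply_camera_response(H, C))` returns an array with the shape of `I`.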

| Method | RMSE (Average) | RMSE (Median) | SAM (Average) | SAM (Median) |
|---|---|---|---|---|
| Original results for the entire dataset | 1.7 | 1.5 | 2.9 | 2.7 |
| Translation by cutting off | 11.6 | 10.36 | 7.72 | 8.11 |
| Histogram not matched | 8.88 | 8.55 | 8.06 | 7.15 |
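The two error measures in the table, RMSE and SAM (spectral angle mapper), are commonly computed as in the sketch below. This is a hedged illustration: the function names `rmse` and `sam_degrees` are hypothetical, the SAM is averaged over pixels and reported in degrees by assumption, and this section does not state how the paper scales intensities or aggregates the per-image values into the reported averages and medians.

```python
import numpy as np


def rmse(estimate, reference):
    """Root-mean-square error over all pixels and bands."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def sam_degrees(estimate, reference, eps=1e-12):
    """Mean spectral angle in degrees between two cubes shaped (rows, cols, bands)."""
    est = np.asarray(estimate, dtype=float)
    ref = np.asarray(reference, dtype=float)
    # Flatten the spatial dimensions so each row is one pixel spectrum.
    est = est.reshape(-1, est.shape[-1])
    ref = ref.reshape(-1, ref.shape[-1])
    dot = np.sum(est * ref, axis=1)
    norms = np.linalg.norm(est, axis=1) * np.linalg.norm(ref, axis=1)
    # Clip guards against rounding that pushes the cosine outside [-1, 1].
    cos = np.clip(dot / np.maximum(norms, eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))
```

RMSE is sensitive to intensity scale, whereas the spectral angle ignores a uniform scaling of a spectrum, which is why the two measures can rank methods differently.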

| Method of Field of View Mismatch | | Lanaras et al. [28]: RMSE (Average) | Lanaras et al. [28]: RMSE (Median) | Lanaras et al. [28]: SAM (Average) | Lanaras et al. [28]: SAM (Median) | Proposed Method: RMSE (Average) | Proposed Method: RMSE (Median) | Proposed Method: SAM (Average) | Proposed Method: SAM (Median) |
|---|---|---|---|---|---|---|---|---|---|
| Without Transformation | | 2.68 | 2.11 | 5.58 | 5.47 | 2.68 | 2.57 | 6.69 | 5.32 |
| Shear Transformation Using Affine Matrix | 0.1 | 9.79 | 7.70 | 7.16 | 7.35 | 3.64 | 2.96 | 6.73 | 6.03 |
| Shear Transformation Using Affine Matrix | 0.2 | 11.76 | 9.33 | 7.88 | 8.00 | 4.4 | 3.56 | 6.9 | 6.26 |
| Shear Transformation Using Affine Matrix | 0.3 | 12.89 | 10.62 | 8.37 | 8.68 | 5.19 | 4.30 | 7.2 | 6.18 |
| Translation by Cutting off Upper and Left Sides | | 9.12 | 6.93 | 6.71 | 6.83 | 3.56 | 2.87 | 6.65 | 5.65 |
| Translation by Cutting off Upper and Left Sides | | 10.95 | 8.17 | 7.31 | 7.40 | 4.25 | 3.27 | 6.86 | 5.80 |
| Translation by Cutting off Upper and Left Sides | | 12.02 | 9.27 | 7.80 | 8.03 | 4.93 | 3.68 | 7.08 | 5.98 |
| Translation by Cutting off Upper and Left Sides | | 12.81 | 9.99 | 8.24 | 8.52 | 5.60 | 4.13 | 7.24 | 6.20 |
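The two kinds of field-of-view mismatch named in the table (a shear applied through an affine matrix, and a translation produced by cutting off the upper and left sides) could be simulated roughly as below. This is a sketch under assumptions, not the experimental code: it assumes the values 0.1 to 0.3 are horizontal shear factors, uses nearest-neighbour sampling with zero fill, and the function names `shear_image` and `crop_upper_left` as well as the crop width `n` are hypothetical. Which image the paper transforms, and by how many pixels it crops, is not recoverable from this section.

```python
import numpy as np


def shear_image(image, shear):
    """Horizontally shear an image (rows, cols[, channels]); empty pixels are zero."""
    rows, cols = image.shape[:2]
    # Forward affine matrix in homogeneous (x, y, 1) coordinates: x' = x + shear * y.
    A = np.array([[1.0, shear, 0.0],
                  [0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
    A_inv = np.linalg.inv(A)
    ys, xs = np.mgrid[0:rows, 0:cols]
    out_coords = np.stack([xs.ravel(), ys.ravel(), np.ones(rows * cols)])
    # Inverse mapping: for every output pixel, find the source pixel it came from.
    src_x, src_y, _ = A_inv @ out_coords
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < cols) & (src_y >= 0) & (src_y < rows)
    out = np.zeros_like(image)
    flat_out = out.reshape(rows * cols, -1)  # view into `out`
    flat_in = image.reshape(rows * cols, -1)
    flat_out[valid] = flat_in[src_y[valid] * cols + src_x[valid]]
    return out


def crop_upper_left(image, n):
    """Translate the content by discarding the top n rows and left n columns."""
    return image[n:, n:]
```

With `shear=0.0` the affine matrix is the identity and the image is returned unchanged, which gives a quick sanity check before applying larger factors.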

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, B.; Cho, S. Hyperspectral Super-Resolution Technique Using Histogram Matching and Endmember Optimization. Appl. Sci. 2019, 9, 4444. https://doi.org/10.3390/app9204444